#ITS PROVER is all I could think of during the finale

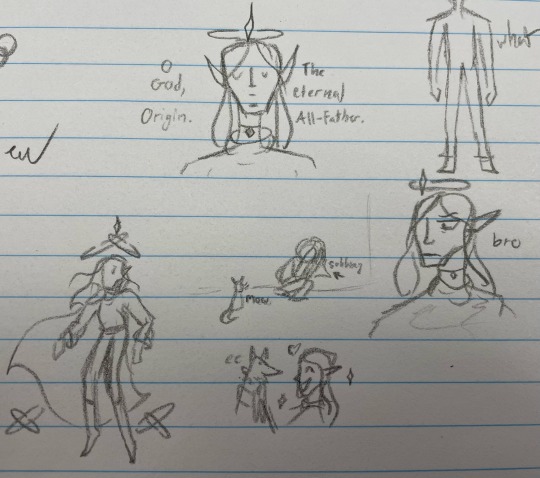

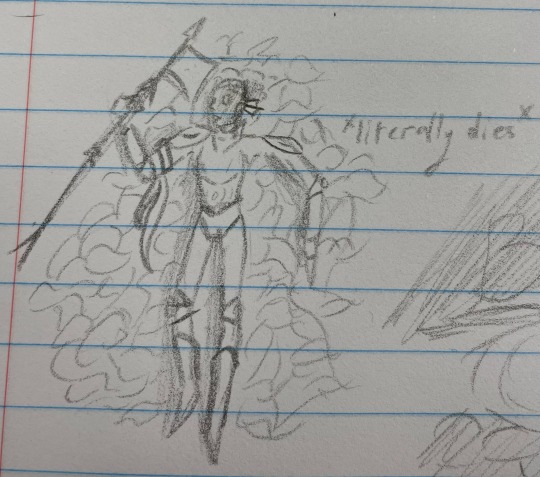

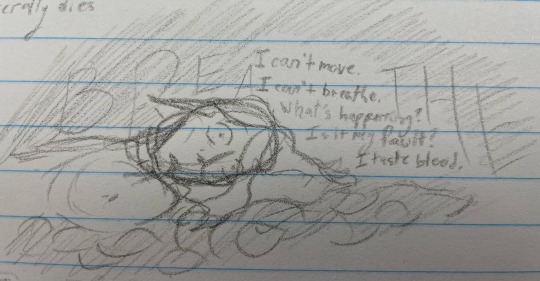

me when I can only doodle ruined reality

also that one drawing of Light is definitely heavily inspired by @viaphni’s illustration of that one scene in the finale lmao.

also also me when light suffocates because for some reason I imagine that losing a soul would feel like suffocating to death??? Idek man

k bye

#ruined reality#ruined reality au#RR light#general proxima#RR yellow leader#Fanart#ITS PROVER is all I could think of during the finale

Narrative edition, Week in Ethereum News, Jan 12, 2020

This is the 5th edition of the 6 annotated versions that I committed in my head to doing when I decided to see how an annotated edition would be received.

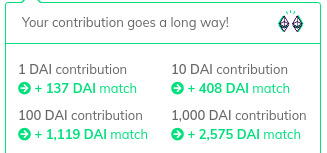

Given the response on my Gitcoin matching grant, I may have to keep going. Check it out - giving 1 DAI right now will get more than 100x matching.

Thanks to everyone who has given on my Gitcoin grant. It’s the best way to show you want these annotated editions to continue.

Even 1 Dai is super appreciated given the matching! Since Tumblr adds odd forwarders to links, here’s the text of the link: https://gitcoin.co/grants/237/week-in-ethereum-news

Eth1

Latest core devs call. Notes from Tim Beiko, discussion of EIPs 2456, 1962, 2348

EthereumJS v4.13 – bugfix release for Muir Glacier

Slockit released a stable version of their Incubed stateless ultralight client, aimed at IoT devices. 150kb to verify transactions or 500kb including EVM. incentive layer coming soon.

A little slow in the eth1 section, but Incubed getting to be stable is cool. Obviously if they can get the incentive layer worked out, that will be fantastic.

When I think about what I was wrong about, definitely if I go back to Q1 or Q2 2017, I thought that IoT Ethereum would be much more of a thing. There was Brody’s early washing machine prototype, Airlock->Oaken, Slockit had some stuff 3 years ago, etc. It hasn’t really happened.

Why not? It seems to me like most of those things require enterprises to take the plunge. No one is going to go out and manufacture a domestic electronic device just yet with all the uncertainty there.

And light client server incentivization hasn’t happened yet. There are just things still to be built. So it’s cool to see Incubed hitting a stable release. Slowly but surely all the necessary primitives get built. I still think there will be lots of robots transacting some day.

Eth2

Latest Eth2 implementer call. Notes from Mamy and from Ben.

Latest what’s new in Eth2

Spec version v0.10 with BLS standards

Prysmatic restarted its testnet with a newer version of the spec and mainnet config

Lodestar update on light clients and dev tooling

3 options for state providers

Eth2 for Dummies

Exploring validator costs

Eth2 for dummies was the most clicked this week. Even within the universe of people who subscribe to the newsletter, there’s always demand for high level explainers.

The spec is essentially finalized and out the door for auditing, so now it’s a sprint towards shipping, though of course there may be some minor changes as a result of further networking and of course from the audit.

Lately some in the community have been promoting a July ship date and I personally would be disappointed if it slipped that far.

Layer2

Optimistic rollup for tokens Fuel ships first public testnet

Optimistic Game Semantics for a generalized layer2 client

Loopring presents full results of their zk rollup testing

StarkEx says they can do 9000 trades per second at 75 gas per trade with offchain data, with the limiting factor being the prover, not onchain throughput.

9000 is a pretty crazy number, although since the data is offchain that makes it a Plasma construct and not a rollup. StarkWare to my memory hasn’t provided details on what the exit game is: as an end user, do I get a proof I can submit if I need to exit? I have no idea.

Anyway, it’s very cool that the limiting factor is the prover, ie, nothing about Ethereum is what is currently limiting the 9000 transactions per second number.
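As a rough back-of-the-envelope check on what those StarkEx figures imply for onchain load, here is a small sketch. The 9000 trades per second and 75 gas per trade come from the item above; the block gas limit and block time are my own round-number assumptions, not figures from StarkWare, so treat the ceiling as illustrative only.

```python
def gas_per_second(trades_per_second, gas_per_trade):
    """Onchain gas consumed each second at a given trade rate."""
    return trades_per_second * gas_per_trade


def max_trades_per_second(block_gas_limit, block_time_seconds, gas_per_trade):
    """Trade rate at which blocks would be completely full of these trades."""
    return block_gas_limit / block_time_seconds / gas_per_trade


CLAIMED_TPS = 9000        # from the StarkEx claim
GAS_PER_TRADE = 75        # from the StarkEx claim

# Assumptions for illustration only (not from the source):
ASSUMED_BLOCK_GAS_LIMIT = 10_000_000
ASSUMED_BLOCK_TIME_SECONDS = 13

needed = gas_per_second(CLAIMED_TPS, GAS_PER_TRADE)
ceiling = max_trades_per_second(
    ASSUMED_BLOCK_GAS_LIMIT, ASSUMED_BLOCK_TIME_SECONDS, GAS_PER_TRADE
)

print("gas needed per second:", needed)
print("trade-rate ceiling under the assumed chain parameters:", round(ceiling))
```

Under these assumed chain parameters the ceiling sits above 9000, which is consistent with the point that the prover, not Ethereum, is the bottleneck.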

Of course Loopring would also have a fairly crazy number if they did offchain data, but they have a number of 1,000 to 2,000 tps with onchain data, and let’s be honest: right now the limiting factor here is the demand for dexes. No dex at the moment can fill even a hundred transactions per second. But as trades get cheaper, presumably there is more demand. And token trading will certainly increase in the next bull market.

Meanwhile Fuel shipped its first testnet. Very neat.

Stuff for developers

An update on the Vyper compiler: there are now two efforts, a new one in Rust using Yul to target both EVM & ewasm, as well as the existing one in Python.

A look at vulnerabilities of deployed code over time

a beginner’s guide to the K framework

Vulnerability: hash collisions with multiple variable length arguments

Verifying wasm transactions (and part2)

Austin Griffith’s eth.build metatransactions

Build your own customized Burner Wallet

Abridged v2 aiming to make it easy to onboard new users of web2 networks

Ethcode v0.9 VSCode extension

Embark v5

The Vyper saga is interesting. The existing Vyper compiler had a number of security holes found. EF’s Python team decided they didn’t like the existing codebase. So now some of the existing Vyper team is continuing with the existing Python compiler, while there will also be an effort to write a Vyper compiler in Rust that compiles to the intermediate language Yul, which means it can target both ewasm and EVM.

Also interesting to see the data viz of deployed vulnerabilities over time. App security has been improving!

Ecosystem

RicMoo: SQRLing mnemonic phrases

ethsear.ch – Ethereum specific search engine

Avado’s RYO node – nodes opt-in and let users access them via load balancer

30 days of Eth ecosystem shipping

Aztec’s BN-254 trusted setup ceremony post-mortem. Confidential transactions launching this month

RicMoo always has interesting posts on techniques to use in Ethereum that aren’t mainstream. This one is pretty interesting.

I’m very excited about Aztec’s confidential transactions shipping this month. Much like Tornado, this is huge. The difference is that Aztec is about obscuring transaction amounts. Well, it’s about more than that and will be an interesting primitive for people to build with.

Enterprise

700m USD volume on Komgo commodity trade finance platform

TraSeable seafood tracker article on the challenges points out the troubles with no private chain interoperability

Caterpillar business process management system

Q&A with Marley Gray about the EEA’s Token Taxonomy Initiative

700m USD. It continues to strike me that enterprise is one of Ethereum’s biggest moats, and yet I don’t think I do a great job covering it. Much of what gets published is press release rewrites.

And of course I did a small bit of editorializing by noting that an article on private chains was finding how hard it was to have all these private chains. They need mainnet!

Governance and standards

EIP1559 implementation discussion

EIP2456: Time based upgrades

Metamask’s bounty for a generalized metatransaction standard

1559 is important because it kills economic abstraction forever! It’s happening in eth2, it’d be nice to have it happen in eth1. While I think some of the tradeoffs have not been written about (that originally caused me to be a bit skeptical, and perhaps I’ll write a post about it), it’d be great to get 1559 into production.

In general, Eth needs better standardization around wallets for frontend devs.

Application layer

Flashloans within one transaction using Aave Protocol are live on mainnet

Orchid’s decentralized VPN launches

Data viz on dexes in 2019

ZRXPortal for ZRX holders to delegate their tokens to stakers

Dai Stability Fee and Dai Savings Rate go up to 6%, while Sai Stability Fee at 5%

EthHub’s new Ethereum user guides

It’s great that EthHub is doing user guides, that’s something that is missing. What’s also missing is a concerted Ethereum effort to link to stuff so that old uncle Google does a better job of returning search results.

Aave’s flashloans are another neat primitive. Things unique to DeFi.

Tokens/Business/Regulation

David Hoffman: the money game landscape

Australia experimenting with a digital Aussie dollar, with a prototype on a private Ethereum chain

3 cryptocurrency regulation themes for 2020

OpenSea’s compendium of NFT knowledge

A newsletter to keep track of the NFT space

Initial Sardine Coin Offering

NBA guard Spencer Dinwiddie’s tokenized contract launches January 13

Progressive decentralization: a dapp business plan

I wrote a Twitter thread about Dinwiddie’s tokens. I’m curious how they do, given that the NBA made him change plans and just do a bond. I haven’t seen the prospectus, and press accounts have conflicting information, but it appears that the annual interest rate is 14%. In that case, I wonder who puts fiat in? I doubt anyone would sell their ETH at these prices for a 14% USD return. There is some risk, but it basically requires Dinwiddie to lose the plot and get arrested or fail a drug test. So it seems like quite a good return given the low risk (assuming it is indeed 14%).

That Sardine token is wild, but not crazy. I won’t even try to summarize it, but perhaps even weirder is that they announced it at CES. Is your average CES attendee going to have any idea what ETH is during the depths of cryptowinter? Unclear to me.

Lots of good stuff in this section this week. Jesse Walden wrote up the “get product market fit, then community, then decentralize” which has worked for a bunch of DeFi protocols.

It’s also the opposite of what folks like Augur and Melon have done. So far the “decentralize later” camp has gotten more traction, but I personally don’t consider this argument decided at all, especially if you are in heavily regulated industries like Augur or Melon where regulators might put you into bankruptcy just because they got up on the wrong side of the bed that morning.

I also think Augur could be a sleeper hit for 2020, and I think Melon’s best days are still in front of it.

General

Andrew Keys: 20 blockchain predictions for 2020

Haseeb Qureshi’s intro to cryptocurrency class for programmers

Ben Edgington’s BLS12-381 for the rest of us

Visualizing efficient Merkle trees for zero knowledge proofs

Eli Ben-Sasson’s catalog of the Cambrian zero knowledge explosion

Bounty for breaking RSA assumptions

It’s amusing how heavy crypto stuff often gets put into this section. The cryptography stuff is all super interesting, but not sure I can provide much more context for it.

Juxtaposed with intense cryptography in this section is Andrew Keys’ annual new year predictions. Always a fun read.

Haseeb’s class looked great. A place to pick up all the knowledge that has taken some of us years to piece together, blog post by blog post.

Full Week in Ethereum News issue.

History, Waves and Winters in AI – Hacker Noon

“I don’t see that human intelligence is something that humans can never understand.”

~ John McCarthy, March 1989

Is it? Credits: DM Community

This post is highly motivated by Kai-Fu Lee’s talk on “Where Will Artificial Intelligence Take us?”

Here is the link for those who would rather listen. In case you like to read, I have (lightly) edited his remarks below. All credit to Kai-Fu Lee, all blame to me, etc.

Readers can jump to the next sections if their minds echo “C’mon, I know this!”. I will try to explain everything succinctly. Every link offers a different insight into the topic (except the usual wiki) so give them a try!

Introduction

Buzzwords

Artificial Super Intelligence (ASI) One of AI’s leading figures, Nick Bostrom has defined super intelligence as “an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills.” A machine capable of constantly learning and improving itself could be unstoppable. Artificial Super intelligence ranges from a computer that’s just a little smarter than a human to one that’s trillions of times smarter — across the board. ASI is the reason the topic of AI is such a spicy meatball and why the words “immortality” and “extinction” will both appear in these posts multiple times. Think about HAL 9000 !

Artificial General Intelligence (AGI) Sometimes referred to as Strong AI, or Human-Level AI, Artificial General Intelligence refers to a computer that is as smart as a human across the board — a machine that can perform any intellectual task that a human being can. Professor Linda Gottfredson describes intelligence as “A very general mental capability that, among other things, involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience.” AGI would be able to do all of those things as easily as you can.

Artificial Intelligence (AI) AI is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.

Intelligent Augmentation (IA) Computation and data are used to create services that augment human intelligence and creativity. A search engine can be viewed as an example of IA (it augments human memory and factual knowledge), as can natural language translation (it augments the ability of a human to communicate).

Machine Learning (ML) Machine learning is the science of getting computers to act without being explicitly programmed. For instance, instead of coding rules and strategies of chess into a computer, the computer can watch a number of chess games and learn by example. Machine learning encompasses a wide variety of algorithms.

Deep Learning (DL) Deep learning refers to many-layered neural networks, one specific class of machine learning algorithms. Deep learning is achieving unprecedented state-of-the-art results, by an order of magnitude, in nearly all fields to which it’s been applied so far, including image recognition, voice recognition, and language translation.

Big Data Big data is a term that describes the large volume of data — both structured and unstructured — that inundates a business on a day-to-day basis. This was an empty marketing term that falsely convinced many people that the size of your data is what matters. It also cost companies huge sums of money on Hadoop clusters they didn’t actually need.

Only some are mentioned! Credits: Nvidia
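To make the Machine Learning entry above a little more concrete, here is a minimal sketch of "learning by example" instead of coding the rule by hand. It trains a tiny two-input perceptron on the four rows of the logical AND truth table. The function names, the learning rate and the choice of AND as the task are my own illustrative assumptions, not something taken from the talk.

```python
def predict(weights, bias, inputs):
    """Fire (1) if the weighted sum of the inputs is positive, else 0."""
    total = weights[0] * inputs[0] + weights[1] * inputs[1] + bias
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1):
    """Adjust weights from labelled examples using the perceptron update rule."""
    weights = [0, 0]
    bias = 0
    for _ in range(epochs):
        for inputs, label in examples:
            error = label - predict(weights, bias, inputs)
            # Nudge each weight in the direction that reduces the error.
            weights[0] += learning_rate * error * inputs[0]
            weights[1] += learning_rate * error * inputs[1]
            bias += learning_rate * error
    return weights, bias


# Labelled examples of logical AND: nobody writes the AND rule into the code.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

w, b = train_perceptron(AND_EXAMPLES)
for inputs, label in AND_EXAMPLES:
    print(inputs, "->", predict(w, b, inputs), "(expected", label, ")")
```

The program is never told what AND means; it only sees inputs paired with the desired output and corrects itself after each mistake.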

History

Let me start with a story.

Michael Jordan explains in his talk at SysML 18 how the term “AI” was coined, and how the story is a little different from the one often told. It goes like this: “It wasn’t Minsky, Papert, Newell all sitting at a conference. It was McCarthy, who arrives at MIT and says, ‘I’m gonna work on intelligence in computing,’ and they say, ‘Well, isn’t that cybernetics? We already have Norbert Wiener, who does that.’ He says, ‘No, no, it’s different.’ And so, how is it different? Well, he couldn’t really convince people it was based on logic rather than control theory, signal processing, optimization. So he had to give it a new buzzword, and he invented ‘Artificial Intelligence’.”

“AI is a general term that refers to hardware or software that exhibits behavior which appears intelligent.” AI is designed around how people think. It’s an emulation of human intelligence.

The field of AI has gone through phases of rapid progress and hype in the past, quickly followed by a cooling in investment and interest, often referred to as “AI winters”.

Waves and Winters

First Wave (1956–1974)

The programs that were developed during this time were simply astonishing. Computers were solving algebra word problems (Daniel Bobrow’s program STUDENT), proving theorems in geometry (Herbert Gelernter’s Geometry Theorem Prover), solving symbolic integration problems (SAINT, written by Minsky’s student James Slagle) and learning to speak English (Terry Winograd’s SHRDLU). The perceptron, a form of neural network, was introduced in 1958 by Frank Rosenblatt, who predicted that the “perceptron may eventually be able to learn, make decisions, and translate languages” (spoiler alert: it did).

First Winter (1974–1980)

In the 1970s, AI was subject to critiques and financial setbacks. AI researchers had failed to appreciate the difficulty of the problems they faced. Their tremendous optimism had raised expectations impossibly high, and when the promised results failed to materialize, funding for AI disappeared. In the early seventies, the capabilities of AI programs were limited. Even the most impressive could only handle trivial versions of the problems they were supposed to solve; all the programs were, in some sense, “toys”.

Second Wave (1980–1987)

The belief at one point was that we could take human intelligence and implement it as rules that would let machines act as people do. We told them the steps we go through in our thoughts. For example, if I’m hungry I go out and eat; if I have spent a lot of money this month I go to a cheaper place. A cheaper place implies McDonald’s, and at McDonald’s I avoid fried foods, so I just get a hamburger. That “if-then-else” is how we think we reason, and that is how the first generation of so-called expert systems, or symbolic AI, proceeded. That was the first wave that got people excited, thinking we could write rules. Another encouraging event in the early 1980s was the revival of connectionism in the work of John Hopfield and David Rumelhart.
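The “if-then-else” style of reasoning in the hungry-diner example can be sketched as a few hand-written rules. This is only an illustration of the idea, not how any real expert system was built; the function name, its parameters and the fallback branches are hypothetical.

```python
def choose_meal(hungry, spent_a_lot_this_month):
    """Hand-written rules mirroring the diner example in the text."""
    if not hungry:
        return "stay in"  # hypothetical fallback, not from the text

    # Rule: if I am hungry, I go out and eat.
    # Rule: if I have spent a lot this month, I go to a cheaper place.
    if spent_a_lot_this_month:
        place = "McDonald's"  # rule: cheaper place implies McDonald's
    else:
        place = "a regular restaurant"  # hypothetical default

    # Rule: at McDonald's I avoid fried foods, so I just get a hamburger.
    if place == "McDonald's":
        return "hamburger at McDonald's"
    return "dinner at " + place


print(choose_meal(hungry=True, spent_a_lot_this_month=True))
print(choose_meal(hungry=True, spent_a_lot_this_month=False))
```

Every new situation (a closed restaurant, a dietary change, a friend who wants pizza) needs another hand-written branch, which hints at why this approach runs into trouble as the number of rules grows.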

Second Winter (1987–1993)

Expert systems, or symbolic AI with handwritten “if-then-else” rules, were limiting because when we tried to write down the rules there were just too many. Douglas Lenat, a professor at MCC, proceeded to hire hundreds of people to write down all the rules they could think of in a project called Cyc, thinking that one day they would be done and that would be the brain. But there was too much knowledge in the world, and its interactions were too complex. We really didn’t know how to build such rule-based systems, and the approach failed completely, resulting in only a handful of somewhat useful applications. That led everybody to believe that AI was doomed and not worth pursuing. Expert systems could not scale, and in fact could never scale, and our brains probably didn’t work the way we thought they did. To simplify the articulation of our decision process, we used “if-then-else” as a language that people understood, but our brains were actually much more complex than that.

Third Wave (1993–present)

The field of AI, now more than half a century old, finally achieved some of its oldest goals. In 2005, a Stanford robot won the DARPA Grand Challenge by driving autonomously for 131 miles along an unrehearsed desert trail. Two years later, a team from CMU won the DARPA Urban Challenge by autonomously navigating 55 miles in an urban environment while responding to traffic hazards and obeying all traffic laws. In February 2011, in a Jeopardy! quiz show exhibition match, IBM’s question answering system, Watson, defeated the two greatest Jeopardy! champions.

Starting in the early 2010s, huge amounts of training data together with massive computational power (by some of the big players) prompted a re-evaluation of some particular 30-year-old neural network algorithms. To the surprise of many researchers this combination, aided by new innovations, managed to rapidly catapult these ‘Deep Learning’ systems way past the performance of traditional approaches in several domains — particularly in speech and image recognition, as well as most categorization tasks.

In DL/ML the idea is to provide the system with training data to enable it to ‘program’ itself: no human programming required! In laboratories all around the world, little (narrow) AIs are springing to life. Some play chess better than any human ever has. Some are learning to drive a million cars a billion miles while saving more lives than most doctors or EMTs will over their entire careers. Some will make sure your dishes are dry and spot-free, or that your laundry is properly fluffed and without a wrinkle. Countless numbers of these bits of intelligence are being built and programmed; they are only going to get smarter and more pervasive; they’re going to be better than us, but they’ll never be just like us.

Deep learning is responsible for today’s explosion of AI. The field gave birth to many buzzwords, like CNN, LSTM, GRU, RNN, GAN, ___net, deep___, ___GAN, etc. It also reached fields like RL and NLP, and produced very interesting achievements like AlphaGo, AlphaZero, self-driving cars and chatbots; it may require another post just to cover them. It has given computers extraordinary powers, like the ability to recognize spoken words almost as well as a person could, a skill too complex to code into the machine by hand. Deep learning has transformed computer vision and dramatically improved machine translation. It is now being used to guide all sorts of key decisions in medicine, finance, manufacturing and beyond.

We don’t (and can’t) understand how machine learning instances operate in any symbolic (as opposed to reductive) sense. Equally, we don’t know what structures and processes in our brains enable us to process symbols in intelligent ways: to abstract, communicate and reason through symbols, whether they be words or mathematical variables, and to do so across domains and problems. Moreover, we have no convincing path for progress from the first type of system, machine learning, to the second, the human brain. It seems, in other words — notwithstanding genuine progress in machine learning — that it is another dead end with respect to intelligence: the third AI winter will soon be upon us. There’s already an argument that being able to interrogate an AI system about how it reached its conclusions is a fundamental legal right. There’s too much money behind machine learning for the third winter to occur in 2018, but it won’t be long before the limited nature of AI advances sinks in.

In short, this is how it happened Credits: matter2media

What’s Next?

Our lives are bathed in data: from recommendations about whom to “follow” or “friend” to data-driven autonomous vehicles.

We are living in the age of big data, and with every link we click, every message we send, and every movement we make, we generate torrents of information.

In the past two years, the world has produced more than 90 percent of all the digital data that has ever been created. New technologies churn out an estimated 2.5 quintillion bytes per day. Data pours in from social media and cell phones, weather satellites and space telescopes, digital cameras and video feeds, medical records and library collections. Technologies monitor the number of steps we walk each day, the structural integrity of dams and bridges, and the barely perceptible tremors that indicate a person is developing Parkinson’s disease.

Data in the age of AI has been described in any number of ways: the new gold, the new oil, the new currency and even the new bacon. By now, everyone gets it: Data is worth a lot to businesses, from auditing to e-commerce. But it helps to understand what it can and cannot do, a distinction many in the business world still must come to grips with.

“All of machine learning is about error correction.”

-Yann LeCun, Chief AI scientist, Facebook

Today’s AI, which we call Weak AI, is really an optimizer that learns from data in one domain to do one thing extremely well. It handles a very vertical, single task: you cannot teach it many things or common sense, you cannot give it emotion, and it has no self-awareness and therefore no desire or even an understanding of how to love or dominate. It’s great as a tool for adding and creating value, and it will also replace many mundane human tasks.

If we look at the history of AI, a deep-learning type of breakthrough has really happened just once in 60 years. We cannot predict that we’re going to have a breakthrough next year and another the month after that. Exponential adoption of applications is now happening, which is great, but exponential invention is a ridiculous concept.

We are seeing speech-to-speech translation that is as good as an amateur translator now, though not yet at a professional level, as clearly explained by Douglas Hofstadter in this article on the Atlantic. Possibly, in the future, we won’t have to learn foreign languages; we’ll have an earpiece that translates what other people say, which is a wonderful addition in convenience, productivity, value creation and time saved. But at the same time we have to be cognizant that translators will be out of jobs.

Looking back at the Industrial Revolution, we see it as having done a lot of good and created a lot of jobs, but the process was painful and some of the tactics were questionable, and we’re going to see all those issues come up again, and worse, in the AI revolution. In the Industrial Revolution, many people were in fact replaced and displaced; their jobs were gone and they had to live in destitution, and although overall employment and wealth grew, the wealth was made by a small number of people. Fortunately, the Industrial Revolution lasted a long time and was gradual, so governments could deal with one group at a time as its jobs were displaced.

The Industrial Revolution also perpetuated a certain work ethic: the capitalists wanted the rest of the world to think that if I work hard, even at a routine, repetitive job, I will be compensated and will have a certain degree of wealth that gives me dignity and self-actualization, so that people see someone who works hard, has a house and is a good citizen of society. That surely isn’t how we want to be remembered as mankind, but it is how most people on earth understand their current existence, and that is extremely dangerous now, because AI is going to take most of these boring, routine, mundane, repetitive jobs, and people will lose them. The people losing their jobs have found the meaning of their existence in that work ethic: working hard, getting that house, providing for the family.

Understanding that these AI tools do repetitive tasks tells us that doing repetitive tasks can’t be what makes us human, and AI’s arrival will at least remove what cannot be our reason for existence on this earth. A potential reason for our existence is that we create: we invent things, we celebrate creation, and we are very creative in the scientific process, in curing diseases, in writing books, in telling stories, and so on. This is the creativity we should celebrate, and it is perhaps what makes us human.

We need AI. It is the ultimate accelerator of a human’s capacity to fulfill their own potential. Evolution is not assembling. The popular claim that we only utilize about 10 percent of our total brain function is a myth, but think about the additional brain functioning potential we will have as AI continues to develop, improve, and advance.

As computer scientist Donald Knuth puts it, “AI has by now succeeded in doing essentially everything that requires ‘thinking’ but has failed to do most of what people and animals do ‘without thinking.’”

To put things into perspective, AI can and will expand our neocortex and act as an extension to our 300 million brain modules. According to Ray Kurzweil, American author, computer scientist, inventor and futurist, “The future human will be a biological and non-biological hybrid.”

Further “Very very very Interesting” Reads

Geoffrey Hinton [https://torontolife.com/tech/ai-superstars-google-facebook-apple-studied-guy/]

Yann LeCun [https://www.forbes.com/sites/insights-intelai/2018/07/17/yann-lecun-an-ai-groundbreaker-takes-stock/]

Yoshua Bengio [https://www.cifar.ca/news/news/2018/08/01/q-a-with-yoshua-bengio]

Ian Goodfellow GANfather [https://www.technologyreview.com/s/610253/the-ganfather-the-man-whos-given-machines-the-gift-of-imagination/]

AI Conspiracy: The ‘Canadian Mafia’ [https://www.recode.net/2015/7/15/11614684/ai-conspiracy-the-scientists-behind-deep-learning]

Douglas Hofstadter [https://www.theatlantic.com/magazine/archive/2013/11/the-man-who-would-teach-machines-to-think/309529/]

Marvin Minsky [https://www.space.com/32153-god-artificial-intelligence-and-the-passing-of-marvin-minsky.html]

Judea Pearl [https://www.theatlantic.com/technology/archive/2018/05/machine-learning-is-stuck-on-asking-why/560675/]

John McCarthy [http://jmc.stanford.edu/artificial-intelligence/what-is-ai/index.html]

Prof. Nick Bostrom — Artificial Intelligence Will be The Greatest Revolution in History [https://www.youtube.com/watch?v=qWPU5eOJ7SQ]

François Chollet [https://medium.com/@francois.chollet/the-impossibility-of-intelligence-explosion-5be4a9eda6ec]

Andrej Karpathy [https://medium.com/@karpathy/software-2-0-a64152b37c35]

Walter Pitts [http://nautil.us/issue/21/information/the-man-who-tried-to-redeem-the-world-with-logic]

Machine Learning [https://techcrunch.com/2016/10/23/wtf-is-machine-learning/]

Neural Networks [https://physicsworld.com/a/neural-networks-explained/]

Intelligent Machines [https://www.quantamagazine.org/to-build-truly-intelligent-machines-teach-them-cause-and-effect-20180515/]

Self-Conscious AI [https://www.wired.com/story/how-to-build-a-self-conscious-ai-machine/]

The Quartz guide to artificial intelligence: What is it, why is it important, and should we be afraid? [https://qz.com/1046350/the-quartz-guide-to-artificial-intelligence-what-is-it-why-is-it-important-and-should-we-be-afraid/]

The Great A.I. Awakening [https://www.nytimes.com/2016/12/14/magazine/the-great-ai-awakening.html]

China’s AI Awakening [https://www.technologyreview.com/s/609038/chinas-ai-awakening]

AI Revolution [https://getpocket.com/explore/item/the-ai-revolution-the-road-to-superintelligence-823279599]

Artificial Intelligence — The Revolution Hasn’t Happened Yet [https://medium.com/@mijordan3/artificial-intelligence-the-revolution-hasnt-happened-yet-5e1d5812e1e7]

AI’s Language Problem [https://www.technologyreview.com/s/602094/ais-language-problem/]

AI’s Next Great Challenge: Understanding the Nuances of Language [https://hbr.org/2018/07/ais-next-great-challenge-understanding-the-nuances-of-language]

Dark secret at the heart of AI [https://www.technologyreview.com/s/604087/the-dark-secret-at-the-heart-of-ai/]

How Frightened Should We Be of A.I.? [https://www.newyorker.com/magazine/2018/05/14/how-frightened-should-we-be-of-ai]

The Real Threat of Artificial Intelligence [https://www.nytimes.com/2017/06/24/opinion/sunday/artificial-intelligence-economic-inequality.html]

Artificial Intelligence’s ‘Black Box’ Is Nothing to Fear [https://www.nytimes.com/2018/01/25/opinion/artificial-intelligence-black-box.html]

Tipping point for Artificial Intelligence [https://www.datanami.com/2018/07/20/the-tipping-point-for-artificial-intelligence/]

AI Winter isn’t coming [https://www.technologyreview.com/s/603062/ai-winter-isnt-coming/]

AI winter is well on its way [https://blog.piekniewski.info/2018/05/28/ai-winter-is-well-on-its-way/]

AI is in bubble [https://www.theglobeandmail.com/business/commentary/article-artificial-intelligence-is-in-a-bubble-heres-why-we-should-build-it/]

Sidechains: Why These Researchers Think They Solved a Key Piece of the Puzzle

New blockchains are born all the time. Bitcoin was the lone blockchain for years, but now there are hundreds. The problem is, if you want to use the features offered on another blockchain, you have to buy the tokens for that other blockchain.

But all that may soon change. One developing technology called sidechains promises to make it easier to move tokens across blockchains and, as a result, open the doors to a world of possibilities, including building bridges to the legacy financial systems of banks.

In October 2017, Aggelos Kiayias, professor at the University of Edinburgh and chief scientist at blockchain research and development company IOHK; Andrew Miller, professor at the University of Illinois at Urbana-Champaign; and Dionysis Zindros, researcher at the University of Athens, released the paper “Non-Interactive Proofs of Proof-of-Work” (NiPoPoW), introducing a critical piece to the sidechains puzzle that had been missing for three years. This is the story of how they got there.

But, first, what exactly is a sidechain?

Same Coin, Different Blockchain

A sidechain is a technology that allows you to move your tokens from one blockchain to another, use them on that other blockchain and then move them back at a later point in time, without the need for a third party.

In the past, the parent blockchain has typically been Bitcoin, but a parent chain could be any blockchain. Also, when a token moves to another blockchain, it should maintain its same value. In other words, a bitcoin on an Ethereum sidechain would remain a bitcoin.

The biggest advantage of sidechains is that they would allow users to access a host of new services. For instance, you could move bitcoin to another blockchain to take advantage of privacy features, faster transaction speeds and smart contracts.

Sidechains have other uses, too. A sidechain could offer a more secure way to upgrade a protocol, or it could serve as a type of security firewall, so that in the event of a catastrophic disaster on a sidechain, the main chain would remain unaffected. “It is a kind of limited liability,” said Zindros in a video explaining how the technology works.

Finally, if banks were to create their own private blockchain networks, sidechains could enable communications with those networks, allowing users to issue and track shares, bonds and other assets.

Early Conversations

Dialogue about sidechains first appeared in Bitcoin chat rooms around 2012, when Bitcoin Core developers were thinking of ways to safely upgrade the Bitcoin protocol.

One idea was for a “one-way peg,” where users could move bitcoin to a separate blockchain to test out a new client; however, once those assets were moved, they could not be moved back to the main chain.

“I was thinking of this as a software engineering tool that could be used to make widespread changes,” Adam Back, now CEO at blockchain development company Blockstream, said in an interview with Bitcoin Magazine. “You could say, we are going to make a new version [of Bitcoin], and we think it will be ready in a year, but in the meantime, you can opt in early and test it.”

According to Back, sometime in the following year, on the Bitcoin IRC channel, Bitcoin Core developer Greg Maxwell suggested an idea for a “two-way peg,” where value could be transferred to the alternative chain and then back to Bitcoin at a later point.

A two-way peg addressed another growing concern at the time. Alternative coins, like Litecoin and Namecoin, were becoming increasingly popular. The fear was these “altcoins” would dilute the value of bitcoin. It made sense, Bitcoin Core developers thought, to keep bitcoin as a type of reserve currency, and relegate new features to sidechains. That way, “if you wanted to use a different feature, you wouldn’t have to buy a speculative asset,” said Back.

To turn the concept of sidechains into a reality, Back, along with Maxwell and a few other Bitcoin Core developers, formed Blockstream in 2014. In October that year, the group released “Enabling Blockchain Innovations with Pegged Sidechains,” a paper describing sidechains at a high level. Miller appears as a co-author on that paper as well.

How Sidechains Work

One important component of sidechains is a simplified payment verification (SPV) proof that shows that tokens have been locked up on one chain so validators can safely unlock an equivalent value on the alternative chain. But to work for sidechains, an SPV proof has to be small enough to fit into a single coinbase transaction, the transaction that rewards a miner with new coins. (Not to be confused with the company Coinbase.)
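The inclusion half of an SPV-style proof can be sketched in a few lines. The example below is a minimal illustration, not the construction from the Blockstream paper: it assumes Bitcoin-style double SHA-256 Merkle trees, and the helper names (`merkle_root`, `merkle_branch`, `verify_branch`) are hypothetical. It shows how a verifier holding only a block header's Merkle root can confirm that a transaction is in that block. A real SPV proof would also have to show that the header sits on a chain backed by enough proof-of-work.

```python
import hashlib


def double_sha256(data: bytes) -> bytes:
    """Bitcoin-style hash: SHA-256 applied twice."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()


def _next_level(level: list[bytes]) -> list[bytes]:
    """Hash adjacent pairs; an odd-sized level repeats its last node."""
    if len(level) % 2 == 1:
        level = level + [level[-1]]
    return [double_sha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]


def merkle_root(leaves: list[bytes]) -> bytes:
    """Fold a non-empty list of leaf hashes down to a single root."""
    level = list(leaves)
    while len(level) > 1:
        level = _next_level(level)
    return level[0]


def merkle_branch(leaves: list[bytes], index: int) -> list[bytes]:
    """Collect the sibling hashes needed to rebuild the root from one leaf."""
    branch = []
    level = list(leaves)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        branch.append(level[index ^ 1])  # sibling of the current node
        level = _next_level(level)
        index //= 2
    return branch


def verify_branch(leaf: bytes, index: int, branch: list[bytes], root: bytes) -> bool:
    """Recompute the root from a leaf and its branch, then compare."""
    current = leaf
    for sibling in branch:
        if index % 2 == 0:
            current = double_sha256(current + sibling)
        else:
            current = double_sha256(sibling + current)
        index //= 2
    return current == root


# Five made-up transaction hashes standing in for a block's contents.
txids = [double_sha256(f"tx-{i}".encode()) for i in range(5)]
root = merkle_root(txids)
branch = merkle_branch(txids, 3)
print(verify_branch(txids[3], 3, branch, root))  # True
```

The branch grows with the logarithm of the number of transactions, which is why this part of the proof is already small. The hard part, as the rest of the story shows, is compressing the chain of block headers.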

At the time the Blockstream researchers released their paper, they knew they needed a compressed SPV proof to get sidechains to work, but they had not yet developed the cryptography behind it. So they outlined general, high-level ideas.

The Blockstream paper describes two types of two-way pegs: a symmetric two-way peg, where both chains are independent with their own mining; and an asymmetric two-way peg, where sidechain miners are full validators of the parent chain.

In a symmetric two-way peg, a user sends her bitcoins to a special address. Doing so locks up the funds on the Bitcoin blockchain. That output remains locked for a contest period of perhaps six blocks (about one hour at Bitcoin’s roughly 10-minute block interval) to confirm the transaction has gone through, and then an SPV proof is created to send to the sidechain.

At that point, a corresponding transaction appears on the sidechain with the SPV proof, verifying that money has been locked up on the Bitcoin blockchain, and then coins of equal value are unlocked on the sidechain.

Coins are spent and change hands and, at a later point, are sent back to the main chain. When the coins are returned to the main chain, the process repeats. They are sent to a locked output on the sidechain, a waiting period goes by, and an SPV proof is created and sent back to the main blockchain to unlock coins on the main chain.
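The round trip described above can be walked through with a toy model. This is only a sketch under loud assumptions: `ToyChain` and its methods are hypothetical, the "proof" is a plain dictionary with no cryptography behind it, and the six-block contest period is just the article's illustrative figure. It shows the order of operations (lock, wait, prove, unlock), not a secure peg.

```python
from dataclasses import dataclass, field

CONTEST_PERIOD_BLOCKS = 6  # illustrative value taken from the article's example


@dataclass
class ToyChain:
    name: str
    height: int = 0
    balances: dict = field(default_factory=dict)  # owner -> spendable coins
    locked: dict = field(default_factory=dict)    # lock_id -> (amount, height when locked)
    redeemed: set = field(default_factory=set)    # proofs already accepted on this chain

    def mine(self, blocks: int = 1) -> None:
        """Advance the chain by a number of blocks."""
        self.height += blocks

    def lock(self, lock_id: str, owner: str, amount: int) -> None:
        """Send coins to a locked output so they cannot be spent on this chain."""
        if self.balances.get(owner, 0) < amount:
            raise ValueError("insufficient funds")
        self.balances[owner] -= amount
        self.locked[lock_id] = (amount, self.height)

    def make_proof(self, lock_id: str) -> dict:
        """Stand-in for an SPV proof; only available after the contest period."""
        amount, locked_at = self.locked[lock_id]
        if self.height - locked_at < CONTEST_PERIOD_BLOCKS:
            raise ValueError("contest period has not elapsed")
        return {"source": self.name, "lock_id": lock_id, "amount": amount}

    def unlock(self, proof: dict, recipient: str) -> None:
        """Release coins of equal value; each proof is accepted only once."""
        key = (proof["source"], proof["lock_id"])
        if key in self.redeemed:
            raise ValueError("proof already redeemed")
        self.redeemed.add(key)
        self.balances[recipient] = self.balances.get(recipient, 0) + proof["amount"]


main = ToyChain("main", balances={"alice": 5})
side = ToyChain("side")

# Main chain -> sidechain
main.lock("lock-1", "alice", 2)
main.mine(CONTEST_PERIOD_BLOCKS)
side.unlock(main.make_proof("lock-1"), "alice")

# Sidechain -> main chain: the same steps in the other direction
side.lock("lock-2", "alice", 2)
side.mine(CONTEST_PERIOD_BLOCKS)
main.unlock(side.make_proof("lock-2"), "alice")

print(main.balances, side.balances)  # {'alice': 5} {'alice': 0}
```

In a real design, `unlock` would verify the proof against the other chain's proof-of-work instead of trusting a dictionary, which is exactly the step that needed a compact SPV proof.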

In an asymmetric two-way peg, the process is slightly different. The transfer from the parent chain to the sidechain does not require an SPV proof, because validators on the sidechain are also aware of the state of the parent chain. An SPV proof is still needed, however, when the coins are returned to the parent chain.
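The difference between the two peg types comes down to which transfers need a proof. A small lookup makes that explicit. The enum and function names here are hypothetical, chosen only for illustration.

```python
from enum import Enum


class PegType(Enum):
    SYMMETRIC = "symmetric"
    ASYMMETRIC = "asymmetric"


class Direction(Enum):
    PARENT_TO_SIDECHAIN = "parent_to_sidechain"
    SIDECHAIN_TO_PARENT = "sidechain_to_parent"


def needs_spv_proof(peg: PegType, direction: Direction) -> bool:
    """Return True when the receiving chain must be shown an SPV proof.

    In an asymmetric peg, sidechain validators already follow the parent
    chain, so transfers into the sidechain need no proof.
    """
    if peg is PegType.ASYMMETRIC and direction is Direction.PARENT_TO_SIDECHAIN:
        return False
    return True


for peg in PegType:
    for direction in Direction:
        print(peg.value, direction.value, needs_spv_proof(peg, direction))
```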

The Search for a Compact Proof

In a sidechain, a compact SPV proof needs to contain a compressed version of all the block headers in the chain where funds are locked up, from the genesis block through the contest period, as well as transaction data and some other data. In this way, an SPV proof can also be thought of as a “proof of proof-of-work” for a particular output.
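Some rough arithmetic shows why the headers have to be compressed. A serialized Bitcoin block header is 80 bytes, so an uncompressed list of every header grows linearly with the chain. The chain height used below is a hypothetical round number, not a figure from the article.

```python
HEADER_BYTES = 80        # size of one serialized Bitcoin block header
BLOCKS_PER_DAY = 144     # one block roughly every 10 minutes


def full_header_chain_bytes(height: int) -> int:
    """Bytes needed to ship every header from genesis (height 0) to `height`."""
    return HEADER_BYTES * (height + 1)


def yearly_growth_bytes() -> int:
    """Extra header bytes added in a 365-day year at the nominal block rate."""
    return HEADER_BYTES * BLOCKS_PER_DAY * 365


ASSUMED_HEIGHT = 500_000  # hypothetical chain height, for illustration only

print(full_header_chain_bytes(ASSUMED_HEIGHT))  # 40000080 bytes, about 40 MB
print(yearly_growth_bytes())                    # 4204800 bytes, about 4.2 MB per year
```

Tens of megabytes is far too much to carry inside a single transaction, hence the search for a proof that grows much more slowly than the chain itself.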

Inspiration for the compact SPV proof comes from a linked-list-like structure known as a “skip list,” introduced by William Pugh around 1990. In applying this structure to a compact SPV proof, the trick was in finding a way to skip block headers while still maintaining a high level of security so that an adversary would not be able to fake a proof.
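One way to picture the skip-list idea is to grade each block by how far its hash falls below the mining target. A block whose hash is at most target / 2^μ is called level μ here. Such blocks turn up about once in every 2^μ blocks, so a list of only the high-level blocks acts like an express lane through the chain. The sketch below is a toy illustration of that grading, with hypothetical names and a toy target under which every hash counts as valid. It is not the NiPoPoW construction, which adds further rules to keep an adversary from faking a proof.

```python
import hashlib

TOY_TARGET = 2**256 - 1  # toy assumption: every 256-bit hash meets the target


def block_level(block_hash: int, target: int = TOY_TARGET) -> int:
    """Largest mu such that block_hash <= target / 2**mu."""
    if block_hash > target:
        raise ValueError("hash does not meet the target")
    block_hash = max(block_hash, 1)  # keeps the loop finite for a zero hash
    mu = 0
    while block_hash <= (target >> (mu + 1)):
        mu += 1
    return mu


def skip_view(levels: list[int], mu: int) -> list[int]:
    """Indices of the blocks that sit at level mu or higher."""
    return [i for i, lvl in enumerate(levels) if lvl >= mu]


# Stand-in block hashes: SHA-256 of a counter, not real mined headers.
hashes = [
    int.from_bytes(hashlib.sha256(i.to_bytes(4, "big")).digest(), "big")
    for i in range(1024)
]
levels = [block_level(h) for h in hashes]

# Each higher level keeps roughly half as many blocks as the one below it.
for mu in range(4):
    print(mu, len(skip_view(levels, mu)))
```

Because a level-μ block stands in for about 2^μ blocks' worth of expected work, a short list of them can hint at the same total work as the full chain. Making that hint hard to forge is where the difficulty lies.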

In working through the problem, Blockstream showed an early draft of its sidechains paper to Miller, who had been mulling over compact SPVs for a few years already.

In August 2012, in a post on a BitcoinTalk forum titled “The High-Value-Hash Highway,” Miller described an idea for a “merkle skip list” that a Bitcoin light client could use to quickly determine the longest chain and begin using it. In that post, he described the significance of the data structure as “absolutely staggering.”

When Miller read through the Blockstream draft, he spotted a vulnerability in the compact SPV proof described in the paper. Discussions ensued, but they “couldn’t find a way to solve that problem without compromising efficiency,” Miller said.

Miller’s non-trivial contributions to the Blockstream paper ended up being a few paragraphs in Appendix B that describe the challenges in creating a compact SPV proof.

It should “be possible to greatly compress a list of headers while still proving the same amount of work,” the section reads, but “optimising these tradeoffs and formalising the security guarantees is out of scope for this paper and the topic of ongoing work.”

That ongoing work remained stuck for three years.

Compact SPV

During that time, researchers at IOHK began taking a more serious interest in sidechains. Plans were taking shape for Cardano, a new proof-of-stake blockchain that IOHK had been contracted to build.

Cardano would consist of two layers: a settlement layer, launched in September 2017, where the money supply would be kept, and a smart contract layer. Those two layers would be two sidechain-enabled blockchains. In this way, the settlement could remain simple and secure from any attacks that might occur on the smart contract layer. But if IOHK was to get Cardano to work as intended, it needed to solve sidechains.

In February 2016, Kiayias, then a professor at the University of Athens, and two of his students, Nikolaos Lamprou and Aikaterini-Panagiota Stouka, released “Proofs of Proofs of Work with Sublinear Complexity” (PoPoW).

The paper was the first to formally address a compact SPV proof. However, the proof described in the paper was interactive, whereas to work for sidechains it needed to be non-interactive.

In an interactive proof, the prover and the verifier enter into a back-and-forth conversation, meaning there could be more than one round of messaging. In contrast, a non-interactive proof would be a simple, short string of text that would fit neatly into a single transaction on the blockchain.

The PoPoW paper was presented at BITCOIN’16, a workshop affiliated with the International Financial Cryptography Association’s (IFCA) Financial Cryptography and Data Security conference. Miller, who was at the conference, approached Kiayias and shared an idea for making the protocol non-interactive.

It was a “nice observation,” Kiayias told Bitcoin Magazine, but making the proof secure was “not obvious at all” and would require significant work.

Zindros, who had just started working on his PhD under Kiayias, was also at the conference, and he needed a topic for his thesis. Kiayias saw a good fit, “so we pressed on, the three of us, and adapted the PoPoW protocol and its proof of security to the non-interactive setting,” Kiayias said.

In October 2016, Kiayias officially joined IOHK, and a year later, Kiayias, Miller and Zindros released “Non-Interactive Proofs of Proof-of-Work,” introducing a compact SPV proof five years after sidechains had first been talked about on Bitcoin forums.

“If it were interactive, I don’t know if it would have worked; with a non-interactive proof, it is really smooth,” Zindros told Bitcoin Magazine.

More Work to Be Done

Even with NiPoPoW, sidechains are still not fully specified. Several questions remain: How small can the proofs be made? After a transaction is locked up on one chain, how much time needs to pass before it can be spent on the other? And will it be possible to move a token from a sidechain directly to another sidechain?

“A lot of theory still needs to be defined,” IOHK CEO Charles Hoskinson said in speaking to Bitcoin Magazine.

Also, while NiPoPoW is designed to work for proof-of-work blockchains, some believe that if blockchains are to take their place in the world on a grand scale, the future rests in proof-of-stake protocols like Ouroboros, Algorand or Snow White, which promise to be more energy-efficient than Bitcoin.

In particular, if Cardano, which is based on Ouroboros, is to work according to plan, IOHK researchers still need to discover a non-interactive proof of proof-of-stake (NiPoPoS).

Hoskinson is confident. “We can definitely do that,” he said. “We can definitely have a NiPoPoS. The question is how many megabytes or kilobytes is it going to be? Can we bring it down to 100 KB? That is really the question.”

This article originally appeared on Bitcoin Magazine.

from InvestmentOpportunityInCryptocurrencies via Ella Macdermott on Inoreader https://bitcoinmagazine.com/articles/sidechains-why-these-researchers-think-they-solved-key-piece-puzzle/
