#Enhanced LOD in VR

Asgard’s Wrath 2 First Update: Quest 3 Visual Enhancements Arrive

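In the four days since Asgard’s Wrath 2 launched, the teams at Sanzaru and Oculus Studios have been blown away by player feedback. Wanting every player to have the best possible experience, they couldn’t wait to share details of the first Asgard’s Wrath 2 update, which rolls out this week and focuses mainly on Quest 3 visual enhancements. The team heard player feedback loud and clear and quickly developed two optional Quest 3 visual enhancements. More enhancements are planned, but they need time to validate their feasibility, and those discussions won’t begin until after the new year. So that players don’t have to wait through the holidays, the team decided to ship these enhancements now:

90Hz toggle

Enhanced Visuals: increases the screen resolution and the level of detail (LOD) distance multiplier

The development team knows that some players have been trying to overclock these settings themselves, but cautions that running at CPU/GPU level 5 for extended periods is not officially supported and may have unintended effects on battery life and overall device performance. The toggles in this update are the officially supported Quest…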
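The post does not say how the LOD distance multiplier works internally. As a rough illustration only, the Python sketch below shows a common form of distance-based LOD selection in which a single multiplier scales every switch distance. The base distances and the select_lod name are hypothetical and are not taken from Asgard’s Wrath 2.

```python
from bisect import bisect_right

# Hypothetical base switch distances in metres: LOD0 below 10 m, LOD1 below 25 m,
# LOD2 below 60 m, and the coarsest mesh (LOD3) beyond that.
BASE_LOD_DISTANCES = [10.0, 25.0, 60.0]


def select_lod(distance: float, multiplier: float = 1.0) -> int:
    """Return the LOD index for an object at `distance` from the camera.

    A multiplier above 1 pushes every switch distance further out, so detailed
    meshes stay on screen longer (sharper visuals, more GPU work).
    """
    if multiplier <= 0:
        raise ValueError("multiplier must be positive")
    thresholds = [d * multiplier for d in BASE_LOD_DISTANCES]
    # bisect_right counts how many thresholds are <= distance, which is the LOD index.
    return bisect_right(thresholds, distance)


if __name__ == "__main__":
    for m in (1.0, 1.5):
        print(m, [select_lod(d, m) for d in (5, 20, 30, 70, 100)])
```

With the multiplier at 1.5, an object 30 m away stays at LOD1 instead of dropping to LOD2, which is the kind of trade-off (more detail at range for more GPU load) an "enhanced visuals" setting makes.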

Harnessing Unity 3D: The Best Platform for 3D Product Configurators

In today’s digital landscape, interactive and visually rich experiences are crucial for engaging users. One such application that has gained immense popularity across industries is the 3D product configurator. Whether in e-commerce, manufacturing, or real estate, businesses leverage 3D product configurators to enhance customer engagement and streamline the buying process. Unity 3D stands out as the most powerful and versatile choice among the various tools available for building these configurators. This blog will explore why Unity 3D is the ideal platform for developing a feature-rich 3D product configurator.

What is a 3D Product Configurator?

A 3D product configurator is an interactive tool that allows users to customize products in real-time. These configurators enable customers to modify various attributes, such as color, texture, materials, and components, providing a highly personalized buying experience. Industries such as automotive, furniture, fashion, and consumer electronics are increasingly adopting 3D product configurators to improve sales conversions and reduce return rates.

Why Choose Unity 3D for Building a Product Configurator?

Unity 3D has emerged as the go-to platform for creating immersive and interactive 3D applications. Here’s why Unity 3D is the best choice for developing a 3D product configurator:

1. Cross-Platform Compatibility

One of Unity 3D’s biggest advantages is its ability to deploy applications across multiple platforms, including web, mobile (iOS & Android), desktop, and even AR/VR devices. This cross-platform compatibility ensures that businesses can reach a broader audience without the need for extensive redevelopment.

2. High-Quality Rendering & Realistic Visuals

Unity’s powerful rendering engine supports physically based rendering (PBR), real-time lighting, and advanced shaders, enabling ultra-realistic product visualization. Its two scriptable render pipelines serve different goals: HDRP (High Definition Render Pipeline) targets high-fidelity, photorealistic visuals on capable hardware, while URP (Universal Render Pipeline) prioritizes performance across a wide range of platforms, including mobile and standalone VR.

3. Real-Time Customization & Interactivity

Unity 3D provides an interactive environment where users can modify product features in real time. Whether changing colors, textures, or components, Unity’s robust scripting capabilities (using C#) ensure seamless interactions, making the customization experience engaging and intuitive.

4. AR & VR Integration

The growing demand for Augmented Reality (AR) and Virtual Reality (VR) has made immersive experiences a necessity. Unity supports ARKit, ARCore, Oculus, and HTC Vive, enabling businesses to take their 3D product configurators into extended reality (XR) environments for an even more interactive experience.

5. Easy-to-Use UI/UX Design Tools

A great 3D product configurator requires an intuitive user interface. Unity offers built-in UI systems such as the Canvas-based Unity UI and UI Toolkit, and third-party UI frameworks are also available, so businesses can create smooth and engaging interfaces for their configurators.

6. Integration with E-Commerce Platforms

Unity applications can integrate with the APIs of popular e-commerce platforms like Shopify, WooCommerce, Magento, and more. This enables businesses to connect their 3D product configurators directly to their online stores, allowing users to customize products and make purchases effortlessly.

7. Performance Optimization & Scalability

Unity offers multiple optimization techniques such as Level of Detail (LOD), occlusion culling, and GPU instancing, which help maintain good performance even on lower-end devices. This makes Unity-based 3D configurators scalable for businesses of all sizes.
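GPU instancing draws many copies of the same mesh with the same material in a single call, passing per-instance data (such as transforms) in a buffer. The engine-agnostic Python sketch below models only that bookkeeping: it groups hypothetical scene objects by (mesh, material) and compares draw-call counts with and without instancing. It is not Unity’s API; in Unity the feature is enabled through the "Enable GPU Instancing" option on a material or through calls such as Graphics.DrawMeshInstanced.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneObject:
    mesh: str        # hypothetical mesh identifier
    material: str    # hypothetical material identifier
    position: tuple  # per-instance data that would go into the instance buffer


def build_instanced_batches(objects):
    """Group objects sharing mesh and material; each group becomes one instanced draw."""
    batches = defaultdict(list)
    for obj in objects:
        batches[(obj.mesh, obj.material)].append(obj.position)
    return dict(batches)


if __name__ == "__main__":
    scene = [SceneObject("chair", "oak", (x, 0, 0)) for x in range(50)]
    scene += [SceneObject("chair", "walnut", (x, 0, 5)) for x in range(30)]
    scene += [SceneObject("table", "oak", (0, 0, 10))]
    batches = build_instanced_batches(scene)
    print("draw calls without instancing:", len(scene))  # 81
    print("draw calls with instancing:", len(batches))   # 3
```

In a configurator, a showroom full of identical chairs in two finishes collapses from dozens of draw calls to a handful, which is where the performance gain comes from.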

8. Extensive Asset Store & Community Support

Unity’s Asset Store provides thousands of ready-made assets, plugins, and scripts, reducing development time and effort. Moreover, Unity boasts a large developer community, ensuring extensive support and knowledge sharing for troubleshooting and innovation.

9. Cost-Effective Development

Unity offers a free version (Unity Personal) for small businesses and affordable licensing options for larger enterprises. This makes it a cost-effective solution for startups and enterprises looking to implement high-quality 3D configurators.

Industries Benefiting from Unity-Based 3D Configurators

Unity 3D is being widely adopted across multiple industries, including:

E-commerce: Online stores use Unity-based configurators to allow customers to visualize and customize products before purchasing.

Automotive: Car manufacturers provide real-time vehicle customization, helping customers select features like paint, interiors, and wheels.

Furniture & Home Decor: Shoppers can design and visualize furniture in 3D before making a purchase.

Apparel & Accessories: Fashion brands leverage 3D configurators for personalized clothing, shoes, and accessories.

Industrial Manufacturing: Businesses use Unity for designing and simulating complex machinery and equipment.

Final Thoughts

With its cross-platform capabilities, high-quality rendering, real-time interactivity, and AR/VR integration, Unity 3D is the best choice for developing 3D product configurators. Businesses looking to enhance customer engagement, boost conversions, and offer a next-gen shopping experience should leverage Unity’s powerful features. As technology continues to evolve, Unity 3D remains at the forefront of innovation, helping businesses create immersive and engaging 3D configurators that set them apart from the competition.

If you’re considering building a 3D product configurator, Unity 3D is the ultimate solution to bring your vision to life. Ready to get started? Contact a Unity expert today and transform your product visualization experience!

Optimizing Performance in Unreal Engine: Best Practices and Tips

Optimizing performance in Unreal Engine is crucial for ensuring smooth gameplay, particularly in resource-constrained environments like VR or mobile platforms. Unreal’s profiling tools, such as Unreal Insights and the stat unit console command, allow developers to pinpoint performance bottlenecks. Level streaming, which loads and unloads portions of a level on demand (for example, as the player approaches or leaves an area), is essential for managing memory and improving load times (Reed et al., 2017). LOD (Level of Detail) management reduces the complexity of models based on their distance from the camera, lowering GPU load (Luebke et al., 2003).
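In Unreal, level streaming is set up through streaming sublevels, World Partition, or Blueprint/C++ calls. The engine-agnostic Python sketch below only illustrates the underlying idea: it divides a hypothetical world into square cells and computes which cells to load and unload as the player moves. The cell size and streaming radius are assumed values.

```python
import math

CELL_SIZE = 100.0         # hypothetical cell edge length in world units
STREAMING_RADIUS = 250.0  # hypothetical distance within which cells stay loaded


def cells_in_range(x: float, y: float) -> set:
    """Return the (i, j) grid cells whose centre lies within the streaming radius."""
    reach = math.ceil(STREAMING_RADIUS / CELL_SIZE) + 1  # search window, with margin
    ci, cj = math.floor(x / CELL_SIZE), math.floor(y / CELL_SIZE)
    wanted = set()
    for i in range(ci - reach, ci + reach + 1):
        for j in range(cj - reach, cj + reach + 1):
            cx, cy = (i + 0.5) * CELL_SIZE, (j + 0.5) * CELL_SIZE
            if math.hypot(cx - x, cy - y) <= STREAMING_RADIUS:
                wanted.add((i, j))
    return wanted


def update_streaming(loaded: set, x: float, y: float):
    """Diff the currently loaded cells against what the player needs now."""
    wanted = cells_in_range(x, y)
    to_load = wanted - loaded     # request these asynchronously
    to_unload = loaded - wanted   # free these to reclaim memory
    return wanted, to_load, to_unload
```

Calling update_streaming each time the player crosses a cell boundary keeps only nearby content resident; real systems add hysteresis and asynchronous loading so cells are not thrashed at the edges.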

Texture optimization is another key factor; compressed formats like DXT (BCn) or ASTC reduce memory usage while maintaining acceptable visual quality. Culling techniques such as frustum culling skip objects outside the camera’s view so they are never submitted for rendering, further enhancing performance (Akenine-Möller et al., 2019).
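The memory savings from block compression are simple arithmetic: each format stores a fixed number of bytes per block of texels. The Python sketch below computes texture sizes for a few common formats, including an optional full mip chain. BC1 (DXT1) uses 8-byte blocks for 4x4 texels (4 bits per texel), BC3 (DXT5) and ASTC always use 16-byte blocks, and ASTC’s block footprint (4x4, 8x8, and others) sets its bit rate.

```python
import math

# (block width, block height, bytes per block) for a few common formats.
FORMATS = {
    "RGBA8":    (1, 1, 4),   # uncompressed: 4 bytes per texel (32 bits)
    "BC1/DXT1": (4, 4, 8),   # 64-bit block per 4x4 texels  -> 4 bits/texel
    "BC3/DXT5": (4, 4, 16),  # 128-bit block per 4x4 texels -> 8 bits/texel
    "ASTC 4x4": (4, 4, 16),  # 128-bit block per 4x4 texels -> 8 bits/texel
    "ASTC 8x8": (8, 8, 16),  # 128-bit block per 8x8 texels -> 2 bits/texel
}


def level_bytes(width: int, height: int, fmt: str) -> int:
    """Size of one mip level; partial blocks at the edges still cost a whole block."""
    bw, bh, block_bytes = FORMATS[fmt]
    return math.ceil(width / bw) * math.ceil(height / bh) * block_bytes


def texture_bytes(width: int, height: int, fmt: str, mipmaps: bool = True) -> int:
    """Total size of a 2D texture, optionally including its full mip chain."""
    total = 0
    while True:
        total += level_bytes(width, height, fmt)
        if not mipmaps or (width == 1 and height == 1):
            return total
        width, height = max(1, width // 2), max(1, height // 2)


if __name__ == "__main__":
    for fmt in FORMATS:
        mib = texture_bytes(2048, 2048, fmt, mipmaps=False) / 2**20
        print(f"{fmt:10s} {mib:5.1f} MiB")
```

For a 2048x2048 texture this gives 16 MiB uncompressed, 2 MiB for BC1, 4 MiB for BC3 or ASTC 4x4, and 1 MiB for ASTC 8x8; a full mip chain adds roughly one third on top of each.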

By applying these strategies, developers can create more responsive and performant games. For a deeper exploration, see Akenine-Möller et al. (2019) on real-time rendering and Epic Games' official documentation.

References:

Reed, S., Marefat, M. M., & Speight, A. (2017). Efficient Use of Level Streaming in Unreal Engine 4. ACM Digital Library.

Luebke, D., et al. (2003). Level of Detail for 3D Graphics. Morgan Kaufmann.

Akenine-Möller, T., Haines, E., & Hoffman, N. (2019). Real-Time Rendering (4th ed.). CRC Press.

The Evolution and Future of 3D Game Development

The world of 3D game development has seen a tremendous evolution since its inception. From the early days of rudimentary graphics and simple gameplay mechanics to today's intricate, immersive environments, 3D game development has become a cornerstone of the gaming industry. This article explores the history, technological advancements, and future prospects of 3D game development.

A Brief History

The journey of 3D game development began in the late 1970s and early 1980s with pioneering titles like "Battlezone" and "Star Wars," which used vector graphics to simulate a three-dimensional space. These early games laid the groundwork for future advancements by introducing basic 3D concepts.

The 1990s marked a significant leap with the advent of polygonal graphics, driven by the increasing power of personal computers and gaming consoles. Iconic games like "Doom" (1993), "Quake" (1996), and "Tomb Raider" (1996) pushed the boundaries of what was possible, offering players richer, more immersive experiences. These games introduced the use of 3D models and textures, dynamic lighting, and real-time rendering, setting new standards for the industry.

Technological Advancements

The exponential growth in computing power and the development of sophisticated game engines have revolutionized 3D game development. Modern game engines like Unity, Unreal Engine, and CryEngine have democratized the development process, making powerful tools accessible to indie developers and large studios alike.

Key technological advancements include:

Real-Time Ray Tracing: This technique simulates the physical behavior of light, enabling highly realistic lighting, shadows, and reflections. Real-time ray tracing, supported by GPUs like NVIDIA's RTX series, has significantly enhanced visual fidelity.

Procedural Generation: This allows developers to create vast, intricate worlds with relatively low effort. Games like "No Man's Sky" have demonstrated the potential of procedural generation, offering players an almost infinite universe to explore.
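Procedural worlds stay consistent because generation is deterministic: the same seed always produces the same content, so terrain can be regenerated on demand instead of stored. The toy Python sketch below derives a per-chunk seed from a hypothetical world seed and chunk coordinates, then builds a small heightmap from it. It smooths plain random values with a box blur rather than using the gradient-noise functions (such as Perlin or simplex noise) that production games typically rely on, and it makes no claim about how No Man’s Sky works.

```python
import hashlib
import random


def chunk_seed(world_seed: int, cx: int, cz: int) -> int:
    """Derive a stable per-chunk seed (hashlib is stable across runs, unlike hash())."""
    key = f"{world_seed}:{cx}:{cz}".encode()
    return int.from_bytes(hashlib.sha256(key).digest()[:8], "big")


def generate_chunk(world_seed: int, cx: int, cz: int, size: int = 8) -> list:
    """Return a size x size heightmap (values 0-100) for chunk (cx, cz)."""
    rng = random.Random(chunk_seed(world_seed, cx, cz))
    raw = [[rng.uniform(0, 100) for _ in range(size)] for _ in range(size)]
    # Box-blur each cell with its in-bounds neighbours so terrain is not pure static.
    smooth = []
    for z in range(size):
        row = []
        for x in range(size):
            vals = [raw[j][i]
                    for j in range(max(0, z - 1), min(size, z + 2))
                    for i in range(max(0, x - 1), min(size, x + 2))]
            row.append(sum(vals) / len(vals))
        smooth.append(row)
    return smooth
```

Because each chunk is generated independently, neighbouring chunks do not line up at their borders here; continuous noise functions sampled in world coordinates are the usual fix.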

Virtual Reality (VR) and Augmented Reality (AR): VR and AR have introduced new dimensions to 3D game development, creating fully immersive experiences. Titles like "Half-Life: Alyx" showcase the potential of VR in delivering unparalleled interactivity and immersion.

Artificial Intelligence (AI): AI has improved non-player character (NPC) behavior, making them more realistic and responsive. Advanced AI algorithms enable complex decision-making, pathfinding, and adaptive learning, enhancing gameplay dynamics.
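Pathfinding for NPCs is commonly built on A* search. The Python sketch below runs A* on a small 4-connected grid with a Manhattan-distance heuristic; engines typically run the same kind of search over a navigation mesh rather than a grid, and the grid format here (1 marks a wall) is an assumption for illustration.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected grid; grid[r][c] == 1 marks a wall.

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-way moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry: a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get((nr, nc), float("inf")):
                    g_cost[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

The heuristic steers the search toward the goal, so A* usually expands far fewer cells than an uninformed breadth-first search while still returning a shortest path.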

The Development Process

Creating a 3D game involves several stages:

Conceptualization and Design: This initial phase involves brainstorming ideas, defining the game mechanics, and creating design documents. Concept art and storyboarding help visualize the game's look and feel.

Modeling and Texturing: Artists create 3D models of characters, environments, and objects using software like Blender, Maya, or 3ds Max. Texturing involves applying detailed surface textures to these models, adding realism and depth.

Programming and Scripting: Developers write the code that powers the game. This includes implementing game mechanics, physics, AI, and user interfaces. Scripting in C# (Unity) and the Blueprints visual scripting system (Unreal Engine) streamlines the development process.

Animation and Rigging: Characters and objects are animated to move and interact realistically. Rigging involves creating a skeleton for 3D models, enabling smooth, lifelike animations.
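Rigging gives a model a hierarchy of bones, so rotating a parent bone moves every child attached to it. The minimal 2D forward-kinematics sketch below computes joint positions for a hypothetical bone chain from per-bone local rotations, which is the core calculation behind posing a rigged skeleton. Real rigs use 3D transforms (typically quaternions or matrices) and skin the mesh to the bones; both are omitted here.

```python
import math


def forward_kinematics(bone_lengths, local_angles_deg, root=(0.0, 0.0)):
    """Return joint positions for a planar bone chain.

    Each angle is relative to the parent bone, so rotations accumulate down
    the chain: rotating the first bone swings everything after it.
    """
    x, y = root
    heading = 0.0
    joints = [(x, y)]
    for length, angle in zip(bone_lengths, local_angles_deg):
        heading += math.radians(angle)
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        joints.append((x, y))
    return joints
```

For example, a two-bone "arm" with lengths [1.0, 1.0] and local angles [0, 90] ends with its tip at approximately (1, 1): the second bone inherits the first bone’s orientation and then adds its own 90 degree bend.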

Testing and Optimization: Rigorous testing ensures the game is free of bugs and runs smoothly across various platforms. Optimization techniques, such as level of detail (LOD) and occlusion culling, improve performance without compromising quality.

Deployment and Maintenance: Once completed, the game is released on appropriate platforms. Post-release support, including updates and patches, addresses any issues and keeps the game engaging for players.

The Future of 3D Game Development

The future of 3D game development is bright, with emerging technologies and trends poised to reshape the landscape. Key trends to watch include:

Cloud Gaming: Services like NVIDIA GeForce Now (and Google Stadia, until its shutdown in January 2023) have made high-quality gaming accessible without expensive hardware. Cloud gaming leverages powerful remote servers to stream games, reducing the reliance on local processing power.

Machine Learning: AI and machine learning will continue to enhance game development, from creating more intelligent NPCs to optimizing game design and personalization. Procedural content generation powered by AI could lead to even more dynamic and responsive game worlds.

Metaverse Integration: The concept of the metaverse—interconnected virtual worlds—promises to create vast, persistent environments where players can interact, create, and share experiences. Companies like Epic Games and Facebook (Meta) are investing heavily in this vision.

Cross-Platform Development: The demand for games that can be played seamlessly across multiple platforms is growing. Tools and engines that support cross-platform development will become increasingly important, allowing developers to reach a broader audience.

Conclusion

3D game development has come a long way from its humble beginnings, evolving into a complex and dynamic field that blends artistry, technology, and innovation. As new technologies emerge and the boundaries of what's possible continue to expand, the future of 3D game development promises even more exciting and immersive experiences for players around the world. Whether you're a seasoned developer or a newcomer to the field, the journey ahead in 3D game development is sure to be an exhilarating one.

Enhancing User Experience: Design Strategies for AR/VR Apps in 2024

The world of augmented reality (AR) and virtual reality (VR) has evolved significantly, and as we step into 2024, the potential for immersive experiences is greater than ever. To create compelling AR/VR applications that resonate with users, designers need to employ innovative strategies that enhance usability, engagement, and overall user experience. Here are some cutting-edge design strategies for AR/VR apps in 2024.

1. User-Centric Design

Empathy and User Research

Understanding the user's needs, preferences, and pain points is critical. Conducting comprehensive user research through surveys, interviews, and usability testing helps create experiences that are intuitive and satisfying. Empathy maps and personas can help designers anticipate user reactions and adjust the experience accordingly.

Accessibility and Inclusivity

Designing for a diverse audience means considering various physical abilities and ensuring the application is accessible to everyone. Features like voice commands, adjustable text sizes, and customizable controls can make AR/VR experiences more enjoyable for all.

2. Intuitive Interactions

Natural User Interfaces (NUI)

Use NUIs to create more natural and intuitive interactions. Motion controls, eye tracking, and voice commands can make navigating AR and VR environments seamless and more engaging. These interactions should feel like an extension of the user’s body, reducing the learning curve and increasing immersion.

Haptic Feedback

In virtual reality, haptic feedback can greatly improve the sense of presence. Advanced haptic devices can simulate textures, impacts, and vibrations, providing a more tangible and realistic experience. This sensory feedback helps ground users in the virtual environment, making interactions more believable.

3. Spatial Awareness and Contextual Design

Spatial Anchors and Persistent Content

Spatial anchors allow digital content to remain fixed in the real world, providing a consistent AR experience. This is crucial for applications in education, navigation, and industrial training, where users need reliable and repeatable interactions with virtual objects in a physical space.

Context-Aware Content

Design content that responds to the user’s environment and context. For example, an AR navigation app should adapt to different lighting conditions and provide relevant information based on the user’s location. Context-aware design has become more popular and useful in AR/VR applications.

4. Immersive Storytelling

Narrative-Driven Experiences

Crafting compelling narratives can engage users. Whether it’s a VR game or an AR educational app, integrating story elements can make the experience more memorable and impactful. Storyboarding and scriptwriting are essential for designers to create coherent and captivating narratives.

Interactive and Adaptive Stories

Letting users influence the story through their actions increases engagement. Dynamic stories and adaptive narratives respond to user decisions, providing a personalized experience that can lead to multiple endings.

5. Performance Optimization

Efficient Rendering Techniques

Optimizing the performance of an AR/VR application is crucial for a smooth experience. Techniques like level of detail (LOD) adjustments, occlusion culling, and foveated rendering can help maintain high frame rates, which in turn improves user comfort.
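Foveated rendering exploits the fact that visual acuity falls off away from the point of gaze: the center of view is shaded at full rate and the periphery at coarser rates. The Python sketch below maps each screen tile’s angular distance from the gaze point to a shading rate and estimates the shading work saved. The 5 and 15 degree bands, the 1x1/2x2/4x4 rates, and the tile layout are assumptions for illustration, not values from any headset SDK; fixed foveated rendering uses the lens center instead of a tracked gaze point.

```python
import math

# Hypothetical bands: (max eccentricity in degrees, shading rate N for NxN pixels).
FOVEATION_BANDS = [(5.0, 1), (15.0, 2)]
PERIPHERY_RATE = 4


def shading_rate(tile_deg, gaze_deg):
    """Coarser shading the further a tile is from where the user is looking."""
    ecc = math.hypot(tile_deg[0] - gaze_deg[0], tile_deg[1] - gaze_deg[1])
    for max_ecc, rate in FOVEATION_BANDS:
        if ecc <= max_ecc:
            return rate
    return PERIPHERY_RATE


def shading_cost(tiles_deg, gaze_deg, pixels_per_tile=256):
    """Approximate pixel-shader invocations: an NxN rate shades 1/(N*N) of the pixels."""
    return sum(pixels_per_tile / shading_rate(t, gaze_deg) ** 2 for t in tiles_deg)
```

Most of the field of view falls in the outer band, so even this crude three-band scheme cuts shading work substantially; the trade-off is visible blur if the bands are too aggressive or eye tracking lags.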

Edge Computing and Cloud Integration

Offloading processing work to edge computing and cloud services can enhance performance and enable more intricate, data-intensive applications. Processing close to the user keeps latency low, which helps support real-time interactions in AR/VR environments.

6. User Safety and Comfort

Ergonomic Design

Consider the ergonomics of using AR/VR devices. Design interfaces and interactions that reduce physical strain and discomfort, ensuring users can engage with the application for extended periods. Adjustable virtual environments and customizable user interfaces can cater to different user preferences and physical capabilities.

7. Continuous Improvement and User Feedback

Iterative Design Process

Use an iterative approach to design in which user input is considered at each stage. The app is continuously tested and refined in response to user feedback and interactions, so that it adapts to user expectations and problems are fixed quickly.

Community Engagement

Building a community around your AR/VR software can grow your user base and provide valuable insights. Designers can better understand customer demands and make more informed design decisions by interacting with users through discussions, social media, and app feedback systems.

How XcelTec Helps You Integrate AI and Machine Learning in E-commerce Web Development

XcelTec can help you navigate the complexities of AR/VR development and achieve your goals. We specialize in integrating AI and Machine Learning technologies to enhance e-commerce operations. We tailor solutions for personalized shopping experiences, improved customer service through chatbots, optimized inventory management, fraud detection, dynamic pricing strategies, and enhanced user experiences.

Conclusion

Embrace the transformative power of AI and Machine Learning with XcelTec to elevate your e-commerce platform, driving growth, efficiency, and customer satisfaction in the digital age.

Exploring the Role of VFX in Immersive Virtual Reality Experiences

Exploring the Role of Visual Effects (VFX) in Immersive Virtual Reality (VR) Experiences opens up a realm of possibilities where storytelling transcends the boundaries of traditional filmmaking. In recent years, VR technology has advanced rapidly, offering users the opportunity to step into immersive digital worlds and experience narratives in a whole new way. VFX plays a crucial role in enhancing these experiences, creating lifelike environments, characters, and special effects that immerse users in captivating virtual worlds. Institutions like MAAC Pune have been instrumental in this evolution, providing aspiring VFX artists with the skills and knowledge needed to excel in the dynamic world of immersive storytelling. Through comprehensive programs and hands-on training, MAAC Pune prepares students to leverage VFX techniques to create stunning visual experiences that push the boundaries of virtual reality. With MAAC Pune's guidance, the next generation of VFX artists are poised to continue pushing the limits of creativity and innovation in immersive storytelling for years to come.

One of the primary functions of VFX in immersive VR experiences is to create realistic environments that transport users to different worlds and settings. Whether it's a futuristic cityscape, a lush jungle, or an alien planet, VFX artists use their skills to design and render detailed environments that users can explore and interact with. By leveraging advanced rendering techniques and high-resolution textures, VFX can create immersive landscapes that feel like they're right out of a blockbuster film.

Moreover, VFX is instrumental in creating lifelike characters and creatures that populate virtual reality experiences. Through techniques such as motion capture and performance capture, VFX artists can bring digital characters to life with realistic movements, expressions, and interactions. Whether it's an animated character in a fantastical adventure or a digital avatar representing a real-life person in a social VR environment, VFX plays a crucial role in making these characters feel believable and engaging.

In addition to environments and characters, VFX is also used to enhance the overall immersion and impact of VR experiences through special effects and visual storytelling techniques. From particle effects like fire, smoke, and explosions to dynamic lighting and atmospheric effects, VFX adds an extra layer of realism and excitement to VR environments. Whether it's a thrilling action sequence or a dramatic narrative moment, VFX helps to elevate the emotional impact of VR experiences and keep users engaged from start to finish.
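Particle effects such as fire, smoke, and explosions come down to spawning many short-lived points and updating them every frame. The Python sketch below shows that update loop with semi-implicit Euler integration, gravity, a lifetime, and a hard particle cap (which matters in VR, where the frame budget is tight). The ParticleSystem class and its parameters are hypothetical; engine tools such as Unity’s Particle System or Unreal’s Niagara perform this work natively, often on the GPU.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    vx: float
    vy: float
    age: float = 0.0


class ParticleSystem:
    def __init__(self, lifetime=1.5, gravity=-9.81, max_particles=500, seed=0):
        self.lifetime = lifetime
        self.gravity = gravity
        self.max_particles = max_particles  # hard cap keeps per-frame cost bounded
        self.rng = random.Random(seed)
        self.particles = []

    def emit(self, count, origin=(0.0, 0.0)):
        for _ in range(count):
            if len(self.particles) >= self.max_particles:
                break
            vx = self.rng.uniform(-1.0, 1.0)
            vy = self.rng.uniform(2.0, 5.0)  # upward burst, e.g. sparks
            self.particles.append(Particle(origin[0], origin[1], vx, vy))

    def update(self, dt):
        alive = []
        for p in self.particles:
            p.age += dt
            if p.age >= self.lifetime:
                continue  # expired particles are dropped
            p.vy += self.gravity * dt  # semi-implicit Euler: update velocity first
            p.x += p.vx * dt
            p.y += p.vy * dt
            alive.append(p)
        self.particles = alive
```

A typical frame calls emit for any new bursts and then update(dt) with the frame time, after which the surviving particles are rendered as sprites or meshes.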

Furthermore, VFX can be used to enhance the interactivity and user engagement of VR experiences by creating dynamic and responsive environments. Through techniques such as procedural generation and real-time physics simulations, VFX artists can create virtual worlds that react to user input in real-time, allowing for immersive interactions and gameplay mechanics. Whether it's manipulating objects, solving puzzles, or engaging in combat, VFX can enhance the sense of presence and agency that users feel in virtual reality.

Additionally, VFX plays a crucial role in optimizing VR experiences for performance and accessibility across a wide range of hardware platforms. By leveraging techniques such as dynamic level of detail (LOD) rendering and optimization algorithms, VFX artists can ensure that VR experiences run smoothly and efficiently on a variety of devices, from high-end VR headsets to mobile VR platforms. This allows for greater accessibility and reach, enabling users to enjoy immersive VR experiences regardless of their hardware setup.

In conclusion, the role of VFX in immersive virtual reality experiences is multifaceted and essential to the success of modern VR storytelling. From creating realistic environments and characters to enhancing interactivity and performance, VFX adds an extra layer of immersion and engagement to VR experiences, pushing the boundaries of what's possible in digital storytelling. As VR technology continues to evolve, VFX will undoubtedly play an increasingly crucial role in shaping the future of immersive entertainment, offering users unprecedented opportunities to explore and interact with captivating virtual worlds.

The Art of Optimization: Enhancing Performance in VR Game Development

In the dynamic realm of VR development services, achieving optimal performance stands as a pivotal challenge. The distinctive demands imposed by virtual reality environments on both hardware and software necessitate a nuanced approach. This comprehensive exploration delves into key strategies employed to optimize VR game performance, ensuring a seamless and deeply immersive experience for users. As we navigate the intricate landscape of VR game development, this guide illuminates the path for developers and enthusiasts alike, emphasizing the art of optimization. Explore the evolving strategies, from frame rate dynamics to platform-specific considerations, to elevate VR game development services to new heights.

Optimizing performance in VR game development

Optimizing performance in virtual reality (VR) game development is crucial to delivering a smooth and immersive experience for users. VR places unique demands on hardware and software, and achieving high performance requires careful consideration of various factors. Here is a guide to the art of optimization in VR game development:

Frame Rate Optimization:

Maintaining a consistent high frame rate is not merely a technical requirement but a cornerstone of user comfort and immersion in VR. The industry-standard target of 90 Hz or higher ensures fluid motion, preventing motion sickness. Dynamic resolution scaling adds a layer of adaptability, intelligently adjusting image quality based on the intricacy of the scene and the rendering load.
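A 90 Hz target translates into a strict time budget: 1000 ms / 90 ≈ 11.1 ms per frame (about 13.9 ms at 72 Hz and 8.3 ms at 120 Hz) for everything the CPU and GPU must do. The Python sketch below shows one simple way dynamic resolution scaling can react to that budget: a controller that lowers the render scale quickly when GPU frame time exceeds a target and raises it slowly when there is spare time. The headroom factor, step sizes, scale limits, and the DynamicResolution class are hypothetical; engines and headset SDKs ship their own dynamic resolution features with different heuristics.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per frame at a given display refresh rate."""
    return 1000.0 / refresh_hz


class DynamicResolution:
    """Adjusts a render-scale factor from measured GPU frame times."""

    def __init__(self, refresh_hz=90.0, headroom=0.9,
                 min_scale=0.7, max_scale=1.0, step_down=0.05, step_up=0.02):
        # Aim below the full budget to leave slack for frame-time spikes.
        self.target_ms = frame_budget_ms(refresh_hz) * headroom
        self.min_scale, self.max_scale = min_scale, max_scale
        self.step_down, self.step_up = step_down, step_up
        self.scale = max_scale

    def update(self, gpu_ms: float) -> float:
        if gpu_ms > self.target_ms:
            # Over budget: drop resolution quickly to avoid missed frames.
            self.scale = max(self.min_scale, self.scale - self.step_down)
        elif gpu_ms < 0.8 * self.target_ms:
            # Comfortably under budget: recover slowly to avoid oscillating.
            self.scale = min(self.max_scale, self.scale + self.step_up)
        return self.scale
```

The asymmetric steps are a deliberate design choice: a missed frame in VR is far more noticeable than a brief dip in sharpness, so the controller backs off fast and recovers cautiously.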

Culling and Occlusion Techniques:

The art of rendering lies not only in showcasing what is seen but, equally importantly, in concealing what is not. Frustum culling renders only objects within the camera's view frustum, saving computational resources. Occlusion culling, in turn, skips objects that are hidden behind others in the scene, further improving performance.
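Frustum culling is usually performed on bounding volumes: each object gets a bounding sphere (or box) that is tested against the six planes of the camera frustum, and anything entirely behind any plane is skipped. The Python sketch below implements the standard sphere-versus-plane test, assuming planes are stored as unit normals pointing into the frustum with an offset d, so that dot(n, p) + d >= 0 means a point is inside. Occlusion culling, which also needs depth information about occluders, is not shown.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plane:
    nx: float
    ny: float
    nz: float
    d: float  # plane: dot(n, p) + d = 0, with n a unit normal pointing into the frustum

    def signed_distance(self, p):
        return self.nx * p[0] + self.ny * p[1] + self.nz * p[2] + self.d


def sphere_in_frustum(center, radius, planes) -> bool:
    """False only if the sphere lies completely behind at least one plane."""
    for plane in planes:
        if plane.signed_distance(center) < -radius:
            return False
    return True  # inside or intersecting: conservatively keep it


def cull(objects, planes):
    """objects: list of (name, center, radius). Returns the names that should be drawn."""
    return [name for name, c, r in objects if sphere_in_frustum(c, r, planes)]
```

The test is conservative: a sphere that straddles a plane is kept, so nothing visible is ever culled, at the cost of occasionally drawing an object that is just off-screen.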

Level of Detail (LOD) Management:

The spatial relationship between the player and in-game objects demands a nuanced approach to detailing. Implementing various levels of detail for models based on their proximity to the user optimizes rendering performance. Simultaneously, dynamic adjustments to shader complexity based on distance ensure efficient GPU utilization.

Texture and Material Efficiency:

Texture and material complexity significantly impacts both visual quality and performance. Opting for efficient texture compression formats reduces memory bandwidth demands. Furthermore, consolidating similar materials minimizes draw calls, a critical factor in rendering efficiency.

Shader Optimization:

The visual aesthetic of VR environments is intricately tied to shaders, yet their optimization is an art. Streamlining shaders for optimal performance, without compromising the richness of visual experiences, is a delicate balance. Minimizing shader switches is imperative to reduce the GPU workload and maintain smooth rendering.
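One common way to keep shader and material switches down is to sort the frame’s draw calls by a state key before submitting them, so that consecutive draws share as much GPU state as possible. The Python sketch below counts state changes (including the first bind) for a hypothetical draw list before and after sorting by (shader, material). Real renderers build richer sort keys, for example including depth, and transparent objects still have to be drawn back to front.

```python
def count_state_changes(draws):
    """Count shader and material binds across consecutive (shader, material, mesh) draws."""
    shader_changes = material_changes = 0
    prev_shader = prev_material = None
    for shader, material, _mesh in draws:
        if shader != prev_shader:
            shader_changes += 1
            prev_shader = shader
        if material != prev_material:
            material_changes += 1
            prev_material = material
    return shader_changes, material_changes


def sort_for_submission(draws):
    """Group opaque draws by shader first, then by material."""
    return sorted(draws, key=lambda d: (d[0], d[1]))


if __name__ == "__main__":
    frame = [("lit", "rock", "m1"), ("unlit", "sky", "m2"), ("lit", "wood", "m3"),
             ("lit", "rock", "m4"), ("unlit", "sky", "m5"), ("lit", "wood", "m6")]
    print(count_state_changes(frame))                       # (5, 6)
    print(count_state_changes(sort_for_submission(frame)))  # (2, 3)
```

Sorting costs a little CPU time each frame, but it is usually far cheaper than the GPU pipeline stalls caused by frequent shader changes.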

Physics Efficiency:

In the dynamic interplay of objects within VR spaces, physics efficiency is paramount. Simplifying collision shapes and reducing the complexity of physics interactions contribute significantly to overall performance. Implementing dynamic physics level-of-detail adjustments based on object proximity further refines this aspect.

Audio Rendering Optimization:

Spatial audio is a key component in elevating the immersive qualities of VR experiences. However, it must be implemented judiciously to avoid overburdening the CPU. Techniques such as audio occlusion and simulating realistic sound propagation contribute to efficient audio rendering in VR environments.
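At the cheap end of the spectrum, audio occlusion can be approximated with a fixed attenuation per blocking object rather than a full propagation simulation. The Python sketch below combines inverse-distance attenuation with a per-occluder decibel penalty. The reference distance, the 6 dB-per-occluder penalty, the 24 dB cap, and the assumption that the occluder count comes from a raycast between source and listener are all illustrative; audio middleware and engine spatializers use their own attenuation curves and occlusion models.

```python
def distance_gain(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance law: about -6 dB for each doubling of distance beyond ref_distance."""
    return ref_distance / max(distance, ref_distance)


def occluded_gain(distance: float, occluders: int,
                  db_per_occluder: float = 6.0, max_occlusion_db: float = 24.0) -> float:
    """Linear gain for a source, given how many objects block the direct path.

    `occluders` would typically come from a raycast between source and listener.
    """
    occlusion_db = min(occluders * db_per_occluder, max_occlusion_db)
    return distance_gain(distance) * 10 ** (-occlusion_db / 20)
```

Because this costs one raycast and a few arithmetic operations per source, it can run for many sources every frame, leaving more expensive propagation modelling for the few sounds that matter most.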

UI/UX Streamlining:

The UI in VR should be a seamless extension of the immersive environment. Keeping UI elements simple is essential for performance. Optimizing UI rendering and minimizing resource-intensive UI animations ensure a smooth user experience without compromising the visual integrity of the VR space.

Asynchronous Reprojection Implementation:

In the dynamic landscape of VR, occasional frame rate drops are inevitable. Asynchronous reprojection techniques synthesize replacement frames by re-warping the most recently rendered frame with the latest head-tracking data, keeping the user experience smooth even during these fluctuations in rendering time.
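The timing logic behind asynchronous reprojection can be sketched as a compositor loop: at each display refresh, if the application has delivered a new frame it is shown; otherwise the last frame is re-warped with the latest head pose and shown instead. The Python simulation below counts how many refreshes were covered by reprojection for a list of hypothetical render times. It assumes each frame starts rendering as soon as the previous one finishes, models only the timing (not the image warp), and does not describe how any specific runtime implements the feature.

```python
import itertools


def simulate_compositor(render_times_ms, refresh_hz=90.0):
    """Return (new_frames_shown, reprojected_refreshes) for back-to-back rendering."""
    interval = 1000.0 / refresh_hz
    finish_times = list(itertools.accumulate(render_times_ms))
    shown = reprojected = 0
    next_frame = 0  # index of the first frame not yet displayed
    vsync = interval
    while next_frame < len(finish_times):
        ready = next_frame
        # Advance to the newest frame that finished before this refresh.
        while ready < len(finish_times) and finish_times[ready] <= vsync:
            ready += 1
        if ready > next_frame:
            shown += 1          # display the newest completed frame
            next_frame = ready  # any older completed frames are skipped
        else:
            reprojected += 1    # nothing new: re-warp the last frame with the latest pose
        vsync += interval
    return shown, reprojected
```

For example, with render times of 10, 20, and 10 ms at 90 Hz, the 20 ms frame misses one refresh, and that single refresh is filled by reprojection instead of showing a stale, unwarped image.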

Platform-Specific Strategies:

VR platforms come with unique specifications and considerations. Adhering to platform-specific guidelines is paramount for successful optimization. Thorough profiling on target hardware identifies and addresses performance bottlenecks, tailoring the VR experience to the specifics of each platform.

Testing and Feedback Integration:

The iterative nature of VR game development services demands continuous testing across various devices and platforms. User feedback becomes a crucial tool in this optimization effort, offering valuable insights into performance issues and guiding targeted improvements.

Multi-threading and Parallelization:

The computational demands of VR experiences often require a distributed approach. Spreading tasks across multiple threads harnesses the capabilities of modern CPUs, while strategic parallelization, especially of CPU-intensive tasks, unlocks the full potential of the hardware.

Memory Management:

Efficient memory management is vital to sustaining performance. Using texture atlases minimizes memory overhead, while minimizing garbage collection, especially in languages with automatic memory management, contributes to the overall efficiency of the VR application.
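In engines with a garbage collector (C# in Unity, for example), a common way to limit garbage collection is object pooling: instead of allocating a new object for every projectile, particle, or UI popup and letting the collector reclaim it, the game reuses a set of preallocated instances. The Python sketch below shows the pattern with a hypothetical Projectile class. Python manages memory differently from .NET, so the sketch illustrates the reuse logic rather than measuring GC savings; recent Unity versions also provide a built-in UnityEngine.Pool.ObjectPool class.

```python
class Projectile:
    """Hypothetical pooled object; reset() restores it to a reusable state."""

    def __init__(self):
        self.position = (0.0, 0.0, 0.0)
        self.active = False

    def reset(self):
        self.position = (0.0, 0.0, 0.0)
        self.active = False


class ObjectPool:
    def __init__(self, factory, size):
        self._factory = factory
        self._free = [factory() for _ in range(size)]  # allocate up front, e.g. at load time
        self.created = size

    def acquire(self):
        if self._free:
            return self._free.pop()
        # Pool exhausted: grow here (another policy would be to refuse or recycle the oldest).
        self.created += 1
        return self._factory()

    def release(self, obj):
        obj.reset()             # clear state so the next user starts fresh
        self._free.append(obj)  # keep a reference so the object is never collected
```

Sizing the pool for the worst case observed during testing keeps allocations out of gameplay frames entirely, so the collector has nothing new to reclaim mid-session.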

VR-Specific Considerations:

The advent of VR presents a new spectrum of possibilities and challenges. Using VR-specific APIs and SDKs becomes central to harnessing the full potential of VR hardware. Additionally, adapting rendering processes for the stereoscopic views of VR environments ensures an optimal visual experience tailored to the unique demands of virtual reality.
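Stereoscopic rendering produces one image per eye from two slightly offset viewpoints. The Python sketch below computes the two eye positions from a head position, the head’s right-pointing unit vector, and an interpupillary distance (IPD); the 63 mm default is an assumed typical adult value. In practice the VR runtime supplies per-eye poses and projection matrices (for example through OpenXR’s xrLocateViews), and engines use single-pass or multiview stereo rendering to draw both eyes efficiently.

```python
def eye_positions(head_pos, right_dir, ipd_m=0.063):
    """Offset each eye by half the IPD along the head's right vector.

    right_dir must be a unit vector; returns (left_eye, right_eye).
    """
    half = ipd_m / 2.0
    left = tuple(h - half * r for h, r in zip(head_pos, right_dir))
    right = tuple(h + half * r for h, r in zip(head_pos, right_dir))
    return left, right
```

Each eye position then becomes the origin of its own view matrix; getting the IPD wrong distorts the perceived scale of the world, which is why runtimes read it from the headset rather than hard-coding it.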

Continuous Optimization Practices:

Optimization is not a one-time effort but a dynamic, iterative process. The development lifecycle should embrace continuous testing, profiling, and updates that incorporate performance improvements based on user feedback. This iterative approach ensures that the VR game remains finely tuned to evolving requirements and hardware capabilities.

Optimizing VR game performance is an ongoing process that involves balancing visual fidelity against smooth interaction. Regular testing and profiling are essential to identify and address performance bottlenecks. Keep in mind that VR hardware and software are constantly evolving, so staying informed about the latest advancements is crucial for maintaining optimal performance.

Shamla Tech: Elevating VR Game Development with Performance Optimization Expertise

Shamla Tech, a leader in VR development services, pioneers optimal performance in VR game development. Their expertise shines in crafting state-of-the-art VR experiences with a meticulous focus on performance optimization. As industry leaders, Shamla Tech continually integrates the latest advancements, adheres to best practices, and employs a continuous optimization approach. With a commitment to user feedback, rigorous testing, and platform-specific strategies, Shamla Tech ensures VR games exceed expectations. Clients benefit from a strategic partnership that not only embraces the dynamic landscape of VR but also elevates their virtual reality experience to unprecedented levels of innovation and seamless user engagement.

The Art of Optimization: Enhancing Performance in VR Game Development

In the dynamic realm of VR development services, achieving optimal performance stands as a pivotal challenge. The distinctive demands imposed by virtual reality environments on both hardware and software necessitate a nuanced approach. This comprehensive exploration delves into key strategies employed to optimize VR game performance, ensuring a seamless and deeply immersive experience for users. As we navigate the intricate landscape of VR game development, this guide illuminates the path for developers and enthusiasts alike, emphasizing the art of optimization. Explore the evolving strategies, from frame rate dynamics to platform-specific considerations, to elevate VR game development to new heights.

Optimizing performance in VR game development

Optimizing performance in virtual reality (VR) game development is crucial to delivering a smooth and immersive experience for users. VR places unique demands on hardware and software, and achieving high performance requires careful consideration of various factors. Here's a guide to the art of optimization in VR game development:

Frame Rate Optimization:

Maintaining a consistently high frame rate is not merely a technical requirement but a cornerstone of user comfort and immersion in VR. A target of 90 Hz or higher is widely used; matching the headset's refresh rate keeps motion fluid and helps reduce motion sickness. Dynamic resolution scaling adds a layer of adaptability by adjusting image quality based on the complexity of the scene and the rendering load.
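Here is a minimal sketch of dynamic resolution scaling, written in Python for readability. It assumes GPU frame time grows roughly with pixel count, which is the square of the render scale. The function names, the 90% headroom and the 0.7 to 1.3 clamp range are illustrative assumptions, not any engine's API.

```python
import math


def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available to render one frame at the given refresh rate."""
    return 1000.0 / refresh_hz


def next_render_scale(scale: float, gpu_ms: float, refresh_hz: float = 90.0,
                      headroom: float = 0.9, min_scale: float = 0.7,
                      max_scale: float = 1.3) -> float:
    """Choose the render scale for the next frame from the last frame's GPU time.

    Assumption: GPU cost is roughly proportional to pixel count, i.e. to
    scale**2, so the scale is corrected by the square root of the time ratio.
    """
    target_ms = frame_budget_ms(refresh_hz) * headroom
    proposed = scale * math.sqrt(target_ms / gpu_ms)
    # Clamp so the image never becomes too blurry or too expensive.
    return max(min_scale, min(max_scale, proposed))


# At 90 Hz the budget is about 11.1 ms; a 20 ms frame forces a lower scale.
print(round(frame_budget_ms(90), 2))           # 11.11
print(round(next_render_scale(1.0, 20.0), 3))  # 0.707
```

In practice the correction would usually be smoothed over several frames so the resolution does not visibly pump up and down.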

Culling and Occlusion Techniques:

The art of rendering lies not only in showing what is seen but, equally, in skipping what is not. Frustum culling renders only the objects inside the camera's view frustum, saving computational resources. Occlusion culling goes further and skips objects that are hidden behind other objects in the scene, improving performance again.
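The frustum test can be sketched with bounding spheres and the six frustum planes. The frustum below is a hypothetical 90-degree view looking down +z. Occlusion culling needs scene-specific data, such as precomputed visibility or a depth buffer, so it is not shown here.

```python
import math
from dataclasses import dataclass

Vec3 = tuple[float, float, float]


@dataclass
class Plane:
    normal: Vec3  # unit normal pointing into the visible volume
    d: float      # plane equation: dot(normal, p) + d = 0

    def signed_distance(self, p: Vec3) -> float:
        return sum(n * c for n, c in zip(self.normal, p)) + self.d


def sphere_visible(center: Vec3, radius: float, planes: list[Plane]) -> bool:
    """Conservative test: False only if the sphere is fully behind some plane."""
    return all(pl.signed_distance(center) >= -radius for pl in planes)


def frustum_cull(objects, planes):
    """objects: (name, center, radius) tuples; returns the names worth drawing."""
    return [name for name, center, radius in objects
            if sphere_visible(center, radius, planes)]


# Hypothetical 90-degree frustum looking down +z, near = 1, far = 100.
s = 1 / math.sqrt(2)
FRUSTUM = [
    Plane((0, 0, 1), -1.0),    # near:   z >= 1
    Plane((0, 0, -1), 100.0),  # far:    z <= 100
    Plane((s, 0, s), 0.0),     # left:   x >= -z
    Plane((-s, 0, s), 0.0),    # right:  x <= z
    Plane((0, s, s), 0.0),     # bottom: y >= -z
    Plane((0, -s, s), 0.0),    # top:    y <= z
]

scene = [("crate", (0, 0, 10), 1.0), ("behind", (0, 0, -5), 1.0),
         ("far_left", (-50, 0, 10), 1.0), ("edge", (-10.5, 0, 10), 1.0)]
print(frustum_cull(scene, FRUSTUM))  # ['crate', 'edge']
```

The test is deliberately conservative. A sphere near a frustum corner may be kept even though it is just outside, which costs a little extra drawing but never removes something visible.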

Level of Detail (LOD) Management:

The spatial relationship between the player and in-game objects demands a nuanced approach to detailing. Implementing various levels of detail for models based on their proximity to the user optimizes rendering performance. Simultaneously, dynamic adjustments to shader complexity based on distance ensure efficient GPU utilization.
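A distance-based LOD pick can be sketched as follows. The switch distances are hypothetical. The `lod_bias` multiplier is conceptually similar to settings some engines expose (Unity's `QualitySettings.lodBias`, for example), although Unity actually switches LODs on screen-relative size rather than raw distance.

```python
def select_lod(distance: float, switch_distances: list[float],
               lod_bias: float = 1.0) -> int:
    """Return the LOD index to draw: 0 is the full-detail mesh.

    switch_distances are ascending distances (metres, hypothetical) at which
    the next coarser LOD takes over. lod_bias scales every threshold, so a
    bias above 1.0 keeps detailed meshes in use farther from the player.
    """
    for index, threshold in enumerate(switch_distances):
        if distance < threshold * lod_bias:
            return index
    return len(switch_distances)  # coarsest LOD beyond the last threshold


THRESHOLDS = [10.0, 25.0, 60.0]  # LOD0 below 10 m, LOD1 below 25 m, LOD2 below 60 m, else LOD3
print([select_lod(d, THRESHOLDS) for d in (5, 15, 40, 100)])       # [0, 1, 2, 3]
print([select_lod(d, THRESHOLDS, 2.0) for d in (5, 15, 40, 100)])  # [0, 0, 1, 2]
```

Production systems often add a small hysteresis band around each threshold, so an object hovering near a boundary does not flicker between two LODs.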

Texture and Material Efficiency:

The texture and material intricacies significantly impact both visual quality and performance. Opting for efficient texture compression formats reduces memory bandwidth demands. Furthermore, amalgamating similar materials minimizes draw calls, a critical factor in rendering efficiency.
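A little arithmetic shows why compression matters. Block-compressed formats store a fixed number of bytes per block of pixels. The table below lists the standard block layouts for a few common formats. ETC2 and ASTC are typical on mobile and standalone headsets, while BC formats are typical on PC GPUs. The function only sizes the top mip level.

```python
import math

# (block width, block height, bytes per block) for a few common formats.
FORMATS = {
    "RGBA8 (uncompressed)": (1, 1, 4),
    "ETC2 RGB": (4, 4, 8),
    "BC7": (4, 4, 16),
    "ASTC 6x6": (6, 6, 16),
}


def texture_bytes(width: int, height: int, fmt: str) -> int:
    """Size of the top mip level only; a full mip chain adds roughly one third."""
    block_w, block_h, block_bytes = FORMATS[fmt]
    # Partial blocks at the edges still occupy a whole block.
    blocks = math.ceil(width / block_w) * math.ceil(height / block_h)
    return blocks * block_bytes


for name in FORMATS:
    size = texture_bytes(2048, 2048, name)
    print(f"{name:22} {size / 2**20:6.2f} MiB")
```

For a 2048 x 2048 texture this gives 16 MiB uncompressed, 4 MiB for BC7 and about 1.8 MiB for ASTC 6x6. Every frame that samples the texture reads from a correspondingly smaller footprint.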

Shader Optimization:

The visual aesthetic of VR environments is intricately tied to shaders, yet their optimization is an art. Streamlining shaders for optimal performance, without compromising the richness of visual experiences, is a delicate balance. Minimizing shader switches is imperative to reduce the GPU workload and maintain smooth rendering.
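One common way to cut shader and material switches is to sort opaque draw calls by shader and then by material before submitting them. The sketch below counts binds before and after sorting. The `DrawCall` record and the mesh, shader and material names are hypothetical.

```python
from typing import NamedTuple


class DrawCall(NamedTuple):
    mesh: str
    shader: str
    material: str


def count_state_changes(draws: list[DrawCall]) -> tuple[int, int]:
    """Count shader and material binds when draws are submitted in order."""
    shader_binds = material_binds = 0
    prev = None
    for d in draws:
        if prev is None or d.shader != prev.shader:
            shader_binds += 1
        if prev is None or d.material != prev.material:
            material_binds += 1
        prev = d
    return shader_binds, material_binds


def sort_for_batching(draws: list[DrawCall]) -> list[DrawCall]:
    # Group by shader first (typically the costlier switch), then by material.
    return sorted(draws, key=lambda d: (d.shader, d.material))


frame = [DrawCall("rock", "lit", "stone"), DrawCall("tree", "foliage", "leaves"),
         DrawCall("wall", "lit", "stone"), DrawCall("bush", "foliage", "leaves"),
         DrawCall("crate", "lit", "wood")]
print(count_state_changes(frame))                     # (5, 5)
print(count_state_changes(sort_for_batching(frame)))  # (2, 3)
```

This ordering suits opaque geometry. Transparent objects generally still need back-to-front sorting for correct blending.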

Physics Efficiency:

In the dynamic interplay of objects within VR spaces, physics efficiency is paramount. Simplifying collision shapes and reducing the complexity of physics interactions contribute significantly to overall performance. Implementing dynamic physics level-of-detail adjustments based on object proximity further refines this aspect.
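A physics level-of-detail scheme can be sketched as a table of distance tiers. Each tier sets how often an object is simulated and which collider shape it uses. The tier distances, update intervals and collider names below are hypothetical.

```python
# Hypothetical physics LOD tiers: (max distance in metres, update every N frames, collider).
PHYSICS_TIERS = [
    (2.0, 1, "convex_hull"),      # within arm's reach: full rate, closer-fitting collider
    (15.0, 2, "box"),             # mid range: half rate, simple box
    (float("inf"), 4, "sphere"),  # far away: quarter rate, cheapest shape
]


def physics_tier(distance: float) -> tuple[int, str]:
    """Return (update interval in frames, collider shape) for an object."""
    for max_distance, interval, collider in PHYSICS_TIERS:
        if distance <= max_distance:
            return interval, collider
    raise ValueError("distance must not be NaN")


def should_step(frame_index: int, distance: float) -> bool:
    """True on the frames where this object's physics should be advanced."""
    interval, _ = physics_tier(distance)
    return frame_index % interval == 0


print(physics_tier(1.0), physics_tier(10.0), physics_tier(500.0))
```

An object stepped every N frames should be advanced by the accumulated time, not a single frame's timestep, so that it keeps moving at the right speed.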

Audio Rendering Optimization:

Spatial audio is a key element in elevating the immersive qualities of VR experiences. However, it must be implemented judiciously to avoid overburdening the CPU. Techniques such as audio occlusion and simplified models of sound propagation keep spatial audio convincing while keeping its processing cost under control.
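A cheap way to approximate occlusion is to combine distance attenuation with a fixed damping factor whenever a single raycast from listener to source is blocked. The sketch below assumes the raycast result is already available. The damping value of 0.3 is made up for illustration.

```python
def source_gain(distance: float, occluded: bool, ref_distance: float = 1.0,
                occlusion_gain: float = 0.3) -> float:
    """Volume multiplier for one sound source.

    Distance attenuation follows an inverse-distance curve clamped at
    ref_distance. This is the same shape as OpenAL's inverse-distance-clamped
    model with a rolloff factor of 1, ignoring its maximum-distance clamp.
    'occluded' is assumed to come from one raycast between listener and
    source; occlusion_gain is a hypothetical damping value.
    """
    attenuation = ref_distance / max(distance, ref_distance)
    return attenuation * (occlusion_gain if occluded else 1.0)


print(source_gain(4.0, occluded=False), source_gain(4.0, occluded=True))
```

Audio engines usually also apply a low-pass filter to occluded sources, because walls absorb high frequencies more than low ones. A single raycast per source per frame remains far cheaper than simulating full propagation.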

UI/UX Streamlining:

The user interface in VR should be a seamless extension of the immersive environment. Keeping UI elements straightforward is critical for performance. Optimizing UI rendering and minimizing resource-intensive UI animations ensure a smooth user experience without compromising the visual integrity of the VR space.

Asynchronous Reprojection Implementation:

In the dynamic landscape of VR, occasional frame rate drops are inevitable. Asynchronous reprojection re-projects the most recently rendered frame using the latest head-tracking data. The display therefore keeps responding smoothly to head movement even when rendering misses a frame.
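The core geometry of rotation-only reprojection can be sketched for a single case: a pure yaw change, measured at the centre of the image, with a pinhole camera. Real runtimes warp every pixel with the full head rotation. The field of view and image width below are hypothetical.

```python
import math


def focal_length_px(fov_deg: float, width_px: int) -> float:
    """Pinhole-camera focal length in pixels for a given horizontal field of view."""
    return (width_px / 2) / math.tan(math.radians(fov_deg) / 2)


def reprojection_shift_px(yaw_rendered_deg: float, yaw_display_deg: float,
                          fov_deg: float, width_px: int) -> float:
    """Horizontal offset, at the image centre, between where content was
    rendered and where it belongs for the newer head yaw.

    The old frame is shifted by this amount opposite to the head turn. Only
    yaw is handled; positional movement and animated objects are not.
    """
    delta = math.radians(yaw_display_deg - yaw_rendered_deg)
    return focal_length_px(fov_deg, width_px) * math.tan(delta)


# Head turned 1 degree between render and scan-out: 90-degree FOV, 2000 px wide eye.
print(round(reprojection_shift_px(0.0, 1.0, 90.0, 2000), 1))  # 17.5
```

Even a 1-degree turn moves content by more than 17 pixels in this setup. Showing a stale frame without correction would therefore produce visible judder.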

Platform-specific Strategies:

VR platforms come with unique specifications and considerations. Adhering to platform-specific guidelines is paramount for successful optimization. Rigorous profiling on target hardware helps identify and address performance bottlenecks, tailoring the VR experience to the specificities of each platform.

Testing and Feedback Integration:

The iterative nature of VR game development services demands continuous testing across diverse devices and platforms. User feedback becomes a crucial instrument in this optimization orchestra, offering valuable insights into performance issues and guiding targeted improvements.

Multi-threading and Parallelization:

The computational demands of VR experiences often necessitate a distributed approach. Distributing tasks across multiple threads taps into the capabilities of modern CPUs, while strategic parallelization, especially in CPU-intensive tasks, unlocks the full potential of the hardware.
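The partitioning pattern behind this kind of parallelization can be sketched with a thread pool. Each task gets a contiguous slice of objects and writes only to its own indices, so no locks are needed. One caveat: in CPython the global interpreter lock prevents pure-Python math from actually running in parallel. Engines use native job systems for this (Unity's C# Job System, for example), and the sketch only illustrates how the work is split.

```python
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(n_items: int, n_chunks: int) -> list[tuple[int, int]]:
    """Split range(n_items) into n_chunks contiguous, nearly equal slices."""
    size, remainder = divmod(n_items, n_chunks)
    ranges, start = [], 0
    for i in range(n_chunks):
        end = start + size + (1 if i < remainder else 0)
        ranges.append((start, end))
        start = end
    return ranges


def parallel_integrate(positions, velocities, dt, workers=4):
    """Advance positions by velocity * dt, one slice of objects per task.

    Each task writes only to its own indices of 'out', so no locking is needed.
    """
    out = [None] * len(positions)

    def work(span):
        start, end = span
        for i in range(start, end):
            (px, py, pz), (vx, vy, vz) = positions[i], velocities[i]
            out[i] = (px + vx * dt, py + vy * dt, pz + vz * dt)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        # list() waits for every task and re-raises any exception from a worker.
        list(pool.map(work, chunk_ranges(len(positions), workers)))
    return out


print(chunk_ranges(10, 3))  # [(0, 4), (4, 7), (7, 10)]
```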

Memory Management:

Efficient memory management is fundamental to sustained performance. Implementing texture atlases minimizes memory overhead, while judiciously minimizing garbage collection, especially in languages with automatic memory management, contributes to the overall efficiency of the VR application.
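A common way to reduce garbage-collection pressure is an object pool. Frequently spawned objects such as projectiles are reused instead of being allocated every frame. The sketch below is generic, and the `Projectile` class is hypothetical. Texture atlases are not covered here.

```python
from typing import Callable, Generic, TypeVar

T = TypeVar("T")


class ObjectPool(Generic[T]):
    """Reuse objects instead of allocating new ones every frame."""

    def __init__(self, factory: Callable[[], T], reset: Callable[[T], None],
                 prewarm: int = 0) -> None:
        self._factory = factory
        self._reset = reset
        self._free: list[T] = [factory() for _ in range(prewarm)]
        self.created = prewarm  # how many objects were ever allocated

    def acquire(self) -> T:
        if self._free:
            return self._free.pop()
        self.created += 1  # pool ran dry: fall back to a real allocation
        return self._factory()

    def release(self, obj: T) -> None:
        self._reset(obj)  # clear state so the next user starts fresh
        self._free.append(obj)


class Projectile:  # hypothetical pooled game object
    def __init__(self) -> None:
        self.position = (0.0, 0.0, 0.0)
        self.alive = False


def reset_projectile(p: Projectile) -> None:
    p.position, p.alive = (0.0, 0.0, 0.0), False
```

Prewarming the pool during a loading screen moves the allocations out of gameplay. Steady-state frames then create no garbage for these objects.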

VR-specific Considerations:

The advent of VR introduces a new realm of possibilities and challenges. Utilizing VR-specific APIs and SDKs becomes paramount for tapping into the full potential of VR hardware. Additionally, fine-tuning rendering processes for stereo views in VR environments ensures an optimal visual experience tailored to the unique demands of virtual reality.
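The geometry behind stereo rendering is simple: each eye is offset from the head position by half the interpupillary distance (IPD) along the head's right vector. In practice the runtime reports per-eye poses, for example through OpenXR's `xrLocateViews`. The sketch shows why the two views share almost all of their scene data, which is what single-pass and multiview stereo techniques exploit. The 63 mm default is an assumed typical value.

```python
def eye_positions(head_pos, right_dir, ipd_m=0.063):
    """Return (left_eye, right_eye) world positions.

    head_pos is the midpoint between the eyes, right_dir a unit vector
    pointing to the user's right; ipd_m is the interpupillary distance.
    """
    half = ipd_m / 2
    left = tuple(h - r * half for h, r in zip(head_pos, right_dir))
    right = tuple(h + r * half for h, r in zip(head_pos, right_dir))
    return left, right


left_eye, right_eye = eye_positions((0.0, 1.6, 0.0), (1.0, 0.0, 0.0))
print(left_eye, right_eye)
```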

Continuous Optimization Practices:

Optimization is not a one-time effort but a dynamic, iterative process. The development lifecycle must embrace continuous testing, profiling, and updates that incorporate performance enhancements based on user feedback. This iterative approach ensures that the VR game remains finely tuned to evolving requirements and hardware capabilities.
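Continuous profiling can be turned into an automatic check, for example in a build pipeline that replays a test scene. The sketch below summarises a frame-time capture against the refresh-rate budget. The 95th-percentile target and the 1% over-budget allowance are hypothetical thresholds, and the frame times would come from a profiler capture.

```python
import math


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))  # 1-based nearest rank
    return ordered[rank - 1]


def frame_time_report(frame_ms: list[float], refresh_hz: float = 90.0,
                      pct: float = 95.0, max_over_budget: float = 0.01) -> dict:
    """Summarise a frame-time capture against the refresh-rate budget."""
    budget = 1000.0 / refresh_hz
    p_ms = percentile_nearest_rank(frame_ms, pct)
    over = sum(t > budget for t in frame_ms) / len(frame_ms)
    return {
        "budget_ms": budget,
        f"p{pct:g}_ms": p_ms,
        "over_budget_fraction": over,
        "passed": p_ms <= budget and over <= max_over_budget,
    }


print(frame_time_report([10.0] * 99 + [15.0]))
```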

Optimizing VR game performance is a constant process that involves a balance between visual fidelity and smooth interaction. Regular testing and profiling are essential to identify and address performance bottlenecks. Keep in mind that VR hardware and software are continually evolving, so staying informed about the latest advancements is crucial for maintaining optimal performance.

Shamla Tech: Elevating VR Game Development with Optimal Performance Expertise

Shamla Tech, a leader in VR development services, pioneers optimal performance in virtual reality game development. Their expertise shines in crafting cutting-edge VR experiences, with a meticulous focus on performance optimization. As industry trailblazers, Shamla Tech seamlessly integrates the latest advancements, adheres to best practices, and employs a continuous optimization approach. With a commitment to user feedback, rigorous testing, and platform-specific strategies, Shamla Tech ensures VR games exceed expectations. Clients benefit from a strategic partnership that not only embraces the dynamic landscape of VR but also elevates their virtual reality endeavors to unprecedented levels of innovation and seamless user engagement.


Discuss the use of Unity in creating diverse genres of games.

Unity, a popular cross-platform game engine, lets designers produce engaging 2D and 3D experiences. It was created by Unity Technologies and first released in 2005. Games, simulations, virtual reality (VR), and augmented reality (AR) applications are just a few of the projects that benefit from Unity's adaptability, usability, and wide range of capabilities.

Unity's key attributes include:

Cross-platform compatibility: Unity enables programmers to create games and apps that run on a variety of platforms, including Windows, macOS, Linux, Android, iOS, consoles (such as PlayStation and Xbox), and web browsers.

Scripting: Unity uses the C# programming language for writing custom behaviors and gameplay logic. Earlier versions also supported UnityScript (a JavaScript-like language) and Boo, but both have since been deprecated and removed.

Asset Store: Unity's Asset Store is an online marketplace where developers can obtain a variety of pre-made components, both free and paid, including 3D models, textures, audio clips, scripts, and more. This lets developers speed up development by reusing existing assets or acquiring new ones.

Physics and animation: Unity has a physics engine integrated into it that manages collisions, interactions, and realistic physics simulations. It also offers a potent animation engine for producing and managing animations of characters and objects.

Performance enhancement: To enhance the performance of games and applications, Unity provides a number of tools and capabilities. This covers features including occlusion culling, level-of-detail (LOD) rendering, and asset bundling in addition to tools for benchmarking, debugging, and optimizing code.

Collaboration: Unity facilitates teamwork by including tools like scene merging, asset serialization, and version control integration. This makes it possible for numerous developers to work on the same project at once.

Extensibility: Unity's functionality can be extended with custom plugins and packages, or by integrating third-party tools and libraries.

For more information, visit: https://www.fusiontechlab.com/


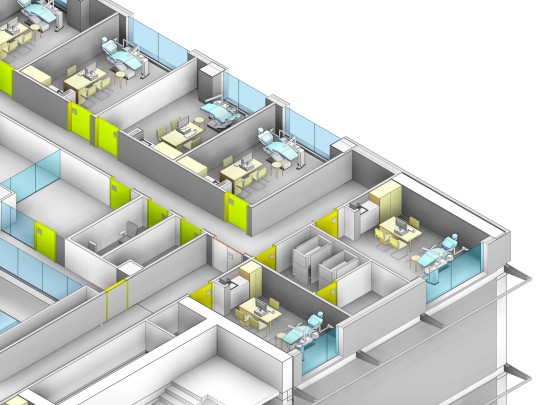

BIM Advantages for Healthcare Construction Projects

Implementing BIM technology for hospital infrastructure is a winning solution for AEC firms aiming for fast, high-quality construction. Highly detailed, information-rich and coordinated BIM models reduce the risk of errors, enable better scheduling, achieve estimation accuracy and help project managers develop customized infrastructure. Construction technology delivers additional quick wins.

What are the challenges faced in hospital construction during Covid times?

Building healthcare facilities in the midst of a pandemic of this scale comes with challenges of equal magnitude. How well governments and the AEC sector address them will determine the health of hospital construction in these rapidly changing times.

How BIM adoption helps overcome healthcare construction challenges

BIM in healthcare improves collaboration among stakeholders, from draftsmen to general contractors, architects, engineers, fabricators, and owners. Whether the task is adhering to social-distancing norms or creating new hospital areas to handle increased patient intake, fully integrated, information-rich BIM models streamline approvals, design, and construction activities. BIM reduces waste and maximizes value at every phase of healthcare construction projects.

Build faster with BIM

While the need for healthcare facilities is rising sharply amid COVID-19, the inherent complexity of such construction projects threatens to slow progress. BIM tools are well positioned to accelerate healthcare construction. Preconstruction visualization, interdisciplinary coordination, and better scheduling and planning help avoid costly rework at a later stage and speed up the construction process. Construction technology has also been shown to shrink timelines significantly.

Save costs with enhanced coordination

Detailed 3D BIM models enable cross-functional teams to communicate at a deeper level, clarifying project roles, responsibilities, scope of work, LOD, and so on. This collaboration makes shared goals clearer and streamlines the overall process. The resulting reduction in rework and higher resource utilization saves costs.

Reduce construction rework with clash detection and resolution

Producing clash-free 3D BIM models at the earliest design stage helps save time and mitigate rework in later phases of a project. With multiple MEP systems working in sync, a combined 3D BIM model is the most practical solution for effective planning of healthcare infrastructure.

Adopting a 3D model for clash or interference detection reduces RFIs during the construction cycle. This saves large amounts of money and time, because clashes are identified digitally before they become issues on site. Walkthroughs can be integrated with VR/AR technology to understand critical routes through the hospital facility while limiting the chance of rework.
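The first pass of clash detection can be sketched with axis-aligned bounding boxes. Dedicated clash detection software tests the actual geometry, and box overlap is only the quick broad-phase filter that comes before that. The element names, disciplines and coordinates below are hypothetical.

```python
from dataclasses import dataclass
from itertools import combinations

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Element:
    name: str
    discipline: str  # e.g. "mechanical", "structural", "plumbing"
    min_pt: Point    # axis-aligned bounding box corners, metres
    max_pt: Point


def boxes_clash(a: Element, b: Element, clearance: float = 0.0) -> bool:
    """True if the boxes intersect, or would intersect after growing one of
    them by 'clearance' on every side (a 'clearance clash')."""
    return all(a.min_pt[i] < b.max_pt[i] + clearance and
               b.min_pt[i] < a.max_pt[i] + clearance for i in range(3))


def find_clashes(elements, clearance=0.0):
    """Pairwise check between elements of different disciplines (O(n^2))."""
    return [(a.name, b.name) for a, b in combinations(elements, 2)
            if a.discipline != b.discipline and boxes_clash(a, b, clearance)]


model = [
    Element("duct-01", "mechanical", (0, 0, 3.0), (10, 0.5, 3.5)),
    Element("beam-07", "structural", (4, -1, 3.2), (5, 2.0, 3.8)),
    Element("pipe-12", "plumbing", (0, 2.0, 3.0), (10, 2.2, 3.2)),
]
print(find_clashes(model))                  # [('duct-01', 'beam-07')]
print(find_clashes(model, clearance=0.05))  # [('duct-01', 'beam-07'), ('beam-07', 'pipe-12')]
```

The clearance parameter also flags elements that merely touch or sit too close together. This matters when services need room for insulation or maintenance access.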

Manage space better with BIM

Healthcare facilities depend on meticulous space management. BIM, with its new design models, layouts and materials, ensures that design intent adheres to client specifications and expectations.

BIM modeling allows precise room dimensions, placement of healthcare devices, energy calculations, lighting systems, walking zones, and so on. The result is a space-optimized healthcare facility that provides a comfortable environment for patients and healthcare personnel.

Track and monitor your hospital assets better

Architects, engineers and others store building asset data in the Revit model, making it easy to extract and access from anywhere.

Because healthcare units are equipped with a vast range of instruments and devices, BIM allows all asset data to be fed directly into the inventory for model visualization. It also tracks installed equipment for operations and facilities management through standardized methods such as COBie. A computerized maintenance management system allows this information to be reviewed even in the pre-construction stage.


Arizona Sunshine Gets Special PlayStation 4 Pro Features

PlayStation 4 Pro owners have bragging rights when it comes to the specs of the updated console. It is, however, sometimes difficult to know exactly how those improved specifications affect the titles available on it. In a Reddit ‘Ask Me Anything’, developers at Vertigo Games revealed exactly which enhanced features players of the PlayStation 4 Pro version of Arizona Sunshine were getting compared to the standard version.

The Reddit AMA revealed that most of the improvements were in the realm of graphics. The core gameplay remains exactly the same between the PlayStation 4 Pro and PlayStation 4 versions. However, PlayStation 4 Pro owners get improved visuals from multisample anti-aliasing (MSAA), a technique that smooths jagged edges. The PS4 Pro version also uses higher render scales, which further reduce aliasing. The fog distance extends further, and there is a higher LOD bias, which keeps higher-detail versions of models in use at greater distances.

The PlayStation 4 and PlayStation 4 Pro share the same CPU architecture (clocked higher on the Pro) and 8 GB of GDDR5 RAM. The PlayStation 4 Pro, however, has a considerably more powerful AMD Radeon-based GPU rated at 4.2 TFLOPS, compared with 1.8 TFLOPS on the standard model, which enables the PlayStation 4 Pro to output 4K video.

Arizona Sunshine will be heading to PlayStation 4 and PlayStation 4 Pro on 27th June, 2017. The developers have confirmed the title is ‘virtually identical’ to the PC version. The PlayStation VR version of the title will be compatible with the new PlayStation VR Aim Controller, for a more immersive experience.

VRFocus will bring you further information on Arizona Sunshine and PlayStation VR titles as it becomes available.

from VRFocus http://ift.tt/2rBEUx6


Virtual construction modeling benefits Architectural firms in design processes

Architectural firms operate as if they are wielding a double-edged sword. They love designing buildings, which is a creative pursuit, but running a firm takes more than following a passion. Architects are compelled to invest in construction systems and the latest technology to support them. They must also hire, train and retain junior architects, which is not everyone’s favorite task. And despite all this, architects struggle to keep pace with evolving trends and technology in the construction industry while pursuing building designs.

Building Information Modeling, or BIM, is one technology that changed the entire game. Also known as virtual construction modeling, BIM gives architectural firms a competitive advantage by enhancing profitability and ROI and by increasing the number of successful construction projects. BIM modeling begins at the conceptual design stage and continues through facility management. Increasing numbers of architectural firms are turning to BIM to gain a competitive advantage and improve productivity. Various studies and surveys suggest rapid adoption of BIM across the building industry and around the world.

Let’s check out how virtual construction modeling benefits architectural firms in design processes.

Ever increasing BIM adoption

BIM (Building Information Modeling) is quickly becoming the standard for construction in major countries. The use of digital building models for virtual design, construction and collaboration is picking up pace rapidly. Governments, organizations, and even occupants and owners are now inclined to use BIM on new building projects. Most of these countries have BIM standards mandating that certain BIM levels be achieved on projects. BIM levels refer to levels of BIM maturity, which range from Level 0 to Level 3 and beyond.

· Level 0 - 2D CAD is utilized instead of 3D and hence no collaboration is done.

· Level 1 – 3D CAD for conceptualizing, 2D for drafting and approval documentation; data shared in a CDE (common data environment).

· Level 2 – Collaboration through information exchange: everyone involved in the building construction project uses a 3D CAD model, but they don’t collaborate on a single shared model.

· Level 3 – Full team collaboration amongst all involved in the construction process across disciplines. Using a single and shared project model, they can modify it and also view changes done by others – instantaneously.

LOD - as a Lifecycle BIM tool

LOD (Level of Development) helps architecture firms specify and articulate the content and reliability of Building Information Models at various stages of the design and construction process. The LOD framework identifies and resolves issues through industry-developed standards that describe the state of development of various systems within a BIM. These LOD standards ensure consistency in the execution and communication of BIM milestones and deliverables.

With the help of the LODs listed below, architects can better serve their clients through information extracted from the models.

· LOD 100 – Conceptual stage – Symbols with attached approximate information. No geometric info in the model elements.

· LOD 200 - Design development stage - Recognizable objects or space allocations for coordination between the disciplines.

· LOD 300 - Documentation stage - Design intent to support costing and bidding. Generate construction documents and shop drawings. Accurate measurements and locations can be taken from the models and drawings.

· LOD 350 - Cross trade coordination stage - Includes connections and interfaces between disciplines

· LOD 400 - Construction stage - Detailing, fabrication and installation/ assembly. Contractor can split construction requirements to assign it further to sub-contractors.

· LOD 500 – Facility management - As-built and field verified geometry and information suitable to assist in building operations and maintenance.

MEP BIM Coordination

Until recent years, engineers and architects struggled to develop better schedules, minimize material and cost losses and maximize production efficiency. The major hurdle was spatial conflicts between the various MEP (mechanical, electrical and plumbing) components. Virtual construction modeling, with clash avoidance and detection in software such as Revit and Navisworks, changed the game entirely. Architectural firms can now extract detailed sectional views from coordination and BIM models to provide accurate information to mechanical, HVAC, electrical and plumbing contractors and engineers. Architects can support the client’s engineering team across submittal, review, coordination, and post-construction processes.

BIM for photorealistic rendering

Architectural BIM modeling takes the 3D rendering process a step further. Models developed for BIM can be used for photorealistic rendering, and the same models can then be turned into architectural AR/VR walkthroughs. Credit goes to the technical advancements taking place in the architectural field. In the hands of experienced BIM practitioners, the 3D modeling process can also convert 2D plans or hand-drawn sketches into virtual but realistic models that can be used for sales and marketing.

Parametric BIM modeling

BIM modeling is synonymous with innovative, automated processes. Its biggest benefit is that any change made in the 3D environment is automatically reflected in the 2D views. Every connected view and element is updated automatically, which saves much of the time and effort otherwise spent incorporating design changes. Because nothing has to be changed manually, the chance of missing an element is negligible, which improves accuracy.
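The single-source-of-truth idea behind parametric modeling can be illustrated with a toy element. Here views and quantities are computed from the element's parameters instead of being drawn separately, so one edit updates all of them. This is a hypothetical miniature, not how Revit or any other BIM tool stores its data.

```python
from dataclasses import dataclass


@dataclass
class Wall:
    """A toy parametric element: views and quantities are derived, never stored."""
    length_m: float
    height_m: float
    thickness_m: float

    @property
    def area_m2(self) -> float:
        return self.length_m * self.height_m

    @property
    def volume_m3(self) -> float:
        return self.area_m2 * self.thickness_m


def plan_view(wall: Wall) -> str:
    return f"plan: {wall.length_m:g} m x {wall.thickness_m:g} m"


def elevation_view(wall: Wall) -> str:
    return f"elevation: {wall.length_m:g} m x {wall.height_m:g} m"


wall = Wall(length_m=5.0, height_m=3.0, thickness_m=0.2)
wall.length_m = 6.0          # one edit in the model...
print(plan_view(wall))       # plan: 6 m x 0.2 m
print(elevation_view(wall))  # elevation: 6 m x 3 m
print(wall.area_m2)          # 18.0
```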

Designing and developing construction projects with Virtual Construction Modeling, whether they are residential, commercial, mixed-use or infrastructure projects, gives architectural firms the flexibility to manage design changes through the multiple options BIM software makes available.

BIM makes a wide range of tasks far more convenient for architects. These include adding attractive visual effects to 3D work, rendering photorealistic images, extracting 3D floor plans, and developing architectural simulations, colored elevations, 3D site views and topography. Frequent design changes once caused endless communication issues, leading to budget overruns, pushed timelines and other problems across the project. Those problems belong to the darkest era of the building construction industry, the era before BIM.

The collaborative approach of BIM services brings all AEC professionals (architects, engineers, subcontractors, contractors, and owners) together for design presentations in 3D, so they can visualize the virtual building and give constructive feedback.

BIM for maintaining building data

Apart from improved operational efficiency and profits, maintaining building data is a prominent added advantage of using BIM modeling for construction projects. Besides 3D geometry, virtual building models give AEC professionals and occupants data management for the entire building, including quantities and materials used, time schedules, etc. All this information can be embedded in the 3D BIM model while the building is constructed virtually. This rich data is used to produce both accurate drawings and 3D models, which further reduces on-site errors. It also enables on-time completion without compromising quality, while leaving room for creativity.
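Quantities embedded in a model can be rolled up into a schedule. The sketch below totals volume per material from a list of element records. The record fields and values are hypothetical stand-ins for data exported from a BIM model.

```python
from collections import defaultdict


def quantity_takeoff(elements: list[dict]) -> dict[str, float]:
    """Total volume per material, the kind of schedule a BIM model can produce."""
    totals: dict[str, float] = defaultdict(float)
    for element in elements:
        totals[element["material"]] += element["volume_m3"]
    return dict(sorted(totals.items()))  # alphabetical by material


elements = [  # hypothetical element records exported from a model
    {"id": "W-01", "material": "concrete", "volume_m3": 3.6},
    {"id": "S-01", "material": "concrete", "volume_m3": 12.0},
    {"id": "B-01", "material": "steel", "volume_m3": 0.4},
]
print(quantity_takeoff(elements))
```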

Virtual building models for real-time feedback

BIM enables a seamless design and communication process. BIM modeling speeds up feedback, implementation, and amendments. Architects construct buildings digitally by providing materials, textures, lighting, and photorealism inputs to BIM modelers. Once the virtual building model is ready, giving and receiving feedback in real time becomes an interactive activity. Feedback from anyone involved in the construction process becomes extremely useful, because the required changes are made in the 3D model before construction begins. As an added advantage, offering multiple design options and 3D views for better acceptance can mark you as a progressive architectural firm.

BIM modeling services

Architects and architectural firms are expected to be good at designing and developing buildings; that is their core strength. Keen to take advantage of technological advancements, architects try their best to keep pace with trends in the overall design process. Architectural BIM services are one such strong player in advanced technology, but unfortunately not many architectural firms are inclined toward them. They see Building Information Modeling as an additional investment in hiring BIM-trained architects, upgrading existing systems and much more. In doing so, they ignore the benefits of BIM for architectural firms in terms of accurate designs, ROI and successful project completion.

A sure-shot solution to this situation is to outsource BIM services and start reaping the operational advantages. Once ROI increases and operational hazards disappear, architectural firms can focus on their core activity of designing and developing buildings. They will then be ready, financially and mentally, to invest in an in-house 3D modeling team and enjoy the benefits of Virtual Construction Modeling. Like any outsourced project, handing BIM to third-party experts may bring some initial teething issues, but then, which construction project does not have them?

Virtual Construction Modeling, architectural firms & design process

The benefits of BIM for architectural firms are numerous. CAD creates 2D or 3D drawings that cannot distinguish between elements, whereas BIM now incorporates 4D (time) and 5D (cost) dimensions for building projects of every size and complexity. It helps architects manage information intelligently across the whole project. That coverage runs from conceptual and detailed design to analysis and documentation, and from manufacturing and construction logistics to operation, maintenance and renovation.

source : https://www.linkedin.com/pulse/virtual-construction-modeling-benefits-architectural-firms-hitech-bim/


Optimizing XR Performance: QualityReality’s Strategies for Smooth Interactions

XR, or extended reality, has revolutionised gaming by fusing the real and virtual worlds. But the complexity of XR can cause performance problems that interfere with smooth interaction. This blog discusses current trends, future predictions, and how to optimise XR performance in game production.

Real-time rendering, which relies on fast operation of the CPU and GPU, is essential to XR performance. It must achieve high frame rates and minimal latency to avoid motion sickness and preserve immersion. Profiling tools such as Unity’s Profiler help find performance bottlenecks. Optimising assets and code reduces rendering load, which means streamlining 3D models, textures and animations and writing efficient code. Single Pass Stereo rendering cuts the overhead of drawing the scene separately for each eye. Foveated rendering concentrates processing power on the centre of the user’s view and reduces detail in the periphery.
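Foveated rendering can be sketched as a function that picks a coarser shading rate the farther a pixel is from the focus point. With fixed foveation the focus point is the image centre; with eye tracking it is the gaze point. The two radius thresholds and three rate tiers are illustrative assumptions. On real hardware this is exposed through platform-specific features such as variable rate shading.

```python
import math
from typing import Optional


def shading_rate(x: float, y: float, width: int, height: int,
                 gaze: Optional[tuple[float, float]] = None,
                 inner: float = 0.35, outer: float = 0.7) -> int:
    """Return N, meaning one shading sample per N x N pixel block.

    Distance from the focus point is normalised so 1.0 reaches the middle of
    an image edge. Without eye tracking (fixed foveation) the focus point is
    the image centre; with eye tracking, pass the gaze point instead.
    """
    fx, fy = gaze if gaze is not None else (width / 2, height / 2)
    r = math.hypot((x - fx) / (width / 2), (y - fy) / (height / 2))
    if r <= inner:
        return 1  # full rate around the focus point
    if r <= outer:
        return 2  # one sample per 2x2 pixels
    return 4      # one sample per 4x4 pixels in the periphery


print(shading_rate(1000, 1000, 2000, 2000), shading_rate(1990, 1990, 2000, 2000))
```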

With devices like the Oculus Quest 2 and evolving engines like Unity and Unreal Engine, the XR landscape is progressing. Edge computing improves XR by cutting latency and enhancing the user experience. AI and machine learning will make dynamic, personalised virtual worlds possible. The Metaverse will create interconnected virtual spaces, and mixed-reality ecosystems will smooth transitions between AR and VR. XR will become increasingly commonplace, affecting not only gaming but also education, healthcare, and other industries. Wearable integration and multisensory technology will immerse people even further.

Strategies for improving XR performance include fog and edge computing to lower latency and increase throughput, texture reduction and Level of Detail (LOD) control for 3D assets, and profiling tools to locate bottlenecks. 5G networks also show promise.

AI-driven real-time improvements, 5G-enabled hyper-realistic experiences, haptic feedback integration, rendering powered by quantum computing, and brain interfaces for direct control over XR are some of the upcoming advancements in XR performance optimisation.

A subsidiary of GameCloud Technologies Pvt Ltd, QualityReality provides all-inclusive XR development and testing services. Their tactics make use of edge computing, blockchain, and AI to maximise XR performance and guarantee flawless, high-quality experiences.

For more details: Optimizing XR Performance: QualityReality’s Strategies for Smooth Interactions


Cinema 4D R19: European debut at IBC 2017

Although the new version of Cinema 4D is available worldwide now, the presentation at IBC 2017 will be a unique chance to meet with professionals using the program.

As ProVideo Coalition mentioned before, the new Cinema 4D Release 19 is available this September, from MAXON or its authorized dealers. MAXON Service Agreement customers whose MSA is active as of September 1, 2017 will be upgraded automatically, confirmed the company recently. Cinema 4D R19 is available for Mac OS X and Windows; Linux nodes are also available for network rendering.

This next generation of MAXON’s professional 3D application delivers both great tools and enhancements artists can put to use immediately, and provides a peek into the foundations for the future. Designed to serve individual artists as well as large studio environments, Release 19 offers a fast, easy, stable and streamlined workflow to meet today’s challenges in the content creation markets; especially general design, motion graphics, VFX, VR/AR and all types of visualization.

For a complete list of features of the new version, a visit to MAXON’s website is essential, but here are some of the highlights of Cinema 4D R19:

Viewport Improvements – Results so close to final render that client previews can be output using the new native MP4 video support.

MoGraph Enhancements – Added workflow capabilities in Voronoi Fracturing and an all-new Sound Effector.

New Spherical Camera – Lets artists render stereoscopic 360° Virtual Reality videos and dome projections.

New Polygon Reduction – Easily reduce entire hierarchies while preserving vertex maps, selection tags and UV coordinates to ensure textures continue to map properly and preserve polygon detail.

Level of Detail (LOD) Object – Define and manage settings to maximize viewport and render speed, or prepare optimized assets for game workflows. Exports FBX for use in popular game engines.

AMD’s Radeon ProRender – Now seamlessly integrated into R19, providing artists with a cross-platform GPU rendering solution.

Revamped Media Core – Completely rewritten software core to increase speed and memory efficiency for image, video and audio formats; native support for MP4 video without QuickTime.

Robust Modeling – A new modeling core with improved support for edges and N-gons can be seen in the Align and Reverse Normals commands.

BodyPaint 3D – Now uses an OpenGL painting engine, giving R19 artists a real-time display of reflections, alpha, bump or normal, and even displacement for improved visual feedback and texture painting when painting color and adding surface details in film, game design and other workflows.

MAXON Cinema 4D R19 will make its European debut at the MAXON booth in Hall 7, K30 at IBC 2017, which will take place from September 15 – 19, 2017, at the RAI Convention Center in Amsterdam. During the event, internationally-renowned 3D artists including Peter Eszenyi, Sophia Kyriacou and Tim Clapham, as well as creatives from other international studios, will share insights into and techniques used on projects created with Cinema 4D. Partners including Google, Insydium, and Redshift will present important workflow integrations with Cinema 4D. Detailed information as well as the live streaming of all presentations will be available at http://www.c4dlive.com/.

The post Cinema 4D R19: European debut at IBC 2017 appeared first on ProVideo Coalition.
