#Configure NSX Distributed Firewall

Understand the configuration and benefits of the Distributed Logical Firewall in NSX. Our guide covers essential aspects to enhance your network's security and performance. https://www.dclessons.com/distributed-logical-firewall

Cloud, Associate (JNCIA-Cloud) JN0-212 Practice Test Questions

The JN0-212 Cloud, Associate (JNCIA-Cloud) exam replaces the JN0-211 exam. PassQuestion provides the latest Cloud, Associate (JNCIA-Cloud) JN0-212 Practice Test Questions to help you prepare for the real exam. Work through all of the practice questions to raise your preparation level before attempting the exam. Once you have gone through all the JN0-212 Practice Test Questions, you should be well placed to pass the Juniper JN0-212 exam on your first attempt.

Cloud, Associate (JNCIA-Cloud) Certification

The Cloud track enables you to demonstrate competence with cloud networking architectures such as multiclouds, software-defined networking, SD-WAN, and other cloud technologies. JNCIA-Cloud, the associate-level certification in this track, is designed for networking professionals with introductory-level knowledge of Juniper Networks cloud-based networking architectures, theory, and best practices. The written exam verifies your understanding of cloud-based networking principles and technologies.

JNCIA-Cloud Exam Information

Exam Code: JN0-212

Prerequisite Certification: None

Delivered by: Pearson VUE

Exam Length: 90 minutes

Exam Type: 65 multiple-choice questions

Software Versions: Contrail 21.4, OpenStack Wallaby, Kubernetes 1.21

JNCIA-Cloud Exam Topics

Cloud Fundamentals

Identify the concepts or functionality of various fundamental elements of cloud networking:

Deployment models (public, private, hybrid cloud)

Service models: Software as a Service (SaaS), Infrastructure as a Service (IaaS), Platform as a Service (PaaS)

Cloud native architectures

Cloud automation tools

Cloud Infrastructure: Network Functions Virtualization (NFV) and SDN

Identify the concepts, benefits, or functionality of network function virtualization:

NFV architecture

NFV orchestration

Virtualized network functions (VNFs)

Identify the concepts, benefits, or functionality of SDN:

SDN architecture

SDN controller

SDN solutions

Network Virtualization

Identify concepts, operation, or functionality of network virtualization:

Virtual network types

Underlay and overlay networks

Encapsulation and tunneling (MPLSoGRE, MPLSoUDP, VXLAN, EVPN with VXLAN)

Cloud Virtualization

Identify the concepts, operation, or functionality of Linux virtualization:

Linux architecture

Hypervisor type (type 1 and 2)

Hypervisor operations and concepts

Kernel-based virtual machine (KVM), Quick Emulator (QEMU) concepts and operations

Creation of virtual machines

Identify the concepts, operation, or functionality of Linux containers:

Container versus virtual machine

Container components

Creation of containers using Docker

Cloud Orchestration with OpenStack

Identify the concepts, operation, or functionality of OpenStack:

Creation and management of virtual machines in OpenStack

Automation using HEAT templates in Yet Another Markup Language (YAML)

OpenStack UIs usage

OpenStack networking plugins

OpenStack Security Groups

Cloud Orchestration with Kubernetes

Identify the concepts, operation, or functionality of Kubernetes:

Creation and management of containers in Kubernetes

Kubernetes API Objects (Pods, ReplicaSets, Deployments, Services)

Kubernetes namespaces and Container Network Interface (CNI) plugins

Contrail Networking

Identify concepts, operation, or functionality of Contrail Networking:

Architecture

Orchestration integration

Multitenancy

Service chaining

Automation or security

Configuration

View Online Cloud, Associate (JNCIA-Cloud) JN0-212 Free Questions

Which statement is true about the vSRX Series and VMware NSX integration?

A. The NSX Distributed Firewall provides a container based layer of protection.

B. VMware NSX provides advanced Layer 4 through Layer 7 security services.

C. You can add the vSRX virtual firewall as a security element in the VMware NSX environment.

D. The NSX Distributed Firewall uses application identification.

Answer: C

Which two hypervisors does the vMX support? (Choose two)

A. KVM

B. Xen

C. ESXi

D. Hyper-V

Answer: A, C

Which two products are required when deploying vSRX as a partner security service in VMware NSX? (Choose two)

A. VMware vROps

B. VMware NSX Manager

C. Junos Space Security Director

D. IDP sensor

Answer: B, C

Which OpenStack component is responsible for user authentication and authorization?

A. Glance

B. Nova

C. Keystone

D. Neutron

Answer: C

What are two roles of sandboxing in Sky ATP? (Choose two)

A. To test the operation of security rules

B. To analyze the behavior of potential security threats

C. To validate the operation of third-party components

D. To store infected files for further analysis

Answer: B, D

Palo Alto Ova File

The VM-Series firewall is distributed using the Open Virtual Appliance (OVA) format, which is a standard method of packaging and deploying virtual machines. You can install this solution on any x86 device that is capable of running VMware ESXi.

In order to deploy a VM-Series firewall, you must be familiar with VMware and vSphere including vSphere networking, ESXi host setup and configuration, and virtual machine guest deployment.

You can deploy one or more instances of the VM-Series firewall on the ESXi server. Where you place the VM-Series firewall on the network depends on your topology. Choose from the following options (for environments that are not using VMware NSX):

One VM-Series firewall per ESXi host: Traffic from every VM server on the ESXi host passes through the firewall before exiting the host for the physical network. VM servers attach to the firewall via virtual standard switches. The guest servers have no other network connectivity, and therefore the firewall has visibility into and control over all traffic leaving the ESXi host. One variation of this use case is to also require all traffic to flow through the firewall, including server-to-server (east-west) traffic on the same ESXi host.

One VM-Series firewall per virtual network: Deploy a VM-Series firewall for every virtual network. If you have designed your network such that one or more ESXi hosts has a group of virtual machines that belong to the internal network, a group that belongs to the external network, and some others to the DMZ, you can deploy a VM-Series firewall to safeguard the servers in each group. If a group or virtual network does not share a virtual switch or port group with any other virtual network, it is completely isolated from all other virtual networks within or across the host(s). Because there is no other physical or virtual path to any other network, the servers on each virtual network must use the firewall to talk to any other network. The firewall therefore has visibility into and control over all traffic leaving the virtual (standard or distributed) switch attached to each virtual network.

Hybrid environment: Where both physical and virtual hosts are used, the VM-Series firewall can be deployed in a traditional aggregation location in place of a physical firewall appliance to achieve the benefits of a common server platform for all devices and to unlink hardware and software upgrade dependencies.

System Requirements

You can create and deploy multiple instances of the VM-Series firewall on an ESXi server. Because each instance of the firewall requires a minimum resource allocation (number of CPUs, memory, and disk space) on the ESXi server, make sure to conform to the specifications below to ensure optimal performance.

The VM-Series firewall has the following requirements:

The host CPU must be an x86-based Intel or AMD CPU with virtualization extensions.

VMware ESXi with vSphere 5.1, 5.5, 6.0, or 6.5 for VM-Series running PAN-OS 8.0. The VM-Series firewall on ESXi is deployed with VMware virtual machine hardware version 9 (vmx-09); no other VMware virtual machine hardware versions are supported.

See VM-Series System Requirements for the minimum hardware requirements for your VM-Series model.

Minimum of two network interfaces (vmNICs). One will be a dedicated vmNIC for the management interface and one for the data interface. You can then add up to eight more vmNICs for data traffic. For additional interfaces, use VLAN Guest Tagging (VGT) on the ESXi server or configure subinterfaces on the firewall.

The use of hypervisor assigned MAC addresses is enabled by default. vSphere assigns a unique vmNIC MAC address to each dataplane interface of the VM-Series firewall. If you disable the use of hypervisor assigned MAC addresses, the VM-Series firewall assigns each interface a MAC address from its own pool. Because this causes the MAC address on each interface to differ from the vmNIC MAC address, you must enable promiscuous mode on the port group of the virtual switch to which the dataplane interfaces of the firewall are attached, so that the firewall can receive frames. If neither promiscuous mode nor hypervisor assigned MAC address is enabled, the firewall will not receive any traffic, because vSphere will not forward frames to a virtual machine when the destination MAC address of the frame does not match the vmNIC MAC address.

Data Plane Development Kit (DPDK) is enabled by default on VM-Series firewalls on ESXi. For more information about DPDK, see Enable DPDK on ESXi.
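The interface-count and MAC-address requirements above can be sketched as a small pre-deployment check. This is only an illustrative model in Python: the `VmNicPlan` class and `check_plan` function are hypothetical names, not part of any VMware or Palo Alto Networks tooling, and the limits are the ones quoted in this post (one management vmNIC plus one to nine data vmNICs).

```python
from dataclasses import dataclass

MIN_VMNICS = 2   # one management + one data interface, per the requirements above
MAX_VMNICS = 10  # one management + up to nine data interfaces


@dataclass
class VmNicPlan:
    """Hypothetical description of a planned VM-Series deployment on ESXi."""
    data_interfaces: int
    hypervisor_assigned_mac: bool = True   # enabled by default, per the text
    promiscuous_mode: bool = False         # port-group setting on the vSwitch

    @property
    def total_vmnics(self) -> int:
        # The management interface always takes one dedicated vmNIC.
        return 1 + self.data_interfaces


def check_plan(plan: VmNicPlan) -> list[str]:
    """Return a list of problems; an empty list means the plan looks consistent."""
    problems = []
    if plan.total_vmnics < MIN_VMNICS:
        problems.append("need at least one data vmNIC besides management")
    if plan.total_vmnics > MAX_VMNICS:
        problems.append("more than 10 vmNICs requested; use VGT or subinterfaces")
    if not plan.hypervisor_assigned_mac and not plan.promiscuous_mode:
        problems.append("firewall will not receive traffic: enable promiscuous "
                        "mode or hypervisor assigned MAC addresses")
    return problems
```

For example, `check_plan(VmNicPlan(data_interfaces=2, hypervisor_assigned_mac=False))` reports the missing promiscuous mode, which mirrors the case where vSphere drops frames whose destination MAC does not match the vmNIC MAC.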

To achieve the best performance out of the VM-Series firewall, you can make the following adjustments to the host before deploying the VM-Series firewall. See Performance Tuning of the VM-Series for ESXi for more information.

Enable DPDK. DPDK allows the host to process packets faster by bypassing the Linux kernel. Instead, interactions with the NIC are performed using drivers and the DPDK libraries.

Enable SR-IOV. Single root I/O virtualization (SR-IOV) allows a single PCIe physical device under a single root port to appear to be multiple separate physical devices to the hypervisor or guest.

Do not configure a vSwitch on the physical port on which you enable SR-IOV. To communicate with the host or other virtual machines on the network, the VM-Series firewall must have exclusive access to the physical port and associated virtual functions (VFs) on that interface.

Enable multi-queue support for NICs. Multi-queue allows network performance to scale with the number of vCPUs and allows for parallel packet processing by creating multiple TX and RX queues.

Note:-

Do not use the VMware snapshots functionality on the VM-Series on ESXi. Snapshots can impact performance and result in intermittent and inconsistent packet loss. See VMware’s best practice recommendations for using snapshots.

If you need configuration backups, use Panorama or Export named configuration snapshot from the firewall (Device > Setup > Operations). Export named configuration snapshot exports the active configuration (running-config.xml) on the firewall and allows you to save it to any network location.

Limitations

The VM-Series firewall functionality is very similar to the Palo Alto Networks hardware firewalls, but with the following limitations:

Dedicated CPU cores are recommended.

High Availability (HA) Link Monitoring is not supported on VM-Series firewalls on ESXi. Use Path Monitoring to verify connectivity to a target IP address or to the next hop IP address.

Up to 10 total ports can be configured; this is a VMware limitation. One port will be used for management traffic and up to 9 can be used for data traffic.

Only the vmxnet3 driver is supported.

Virtual systems are not supported.

vMotion of the VM-Series firewall is not supported. However, the VM-Series firewall can secure guest virtual machines that have migrated to a new destination host, if the source and destination hosts are members of all vSphere Distributed Switches that the guest virtual machine used for networking.

VLAN trunking must be enabled on the ESXi vSwitch port-groups that are connected to the interfaces (if configured in vwire mode) on the VM-Series firewall.

To use PCI devices with the VM-Series firewall on ESXi, memory mapped I/O (MMIO) must be below 4GB. You can disable MMIO above 4GB in your server’s BIOS. This is an ESXi limitation.

Deploy Palo Alto VM-Series

Register your VM-Series firewall and obtain the OVA file from the Palo Alto Networks Customer Support web site.

Note:- The OVA is downloaded as a zip archive that is expanded into three files: the .ovf extension is for the OVF descriptor file that contains all metadata about the package and its contents; the .mf extension is for the OVF manifest file that contains the SHA-1 digests of individual files in the package; and the .vmdk extension is for the virtual disk image file that contains the virtualized version of the firewall.
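Since the .mf file carries SHA-1 digests of the other files, the extracted package can be checked before deployment. The following Python sketch assumes the manifest uses the common OVF line layout `SHA1(filename)= <hex digest>`; the function names are hypothetical and the file names in the usage note are placeholders, not the names of the actual VM-Series download.

```python
import hashlib
import re
from pathlib import Path

# Assumed manifest line layout: SHA1(file.vmdk)= <40 hex characters>
_MF_LINE = re.compile(
    r"^SHA1\s*\((?P<name>.+)\)\s*=\s*(?P<digest>[0-9a-fA-F]{40})\s*$"
)


def sha1_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so a large .vmdk image does not fill memory."""
    digest = hashlib.sha1()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest: Path) -> dict[str, bool]:
    """Map each file named in the manifest to True if its SHA-1 digest matches."""
    results = {}
    for line in manifest.read_text().splitlines():
        match = _MF_LINE.match(line.strip())
        if match is None:
            continue  # skip blank or unrecognised lines
        target = manifest.parent / match["name"]
        results[match["name"]] = (
            target.is_file() and sha1_of(target) == match["digest"].lower()
        )
    return results
```

Calling `verify_manifest(Path("extracted/firewall.mf"))` (a placeholder path) returns a dictionary such as `{"firewall.ovf": True, "firewall.vmdk": True}` when every file is present and unmodified; any `False` entry suggests the download should be repeated.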

Before deploying the OVA file, set up virtual standard switch(es) and virtual distributed switch(es) that you will need for the VM-Series firewall.

If you are deploying the VM-Series firewall with Layer 3 interfaces, your firewall will use Hypervisor Assigned MAC Addresses by default. If you choose to disable the use of hypervisor assigned MAC address, you must configure (set to Accept) any virtual switch attached to the VM-Series firewall to allow the following modes:

Promiscuous mode

MAC address changes

Forged transmits

Log in to vCenter from the vSphere Web Client. You can also go directly to the target ESXi host if needed.

From the vSphere client, select File > Deploy OVF Template.

Browse to the OVA file that you downloaded, select the file, and then click Next. Review the template details window and then click Next again.

Name the VM-Series firewall instance and, in the Inventory Location window, select a Data Center and Folder and click Next.

Select an ESXi host for the VM-Series firewall and click Next.

Select the datastore to use for the VM-Series firewall and click Next. (The default virtual disk format is thick; you may change it to thin.)

Select the networks to use

Review the details window, select the Power on after deployment check box and then click Next.

Initial Configuration

Access the console of the VM-Series firewall.

Enter the default username/password (admin/admin) to log in.

Enter configure to switch to configuration mode.

Configure the network access settings for the management interface. You should restrict access to the firewall and isolate the management network. Additionally, do not make the allowed network larger than necessary and never configure the allowed source as 0.0.0.0/0.

Now you have to exit from configuration mode by entering exit
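The advice on restricting management access can be checked mechanically before any addresses are entered on the firewall. The Python sketch below uses the standard `ipaddress` module; the `review_permitted_sources` name and the /16 threshold for "larger than necessary" are assumptions made for illustration, not Palo Alto Networks guidance.

```python
import ipaddress

# Hypothetical threshold: treat any IPv4 network broader than /16 as too large.
BROADEST_ALLOWED_PREFIX = 16


def review_permitted_sources(cidrs):
    """Return (cidr, reason) pairs for entries that should not be allowed."""
    findings = []
    for cidr in cidrs:
        network = ipaddress.ip_network(cidr, strict=False)
        if network.prefixlen == 0:
            # 0.0.0.0/0 (or ::/0) matches every source address.
            findings.append((cidr, "matches every address; never allow this"))
        elif network.version == 4 and network.prefixlen < BROADEST_ALLOWED_PREFIX:
            findings.append((cidr, "broader than the assumed /16 limit"))
    return findings
```

Running it over a planned allow list, for instance `review_permitted_sources(["10.10.20.0/24", "0.0.0.0/0"])`, flags only the second entry, so the list can be tightened before the management interface is configured.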

Access the Firewall from a web browser

Open a browser and enter the IP address you assigned to the VM to access the web interface.

Enter the credentials.

You are now logged in to the PA firewall and can start using it.

Conclusion

This post covered the deployment of the Palo Alto VM-Series virtual edition and the steps to follow for a successful deployment. It also listed the limitations and system requirements of the virtual edition, which you should review before starting the deployment.

Everything you should know about Anthos- Google’s multi-cloud platform

What is Anthos?

Google recently announced the general availability of Anthos. Anthos is an enterprise hybrid and multi-cloud platform. It is designed to let users run applications on-premises and in Google Cloud, as well as with other providers such as Amazon Web Services and Microsoft Azure. Anthos stands out as the tech behemoth’s official entry into the contest for the enterprise data center. Anthos is different from other public cloud services. It is not just a product but an umbrella brand for various services aligned with the themes of application modernization, cloud migration, hybrid cloud, and multi-cloud management.

Despite the extensive coverage at Google Cloud Next and, of course, the general availability, the Anthos announcement was confusing. The documentation is sparse, and the service is not fully integrated with the self-service console. Except for the hybrid connectivity and multi-cloud application deployment, not much is known about this new technology from Google.

Building Blocks of Anthos-

1. Google Kubernetes Engine –

Kubernetes Engine is the central command and control center of Anthos. Customers use the GKE control plane to manage the distributed infrastructure running in Google’s cloud, in on-premises data centers, and on other cloud platforms.

2. GKE On-prem–

Google is delivering a Kubernetes-based software platform that is consistent with GKE. Customers can deploy it on any compatible hardware, and Google will manage the platform. Google will treat it as a logical extension of GKE, from upgrading Kubernetes versions to applying the latest updates. Note that GKE On-prem runs as a virtual appliance on top of VMware vSphere 6.5. Support for other hypervisors, such as Hyper-V and KVM, is in progress.

3. Istio –

This technology enables federated network management across the platform. Istio acts as the service mesh that connects the various components of applications deployed across the data center, GCP, and other clouds. It integrates with software-defined networks such as VMware NSX, Cisco ACI, and of course Google’s own Andromeda. Customers with existing investments in network appliances can also integrate Istio with load balancers and firewalls.

4. Velostrata –

Google acquired this cloud migration technology in 2018 and extended it for Kubernetes. Velostrata delivers two significant capabilities: streaming on-prem physical/virtual machines to create replicas in GCE instances, and converting existing VMs into Kubernetes applications (Pods).

This is the industry’s first physical-to-Kubernetes (P2K) migration tool built by Google. This capability is available as Anthos Migrate, which is still in beta.

5. Anthos Config Management –

Kubernetes is an extensible and policy-driven platform. Anthos customers have to deal with multiple Kubernetes deployments running across a variety of environments, so Google attempts to simplify configuration management through Anthos. From deployment artifacts and configuration settings to network policies, secrets, and passwords, Anthos Config Management can maintain and apply the configuration to one or more clusters. It also serves as a version-controlled, secure, central repository of all things related to policy and configuration.

6. Stackdriver –

Stackdriver brings observability to Anthos infrastructure and applications. Customers can track the state of clusters running within Anthos along with the health of applications deployed in each managed cluster. It acts as the centralized monitoring, logging, tracing, and observability platform.

7. GCP Cloud Interconnect –

Any hybrid cloud platform is incomplete without high-speed connectivity between the enterprise data center and the cloud infrastructure. While connecting the data center with the cloud, cloud interconnect can deliver speeds up to 100Gbps. Customers can also use Telco networks offered by Equinix, NTT Communications, Softbank and others for extending their data center to GCP.

8. GCP Marketplace –

Google has created a list of ISV and open source applications that can run on Kubernetes. Customers can deploy applications such as the Cassandra database and GitLab in Anthos with a one-click installer. Eventually, Google may offer a private catalog of apps maintained by internal IT.

Greenfield vs. Brownfield Applications-

The central theme of Anthos is application modernization. Google conceives a future where all enterprise applications will run on Kubernetes.

To that end, it invested in technologies such as Velostrata that perform in-place upgrades of VMs to containers. Google built a plug-in for VMware vRealize to convert existing VMs into Kubernetes Pods. Even stateful workloads such as PostgreSQL and MySQL can be migrated and deployed as StatefulSets in Kubernetes. In typical Google style, the company is downplaying the migration of on-prem VMs to cloud VMs. But Velostrata’s original offering was all about VMs.

Customers using traditional business applications like SAP, Oracle Financials, and PeopleSoft can continue to run them in on-prem VMs or choose to migrate them to Compute Engine VMs. Anthos can provide interoperability between VMs and containerized apps running in Kubernetes. With Anthos, Google wants all your contemporary microservices-based applications (greenfield) in Kubernetes while migrating existing VMs (brownfield) to containers. Applications running on non-x86 architectures and legacy apps will continue to run in either physical or virtual machines.

Google’s Kubernetes Landgrab-

When Docker started to gain traction among developers, Google realized that it was the right time to release Kubernetes to the world. It also moved fast in offering the industry’s first managed Kubernetes in the public cloud. Although there are now various managed Kubernetes offerings, GKE is still the best platform to run microservices.

With a detailed understanding of Kubernetes and also the substantial investments it made, Google wants to assert its claim in the brave new world of containers and microservices. The company wants enterprises to leapfrog from VMs to Kubernetes to run their modern applications.

Read more at- https://solaceinfotech.com/blog/everything-you-should-know-about-anthos-googles-multi-cloud-platform/

L2 bridging with VMware NSX-T

by Michal Grzeszczak

My name is Michal Grzeszczak and I am a senior consultant at Xtravirt (www.xtravirt.com), an independent cloud consulting business and VMware Master Services Competent Partner. In this blog, I’d like to share with you my experience of L2 bridging with VMware® NSX-T Data Center and help you to understand some of the differences between NSX-T and NSX-V, as well as cover some NSX use cases.

Customer background:

The customer is one of the leading organisations in the betting and gambling industry and has been using NSX-V for some time now. When it came to their new greenfield environment, they decided to deploy NSX-T 2.4 Data Center. This decision was largely based on the fact that NSX-T Data Center provides bridging firewalls natively for L2 software bridging, which was their main requirement. Also, given that NSX-T will be the de facto network and security virtualisation platform offered by VMware going forward, by specifying it for this environment the customer is able to future proof the deployment.

Future upgrades are also accounted for as upgrading from NSX-T 2.4 to future NSX-T releases is simpler than performing upgrades from a previous version of V or T.

NSX-T 2.4 was a major update and brought many changes to the architecture of the product, the main ones being:

The NSX Manager and NSX Controllers were merged into one appliance deployed in a Cluster of 3 Nodes therefore minimising the footprint of the solution and operational complexity.

The other major change was the introduction of the new Simplified UI which “requires just the bare minimum of user input, offering strong default values with prescriptive guidance for ease of use. This means fewer clicks and page hops are required to complete configuration tasks.”

NOTE: In NSX 2.4 two UIs are presented - one is the new Simplified UI, the other one is Advanced. The latter is taken from NSX-T 2.3. The future plan is to remove the Advanced UI and provide all of the functionality via the Simplified UI.

What are the key benefits of choosing NSX-T over NSX-V?

There are many benefits of NSX-T over NSX-V, to name a few:

NSX-T doesn’t require vCenter; however, vCenter can be added to NSX-T as a Compute Manager, and up to 16 Compute Managers are allowed per NSX 2.4 solution. There is no longer a 1:1 requirement like there was with NSX-V.

KVM Hypervisor support

Bare Metal Edges provide sub-second failover

Data Plane Development Kit (DPDK) Optimising Forwarding - DPDK is a set of data plane libraries and network interface controller drivers for fast packet processing

New, more flexible Overlay technology - Geneve replaces VXLAN https://docs.vmware.com/en/VMware-Validated-Design/4.3/com.vmware.vvd.sddc-nsxt-design.doc/GUID-CF3C47CA-9BEB-4213-8F08-1494261BF3EC.html

Bi-directional Forwarding Detection support

BGP (Border Gateway Protocol) expanded functionality with features like Route Maps

Much clearer User Interface (UI)

What were the specific use cases for this case customer and the design considerations?

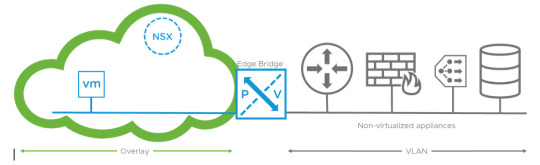

USE CASE 1 - NSX-T Edge L2 Bridging - Integration of physical, non-virtualised database servers that require L2 connectivity to the virtualised environment.

Design considerations:

In NSX-T 2.4, two options are available to bridge L2 workloads: ESXi Bridge Clusters or Edge Bridge Profiles. The latter is recommended, as the former will be deprecated in future.

Ideally, use dedicated Bare Metal Edges; however, this is not a requirement.

Edge Bridges can be deployed in collapsed vSphere Clusters (Management + Edge or Compute + Edge).

Consider having active and standby Edge Nodes on different hosts to avoid throughput drop, because VLAN traffic needs to be forwarded to both Edge Nodes in promiscuous mode.

Overlay traffic can be tagged on a Distributed Port Group or Uplink Profile, but please avoid double tagging.

VLAN traffic should not be tagged on the Uplink Profile.

The Distributed Port Group for VLAN traffic connecting to the Edge Node should be in Trunk mode, because the Edge doing the bridging adds an 802.1Q tag when transposing Overlay traffic to VLAN.

Consider having the same MTU value across your environment - if you can, choose MTU of 9000 for better performance.

Several Bridge Profiles can be configured, and a given Edge can belong to several Bridge Profiles. By creating two separate Bridge Profiles, alternating active and backup Edge in the configuration, the user can easily make sure that two Edge nodes simultaneously bridge traffic between Overlay and VLAN
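The alternating active/backup arrangement described in the last consideration can be sketched as plain data. The Python below is only an illustrative model: `BridgeProfile`, `alternating_profiles`, and `assign_segments` are hypothetical names and do not correspond to NSX-T API objects, and the profile, Edge, and segment names are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BridgeProfile:
    """Illustrative model only; not the NSX-T API object."""
    name: str
    primary_edge: str
    backup_edge: str


def alternating_profiles(edge_a: str, edge_b: str) -> tuple[BridgeProfile, BridgeProfile]:
    """Build two profiles whose primary and backup Edge nodes are swapped."""
    if edge_a == edge_b:
        raise ValueError("active and backup must be different Edge nodes")
    return (
        BridgeProfile("bridge-profile-1", primary_edge=edge_a, backup_edge=edge_b),
        BridgeProfile("bridge-profile-2", primary_edge=edge_b, backup_edge=edge_a),
    )


def assign_segments(segments, profiles):
    """Spread bridged segments round-robin across the given profiles."""
    return {seg: profiles[i % len(profiles)] for i, seg in enumerate(segments)}
```

With `profiles = alternating_profiles("edge-01", "edge-02")`, assigning three segments through `assign_segments(["seg-db", "seg-app", "seg-web"], profiles)` leaves both Edge nodes actively bridging at the same time, each acting as backup for the other.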

Constraints:

Edge Cluster with minimum 2 Edge Nodes in Active/Standby mode is needed.

Standby uplinks are not supported on the Edge Node.

Source Based Load Based Teaming is not supported on the Edge Node.

The port group on the VSS/VDS sending and receiving traffic on the VLAN side should be in promiscuous mode and should allow MAC Address Changes and Forged Transmits.

Deployment:

Follow the deployment guide here - https://youtu.be/IwpujflzJhY

Or here https://docs.vmware.com/en/VMware-NSX-T-Data-Center/2.3/com.vmware.nsxt.admin.doc/GUID-7B21DF3D-C9DB-4C10-A32F-B16642266538.html

Validation:

Log in to the Edge Node with your credentials.

Type “nsxcli”.

The command “get l2bridge-ports-config” shows the Bridge Port State (the active Edge shows Forwarding, the standby shows Stopped), the configured VLAN ID, and the Bridge UUID, which can be copied and used for a packet capture on the Edge Node.

To run the packet capture, type “start capture interface” followed by the copied Bridge UUID and press Enter, then ping from the VM to the physical server to see the flows.

USE CASE 2 - NSX bridging firewall - Secure communication between physical database servers and Overlay workloads.

The bridging firewall can currently be configured only in the Advanced UI.

Security rules are configured per Logical Switch (called a Segment in the Simplified UI).

NSX-T grouping objects like NSGroups can be used to provide abstraction from the IP based approach.

USE CASE 3 - vRealize Network Insight - Monitoring tool that can provide visibility into both Physical and Virtual environments.

NSX-T 2.4 is fully supported with the latest vRealize Network Insight version 4.1.

Make sure you are allowing ports for communication between vRNI and other devices after the deployment. For example, ESXi Hosts to vRNI Collector on UDP 2055. The full list of ports needed can be found here - https://docs.vmware.com/en/VMware-vRealize-Network-Insight/3.9/com.vmware.vrni.install.doc/GUID-FDDA5F2F-7C3B-472A-A17D-39582FBD5996.html

There is a new website that provides in-depth information about new features in vRNI, definitely worth visiting - https://vrealize.vmware.com/t/vmware-network-management/

What were the outcomes?

Overall, this deployment was a complete success as we were able to satisfy all of the requirements that the customer had. All the production physical databases were able to communicate on the same L2 segments as the virtualised workloads connected to NSX-T segments. On top of that, communication between those workloads was secured by the NSX-T Bridging Firewall. vRealize Network Insight was used to provide visibility into the environment. Its ability to quickly troubleshoot network and firewall related issues in both virtual and physical realms allows admins to take a breath in the never-ending battle of making the networks stable.

I hope you enjoyed my blog, if you’d like to talk to Xtravirt about your business’s networking and security requirements, then please send an email to [email protected]

Some Useful links:

You can read more about the NSX-T 2.4 release here: https://blogs.vmware.com/networkvirtualization/2019/02/introducing-nsx-t-2-4-a-landmark-release-in-the-history-of-nsx.html/

Configure NSX Distributed Firewall

Once you complete the full Configure NSX Distributed Firewall course with us, you will receive an NSX Distributed Firewall certification. https://www.dclessons.com/distributed-logical-firewall/

Future Proof Your Career with Advanced CCIE Data Center Labs Course

The pandemic has posed a threat to everybody’s career, as it has abruptly widened the gap between demand and supply. https://www.reddit.com/user/dclessons12/comments/kke47t/future_proof_your_career_with_advanced_ccie_data/

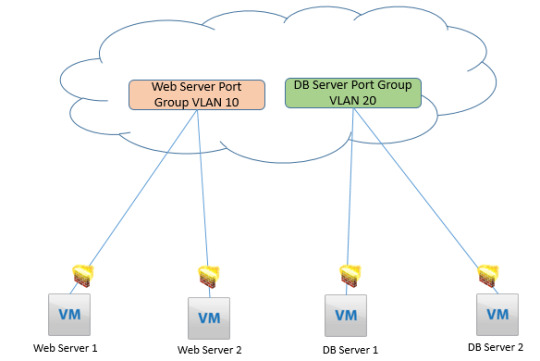

Once you complete the full course with us, you will know how to configure the NSX distributed firewall and keep your clients’ data safe. https://www.dclessons.com/distributed-logical-firewall/

Endless options with VMware Horizon and VMware Workspace ONE

By Curtis Brown

There are a considerable number of blog posts and articles that have looked at the individual components of the VMware® End User Compute stacks, so I thought I’d take a high-level look at some of what’s possible when we take the whole solution in its entirety.

The Moving Parts

In the early days of VMware’s End User Compute efforts, we were looking at a relatively simple stack of VMware vCenter, ESX and Virtual Desktop Manager (or, to you young folks, Horizon View 1.0 or 2.0 back in 2007/8). These days, things are somewhat more powerful, offering a wider array of capabilities, but these capabilities also require more moving parts.

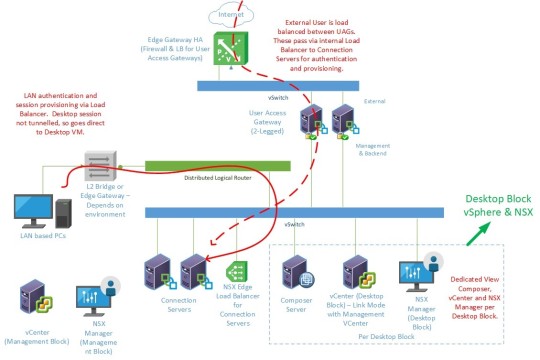

The diagram below shows the VMware components that could be deployed in a solution.

Now, let’s look a little deeper…

For Virtual Desktop delivery, we have the underlying infrastructure of VMware vSphere with vCenter and ESXi. However, we can enhance this further in two ways. Firstly, we can leverage local storage by implementing VMware vSAN rather than rely on a SAN/NAS based solution. Secondly, we could deploy VMware NSX to provide enhanced security in the form of micro-segmentation of the network as well as anti-malware protection using Guest Introspection.

For publishing desktops, we have VMware Horizon View. The connection servers provide the brains, managing entitlements and provisioning of desktop and application pools. For secure access from untrusted or external networks, we deploy either Security Servers or, more recently, Unified Access Gateway appliances to serve as a proxy into the solution. VMware Horizon View Composer is used to manage and deploy non-persistent Linked Clone desktops, although this approach is in decline as Instant Clone technology replaces it.

We then need to consider the management and delivery of applications and user settings. For the latter, we can integrate VMware User Environment Manager. This can manage environmental settings (including delivery of application shortcuts, drive mappings etc.) and eliminate the issues related to Windows Roaming Profiles. For application delivery, we can use App Volumes within the virtual estate or leverage the estate itself to publish Remote Desktop Session Host based remote applications. ThinApp, although somewhat out of favour these days, remains an option for direct delivery to Windows Endpoints (via VMware Workspace ONE Identity Manager) or within Horizon View desktops.

When it comes to monitoring the estate, we can use VMware vRealize Operations Manager with the VMware Horizon Management Pack. It’s possible to expand further still by leveraging more of the vRealize suite, notably VMware vRealize Log Insight for capturing logs from both the solution as well as the environment.

We then move out into two topics – The Endpoint and the User. These are somewhat integrated topics these days as they do overlap.

VMware Workspace ONE comprises two key elements:

Unified Endpoint Management can provide control, configuration and administration to endpoints, be they mobile devices or traditional desktops.

Identity Manager provides the user authentication layer into the solution as a whole, while also providing a unified catalogue of applications and services, whether published via VMware Horizon or accessed through single sign-on to cloud services.

Another offering that is often overlooked, but still a part of the VMware Horizon licensing (at the Advanced and Enterprise level) is VMware Mirage. This can provide image level management of Windows based client desktop/laptops. FLEX leverages the Mirage infrastructure in conjunction with VMware Workstation and VMware Fusion to provide an offline VDI capability.

What parts are available is largely defined by what is purchased. Some parts are included in the various VMware Horizon editions, while some, notably VMware vSAN, VMware NSX and VMware Horizon FLEX are separate products. In the case of Workspace ONE, VMware Horizon Advanced and above includes just Workspace ONE Identity Manager Standard. To get the full Workspace ONE suite requires purchase of Workspace ONE as a specific product.

VMware Horizon editions can be compared at:

https://www.vmware.com/content/dam/digitalmarketing/vmware/en/pdf/products/horizon/vmware-horizon-editions-compare-chart.pdf.

The Art of the Possible

For the purposes of looking at what is possible, let us assume that an Alien Space Bat has deemed it fit to leave an unlimited budget for us to acquire all these tools. Here are a few ideas of what we could achieve:

By integrating the full VMware Workspace ONE with Horizon, we can fully manage security between a user, a managed endpoint and access to Virtual Desktops. By managing the device using VMware Workspace ONE Unified Endpoint Management and establishing Compliance checking, we can define an Identity Manager policy that grants access to the Workspace ONE catalogue only to users who have valid credentials and are using compliant devices. In turn, users can then access a VDI desktop from the relevant icon in Workspace ONE.

It is possible to provide a single portal to a geographically spread VMware Horizon VDI offering that will connect users seamlessly to the nearest desktop instance. Workspace ONE Identity Manager can provide location awareness based on client IP address. By defining IP ranges, and relating these to the public DNS name for the local Horizon site, Workspace ONE will direct users to the nearest VMware Horizon site for optimum performance. This leverages VMware Horizon Cloud Pod Architecture to present a common entitlement across all instances.
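The range-to-site lookup described above can be pictured with a small Python sketch. This is only an illustration of the idea, not how Identity Manager is implemented or configured; the IP ranges, the DNS names and the `nearest_site` helper are all made up for the example.

```python
import ipaddress

# Hypothetical IP ranges and Horizon site DNS names, for illustration only.
SITE_RANGES = {
    "10.10.0.0/16": "horizon-london.example.com",
    "10.20.0.0/16": "horizon-newyork.example.com",
}
# Hypothetical fallback entry point for clients outside every defined range.
DEFAULT_SITE = "horizon-global.example.com"


def nearest_site(client_ip: str) -> str:
    """Return the Horizon site DNS name whose IP range contains client_ip."""
    address = ipaddress.ip_address(client_ip)
    for cidr, site in SITE_RANGES.items():
        if address in ipaddress.ip_network(cidr):
            return site
    # No range matched, so fall back to the default entry point.
    return DEFAULT_SITE
```

A client at 10.10.5.4 would be sent to the first site, while an address outside both ranges falls through to the default.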

App Volumes, User Environment Manager and NSX Distributed Firewall Rules can be tied to Active Directory groups. We can therefore deploy an application in an App Volumes App Stack, with a standard configuration provided by UEM and permit traffic from the application to a specific server all tied to a single Active Directory Group.

And these are but a few options. When you consider that a number of these offerings are now available in a cloud-based form, the options broaden further still. Both Workspace ONE components offer cloud and on-premises variants, while VMware Horizon now includes not only the on-premises offering, but also the ability to deploy on top of VMware Cloud on AWS or the full Desktop-as-a-Service offering of Horizon Cloud.

Closing Thoughts…

Because these products can be combined in an array of different configurations, it is possible to design and deploy solutions that fit a broad variety of use cases, from simple to very specific.

If you are looking to deploy a new Digital Workspace solution or wish to enhance or upgrade what you currently have, then Xtravirt can help. We have a long track record of successful digital workspace projects and can provide advisory, design and implementation services to create the right solution for your organisation. Contact us and we’d be happy to use our wealth of knowledge and experience to assist you.

About the author

Curtis Brown is a Lead Consultant at Xtravirt. His specialist areas include End User Compute solutions and Virtual Infrastructure design and implementation with particular strength in VDI, storage integration, backup and Disaster Recovery design/implementation. He was awarded VMware vExpert 2018 and is a graduate of the VMware Advanced Architecture Course 2018.

About Xtravirt

Xtravirt is an independent cloud consulting business. We believe in empowering enterprises to innovate and thrive in an ever-changing digital world. We are experts in digital transformation and our portfolio of services cover digital infrastructure, digital workspace, automation, networking and security.

#xtracbrown#vmware#digitalworkspace#workspaceONE#horizon#EUC#horizoncloud#vcenter#horizonview#UEM#VDI


Encryption: Data Protection in VMware Hyper-Converged Infrastructure

By Curtis Brown

Introduction

In the wonderful world of VMware based virtualisation, there has been a concerted effort in recent years to not just virtualise the compute side of matters but expand out into networking (with VMware NSX) and the provisioning of storage (VMware vSAN). This all results in a single VMware Hyper-converged infrastructure offering.

An important factor within this is security. VMware vSphere itself has considerable security with its small footprint, hardened hypervisor and the move towards a hardened Linux based appliance as the preferred option for the VMware vCenter management server. VMware NSX can be argued to be a security product in and of itself – providing not only edge firewalling capabilities but per-VM based firewalling with the Distributed Firewall and support for agentless malware protection solutions with the Guest Introspection framework.

But what of VM storage? SAN solutions can offer security measures, subject to the SAN vendor, but how does this apply in a VMware vSAN environment?

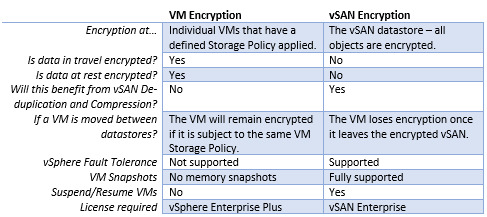

There are two options available:

VM Encryption

VMware vSAN Encryption

Each has some pros and cons – so let’s take a look.

VM Encryption

Fundamentally, this is the encryption of virtual machines themselves. There are third party (and, for that matter, in-guest) encryption offerings, but we’ll focus on the built-in VM encryption capability that was released in VMware vSphere 6.5.

It’s pretty straightforward to set up, requiring only two things – the deployment of an encryption Key Management Server (compatible ones are listed here and in the Interoperability guide) and a VMware vSphere Storage Policy. After that, apply the policy to a given VM and – hey Presto!

So, what’s the catch? The encryption works on all IO operations for the VM itself at the top level – as it’s written to the Virtual Disk. This means that all IO operations in transit are encrypted as well as at rest on the physical disk.

From a security standpoint, this is very secure, and as it’s on a per-VM basis, it’s granular – you don’t need to encrypt everything (though you could argue that this is a management overhead). However, the downside in a vSAN environment is that the encryption occurs before de-duplication, so no space saving is possible.
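To see why encrypting before de-duplication removes the space saving, here is a toy Python model. It is a sketch under loud assumptions: `toy_encrypt` is a made-up stand-in and is not the cipher vSphere uses, and the keys and block sizes are invented. It only mimics one property, namely that identical data encrypted under different keys or at different positions no longer looks identical.

```python
import hashlib


def toy_encrypt(block: bytes, key: bytes, position: int) -> bytes:
    """Toy stand-in for a real cipher (NOT secure, illustration only).

    The keystream depends on the key and the block position, so identical
    plaintext blocks produce different ciphertext.
    """
    seed = hashlib.sha256(key + position.to_bytes(8, "big")).digest()
    # Repeat the seed to cover the block, then XOR byte by byte.
    stream = (seed * (len(block) // len(seed) + 1))[: len(block)]
    return bytes(a ^ b for a, b in zip(block, stream))


def unique_blocks(blocks: list[bytes]) -> int:
    """Count distinct blocks by content hash, as a de-duplication engine would."""
    return len({hashlib.sha256(block).digest() for block in blocks})


# Two hypothetical VMs holding the same four 4 KB blocks (e.g. the same OS image).
vm_keys = [b"vm1-key", b"vm2-key"]
plain_blocks = [b"A" * 4096 for _ in vm_keys for _ in range(4)]
encrypted_blocks = [
    toy_encrypt(b"A" * 4096, key, position)
    for key in vm_keys
    for position in range(4)
]

plain_unique = unique_blocks(plain_blocks)          # 1 distinct block to store
encrypted_unique = unique_blocks(encrypted_blocks)  # 8 distinct blocks to store
```

Before encryption the eight blocks collapse to one; after it, the de-duplication engine sees eight unrelated blocks and has nothing to save.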

There are further caveats with VM encryption to be aware of:

The VM Snapshot ‘Capture the virtual machine memory’ function is not compatible.

vSphere Fault Tolerance does not work with VM encryption.

Suspending (and resuming) encrypted VMs is not supported.

In addition, not all vSphere integrated backup solutions can support VM level encryption.

vSAN Encryption

VMware vSAN Encryption uses the same Key Management Server, but this time the VMware vSAN storage is itself encrypted. It’s applied at the cluster level, so anything stored in the vSAN cluster is encrypted – no management overhead as such, but no granularity.

Data travels in an unencrypted state. It is encrypted when it hits the caching tier, decrypted when it is de-staged from the cache so that de-duplication can be applied, and then encrypted again as it is written to the data tier. This means that de-duplication can be applied in combination with encryption; the downside is that encryption is therefore limited to data at rest (i.e. written to disk), as opposed to end-to-end as would be the case for VM encryption.

As encryption is at the storage tier, it is essentially invisible to VM operations – so vSphere operations such as Fault Tolerance and snapshots are fully supported and most vSAN aware backup solutions aren’t impacted.

Comparing the options

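To compare these two options:

VM Encryption – applied per VM through a storage policy; data is encrypted in transit and at rest; works with traditional SAN datastores as well as vSAN; no de-duplication savings; snapshots with memory capture, Fault Tolerance and suspend/resume are not supported.

vSAN Encryption – applied to the whole vSAN cluster; data is encrypted at rest only; compatible with de-duplication; Fault Tolerance and snapshots are fully supported.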

Closing Thoughts

Both mechanisms have their use cases. VM encryption is the more secure when you consider that it is capable of full end-to-end encryption. It also benefits from being able to support traditional SAN datastores as well as vSAN. However, some VMware vSphere functionality is impaired when compared to vSAN Encryption, and there is more of a management overhead. In a modern all-flash estate, the drop in IO performance caused by encryption is negligible in both cases, so performance should not be a concern.

VM Encryption is worth considering, though it does add a layer of complexity to an estate: the deployment of a robust KMS (Key Management Server) solution is a must, and then there is the configuration of the solution itself.

If you’re considering implementing or upgrading a VMware vSphere environment and data security is a concern, please contact Xtravirt, and we’d be happy to use our wealth of knowledge and experience to assist you.

About the Author

Curtis Brown joined the Xtravirt consulting team in October 2012. His specialist areas include End User Compute solutions and Virtual Infrastructure design and implementation with strengths in VDI, storage integration, backup and Disaster Recovery design/implementation. He is a VMware vExpert 2018.

#xtraCBrown#vSAN#vsphere#virtualisation#virtualization#cloud#xtravirt#encryption#Hyper-converged#data#protection


VMware NSX and vRNI – a customer solution

By Michal Grzeszczak

OLD WORLD

The landscape of modern IT is constantly evolving and an aggressive shift towards the software defined data centre is under way. Nowadays, virtualisation of storage and networking is becoming as important as, or even more important than, the virtualisation of compute. In this rapidly transforming realm, traditional networking and security can’t keep up with location-agnostic and application-centric workloads and even if they could, there are now simply better ways to solve the problems occurring in traditionally managed data centres. Security, agility, automation and visibility are the key areas where there is a need for new tooling. During a recent customer engagement, we faced all of these issues and to mitigate them, VMware NSX® and VMware vRealize® Network Insight™ (vRNI) were deployed in the environment.

BACKGROUND

The customer's main business objective is to provide IT services to a number of hospitals. Security regulations in the health care world (the likes of HIPAA) can be difficult to comply with, especially with traditional security methods such as using perimeter firewalls for east-west traffic control. The customer had an old data centre that contained both virtual and physical workloads, 200+ undocumented applications, Cisco 6500 core switches and Cisco ASA firewalls. As they were facing multiple challenges with this setup, the decision was made to engage Xtravirt to migrate to a new NSX based data centre.

CHALLENGES

The main challenge the customer had in their previous environment was application traffic visibility, namely the 200+ applications without proper documentation outlining the ports that needed to be open in the firewall. The communication between the networking team and application team wasn't as smooth as one could hope for either, which created unnecessary holes in the overall security posture of the company’s IT department. Multiple DMZs from the Cisco ASA firewall did not scale well and the rule sprawl was becoming a problem. The security team was working reactively instead of pro-actively - a known issue with the traditional approach. All of these issues led to the environment becoming unmanageable.

To sum up the challenges:

Lack of visibility of the East-West traffic - resulting in poor understanding of the application flows and complicating the security policy, therefore becoming difficult to manage.

Communication breakdown between IT teams - back-and-forth email exchanges prove time consuming and slow down deployments and changes, identifying a need for a tool to provide a “source of truth” for what is actually happening in the environment.

Hair-Pinning to the perimeter firewalls and routers - sending traffic for inspection to the physical firewalls and routing to the core switches, even if the workloads reside on the same host. That is a waste of bandwidth. Also, latency sensitive applications might be affected.

GOALS

The customer decided to build a new data centre with NSX for micro-segmentation and vRNI for visibility and security planning. The next step was to migrate the applications from the old environment to a new one, then monitor traffic flows of the applications to create an adequate security policy.

Goals communicated before the engagement:

Use vRNI to monitor applications and services that are running in the current data centre to get a clear picture of the network flows before the migration of the applications to the new data centre.

Use vRNI to plan security policies for the environment based on the observed flows as opposed to the (lacking) documentation from the application team.

Design a security policy using NSX, based on the vRNI outcome.

Monitor the entire network, both virtual and physical workloads to get the full picture of what is happening in the environment. Then design an alert system using vRNI that will help with monitoring and troubleshooting.

D-DAY

After adding Virtual Distributed Switches as Data Sources in vRNI, we watched the traffic flows of the applications. With a few clicks, we were able to see all the traffic (ports, protocols, IP addresses) to and from any selected application. To define an application in vRNI, we simply specify the workloads that are relevant. The visual graph then shows all the necessary information as well as recommended firewall rules. We could export the data of interest in CSV format, readable in Excel, and use that as a base for Security Rules creation. It is recommended to collect the flows of the application for some time, such as a day or two, as not all of the application’s ports are in use at any given moment. It could be a good idea to ask the DevOps team to sweep through all the functionality of the application, just to make sure all the traffic that needs to be allowed in the firewall actually appears in the vRNI output graph. By default, vRNI collects data every 5 minutes.
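As a rough illustration of using an exported flow list as the base for rule creation, the Python sketch below collapses repeated flow records into unique candidate allow rules. The column names and sample rows are assumptions made up for this example; the real vRNI export layout may well differ, and `candidate_rules` is a hypothetical helper.

```python
import csv
import io

# Hypothetical export layout; the real vRNI CSV columns may differ.
SAMPLE_EXPORT = """source_ip,destination_ip,port,protocol
10.0.1.10,10.0.2.20,443,TCP
10.0.1.11,10.0.2.20,443,TCP
10.0.1.10,10.0.2.20,443,TCP
10.0.2.20,10.0.3.30,1433,TCP
"""


def candidate_rules(csv_text: str) -> list[tuple[str, str, int, str]]:
    """Collapse repeated flow records into a sorted list of unique allow rules."""
    reader = csv.DictReader(io.StringIO(csv_text))
    rules = {
        (row["source_ip"], row["destination_ip"], int(row["port"]), row["protocol"])
        for row in reader
    }
    return sorted(rules)


for source, destination, port, protocol in candidate_rules(SAMPLE_EXPORT):
    print(f"allow {protocol}/{port} from {source} to {destination}")
```

The four sample records reduce to three candidate rules, which still need a human review before they go anywhere near a firewall.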

The next steps involved creating a Security Policy using the Distributed Firewall, a kernel-based East-West firewall used for micro-segmentation. The decision was made to use Security Tags for all of the virtual workloads and IPsets for external ones.

Security Tags allow for dynamic assignment of VMs to Security Groups. For example, you could create a Security Tag called “Web” and configure a Security Group with dynamic membership, so that VMs carrying the tag, or VMs whose name contains “Web”, are added to the group automatically. This is a useful feature as workloads quite often change IP addresses and location. We are simply shifting from network-based security to object-based security, which allows for agile and location-agnostic applications.

IPsets are sets of IP addresses, subnets or groups of subnets that specify the scope of affected workloads for a given Security Group. In the customer’s environment, the zero-trust model was implemented, which means that only necessary traffic is allowed on the Distributed Firewall and everything else is dropped. This type of design keeps the attack surface down to the traffic the applications actually need.
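The combination of tag-based groups, IPsets and a default drop can be modelled in a few lines of Python. This is a conceptual sketch only and not the NSX API: the `Workload`, `Group` and `Rule` classes, the addresses and the port are all hypothetical.

```python
import ipaddress
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Workload:
    name: str
    ip: str
    tags: set[str] = field(default_factory=set)


@dataclass
class Group:
    """A security group: membership by tag (virtual) or by IP set (external)."""

    tag: Optional[str] = None
    ip_set: tuple[str, ...] = ()

    def contains(self, workload: Workload) -> bool:
        if self.tag is not None and self.tag in workload.tags:
            return True
        address = ipaddress.ip_address(workload.ip)
        return any(address in ipaddress.ip_network(cidr) for cidr in self.ip_set)


@dataclass
class Rule:
    source: Group
    destination: Group
    port: int


def is_allowed(rules: list[Rule], src: Workload, dst: Workload, port: int) -> bool:
    """Zero-trust evaluation: allow only on an explicit match, otherwise drop."""
    for rule in rules:
        if (
            rule.source.contains(src)
            and rule.destination.contains(dst)
            and rule.port == port
        ):
            return True
    return False  # default deny


# Hypothetical groups: tagged web VMs and a physical database subnet.
web = Group(tag="Web")
physical_db = Group(ip_set=("192.168.50.0/24",))
rules = [Rule(source=web, destination=physical_db, port=1433)]

web_vm = Workload("web-01", "10.0.1.10", {"Web"})
db_host = Workload("db-phys-01", "192.168.50.12")
other_vm = Workload("test-01", "10.0.9.9")
```

Because the web group is matched on the tag rather than the address, `web_vm` keeps its access if its IP changes, while the untagged `other_vm` is dropped by default.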

Next, the vRNI notification system was used for monitoring the new Data Centre. There are two types of notifications:

System notifications, which are pre-defined. The list of notifications contains 100 of the most commonly appearing issues customers face during a deployment. For example, this could be a loss of network connection of the NSX Edge routers.

User notifications are defined by the user and are very simple to set up. One can simply use the search bar to run a specific query, for example, Change in the Application “App1”, and create a notification alert with the click of a mouse. Both System and User notifications are customisable. You can specify the severity of the alerts and tags to make searching for the problem easier and quicker, and contact recipients by email to notify a specific person or a team about an issue.

RESULTS

Quite simply, they are spectacular. In my opinion, none of the solutions available on the market could solve the customer challenges like NSX and vRNI. All the goals were met and challenges mitigated. The customer now has a new health care compliant and scalable data centre with full visibility of both physical and virtual traffic. The customer also has a solution that is centrally managed and application-centric with an agile security policy, plus a customised notification system for monitoring and troubleshooting.

ONE STEP AHEAD

VMware appears to be reading the future pretty well; by being flexible and adjusting to the market needs, this virtualisation pioneer managed to create a one stop shop for every IT requirement. With the acquisitions of Nicira and Arkin respectively, VMware expanded their catalogue with two extremely powerful and market changing solutions - NSX and vRNI. The first provides micro-segmentation, logical switching/routing and load balancing, to name a few, and the second allows for full visibility of physical/virtual networks and assists in security planning. The fact that these two work together and give so many unique features is a game changer in the industry. Adding products like vSAN for storage virtualisation and vRA for automation to the mix makes it even more interesting - all in software, all managed from your browser, location-agnostic and application-centric. This is a software defined revolution and it is happening right now. Join it and stay ahead of the game.

Xtravirt is a leading VMware NSX specialist and has the ultimate combination of deep experience and agility to design and deliver your IT transformation. If software-defined networking is on your roadmap then contact us today.

To find out more visit: https://xtravirt.com/nsx/

About the author

Michal Grzeszczak joined the Xtravirt consulting team in September 2017. He has an in-depth understanding of NSX along with experience in software-defined networking, virtualization and data centre support.


NSX in an End User Compute Environment

by Curtis Brown

VMware NSX® network virtualisation platform has been around for a little while now delivering an operational model for networking that forms the foundation of the Software-Defined-Data-Centre. It’s often associated more with server virtualisation or as a component within a Cloud Automation solution and with good reason; it’s readily able to deliver a scaled, secure environment, while providing several mechanisms to deliver advanced network functionality such as firewalling, load balancing and anti-malware protection. However, one area where its application is frequently overlooked is in the world of End User Compute – more specifically virtual desktop infrastructure.

Wouldn’t it be nice if…

Consider a full-on Horizon deployment (though, to be fair, these components could be replaced in the most part for an element of your choice) –

Horizon View – This will publish Virtual Desktops in Pools, with these pools assigned to Active Directory based Groups/Users.

App Volumes – This gives you a mechanism for delivering defined stacks of applications to the Virtual Desktops. Again, you can assign using Groups or users.

User Environment Manager – You use this to manage environmental and application settings.

So, you have the mechanisms in place to deliver Virtual Desktops, complete with some granular application and settings delivery. This is all pretty good, but wouldn’t it be nice if you could provide some intelligent network capabilities too?

Finetuned Firewalling.

Now take VMware NSX - this has some nice features that you can leverage in a VDI estate.

One of the key areas of concern is hardening the estate. There’s been a pretty big play in the NSX world around using NSX to provide segmentation, both at a macro level, separating workloads into different VXLANs on virtual switches, and at the micro level, using the Distributed Firewall to provide firewall rules at the vNIC. Both strategies can be used here.

You can also separate Pools at a macro level or a micro level if you wish to, with the latter being particularly interesting.

The Distributed Firewall can provide a great deal of flexibility when defining firewall rules and can leverage several different identifiers, including vSphere entities and Active Directory objects. Using these, you can generate a rule base to lock down traffic quite tightly, in conjunction with the VDI solution and application delivery mechanism. Take this example:

You can set up a standard DFW ruleset that applies to your desktops that will prevent traffic between the virtual desktops, while still providing the core services and application support for anything required for the desktop. This means you can control LDAP traffic between the desktops and Domain Controllers, or PCoIP between the desktops and the Connection Servers or direct to physical endpoints if you wish.

With App Volumes, you can define an AppStack with several applications which may well have discrete client/server network traffic requirements. The DFW can apply rules based upon the user logged into the desktop, so you can create and apply rules specific for these client server applications and tie them to the same Active Directory object that applies the AppStack.

The net result is that you end up with a combined ruleset applied to a desktop that is not just tied to a broad pool, but also to discrete needs for users within the pool. This provides strict granular access based upon the needs of the desktop and the application set for the user. And let’s remember that this is applied at the desktop’s connection to the network – not at any perimeter level.
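The combined ruleset described above can be sketched in Python as a pool baseline merged with per-group rules. This is an illustration of the idea rather than how the DFW stores or evaluates rules; the pool name, Active Directory group names, destinations and the `effective_rules` helper are all hypothetical.

```python
# Hypothetical rule sets, keyed by desktop pool and by Active Directory group.
# Each rule is a (destination, port) pair.
POOL_RULES = {
    "finance-pool": [("domain-controllers", 389), ("connection-servers", 4172)],
}
GROUP_RULES = {
    "AD-Finance-App": [("finance-app-server", 8443)],
    "AD-HR-App": [("hr-app-server", 443)],
}


def effective_rules(pool: str, user_groups: list[str]) -> list[tuple[str, int]]:
    """Combine the pool baseline with rules for each AD group the user is in."""
    rules = list(POOL_RULES.get(pool, []))
    for group in user_groups:
        for rule in GROUP_RULES.get(group, []):
            if rule not in rules:
                rules.append(rule)
    return rules
```

A user in the finance group who logs into a finance-pool desktop gets the baseline rules plus the finance application rule, and nothing for the HR application.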

Malware Protection

NSX also provides the ability to integrate a compatible antivirus/antimalware solution into the mix. This is not, however, limited to the more publicised Guest Introspection where virus scanning is offloaded at the hypervisor level. NSX can also integrate with both virtual and physical appliances such as FortiGate for intrusion detection, perimeter firewalling and more.

At the Infrastructure Level

As with any VDI estate, you have the upstream infrastructure to consider, and several services can be provided here too.

Using VMware NSX Edge Gateway appliances, you can provide perimeter firewalling between networks.

For Horizon View, both Connection Servers as well as Access Gateways/Security Servers require Load Balancers, as do App Volumes Managers. The NSX Edge appliances are capable of being configured to provide load balancing services.

Perimeter firewalling using NSX Edge can also be provided, so you can have a DMZ layer for the Security Servers/Access Gateways as well.

Generally speaking, you could deploy something like this for the Horizon View management environment:

Our external traffic accesses the estate via a pair of NSX Edge Gateways, serving as firewall and load balancer for a pair of VMware Unified Access Gateways. These are configured as two-legged, tunnelling into the vSwitch beneath, where you have a dedicated NSX Edge appliance load balancing the Connection servers. This internal load balancer is also used by internal users who connect in via an L2 bridge or NSX Edge as appropriate.

You then deploy NSX for the Desktop blocks as well, in order to provide the previously mentioned firewalling and malware protection for a secure end-to-end solution.

Closing Thoughts…

With NSX, it’s possible to turbo-charge the security for a VDI desktop environment in a manner that can be applied regardless of the choice of broker solution – the above would work just as well in a third party VDI solution such as Citrix XenApp or, perhaps more importantly, XenDesktop, not just VMware Horizon. The ability to dynamically set and operate firewalling on egress outside of the guest Operating System means that such firewalling cannot be tampered with from within the VDI desktop, which might be useful in environments where desktops are used with untrusted users (public kiosks for example).

If you’re interested in exploring the combination of VMware NSX network virtualisation in a VDI estate, please contact Xtravirt, and we’d be happy to use our wealth of knowledge and experience to assist you.

About the Author

Curtis Brown joined the Xtravirt consulting team in October 2012. His specialist areas include End User Compute solutions and Virtual Infrastructure design and implementation with particular strength in VDI, storage integration, backup and Disaster Recovery design/implementation. He is a VMware vExpert 2017.

#xtraCBrown#xtravirt#virtualisation#virtualization#NSX#vmware#EUC#VDI#DaaS#Desktop#Horizon#View#AppVolumes#Automation#Curtis#Brown#VMnic


vRealize Automation 7.3 – a huge release for VMware’s Cloud Management Platform

by Sam McGeown

VMware have put a lot of development into their latest release of vRealize Automation 7.3 and it certainly feels like a bigger release than a ‘.1’ version – as is evident in the “What’s New” section of the release notes.

As part of a push to make it easier to manage and operate a vRA-based cloud platform, VMware have made some great improvements – the main features that stand out for me are:

Automated failover for the PostgreSQL database and the IaaS Manager Service – something that many customers have been asking for. The result is, finally, a fully “HA” distributed platform.

A new API for installation, upgrade and migration of the platform itself. This means scripted installs, upgrades and migrations are now simple and can be orchestrated.

Parameterised blueprints – this allows out-of-the-box support for one of the most frequently asked for blueprint options – t-shirt sizes. In addition, the new Component Profiles will simplify the number of blueprints required and reduce catalogue management.

Integration with vRealize Operations 6.6 (vROps); allowing intelligent placement of workloads based on analytics data and a Workload Placement Policy.

Enhanced integration with IPAM – an extended IPAM framework allowing deeper integration with Infoblox initially, but expect other IPAM providers to start providing plugins.

Enhanced integration with ServiceNow – a new plugin version will deepen the integration with ServiceNow as a CMDB and governance engine, with support for things like day 2 operations.
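To picture the t-shirt sizing idea behind parameterised blueprints from the list above, here is a minimal Python sketch. It is not vRA syntax or its API; the size names, CPU and memory values and the `render_request` helper are hypothetical, and only show how one blueprint plus a size parameter can replace several near-identical blueprints.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Size:
    cpu: int
    memory_gb: int


# Hypothetical size profiles, for illustration only.
SIZES = {
    "small": Size(cpu=1, memory_gb=2),
    "medium": Size(cpu=2, memory_gb=4),
    "large": Size(cpu=4, memory_gb=8),
}


def render_request(blueprint: str, size: str) -> dict:
    """Expand a single blueprint plus a size name into concrete resource values."""
    if size not in SIZES:
        raise ValueError(f"unknown size: {size}")
    profile = SIZES[size]
    return {"blueprint": blueprint, "cpu": profile.cpu, "memory_gb": profile.memory_gb}
```

One blueprint definition then serves every size, and adding a new size means adding one profile rather than another blueprint.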

There have been huge improvements and developments with the NSX integration, with all the native functionality now using the REST API rather than the vRealize Orchestrator (vRO) Plugin – this is great news and will simplify operations and troubleshooting. The plugin is still available for custom integrations if required.

The main highlights for me are:

Deeper integration with NSX Load Balancers – which allows customised load balancer configuration per-blueprint (algorithms, persistence, health monitors, port, etc.) as well as day 2 reconfiguration of the load balancer. This is a fantastic improvement!

NSX Security Group and Tag integration – much better integration with the UI and the ability to add and remove Security Groups and Tags as a day 2 operation

NSX Edge Sizing and HA – Edge Gateways can be customised on a per blueprint basis to size correctly or in HA mode for resilient services like load balancing, NAT and firewall.

vRA 7.3 should also have a greater appeal to those who have developer environments, with its deeper integration with Puppet, Docker, vSphere Integrated Containers and Docker volumes.

Puppet as a “1st class citizen” – this is part of a wider move to get configuration management tools integrated with the platform, but it’s starting with Puppet. This means drag and drop configuration management in the blueprint designer, and day 2 configuration management for deployed applications.

Container management has been improved with support for vSphere Integrated Containers, alongside the traditional Docker hosts, and support for Docker volumes.

And for those who are already running vRA, there are a couple of bug-bears that have been relieved, such as:

A force destroy option to get rid of those “stuck” deployments. These would previously require a CloudClient command line clean up, or a database edit under the watchful eye of GSS.

Improved integration with Storage Policies – anyone who has used the SPBM plugin will know that it’s been a little … clunky. A new plugin, shipped with the latest version of vRO, will make it easier to leverage vSAN and VVOL based storage.

Role based access for vRO – given the huge power within the SDDC that vRO has, it’s great to see this finally being implemented.

That’s a vast number of really significant improvements for a single point release, and in this blog I’ve only drawn out the ones that I know customers will love. This release is, without a doubt, a massive step forward in the maturity of VMware’s Cloud Management Platform.

If you are considering a move to a Cloud Management platform and need assistance in deciding on the right solution for you and your organisation, please contact us and we’d be happy to use our wealth of knowledge and experience to assist you.


The rise of SDN: A practitioner’s deep dive into VMware NSX

by Andy Hine

Introduction

The hype about Software Defined Networking (SDN) has been around for years, during which time the technology has rapidly matured, leading to fast-growing adoption. So what is it all about? In this article I’ll share a bird’s-eye view of software-based networking, and in particular VMware® NSX.

The areas covered are:

What is SDN?

Origins of NSX

Why virtualise the network?

Traditional network challenges

How does NSX achieve network virtualisation?

Features and architecture

What is SDN?

For those who have managed to avoid the marketing, SDN enables networking and security functionality traditionally handled by hardware-based equipment (e.g. network switches, firewalls, load balancers) to be performed in software. This process is often called ‘Network Virtualisation’ and involves the abstraction of networking from its physical hardware.

VMware NSX is a network virtualisation platform that VMware hope will fundamentally transform the data centre’s network operational model, just as the server virtualisation ‘bonanza’ did over 10 years ago. This, in the eyes of VMware, will continue the organisational march towards realising the full potential of the Software Defined Data Centre (SDDC, a term coined by VMware a number of years ago). As part of this drive, NSX is now integrated into the later versions of VMware’s vSphere hypervisor.

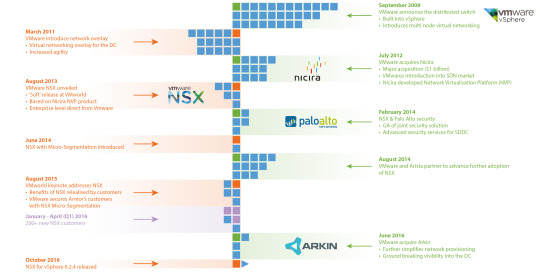

Origins of NSX

So where did NSX come from? Back in July 2012 VMware purchased Palo Alto based company Nicira for $1.2 billion. This represented the most expensive acquisition made by VMware (and most likely will be for a long time yet), showing how serious they were and are about their SDDC vision.

Nicira were founded in 2007 and had a modest (ish) customer base of mainly enterprise clients. Whilst their focus was on network virtualisation and developing SDN products, most were for non-VMware and open source platforms. NSX was developed from Nicira’s existing NVP software.

Why virtualise the network?

By automating and simplifying many of the processes that go into running a data centre, network virtualisation helps organisations achieve major advances in simplicity, speed, agility and security. With this approach to the network, organisations can:

Achieve greater operational efficiency by automating processes

Improve network security within the data centre

Place and move workloads independently of physical network topology

Traditional network challenges

Networking teams respond to the request for change from the business with manual and often complex provisioning of hardware devices, software and configuration. And this is usually performed by an engineer with specific knowledge of the particular technology. This can create bottlenecks in provisioning new networks and applying network related changes, along with introducing the possibility of configuration errors and even outages due to human factors.

To explain by way of example: imagine a new application is to be developed. It requires isolated test, development and production environments to support it, each environment has its own web tier in a DMZ, and all need access to a central application library and code repository… and the deadline is yesterday. The virtualisation team have provisioned the virtual server resource and passed it over to the network team. Now what? Does the team manually provision new physical networks? Access ports? Trunk ports? VLANs? Firewall rules? Is there capacity on existing switches? Where is routing going to occur? Where will the DMZ be placed? And who’s got the skills and time to do it? … “This is complex… let us get back to you.”

You can see how this type of commonly repeated scenario can lead to a number of other challenges. What happens if a workload needs to move from one host or resource pool to another, for example due to maintenance? Can the new destination support the networks that the VM is currently part of? Will this require a change of IP address? Will the VM still be covered by the right rule base? And please don’t tell us you need to move a VM from test to production. This traditional networking approach is static, inflexible and produces silos. The management overhead is increased further by the sprawl of VLANs and firewall rule sets.

Years of server virtualisation have meant that IT infrastructure teams can now respond more quickly to these types of business challenges. In fact, they may well have many of their processes automated, perhaps in provisioning new workloads or remediating issues, with integration into service desk and change management systems. So is there any chance that networking can follow suit? With the traditional approach, it is certainly slower and more complex.

The ability to rapidly provision, update and decommission networks (including DMZs) in an agile, lower-risk and highly available way can be achieved through network virtualisation, and is a major use case for VMware NSX. Add to that a massively reduced management overhead, and the ability to automate these processes and even integrate them with existing systems, and it becomes a very compelling conversation.

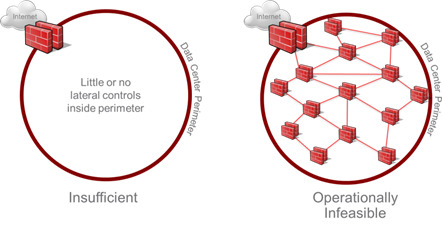

Security and routing are other networking challenges. Traditionally IT has taken the ‘Castle’ approach of securing the data centre perimeter, often with a powerful hardware device at the edge (or several if a DMZ is required) sporting large throughput capacity, firewalling and L3 routing capability, and perhaps some anti-virus or intrusion detection.

I see a couple of issues with that approach. Firstly, performance is a concern: the edge can become a choke point, and traffic is handled inefficiently because each workload’s networking may be subjected to ‘hair-pinning’. This is the process whereby a network packet travels from the source machine all the way up to the edge device, is processed, and is then sent back down again to the destination (even if the destination is located on the same physical host).

As more and more of the data centre is defined in software, the shift to east-west traffic is huge. A simple example: virtual machine A, which is part of internal network 10.1.1.x and runs on DC host 1, wants to communicate over TCP port 80 with virtual machine B, which is part of internal network 10.1.2.x and runs on DC host 2. Unlike north-south traffic, this traffic never needs to leave the data centre, so it should not incur extra processing at the edge device or the longer round trip time that comes with it.
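To make the distinction concrete, here is a minimal Python sketch that labels a flow as east-west or north-south. It assumes the two internal networks from the example are /24 ranges and that anything outside them is external; the function names and the external address are purely illustrative.

```python
import ipaddress

# Assumption: the internal networks from the example (10.1.1.x and 10.1.2.x)
# are /24 ranges. A real data centre would list its own prefixes here.
DATA_CENTRE_NETWORKS = [
    ipaddress.ip_network("10.1.1.0/24"),
    ipaddress.ip_network("10.1.2.0/24"),
]


def is_internal(address: str) -> bool:
    """Return True if the address belongs to one of the data centre networks."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in DATA_CENTRE_NETWORKS)


def classify_flow(src: str, dst: str) -> str:
    """Label a flow by whether both endpoints sit inside the data centre."""
    if is_internal(src) and is_internal(dst):
        return "east-west"
    return "north-south"


# VM A talking to VM B stays inside the data centre.
print(classify_flow("10.1.1.10", "10.1.2.20"))
# A VM talking to an outside address has to cross the edge.
print(classify_flow("10.1.1.10", "203.0.113.5"))
```

Only flows of the second kind have any reason to visit the edge device.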

And what happens if a threat slips through the cracks, such as malware on a VDI machine? How is that contained? With the Castle model, once a threat is inside it can roam around freely, connecting to as much as it can. Attempts to counter this might include software (“personal”) firewalls on each VM, individual rules for every single machine on the perimeter device, or even physical firewalls between each machine. None of those options is scalable or manageable, and the last is nonsensical for the average organisation.

Creating granular security for network segments at layer 2 instead of layer 3 not only allows IT to increase network efficiency and reduce chatter, but also introduces firewalling to east-west traffic, establishing a much more secure zero-trust operating model for networking (even within the same VLAN).

Security can be deployed at the VM, at vNIC level. To stick with the earlier analogy, we have moved from the ‘Castle’ to the ‘hotel’ model, where every room has its own lock. This process is called micro-segmentation, and is another major use case driving NSX adoption.
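The zero-trust idea behind micro-segmentation can be sketched in a few lines of Python. This is not how the NSX distributed firewall is implemented; it is a simplified model in which every VM belongs to a security group, traffic is denied by default, and only explicitly listed group-to-group flows are allowed. The VM names, group names and port are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """An allow rule between two security groups on a single TCP port."""
    src_group: str
    dst_group: str
    port: int


# Hypothetical VM-to-group membership and rule set, for illustration only.
GROUPS = {"web-01": "web", "app-01": "app", "vdi-17": "vdi"}
ALLOW_RULES = [Rule("web", "app", 8443)]


def is_allowed(src_vm: str, dst_vm: str, port: int) -> bool:
    """Default deny: a flow passes only if an explicit rule matches it."""
    src_group = GROUPS.get(src_vm)
    dst_group = GROUPS.get(dst_vm)
    return any(
        rule.src_group == src_group
        and rule.dst_group == dst_group
        and rule.port == port
        for rule in ALLOW_RULES
    )


print(is_allowed("web-01", "app-01", 8443))  # matches the single allow rule
print(is_allowed("vdi-17", "app-01", 8443))  # no rule for the vdi group
```

Because the check is made per VM rather than at the perimeter, a compromised VDI machine in this model cannot reach the application tier even though both sit inside the data centre.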

How does NSX achieve network virtualisation?

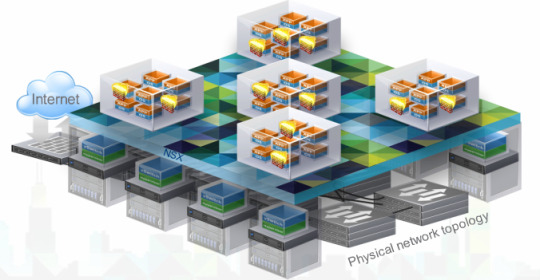

VMware NSX applies network virtualisation to the physical network, much like a hypervisor does for compute. This allows software-based networks to be created, managed and deleted.

When defining networks in software (or logically) we are providing an overlay that decouples the virtual plane from the underlying hardware. The physical network becomes a backplane: merely a vehicle for carrying traffic. Because the intelligence moves into software, this has the potential to extend the life of existing hardware or reduce the cost of replacing it.

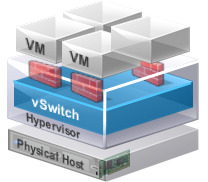

VMware NSX builds upon vSphere vSwitch technology as well as adding:

Encapsulation techniques at the hypervisor level

New vSphere kernel modules for VXLAN

Distributed logical routing and distributed firewall services together with edge gateway appliances to deal with north-south traffic routing

Advanced services such as load balancing
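As a rough illustration of the encapsulation item above, the following Python sketch builds the 8-byte VXLAN header defined in RFC 7348 and prepends it to an inner Ethernet frame. It shows only the VXLAN header; the outer UDP, IP and Ethernet headers that the hypervisor would also add are left out, and the VNI value and dummy frame are made up for the example.

```python
import struct

# The "I" flag from RFC 7348: set when the header carries a valid VNI.
VXLAN_FLAG_VNI_VALID = 0x08


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte VXLAN header for a 24-bit VXLAN Network Identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: 8 flag bits followed by 24 reserved bits.
    # Word 2: 24-bit VNI followed by 8 reserved bits.
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prepend the VXLAN header to an inner layer 2 frame."""
    return vxlan_header(vni) + inner_frame


# Hypothetical VNI and a 60-byte dummy frame standing in for VM traffic.
packet = encapsulate(5001, b"\x00" * 60)
print(vxlan_header(5001).hex(), len(packet))
```

The physical network only ever sees the outer headers, which is why the logical segment (identified by the VNI) can be created or removed without touching switch configuration.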

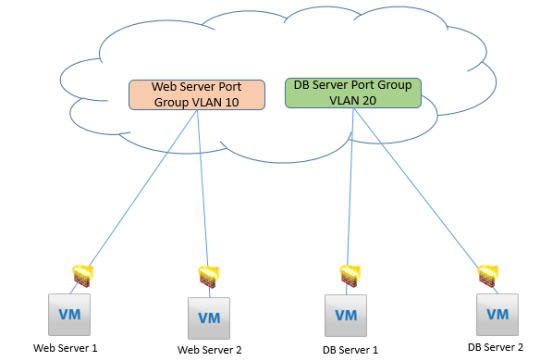

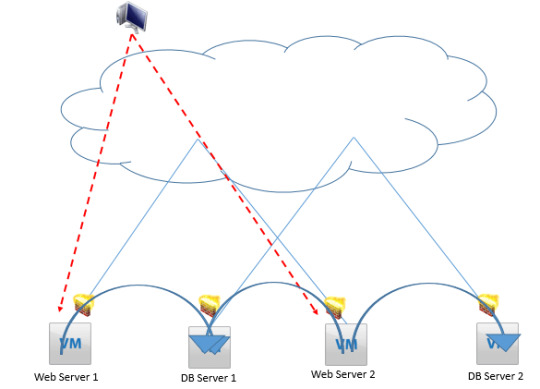

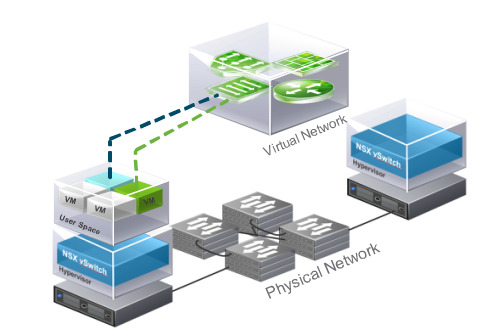

The example in the diagram above shows VMs (green and blue) on the same host but on different networks. Using NSX virtual networking services (2 x NSX vSwitches and 1 x NSX distributed logical router to be precise) the VMs can communicate with one another without a single frame leaving the host.

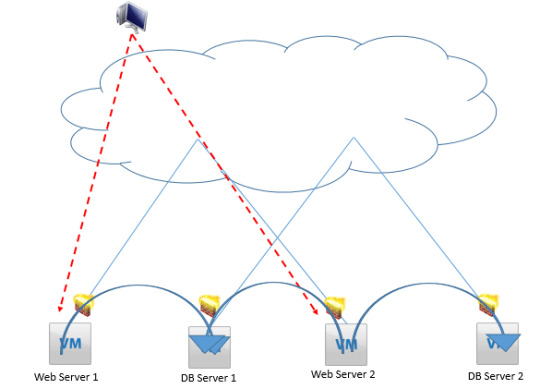

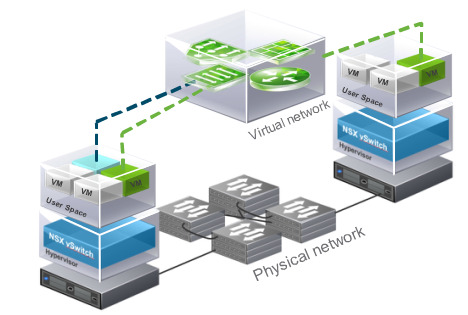

The diagram above shows that virtual machines on different hosts can communicate through NSX distributed logical switches without any segment-specific configuration of the underlying physical network. In this example that would mean the network team would not have to do anything.
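The two cases in the diagrams (same host, different hosts) can be modelled with a small Python sketch. It is a simplification rather than a description of NSX internals: it assumes a lookup table that records which host each VM runs on and that host's tunnel endpoint (VTEP) address. All VM names, host names and addresses are hypothetical.

```python
from typing import NamedTuple


class Location(NamedTuple):
    host: str     # hypervisor the VM is running on
    vtep_ip: str  # tunnel endpoint address of that hypervisor


# Hypothetical placement table, for illustration only.
VM_LOCATIONS = {
    "vm-green": Location("host-1", "192.168.50.11"),
    "vm-blue": Location("host-1", "192.168.50.11"),
    "vm-red": Location("host-2", "192.168.50.12"),
}


def forwarding_decision(src_vm: str, dst_vm: str) -> str:
    """Decide whether traffic stays on the host or is tunnelled to another."""
    src = VM_LOCATIONS[src_vm]
    dst = VM_LOCATIONS[dst_vm]
    if src.host == dst.host:
        # Same hypervisor: nothing needs to touch the physical network.
        return f"deliver locally inside {src.host}"
    # Different hypervisors: wrap the frame and send it VTEP to VTEP.
    return f"encapsulate and tunnel from {src.vtep_ip} to {dst.vtep_ip}"


print(forwarding_decision("vm-green", "vm-blue"))
print(forwarding_decision("vm-green", "vm-red"))
```

In the second case the physical network only has to route between the two VTEP addresses, which is why no per-network changes are needed from the network team.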

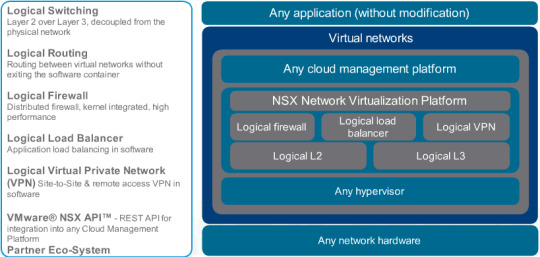

Features

NSX allows for networking functions previously defined in hardware to be realised virtually, including logical switching and routing, firewalling, load balancing and VPN services.

NSX also provides a REST API for integration with additional network/security products and cloud platforms.
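As a hedged sketch of what talking to that API might look like, the Python below prepares (but does not send) an authenticated GET request using only the standard library. The manager hostname and credentials are placeholders, and the path `/api/2.0/vdn/scopes` is an assumption recalled from the NSX for vSphere API for listing transport zones; check the API guide for your NSX version before relying on it.

```python
import base64
import urllib.request


def build_nsx_request(manager_host: str, path: str,
                      username: str, password: str) -> urllib.request.Request:
    """Prepare a GET request with HTTP basic authentication (not sent here)."""
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    request = urllib.request.Request(f"https://{manager_host}{path}", method="GET")
    request.add_header("Authorization", f"Basic {token}")
    request.add_header("Accept", "application/xml")
    return request


# Placeholder host and credentials; the path is an assumption (see above).
req = build_nsx_request("nsxmgr.example.local", "/api/2.0/vdn/scopes",
                        "admin", "changeme")
print(req.get_method(), req.full_url)
```

Passing the prepared request to `urllib.request.urlopen` would send it. Building it separately keeps the example runnable without a live NSX Manager.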

Architecture

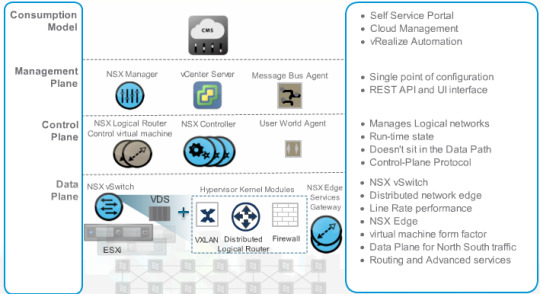

For those looking to realise network virtualisation it is important to understand that in the case of NSX there is no single component that will ‘switch on’ network virtualisation. As shown in the overview of the architecture below, there are a number of integrated technologies working together to enable its introduction.

Organisations who have already invested in VMware infrastructure are at an obvious advantage. NSX data plane components are already embedded into the latest versions of vSphere at the hypervisor level, providing the base for distributed virtual switching and edge services along with L2 bridging to physical networks.

Control plane components are introduced on top, facilitating the use of logical networks, and VMware vCenter is a prerequisite for the NSX management plane. At the top of the stack NSX integrates with the vRealize suite for cloud operations, automation and self-service.

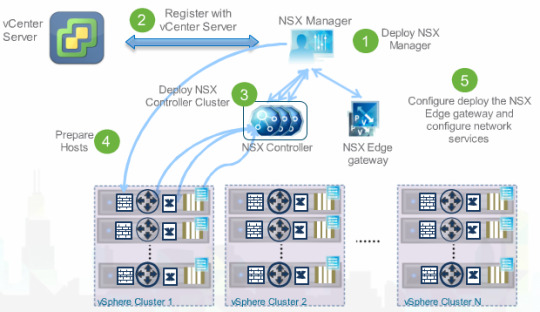

Deployment

Deploying NSX can be straightforward with the right planning and design, and NSX can be installed on top of any network hardware. Note the direct relationship between vCenter and NSX Manager.

To get the most from the technology, the real focus and effort go into integrating it tightly with the environment, whether upgrading an existing one or planning a greenfield site. NSX is a powerful platform, and that power can bring complexity in configuration and customisation, and in the associated elements and policies.

Summary

NSX is changing the face of network virtualisation and with benefits already being realised at the enterprise customer level, indications are that its adoption will continue to grow as organisations understand the benefits of network virtualisation.

A well-engineered physical network will always be an important part of the infrastructure, but virtualisation makes it even better by simplifying the configuration, making it more scalable and enabling rapid deployment of network services.

Businesses are exploring NSX and network virtualisation because they are able to achieve:

Significant reduction in network provisioning time

Greater operational efficiency through automation

Improved network security within the data centre

Increased flexibility and agility

The use cases for NSX are moving from ‘presentation world’ to the real world, and most major innovations VMware are working on rely on NSX for the virtualisation of the network. With the rise of containers and isolated application environments, the micro-segmentation use case will become even more prevalent as it delivers a fundamentally more secure data centre.

SDN has also created an interesting dynamic for the SysAdmin. Does the virtualisation expert become a networking expert as well? Or does it fall into the network engineer’s domain… we’re already seeing a transition to the former, and a further evolution of IT support roles in general so it will be interesting to see how this develops.

Xtravirt are the experts in NSX solution design and integration and can help deliver an accelerated and non-disruptive SDN transformation for your organisation. To find out more about how we can work with you, contact us today.

About the author