#AWQ

Explore tagged Tumblr posts

Text

Greetings darlings, I'm Lefty.

And I'm Rightyyyyyy!!! Hiiiiiii!!!

And we're hosting a show.

I promise you guys will love it!!

We'll have 30 Contestants battle for...

A Habitable planet thats all their own!! YIPEEEEE!!!

Thank you Righty, Dearest.

No prob, hon!

So

ENJOY!

#and we quote#and we quote osc#and we quote object show#AWQ#AWQ osc#AWQ object show#objectshow#osc community#osc#osc art#object shows#object oc#object show community#object show character#algebralien#algebralian oc#lefty#righty#lefty AWQ#righty AWQ#lefty osc#righty osc#new object show

33 notes

·

View notes

Text

girlypop your going to fall

#they are the friends ever <3#art#digital art#my art#artwork#artists on tumblr#osc#digital drawing#ibispaintx#object show art#object show community#awq#awq object show#awq osc#and we quote#and we quote osc#and we quote object show#egg roll awq#berry soda awq

13 notes

·

View notes

Note

(If your requests are open) Can you please draw Buldak 2x and/or Hyper Sprinkles in their canonical human versions?

Buldak2x Has octopus tentacles for hair and an octopus form

here are the hyper spinklez >.<!!

10 notes

·

View notes

Text

I felt like drawing this... just because... uhhhhh she reminds me of Pearl from Steven Universe!!!

@and-we-quote-osc

Inspo:

#object show community#object shows#objectshow#osc community#oc art#osc#lefty#lefty awq#lefty and we quote#awq object show community#awq#awq osc#and we quote object show community#and we quote object show#and we quote osc#and we quote#steven universe#pearl#pearl steven universe

13 notes

·

View notes

Text

@nogudfreak/eleven here again Was only able to do one of them at the moment, this was fun! :) @royalstarstorm

#and we quote osc#and we quote#and we quote object show#awq#awq object show#awq osc#object shows#object oc#object show character#object show community

12 notes

·

View notes

Text

Latest DirectML boosts AMD GPU AWQ-based LM acceleration

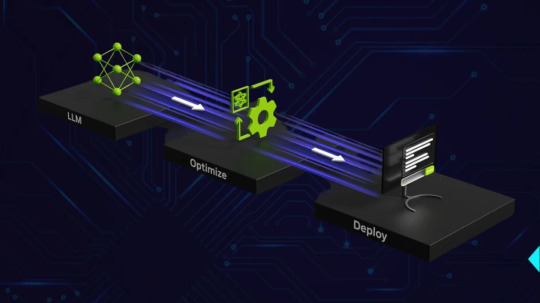

Minimize Memory Usage and Enhance Performance while Running LLMs on AMD Ryzen AI and Radeon Platforms Overview of 4-bit quantization.

AMD and Microsoft have worked closely together to accelerate generative AI workloads on AMD systems over the past year with ONNXRuntime with DirectML. As a follow-up to AMD’s earlier releases, AMD is pleased to announce that they are enabling 4-bit quantization support and acceleration for Large Language Models (LLMs) on discrete and integrated AMD Radeon GPU platforms that are using ONNXRuntime->DirectML in close cooperation with Microsoft.

NEW! Awareness-Based Quantization(AWQ)

Microsoft and AMD are pleased to present Activation-Aware Quantization (AWQ) based LM acceleration enhanced on AMD GPU architectures with the most recent DirectML and AMD driver preview release. When feasible, the AWQ approach reduces weights to 4-bit with little impact on accuracy. This results in a large decrease in the amount of memory required to run these LLM models while also improving performance.

By determining the top 1% of salient weights required to preserve model correctness and quantizing the remaining 99% of weight parameters, the AWQ approach can accomplish this compression while retaining accuracy. Up to three times the memory reduction for the quantized weights/LLM parameters is achieved by using this technique, which determines which weights to quantize from 16-bit to 4-bit based on the actual data distribution in the activations. Compared to conventional weight quantization methods that ignore activation data distributions, it is also possible to preserve model fidelity by accounting for the data distribution in activations.

To obtain a performance boost on AMD Radeon GPUs, AMD driver resident ML layers dequantize the parameters and accelerate on the ML hardware during runtime. This 4-bit AWQ quantization is carried out utilizing Microsoft Olive toolchains for DirectML. Before the model is used for inference, the post-training quantization procedure described below is carried out offline. It was previously impossible to execute these language models (LM) on a device on a system with limited memory, but our technique makes it viable now.

Making Use of Hardware Capabilities

Ryzen AI NPU: Make use of the Neural Processing Unit (NPU) if your Ryzen CPU has one integrated! Specifically engineered to handle AI workloads efficiently, the NPU frees up CPU processing time while utilizing less memory overall.

Radeon GPU: To conduct LLM inference on your Radeon graphics card (GPU), think about utilizing AMD’s ROCm software stack. For the parallel processing workloads typical of LLMs, GPUs are frequently more appropriate, perhaps relieving the CPU of memory pressure.

Software Enhancements:

Quantization: Quantization drastically lowers the memory footprint of the LLM by reducing the amount of bits required to represent weights and activations. AMD [AMD Ryzen AI LLM Performance] suggests 4-bit KM quantization for Ryzen AI systems.

Model Pruning: To minimise the size and memory needs of the LLM, remove unnecessary connections from it PyTorch and TensorFlow offer pruning.

Knowledge distillation teaches a smaller student model to act like a larger teacher model. This may result in an LLM that is smaller and has similar functionality.

Making Use of Frameworks and Tools:

LM Studio: This intuitive software facilitates the deployment of LLMs on Ryzen AI PCs without the need for coding. It probably optimizes AMD hardware’s use of resources.

Generally Suggested Practices:

Select the appropriate LLM size: Choose an LLM that has the skills you require, but nothing more. Bigger models have more memory required.

Aim for optimal batch sizes: Try out various batch sizes to determine the ideal ratio between processing performance and memory utilization.

Track memory consumption: Applications such as AMD Radeon Software and Nvidia System Management Interface (nvidia-smi) can assist in tracking memory usage and pinpointing bottlenecks.

AWQ quantization

4-bit AWQ quantization using Microsoft Olive toolchains for DirectML

4-bit AWQ Quantization: This method lowers the amount of bits in a neural network model that are used to represent activations and weights. It can dramatically reduce the model’s memory footprint.

Microsoft Olive: Olive is a neural network quantization framework that is independent of AMD or DirectML hardware. It is compatible with a number of hardware systems.

DirectML is a Microsoft API designed to run machine learning models on Windows-based devices, with a focus on hardware acceleration for devices that meet the requirements.

4-bit KM Quantization

AMD advises against utilizing AWQ quantization for Ryzen AI systems and instead suggests 4-bit KM quantization. Within the larger field of quantization approaches, KM is a particular quantization scheme.

Olive is not directly related to AMD or DirectML, even if it can be used for quantization. It is an independent tool.

The quantized model for inference might be deployed via DirectML on an AMD-compatible Windows device, but DirectML wouldn’t be used for the quantization process itself.

In conclusion, AMD Ryzen AI uses a memory reduction technique called 4-bit KM quantization. While Olive is a tool that may be used for quantization, it is not directly related to DirectML.

Achievement

Memory footprint reduction on AMD Radeon 7900 XTX systems when compared to executing the 16-bit version of the weight00000s; comparable reduction on AMD Ryzen AI platforms with AMD Radeon 780m.

Read more on Govindhtech.com

0 notes

Text

LEFY AMD RITY

Weird..I swore they were numbers a second ago..?

Also drew some matuals Ocs...part 2

@cyancoolthings @royalstarstorm

30 notes

·

View notes

Note

question, where will this object show be uploaded? (like is it on youtube or something else?)

It will be uploaded to youtube, juuussst as soon as we find volunteers to help us out with the show!

The show, like I said wont have a budget, and the show doesn't have to be perfect!

#and we quote#and we quote object show#and we quote osc#awq#awq object show#awq osc#object show community#object shows

13 notes

·

View notes

Text

NO BECAUSE IF I WERE IN THE EPISODE COBS WOULD HAVE ACCEPTED HIS FATE HAHAHHAHAHAHHAHAHAHHA

FUCK YOU COBS YO ASS IS MINEEEEEEE

#ii spoilers#inanimate insanity spoilers#inanimate insanity#inanimate insanity episode 16 spoilers#episode 16#ii steve cobs#ii suitcase#ii knife#awq object show community#awq#awq osc#awq object show#and we quote osc#and we quote object show community#and we quote object show#and we quote#hyper sprinkles#hyper sprinkles awq#hyper sprinkles and we quote

16 notes

·

View notes

Text

Hi y'all, Vanessa here! I recently just finished the story board for how I want the intro to look like (But still in the show's art style)

What I want in the intro:

1.) Contestant Name tags designed to the character's appearance and personality. @kr0tt3n is in charge of this

2.) For the part where it says: "Have the contestants do whatever." Have them doing what they want based off of personality.

3.) BFDIA inspired style (for all contestants except Lamp) @nogudfreak (when they have break)

4.) The algebrailens (Lefty, Righty, and Lamp) should be animated by @thealmondofspades

I will make the thumbnail for episode one soon!

#and we quote osc#and we quote#and we quote object show#awq object show#awq osc#awq#object shows#object oc#object show character#object show community

12 notes

·

View notes

Note

CHAT THIS IS NOW

🥤🫐🍓C A N O N🍓🫐🥤

Can you humanize your favorite character(s) from the AWQ object show? To the best of your ability?

BERRY BERRY SODA!!!! I love her I love her I love her FROTHS AND FROLICKS!!!!

(sorry this took so long SCHOOL AND LIFE AND TIME LIMITS GET AHEAD OF YOU YAKNOW!!! Hum. Hope you enjoy…)

#object ocs#osc art#humanization#and we quote#and we quote object show#and we quote osc#awq object show#awq#awq osc#object show community

16 notes

·

View notes

Note

For the sports event, LS Raph has decided to start up a game of tag!!!

Mikey had peacefully been basking in the sun when the tiny version of Raph appeared.

He knew this little Raph- he was part of their cabin! Such a sweetheart. His own Raph had already met their Mikey- really, the eldest snapper was a baby magnet. These guys, Misa, and the little babies he had brought when meeting Mike- how did he do it?

He opened his eyes when a shadow was cast over him- and smiled at the little snapper who stared at him with a grin.

A very... suspicious grin. The same grin the younger brothers had when they were planning something.

"Hey, big guy." Mikey waved, making no move to get up. Raph just stared at him... until he placed a clumsy, little paw on Mikey's snout.

"Tag! You're it!" He shrieked, turning around and running away at once. Mikey blinked... before grinning.

You didn't need stable hands for tag.

"Why you little-" he playfully called, getting up, and stretching his arms above his hands. If it was a race Raph wanted... it was a race he'll get.

He grinned, and started running.

Distantly... the box turtle wondered if the little snapper had ticklish spikes like his older brother too.

"Oh I'll get you alright!"

@tmnt-fandom-family-reunion

Raph meeting LS Mikey

Raph meeting Misa

Raph meeting Mike

#Raphs really meeting all the precious angels isn't he awq#oh I love this au so much dkdbka#rottmnt#rise of the teenage mutant ninja turtles#tmnt fandom family reunion#the wrong side of the portal#little subjects au#cabin 10#rottmnt mikey#rottmnt raph#tmnt ffr sports day event

33 notes

·

View notes

Note

CAN WE BE ART MOOTS?!!!!

Im also making an object show called, "And We Quote"

You can also be part of the crew if you want!!

Heres the account for AWQ if you're interested!:

@and-we-quote-osc

Sure I guess :)

8 notes

·

View notes

Text

USAAF 43-49219 / ZK-DAK masquerading as NZ3546, making a low(ish) pass over Dairy Flat.

msn 26480 was constructed as a C-47B-10-DK c. 1942 and was taken on charge by the USAAF as 43-49219 c. 1943.

By April 1959, it had been demobilised and sold to Philippines Airlines and given the c/r PI-C486. It flew with them until April 1970 when it was sold to Papuan Air Transport as VH-PNM. It was sold to Anssett Airlines of Papua New Guinea in July that year.

After bouncing around Queensland for a few years it was exported to Aotearoa with the c/r ZK-DAK in 1987.

Seen in D-Day colours. 6/07/04. It wore these colours from 1986-c. 2006.

In plain white livery. 1/12/06. By 2007 it was in RNZAF colours.

The real 3546 was briefly ZK-AWQ before becoming D6-CAG in the Comoros. It was sold to the RSAF becoming s/n 6863 and converted to a C-47TP by WonderAir c. 1980s(?). It became N81907 in 1998 and in 2001 became ZS-OJL. It was last seen in 2006 at Wonderboom National Aiport (PRY/FAWB) sans engines, wings and rudder.

16 notes

·

View notes

Note

I LOVE HOW YOU GAVE WATERMELON A TAIL THAT IS SO FUCKING ADORABLE WAHT!!!

I hope you do oc requests!!

If so, Can you please draw Watermelon and Buldak 2x Bonding but buldak is in her noodle form.

Like can you please draw Watermelon being confused with Buldak's noodle form like:

Watermelon: How the...

Buldak 2x: I don't even know at this point...

More Information about both at @and-we-quote-osc

#coolkiwifruitbird art#art#comic#coolkiwiyummy comic#awq object show community#awq#awq osc#awq object show#buldak#watermelon

34 notes

·

View notes

Note

Since bee is planned to be with Chloé and is a posible member of the AWQ, Chloé have a chance of be a lead 7?

Between her friendship with Adrien, her antagonistic role with Marinette and the class, canon's focus on her and her family, me planning on working off color coding, at least having characters planned with a Miraculous having at least one that matches with them, which sets her up as best for the Bee, and I do have a villain thought that would go after her family...

Yeah, she's really kinda set up to be a major character and will be a focused on character.

Though atm, it's too early to say whether she goes redemption or goes villainous. Chloe could really go either way.

11 notes

·

View notes