#AND LABELED THE DATA AS IF IT WAS OUR FINAL CALCULATION RESULTS

Text

The way that I act when I get angry about lab reports.......

#I am an angry lion I am a vibrating chihuahua I am a Karen shouting at an innocent worker#BABYBOY YOU COPIED THE DATA AND DID NOT DO A SINGLE CALCULATION#AND LABELED THE DATA AS IF IT WAS OUR FINAL CALCULATION RESULTS#AND THEN YOU STOP RESPONDING TO ME?????????#MISTER 'you've already done so much leave the rest to me'#YOU DID NOTHING THAT A CHIMP WITH A KEYBOARD COULDN'T DO#EVERYTHING HE DID NEEDS TO BE FIXED NOW AND I DONT GOT THE PATIENCE FOR IT#I wanna be like ITS YOUR FUALT/MISTAKE YOU FIX IT but alas I do not have that type of trust and also i want good grades#IF WE UPLOADED THIS WE WOULD GET FUCKING /ROASTED/ DUDE. ROASTED!!!!!!!!!!!!!!!!!!!!


Text

Data Analysis Tools course, Week 1

Running an analysis of variance

(Performing an analysis of variance, ANOVA)

I used the Gapminder data for my analysis.

I am checking the relationship between breast cancer and CO2 emissions.

The data has the columns breastcancer100th, co2emissions and lifeexpectancy.

First, I created 9 CO2 emissions groups. The last group adds no further information: the first and the last group each contain only a single observation, so no variance can be calculated for them.
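The grouping step can be sketched in Python. This is a minimal illustration, not the code used for the analysis: the bin edges, the sample values and the helper `assign_group` are assumptions made up for the example. It assigns each observation to a group by its upper bin edge and records no standard deviation for groups with fewer than two observations.

```python
from bisect import bisect_left
from collections import defaultdict
from statistics import mean, stdev

# Hypothetical upper bin edges (the real analysis used 9 groups).
EDGES = [15e6, 15e7, 15e8, 15e9, 15e10, 15e11]

def assign_group(co2, edges=EDGES):
    """Return the upper edge of the first bin that contains co2."""
    index = bisect_left(edges, co2)
    if index == len(edges):
        raise ValueError(f"{co2} exceeds the largest bin edge")
    return edges[index]

# Made-up (co2emissions, breastcancer100th) pairs, for illustration only.
rows = [(9.0e6, 20.1), (4.0e7, 22.5), (9.0e7, 25.0),
        (6.0e8, 35.2), (1.2e9, 38.9), (3.0e11, 101.1)]

groups = defaultdict(list)
for co2, rate in rows:
    groups[assign_group(co2)].append(rate)

summary = {}
for edge in sorted(groups):
    values = groups[edge]
    # stdev needs at least two observations; record None otherwise.
    spread = stdev(values) if len(values) > 1 else None
    summary[edge] = (len(values), mean(values), spread)
    print(f"{edge:.1e}: n={len(values)}, mean={mean(values):.2f}, sd={spread}")
```

Groups that report `sd=None` are the ones to treat with caution when interpreting the ANOVA.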

Here again, we see the F statistic and the associated p value for our explanatory variable, which has more than two levels (9 levels).

The F value is 10.47.

The associated p value is 1.00e-08, which is smaller than 0.05.

This tells me I can reject the null hypothesis and say that there is an association between CO2 emissions and breastcancer100th.

Because my categorical explanatory variable has multiple levels, the F test and the p value do not provide insight into why the null hypothesis can be rejected. They do not tell us in what way the population means are not statistically equal.
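To make the F statistic less of a black box, here is a small sketch that computes it by hand as the ratio of between-group to within-group variance. The function name `one_way_anova_f` and the sample numbers are invented for illustration; they are not the Gapminder data and this is not the code used for the result above.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Between-group sum of squares: group size times the squared distance
    # of each group mean from the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: squared distance of each value
    # from its own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Made-up samples for three groups.
samples = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_b, df_w = one_way_anova_f(samples)
print(f"F = {f_value:.2f} with ({df_b}, {df_w}) degrees of freedom")
```

For these samples the script prints F = 13.00 with (2, 6) degrees of freedom. Turning F into a p value requires the F distribution, which a statistics library would normally supply.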

The mean and standard deviation for each group are below. We can see some differences between the 9 groups: the means of 15e6 and 15e7 are close to each other, but the means of 15e8, 15e9, 15e10 and the last group, 15e11, are different.

Group 15e11 contains only one measurement (important to remember). Is it an outlier? Is it a measurement error?

When the explanatory variable represents more than two groups, a significant ANOVA does not tell us which groups are different from the others. To determine which groups are different from the others, we would need to perform a post hoc test.

Finally, I ask Python to print these results with the summary function.

Here we see a table displaying the Tukey post hoc paired comparisons, that is, the differences in breastcancer100th for each pair of co2emissions groups.

In the last column, we can determine which groups have a significantly different mean breastcancer100th than the others by identifying the comparisons in which we can reject the null hypothesis, that is, in which reject equals True.
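One way to produce such a table is `pairwise_tukeyhsd` from statsmodels. The sketch below assumes numpy and statsmodels are installed and uses made-up values with three invented group labels; it is not the original analysis code.

```python
import numpy as np
from statsmodels.stats.multicomp import pairwise_tukeyhsd

# Made-up response values and group labels, for illustration only.
values = np.array([10.0, 11.0, 12.0, 13.0,
                   10.5, 11.5, 12.5, 13.5,
                   30.0, 31.0, 32.0, 33.0])
labels = np.array(["low"] * 4 + ["mid"] * 4 + ["high"] * 4)

result = pairwise_tukeyhsd(endog=values, groups=labels, alpha=0.05)

# The summary table has one row per group pair; the "reject" column
# is True where the two group means differ significantly.
print(result.summary())
```

With these numbers, the "low" and "mid" groups are not significantly different from each other, while "high" differs from both.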

Text

Revolutionizing Industries With Deep Learning: Real-World Applications And Success Stories

Welcome to a world where machines outperform humans in various tasks, revolutionizing industries one breakthrough at a time. Deep learning, the cutting-edge technology behind this remarkable achievement, has taken the world by storm with its ability to analyze vast amounts of data and make accurate predictions. From healthcare to finance, from manufacturing to transportation – deep learning is transforming every sector it touches. In this blog post, we will explore the real-world applications of deep learning and dive into inspiring success stories that showcase how this powerful technology is shaping our future. So buckle up and get ready for an exhilarating journey into the realms of artificial intelligence as we unveil the game-changing impact of deep learning on industries worldwide!

Introduction to Deep Learning

Deep learning is a subset of artificial intelligence that has been gaining widespread attention in recent years due to its ability to solve complex problems and make accurate predictions. It involves training neural networks, which are algorithms modeled after the human brain, with large amounts of data to recognize patterns and make decisions.

One of the key features that sets deep learning apart from traditional machine learning techniques is its use of multiple layers in the neural network. These layers allow for a hierarchical representation of data, where each layer learns more abstract features from the previous one. This enables deep learning models to handle highly complex and unstructured data such as images, speech, and natural language.

The ideas behind neural networks have been around since the 1950s, but it was not until recently that advancements in computing power and access to big data made it possible to train deep models effectively. Today, deep learning is being used in various industries such as healthcare, finance, retail, transportation, and many others. Let’s take a closer look at some real-world applications and success stories that showcase how deep learning is revolutionizing these industries.

1. Healthcare: Deep Learning for Medical Image Analysis

Medical imaging plays a crucial role in diagnosing diseases and planning treatments. However, interpreting medical images can be time-consuming for doctors and prone to human error. Deep learning has shown promising results in automating this process by accurately identifying abnormalities on medical scans such as X-rays, MRIs, CT scans, etc.

For instance, researchers at Stanford University developed an

Understanding the Basics of Neural Networks

Neural networks are a fundamental component of deep learning, which has revolutionized industries across the board with its powerful capabilities. These artificial neural networks (ANN) are models inspired by the structure and function of the human brain, designed to process large amounts of data and make complex decisions. In this section, we will delve deeper into the basics of neural networks and understand how they work.

The foundation of a neural network lies in its basic unit called a neuron. This is an interconnected node that receives input data from other neurons, performs calculations with them, and produces an output. Multiple neurons come together to form layers within a neural network. The first layer takes in raw data as input and passes it on to the next layer for further processing. Each subsequent layer becomes more specialized in understanding patterns within the data until it reaches the final output layer.

To train a neural network, we feed labeled training data into the network many times. During each iteration, the network compares its output with the expected output and uses a process called backpropagation to work out how much each internal parameter (weight) contributed to the error. The parameters are then adjusted to reduce that error. This enables the network to continuously improve its ability to recognize patterns in new data.
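The training loop can be illustrated with the smallest possible case: a single sigmoid neuron learning the logical OR function. This is a sketch under simplifying assumptions (one neuron, made-up learning rate and epoch count). Full backpropagation applies the same kind of gradient update layer by layer through a multi-layer network.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Labeled training data for logical OR: (inputs, expected output).
DATA = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0),
        ((1.0, 0.0), 1.0), ((1.0, 1.0), 1.0)]

def predict(weights, bias, inputs):
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def train(data, epochs=2000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in data:
            output = predict(weights, bias, inputs)
            # For a sigmoid output with cross-entropy loss, the gradient
            # of the loss with respect to z is simply (output - target).
            error = output - target
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias

weights, bias = train(DATA)
predictions = [round(predict(weights, bias, x)) for x, _ in DATA]
print(predictions)
```

After training, the rounded predictions match the OR truth table, which shows the parameters being adjusted from the error signal rather than programmed by hand.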

One key aspect of neural networks is their ability to learn through experience or past examples rather than relying solely on explicit programming instructions. This allows them to tackle complex problems that would be difficult or impossible for traditional computer programs.

There are different types of neural networks used for various purposes such as image recognition, natural language processing, time-series prediction, etc.

Real-world Applications of Deep Learning

Deep learning, a subfield of artificial intelligence, has gained immense popularity in recent years due to its ability to process large amounts of data and extract meaningful insights. This revolutionary technology has found numerous applications in various industries, transforming the way businesses operate and improving efficiency and accuracy in decision making.

In this section, we will explore some real-world applications of deep learning that have revolutionized industries across the globe.

1) Healthcare: One of the most significant applications of deep learning is in the healthcare industry. With the help of advanced algorithms and neural networks, medical professionals can now accurately diagnose diseases and predict treatment outcomes. Deep learning models can analyze vast amounts of patient data such as medical records, lab results, imaging scans, and genetic information to identify patterns that may not be visible to human eyes. This has led to improved disease diagnosis rates and personalized treatment plans for patients.

For example, Google’s deep learning model was able to detect diabetic retinopathy (a leading cause of blindness) with 97% accuracy by analyzing retinal images. Another success story is IBM Watson Health’s use of deep learning for cancer treatment recommendations based on patient data analysis.

2) Finance: The finance industry generates an enormous amount of data every day from stock market fluctuations to customer transactions. Deep learning techniques have enabled financial institutions to process this data quickly and accurately for tasks like fraud detection, risk assessment, investment predictions, and credit scoring. By identifying hidden patterns in financial data, deep learning models can make more informed decisions than traditional

Healthcare and Medicine

The healthcare and medicine industry is one of the most essential sectors in our society, as it is responsible for maintaining the health and well-being of individuals. With the constant advancements in technology, deep learning has emerged as a game-changing tool in this field. It has revolutionized the way medical professionals diagnose diseases, make treatment plans, and monitor patient progress.

One of the significant applications of deep learning in healthcare is medical imaging analysis. Traditional methods of analyzing medical images were time-consuming and prone to errors. However, with deep learning techniques such as convolutional neural networks (CNNs), radiologists can now quickly and accurately detect abnormalities in X-rays, MRI scans, CT scans, and other medical images. This has significantly improved diagnostic accuracy, leading to better treatment outcomes.

Another area where deep learning has made a considerable impact is drug discovery and development. The process of developing new drugs is time-consuming and expensive. However, with deep learning algorithms that can analyze vast amounts of data from sources such as scientific literature, clinical trials, and chemical compound databases, researchers can now identify potential drug candidates more efficiently. This not only speeds up the drug development process but also reduces costs significantly.

Deep learning has also played a crucial role in personalized medicine, an approach that takes into account an individual’s genetic makeup, lifestyle choices, and environmental factors when making treatment plans. With deep learning models trained on large datasets containing information about patients’ genetics and medical history, doctors can now predict which treatments will be most effective for specific patients

Finance and Banking

Finance and Banking is one of the industries that has been greatly impacted by the rise of Deep Learning technology. In this section, we will explore how financial institutions are utilizing Deep Learning to improve their processes, enhance customer experience, and drive business growth.

1. Fraud Detection: Fraud detection has always been a major concern for banks and financial institutions. With the increase in online transactions and digital payments, fraudsters have found new ways to exploit vulnerabilities in the system. Deep Learning techniques such as anomaly detection, predictive modeling, and behavioral analysis are being used to identify fraudulent activities in real-time. This not only helps in preventing financial losses but also ensures a secure environment for customers.

2. Risk Management: Traditional risk management models relied heavily on historical data and statistical analysis which were often limited in their ability to predict future trends accurately. By using Deep Learning algorithms, banks can analyze large volumes of structured and unstructured data from various sources including social media, news articles, market trends, etc., to detect patterns and make more accurate risk assessments. This enables them to mitigate potential risks and make better-informed decisions.

3. Personalized Customer Experience: Personalization has become key in the highly competitive banking industry where customers expect tailored products and services based on their individual needs. With Deep Learning, banks can analyze customer behavior patterns from past transactions and interactions with the bank’s website or app to understand their preferences better. This information can then be used to offer personalized recommendations for financial products or services that best suit each customer

Retail and E-commerce

Retail and e-commerce have been greatly impacted by the advancements in deep learning technology. From improving customer experience to optimizing supply chain management, this powerful tool has revolutionized the way these industries operate.

One of the major applications of deep learning in retail and e-commerce is in personalized marketing and recommendation systems. Using deep learning algorithms, companies are able to analyze vast amounts of data on customer behavior, preferences, and purchase history to create personalized product recommendations. This not only improves the overall shopping experience for customers but also increases sales for businesses.

In addition to personalization, deep learning has also greatly improved inventory management for retailers. Traditional methods of forecasting demand and managing inventory can be time-consuming and often lead to inaccurate predictions. With deep learning techniques such as neural networks, retailers can now analyze a wide range of data points including historical sales data, current market trends, weather patterns, and social media activity to make more accurate demand forecasts. This helps businesses avoid overstocking or understocking products, ultimately leading to cost savings and increased efficiency.

Another significant impact of deep learning in retail is its ability to detect fraud and prevent losses for both online and brick-and-mortar stores. Deep learning algorithms can quickly analyze large volumes of transactions in real-time and identify suspicious activities such as fraudulent purchases or stolen credit card information. This enables businesses to take immediate action before any damage occurs.

Apart from these operational benefits, deep learning has also enhanced the overall customer experience with advanced chatbots powered by natural language processing (NLP).

Automotive Industry

The automotive industry has been at the forefront of technological advancements in recent years, with self-driving cars, electric vehicles, and advanced driver assistance systems becoming increasingly prevalent. One technology that has played a crucial role in these innovations is deep learning. Deep learning algorithms have revolutionized the automotive industry by enabling machines to learn from vast amounts of data and make complex decisions without explicit programming.

One of the most significant applications of deep learning in the automotive sector is autonomous driving. Companies like Tesla and Waymo have made considerable progress in developing self-driving vehicles that can navigate through traffic, recognize road signs and signals, and avoid obstacles using deep learning algorithms. These algorithms use a combination of sensors such as cameras, lidar, radar, and ultrasonic sensors to gather real-time data about their surroundings. This data is then fed into deep neural networks that process it to make decisions about steering, braking, accelerating, and other critical functions.

Deep learning has also transformed how engineers design cars. With traditional methods, designing a car’s shape could take months or even years. However, with generative adversarial networks (GANs), designers can quickly generate multiple designs based on specific criteria such as aerodynamics or aesthetics. GANs are trained on thousands of existing car designs to learn the underlying patterns and generate new designs that meet the desired specifications. This process not only saves time but also opens up possibilities for more creative and efficient vehicle designs.

Another area where deep learning has had a significant impact is in predictive maintenance for automobiles.

Education Sector

The education sector has always been at the forefront of embracing new technologies and innovative approaches to enhance learning outcomes. With the rise of deep learning, this trend has only accelerated as educational institutions around the world are leveraging its capabilities to revolutionize the way students learn and teachers teach.

One of the most significant applications of deep learning in education is personalized learning. By utilizing sophisticated algorithms and data analysis techniques, educators can now create tailored lesson plans and activities for each student based on their individual strengths, weaknesses, and learning style. This personalized approach not only improves engagement but also leads to better academic performance.

Another area where deep learning is making a significant impact is in language translation. With students from diverse backgrounds studying together, language barriers can often hinder effective communication and collaboration. However, with advancements in natural language processing (NLP), deep learning models can now accurately translate text from one language to another in real-time. This technology not only makes classrooms more inclusive but also prepares students for a globalized workforce.

Assessment and grading have always been essential components of the education system, but they are often time-consuming and prone to errors. Deep learning-powered assessment tools can automatically grade assignments and exams using advanced scoring algorithms, freeing up valuable time for teachers to focus on providing feedback and support to their students. These tools also offer real-time insights into student performance, allowing educators to identify areas that need improvement quickly.

Apart from improving traditional teaching methods, deep learning is also paving the way for innovative teaching tools such as virtual tutors or

Success Stories of Companies Utilizing Deep Learning

Deep learning, a subset of artificial intelligence (AI), has been making waves in various industries with its ability to analyze large amounts of data and identify patterns and relationships that were previously unattainable. By mimicking the way the human brain processes information, deep learning algorithms have proven to be incredibly powerful in solving complex problems and revolutionizing industries. In this section, we will explore some success stories of companies that have successfully implemented deep learning technology in their operations.

1. Google: Image Recognition. Google has been at the forefront of using deep learning to improve its products and services. One notable application is its image recognition technology which is used in Google Photos and Google Lens. Using deep learning algorithms, Google can accurately identify objects, people, and even text within images uploaded by users. This has greatly improved user experience by making it easier to search for specific photos or translate foreign languages through the camera lens.

2. Netflix: Personalized Recommendations. With millions of subscribers worldwide, Netflix generates an immense amount of data on user preferences, watching habits, and ratings. To provide personalized recommendations for each user based on this data, Netflix utilizes deep learning algorithms to analyze viewing history and make predictions about what they might enjoy watching next. This has significantly increased customer satisfaction and retention rates for the streaming giant.

3. Walmart: Inventory Management. With over 11,000 stores worldwide, managing inventory efficiently is crucial for Walmart’s operations. In order to optimize their supply chain management process, Walmart turned to deep learning technology

Google’s use of DeepMind in its services

Google is known for its innovative use of technology to improve their services and products. One of the most significant advancements that Google has made in recent years is the incorporation of Deep Learning through their partnership with DeepMind, an artificial intelligence company that Google acquired in 2014.

DeepMind’s cutting-edge technologies have revolutionized how Google operates and delivers its services to users worldwide. By using advanced machine learning algorithms and neural networks, DeepMind has helped enhance various aspects of Google’s services, such as search engine results, voice recognition, image processing, and more.

One notable example of DeepMind’s integration into Google’s services is the improvement of the Google Translate app. With Deep Learning algorithms, the app can now translate between languages more accurately and efficiently than ever before. This application uses neural networks to analyze patterns in different languages’ grammatical structures to provide accurate translations in real-time.

Another revolutionary innovation that DeepMind brought to Google was the development of AlphaGo, an AI program capable of playing Go, a complex board game with more possible board positions than there are atoms in the observable universe. In 2016, AlphaGo famously defeated Lee Sedol, one of the world’s best Go players, 4 to 1 in a five-game match. This achievement demonstrated how powerful Deep Learning can be when applied correctly.

Furthermore, DeepMind has also helped improve user experience on YouTube by implementing algorithms that recommend videos based on a user’s viewing history and preferences. These suggestions help users discover new content they may enjoy while keeping them engaged on the platform for longer periods

Amazon’s use of deep learning for product recommendations

Amazon is a pioneer in the use of deep learning for product recommendations. The company has been utilizing this cutting-edge technology to provide highly personalized and relevant product suggestions to its customers. With over 197 million active users worldwide, Amazon’s recommendations have become an integral part of its customer experience and have contributed significantly to the company’s success.

So, how does Amazon use deep learning for product recommendations? Let’s dive into the details.

1. Understanding Customer Behavior: At the core of Amazon’s recommendation system lies deep learning models that are trained on vast amounts of data collected from its customers. These models analyze a variety of factors such as purchase history, browsing behavior, search queries, and even mouse movements to understand each customer’s preferences and interests. This allows Amazon to create a comprehensive profile for each user, enabling them to make accurate predictions about what products they are most likely to be interested in purchasing.

2. Collaborative Filtering: Another crucial aspect of Amazon’s recommendation system is collaborative filtering, which involves analyzing patterns among different users’ behaviors. By analyzing their interactions with products and purchases, deep learning algorithms can identify similar interests among various user groups and recommend products accordingly. This approach not only helps in generating more precise recommendations but also enables cross-selling by suggesting complementary products that users may not have considered before.

3. Natural Language Processing: In recent years, Amazon has also incorporated natural language processing (NLP) techniques into its recommendation engine using deep learning algorithms. NLP allows computers to understand human language better and
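The collaborative filtering idea from item 2 can be sketched in a few lines. This is a plain similarity-based toy, not a deep learning model and not Amazon's actual system; the users, products, ratings and function names are all made up for illustration.

```python
import math

# Made-up ratings: user -> {product: rating}.
RATINGS = {
    "alice": {"laptop": 5, "mouse": 4},
    "bob": {"laptop": 5, "mouse": 5, "keyboard": 4},
    "carol": {"novel": 5, "bookmark": 4, "mouse": 1},
}

def cosine_similarity(a, b):
    """Cosine similarity of two rating dicts (missing items count as 0)."""
    shared = set(a) & set(b)
    dot = sum(a[item] * b[item] for item in shared)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user, ratings=RATINGS):
    """Rank products the user has not rated by similarity-weighted score."""
    seen = ratings[user]
    scores = {}
    for other, other_ratings in ratings.items():
        if other == user:
            continue
        similarity = cosine_similarity(seen, other_ratings)
        for item, rating in other_ratings.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + similarity * rating
    return sorted(scores, key=scores.get, reverse=True)

print(recommend("alice"))
```

Because alice's ratings resemble bob's far more than carol's, the keyboard that bob rated highly ends up at the top of her list.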

Tesla’s self-driving cars powered by deep learning algorithms

Tesla’s self-driving cars have been making headlines since their introduction, promising to revolutionize the automotive industry. One of the key components that makes this possible is deep learning algorithms. These powerful algorithms are at the core of Tesla’s autonomous driving technology, allowing the vehicles to make decisions based on real-time data and environmental conditions.

At its most basic level, deep learning involves training a neural network with large amounts of data to recognize patterns and make predictions. This technology has proven to be incredibly effective in many industries, but it is particularly well-suited for self-driving cars.

The first step in creating a self-driving car powered by deep learning algorithms is collecting vast amounts of data. Tesla’s fleet of vehicles captures massive amounts of information every day through cameras, sensors, radar, and other sources. This data includes images, video footage, audio recordings, and more – all providing valuable insights about how humans interact with their environment while driving.

Once this data has been collected, it is fed into a neural network that has been specifically designed for autonomous driving tasks. The network then trains itself by analyzing patterns in the data and adjusting its weights accordingly – much like how our brains learn new things through experience.

As the neural network continues to train on more and more data, it becomes increasingly accurate at detecting different objects such as cars, pedestrians, traffic signals, and road signs. It also learns how these objects move within their surroundings and can predict potential outcomes based on previous experiences.

One of the most significant advantages of using

The use of deep learning has revolutionized various industries, bringing about unprecedented levels of efficiency and innovation. In this section of the blog, we will explore some real-world applications and success stories that demonstrate how deep learning is transforming different sectors.

1. Healthcare: Deep learning has made a significant impact in the healthcare industry by providing accurate diagnosis and treatment solutions. For instance, Google’s DeepMind project uses deep learning algorithms to detect eye diseases like diabetic retinopathy with an accuracy level comparable to human doctors. This technology can help save time and improve patient outcomes by detecting diseases at an early stage.

In addition, deep learning algorithms are also being used for medical image analysis, such as MRI scans and X-rays, to assist doctors in making more precise diagnoses. This not only reduces the chances of misdiagnosis but also improves the speed of diagnosis, allowing for faster treatment.

2. Automotive Industry: The automotive industry has also embraced the power of deep learning to enhance their products’ performance and safety features. With the rise of self-driving cars, companies like Tesla, Waymo, and Uber have heavily invested in deep learning technologies to develop advanced driver assistance systems (ADAS).

Deep learning algorithms enable vehicles to recognize traffic signs, pedestrians, other vehicles on the road, and make decisions based on that information in real-time. This technology has shown promising results in reducing accidents caused by human error.

3. Retail: Retail businesses are leveraging deep learning for various tasks such as inventory management, customer service chatbots,

Text

A Summary Of The Divorce Procedure

Divorce proceedings have a negative impact on a person's financial and mental wellbeing. The process is challenging, and not knowing where to begin or how to proceed only makes matters worse. In this piece, we'll give you a bird's-eye view of the divorce procedure.

The first step in doing this is to select a reputable and skilled divorce attorney. If you have a capable lawyer on your side, you've already won half the battle. As a result, you may always contact our Manassas Divorce Lawyers and use their knowledge to help you quickly address your divorce-related concerns.

Next, you must compile information and documents. Final documentation includes any court orders or other documents relating to the marriage or the marital property. You should also print out the necessary documents, such as:

1. A wedding certificate

2. Children's birth certificates

3. Children's Social Security numbers

4. A record-keeping scheduler

5. Mailing labels for certified mail with return receipts

Once you are completely confident about the divorce and the relief you want, the files must be prepared and reviewed. You should carefully fill out and proofread each form you submit. Remember that you are verifying the truth and accuracy of the facts when you fill out court documents.

Before presenting the complaint to the court, double-check that everything is included in it. Use a schedule to keep track of deadlines and time constraints. Missing deadlines can have serious consequences.

While the divorce is proceeding, temporary orders might be obtained for things like child custody, visitation rights, spousal support, or property usage.

Using techniques including depositions, interrogatories, and document requests, both spouses' attorneys collect data and proof on their clients' assets, debts, income, and other relevant topics. A divorce lawyer in Arlington, VA will guide you closely through this stage and keep you focused.

After obtaining the required paperwork, you must decide what outcome you want from the divorce, such as child custody, parenting time/visitation, child support, debt division, insurance policy and premium division, alimony/spousal support, real estate division, and personal property division.

Based on the facts you have provided, your attorney will build your case with the aim of a favorable outcome. When it comes to calculating child support and alimony, you can expect a divorce lawyer in Roanoke, VA to take a methodical approach.

Post-divorce issues, such as adjustments to custody or support orders, the enforcement of court decisions, and updates to legal documents, may come up after the divorce is finalized.

To comprehend the particular rules and processes that apply to your case, it is crucial to speak with a family law specialist in your jurisdiction. To settle disputes peacefully without going to court, alternative dispute resolution techniques like mediation or collaborative divorce may be investigated.

Text

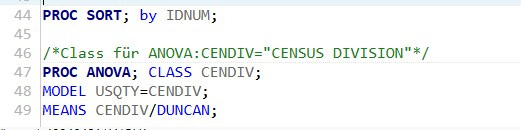

Running an ANOVA with Post Hoc

With the NESARC dataset and the analysis of variance (ANOVA) method, it is possible to test hypotheses about the relationship between a categorical explanatory variable (for example, the state an American citizen lives in) and a quantitative response variable (for example, the number of cigarettes smoked per week).

To do this, we first set our null hypothesis. In my case, I assume that there is no difference in the number of beers consumed in excessive beer drinking (5+ beers in one sitting) across the categorical variable “Census division”, which indicates where the participants come from.

This means that if the null hypothesis H0 is true, the mean excessive beer drinking quantity should be the same for all 9 census divisions.

H0: µ1 = µ2 = µ3 = µ4 = µ5 = µ6 = µ7 = µ8 = µ9

The resulting alternative hypothesis is:

Ha: not all means are equal.

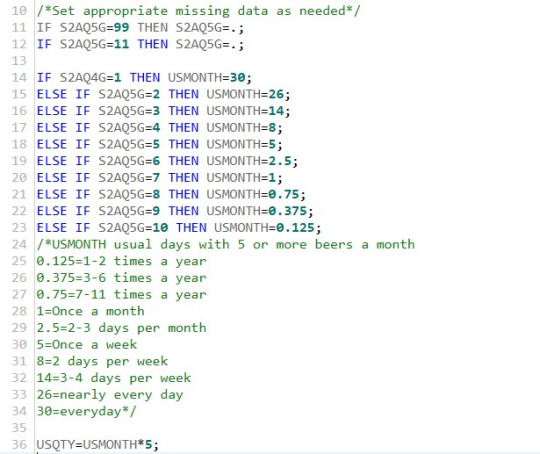

To perform the test I used the data analytics tool SAS and the NESARC dataset.

First, I load the dataset and import the variables used for this test.

I define labels for these variables and specify which data from the NESARC base should be used when running the test.

To get a meaningful quantitative response variable, I take the number of days with excessive beer drinking (min. 5 beers a day), calculate a value for the monthly use, and multiply it by the minimum number of beers in those sittings to get the variable USQTY (usual quantity).
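The SAS code itself is only shown as an image, so the following Python lines are a minimal sketch of the USQTY idea and not the author's code. It assumes the source count is heavy-drinking days per year; the function and argument names are hypothetical and are not NESARC variable names.

```python
# Hypothetical sketch of the USQTY derivation described above.
# Assumption: the input is the number of heavy-drinking days per year.
MIN_BEERS_PER_SITTING = 5  # the post defines "excessive" as 5+ beers in one sitting


def usual_quantity(heavy_days_per_year: float) -> float:
    """Estimate the minimum number of beers per month drunk in heavy sittings."""
    heavy_days_per_month = heavy_days_per_year / 12
    return heavy_days_per_month * MIN_BEERS_PER_SITTING


# Example: 24 heavy-drinking days a year is 2 per month, so at least 10 beers a month.
print(usual_quantity(24))
```

Because only the minimum of 5 beers per sitting is used, the result is a lower bound on monthly consumption rather than an exact amount.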

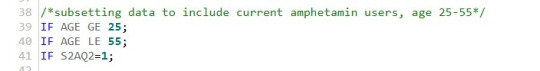

Then I keep only the data of interest by filtering for middle-aged people (25 to 55 years old) who drank beer at least once in the last year.

Finally, the data is sorted by the ID number of the NESARC participants and the ANOVA itself is run.

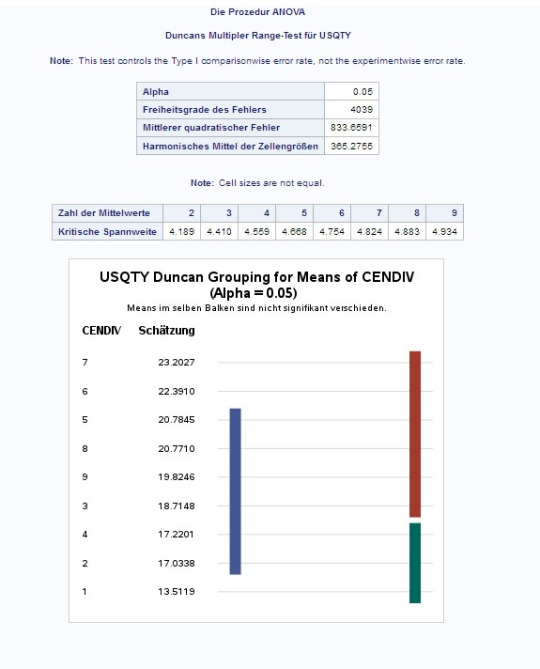

I also included “Duncan's new multiple range test”, a function of the SAS tool, to perform a post hoc test on the results of the ANOVA.

When the categorical variable has more than two levels, this shows which group means differ significantly from one another, something the ANOVA F-test alone cannot tell us.

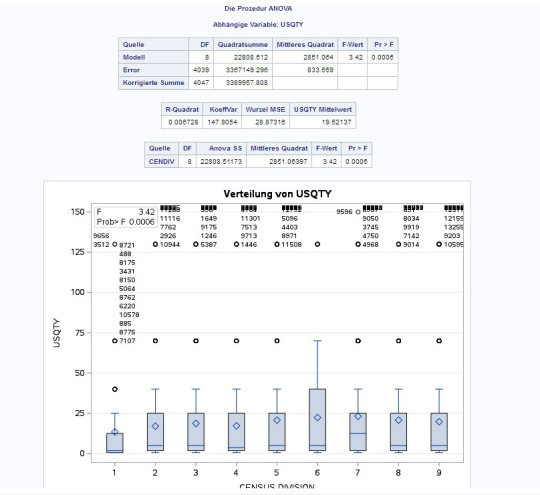

The Results are the following:

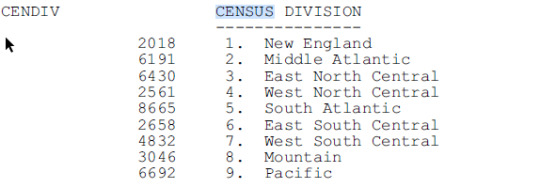

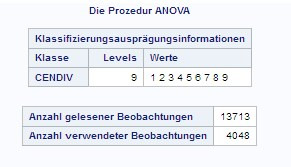

The 9 different CENDIVS with values 1 to 9 were used as the levels of the categorical explanatory variable.

With the included data, 13713 observations were read, of which 4048 matched the criteria and were used for the test.

The test results in an F-statistic of 3.42 and a p-value of 0.0006, which is well below our alpha level of 5% (0.05).

Therefore we can reject the null hypothesis and accept the alternative hypothesis: Not all means are equal.
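To make the F-statistic less of a black box, here is a minimal sketch of how a one-way ANOVA F value is computed from group data. It is plain Python rather than the SAS procedure used in this post, and the three small groups are made-up toy numbers, not NESARC results.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k = len(groups)       # number of groups (levels of the explanatory variable)
    n = len(all_values)   # total number of observations

    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Toy data only, for illustration
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_value, df_b, df_w = one_way_anova_f(toy_groups)
print(f_value, df_b, df_w)
```

The p-value is the upper tail of the F distribution with these two degrees of freedom. In Python that could be obtained with `scipy.stats.f.sf(f_value, df_b, df_w)`, assuming SciPy is installed.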

The Duncan post hoc test shows the same:

There are three groupings of means. Means within a grouping are not significantly different from one another, but they do differ from the means in the other groupings.

This supports rejecting the null hypothesis, and the post hoc procedure is intended to protect us against type 1 errors (rejecting the null hypothesis although it is true) when comparing many pairs of means.


Text

Black Navy Print Cashmere Leopard Scarf In Blue

This offer does not apply to purchases shipped internationally. Take your style to the wild side with this leopard print Autograph scarf, crafted from ultra-soft, luxurious cashmere. Please email us to let us know you are returning the garment and whether you would like a refund or an exchange for another garment https://strandfirm.com/product/cashmere-leopard-scarf/.

My new scarf looked beautiful over simple black pants and a top, and seemed very glamorous.

We will organize a return postage label so the item can be returned to us when convenient. We will send you an email as soon as we receive the returned garment and aim to process your return within 2 business days of receipt. All items returned must be in the same condition in which they were received.

Scarves are uniquely hand woven and hand printed, making each piece super luxurious and super soft. Embrace fine and luxurious layers with this pure cashmere leopard scarf from hush. With a generous length and width, this scarf is covered in a catchy leopard print that stays relevant and on-point season after season. I ordered this online and was really disappointed when I opened the parcel. For almost £70 I was expecting a luxury item, but it fell well short.

We have never met a leopard print we didn’t love! And we are huge fans of this lovely deep cobalt blue color. This cashmere scarf was created to wear all winter long with leather jackets and smart blazer suits to give you a luxury look. Update your 2018 accessory collection with the striking colors of our new scarf. Created from the finest cashmere and silk and featuring our classic leopard print, unique to Leatham Cashmere, this scarf will smarten any outfit. All 100 percent cashmere, our scarves, hats and socks are lovingly made by hand in the tiny kingdom of Nepal.

If you already have items in your basket, please note that they will be shipped to the country you choose. Please also note that the shipping rates for many items we sell are weight-based. The weight of any such item can be found on its detail page. To reflect the policies of the shipping companies we use, all weights will be rounded up to the next full pound.

Please note that many of our products are individually crafted by skilled artisans. Slight variations are a natural result of this process and add to the unique beauty and personality of each piece. Our Leopard Scarves are hand-woven and hand-printed in Nepal. The weavers "dress" the loom in a width that's greater than the finished size, and make it longer, too. The resulting fabric is then felted by hand, which involves washing and gentle agitation to help the very fine cashmere fibers interlock.

All clothes are checked by us earlier than preparing your order for packing. In the unlikely event that the garment is faulty on arrival, incorrect or broken in transit, please notify us immediately on receipt of goods. We will arrange for the merchandise to be returned to us. If we're unable to provide you with a replacement, we will refund your cost of the merchandise. This is a 100% cashmere fine-woven scarf, however it is massive sufficient to also make a comfortable wrap. The background is the pure white color of the cashmere goat hair, with pale camel and black spots screen printed on the individual items.

We won't accept returns of used, dirty, or damaged merchandise. We reserve the right to deny a credit if the returned item does not meet our return policy requirements. To return an alpinecashmere.com item, email to obtain a return authorization number and prepaid return label.

This wrap is described as 100 percent cashmere, but the weave and thread count are similar to those of a burlap bag. To calculate the overall star rating and percentage breakdown by star, we don’t use a simple average. Instead, our system considers things like how recent a review is and whether the reviewer bought the item on Amazon. It also analyses reviews to verify trustworthiness.

Changing the language does not change the selected country and currency. 3) The color may differ slightly between the picture and the actual item because of monitor display differences. Sign up to our newsletter and be the first to hear the latest offers, events, news and updates from Wolf & Badger.

The leopard print is just on one facet of the fabric and the quality of the cashmere wasn’t as anticipated. Machine wash your 100% cashmere scarf underneath the wool setting with temperature set as cold, and spin it at 500 rpm. You can also hand wash your cashmere scarf in chilly water. Handwoven cashmere scarves printed by hand with striking leopard prints in all the richest trend colours imaginable. Asneh's leopard scarf is a recent tackle a timeless classic. It’s crafted from the softest cashmere, expertly woven and screen printed by hand.

The delivery provide is mechanically applied at checkout when standard transport is chosen and the threshold is reached in a single transaction. Orders arrive inside three to four enterprise days if orders are positioned by three PM ET . Orders containing fragrances, rugs, or lighting and orders higher than 30 units are not eligible for quick delivery. Regular expenses will apply to all other transport strategies. Amounts donated to the Pink Pony Fund don't count towards the brink quantity.

1) Selecting high quality cashmere materials, keeps you heat all day lengthy. Please observe we're unable to supply a value match for merchandise sold through impartial retailers, or being shipped internationally. Every time you put on or wash your cashmere, it'll reward you by changing into somewhat softer. Please be assured that these cookies don't retailer any personal knowledge.

This course of provides bulk, softness and loft to the completed scarf. Pashmina is the traditional name for the very finest grade of cashmere wool. Ralph Lauren presents packaging designed to reduce waste. To receive your order with Reduced Packaging, choose the verify box on the Shipping web page during checkout. Register to obtain exclusive offers tailored to you, plus rewards and promotions earlier than anyone else. Just choose ‘YES’ throughout step three on the subsequent web page and by no means miss a thing.

In the case of an exchange, if the garment is in stock we will dispatch it within 2 business days of receiving the returned garment. If we do not have it in stock, but it will be arriving shortly, we will notify you and you can decide whether you want to wait or receive a refund. If there is a difference in price between the returned garment and the garment you wish to exchange it for, we will contact you to arrange payment. Your personal data will be used to support your experience throughout this website, to manage access to your account, and for other purposes described in our privacy policy.

This is a timeless accent that will elevate any outfit and keep you toasty through the colder months. You might return your purchase for a full credit, so long as the product is returned in the identical situation because it was despatched. Altered products can't be returned for refunds or change.

They additionally improve the functionality and personalization of our website, such as using movies. Receive by Thursday, May 27, when you order by 3 PM ET and select Fast transport at checkout. Scarfe exactly as described and superbly packaged. This company clearly cares very a lot about who they're and their buyer's experience. I think I will get a lot of use of the wrap this winter.

Items are shipped to you instantly by our brands, using tracked, contactless supply. Instead of hanging, retailer your cashmere folded so it'll keep its shape. Smooth out the garment on a clear, dry towel and allow it to dry naturally, molding it back to its authentic form because it dries. ALL FLASH SALE ITEMS FINAL SALE. All other claims have to be made inside 10 days of supply for a refund. Please note, we don't ship on Saturdays, Sundays, and U.S. holidays.

Personalized items and reward packing containers can't be returned. I bought this scarf on-line after a lot of deliberation contemplating hearty value. The service I acquired in local M&S meals corridor on choose up left me very upset. I had obtained notification my parcel was prepared for choose up. Staff arguing with me in retailer in front of orher clients made me feel very uncomfortable. The scarf is so gentle and warm, it is a pleasure to put on - which I have already got.

Please notice, we can not provide pay as you go return labels for international returns. Customers are answerable for the delivery for all returns coming from outside the United States. Luxurious handcrafted cashmere knits and hand woven scarves from Nepal. Designed in England for a up to date wardrobe. A Lily and Lionel signature, the leopard print has been shrunk to a micro scale this season for an summary, polka dot design, on a caramel-toned backdrop. Printed on one hundred pc cashmere, finished with an eyelash hem.

It is obligatory to acquire person consent previous to running these cookies in your web site. When you place an order, we are going to estimate transport and delivery dates for you based mostly on the provision of your items and the transport options you select. Depending on the delivery supplier you choose, transport date estimates may seem on the shipping quotes web page. Please hand wash with impartial detergent after which dry within the air. Knitted cashmere may be dry cleaned, or ideally, washed by hand.

The return delivery is your duty. We make elegance easy with trendy laid-back designs for real life. All our designs are made from cashmere, silk and other natural fibres. Our products are fall-in-love-pieces in irresistible quality and design.

We do not compromise; looking gorgeous and feeling good are mutually imperative for us. Woven in Italy from a lustrous cashmere-and-silk mix, this elegant scarf showcases a traditional leopard print. Meticulously completed with hand-rolled edges, its elongated silhouette makes for a highly versatile accent. Free Fast Shipping on Orders $150+ & Free Returns |Details Enjoy free quick delivery on orders of $150 or more and free returns at RalphLauren.com only.

In caramel tones, our monster leopard scarf is a worthwhile cold climate funding piece. In this classic color way, it'll work with all of your present wardrobe and can look good draped casually spherical your neck. Enjoy free returns and exchanges within 30 days of the order shipment date.

Shipping time is calculated primarily based on when the order is shipped, not when the order is placed. We wish to get your Alpine Cashmere gadgets to you as quickly as possible and so strive to ship orders positioned by midday EST the identical day, but that isn't assured. Typically orders ship within one business day. Cookies permit us to report details about shopping through our web site so as to give you personalised provides.

Wear it with a jacket for or layer it with a sweater and coat in cold weather. We recommend teaming it with our Fallon beret and fingerless gloves. A luxurious scarf in basic leopard print and crafted using Grade A cashmere.


Text

What Actually is SEO Today?

Search engine optimization (SEO) is an abbreviation that many businesses ask for but few understand. Our Digital Marketing course in Pune gives deeper information about digital marketing.

In its simplest form, SEO is creating content on the web that search engines are likely to recommend.

To know more about digital marketing, go through the course.

All we can really do for SEO today is

1) answer questions with good, focused content, and

2) get credible, relevant sites to link to it. People are searching for an answer, so search engines reward the most fitting answers.

In the past, search engines weren't smart enough to find the best answers across the unstructured web, so they over-relied on keywords and other site tags for signals. Naturally, businesses found ways to cheat.

Today, search engines are smart and much harder (impossible?) to cheat. You’ll get the opportunity to enter the IT world through the Digital Marketing course.

As time goes on and search engines get better at context, language interpretation, and intent, the algorithm details will become irrelevant to the average business.

The only thing that will matter? Organized, quality content.

In Our Digital Marketing course in Pune, We provide Affordable Fees and practical knowledge.We also provide

Search engines will find it wherever it is, understand it, and serve it up. Here's my take on SEO today. While there are fewer tricks and tactics than before, there's still a lot we can do to improve our search engine rankings.

1. On Page SEO:

Keywords are out

I've been doing SEO for clients since 2004. I remember when keywords had a huge effect on search ranking.

SEOs (people implementing SEO, who were not called that in 2004) stuffed keywords into titles, meta tags, and the first two sentences of every paragraph. Some even filled their footers with white-on-white lists of keywords (so people wouldn't see them but search engines would).

These tactics worked until search engines started penalizing them. SEOs would then disguise keywords in text "naturally," often producing less meaningful content. This, too, worked until search engines stopped it.

Today, most of my clients still think "keywords" when they hear "SEO." Google and other search engines, however, don't. They can understand complex subjects and topics from more natural (and harder to cheat) cues.

If you want to rank for a specific topic today, write/record/create good information about the topic. If your dermatology-focused content deserves to be shown, search engines may show it whether someone searches "dermatology," "skin specialist," "skincare," or even "how can I look younger?"

Content hubs are in

Today's version of keywords (something technical to nudge the search engines) is the "content hub." Content hubs are content organization models that make site architecture and content hierarchy clear for search engine crawlers.

Content hubs also help people. People actually need content organization to find information more than computers do!

Hub models split content into pieces by topic, so every topic gets a unique landing page and URL. Search engines love this, because they would rather give a link directly to an answer. They don't want the searcher to click anything extra or even read through an unnecessary paragraph to find their answer.

2. Off-site SEO

Directories and spammy backlinks are out

Gone are the days when we could get listed on directory sites, drop our link in comments, or even trade links with other SEO-hungry sites.

Today, if a link to your site comes from a less-than-reputable or irrelevant site, it may be as good as no link at all.

In addition, sites and publications with a lot of outbound links use the "rel=nofollow" attribute liberally these days. Larger sites use it to discourage things like spammy blog comments (with a link stuffed in).

Links from reputable sources are in

Instead, focus on links from sites that are natural and relevant to your site. The better the referring site, the better it is for your SEO, but first it has to be both natural and relevant.

Once again, search engines have become smart enough to pick up on these quality signals more accurately.

If other site authors, especially those with large audiences, link to your content in related content they write, it's evidence that your content adds to the information and is likely of similar or better quality.

There's not really a way to cheat this, which is why it's a good system. To get reputable sources to link to your content, it simply has to be good enough.

100% Job to our students with Best Digital Marketing Course.

What's left on the technical SEO side?

If writing good, organized content that directly answers search queries isn't enough for you to do, there are a few technical SEO practices that may help your chances of ranking. Maybe. They certainly won't hurt.

Structured data

Structured data is code in a universal format that tells search engines specific details about your content.

Product reviews, for example, can be coded in many ways, but with structured data, all reviews can be standardized and shown directly on search results pages.

Business information, recipes, job postings, product data, events and more have structured data standards. Using structured data is smart because as more businesses do it and the datasets grow, more products and services will be built on top of them (which means more chances to be found/seen).
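As an illustration of what such standardized markup can look like, here is a minimal sketch that builds a JSON-LD snippet for a product rating. It assumes the schema.org vocabulary and the JSON-LD embedding format, neither of which is named in this post, and the product name and rating values are invented.

```python
import json

# Hypothetical product rating expressed with schema.org vocabulary (values are invented).
review_data = {
    "@context": "https://schema.org",
    "@type": "Product",
    "name": "Example Widget",
    "aggregateRating": {
        "@type": "AggregateRating",
        "ratingValue": "4.4",
        "reviewCount": "89",
    },
}


def to_json_ld_script(data: dict) -> str:
    """Wrap a dictionary as a JSON-LD script tag that can be placed in a page."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = to_json_ld_script(review_data)
print(snippet)
```

The point of the sketch is that the same fields appear in the same places no matter which site publishes them, which is what lets a search engine read them reliably.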

Also, as Google puts it:

"In general, defining more recommended features can make it more likely that your information can appear in Search results with enhanced display."

Sitemap

Sitemaps are another way to guide search engine crawlers. Sitemaps lay out the pages on a site, how frequently they change, and how important they are relative to the rest of the site.

Sitemaps follow a standardized format, as structured data does.
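A minimal sketch of generating such a file is shown below, assuming the sitemaps.org XML format with its `loc`, `changefreq` and `priority` elements. The URLs and values are placeholders, not recommendations.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, change frequency, priority) tuples."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, changefreq, priority in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc                # the page address
        ET.SubElement(url, "changefreq").text = changefreq  # how often it changes
        ET.SubElement(url, "priority").text = priority      # importance relative to the site
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages for illustration
example_pages = [
    ("https://example.com/", "weekly", "1.0"),
    ("https://example.com/about", "yearly", "0.3"),
]
sitemap_xml = build_sitemap(example_pages)
print(sitemap_xml)
```

Each `url` entry carries exactly the three things described above: where the page is, how often it changes, and how much it matters relative to the other pages.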

Encryption

It's generally understood today that all web traffic should be encrypted. That's the "https" instead of "http" and the lock in the URL bar.

Google has officially started penalizing sites that are not encrypted (previously the norm), but there is a free, open-source security certificate option called Let's Encrypt that allows any site to meet the relevant encryption requirements. Encryption also shows respect for your site visitors.

Site speed

Part of serving people the best answer as quickly as possible involves the load time of the content. If two pieces of content answer a question equally well and one loads faster, it is likely to rank higher.

Site speed was less important in the past, but with mobile connections (and mobile data plans), the "weight" of content now factors into search rankings in Digital Marketing.

Responsive (or at least mobile friendly)

Some industries see as much as 80% mobile traffic, so search engines reward sites that display well on mobile devices.

Responsive design is site code that adapts to screen size (instead of a separate mobile site or a poor experience on small screens). Search engines like responsive design because there are no surprises and no redirects. The same link in the search results on desktop will work well on mobile.

That means search engines also discourage interstitials. If the link works on desktop but unexpectedly blocks content on mobile, that's not a good experience for the searcher.

AMP and syndication

Finally, there are other SEO-related technical changes that may get more eyes on your content. Businesses can create AMP versions of their content by adding specific code to their site. AMP pages load very fast, and because that is a better experience for the searcher, Google may show AMP-ready content more prominently. There are a few trade-offs to this one.

There are other syndication formats, too, like Apple News and RSS/Atom feeds. I'd consider syndication a form of SEO, but we're getting to the edges of the SEO discipline with this one.

SEO used to involve a lot of "tactics," but today it's really more of a content/education/marketing/community exercise.

If it sounds kind of hard, that's because it is. Think about it. If it were as easy as changing a few keywords, everybody would show up as the first result. But there can only be one first result.

SEO today means creating the most fitting answer, across the whole web, for each specific question, one at a time.

SEO mainly comes under the Digital Marketing Course. When your answer has some credibility, because it's shared and referenced by other people, search engines will have the evidence they need to confidently show that answer.

Easy peasy, right?

#seo and web design#digital marketing#Digital Marketing Institute#digital marketing trends#digital marketing course#digital marketing classes near me#online marketing#on page seo#off page seo#amp#websitespeed


Text

Introduction, Implementations, Current & Possible Future Applications of Artificial Intelligence for Hybridization & Management of HEV (Hybrid electric vehicle) system

I. INTRODUCTION TO ARTIFICIAL INTELLIGENCE IN EV

Artificial intelligence can be described as the augmentation of natural human senses by computational systems. It can help a device embedded in a system, or the system as a whole, to consider various possible solutions for the data provided, or to manipulate that data into something more meaningful, in order to handle complex real-world problems involving imprecision, uncertainty, vagueness and high dimensionality. The fundamental stimulus for the research and development of hybrid electric systems is the need for the system to be self-aware and capable of managing the variables and constraints of predefined and simulated conditions while working in the real world. The integration of distinct AI methodologies can be done in various forms: by a modular integration of two or more intelligent methodologies, which maintains the identity of each; by fusing one methodology into another; or by transforming the knowledge representation of one methodology into a form of representation characteristic of another.

Artificial Intelligence powered systems are embedded inside of current electric vehicles in order to revolutionize the way various control systems manage the data flow coming out of the various embedded sensors and actuators through data extraction with the On-Board Diagnostics (OBD) system or ECU and can be used to alert the driver to any impending distortion in the vehicle system or components or else assist the user while commuting under various driving conditions to keep the overall performance of vehicle to the optimal possible degree.

Various types of Implementations of AI in HEV:

POWER SPLITTING - Hybrid systems can be instructed to split the required power between the EV components and ICE (Internal Combustion Engine) to meet the specified needs like fuel consumption, efficiency, performance, and emissions. The power splitting phenomenon, which is the key point of hybridization, is in fact, the control strategy or energy management of the hybrid automobile. Performance of the system, therefore, depends on the control strategy which needs to be robust (independent from uncertainties and always be stable) and reliable.
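As a point of reference for the power-splitting idea, here is a minimal rule-based sketch. It is a simple baseline and not an AI controller: the function name, the 50 kW motor limit and the 30% state-of-charge floor are all hypothetical values chosen for illustration, and braking (negative demand) is ignored.

```python
def split_power(demand_kw, soc, motor_max_kw=50.0, soc_min=0.3):
    """Return (motor_kw, engine_kw) for a requested traction power.

    Rule-based baseline: use the electric motor first while the battery
    state of charge (soc, 0..1) is above soc_min; the engine covers the rest.
    All thresholds are illustrative assumptions.
    """
    if demand_kw <= 0:
        return 0.0, 0.0                      # no traction power requested
    if soc <= soc_min:
        return 0.0, float(demand_kw)         # protect the battery: engine only
    motor_kw = min(demand_kw, motor_max_kw)  # motor supplies what it can
    engine_kw = demand_kw - motor_kw         # engine supplies the remainder
    return float(motor_kw), float(engine_kw)


print(split_power(30, 0.8))  # light load, charged battery
print(split_power(80, 0.8))  # heavy load, charged battery
print(split_power(80, 0.2))  # heavy load, depleted battery
```

An adaptive or learned strategy would replace the fixed thresholds with values chosen from the driver's behaviour and the drive cycle, while the interface (demand in, power split out) could stay the same.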

REAL TIME DATA MANAGEMENT: To improve the hybrid drive system, the control strategy should be adaptive so that it keeps track of changing demands from the driver or the drive cycle for optimization purposes. To fulfil these conditions, there is a need to develop an efficient control strategy that can split power based on the demands of the driver and the driving conditions. Hence, for optimal energy management in an HEV, interpreting the driver's commands and the driving situation is most important.

RANGE EXTENDER: Many electric vehicles nowadays already come with range extender (R.E) technology, which helps the driver cover a reasonable distance after most of the energy in the batteries has run out. Controlling the range extender with the help of AI can improve battery consumption economy and drive patterns, along with quick and efficient powertrain rotation, in order to increase the overall distance the vehicle can cover.

AI ASSISTED OR SELF DRIVING AI TECHNOLOGY: The prime goal of self-driving technology is to use artificial intelligence to command a vehicle to drive on its own, in real time and in traffic, from one point to another. To do so it uses large amounts of pre-processed and simulated driving-pattern data together with the vehicle’s own real-time cognitive response to the outside environment, gathered by embedded sensors and actuators while driving. The aim is to help the driver reach his/her destination while taking into consideration safety measures for both the people inside the vehicle and those outside.

360-DEGREE PERCEPTION TECHNOLOGY: Hybrid electric vehicles are being designed and developed not only to handle complex driving patterns or road conditions but also to mind their surroundings, for example to activate the stop function when a pedestrian suddenly decides to cross the road, or when there is an unexpected roadblock or traffic guidance structure. These structures, commonly referred to as “channelizing devices”, comprise cones or drums usually found near construction zones or used to guide the traffic stream towards an alternative route. AI is therefore being used to generate an accurate and precise 360-degree perception of the surrounding environment in order to improve functionality and prevent accidents and fatalities. Drivers are the leading cause of critical pre-crash events when compared with other factors such as the vehicle or the environment. Research points out that most vehicle crashes occur due to recognition and decision errors prior to the crash rather than performance errors. AI technology is expected to understand and react more efficiently and quickly, helping to prevent the conditions that lead to disastrous crashes and so save lives.

II. UNDERSTANDING OF THE TOPIC

Artificial intelligence technology brings a wide range of natural human perspectives to the problems we encounter. It filters information based on the current conditions and provides the best possible logical output, using pre-processed or real-time computational data gathered by the sensors and actuators together with various deep learning algorithms, and it delivers data or acts on event-prediction techniques to improve overall vehicle performance. AI implemented in HEVs plays a major role in converting the vehicle into an AGV (Automated Guided Vehicle), or simply in assisting the driver while travelling from point A to point B in numerous ways: battery management, navigation based on the geographic location of the vehicle, assistance in following the traffic guidelines set by higher authorities, preventing poor decision making by the driver under harsh road conditions, and so on. Because AI is designed around our own natural understanding of our surroundings and the knowledge we generate, combined with powerful data analysis tools and computational software, it will be hugely beneficial to use deep-learning-guided software to power the hardware of our electric vehicles. This generates a larger pile of data as feedback to the AI systems, which can record and analyse the driving patterns and conditions of various groups of people, study their decision-making patterns, their demands on the infotainment systems and their range expectations, and eventually develop a better intelligence system able to meet their demands within the constraints they set. AI-powered software in the automotive sector, with the help of a cloud connection, will not just gather real-time data but also store it for analytics and statistics. In combination with permanent access to real-time updates that are recorded every single second, AI can detect activity that is impeding a car’s performance, or analyze a potential failure scenario and prevent it. The best thing about it is that AI in the car software doesn’t complicate the user experience whatsoever. All the internal checks happen with no human interaction, and the driver is bothered only when they have to step in.

III. NEED FOR RESEARCH NEED OF AI IN HYBRIDIZATION

The need of AI-powered systems in the process of Hybridization & Management of HEVs using range of CPUs & GPUs on it responsible for processing all those data in real time and taking absolute essential decisions is very much important in order to widen our perspective towards the ways of commuting in our everyday life. AI working through what we term as machine learning is actually supervised learning where humans are creating and wide variety of labels to the outside surroundings and compiling at the data and using them to solve other similar possible scenarios where similar labels or elements of environment is found and then use the previously acquired data to tackle current problems and using current problem’s data to tackle future problematic scenarios and so on.

DEEP LEARNING: In deep learning, the inputs recorded by the cameras mounted on the vehicle arrive as raw pixels. These feed an architecture with dozens or even hundreds of layers of neurons, yielding millions of parameters to fit. Turning this into a successful learning algorithm takes a lot of data and many training cycles, along with careful tuning of both, and in return it provides numerous key insights.

SYSTEM ARCHITECTURE OF AI IN HEV: When an HEV is designed to work with AI, its system architecture first takes the destination specified by the driver and begins routing. It calculates the estimated total distance between the starting point and the end point and the best route between them, keeping track of real-time traffic conditions and of any personal conditions set by the driver, such as stops added between the current location and the final destination. Once routing is done, the AI begins motion planning, the most important stage. Here the system takes in data collected by devices and sensors such as LiDAR (Light Detection and Ranging), GPS (Global Positioning System), RADAR (Radio Detection and Ranging), the IMU (Inertial Measurement Unit), cameras and encoders. These are used to map the surroundings and localize the vehicle within them, building a coherent perception of the ongoing traffic conditions. The system then guides the vehicle from point A to point B by making the best possible predictions from data gathered through imitation learning and from the cognitive responses generated by the computational software on board the vehicle.
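The routing step can be sketched as a shortest-path search over a road graph. The example below assumes Dijkstra's algorithm, which the text does not name, and a hypothetical graph whose edge weights stand for travel times in minutes; driver-requested stops are handled by chaining one search per leg.

```python
import heapq

def shortest_path(graph, start, goal):
    """Dijkstra's algorithm: return (total_cost, path) from start to goal."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbour, weight in graph.get(node, {}).items():
            if neighbour not in visited:
                heapq.heappush(queue, (cost + weight, neighbour, path + [neighbour]))
    raise ValueError(f"no route from {start} to {goal}")

def route_with_stops(graph, waypoints):
    """Chain shortest paths through the driver's stops, in the order given."""
    total, full_path = 0.0, [waypoints[0]]
    for a, b in zip(waypoints, waypoints[1:]):
        cost, path = shortest_path(graph, a, b)
        total += cost
        full_path += path[1:]
    return total, full_path

# Hypothetical road graph; a real system would refresh the weights
# from live traffic data before each search.
roads = {
    "A": {"C": 4.0, "D": 10.0, "E": 2.0},
    "C": {"D": 3.0, "B": 12.0},
    "D": {"B": 5.0},
    "E": {"B": 20.0},
    "B": {},
}

direct = route_with_stops(roads, ["A", "B"])         # no stops
via_stop = route_with_stops(roads, ["A", "E", "B"])  # driver adds a stop at E
```

Motion planning, which the text treats as the more important stage, is a separate problem and is not covered by this sketch.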

PERFORMANCE ANALYSIS: Performance analysis using AI technology can lead to improved vehicle performance and timely maintenance alerts for the driver. With new computational software and pre-processed data, consisting of large volumes of logged data acquired through simulation and real-time track testing, the vehicle can be designed to be self-aware and capable of handling the situations in its surroundings before its launch. The vehicles are first deployed in small numbers for testing under various environmental conditions, and only then deployed fleet-wide.

IV. PROS AND CONS OF AI IN HEV

When we think radically about the principles and aspects of AI in order to separate the pros and cons of its implementation in the AI industry in particular, the weight falls far more heavily on the positive side. That does not excuse us from going over the entire system to look for elements that still need to be reworked in order to deliver a better outcome. Every aspect of intelligence can, in principle, be described so precisely that a machine can be constructed to simulate it.

PROS OF AI IN HEV:

AVAILABILITY AROUND THE CLOCK 24/7: Emerging AI technology is designed to run on cloud network infrastructure, working tirelessly around the clock while still providing accurate and precise results at any time. We do not need to build a routine around active and inactive states of the AI in order to cooperate with it; the technology is available to each of us whenever our schedule dictates. So when using AI in HEVs we need not worry about its availability, because it functions around the clock.

HELPS IN REDUCING HUMAN ERROR: There are times when we wish for someone more intelligent, more efficient and quicker to do our work, because humans cannot always be accurate when dealing with sensitive data or when processing large volumes of data with many variations and constraints. It is even harder to give precise readings from real-time data, because the data keeps changing over time; for example, a weather forecast based on current data cannot determine with absolute certainty that it will rain tomorrow. This is where AI comes into play: it does the math for us using complex computational methods and compiles the data faster and more accurately than humans can, reducing the scope for errors and distortion in the output. Using AI in HEVs ensures proper cooperation between components such as sensors, actuators, the battery management system, fuel management system, maintenance system, infotainment system and traffic alert system under one well-designed algorithm, so that everything runs more smoothly and precisely and gives us optimal results.

PREDICTIVE MAINTENANCE USING AI: The proper functioning of a vehicle always depends on the various elements embedded in the system. To keep these components working properly we need a well-defined system that is organized, interlinked and responsive to the driver at all times. Artificial intelligence allows better monitoring of all these components and helps keep the vehicle in pristine condition by predicting when maintenance will be needed and alerting us in good time. An artificial cognitive response system works with devices distributed around the vehicle to provide detailed reports on them on a regular basis, and sometimes takes care of things on its own without asking the driver to step in and leave their comfort zone to get a fault fixed. This will eventually help automotive manufacturers provide the best possible service in the least amount of time: they receive an update on the vehicle's condition before the driver visits the workshop, so they can fix the problem quickly and get the vehicle back on the road in optimal condition.
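One simple way to "predict the need" for maintenance is to fit a trend to a sensor's recent readings and estimate when it will cross a service limit. The sketch below assumes a straight-line (least-squares) trend, which is a deliberate simplification and not a method stated in the text; the function name, the readings and the limit are all hypothetical.

```python
def samples_until_limit(readings, limit):
    """Fit a straight line to equally spaced readings and estimate how many
    more samples remain before the trend reaches `limit`.
    Returns None when there is no rising trend to extrapolate."""
    n = len(readings)
    if n < 2:
        return None
    mean_x = (n - 1) / 2
    mean_y = sum(readings) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing = (limit - intercept) / slope  # sample index where the line hits the limit
    return max(0.0, crossing - (n - 1))     # samples left after the latest reading

# Hypothetical wear indicator sampled once per trip, with a service limit of 8.0.
wear = [1.0, 2.0, 3.0, 4.0]
remaining = samples_until_limit(wear, limit=8.0)
if remaining is not None and remaining < 10:
    alert = f"Service recommended within about {remaining:.0f} trips"
```

The estimate could be sent to the workshop ahead of the driver's visit, as the paragraph describes; real systems would use richer models and several sensors at once.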

AI DRIVING: Vehicles manufactured today are already given everything they need to deliver their best performance. Adding an autonomous system that controls the flow of all that data and understands the responses generated from it lets the system either take over as the driver or act as an assistant, so that owners and drivers can sit back and enjoy the ride while the system does the work for them. Examples include blind-spot monitoring during a hard turn; emergency braking, which activates the brakes at the right moment to prevent a disaster; cross-traffic detectors, which build a logical picture of the surrounding traffic and predict the route needed for safe driving; and alerts to the driver or to emergency responders if the driver unintentionally nods off. With the help of AI, this autonomy in HEVs can reduce fatal accidents around the world and improve people's attitude towards safe driving, their decision making and their responsiveness.

CONS OF AI IN HEV:

HIGH COST OF IMPLEMENTATION: At its current rate of progress, the AI industry is projected to become a $169.41 billion industry by 2025. Manufacturing and implementing the high-end components that have to work together with AI technology will cost most consumers a fortune. Just as prices skyrocket whenever a new technology is introduced, making use of current AI technology will continue to cost most of us a great deal of money. The driver will be able to reap the benefits in a very simple way, and customers will be able to choose between a semi-AI mode (AI-Assist) and a fully autonomous vehicle according to their budget. Even so, the industry will take some time to make these high-end technologies affordable and accessible to everyone.

AUTONOMY IN HEVs WILL PROMOTE LACKADAISICAL BEHAVIOURAL PATTERN IN FUTURE DRIVING COUNTERPARTS: An autonomous future of driving HEVs with AI promises a safe and secure driving experience, along with the other benefits of a smarter, reasonably self-sufficient system and a decline in human driving errors. On the other hand, it will over time promote a lethargic attitude towards driving, because the continued advancement of AI will lead to fully autonomous driving that requires no assistance from the driver. Customers will use their vehicle like any other product, just to meet a need, and will sit idle during the journey. Their understanding of the importance of responsiveness will decline, and their dependency on the autonomous system will rise.


Text

Improve your Blog’s Relevance Ranking in Google with SSL

You have probably done a lot of research to improve your blog by now, and that is a good sign of professionalism and dedication. However, beyond features and content, you must also take care of quality standards such as consistency and, in particular, the protection of your visitors' personal information.

What is an SSL Certificate?

SSL (Secure Sockets Layer) technology authenticates the server and strongly encrypts the traffic between servers and browsers.

Using this technology makes online communication much safer by shielding confidential information, such as passwords and credit card numbers, from cybercrimes such as identity theft.

SSL-protected blogs are shown in most browsers with a small padlock in the address bar (see illustration below).

You probably know the fundamental elements used in Google's ranking algorithms: natural content (made by people, for people), well-chosen titles, use of meta tags, well-structured heading tags, friendly URLs, a properly configured sitemap that is visible in Google Search Console, and correctly applied keywords. Obviously, there is a lot more to it than that.

Since 2014, Google has treated SSL use as one of the factors that improve the ranking of websites, including blogs.

The reason? To give greater security to people who use the search engine to reach all sorts of unfamiliar websites. It makes sense, don't you agree?

With competition growing more intense by the day, every SEO tactic that works must be considered for better positioning. SSL is one of the factors that can separate one website from another when Google is otherwise undecided. Better positioning also leads to more profit for those who use a content delivery strategy.

How to obtain SSL for your blog?

You can buy an SSL certificate from several internet providers, including your site's own hosting provider, which should also offer this service. If your blog is already live, you will need to take further steps after installing the certificate: verify the certificate, switch from http to https, update the website and Search Console, set up a 301 redirect, and so on. If you are not an expert, ask a specialist for help or look for information on the Internet: while the procedure is fairly basic, it requires some knowledge. Any mistakes made during the process can take your blog offline.
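To make the 301 redirect step concrete, here is a minimal sketch of the rule a server applies: any request that arrives over http is answered with a permanent redirect to the same address on https. The function name and the example.com address are placeholders, and in practice this rule is normally configured in the web server or hosting panel rather than written by hand.

```python
from urllib.parse import urlsplit, urlunsplit

def https_redirect(url):
    """Return (status, location) for a requested URL.

    Plain-http URLs get a permanent (301) redirect to the same host, path
    and query on https; anything else is served normally (200, None).
    Explicit ports such as :80 are not rewritten in this sketch."""
    parts = urlsplit(url)
    if parts.scheme == "http":
        return 301, urlunsplit(("https",) + tuple(parts[1:]))
    return 200, None

# Placeholder address used only for illustration.
status, location = https_redirect("http://example.com/post?id=3")
```

Because the redirect is permanent, search engines can transfer the old http address's standing to the https one, which is why the step matters for ranking.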

If you want to save money and still have a good-quality SSL certificate, there are low-cost services:

The SSL Online: A Premium Global Leader in the SSL Industry.

They are a pioneering SSL store that offers SSL certificates at much lower prices. They are an authorized partner of well-known Certificate Authorities (CAs), so they buy SSL certificates in bulk at a steep discount and pass those savings on to their customers. A team of professionals and SSL experts is available 24/7 via email, live chat and telephone, with an all-hands-on-deck attitude.

Want more benefits? You will be protected from hackers, who are increasingly common and have become a constant security challenge for businesses of all sizes.

According to The SSL Online: “We are Platinum Partners with world's leading SSL certificate authorities. Our goal is to establish an approachable SSL market for everyone who wishes to make web security a priority just as we do.”

Let’s get to work!

Finally, you now know that giving your visitors protection isn't just an act of generosity; it also improves your Google ranking, which might result in higher income. Now that you have heard about the advantages SSL certificates can offer your blog, what are you waiting for?


Text

Version 379

youtube

windows: zip, exe

macOS: app

linux: tar.gz

source: tar.gz

Happy New Year! Although I have been ill, I had a great week, mostly working on a variety of small jobs. Search is faster, there's some new UI, and m4a files are now supported.

search

As hoped, I have completed and extended the search optimisations from v378. Searches for tags, namespaces, wildcards, or known urls, particularly if they are mixed with other search predicates, should now be faster and less prone to spikes in complicated situations. These speed improvements are most significant on large clients with hundreds of thousands or millions of files.