#A Brief History of Intel CPUs


The Rise of AMD: A Look at their Advancements in CPU Technology

In recent years, AMD has been making waves in the tech industry with its innovative advancements in CPU technology. From challenging competitors to pushing the boundaries of performance, AMD has solidified its position as a major player in the market. In this article, we will explore the key advancements that have propelled AMD to the forefront of the industry and examine how they are shaping the future of computing.

Introduction

AMD, short for Advanced Micro Devices, is a semiconductor company known for its cutting-edge CPUs and GPUs. Founded in 1969, AMD has a long history of innovation and has established itself as a formidable competitor to industry giants like Intel and Nvidia. With a focus on pushing the boundaries of performance and efficiency, AMD has consistently delivered products for consumers and professionals alike.

The Evolution of AMD Processors

The Early Days: A Brief History of AMD

In the early days, AMD primarily manufactured second-source microprocessors for companies like Intel. It wasn't until the launch of the K5 processor in 1996 that AMD began to establish itself as a serious contender in the market. The K5 marked the beginning of AMD's journey toward developing its own line of processors that could rival Intel's offerings.

The Athlon Era

In 1999, AMD introduced the Athlon processor, which quickly gained popularity for its strong performance and competitive pricing. The success of the Athlon series laid the foundation for future innovations and firmly established AMD as a major player in the CPU market. AMD later expanded into graphics by acquiring ATI Technologies in 2006, bringing ATI's Radeon graphics cards into its lineup and further solidifying its position as a leading tech company.

Ryzen Revolution

Fast forward to 2017, when AMD launched its Ryzen line of processors based on the Zen architecture. Ryzen CPUs marked a significant leap forward in performance and efficiency, offering consumers a viable alternative to Intel's chips. With features like simultaneous multithreading (SMT) and Precision Boost, Ryzen processors quickly earned a reputation as powerful yet affordable options for gamers and content creators.

Advancements in CPU Technology

Zen 2 Architecture

One of AMD's most significant recent advancements is the Zen 2 architecture. With its CPU cores built on a 7nm process node, Zen 2 offers higher IPC (instructions per cycle) and higher clock speeds than previous generations. This architectural leap has allowed AMD to compete head-to-head with Intel across various market segments.
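A common first-order approximation treats single-thread performance as IPC multiplied by clock frequency, which is why gains on both fronts compound. The sketch below illustrates that arithmetic; the function name and the numbers (a 15% IPC gain, 4.2 GHz to 4.6 GHz) are hypothetical illustrations, not measured Zen 2 figures.

```python
def relative_performance(ipc_ratio: float, old_clock_ghz: float, new_clock_ghz: float) -> float:
    """Estimate new/old single-thread performance as (IPC ratio) x (clock ratio).

    A first-order model only: it ignores memory latency, boost behaviour,
    and workload mix, all of which matter on real CPUs.
    """
    if old_clock_ghz <= 0 or new_clock_ghz <= 0:
        raise ValueError("clock speeds must be positive")
    return ipc_ratio * (new_clock_ghz / old_clock_ghz)


if __name__ == "__main__":
    # Hypothetical example: 15% more IPC and a clock rising from 4.2 to 4.6 GHz.
    speedup = relative_performance(1.15, 4.2, 4.6)
    print(f"Estimated speedup: {speedup:.2f}x")
```

Because the two factors multiply, a modest IPC gain plus a modest clock gain yields a larger combined improvement than either alone.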


Chiplet Design

Another key innovation from AMD is its chiplet design approach, which combines multiple smaller dies, interconnected on a single package, instead of one large die. This modular design allows for greater scalability and efficiency than traditional monolithic designs. Because a small die is less likely to contain a manufacturing defect than a large one, chiplets let AMD optimize performance while avoiding the costs of manufacturing large monolithic dies.
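The cost argument for chiplets can be illustrated with the textbook Poisson yield model, where the fraction of defect-free dies is exp(-D x A) for defect density D and die area A. This is a minimal sketch under stated assumptions: the defect density, die sizes, and chiplet count are hypothetical, not AMD's numbers, and the model ignores packaging, interconnect, and I/O die costs.

```python
import math


def poisson_yield(die_area_mm2: float, defects_per_mm2: float) -> float:
    """Fraction of dies expected to be defect-free under a simple Poisson model."""
    return math.exp(-defects_per_mm2 * die_area_mm2)


def silicon_per_good_product(die_area_mm2: float, dies_per_product: int,
                             defects_per_mm2: float) -> float:
    """Wafer area (mm^2) consumed per working product.

    Assumes each die is tested before packaging, so only known-good dies
    are assembled and a defective die wastes only its own area.
    """
    good_fraction = poisson_yield(die_area_mm2, defects_per_mm2)
    return dies_per_product * die_area_mm2 / good_fraction


if __name__ == "__main__":
    d0 = 0.001  # hypothetical defect density: 0.1 defects per cm^2
    monolithic = silicon_per_good_product(600.0, 1, d0)  # one 600 mm^2 die
    chiplets = silicon_per_good_product(75.0, 8, d0)     # eight 75 mm^2 chiplets
    print(f"Monolithic: {monolithic:.0f} mm^2 of wafer per good part")
    print(f"Chiplets:   {chiplets:.0f} mm^2 of wafer per good part")
```

With the same total silicon per product, the chiplet version wastes far less wafer area, because a defect discards only one small die instead of the whole chip.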

Infinity Fabric Interconnect

One of the key technologies that enables AMD's chiplet design is its Infinity Fabric interconnect.


Check Your CPU for AVX and SSE4 Instruction Sets

Advanced Vector Extensions (AVX) is a powerful extension to the x86 instruction set that was introduced with Intel's Sandy Bridge and AMD's Bulldozer lineups.

AVX was developed to improve the performance of mathematically intensive applications such as scientific simulations, financial analysis, and video editing. It doubles the width of the SIMD registers from 128 bits (used by SSE) to 256 bits, so a single instruction can operate on more data at once. This speeds up such workloads without requiring more cores or higher clock speeds.

AVX offers several benefits for high-performance workstations. With wider registers, developers can perform more calculations per clock cycle, resulting in faster processing times. AVX delivers its full benefit in workloads that require extensive mathematical calculations.
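To make "more calculations per clock cycle" concrete: the number of values one SIMD register holds is the register width divided by the element size, so 256-bit AVX holds eight 32-bit floats where 128-bit SSE holds four. The sketch below does that arithmetic; the helper names are hypothetical, and it counts only arithmetic instructions, ignoring loads, stores, memory bandwidth, and any clock reduction under heavy AVX use.

```python
# Register widths in bits for each SIMD extension discussed above.
REGISTER_BITS = {"SSE": 128, "AVX/AVX2": 256, "AVX-512": 512}


def lanes(register_bits: int, element_bits: int) -> int:
    """Number of elements one SIMD register holds."""
    return register_bits // element_bits


def vector_ops_needed(n_elements: int, register_bits: int, element_bits: int) -> int:
    """Minimum number of vector instructions to process n elements (ceiling division)."""
    per_reg = lanes(register_bits, element_bits)
    return (n_elements + per_reg - 1) // per_reg


if __name__ == "__main__":
    n = 1024  # e.g. adding two arrays of 1,024 single-precision (32-bit) floats
    for name, bits in REGISTER_BITS.items():
        print(f"{name:9} {lanes(bits, 32):2} floats per register, "
              f"{vector_ops_needed(n, bits, 32)} additions for {n} floats")
```

Halving the number of instructions for the same work is where the speedup comes from; real gains are usually smaller because of memory and frequency limits.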

A Brief History of AVX

Intel introduced the first AVX instruction set with its Sandy Bridge processors in Q1 2011. Later that year, AMD launched its Bulldozer lineup, which also supported AVX. AVX2 followed in 2013 with Intel's Haswell processors, and AMD added it with its Excavator lineup in 2015. AVX2 extends most integer operations to 256 bits and was designed to provide further performance improvements over the original AVX instruction set.

In 2016, Intel expanded AVX to 512-bit SIMD registers with AVX-512. However, this feature initially appeared only on high-performance processors such as the Xeon Phi "Knights Landing" line.

List of Processors Supporting AVX and AVX2

Most consumer-grade processors available today support both AVX and AVX2, and some high-performance processors also support AVX-512 for even faster processing. Here is a list of processor families that support AVX and/or AVX2:

AMD

- AMD Jaguar Family 16h (2013)

- AMD Puma Family 16h (2014)

- AMD Bulldozer (2011)

- AMD Piledriver (2012)

- AMD Steamroller (2014)

- AMD Excavator (2015)

- AMD Zen (2017)

- AMD Zen+ (2018)

- AMD Zen 2 (2019)

- AMD Zen 3 (2020)

Intel

- Intel Sandy Bridge (2011)

- Intel Sandy Bridge E (2011)

- Intel Ivy Bridge (2012)

- Intel Ivy Bridge E (2012)

- Intel Haswell (2013)

- Intel Haswell E (2014)

- Intel Broadwell (2014)

- Intel Skylake (2015)

- Intel Broadwell E (2016)

- Intel Kaby Lake (2017)

- Intel Skylake-X (2017)

- Intel Coffee Lake (2017)

- Intel Cannon Lake (2018)

- Intel Whiskey Lake (2018)

- Intel Cascade Lake (2019)

- Intel Ice Lake (2019)

- Intel Comet Lake (2019)

- Intel Tiger Lake (2020)

- Intel Rocket Lake (2021)

- Intel Alder Lake (2021)

- Intel Gracemont (2021)

VIA

- VIA Eden X4

- VIA Nano QuadCore

Zhaoxin

- WuDaoKou (KX-5000 and KH-20000 SKUs)

How to Check Whether Your Processor Supports AVX, AVX2, or SSE4

Hardware monitoring programs can tell you whether your system supports the AVX and AVX2 instruction sets; high-performance server-grade systems may also support AVX-512. Here are some ways to check whether your processor supports AVX, AVX2, or SSE4:

Use CPU-Z

CPU-Z is a free utility that provides detailed information about your processor, motherboard, and memory. It can also show whether your processor supports AVX, AVX2, or SSE4. To check, download and install CPU-Z, and then click on the CPU tab. Under Instructions, you will see the supported instruction sets.

Use Windows System Information

Windows System Information is a built-in tool that provides detailed information about your system hardware and software. To check if your processor supports AVX, AVX2, or SSE4, open Windows System Information by pressing Windows key + R and then typing "msinfo32" and pressing Enter.

In the System Summary section, look for the Processor line, which will show the name and model of your processor. You can then check the specifications of your processor on the manufacturer's website to see if it supports AVX, AVX2, or SSE4.

Use Command Prompt

You can also use the Command Prompt to identify your processor. Open Command Prompt by pressing Windows key + R, typing "cmd", and pressing Enter. In the Command Prompt window, type "wmic cpu get name" and press Enter. (The similar command "wmic cpu get caption" shows only the processor's family, model, and stepping numbers rather than its name.)

This will display the name of your processor. You can then check the specifications of your processor on the manufacturer's website to see if it supports AVX, AVX2, or SSE4.

*Note that WMIC only reports general processor details such as the name. The Win32_Processor class it queries has no property that lists instruction-set extensions, so a command such as "wmic cpu get InstructionSet" will not show AVX, AVX2, or SSE4 support. To check the instruction sets directly from the Command Prompt, you can use Microsoft's free Sysinternals Coreinfo utility instead:

To check if your processor supports AVX

Run "coreinfo" and look for "AVX" in the list of features. If it is marked with an asterisk (*), your processor supports AVX; a dash (-) means it does not.

To check if your processor supports AVX2

Run "coreinfo" and look for "AVX2" in the list of features. If it is marked with an asterisk (*), your processor supports AVX2.

To check if your processor supports SSE4

Run "coreinfo" and look for "SSE4.1" and "SSE4.2" in the list of features. If both are marked with an asterisk (*), your processor supports SSE4.
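The methods above are Windows-oriented. On Linux, the kernel lists CPU feature flags in /proc/cpuinfo, where these extensions appear as sse4_1, sse4_2, avx, and avx2. The sketch below assumes a Linux system and uses a hypothetical helper name; it matches whole flag names so that, for example, "avx2" or "avx512f" is not mistaken for plain "avx".

```python
from pathlib import Path

# Flag names as the Linux kernel reports them in /proc/cpuinfo.
WANTED = ("sse4_1", "sse4_2", "avx", "avx2")


def simd_support(cpuinfo_text: str) -> dict:
    """Return {flag: bool} for each wanted flag, based on the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            flags = set(value.split())  # exact, whole-word matching
            return {name: name in flags for name in WANTED}
    # No 'flags' line (e.g. a non-x86 CPU): report every extension as unsupported.
    return {name: False for name in WANTED}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        for name, ok in simd_support(cpuinfo.read_text()).items():
            print(f"{name:7} {'yes' if ok else 'no'}")
    else:
        print("/proc/cpuinfo not found; this sketch only works on Linux.")
```

The flags reflect what the running kernel reports, so a virtual machine may hide extensions that the physical CPU actually supports.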


How to Get Adobe Flash on Android


The browsers covered here can deliver great results for any gamer and give new meaning to Flash support. Voice command and voice recognition features make Flash games interactive and easy to play. One of them combines strong bookmark-management tools, such as drag and drop, batch delete, import/export, new folders, and synchronization, with add-ons that make it even more powerful.


Using Boat Browser almost feels like using a combination of all the Android browsers. It darkens the screen to save power, downloads and supports any Flash content, saves bandwidth, and removes ads. Instead of swiping left and right the old-fashioned way, you can navigate through Flash games using gestures, tilting and flipping the phone like a book. You can also keep your data synced between devices through Firefox Sync.

Claiming to be one of the best browsers for advanced Android devices, Dolphin Browser incorporates gesture technology and multiple tabs, so you can keep many pages open at the same time. Besides its remarkable support for the Flash player and Flash games, it also offers a great browsing experience. Its light, simple menu and easy-to-navigate layout make it similar to Chrome for Android. If you value sleek designs and simple interfaces, FlashFox is the right Android browser for you.


If you see an "Install blocked" warning screen, tap Settings. Flash is all about viewing multimedia, running web apps, and streaming audio and video.


How great would it be to play free games online in a browser that has a gamepad?

At only US$2, it will truly be worth your while. Next, download Adobe Flash Player 11.1 and open the APK file. Install this software to use content designed on the Adobe Flash platform. With an extremely fast browsing experience, Puffin Browser not only provides Flash support and remote technology but also has a virtual trackpad that makes the whole experience similar to a desktop. It is even more fun thanks to advanced customization options such as changing themes, colors, and backgrounds, and automatically erasing browsing history. Also note that the old Flash 11.1.x plugin from Adobe is only for phones and tablets with an ARMv7/ARMv6 CPU, not the Intel CPUs that some newer devices use instead.


You can download Photon Browser from the Play Store for free or upgrade to the premium package, which has no ads. What distinguishes Photon from the rest is a button that activates Flash support, a much cooler way of separating normal browsing from Flash content. This fast player handles any kind of video browsing and Flash gaming without requiring a separate Flash plugin.


Below is a brief summary of 5 browsers that support Flash content on Android. Users of modern gadgets ask again and again how to install and use Adobe Flash Player on Android 4.2 or the newer 4.3. Knowing which browsers support such online games on Android will teach you a great deal about this gaming platform.

Alternatively, you can install Adobe Flash Player on your Android device manually, but installing a compatible browser is the most convenient option. Well-known online games are now compatible with Android phones, so you can continue your gameplay on the school bus or on your way to work.

Even so, not all browsers support Flash games and Flash players. One fairly common problem faced by Android users is installing Flash Player, which is needed to play Flash content on many sites. If you have one of the latest Android smartphones, Flash actually runs really fast, and you can enjoy all Flash-enabled sites right on your Android smartphone or tablet. If you thought Flash games were limited to computers only, think again.

Q: Why would I want to install Flash Player on my phone?

A: While Flash has been discontinued for many years, there are still many Flash-only sites you can only view with Adobe Flash Player. If your browser supports Flash, there will be a setting for it in the browser settings.

A: Yup, you can use it on any Android smartphone or tablet with Android 5.0, 5.0.1, 5.0.2, or 5.1, and it will probably work on future Android versions too. Unfortunately, my favorite browser, Chrome, does not support it, but you can use browsers like Dolphin, Firefox, and many more. No root is required.

A: Yes, you can use any browser that supports Flash Player. There are actually two solutions depending on which device you have. I've verified that it works fine on my Galaxy Note 4, Note 3, and Galaxy S5.

As many of you know by now, Adobe Flash doesn't support Android 4.1 Jelly Bean, but there is a solution, and it's very easy. This method will work on all Android smartphones and tablets running the latest Android Lollipop (it should also work fine on KitKat). Go to a Flash-enabled site like and verify Flash works.


The History of AMD and ATI, and my History with Them

A Brief History of AMD

AMD is actually the older company in this pair. They incorporated on May 1st, 1969. They started out manufacturing microchips for National Semiconductor and Fairchild Semiconductor. In 1971 they entered the Random Access Memory (RAM) chip industry. In 1981 they entered into a technology exchange with Intel, which led to AMD becoming a second source for Intel's 8088, 8086, and 80286 chips.

In the early 90's AMD and Intel's relationship soured. It started when Intel refused to divulge the 80386 technology. A legal battle ensued, with AMD winning the case in the California Supreme Court. Intel canceled the technology exchange contract, mainly because they wanted to be the sole producer of their x86 line of microprocessors. AMD began focusing on clones of Intel microprocessors. Intel then sued AMD over intellectual property rights to the x86 microcode. AMD solved the problem by creating their own clean-room microcode for x86 execution. They released their AM386, AM486, and AM586 to an eager user base drawn by the lower prices of these chips.

In 1996 AMD released the K5 to compete with Intel's Pentium. The K5 was a RISC microprocessor running an x86 code translation front end. It was more advanced than the Pentium: it had a larger data buffer and was one of the first x86 chips with speculative execution, an advanced technology that is still incorporated in modern microprocessors.

AMD purchased NexGen in 1996 for its Nx-based x86 technology, which led to the AMD K6. The AMD K6 is a completely different microprocessor from the K5. It still used an x86 code translation front end, and added support for MMX instructions. The K6-2 added 3DNow! technology. This extended instruction set aided 3D graphics processing, and seemed to work best with ATI-based GPUs. The K6 and K6-2 competed with the Intel Pentium II.

In 1999 AMD released the AMD K7 Athlon microprocessor. The Athlon used a parallel x86 code translation unit and more processing registers. It supported MMX along with AMD's enhanced 3DNow! instructions, and later Athlon XP models added the SSE instruction set that had shipped with its competitor, the Pentium III.

There are several versions of AMD Athlon microprocessors. In fact, there are even current Zen-based chips bearing the Athlon name. In 2006 AMD purchased ATI. We are going to put a pin in AMD for just a moment and talk about ATI. We will get back to AMD in a bit.

A Brief History of ATI

Array Technologies Incorporated started as a Graphics Processing Unit development company. Founded in 1985, they manufactured OEM (Original Equipment Manufacturer) graphics chips. Their primary customers were IBM and Commodore. By 1987 ATI had grown into an independent GPU manufacturer. ATI's most notable early GPU was the Wonder series, which remained a mainstay in desktop computers until the release of the Mach64 in 1994. The Mach64 led to the 3D Rage, which paired the Mach64 2D rendering engine with elementary 3D acceleration. ATI’s next-generation card, the Rage Pro, was its first GPU able to compete with 3dfx's Voodoo. In 1999 ATI released the Rage 128, which provided DirectX 6 acceleration.

In 1996 ATI entered the mobile 3D acceleration market with the Rage Mobility GPU. In 1997 they purchased Tseng Labs to help with GPU manufacturing. This led to the development of future Rage mobility GPUs, as well as the ATI Radeon GPU that was released in 2000.

The ATI Radeon is a completely new design from the Rage series. It offered DirectX 7.0 2D and 3D acceleration. Later Radeon GPUs use arrays of stream processors (or cores) that allow the designs to scale, similar to Nvidia's CUDA core design.

ATI purchased ArtX, the designer of the Flipper graphics system, in 2000. Flipper, manufactured by NEC, is the graphics chip in the Nintendo GameCube, and a derivative of it powers the Wii. In 2005 Microsoft contacted ATI to develop graphics for the Xbox 360. In 2006 AMD purchased ATI.

Back to AMD

After the acquisition of ATI Technologies, AMD eventually retired the ATI brand name. ATI's GPU development assets are now known as the Radeon Technologies Group. AMD also spun off its chip manufacturing facilities into GlobalFoundries. This turned AMD into an intellectual property, microprocessor, and GPU developer.

In 2011 AMD released its APU mobile microprocessor series. Called the Fusion series, it was a marriage of AMD Athlon mobile CPUs and ATI mobile GPUs. They use an integrated PCI Express link on the SoC (system on a chip) package.

AMD remained a major competitor to Intel and Nvidia until the 2011 release of the AMD FX series. Based on the Bulldozer architecture, it was meant to be AMD's high-powered server and desktop CPU. Bulldozer offered advanced hybrid speculative execution and multi-level branch target buffering, and each of its modules paired two integer cores that shared a single floating-point unit. In 2015 AMD was hit with a false advertising suit alleging that its FX-series "8-core" microprocessors were really 4-core, 8-thread chips. In 2019 AMD settled the suit, offering compensation to Bulldozer owners.

In 2014 AMD appointed Dr. Lisa Su (Ph.D. in electrical engineering) as CEO, which led to the development of Zen. In fact, it has led to a competitive push against Intel and Nvidia. In 2017 AMD released the Ryzen microprocessor, based on the Zen microarchitecture. Zen wasn't quite enough to beat Intel's CPUs of the time, but it was comparable, and with its lower prices Ryzen became popular. In 2019 AMD released the Zen 2 architecture with the Ryzen 3000 series CPUs. This year (2020) AMD released a series of mobile APUs based on the Zen 2 architecture. The Zen 2 CPUs and APUs match or outperform Intel's 9th- and 10th-gen CPUs and APUs at a much lower price.

AMD has begun to put pressure on Nvidia with Navi and Big Navi. Both use next-generation technology, and Big Navi will compete with the Nvidia RTX 3000 series.

My History with ATI and AMD

I have had several AMD (ATI) GPUs, but I don't really know which models they were. For the most part, as an ex-Mac user, I chose systems based on my use case and ignored the specs. The exception was the PowerMac 8500, which got a complete overhaul. I did make some upgrades to the other Macs, like adding RAM, and in the case of my Mac Pro I upgraded the CPUs. So there are only a couple of GPUs (on the Macs) and a CPU of note.

My first ATI GPU that I actively chose was a Rage 128 PCI for Mac. I purchased it in 1998. At the time I was trying to get a few more years out of my PowerMac 8500. I upgraded the RAM, hard drive, and added a Sonnet Technologies Crescendo G3 500, and I installed the Rage 128 PCI. I used this upgraded rig until 2003 when I replaced it with a new PowerMac G4.

In early 2007 I purchased a Mac Pro for my master's-level video production classes. I didn't feel the GeForce 7300 GT was powerful enough for on-the-fly rendering, so I bought the Radeon HD 5870, whose much larger 1 GB of video RAM made video editing faster and more efficient.

In 2019 I decided to rebuild my workstation PC, and move away from Intel CPUs. I purchased an AMD Ryzen 9 3900X as my workstation CPU. The CPU is a video encoding and raw data processing beast. It is a real trooper.

In 2020 I purchased an eGPU from Sonnet Technologies called the eGFX Breakaway Puck. The model I purchased is the Radeon RX560. I use it with my 2017 HP Spectre X360. It works great. You can read my review here (https://waa.ai/e6Ui).

Next year (2021) I will likely buy an AMD-powered laptop. This is an attempt to move away from the Spectre and Meltdown vulnerabilities that plague Intel CPUs.

Conclusion

It's easy to say that AMD and ATI have helped shape the modern computer. Whether or not your computer has AMD parts, their competition pushed Intel, ARM, and Nvidia. Computers would likely have been different without them.


A Brief History of Intel CPUs, Part 2: Pentium II Through Comet Lake


In Part 1 of this guide, we discussed the various Intel CPUs from the beginning of the company through the Pentium Pro. Before we dive into the rest of Intel’s CPU history, and in celebration of the 40th anniversary of the 8086, let’s take a moment to further discuss the Pentium Pro. In a very real sense, the PPro is the core that revolutionized the x86 architecture and Intel’s microprocessor design, and it can be thought of as the “father” from which modern CPUs are descended (the original 8086 itself, in this context, is more of a grandfather).

The Pentium Pro was, in many ways, a true watershed of CPU design. Up until its debut, x86 CPUs executed programs in the order in which program instructions were received. This meant that performance optimizations relied heavily on the expertise of the programmer in question. Instruction caches and pipelines improved performance in hardware, but neither of these technologies changed the order in which instructions were executed. Not only did the Pentium Pro implement out-of-order execution, which improves performance by allowing the CPU to reorder instructions for optimal execution, it also began the now-standard practice of decoding x86 instructions into RISC-like micro-ops for more efficient execution. While Intel didn’t invent either capability out of whole cloth, it took a substantial risk when it built the Pentium Pro around them. Needless to say, that risk paid off.
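The benefit of reordering can be seen in a deliberately tiny model. This is a toy sketch under stated assumptions (one instruction issued per cycle, fully pipelined execution units, every register written only once so no register renaming is needed, producers listed before consumers); it does not model the Pentium Pro's actual reservation stations, reorder buffer, or in-order retirement, and the instruction names and latencies are hypothetical.

```python
from dataclasses import dataclass

INF = float("inf")

@dataclass
class Instr:
    dest: str       # register this instruction writes (assumed unique per program)
    srcs: tuple     # registers it reads
    latency: int    # cycles until its result is usable

def schedule(program, out_of_order):
    """Return the cycle at which the last result becomes available.

    Toy single-issue model: at most one instruction starts per cycle and
    execution units are fully pipelined. Assumes producers appear before
    consumers and each destination is written once (no WAR/WAW hazards).
    """
    # Values produced inside the program are unavailable until computed;
    # any other register (e.g. "p", "x") counts as ready at cycle 0.
    ready_at = {ins.dest: INF for ins in program}
    pending = list(program)
    cycle = 0
    finish = 0
    while pending:
        # In-order hardware may only consider the oldest waiting instruction;
        # out-of-order hardware may pick any waiting instruction whose inputs are ready.
        window = pending if out_of_order else pending[:1]
        for ins in window:
            if all(ready_at.get(r, 0) <= cycle for r in ins.srcs):
                pending.remove(ins)
                ready_at[ins.dest] = cycle + ins.latency
                finish = max(finish, ready_at[ins.dest])
                break  # single issue: at most one instruction per cycle
        cycle += 1
    return finish

# Hypothetical program: a slow load followed by one dependent and two independent ops.
program = [
    Instr("a", ("p",), 3),  # a = mem[p], a 3-cycle load
    Instr("b", ("a",), 1),  # b = a + 1, must wait for the load
    Instr("c", ("x",), 1),  # c = x * 2, independent of the load
    Instr("d", ("y",), 1),  # d = y - 1, independent of the load
]

print(schedule(program, out_of_order=False))  # 6
print(schedule(program, out_of_order=True))   # 4
```

The independent instructions `c` and `d` fill the cycles that the in-order machine spends stalled behind the slow load, which is the kind of idle time out-of-order hardware recovers.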

The Pentium Pro’s P6 microarchitecture would be used for the Pentium II and III (all forms) before being replaced by the Pentium 4 “Netburst” — at least for a little while. It resurfaced with the Pentium M (Intel’s mobile CPU family) and Core 2 Duo family. Modern Intel CPUs are still considered to have descended from the Pentium Pro, despite the numerous architectural revisions between then and now. The original Pentium Pro, however, didn’t perform particularly well with 16-bit legacy code and was therefore mostly restricted to Intel’s workstation and server product families. The Pentium II was intended to change that — so let’s pick up our history from there.

To trace the history of Intel CPU cores is to trace the history of various epochs in the evolution of CPU performance. In the 1980s and 1990s, clock speed improvements and architectural enhancements went hand in hand. From 2005 forward, it was the era of multi-core chips and higher efficiency parts. Since 2011, Intel has focused on improving the performance of its low power CPUs more than other capabilities. This focus has paid real dividends — laptops today have far better battery life and overall performance than they did a decade ago.

Compare the Core i7-10810U with the Core i7-2677M to see what we mean. The Sandy Bridge-era CPU had a maximum clock of 2.9GHz, supported just 8GB of RAM (not shown), and offered one-third the cores and L3 cache of the modern Comet Lake SKU. Overall mobile performance per watt has improved dramatically. At the same time, Intel faces real challenges, both from AMD and ARM. We’ve compared against the Core i7-10810U rather than the Ice Lake-based Core i7-1065G7 because the 10nm ICL CPUs are balanced differently between CPU and GPU, which makes the straight-line CPU improvements harder to see.

But regardless of what the future holds, Intel CPUs have driven consistent performance improvements over the past four decades, revolutionizing the personal computer in the process. We hope you’ve enjoyed the trip down memory lane.


from ExtremeTech https://www.extremetech.com/computing/271105-a-brief-history-of-intel-cpus-part-2-pentium-ii-through-coffee-lake

from Blogger http://componentplanet.blogspot.com/2020/06/a-brief-history-of-intel-cpus-part-2.html


Google Hires Intel CPU Expert, Doubles Down on SoCs as the “New Motherboard”

Google is slowly building its reputation in custom hardware development. While a design engineer may not be able to buy a standalone IC from Google, the company has built its own cloud compute and server infrastructure largely in-house with many ASICs in its repertoire.


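The company made hardware headlines this week when it announced that it had hired Uri Frank, a CPU design expert from Intel, as its new VP of engineering for server chip design. The new team headed by Frank will be headquartered in Israel.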

What exactly is Google’s history in custom hardware? And what does this new hire tell us about Google's plans for future integration?

A Brief History of Google Hardware

One of Google’s first big achievements in custom hardware was its Tensor Processing Unit (TPU).

The TPU is an ASIC designed specifically for neural network acceleration and is used in Google’s data centers, powering many well-known applications such as Street View, RankBrain, and AlphaGo. At the time of its release, the TPU provided an order-of-magnitude improvement in performance per watt for ML applications, according to Google.

Google's TPU board.

Another hardware endeavor for Google was OpenTitan, an open-source silicon root-of-trust project built on Google’s Titan chip.

As explained by AAC contributor Cabe Atwell, , which served to create secure ICs for data center applications. Central to the platform is the fact that it is open-source, which may help identify and iron out security vulnerabilities in the OpenTitan ICs.

Last year also brought reports that including an 8-core Arm processor on 5nm technology, to eventually power Pixel smartphones and even Chromebooks.

“The SoC Is the New Motherboard”

In Google's most recent announcement on Uri Frank's new position, the tech giant also offered a window into the direction of its custom hardware.

Uri Frank, Google's new VP of engineering for server chip design.

“To date, the motherboard has been our integration point, where we compose CPUs, networking, storage devices, custom accelerators, memory, all from different vendors, into an optimized system," the press release reads.

"But that’s no longer sufficient: to gain higher performance and to use less power, our workloads demand even deeper integration into the underlying hardware.” To meet these demands, Google has decided that high levels of integration, specifically in the form of SoCs, is the future of its hardware.

Instead of connecting individual ICs via traces on a board, SoCs provide significant improvements in latency, bandwidth, power, and cost while eliminating off-chip parasitics. It's for these reasons that Google is banking on integration—where all peripheral and interconnected ICs are brought together on one SoC. For Google, this means “the SoC is the new motherboard.”

Google Joins the Trend of In-house Processors

Google isn't the first tech giant to make the dive into in-house processors. In the past few years, . Facebook has also to fortify its AI technology. More recently, Apple took a step back from Intel silicon altogether to .

Now, with Google's selection of Frank—a CPU design expert—the company plans to build a chip design team in Israel, where Google has found past success in innovating products like Waze, Call Screen, and Velostrata’s cloud migration tools.


Neuromorphic computing: The long path from roots to real life

This article is part of the Technology Insight series, made possible with funding from Intel.

Ten years ago, the question was whether software and hardware could be made to work more like a biological brain, including incredible power efficiency. Today, that question has been answered with a resounding “yes.” The challenge now is for the industry to capitalize on its history in neuromorphic technology development and answer tomorrow’s pressing, even life-or-death, computing challenges.

KEY POINTS

Industry partnerships and proto-benchmarks are helping advance decades of research towards practical applications in real-time computer vision, speech recognition, IoT, autonomous vehicles and robotics.

Neuromorphic computing will likely complement CPU, GPU, and FPGA technologies for certain tasks — such as learning, searching and sensing — with extremely low power and high efficiency.

Forecasts for commercial sales vary widely, with projected CAGRs of 12% to 50% through 2028.

From potential to practical

In July, the Department of Energy’s Oak Ridge National Laboratory hosted its third annual International Conference on Neuromorphic Systems (ICONS). The three-day virtual event offered sessions from researchers around the world. All told, the conference had 234 attendees, nearly double the previous year. The final paper, “Modeling Epidemic Spread with Spike-based Models,” explored using neuromorphic computing to slow infection in vulnerable populations. At a time when better, more accurate models could guide national policies and save untold thousands of lives, such work could be crucial.

Above: Virtual attendees at the 2020 ICONS neuromorphic conference, hosted by the U.S. Department of Energy’s Oak Ridge National Laboratory.

ICONS represents a technology and surrounding ecosystem still in its infancy. Researchers laud neuromorphic computing’s potential, but most advances to date have occurred in academic, government and private R&D laboratories. That appears to be ready to change.

Sheer Analytics & Insights estimates that the worldwide market for neuromorphic computing in 2020 will be a modest $29.9 million, growing at a 50.3% CAGR to $780 million over the next eight years. (Note that a 2018 KBV Research report forecast an 18.3% CAGR to $3.7 billion in 2023. Mordor Intelligence aimed lower, with $111 million in 2019 and a 12% CAGR to reach $366 million by 2025.) Clearly, forecasts vary, but big growth seems likely. Major players include Intel, IBM, Samsung, and Qualcomm.
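These forecasts are compound annual growth rate (CAGR) projections. A short sketch to sanity-check the Sheer Analytics figures, assuming simple annual compounding from the 2020 base over eight years (the helper names are illustrative, not from any report):

```python
def project(start, cagr, years):
    """Value after compounding `start` at annual rate `cagr` for `years` years."""
    return start * (1 + cagr) ** years

def implied_cagr(start, end, years):
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1

# Sheer Analytics & Insights figures quoted above (USD millions, 2020 to 2028)
print(round(project(29.9, 0.503, 8)))        # 779, consistent with "$780 million"
print(round(implied_cagr(29.9, 780, 8), 3))  # 0.503, i.e. about 50.3% per year
```

Compounding is what makes a 50% rate so dramatic: the market multiplies roughly 26-fold over eight years rather than growing by 8 × 50% = 400%.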

Researchers are still working out where practical neuromorphic computing should go first. Vision and speech recognition are likely candidates. Autonomous vehicles could also benefit from human-like learning without human-like distraction or cognitive errors. Internet of Things (IoT) opportunities range from the factory floor to the battlefield. To be sure, neuromorphic computing will not replace modern CPUs and GPUs. Rather, the two types of computing approaches will be complementary, each suited for its own sorts of algorithms and applications.

Familiarity with neuromorphic computing’s roots, and where it’s headed, is useful for understanding next-generation computing challenges and opportunities. Here’s the brief version.

Inspiration: Spiking and synapses

Neuromorphic computing began as the pursuit of using analog circuits to mimic the synaptic structures found in brains. The brain excels at picking out patterns from noise and learning. A conventional CPU excels at processing discrete, clear data.

For that reason, many believe neuromorphic computing can unlock applications and solve large-scale problems that have stymied conventional computing systems for decades. One big issue is that von Neumann architecture-based processors must wait for data to move in and out of system memory. Cache structures help mitigate some of this delay, but the data bottleneck grows more pronounced as chips get faster. Neuromorphic processors, on the other hand, aim to provide vastly more power-efficient operation by modeling the core workings of the brain.

Neurons send information to one another in pulse patterns called spikes. The timing of these spikes is critical; their amplitude is not. Timing itself conveys information. Digitally, a spike can be represented as a single bit, which can be much more efficient and far less power-intensive than conventional data communication methods. Understanding and modeling of this spiking neural activity arose in the 1950s, but hardware-based application to computing didn’t start to take off for another five decades.
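The spike idea can be illustrated with a leaky integrate-and-fire neuron, a standard simplified spiking model. This is a minimal sketch, not the neuron model of any particular chip discussed here; the threshold and leak values are arbitrary assumptions chosen for illustration. Each time step produces a single bit, and a stronger input makes the neuron fire sooner and more often, so the information is carried by when the 1s appear rather than by their size.

```python
def lif_spike_train(input_current, steps, threshold=1.0, leak=0.9):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    Returns one bit per time step: 1 if the neuron spiked, else 0.
    `threshold` and `leak` are illustrative values, not taken from hardware.
    """
    v = 0.0                            # membrane potential
    spikes = []
    for _ in range(steps):
        v = leak * v + input_current   # decay toward rest, then add input
        if v >= threshold:
            spikes.append(1)           # fire: the spike is just a single bit
            v = 0.0                    # reset after firing
        else:
            spikes.append(0)
    return spikes

def first_spike_time(spikes):
    """Time step of the first spike, or None if the neuron never fired."""
    return spikes.index(1) if 1 in spikes else None

print(lif_spike_train(0.5, 10))                     # [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
print(first_spike_time(lif_spike_train(0.3, 10)))   # 3: weaker input, later spike
print(first_spike_time(lif_spike_train(0.05, 50)))  # None: never reaches threshold
```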

DARPA kicks off a productive decade

In 2008, the U.S. Defense Advanced Research Projects Agency (DARPA) launched a program called Systems of Neuromorphic Adaptive Plastic Scalable Electronics, or SyNAPSE, “to develop low-power electronic neuromorphic computers that scale to biological levels.” The project’s first phase was to develop nanometer-scale synapses that mimicked synapse activity in the brain but would function in a microcircuit-based architecture. Two competing private organizations, each backed by its own collection of academic partners, won the SyNAPSE contract in 2009: IBM Research and HRL Laboratories, which is owned by GM and Boeing.

In 2014, IBM revealed the fruits of its labors in Science, stating, “We built a 5.4-billion-transistor chip [called TrueNorth] with 4096 neurosynaptic cores interconnected via an intrachip network that integrates 1 million programmable spiking neurons and 256 million configurable synapses. … With 400-pixel-by-240-pixel video input at 30 frames per second, the chip consumes 63 milliwatts.”
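The round numbers in IBM's description follow from the chip's core count. A quick arithmetic check, assuming the commonly cited TrueNorth core layout of 256 neurons fed by 256 input axons through a 256 × 256 synaptic crossbar (these per-core figures are an assumption, not stated in the quote above):

```python
CORES = 4096               # from the quote above
NEURONS_PER_CORE = 256     # assumption: commonly cited TrueNorth core size
AXONS_PER_CORE = 256       # assumption: each core's crossbar is 256 axons x 256 neurons
POWER_W = 0.063            # 63 milliwatts, from the quote
FPS = 30                   # 400x240 video at 30 frames per second, from the quote

def truenorth_totals(cores=CORES, neurons=NEURONS_PER_CORE, axons=AXONS_PER_CORE):
    """Return (total neurons, total synapses) for a crossbar-per-core design."""
    total_neurons = cores * neurons
    # Every axon-neuron crossing point in a core's crossbar is one synapse.
    total_synapses = cores * axons * neurons
    return total_neurons, total_synapses

neurons, synapses = truenorth_totals()
print(neurons)                                    # 1048576, i.e. 2**20 ("1 million")
print(synapses)                                   # 268435456, i.e. 256 * 2**20 ("256 million")
print(f"{POWER_W / FPS * 1000:.1f} mJ per frame")  # 2.1 mJ per frame
```

Under these assumptions, the "1 million" and "256 million" figures are binary millions (multiples of 2**20), and the quoted power works out to roughly 2.1 millijoules per video frame.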

Above: A 4×4 array of neuromorphic chips, released as part of DARPA’s SyNAPSE project. Designed by IBM, the chip has over 5 billion transistors and more than 250 million “synapses.”

By 2011, HRL announced it had demonstrated its first “memristor” array, a form of non-volatile memory storage that could be applied to neuromorphic computing. Two years later, HRL had its first neuromorphic chip, “Surfrider.” As reported by MIT Technology Review, Surfrider featured 576 neurons and ran on just 50 mW of power. Researchers built the chip into a sub-100-gram drone aircraft equipped with optical, infrared, and ultrasound sensors and sent the drone into three rooms. The drone “learned” the layout and objects of the first room through sensory input. From there, it could “learn on the fly” when it entered a new room, or recognize a room it had already visited.

Above: HRL’s 2014 neuromorphic-driven quadcopter drone.

Other notable research included Stanford University’s 2009 analog, synaptic approach called NeuroGrid. Until 2015, the EU funded the BrainScaleS project, which yielded a 200,000-neuron system based on 20 systems. The University of Manchester worked to tackle neural algorithms on low-power hardware with its Spiking Neural Network Architecture (SpiNNaker) supercomputer, built from 57,600 processing nodes, each containing eighteen 200 MHz ARM9 cores. The SpiNNaker project spotlights a particularly critical problem in this space: despite using low-power ARM processors, the system still spans 10 rack-mounted blade enclosures and requires roughly 100 kW to operate. Learning systems in edge-based applications don’t have the luxury of such power budgets.

Intel’s wide influence

Intel Labs set to work on its own lines of neuromorphic inquiry in 2011. While working through a series of acquisitions around AI processing, Intel made a critical talent hire in Narayan Srinivasa, who came aboard in early 2016 as Intel Labs’ chief scientist and senior principal engineer for neuromorphic computing. Srinivasa spent 17 years at HRL Laboratories, where (among many other roles and efforts) he served as the principal scientist and director of the SyNAPSE project. Highlights of Intel’s dive into neuromorphic computing included the evolution of Intel’s Loihi, a neuromorphic manycore processor with on-chip learning, and follow-on platform iterations such as Pohoiki Beach.

Above: A close-up of Intel’s Nahuku board, which contains 8 to 32 Loihi neuromorphic chips. Intel’s latest neuromorphic system, Pohoiki Beach, is made up of multiple Nahuku boards and contains 64 Loihi chips. Pohoiki Beach was introduced in July 2019.

The company also formed the Intel Neuromorphic Research Community (INRC), a global effort to accelerate development and adoption that includes Accenture, Airbus, GE, and Hitachi among its more than 100 global members. Intel says it’s important to focus on creating new neuromorphic chip technologies and a broad public/private ecosystem. The latter is backed by programming tools and best practices aimed at getting neuromorphic technologies adopted and into mainstream use.

Srinivasa is also CTO at Eta Compute, a Los Angeles, CA-based company that specializes in helping proliferate intelligent edge devices. Eta showcases how neuro-centric computing is beginning to penetrate into the market. While not based on Loihi or another neuromorphic chip technology, Eta’s current system-on-chip (SoC) targets vision and AI applications in edge devices with operating frequencies up to 100 MHz, a sub-1μA sleep mode, and running operation of sub-5μA per MHz. In practice, Eta’s solution can perform all the computation necessary to count people in a video feed on a power budget of just 5mW. The other side of Eta’s business works to enable machine learning software for this breed of ultra-low-power IoT and edge device — a place where neuromorphic chips will soon thrive.

In a similar vein, Canadian firm Applied Brain Research (ABR) also creates software tools for building neural systems. The company, which has roots in the University of Waterloo as well as INRC collaborations, also offers its Nengo Brain Board, billed as the industry’s first commercially available neuromorphic board platform. According to ABR, “To make neuromorphics easy and fast to deploy at scale, beyond our currently available Nengo Brain Boards, we’re developing larger and more capable versions with researchers at the University of Waterloo, which target larger off-the-shelf Intel and Xilinx FPGAs. This will provide a quick route to get the benefits of neuromorphic computing sooner rather than later.” Developing the software tools for easy, flexible neuromorphic applications now will make it much easier to incorporate neuromorphic processors when they become broadly available in the near to intermediate future.

These ABR efforts in 2020 exist, in part, because of prior work the company did with Intel. As one of the earliest INRC members, ABR presented work in late 2018 using Loihi to perform audio keyword spotting. ABR revealed that “for real-time streaming data inference applications, Loihi may provide better energy efficiency than conventional architectures by a factor of 2 times to over 50 times, depending on the architecture.” These conventional architectures included a CPU, a GPU, NVIDIA’s Jetson TX1, and the Movidius Neural Compute Stick, with the Loihi solution “[outperforming] all of these alternatives on an energy cost per inference basis while maintaining equivalent inference accuracy.” Two years later, this work continues to bear fruit in ABR’s current offerings and future plans.

Benchmarks and neuromorphic’s future

Today, most neuromorphic computing work is done with deep learning systems running on CPUs, GPUs, and FPGAs. None of these is optimized for neuromorphic processing, however. Chips such as Intel’s Loihi were designed from the ground up for exactly these tasks. This is why, as ABR showed, Loihi could achieve the same results with a far smaller energy budget. This efficiency will prove critical in the coming generation of small devices needing AI capabilities.

Many experts believe commercial applications will arrive in earnest within the next three to five years, but that will only be the beginning. This is why, for example, Samsung announced in 2019 that it would expand its neural processing unit (NPU) division tenfold, growing from 200 employees to 2,000 by 2030. Samsung said at the time that it expects the neuromorphic chip market to grow by 52 percent annually through 2023.

One of the next challenges in the neuromorphic space will be defining standard workloads and methodologies for benchmarking. Benchmarking applications such as 3DMark and SPECint have played a critical role in helping technology adopters match products to their needs. Unfortunately, as discussed in the September 2019 issue of Nature Machine Intelligence, there are no such benchmarks in the neuromorphic space, although author Mike Davies of Intel Labs suggests a spiking neuromorphic benchmark suite called SpikeMark. In a technical paper titled “Benchmarking Physical Performance of Neural Inference Circuits,” Intel researchers Dmitri Nikonov and Ian Young lay out a set of principles and a methodology for neuromorphic benchmarking.

To date, no convenient testing tool has come to market, although Intel Labs Day 2020 in early December took some big steps in this direction. Intel compared, for example, Loihi against its Core i7-9300K in processing “Sudoku solver” problems. As the image below shows, Loihi achieved up to 100x faster searching.

Researchers saw a similar 100x gain with Latin squares solving and achieved solutions with remarkably lower power consumption. Perhaps the most important result was how different types of processors performed against Loihi for certain workloads.

Loihi was pitted not only against conventional processors but also against IBM’s TrueNorth neuromorphic chip. Deep learning feedforward neural networks (DNNs) decidedly underperform on neuromorphic solutions like Loihi. DNNs are strictly one-directional, with data moving from input to output in a straight line. Recurrent neural networks (RNNs) work more like the brain, using feedback loops and exhibiting more dynamic behavior. RNN workloads are where Loihi shines. As Intel noted: “The more bio-inspired properties we find in these networks, typically, the better the results are.”
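The feedforward/recurrent distinction can be made concrete with two single-unit toy functions. This is an illustrative sketch only: the weights `w` and `u` are arbitrary hypothetical values, and real DNNs and RNNs have many units and learned weights. The feedforward unit always maps the same input to the same output, while the recurrent unit's feedback term makes its output depend on what it saw before.

```python
import math

def feedforward(x, w=0.8):
    """One ReLU unit: the output depends only on the current input."""
    return max(0.0, w * x)

def recurrent(xs, w=0.8, u=0.5):
    """One tanh unit whose previous output feeds back as extra input."""
    h = 0.0                            # hidden state carried between steps
    outputs = []
    for x in xs:
        h = math.tanh(w * x + u * h)   # the u * h term is the feedback loop
        outputs.append(h)
    return outputs

inputs = [1.0, 1.0, 1.0]
print([feedforward(x) for x in inputs])  # [0.8, 0.8, 0.8]: same input, same output
print(recurrent(inputs))                 # rises step to step despite identical inputs
```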

The above examples can be thought of as proto-benchmarks. They are a necessary, early step towards a universally accepted tool running industry-standard workloads. Testing gaps will eventually be filled, and new applications and use cases will arrive. Developers will continue working to deploy these benchmarks and applications against critical needs, like COVID-19.

Neuromorphic computing remains deep in the R&D stage. Today, there are virtually no commercial offerings in the field. Still, it’s becoming clear that certain applications are well suited to neuromorphic computing. Neuromorphic processors will be far faster and more power-efficient for these workloads than any modern, conventional alternatives. CPU and GPU computing isn’t disappearing; neuromorphic computing will merely slot in beside them to handle roles better, faster, and more efficiently than anything we’ve seen before.


A Brief History of Intel CPUs, Part 1: The 4004 to the Pentium Pro

To celebrate the 42nd anniversary of the 8086 and the debut of the x86 architecture, we’re bumping our previous retrospectives on some of Intel’s most important CPU designs. In this article, we’ve rounded up the first decades of history, from the 4004 in 1971 to the Pentium Pro in 1995. This period covers the first two eras of Moore’s Law (a concept we’ve discussed elsewhere): the first, in which discrete capabilities were rapidly integrated onto a single chip, and the second, in which microprocessor transistor counts and clock speeds continued to rise.

Today, x86 chips are the backbone of modern computing. ARM may dominate the smartphone industry, but the cloud-based services and platforms that smartphones rely on are sitting in data centers running on x86-based hardware. What’s surprising, looking back, is that no one at Intel had even an inkling that this was going to take place. Intel had sunk its hopes and dreams into the iAPX 432, a 32-bit microprocessor with a radically different design than anything the company had tried before. Early sales of the 8086 and 8088 weren’t very strong, since the entire computer market was facing something of a hardware glut. Intel’s Operation Crush, an aggressive marketing and support effort around the 8086, helped change that and caught IBM’s attention in the process.

Enter IBM. When Big Blue decided to build its first PC, it narrowed the field to three choices: Motorola’s 68000, the Intel 8086, and the Intel 8088. Because the 8088 and 8086 were compatible with each other, it ultimately didn’t matter which Intel CPU IBM picked. IBM was more familiar with Intel than with Motorola, and Microsoft had a BASIC interpreter with x86 support already baked in. If IBM had gone the other way, we might well be sitting here talking about the rise of “Motosoft” instead of “Mintel.” IBM’s decision to back Intel shaped the future of computing, and Intel’s future processors. Over the next few years, OEMs like Compaq brought new systems to market, powered by new, more advanced x86 CPUs.

Below, we’ll discuss the next series of Intel CPUs, starting with the 80286 and running through the Pentium Pro. The 80186 technically existed, but it was primarily used as an embedded microcontroller rather than as a PC CPU (with a bare handful of exceptions). For most buyers, the line of succession jumped from the 8086/8088 to the 80286.

The chips from the 8086 through the Pentium can arguably be grouped as a single family of products, albeit a family that evolved enormously in less than 20 years. All of these chips executed native x86 instructions using what we now call in-order execution (before the invention of out-of-order execution, we just called this “execution”). Intel rose to dominate the personal computing market on the strength of these cores. In October 1985, the fastest 80386DX was clocked at 12MHz. By June of 1995, the Pentium 133 was on sale. That is more than a 10x increase in clock speed in just a decade, on top of all the architectural improvements.

By this point, Intel had already largely conquered the personal computer market and begun making early inroads into the workstation and data center spaces, but the bulk of the market still belonged to various RISC architectures backed by entrenched players like Sun, MIPS, and HP. Intel wanted to expand into data centers and professional workstations, but to do that it needed a CPU architecture that would allow it to compete against these high-end workstation chips on absolute performance. Intel had added manufacturing capacity through the 1980s and 1990s, and any new chip needed to do more than simply boost performance — it needed to be a CPU that could leverage Intel’s growing economies of scale.

The Pentium Pro and its descendants were that CPU. We’ll discuss how they evolved — and the features they brought to market — in Part 2.


from ExtremeTech https://www.extremetech.com/computing/270933-a-brief-history-of-intel-cpus-part-1-the-4004-to-the-pentium-pro

from Blogger http://componentplanet.blogspot.com/2020/06/a-brief-history-of-intel-cpus-part-1.html


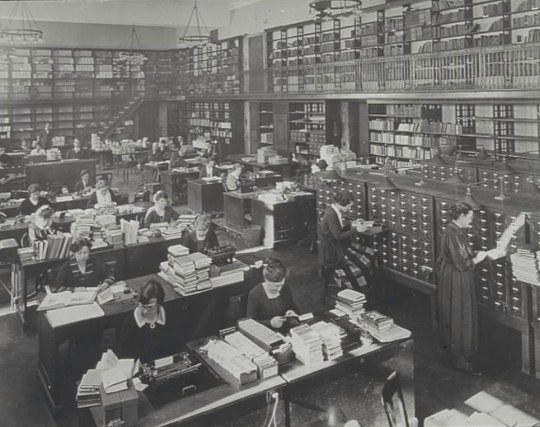

The Picture

Browsing Reddit, I stumbled upon this image of Room 100 of the Central Building in the New York Public Library. Its catalog details are undated, but the clothes, hairdos and office equipment seem to place the moment in the teens of the 20th century.

My New York chauvinism presumes this was state of the art in the go-go days of early modernity. Room 100 would have been the library’s brain: the system’s central processing unit, with its card catalog indexing a vast database of manuscripts, objects and books.

I count thirteen women and six men, a healthy two-to-one ratio. Anecdotally, this suggests that the early days of information management system culture were dominated by female knowledge workers. Then I see a guy, that stern-looking guy in the back, his hands clasped behind him in the classic pose of ready reproach. This is the boss. Some things in business change more slowly than others, and male dominance of the management class is, infuriatingly, one of them.

We Were Warned

Aldous Huxley, writing in 1932, imagined his Brave New World of techno-dystopia as a huge card catalog index. He might have guessed wrong on the storage media, but his prediction betrays an insight: library science in the early 20th century was the innocent precursor of the Wild West information economy we inhabit today.

So, how did we get from that mostly girl-powered Room 100 to the brave new marketplace of facial recognition and black-box algos? In less than a hundred years we galloped from an open-access, community-financed, human value-add information culture to something Huxley basically nailed. It is a violent chaos of data energized by the cold logic of value extraction: a surveillance economy.

Boys and Their Toys

History’s short, brutal arc from our image above to the touch screen you’re staring at this very moment has a defining characteristic: it is phallo-technic. An insurgent whiff of testosterone infuses every leap beyond the analog. Turing’s machine thinking starts sparking early innovations like Colossus, the Allies’ mighty code-breaking war machine. From there, the guys blast genius paths to stored memory on tubes instead of cards, and by the early 70s they had come up with the microprocessor. Big businesses formed around these breakthroughs, with companies like Sperry Univac, IBM and Intel all laying claim to the emerging frontiers of cutting-edge tech. Then the 70s happened, and the soon-to-be unholy trinity of business, government and academia spawned the defining product Eisenhower warned us about: the ARPANET, and Messrs. Cerf and Kahn’s development of TCP/IP. Recognizing early that they had a naming issue, somebody in the vast military-industrial complex had the branding smarts to re-christen their new everywhere machine the Internet.

The battle matrix for humans versus machines was locked and loaded. If only there were a simple way to connect it all. Thinking through what regular people might be able to do with all those connected servers, Tim Berners-Lee came up with the protocol for the World Wide Web. The lowly but lovely browser was born and released into the early 1990s world with the promise of a “free and open” web for the world.

And so began the most fertile, frenzied fifteen business years the planet has ever known. A handful of guys (you guessed it) puttered their way from garage labs to an almost total takeover of the Web. Thus was today born: a state of human affairs defined dystopically by the FAANG-ilization of everything. Huxley, dude – how did you know?

Theft as Gift

If there’s a key to understanding how we got here, it’s the human data theft at the heart of our devil’s bargain with the FAANG/BATs* (*adding the emerging Chinese tech conglomerates Baidu, Alibaba and Tencent). The theft lies in the imbalanced exchange of our data for their “free” services, utility and content. A brilliant if insidious deception sealed the swindle: what felt like newfound control turned out to be full-frontal surrender.

This human data theft premises, privileges and permits the dominant platforms to treat this new connected culture lab as their shareholder piggy bank. Around the clock, they ring up billions in $s, ¥s and €s through a super-efficient violence of value extraction by design.

Capital’s OS

There’s nothing new about the insight connecting the human male with aggression. The history of everything – science, philosophy, art, sport, invention – has always been told through the muscular narrative of men on the march. Business and its platform of capital are perhaps even more so. But the 20th century’s technological advances, along with their owners, sponsors and profiteers, were definingly male, fueled by states, their militaries and compliant academic accomplices.

Marx, for all his prescient insight into how capital morphs and snakes through a social system, couldn’t have predicted such omnipotence. No one (if only Huxley’s insight had been more economic) could have imagined how capital might behave when unleashed across the digitally collapsed frontiers of nations, markets and human autonomy itself.

But here in the teen years of the 21st century, capital has found its most perfect operating system. That system is essentially owned and operated by the FAANG/BATs. They have finally exceeded states’ power to regulate them by cutting the states in on the deal. The merits seemed obvious, for a few cycles. But now the darker downsides unsettle us, even if the seeming benefits mute them. All we have to do is lean forward, accept the terms of service and enjoy the pleasing haze of surrender. Or not.

Fork You

One of my more unlikely sources of insight is Audre Lorde. In the late 70s, in her call to arms for a broader reset of culture, politics and governance, she suggested that “the master’s tools will never dismantle the master’s house”. Lorde added a caveat to her provocation – “in the long run, anyway…”. I take her qualification as inspiration for an alternative path to our shared futures together.

Lorde understood that, in the near term, sometimes the fastest and most efficient way to ignite change was to borrow from the master’s toolbox. So, from our reigning overlords of the technosphere I borrow a term: forking. The term was popularized by engineers working on the early Unix operating system. It describes how a running process makes a copy of itself, typically so that the copy can launch a new program or procedure.

It’s useless to ponder what would have happened if we hadn’t succumbed so completely to the current techno-economic regime. But it’s not crazy to suggest an act of shared re-imagination about what’s next, and to do something about it. Given the choice, and taking the chance, we can ask ourselves what a more equitable human fork beyond this replicant economy might look like.

Look at Me... I matter!

Fredric Jameson and Slavoj Žižek lay equal claim to musing, “it’s easier to imagine the end of the world than the end of capitalism”. We won’t be solving for capitalism, but it’s certainly within our imaginative grasp to re-engineer a fresh fork in the fabric of our shared technosphere.

Our first leap together is the toughest, but everything depends on it. We must re-imagine a reward incentive to replace the predatory value extraction at the heart of the current model. Imagination without insight is an empty exercise. The stakes are too high and the opportunities too sweet. We arm ourselves with an insight grounded in the ancient core of human need: recognition and affirmation.

This is the essential human insight that has driven our behavior together since we sat around communal fires 50,000 years ago. There we told stories and shared things like food, heat and protection against the forces from without. It’s the insight we’ve been baking into our creative briefs for a more radical kind of marketing for the past twenty years.

It’s also an insight we’re all intimately familiar with, given our newfound togetherness on socially connected devices. Whether you’re a lurker, a poseur or somewhere in between, that feeling you get when someone likes your lunch picture or comments on your pithy comebacks is a pleasing if minor reward. A gift minted in a scalable social currency of affirmation and recognition.

If we need an insight to spark our imaginative leap into a different future of everything, I’m going with: “Look at me... I matter!” The challenge is what we do with it to disrupt the disruptors out of their ubiquitous ownership of our 24/7 lives.

The Big Lie

The primary problem to solve in applying this insight is cost. The cynical lie at the heart of the surveillance economy is that the companies giving us all this free stuff need to pay for it with advertising. That stuff includes image storage, social connectivity, email and messaging, document management, navigation tools and video services.

I’ve been in the global advertising and marketing racket for over thirty years, and I can tell you, as we say on Madison Avenue, that this is absolute bullshit. From the radio soap operas of the 1930s through to the sitcoms of the current twilight of linear TV, the default economic model was to trade content costs for eyeball reach.

But advertising is an increasingly moribund model when it comes to getting and keeping customers. Brand marketing teams, agencies, publishers and broadcasters have known this for the past several years and are frantically trying to figure out alternative models. Don’t expect whatever they come up with to put you, the human, at the heart of its value equation.

Note well: if we’re imagining a more equitable human technosphere, someone will need to pay for it. The shareholders of the FAANG/BATs (full disclosure: that includes me) aren’t candidates. We, and that includes everybody, will need to be ready to offer up some value in exchange. This new currency of exchange will be something quite different from the attention we spend under today’s regime.

Identity and Attention

Under the dominant rules, the most valuable asset we control is not our attention. It’s our identity itself.

In the past fifteen years, Google, Facebook, Twitter, Amazon, Apple and LinkedIn (the shortlist) have all aimed for a lock on owning and managing our identity. Each developed APIs and competed to get publishers to offer its credentialing service. The publishers’ payoff was less friction for visitors registering on their sites or apps. This was a brilliant strategy for aiming to "own" human identity on the Web. The winners would become the custodians of our identity. That would let them monetize our attention and keep gathering our personal data trails, even when we were off their sites and networks.

We underscore this founding and enduring connection between identity and attention, and the pair's critical role in the business model of platform value extraction. Pundits coined the benign term “attention economy” to sugar-coat the brutal mechanics of the emerging surveillance economy. But what we thought we were so freely exchanging wasn’t really our attention. That was simply the metric (see views, impressions) of their very last-century media model. This economy was actually based on the signs and manifestations of our identity itself: our behaviors, our transactions, our attitudes and our fears, our desires and our demographics. Our human identity data serves as fuel for all this perfidy.