#302 is also the html code for 'found'


Kisame Week Day 1: Swordsmen

Another mini series just cause it’s fun. A modern AU! @kisamesharkweek

Also I’m late for day 1 but doing everything on the phone is a nightmare. Fun, but a nightmare.

In case it gets confusing, Misty Forest is Yamato here. Kakashi and the rest will eventually make their appearances too :D Akira/Clear Shadow is an OC.

Formatting on the phone also sucks, as usual.

AO3: https://archiveofourown.org/works/20036536/chapters/47446585

~☆~

{Set in the land of Eden, players will control their customizable characters to traverse the vast horizons of magic and wonders, while darkness looms in the distance. Gods, created by the faith of mortals and given the consciences birthed by centuries of belief, began to make their moves. Embroiled in their ploys, players are caught in the eye of the storm as Eden finds its peace.}

Eden had been out for approximately three months, topping the charts as the best-selling game and earning itself the title “Game of the Year 20XX” after an entire year of hyped anticipation. It was the newest creation of Mad Games, the same company that released the popular RPG “Age of Glory”. Unlike “Age of Glory”, which was playable across multiple platforms, Eden was only playable using the VR glasses jointly created by Mad Games and Technivia, the company behind the popular Game Station consoles. The single-platform requirement stirred unrest and opposition from the public at first, but that soon turned into excitement. The VR glasses were easy to store and deceptively light, unlike their heavy, bulky helmet predecessor, and with a price that was oddly affordable, almost everyone who wanted to play the game owned a pair…

Clear Shadow clung onto a piece of driftwood, but even that crumbled in the face of nature’s wrath. Raging waves slammed and pushed her below as the torrential river rushed her forward like a crowd excitedly passing the – unwanted – crowd surfer over their heads. She didn’t struggle against the force, letting it push and pull her away as she maintained her calm in the face of danger. Bubbles of air escaped her nose, her mouth clamped shut while she forced her eyes to stay open. Sharp rocks in the river slashed at her body and a string of damage values appeared above her head. Her head throbbed, her lungs constricted. Determination coursed through her veins. Noticing a short clear stretch in the river, she flipped over with a forceful kick of her legs to float on her back and greedily gulped in a large mouthful of oxygen. “There she is! Get her!”

Out of the corner of her eyes, she spotted a party of four men rushing along the riverbed, weapons drawn and names coloured red. With a twist of her shoulders, her body was submerged face down into the water once again. “Hurry! Quick!” The Thief taking point cried out to his party members as he activated sprint. His steps increased in speed, dashing up and over the boulders along the riverbank and reaching Clear Shadow’s position within seconds. Tall trees lined the river, protruding roots large and strong along the banks, curling around the moss-covered rocks. The lone mage in the party suddenly halted his run, cloak billowing at the wind brought forth by the remaining two party members sprinting ahead. The brunet raised his wand, lips parting in a murmured chant. Surges of magic gathered around his wand and with the last syllable, he pointed the wand forward as an earthy coloured energy shone within the trees in the riparian zone. The thick roots shuddered, lifting off the rocks like awakened snakes bending their wills to the lull of a snake charmer and lashed out into the river where Clear Shadow’s silhouette hid inside. Demented Earth, a level 25 channelling spell that changes according to the terrain. Danger prickled her senses. In that split second, she decisively reached out her hand, sharp jagged edges of the rock digging into her taut fingers and pulled. Pulled as hard as she could in that one motion. Because in her next breath, a sharp pain jolted from her leg as she barely avoided the cone-shaped tendrils that speared the very spot she was at a moment before. -258! The small damage floated above her head as her health pool finally dipped below half. “You missed, Misty Forest! Fuck, are you even using your eyes!?” One of the remaining two Blade Masters swore at the Earth Elementalist behind them, only to be graced by a serene smile. “Ah! I’m so sorry! 
I’m still fairly new at the game.” Clicking his tongue, the Blade Masters dashed away after giving Misty Forest another dirty look. With the belittling eyes away from him, the Elementalist lost the calm in his upturned lips, soft brown eyes turning sharp, with steps striding forward in a rhythmic, unhurried manner. With the rapid waves, a lot of the party’s physical attacks couldn’t even reach their target, the force easily sweeping away the shurikens and easily rendering the Blade Masters’ attacks inaccurate. “She can’t stay underwater forever!” And that was the truth. When a character submerges below the water, an oxygen bar will appear below the mana pool, which will start to tick away, and hers was – just like her health – already below half. The Thief readied his shuriken, closely watching the shadowy silhouette while flanked by his pair of Blade Masters, swords at ready. There finally was a slight shift in her calm when she glanced at the mini map; relief and happiness relaxing her mind. She wasn’t too far now. At the end of the river was an estuary and with the currents, she would arrive by the sea in roughly half a minute. She held on strongly, body tilting and turning to minimise the damage from the river, but even she couldn’t deny that any more and she’d be forcefully dead by the system. With a heave of her arms, her head plunged above the surface, gasping for desperate oxygen. Her vision, blurred and dark, a sign that her character was about to drown, immediately cleared. Bright blue water and thinning trees flooded her sight, and she realised that the currents were slowing. A sharp whistling tore through the air. She turned her head, noticing the lone thief fixatedly watching his shurikens flying her way. She hurriedly gulped a lungful of air and ducked back into the river. -16! She ignored the shuriken slicing her cheek and quickly swam towards the estuary. 
Her arms stretched forward to propel herself forward as her tongue peeked out slightly, tasting the salt mixing with the fresh water. The Blade Masters sneered at the figure swimming quickly, swords poised at ready and patiently awaiting at either sides of the estuary; the right one, Turnip Killer, was at level 30 and the left, Stone King was at level 32. Turnip Killer leapt across the river while his sword lit up with a blinding white radiance. The sword drew a half circle radiance in mid-air as he focused on the silhouette. The blade plunged into the water with a splash, salty droplets pelting his face while his Upwind Slash attack met with a resistance when it successfully connected with Clear Shadow’s body. With a huff, the Blade Master swung upwards, forcing her out of the water and knocking her up into the air. Although its effect was slightly reduced from the water’s drag, the simple level 20 warrior skill’s knock-up effect was successfully activated as Clear Shadow’s body was bent backwards in mid-air from the attack. Stone King bent his knees and jumped, coming level with Clear Shadow as he pulled his arms back, both hands gripping his sword tightly. Equally covered in a white radiance, he cried out and activated Whirlwind Slash. Seeing the falling sword, Clear Shadow hurriedly lifted her own sword and activated Block just as the attack connected, negating the damage, but it wasn’t over. Following his momentum, the Blade Master spun a full turn in a slight vertical manner and once more heavily brought his sword down. With Block on cooldown, Clear Shadow tilted her body back, catching a brief glimpse of a green energy near the riverbank from the corner of her eyes before her eyes refocused on the enemy and activated Upwind Slash. Her upwards strike met with his second attack, with the rebounding force powerful enough to send her splashing into the river again. -547! -25! 
Clear Shadow quickly downed a small health potion, causing her low health to recover till it was more than half. Despite being higher levelled than the attacking party at level 35, her health and dexterity had taken a hit in stat points as she mainly focused on strength and intellect, with a minor focus on speed. The knockback had sent her deep enough, feet touching the riverbed. She kicked off the ground, a cloud of soil browning the clear water and she shot towards the ocean, swimming with all her might that her muscles screamed and ached at the shoulders. Legs started to tire and refuse to kick, and she unwillingly resurfaced for oxygen. A booming roar shook the skies, followed by a massive crash into the ocean. Her health that was recovered was immediately reduced by the attack. Large, towering waves surfed the water, crashing into Clear Shadow and forced her back underwater just as Misty Forest finished chanting. Standing deep in the water, he was flanked by large rocks that protected him from the currents while also preventing him from getting washed away. Steady on his feet, he waved his staff forward after uttering the last syllable. Clear Shadow, still submerged, watched as the algae growing near the estuary rapidly grew and she hurriedly swam out further when a large figure, bloodied and battered, appeared before her eyes. Its width spanned easily over fifteen meters with a height possibly over fifty meters and its half submerged body, from what she could deduce, was wide at the top that narrowed near the feet. Long tentacles made up its feet, waving and keeping it afloat, and more sharp tentacle tendrils thrashed about in an enraged manner. Her eyes flew open at the sound of something rushing towards her from behind and she twisted her body to the side, thanking heavens that sound travelled a lot faster underwater than it did in the air. 
The overgrown algae speared through bubbles, missing her entirely and she watched as it continued attacking in its path towards monster. Blood was drawn as the attack landed a critical hit, gaining a damage boost because of the elemental advantage. The monster roared, shaking the seas and earth with its fury, and Clear Shadow was thrown out with a wave of its tentacles, painfully landing on the spit that stretched out near the estuary. -2376! Inwardly swearing at her low health, she scooted a distance away; for fear of stray attacks and splash damage, for fear of dying as her health potion was still on cooldown, and silently observed the situation unfold. The level 45 boss monster Sea Monk had only but a sliver of health remaining. Its eyes were a glaring red as it spun a full circle, tentacle arms sweeping out into an area of effect attack. With the increased strength and speed from its berserk state, its attack gained a wider range as powerful waves crashed onto the pair of Blade Masters and its initial attacker. “The fuck are you guys doing!” A husky voice shouted after he was slammed onto the ground. The snarl was almost animalistic, feral like a beast in the wild. ‘And he certainly looks the part,’ Clear Shadow was slightly taken aback at the sight. Blue, a deep blue like the dark ocean depths, was his skin that peeked out from the armour. Fingers reached towards the fallen broadsword and gripped it tight as he got to his feet upon the same spit she was on. Her eyes followed his movements as they slowly widened alongside his straightening figure, back straight and shoulders square, but fury rolled off him in angry waves. The blue-skinned Blade Master has been thrown too far away, landing just right outside of the boss’ aggro range. He waved his sword around, stretching out his arms, muscles thick and defined rippling with his movements. 
Generally a player’s appearance is modelled after them in real life with a beautified touch, but players themselves still did retain the option to customise the characters. Yet, although curious at his choice of colour, she chose to remain silent as she inspected his player details. Level 38 Blade Master, Tailless Beast. Tailless Beast looked at the Sea Monk getting further away from him and snarled at Clear Shadow, mouth full of pointy teeth bared like a predator. She looked back inquisitively, an eyebrow raised in slight defiance and slight surprise. Small black eyes narrowed but wordlessly, he turned back to the berserk boss and activated sprint. At the end of the spit, he jumped while his sword gleamed white, and activated Blade Rush. ‘Huh… He’s using an offensive skill as a movement skill,’ Clear Shadow noted in surprise. Steady battle cries to the side caught her attention when the Sea Monk shifted its aggro, she realised, towards the brunet Elementalist. Turnip Killer and Stone King patiently waited at the estuary as Misty Forest chanted another spell with the Thief positioned at ready behind him. They knew a battle in the water would mean certain death. Not only would their skills be reduced, but their movements would be slowed and restricted as well, not to mention they had zero experience in fighting in such a scenario. Sea Monk charged forward instinctively, roaring and lashing its tentacles at everything around it. Planting their feet steady on the ground, they endured the damage from the waves as the Sea Monk neared closer. Fifteen meters. The Sea Monk screeched, activating a sound wave attack that affected the party of four, the tunnel-like sound waves sending water swirling everywhere. Ten meters. The pair of Blade Masters rushed forward to intercept. Sea Monk raised its tentacle arms, each one as thick as a barrel, high above its bleeding head. It snarled at the Blade Masters, round mouth full of many rows of sharp pointy teeth. 
With a screech, it brought its arms down while its preys hurriedly activated Block, but the skill’s negating effect was cancelled when facing against a berserk boss, instead becoming an effect that reduced the damage by 50%. A tremor shook the earth and skies as its arms slammed upon the swords, causing the ground beneath their feet to cave in. -3296! Seeing their health immediately plummet, they screamed at Misty Forest. “Can’t you help - !” A gust zipped past the Blade Masters cheek, the speed of the object so quick that all they saw was a blurry shadow. Their eyes followed the attack’s trajectory and watched in time as thick roots speared through Sea Monk’s open mouth and exited through the skull. -5476! A critical hit! Misty Forest lowered his wand, now standing back on land. A bright light enveloped him while a notification chimed, indicating that he levelled up. Just as the party of four thought that they were out of harm’s way as they collected the dropped loot, an enraged roar bellowed. Charging from across the water, Tailless Beast landed at the estuary with a glare. “The boss was mine!” “It attacked us!” Stone King retorted and picked up the dropped weapon. “We acted in self defence.” Anger rocked in the pits of Tailless Beast’s stomach, swirling and crashing like waves in a storm, and his snarl curved into a feral grin when they stepped back from fear. He took a step forward, the pressure bearing down onto them. The Sea Monk wouldn’t have attacked them if its aggro wasn’t pulled away, if he wasn’t sent away. He had been here first, training solo and far away from public, and it was peaceful enough until this ragtag bunch appeared. And besides, he was never one for words. “You probably would have died to the boss anyway,” Stone King continued. “You wouldn’t have lasted another – !” He shut his eyes from the sudden gust of wind. 
A blinding flash of white so familiar appeared and momentarily, he wanted to ignore it, only to have his eyes flying open at the pain sprouting from his gut. Tailless Beast followed up his Upwind Slash with Blade Rush, his sword slicing through Stone King’s side as he travelled a distance forward. Turning at the waist, he swept his arm out and executed a basic slash attack towards the falling neck, sending a fountain of blood to spurt in mid-air and shaving away the last bits of his health, not giving even the slightest bit of chance to recover his health. He glanced from the corner of his eyes and firmly planted his feet on the ground. His body tilted to the side, the Piercing Thrust missing him by an inch, and he returned a tooth for a tooth. Calmly, his arm straightened and he sent his sword thrusting straight out towards Turnip Killer. With no way to dodge or block, he could only receive Tailless Beast’s attack head-on. Even though they were the same attacks, being eight levels higher did have its advantages after all. Blood was drawn when Tailless Beast’s sword pierced the other Blade Master’s shoulder. Both parties instantly distanced themselves as their attacks ended. “Why are you attacking us?” Turnip Killer panted and quickly downed a health potion. “You stole my boss first.” “We’re sorry!” The Blade Master hurriedly jumped back when Tailless Beast swung his sword. “We’ll give you the loot!” The sword was swung again and he ducked in panic. “And some compensation!” As they argued, more sword swinging than words on Tailless Beast’s part, there was a surge of magic and the chilly temperature rose. Sweat started to bead across their foreheads and their armour started to feel warm, only Misty Forest fared better with his cotton robes. In the next instant, a fireball was cast, shooting towards the Thief sneaking around Tailless Beast. Staggering in his steps, the Thief was materialised out of stealth. Shock and disbelief coloured his face. 
“How did you – !” The moment the Thief spoke, frost had covered the cracked ground in a linear path, rapidly snaking towards him. His words were caught in his throat, movements forced to slow to a stop as icy blue frost crawled up his legs to fully encase his body. A shadow dashed passed Tailless Beast with Blade Rush and she activated Whirlwind Slash when the Thief entered its range. The frozen shell cracked, shattering into pieces like a broken mirror at the first slash, slicing into his body mercilessly before giving way to the second slash to slice his throat. The damage from Frost Spread had been negligible, unlike the damage from the fireball he had eaten head on, but the Whirlwind Slash was enough to fully deplete his full health of a level 28 Thief. Seeing half his party killed, Turnip Killer activated his Return Scroll in a fluster. His eyes watched, frightened, at Clear Shadow turning on her heels to face him with the biggest smirk and a victorious glint in those navy eyes. His breathing grew ragged, mind in a flurry and he prayed, so hard that the three seconds channelling of the scroll would hurry up. His heartbeat thumped with the seconds. One. Hurry up!! Two. A bit more! Relief, he could almost taste the sweetness of the escape. If he died now, he would have dropped a level and an equipment for having died with a red name, an effect from attacking other players first, and he had spent too much time building this character. Escaping now would save him from the dull grinding, escaping now would mean he could save his equipment. The channelling bar was almost full. Just a little… bit… more! At this time, Turnip Killer turned to smirk at Clear Shadow, unbothered that she wasn’t making any last ditch attempts at attacking him because his return scroll would have been interrupted, but he wasn’t going to complain. 97% completion. A broadsword filled his vision, his eyes widening in fear at the growing sight. 99% completion. No!! 
Tailless Beast ruthlessly stabbed his sword through the torso, blade poking out from the back as the light from the return scroll dimmed, just like the lifeless eyes of the corpse. Blade Rush had just gotten off cooldown when he activated it again, easily closing the distance between them in the blink of an eye and killing the escaping Blade Master. He pulled out his sword and the body fell with a dull thump. “Idiot. Should have used a health potion instead,” he scowled while walking away, having seen the figure of Misty Forest disappearing into the light, successfully returning to the city. Clear Shadow walked up to him, stopping a couple of meters away. “Thanks for saving me.” He leered down at her, his near two meters tall stature towering over her slightly over one and a half meter height. “I wasn’t saving you. Just returning the favour for stealing the boss.” He hefted the large broadsword onto his shoulder and began walking away when a notification popped up. [Clear Shadow sent you a friend request. Accept? Reject?] With the snarl still present, he immediately rejected it and sprinted away. Clear Shadow watched with a smile, unaffected by his rejection. Seeing that his large figure disappeared into the water, she looked at the time and decided to exit the game.

~*~*~

Akira opened her eyes, revealing a pair of soft brown orbs instead of navy hidden beneath the lids. She removed the helmet from her head and the device automatically folded itself back into its original state with a mechanical whir. The ends of the helmet folded inwards, turning back into the glasses’ arms. Its lenses were wide enough to cover the entire area around her eyes and curved around her temples. She placed the sleek, black-framed glasses onto the bedside table and stood up from the bed. She then stepped into her kitchen, flicking the lights on, picked her orange cup from the dishrack and pulled open a drawer for her favourite hot chocolate. Stirring the hot chocolate gently with a spoon, Akira walked towards the living room and drew the curtains open, the crimson colour a clear contrast to her white walls. Small, white crystals dotted the moonless sky. The lights in the room across from hers, in the other apartment building fifteen meters away, lit up, catching her attention. A tall, muscular figure appeared by the window, an arm lifted to drink, coincidentally, from a cup of his own. Soft laughter rumbled under her breath as she similarly drank her hot chocolate. She double tapped on her sleeping phone and the screen lit up. 3:02 am. Unlocking her phone, she sent a quick text off as a report: [Target found. Contact established.] Her mission had just begun.

#kisameweek2019 #Day1Prompt2 #modern au #302 is also the html code for 'found' #pretty interesting #it's the opposite of error 404 not found


HTTP response status codes indicate whether a specific HTTP request has been successfully completed

HTTP response status codes indicate whether a specific HTTP request has been successfully completed. Responses are grouped in five classes:

Informational responses (100--199)

Successful responses (200--299)

Redirects (300--399)

Client errors (400--499)

Server errors (500--599)

If you receive a response that is not in this list, it is a non-standard response, possibly custom to the server's software.
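The five classes above follow from the first digit of the code, so a lookup can be sketched in a few lines. This is only an illustrative sketch: the function names `status_class` and `reason_phrase` and the class labels are made up for this example, and codes outside 100 to 599 are simply reported as "unknown class". The reason phrases come from Python's standard `http.HTTPStatus` enum.

```python
from http import HTTPStatus

# Labels mirror the five groups listed above; the wording is illustrative.
CLASSES = {
    1: "informational",
    2: "successful",
    3: "redirection",
    4: "client error",
    5: "server error",
}


def status_class(code: int) -> str:
    """Map a status code to its class using the hundreds digit."""
    if not 100 <= code <= 599:
        return "unknown class"
    return CLASSES[code // 100]


def reason_phrase(code: int) -> str:
    """Return the standard reason phrase, or 'unknown' for codes the
    standard library does not list (for example server-specific codes)."""
    try:
        return HTTPStatus(code).phrase
    except ValueError:
        return "unknown"


if __name__ == "__main__":
    for code in (200, 302, 404, 503):
        print(code, reason_phrase(code), "->", status_class(code))
```

A code that the server invented for itself still falls into one of the five classes by its first digit, even when no standard phrase exists for it.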

Informational responses

100 Continue: This interim response indicates that everything so far is OK and that the client should continue the request, or ignore the response if the request is already finished.

101 Switching Protocol: This code is sent in response to an Upgrade request header from the client, and indicates the protocol the server is switching to.

102 Processing (WebDAV): This code indicates that the server has received and is processing the request, but no response is available yet.

103 Early Hints: This status code is primarily intended to be used with the Link header, letting the user agent start preloading resources while the server prepares a response.

Successful responses

200 OK: The request has succeeded. The meaning of the success depends on the HTTP method:

GET: The resource has been fetched and is transmitted in the message body.

PUT or POST: The resource describing the result of the action is transmitted in the message body.

201 Created: The request has succeeded and a new resource has been created as a result. This is typically the response sent after POST requests, or some PUT requests.

202 Accepted: The request has been received but not yet acted upon. It is noncommittal, since there is no way in HTTP to later send an asynchronous response indicating the outcome of the request. It is intended for cases where another process or server handles the request, or for batch processing.

203 Non-Authoritative Information: This response code means the returned meta-information is not exactly the same as is available from the origin server, but is collected from a local or a third-party copy. This is mostly used for mirrors or backups of another resource. Except for that specific case, the "200 OK" response is preferred to this status.

204 No Content: There is no content to send for this request, but the headers may be useful.

205 Reset Content: Tells the user agent to reset the document which sent this request.

206 Partial Content: This response code is used when the Range header is sent from the client to request only part of a resource.

207 Multi-Status (WebDAV): Conveys information about multiple resources, for situations where multiple status codes might be appropriate.

208 Already Reported (WebDAV): Used inside a response element to avoid repeatedly enumerating the internal members of multiple bindings to the same collection.

226 IM Used (HTTP Delta encoding): The server has fulfilled a GET request for the resource, and the response is a representation of the result of one or more instance-manipulations applied to the current instance.
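To make 206 Partial Content a little more concrete, here is a rough sketch of how a server might turn a Range header into a byte span. The helper name `resolve_range` is hypothetical, it only handles a single `bytes=` range, and it returns `None` both for malformed headers and for ranges that fall outside the resource, which is a simplification (a real server treats those two cases differently).

```python
from typing import Optional, Tuple


def resolve_range(header: str, size: int) -> Optional[Tuple[int, int]]:
    """Resolve a single-range header such as 'bytes=0-99' against a
    resource of `size` bytes. Returns an inclusive (start, end) pair that
    could back a 206 response, or None when no partial response is possible."""
    unit, _, spec = header.partition("=")
    if unit.strip() != "bytes" or "," in spec:
        return None  # sketch: other units and multi-range requests are not handled
    first, sep, last = spec.strip().partition("-")
    if not sep:
        return None
    try:
        if first == "":
            # Suffix form 'bytes=-N': the last N bytes of the resource.
            count = int(last)
            if count <= 0 or size == 0:
                return None
            return (max(size - count, 0), size - 1)
        start = int(first)
        end = int(last) if last else size - 1
    except ValueError:
        return None
    if start >= size or end < start:
        return None  # range lies outside the resource
    return (start, min(end, size - 1))


if __name__ == "__main__":
    print(resolve_range("bytes=0-99", 1000))
    print(resolve_range("bytes=-100", 1000))
    print(resolve_range("bytes=2000-3000", 1000))
```

When the function returns a pair, the server would send only that slice of the body with a 206 status; when the requested range lies beyond the end of the resource, 416 Range Not Satisfiable is the usual answer.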

Redirection messages

300 Multiple Choice: The request has more than one possible response. The user agent or user should choose one of them. (There is no standardized way of choosing one of the responses, but HTML links to the possibilities are recommended so the user can pick.)

301 Moved Permanently: The URL of the requested resource has been changed permanently. The new URL is given in the response.

302 Found: This response code means that the URI of the requested resource has been changed temporarily. Further changes in the URI might be made in the future. Therefore, this same URI should be used by the client in future requests.

303 See Other: The server sent this response to direct the client to get the requested resource at another URI with a GET request.

304 Not Modified: This is used for caching purposes. It tells the client that the response has not been modified, so the client can continue to use the same cached version of the response.

305 Use Proxy: Defined in a previous version of the HTTP specification to indicate that a requested response must be accessed by a proxy. It has been deprecated due to security concerns regarding in-band configuration of a proxy.

306 unused: This response code is no longer used; it is just reserved. It was used in a previous version of the HTTP/1.1 specification.

307 Temporary Redirect: The server sends this response to direct the client to get the requested resource at another URI with the same method that was used in the prior request. This has the same semantics as the 302 Found HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.

308 Permanent Redirect: This means that the resource is now permanently located at another URI, specified by the Location: HTTP Response header. This has the same semantics as the 301 Moved Permanently HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.
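The difference between 301/302 and 307/308 comes down to which method the client uses for the follow-up request. The sketch below puts that rule into a small function; `method_after_redirect` is a made-up name. The 303 and 307/308 branches follow the descriptions above, while the 301/302 branch encodes an assumption that is not stated in the text: many clients have historically rewritten POST to GET on those codes.

```python
def method_after_redirect(status: int, method: str) -> str:
    """Pick the HTTP method for the request that follows a redirect."""
    method = method.upper()
    if status == 303:
        # See Other: fetch the other URI with a GET request.
        return "GET"
    if status in (307, 308):
        # The user agent must not change the method.
        return method
    if status in (301, 302):
        # Assumption: mimic the common client habit of turning POST into GET.
        return "GET" if method == "POST" else method
    raise ValueError(f"{status} is not a redirect handled by this sketch")


if __name__ == "__main__":
    for status in (301, 302, 303, 307, 308):
        print(status, "POST ->", method_after_redirect(status, "POST"))
```

This is why an API that wants a redirected POST to stay a POST would answer with 307 or 308 rather than 301 or 302.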

Client error responses

400 Bad Request: The server could not understand the request due to invalid syntax.

401 Unauthorized: The client must authenticate itself to get the requested response.

402 Payment Required: The initial aim for creating this code was using it for digital payment systems; however, this status code is used very rarely and no standard convention exists.

403 Forbidden: The client does not have access rights to the content; that is, it is unauthorized, so the server is refusing to give the requested resource. Unlike 401, the client's identity is known to the server.

404 Not Found: The server cannot find the requested resource. In the browser, this means the URL is not recognized. In an API, this can also mean that the endpoint is valid but the resource itself does not exist. Servers may also send this response instead of 403 to hide the existence of a resource from an unauthorized client. This response code is probably the most famous one due to its frequent occurrence on the web.

405 Method Not Allowed: The request method is known by the server but has been disabled and cannot be used. For example, an API may forbid DELETE-ing a resource. The two mandatory methods, GET and HEAD, must never be disabled and should not return this error code.

406 Not Acceptable: This response is sent when the web server, after performing server-driven content negotiation, doesn't find any content that conforms to the criteria given by the user agent.

407 Proxy Authentication Required: This is similar to 401 but authentication is needed to be done by a proxy.

408 Request Timeout: This response is sent on an idle connection by some servers, even without any previous request by the client. It means that the server would like to shut down this unused connection. This response is used much more since some browsers, like Chrome, Firefox 27+, or IE9, use HTTP pre-connection mechanisms to speed up surfing. Also note that some servers merely shut down the connection without sending this message.

409 Conflict: This response is sent when a request conflicts with the current state of the server.

410 Gone: This response is sent when the requested content has been permanently deleted from the server, with no forwarding address. Clients are expected to remove their caches and links to the resource. The HTTP specification intends this status code to be used for "limited-time, promotional services". APIs should not feel compelled to indicate resources that have been deleted with this status code.

411 Length Required: The server rejected the request because the Content-Length header field is not defined and the server requires it.

412 Precondition Failed: The client has indicated preconditions in its headers which the server does not meet.

413 Payload Too Large: The request entity is larger than limits defined by the server; the server might close the connection or return a Retry-After header field.

415 Unsupported Media Type: The media format of the requested data is not supported by the server, so the server is rejecting the request.

416 Range Not Satisfiable: The range specified by the Range header field in the request can't be fulfilled; it's possible that the range is outside the size of the target URI's data.

417 Expectation Failed: This response code means the expectation indicated by the Expect request header field can't be met by the server.

421 Misdirected Request: The request was directed at a server that is not able to produce a response. This can be sent by a server that is not configured to produce responses for the combination of scheme and authority that are included in the request URI.

422 Unprocessable Entity (WebDAV): The request was well-formed but was unable to be followed due to semantic errors.

423 Locked (WebDAV): The resource that is being accessed is locked.

425 Too Early: Indicates that the server is unwilling to risk processing a request that might be replayed.

426 Upgrade Required: The server refuses to perform the request using the current protocol but might be willing to do so after the client upgrades to a different protocol. The server sends an Upgrade header in a 426 response to indicate the required protocol(s).

428 Precondition Required: The origin server requires the request to be conditional. This response is intended to prevent the 'lost update' problem, where a client GETs a resource's state, modifies it, and PUTs it back to the server, when meanwhile a third party has modified the state on the server, leading to a conflict.

429 Too Many Requests: The user has sent too many requests in a given amount of time ("rate limiting").

431 Request Header Fields Too Large: The server is unwilling to process the request because its header fields are too large.

451 Unavailable For Legal Reasons: The user agent requested a resource that cannot legally be provided, such as a web page censored by a government.

Server error responses

500 Internal Server Error: The server has encountered a situation it doesn't know how to handle.

501 Not Implemented: The request method is not supported by the server and cannot be handled. The only methods that servers are required to support (and therefore that must not return this code) are GET and HEAD.

502 Bad Gateway: This error response means that the server, while working as a gateway to get a response needed to handle the request, got an invalid response.

503 Service Unavailable: The server is not ready to handle the request. Common causes are a server that is down for maintenance or that is overloaded. Note that together with this response, a user-friendly page explaining the problem should be sent. This response should be used for temporary conditions, and the Retry-After: HTTP header should, if possible, contain the estimated time before the recovery of the service. The webmaster must also take care about the caching-related headers that are sent along with this response, as these temporary condition responses should not be cached.

504 Gateway Timeout: This error response is given when the server is acting as a gateway and cannot get a response in time.

508 Loop Detected (WebDAV): The server detected an infinite loop while processing the request.

510 Not Extended: Further extensions to the request are required for the server to fulfill it.

511 Network Authentication Required: The 511 status code indicates that the client needs to authenticate to gain network access.
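As a rough illustration of the Retry-After header mentioned under 503, the sketch below shows how a client might turn the header value into a wait time. The function name `retry_after_seconds` is hypothetical, and the sketch assumes the header carries either a whole number of seconds or an HTTP date, which are the two forms the header allows.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(value: str, now: datetime) -> float:
    """Return how long to wait, in seconds, for a Retry-After header value.

    `now` must be a timezone-aware datetime so it can be compared with
    the parsed HTTP date.
    """
    value = value.strip()
    if value.isdigit():
        # Delay form, e.g. "Retry-After: 120"
        return float(value)
    # Date form, e.g. "Retry-After: Wed, 21 Oct 2015 07:28:00 GMT"
    retry_at = parsedate_to_datetime(value)
    # Never return a negative wait if the date is already in the past.
    return max(0.0, (retry_at - now).total_seconds())


if __name__ == "__main__":
    now = datetime(2015, 10, 21, 7, 27, 0, tzinfo=timezone.utc)
    print(retry_after_seconds("120", now))
    print(retry_after_seconds("Wed, 21 Oct 2015 07:28:00 GMT", now))
```

A client that receives a 503 could sleep for the returned number of seconds before retrying, rather than retrying immediately against a server that is already overloaded.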


HTTP response status codes indicate if a particular HTTP request was successfully finished

HTTP response status codes indicate whether a specific HTTP request has been successfully completed. Responses are grouped in five classes: information responses, successful responses, redirection messages, client error responses, and server error responses.

If you receive a response that is not in this list, it is a non-standard response, possibly custom to the server's software.
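The five classes follow from the first digit of the status code. A minimal sketch of that grouping is shown below; the function name `status_class` and the class labels are illustrative choices, not part of any standard library.

```python
def status_class(code: int) -> str:
    """Return the response class for an HTTP status code.

    The class is determined by the hundreds digit: 1xx, 2xx, 3xx, 4xx, 5xx.
    """
    classes = {
        1: "information",
        2: "successful",
        3: "redirection",
        4: "client error",
        5: "server error",
    }
    if not 100 <= code <= 599:
        raise ValueError(f"not a valid HTTP status code: {code}")
    return classes[code // 100]


if __name__ == "__main__":
    for code in (100, 200, 302, 404, 503):
        print(code, status_class(code))
```

Because the class depends only on the first digit, a client can still react sensibly to a non-standard code such as 299 or 499 by treating it like the rest of its class.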

Information responses

100 Continue: This interim response indicates that everything so far is OK and that the client should continue the request, or ignore the response if the request is already finished.

101 Switching Protocols: This code is sent in response to an Upgrade request header from the client, and indicates the protocol the server is switching to.

103 Early Hints: This status code is primarily intended to be used with the Link header, letting the user agent start preloading resources while the server prepares a response.

Successful responses

200 OK: The request has succeeded. The meaning of the success depends on the HTTP method:

GET: The resource has been fetched and is transmitted in the message body.

PUT or POST: The resource describing the result of the action is transmitted in the message body.

201 Created: The request has succeeded and a new resource has been created as a result. This is typically the response sent after POST requests, or some PUT requests.

202 Accepted: The request has been received but not yet acted upon. It is noncommittal, since there is no way in HTTP to later send an asynchronous response indicating the outcome of the request. It is intended for cases where another process or server handles the request, or for batch processing.

203 Non-Authoritative Information: This response code means the returned meta-information is not exactly the same as is available from the origin server, but is collected from a local or a third-party copy. This is mostly used for mirrors or backups of another resource. Except for that specific case, the "200 OK" response is preferred to this status.

204 No Content: There is no content to send for this request, but the headers may be useful.

205 Reset Content: Tells the user-agent to reset the document which sent this request.

206 Partial Content: This response code is used when the Range header is sent from the client to request only part of a resource.

207 Multi-Status (WebDAV): Conveys information about multiple resources, for situations where multiple status codes might be appropriate.

208 Already Reported (WebDAV): Used inside a response element to avoid repeatedly enumerating the internal members of multiple bindings to the same collection.

226 IM Used (HTTP Delta encoding): The server has fulfilled a GET request for the resource, and the response is a representation of the result of one or more instance-manipulations applied to the current instance.

Redirection messages

300 Multiple Choices: The request has more than one possible response. The user-agent or user should choose one of them. (There is no standardized way of choosing one of the responses, but HTML links to the possibilities are recommended so the user can pick.)

301 Moved Permanently: The URL of the requested resource has been changed permanently. The new URL is given in the response.

302 Found: This response code means that the URI of the requested resource has been changed temporarily. Further changes in the URI might be made in the future. Therefore, this same URI should be used by the client in future requests.

303 See Other: The server sent this response to direct the client to get the requested resource at another URI with a GET request.

304 Not Modified: This is used for caching purposes. It tells the client that the response has not been modified, so the client can continue to use the same cached version of the response.

305 Use Proxy: Defined in a previous version of the HTTP specification to indicate that a requested response must be accessed by a proxy. It has been deprecated due to security concerns regarding in-band configuration of a proxy.

306 unused: This response code is no longer used; it is just reserved. It was used in a previous version of the HTTP/1.1 specification.

307 Temporary Redirect: The server sends this response to direct the client to get the requested resource at another URI with the same method that was used in the prior request. This has the same semantics as the 302 Found HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.

308 Permanent Redirect: This means that the resource is now permanently located at another URI, specified by the Location: HTTP Response header. This has the same semantics as the 301 Moved Permanently HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.
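The difference between the redirect codes is easiest to see in terms of which method the client uses for the follow-up request. The sketch below is a simplified model, not any particular client's implementation; `method_after_redirect` is a hypothetical helper, and the 301/302 branch encodes the widespread client behaviour of turning a POST into a GET, which is an assumption about typical clients rather than something the list above states.

```python
def method_after_redirect(status: int, method: str) -> str:
    """Return the HTTP method a client would use for the redirected request."""
    method = method.upper()
    if status in (307, 308):
        # The user agent must not change the method.
        return method
    if status == 303:
        # The follow-up is made with GET (HEAD stays HEAD).
        return "HEAD" if method == "HEAD" else "GET"
    if status in (301, 302):
        # Assumption: like many clients, rewrite POST to GET.
        return "GET" if method == "POST" else method
    raise ValueError(f"{status} is not a redirect handled by this sketch")


if __name__ == "__main__":
    for status in (301, 302, 303, 307, 308):
        print(status, method_after_redirect(status, "POST"))
```

This is why 307 and 308 are the safer choice when a form submission or API call must arrive at the new location with its original method and body intact.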

Client error responses

400 Bad Request: The server could not understand the request due to invalid syntax.

401 Unauthorized: In other words, the client must authenticate itself to get the requested response.

402 Payment Required: This response code is reserved for future use. The initial intent for creating this code was to use it for digital payment systems, but this status code is used very rarely and no standard convention exists.

403 Forbidden: The client does not have access rights to the content; that is, it is unauthorized, so the server is refusing to give the requested resource. Unlike 401, the client's identity is known to the server.

404 Not Found: The server cannot find the requested resource. In the browser, this means the URL is not recognized. In an API, this can also mean that the endpoint is valid but the resource itself does not exist. Servers may also send this response instead of 403 to hide the existence of a resource from an unauthorized client. This response code is probably the most famous one due to its frequent occurrence on the web.

405 Method Not Allowed: The request method is known by the server but has been disabled and cannot be used. For example, an API may forbid DELETE-ing a resource. The two mandatory methods, GET and HEAD, must never be disabled and should not return this error code.

406 Not Acceptable: This response is sent when the web server, after performing server-driven content negotiation, doesn't find any content that conforms to the criteria given by the user agent.

407 Proxy Authentication Required: This is similar to 401, but authentication needs to be done by a proxy.

408 Request Timeout: This response is sent on an idle connection by some servers, even without any previous request by the client. It means that the server would like to shut down this unused connection. This response is used much more since some browsers, like Chrome, Firefox 27+, or IE9, use HTTP pre-connection mechanisms to speed up surfing. Also note that some servers merely shut down the connection without sending this message.

409 Conflict: This response is sent when a request conflicts with the current state of the server.

410 Gone: This response is sent when the requested content has been permanently deleted from the server, with no forwarding address. Clients are expected to remove their caches and links to the resource. The HTTP specification intends this status code to be used for "limited-time, promotional services". APIs should not feel compelled to indicate resources that have been deleted with this status code.

411 Length Required: The server rejected the request because the Content-Length header field is not defined and the server requires it.

412 Precondition Failed: The client has indicated preconditions in its headers which the server does not meet.

413 Payload Too Large: The request entity is larger than limits defined by the server; the server might close the connection or return a Retry-After header field.

414 URI Too Long: The URI requested by the client is longer than the server is willing to interpret.

415 Unsupported Media Type: The media format of the requested data is not supported by the server, so the server is rejecting the request.

416 Range Not Satisfiable: The range specified by the Range header field in the request can't be fulfilled; it's possible that the range is outside the size of the target URI's data.

417 Expectation Failed: This response code means the expectation indicated by the Expect request header field can't be met by the server.

421 Misdirected Request: The request was directed at a server that is not able to produce a response. This can be sent by a server that is not configured to produce responses for the combination of scheme and authority that are included in the request URI.

422 Unprocessable Entity (WebDAV): The request was well-formed but could not be followed due to semantic errors.

423 Locked (WebDAV): The resource that is being accessed is locked.

424 Failed Dependency (WebDAV): The request failed due to failure of a previous request.

425 Too Early: Indicates that the server is unwilling to risk processing a request that might be replayed.

426 Upgrade Required: The server refuses to perform the request using the current protocol but might be willing to do so after the client upgrades to a different protocol. The server sends an Upgrade header in a 426 response to indicate the required protocol(s).

428 Precondition Required: The origin server requires the request to be conditional. This response is intended to prevent the 'lost update' problem, where a client GETs a resource's state, modifies it, and PUTs it back to the server, when meanwhile a third party has modified the state on the server, leading to a conflict.

429 Too Many Requests: The user has sent too many requests in a given amount of time ("rate limiting").

431 Request Header Fields Too Large: The server is unwilling to process the request because its header fields are too large. The request may be resubmitted after reducing the size of the request header fields.

451 Unavailable For Legal Reasons: The user-agent requested a resource that cannot legally be provided, such as a web page censored by a government.
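The 'lost update' problem behind 428 and 412 can be modelled in a few lines. The sketch below is an in-memory toy, not a real server: the `Resource` class, its hash-based ETag, and the choice of 204 for a successful write are all assumptions made for illustration. It requires every PUT to carry an If-Match value and rejects writes based on a stale copy.

```python
import hashlib
from typing import Optional


class Resource:
    """A toy resource that only accepts conditional updates."""

    def __init__(self, body: str) -> None:
        self.body = body

    @property
    def etag(self) -> str:
        # Hypothetical ETag scheme: a short hash of the current body.
        digest = hashlib.sha256(self.body.encode("utf-8")).hexdigest()
        return '"' + digest[:16] + '"'

    def put(self, new_body: str, if_match: Optional[str] = None) -> int:
        """Apply an update and return the status code a server might send."""
        if if_match is None:
            return 428  # Precondition Required: request was not conditional
        if if_match != self.etag:
            return 412  # Precondition Failed: someone else changed the state
        self.body = new_body
        return 204  # Updated, nothing to return


if __name__ == "__main__":
    doc = Resource("version 1")
    seen_by_both = doc.etag           # two clients GET the same state
    print(doc.put("edit by A", seen_by_both))  # first writer succeeds
    print(doc.put("edit by B", seen_by_both))  # second writer is stale
```

The second client learns that its copy is out of date and can re-fetch the resource before trying again, instead of silently overwriting the first client's change.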

Server error responses

500 Internal Server Error: The server has encountered a situation it doesn't know how to handle.

501 Not Implemented: The request method is not supported by the server and cannot be handled. The only methods that servers are required to support (and therefore that must not return this code) are GET and HEAD.

502 Bad Gateway: This error response means that the server, while working as a gateway to get a response needed to handle the request, got an invalid response.

503 Service Unavailable: The server is not ready to handle the request. Common causes are a server that is down for maintenance or that is overloaded. Note that together with this response, a user-friendly page explaining the problem should be sent. This response should be used for temporary conditions, and the Retry-After: HTTP header should, if possible, contain the estimated time before the recovery of the service. The webmaster must also take care about the caching-related headers that are sent along with this response, as these temporary condition responses should not be cached.

504 Gateway Timeout: This error response is given when the server is acting as a gateway and cannot get a response in time.

506 Variant Also Negotiates: The server has an internal configuration error: the chosen variant resource is configured to engage in transparent content negotiation itself, and is therefore not a proper end point in the negotiation process.

507 Insufficient Storage (WebDAV): The method could not be performed on the resource because the server is unable to store the representation needed to successfully complete the request.

508 Loop Detected (WebDAV): The


HTTP response status codes indicate if a particular HTTP request was successfully finished

HTTP response status codes indicate whether a specific HTTP request has been successfully completed. Responses are grouped in five classes: information responses, successful responses, redirection messages, client error responses, and server error responses.

If you receive a response that is not in this list, it is a non-standard response, possibly custom to the server's software.

Information responses

100 Continue: This interim response indicates that everything so far is OK and that the client should continue the request, or ignore the response if the request is already finished.

101 Switching Protocols: This code is sent in response to an Upgrade request header from the client, and indicates the protocol the server is switching to.

103 Early Hints: This status code is primarily intended to be used with the Link header, letting the user agent start preloading resources while the server prepares a response.

Successful responses

200 OK: The request has succeeded. The meaning of the success depends on the HTTP method:

GET: The resource has been fetched and is transmitted in the message body.

PUT or POST: The resource describing the result of the action is transmitted in the message body.

201 Created: The request has succeeded and a new resource has been created as a result. This is typically the response sent after POST requests, or some PUT requests.

202 Accepted: The request has been received but not yet acted upon. It is noncommittal, since there is no way in HTTP to later send an asynchronous response indicating the outcome of the request. It is intended for cases where another process or server handles the request, or for batch processing.

203 Non-Authoritative Information: This response code means the returned meta-information is not exactly the same as is available from the origin server, but is collected from a local or a third-party copy. This is mostly used for mirrors or backups of another resource. Except for that specific case, the "200 OK" response is preferred to this status.

204 No Content: There is no content to send for this request, but the headers may be useful. The user-agent may update its cached headers for this resource with the new ones.

205 Reset Content: Tells the user-agent to reset the document which sent this request.

206 Partial Content: This response code is used when the Range header is sent from the client to request only part of a resource.

208 Already Reported (WebDAV): Used inside a response element to avoid repeatedly enumerating the internal members of multiple bindings to the same collection.

226 IM Used (HTTP Delta encoding): The server has fulfilled a GET request for the resource, and the response is a representation of the result of one or more instance-manipulations applied to the current instance.

Redirection messages

300 Multiple Choices: The request has more than one possible response. The user-agent or user should choose one of them. (There is no standardized way of choosing one of the responses, but HTML links to the possibilities are recommended so the user can pick.)

301 Moved Permanently: The URL of the requested resource has been changed permanently. The new URL is given in the response.

302 Found: This response code means that the URI of the requested resource has been changed temporarily. Further changes in the URI might be made in the future. Therefore, this same URI should be used by the client in future requests.

303 See Other: The server sent this response to direct the client to get the requested resource at another URI with a GET request.

304 Not Modified: This is used for caching purposes. It tells the client that the response has not been modified, so the client can continue to use the same cached version of the response.

305 Use Proxy: Defined in a previous version of the HTTP specification to indicate that a requested response must be accessed by a proxy. It has been deprecated due to security concerns regarding in-band configuration of a proxy.

306 unused: This response code is no longer used; it is just reserved. It was used in a previous version of the HTTP/1.1 specification.

307 Temporary Redirect: The server sends this response to direct the client to get the requested resource at another URI with the same method that was used in the prior request. This has the same semantics as the 302 Found HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.

308 Permanent Redirect: This means that the resource is now permanently located at another URI, specified by the Location: HTTP Response header. This has the same semantics as the 301 Moved Permanently HTTP response code, with the exception that the user agent must not change the HTTP method used: if a POST was used in the first request, a POST must be used in the second request.
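The caching role of 304 Not Modified can be sketched as a server-side decision: if the validator the client sends matches the current version, the body is not sent again. The function `conditional_get` below is a hypothetical helper, and the use of an ETag compared against an If-None-Match value is an assumption about which validator is in play.

```python
from typing import Optional, Tuple


def conditional_get(
    current_etag: str, body: bytes, if_none_match: Optional[str] = None
) -> Tuple[int, bytes]:
    """Return (status, body) for a GET that may carry an If-None-Match value."""
    if if_none_match is not None and if_none_match == current_etag:
        # The client's cached copy is still current: send no body.
        return 304, b""
    # First request, or the resource changed: send the full response.
    return 200, body


if __name__ == "__main__":
    page = b"<h1>Hello</h1>"
    print(conditional_get('"v1"', page))            # no cached copy yet
    print(conditional_get('"v1"', page, '"v1"'))    # cached copy still valid
    print(conditional_get('"v2"', page, '"v1"'))    # cached copy is stale
```

On a 304 the client keeps using the copy it already has, which saves the transfer of a body that would have been identical.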

Client error responses

400 Bad Request: The server could not understand the request due to invalid syntax.

401 Unauthorized: That is, the client must authenticate itself to get the requested response.

402 Payment Required: The initial aim for creating this code was to use it for digital payment systems, but this status code is used very rarely and no standard convention exists.

403 Forbidden: The client does not have access rights to the content; that is, it is unauthorized, so the server is refusing to give the requested resource. Unlike 401, the client's identity is known to the server.

404 Not Found: The server cannot find the requested resource. In the browser, this means the URL is not recognized. In an API, this can also mean that the endpoint is valid but the resource itself does not exist. Servers may also send this response instead of 403 to hide the existence of a resource from an unauthorized client. This response code is probably the most famous one due to its frequent occurrence on the web.

405 Method Not Allowed: The request method is known by the server but has been disabled and cannot be used. For example, an API may forbid DELETE-ing a resource. The two mandatory methods, GET and HEAD, must never be disabled and should not return this error code.

406 Not Acceptable: This response is sent when the web server, after performing server-driven content negotiation, doesn't find any content that conforms to the criteria given by the user agent.

407 Proxy Authentication Required: This is similar to 401, but authentication needs to be done by a proxy.

408 Request Timeout: This response is sent on an idle connection by some servers, even without any previous request by the client. It means that the server would like to shut down this unused connection. This response is used much more since some browsers, like Chrome, Firefox 27+, or IE9, use HTTP pre-connection mechanisms to speed up surfing. Also note that some servers merely shut down the connection without sending this message.

409 Conflict: This response is sent when a request conflicts with the current state of the server.

410 Gone: This response is sent when the requested content has been permanently deleted from the server, with no forwarding address. Clients are expected to remove their caches and links to the resource. The HTTP specification intends this status code to be used for "limited-time, promotional services". APIs should not feel compelled to indicate resources that have been deleted with this status code.

411 Length Required: The server rejected the request because the Content-Length header field is not defined and the server requires it.

412 Precondition Failed: The client has indicated preconditions in its headers which the server does not meet.

413 Payload Too Large: The request entity is larger than limits defined by the server; the server might close the connection or return a Retry-After header field.

414 URI Too Long: The URI requested by the client is longer than the server is willing to interpret.

415 Unsupported Media Type: The media format of the requested data is not supported by the server, so the server is rejecting the request.

416 Range Not Satisfiable: The range specified by the Range header field in the request can't be fulfilled; it's possible that the range is outside the size of the target URI's data.

417 Expectation Failed: This response code means the expectation indicated by the Expect request header field can't be met by the server.

418 I'm a teapot: The server refuses the attempt to brew coffee with a teapot.

421 Misdirected Request: The request was directed at a server that is not able to produce a response. This can be sent by a server that is not configured to produce responses for the combination of scheme and authority that are included in the request URI.

422 Unprocessable Entity (WebDAV): The request was well-formed but could not be followed due to semantic errors.

423 Locked (WebDAV): The resource that is being accessed is locked.

424 Failed Dependency (WebDAV): The request failed due to failure of a previous request.

425 Too Early: Indicates that the server is unwilling to risk processing a request that might be replayed.

426 Upgrade Required: The server refuses to perform the request using the current protocol but might be willing to do so after the client upgrades to a different protocol. The server sends an Upgrade header in a 426 response to indicate the required protocol(s).

428 Precondition Required: The origin server requires the request to be conditional. This response is intended to prevent the 'lost update' problem, where a client GETs a resource's state, modifies it, and PUTs it back to the server, when meanwhile a third party has modified the state on the server, leading to a conflict.

429 Too Many Requests: The user has sent too many requests in a given amount of time ("rate limiting").

431 Request Header Fields Too Large: The server is unwilling to process the request because its header fields are too large. The request may be resubmitted after reducing the size of the request header fields.

451 Unavailable For Legal Reasons: The user-agent requested a resource that cannot legally be provided, such as a web page censored by a government.

Server error responses

500 Internal Server Error: The server has encountered a situation it doesn't know how to handle.

501 Not Implemented: The request method is not supported by the server and cannot be handled. The only methods that servers are required to support (and therefore that must not return this code) are GET and HEAD.

502 Bad Gateway: This error response means that the server, while working as a gateway to get a response needed to handle the request, got an invalid response.

503 Service Unavailable: The server is not ready to handle the request. Common causes are a server that is down for maintenance or that is overloaded. Note that together with this response, a user-friendly page explaining the problem should be sent. This response should be used for temporary conditions, and the Retry-After: HTTP header should, if possible, contain the estimated time before the recovery of the service. The webmaster must also take care about the caching-related headers that are sent along with this response, as these temporary condition responses should usually not be cached.

504 Gateway Timeout: This error response is given when the server is acting as a gateway and cannot get a response in time.

505 HTTP Version Not Supported: The HTTP version used in the request is not supported by the server.

508 Loop Detected (WebDAV): The server detected an infinite loop while processing the request.

510 Not Extended: Further extensions to the request are required for the server to fulfill it.


Every SEO Should Aim For This Link-Earning Stack

When it comes to SEO and improving the optimization of a website, server header status codes shouldn't be overlooked. They can both improve and severely damage your onsite SEO. It’s therefore vital that search engine optimizers understand how they work and how they are interpreted by search engines like Google and Bing.

How Header Server Status Codes are Served Up

When a user requests a URL from your website, the server on which the website is hosted returns a header status code. Ideally, the server should return a ‘200 OK’ status code to tell the browser that everything is okay with the page and that the page exists at the requested location. This status code also comes with additional information, including the HTML code that the user’s browser uses to present the page content, images, and video as the website owner has defined them.

This status code is typically only served when there are no server-side issues with a specific page. Other codes may be served instead, which give information on the availability of a particular page and whether it exists at all.

Below we outline the desirable status codes and those that are more detrimental to your SEO efforts and website rankings.

Desirable Server Status Codes

Status Code: 200 OK –

The 200 OK status code confirms that the webpage exists and can be served for the request. This is the most desirable status code you will see when analyzing a website for SEO. The 200 status code is interpreted positively by the search engines, informing them that the page exists at the requested location and that there are no issues with resources being unavailable for the page.

Status Code: 301 Moved Permanently –

This is usually used to show that a page is no longer at the requested location and has permanently moved to a different location. 301s are the most assured way of informing both users and search engines that page content has moved to a different URL permanently. The permanency of this type of redirect means search engines like Google will transfer any rankings, link weight, and link authority permanently to the new URL. It also tells the search engines to remove the old URL from their indexes and replace it with the new URL.

Detrimental Server Status Codes

Status Code: 500 Internal Server Error –

This status code is a general server error that indicates to both visitors and search engines that the website's web server has a problem. If this code occurs regularly, it not only appears negative to visitors and makes for a poor website experience, but it also conveys the same message to search engines, and any rankings you have or may have had are likely to be greatly reduced in the search engine rankings.

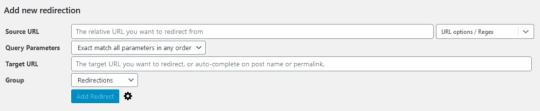

Status Code: 302 Found –

This code is usually used for the temporary redirection of URLs. It is meant to indicate that a URL is temporarily redirecting to a different location, but that this is likely to change in the future or be switched to a 301 permanent redirect.

Often 302 temporary redirects are used by mistake instead of 301 redirects. This can mean that page content is given less preference, because the search engines think the URL or content could change and isn't as fixed for users as a page that has permanently redirected.

Traditionally, this status code also doesn't pass link authority and may cause links to be de-indexed in time. In general, it is advised not to use this type of redirect unless a website is brand new and has little link authority anyway, or in very specific cases where it makes sense to only temporarily redirect URLs.

Status Code: 404 Not Found –

This server status code means the requested URL was not found, and there is usually a message on the page saying “The page or file you're trying to access doesn’t exist”.

The problem with 404s is that if they appear for URLs that previously existed, search engines will interpret them as the page having been moved or removed. As a result, the pages will quickly be de-indexed, as they serve little content, and any link authority remains on the Not Found URL.

The simplest solution if you’re experiencing many 404s is to review them and try to redirect any relevant URLs to matching or similar URLs.

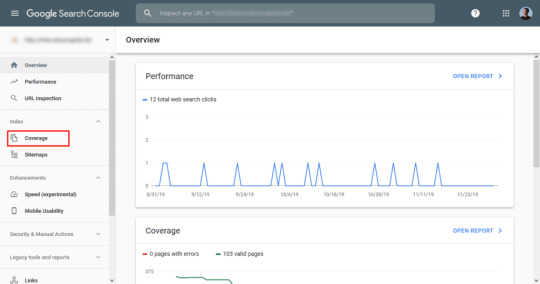

Google Webmaster Tools often produces a report showing 404s that Google’s bots are finding, allowing users to map out 301 permanent redirects to the most closely related URLs and thus pass on any link weight and rankings that the old URLs previously held.
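As a rough sketch of the "map 404s to the most related URLs" step, the snippet below pairs each Not Found path with the most similar live path using Python's standard `difflib`. The function name `suggest_redirects`, the similarity cutoff, and the example paths are all assumptions made for illustration; string similarity is only a starting point, and every suggestion should still be reviewed by hand before a 301 is put in place.

```python
from difflib import get_close_matches


def suggest_redirects(not_found_urls, live_urls, cutoff=0.6):
    """Map each 404 path to its most similar live path, or None if nothing is close."""
    suggestions = {}
    for old in not_found_urls:
        matches = get_close_matches(old, live_urls, n=1, cutoff=cutoff)
        suggestions[old] = matches[0] if matches else None
    return suggestions


# Hypothetical paths, for illustration only.
not_found = ["/blog/seo-tips-2019", "/contact-us-old"]
live = ["/blog/seo-tips", "/contact-us", "/about"]

for old, new in suggest_redirects(not_found, live).items():
    print(old, "->", new if new else "needs manual review")
```

Paths with no close match come back as `None`, which keeps them on the manual review list instead of forcing a redirect to an unrelated page.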

Conclusion

Webmasters and SEOs must use 301 redirects to resolve any URLs that are returning 500, 302, or 404 server status codes.

Search engines won’t rank URLs that don't permanently resolve to a relevant URL, so it's worth taking the time to review and resolve your URLs.

You can use data and tools like Google Webmaster Tools and Screaming Frog’s SEO Spider Tool to find erroneous status codes and resolve them.
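To make the review step concrete, here is a minimal sketch that sorts crawl results into the two groups used in this article. It assumes you already have a list of `(url, status)` pairs exported from a crawler; the `triage` function and the sample URLs are hypothetical. Reason phrases come from Python's standard `http.HTTPStatus`.

```python
from http import HTTPStatus

# Grouping taken from the sections above.
DESIRABLE = {200, 301}
DETRIMENTAL = {302, 404, 500}


def triage(crawl_results):
    """Split (url, status) pairs into desirable, detrimental, and other."""
    report = {"desirable": [], "detrimental": [], "other": []}
    for url, status in crawl_results:
        if status in DESIRABLE:
            report["desirable"].append((url, status))
        elif status in DETRIMENTAL:
            report["detrimental"].append((url, status))
        else:
            report["other"].append((url, status))
    return report


# Hypothetical crawl export, for illustration only.
results = [("/", 200), ("/old-page", 302), ("/missing", 404), ("/moved", 301)]

for url, status in triage(results)["detrimental"]:
    print(url, status, HTTPStatus(status).phrase)
```

The detrimental list is the one to work through when deciding which URLs need a 301 or a fix on the server.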



An 8-Point Checklist for Debugging Strange Technical SEO Problems

Posted by Dom-Woodman

Occasionally, a problem will land on your desk that's a little out of the ordinary. Something where you don't have an easy answer. You go to your brain and your brain returns nothing.

These problems can’t be solved with a little bit of keyword research and basic technical configuration. These are the types of technical SEO problems where the rabbit hole goes deep.

The very nature of these situations defies a checklist, but it's useful to have one for the same reason we have them on planes: even the best of us can and will forget things, and a checklist will provide you with places to dig.

Fancy some examples of strange SEO problems? Here are four examples to mull over while you read. We’ll answer them at the end.

1. Why wasn’t Google showing 5-star markup on product pages?

The pages had server-rendered product markup and they also had Feefo product markup, including ratings being attached client-side.

The Feefo ratings snippet was successfully rendered in Fetch & Render, plus the mobile-friendly tool.

When you put the rendered DOM into the structured data testing tool, both pieces of structured data appeared without errors.

2. Why wouldn’t Bing display 5-star markup on review pages, when Google would?

The review pages of client & competitors all had rating rich snippets on Google.

All the competitors had rating rich snippets on Bing; however, the client did not.

The review pages had correctly validating ratings schema on Google’s structured data testing tool, but did not on Bing.

3. Why were pages getting indexed with a no-index tag?

Pages with a server-side-rendered no-index tag in the head were being indexed by Google across a large template for a client.

4. Why did any page on a website return a 302 about 20–50% of the time, but only for crawlers?

A website was randomly throwing 302 errors.

This never happened in the browser and only in crawlers.

User agent made no difference; location or cookies also made no difference.

Finally, a quick note. It’s entirely possible that some of this checklist won’t apply to every scenario. That’s totally fine. It’s meant to be a process for everything you could check, not everything you should check.

The pre-checklist check

Does it actually matter?

Does this problem only affect a tiny amount of traffic? Is it only on a handful of pages and you already have a big list of other actions that will help the website? You probably need to just drop it.

I know, I hate it too. I also want to be right and dig these things out. But in six months' time, when you've solved twenty complex SEO rabbit holes and your website has stayed flat because you didn't re-write the title tags, you're still going to get fired.

But hopefully that's not the case, in which case, onwards!

Where are you seeing the problem?

We don’t want to waste a lot of time. Have you heard this wonderful saying?: “If you hear hooves, it’s probably not a zebra.”

The process we’re about to go through is fairly involved and it’s entirely up to your discretion if you want to go ahead. Just make sure you’re not overlooking something obvious that would solve your problem. Here are some common problems I’ve come across that were mostly horses.

You’re underperforming from where you should be.

When a site is under-performing, people love looking for excuses. Weird Google nonsense can be quite a handy thing to blame. In reality, it’s typically some combination of a poor site, higher competition, and a failing brand. Horse.

You’ve suffered a sudden traffic drop.

Something has certainly happened, but this is probably not the checklist for you. There are plenty of common-sense checklists for this. I’ve written about diagnosing traffic drops recently — check that out first.

The wrong page is ranking for the wrong query.

In my experience (which should probably preface this entire post), this is usually a basic problem where a site has poor targeting or a lot of cannibalization. Probably a horse.

Factors which make it more likely that you’ve got a more complex problem, one which requires you to don your debugging shoes:

A website that has a lot of client-side JavaScript.

Bigger, older websites with more legacy.

Your problem is related to a new Google property or feature where there is less community knowledge.

1. Start by picking some example pages.

Pick a couple of example pages to work with — ones that exhibit whatever problem you're seeing. No, this won't be representative, but we'll come back to that in a bit.

Of course, if it only affects a tiny number of pages then it might actually be representative, in which case we're good. It definitely matters, right? You didn't just skip the step above? OK, cool, let's move on.

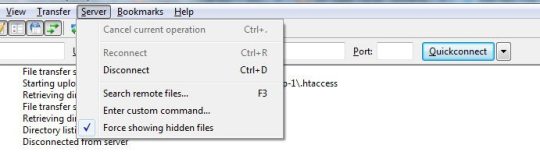

2. Can Google crawl the page once?

First we’re checking whether Googlebot has access to the page, which we’ll define as a 200 status code.

We’ll check in four different ways to expose any common issues:

Robots.txt: Open up Search Console and check in the robots.txt validator.

User agent: Open Dev Tools and verify that you can open the URL with both Googlebot and Googlebot Mobile.

To get the user agent switcher, open Dev Tools.

Check the console drawer is open (the toggle is the Escape key)

Hit the … and open "Network conditions"

Here, select your user agent!

IP Address: Verify that you can access the page with the mobile testing tool. (This will come from one of the IPs used by Google; any checks you do from your computer won't.)

Country: The mobile testing tool will visit from US IPs, from what I've seen, so we get two birds with one stone. But Googlebot will occasionally crawl from non-American IPs, so it’s also worth using a VPN to double-check whether you can access the site from any other relevant countries.

I’ve used HideMyAss for this before, but whatever VPN you have will work fine.

We should now have an idea whether or not Googlebot is struggling to fetch the page once.
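The robots.txt check can also be scripted for a batch of example URLs. The sketch below uses Python's standard `urllib.robotparser` on robots.txt text you have already downloaded; the `allowed_for` helper and the sample rules are assumptions for illustration. Python's matching may not agree with Google's own parser in every case (wildcard rules in particular), so treat it as a first pass and confirm anything surprising in Search Console.

```python
from urllib.robotparser import RobotFileParser


def allowed_for(robots_txt, url, user_agents=("Googlebot", "*")):
    """Return {user_agent: True/False} for whether each agent may fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in user_agents}


# Hypothetical robots.txt content, for illustration only.
robots_txt = """\
User-agent: Googlebot
Disallow: /private/

User-agent: *
Disallow:
"""

print(allowed_for(robots_txt, "https://example.com/private/page"))
print(allowed_for(robots_txt, "https://example.com/public/page"))
```

This only covers the robots.txt item. The user agent, IP address, and country checks still need a real request from the right place, which a local script cannot fake.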

Have we found any problems yet?

If we can re-create a failed crawl with one of the simple checks above, then it’s likely Googlebot is failing consistently to fetch our page, and it’s typically for one of those basic reasons.

But it might not be. Many problems are inconsistent because of the nature of technology. ;)

3. Are we telling Google two different things?

Next up: Google can find the page, but are we confusing it by telling it two different things?

This is most commonly seen, in my experience, because someone has messed up the indexing directives.

By "indexing directives," I’m referring to any tag that defines the correct index status or page in the index which should rank. Here’s a non-exhaustive list:

No-index

Canonical

Mobile alternate tags

AMP alternate tags

An example of providing mixed messages would be:

No-indexing page A

Page B canonicals to page A

Or:

Page A has a canonical in a header to A with a parameter

Page A has a canonical in the body to A without a parameter

If we’re providing mixed messages, then it’s not clear how Google will respond. It’s a great way to start seeing strange results.

Good places to check for the indexing directives listed above are:

Sitemap

Example: Mobile alternate tags can sit in a sitemap

HTTP headers

Example: Canonical and meta robots can be set in headers.

HTML head

This is probably where you’re already looking; you’ll need it for comparison.

JavaScript-rendered vs hard-coded directives

You might be setting one thing in the page source and then rendering another with JavaScript, i.e. you would see something different in the HTML source from the rendered DOM.

Google Search Console settings

There are Search Console settings for ignoring parameters and country localization that can clash with indexing tags on the page.
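A minimal sketch of checking one page for mixed messages, assuming you have its HTML source and its response headers as a plain dict. It collects meta robots and canonical values from the HTML with the standard `html.parser`, adds any `X-Robots-Tag` and `Link` header values, and reports disagreements. The names `DirectiveParser` and `find_mixed_messages` are made up for this example, the `Link` header parsing is deliberately naive, and it reads the HTML source only, not the rendered DOM.

```python
from html.parser import HTMLParser


class DirectiveParser(HTMLParser):
    """Collect meta robots and canonical link values from an HTML document."""

    def __init__(self):
        super().__init__()
        self.robots = []
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            self.robots.append((attrs.get("content") or "").lower())
        elif tag == "link" and (attrs.get("rel") or "").lower() == "canonical":
            self.canonicals.append(attrs.get("href") or "")


def find_mixed_messages(html, headers):
    """Return a list of conflicts between HTML and HTTP header directives."""
    parser = DirectiveParser()
    parser.feed(html)
    robots = list(parser.robots)
    canonicals = set(parser.canonicals)

    # Header names are matched exactly here; real responses may differ in case.
    if headers.get("X-Robots-Tag"):
        robots.append(headers["X-Robots-Tag"].lower())
    # Naive Link parsing: splits on commas, so URLs containing commas will break it.
    for part in headers.get("Link", "").split(","):
        compact = part.lower().replace(" ", "")
        if 'rel="canonical"' in compact and "<" in part and ">" in part:
            canonicals.add(part[part.index("<") + 1 : part.index(">")])

    problems = []
    if len(canonicals) > 1:
        problems.append("conflicting canonicals: " + ", ".join(sorted(canonicals)))
    noindex_flags = ["noindex" in value for value in robots]
    if any(noindex_flags) and not all(noindex_flags):
        problems.append("noindex set in one place but not another")
    return problems


# Hypothetical page and headers, for illustration only.
html = (
    '<html><head><meta name="robots" content="index,follow">'
    '<link rel="canonical" href="https://example.com/a"></head></html>'
)
headers = {
    "X-Robots-Tag": "noindex",
    "Link": '<https://example.com/a?ref=1>; rel="canonical"',
}

for problem in find_mixed_messages(html, headers):
    print(problem)
```

Sitemap entries and Search Console settings are not covered here and would need to be compared separately.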

A quick aside on rendered DOM

This page has a lot of mentions of the rendered DOM on it (18, if you’re curious). Since we’ve just had our first, here’s a quick recap about what that is.

When you load a webpage, the first request is the HTML. This is what you see in the HTML source (right-click on a webpage and click View Source).

This is before JavaScript has done anything to the page. This didn’t use to be such a big deal, but now so many websites rely heavily on JavaScript that most people quite reasonably won’t trust the initial HTML.

Rendered DOM is the technical term for a page, when all the JavaScript has been rendered and all the page alterations made. You can see this in Dev Tools.

In Chrome you can get that by right clicking and hitting inspect element (or Ctrl + Shift + I). The Elements tab will show the DOM as it’s being rendered. When it stops flickering and changing, then you’ve got the rendered DOM!

4. Can Google crawl the page consistently?

To see what Google is seeing, we're going to need to get log files. At this point, we can check to see how it is accessing the page.

Aside: Working with logs is an entire post in and of itself. I’ve written a guide to log analysis with BigQuery; I’d also really recommend trying out Screaming Frog Log Analyzer, which has done a great job of handling a lot of the complexity around logs.

When we’re looking at crawling there are three useful checks we can do:

Status codes: Plot the status codes over time. Is Google seeing different status codes than you when you check URLs?

Resources: Is Google downloading all the resources of the page?

Is it downloading all your site-specific JavaScript and CSS files that it would need to generate the page?

Page size follow-up: Take the max and min of all your pages and resources and diff them. If you see a difference, then Google might be failing to fully download all the resources or pages. (Hat tip to @ohgm, where I first heard this neat tip).
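If you'd rather poke at the logs directly, the first and third checks can be sketched in a few lines of Python. This assumes access logs in the common "combined" format; the regular expression, function names, and sample lines are all assumptions for illustration, and your log format may well differ. Note that matching on the user-agent string alone does not prove a request really came from Google.

```python
import re
from collections import Counter, defaultdict
from datetime import datetime

# Assumed "combined" log format; adjust the pattern to your own logs.
LOG_PATTERN = re.compile(
    r'\[(?P<time>[^\]]+)\] "(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) "[^"]*" "(?P<agent>[^"]*)"'
)


def parse_googlebot_hits(lines):
    """Return (timestamp, path, status, size) for lines whose user agent mentions Googlebot."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue
        when = datetime.strptime(match["time"], "%d/%b/%Y:%H:%M:%S %z")
        size = 0 if match["size"] == "-" else int(match["size"])
        hits.append((when, match["path"], int(match["status"]), size))
    return hits


def status_by_hour(hits):
    """Count status codes per hour so they can be plotted over time."""
    counts = defaultdict(Counter)
    for when, _path, status, _size in hits:
        counts[when.strftime("%Y-%m-%d %H:00")][status] += 1
    return counts


def size_spread(hits):
    """For each path, the gap between the largest and smallest 200 response."""
    sizes = defaultdict(list)
    for _when, path, status, size in hits:
        if status == 200:
            sizes[path].append(size)
    return {path: max(values) - min(values) for path, values in sizes.items()}


# Hypothetical log lines, for illustration only.
GOOGLEBOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'192.0.2.1 - - [10/Oct/2018:01:02:03 +0000] "GET /page HTTP/1.1" 200 5120 "-" "{GOOGLEBOT}"',
    f'192.0.2.1 - - [10/Oct/2018:01:30:00 +0000] "GET /page HTTP/1.1" 200 2048 "-" "{GOOGLEBOT}"',
    f'192.0.2.1 - - [10/Oct/2018:02:05:00 +0000] "GET /page HTTP/1.1" 302 0 "-" "{GOOGLEBOT}"',
    '192.0.2.7 - - [10/Oct/2018:02:06:00 +0000] "GET /page HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
]

hits = parse_googlebot_hits(sample)
print(dict(status_by_hour(hits)))
print(size_spread(hits))
```

A large spread for a single path is the hint that some responses may have been truncated or served in a reduced form.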

Have we found any problems yet?

If Google isn't getting 200s consistently in our log files, but we can access the page fine when we try, then there are clearly still some differences between Googlebot and ourselves. What might those differences be?

It will crawl more than us

It is obviously a bot, rather than a human pretending to be a bot

It will crawl at different times of day

This means that:

If our website is doing clever bot blocking, it might be able to differentiate between us and Googlebot.

Because Googlebot will put more stress on our web servers, it might behave differently. When websites have a lot of bots or visitors visiting at once, they might take certain actions to help keep the website online. They might turn on more computers to power the website (this is called scaling), they might also attempt to rate-limit users who are requesting lots of pages, or serve reduced versions of pages.

Servers run tasks periodically; for example, a listings website might run a daily task at 01:00 to clean up all its old listings, which might affect server performance.

Working out what’s happening with these periodic effects is going to be fiddly; you’re probably going to need to talk to a back-end developer.

Depending on your skill level, you might not know exactly where to lead the discussion. A useful structure for a discussion is often to talk about how a request passes through your technology stack and then look at the edge cases we discussed above.

What happens to the servers under heavy load?

When do important scheduled tasks happen?

Two useful pieces of information to enter this conversation with:

Depending on the regularity of the problem in the logs, it is often worth trying to re-create the problem by attempting to crawl the website with a crawler at the same speed/intensity that Google is using to see if you can find/cause the same issues. This won’t always be possible depending on the size of the site, but for some sites it will be. Being able to consistently re-create a problem is the best way to get it solved.

If you can’t, however, then try to provide the exact periods of time where Googlebot was seeing the problems. This will give the developer the best chance of tying the issue to other logs to let them debug what was happening.
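One way to pull out those exact periods is to group the failed Googlebot requests into time windows. The sketch below assumes you already have `(timestamp, status)` pairs from your logs; the `failure_windows` name, the five-minute gap, and the sample data are assumptions for illustration.

```python
from datetime import datetime, timedelta


def failure_windows(hits, gap=timedelta(minutes=5)):
    """Group non-200 hits into (start, end, count) windows separated by more than `gap`."""
    failures = sorted(when for when, status in hits if status != 200)
    windows = []
    for when in failures:
        if windows and when - windows[-1][1] <= gap:
            # Close enough to the previous failure: extend the current window.
            start, _end, count = windows[-1]
            windows[-1] = (start, when, count + 1)
        else:
            windows.append((when, when, 1))
    return windows


# Hypothetical (timestamp, status) pairs, for illustration only.
hits = [
    (datetime(2018, 10, 10, 1, 0), 302),
    (datetime(2018, 10, 10, 1, 2), 302),
    (datetime(2018, 10, 10, 1, 3), 302),
    (datetime(2018, 10, 10, 1, 4), 200),
    (datetime(2018, 10, 10, 3, 0), 302),
]

for start, end, count in failure_windows(hits):
    print(start.isoformat(), "to", end.isoformat(), "-", count, "failed requests")
```

A short list of windows like this is much easier for a developer to line up against deploys, scheduled tasks, and load graphs than a raw log export.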