#(mostly because the mobile port controls like butter)

^ my beautiful wives

#꒰💘꒱ ❝ Words of Love ❞#˚₊· ͟͟͞͞➳❥ ꒰🌾🏜️꒱ ❝ We'll Stick Together. Even If We're History. ❞ ˎˊ˗#yes I'm technically not married to Wambus and Triffany. no I will call them my wives regardless. what're you a cop#not to say I HAVEN'T waffled the idea of doing one of those fancy RP weddings but [hot Dr. Pepper video voice] THAT'S NOT THE TOPIC HERE#I already have the game both on my phone AND my Playstation... I 100% the PS port but I never did the DLC on the mobile port#(mostly because the mobile port controls like butter)#so if I ever get the PC port... you'll see... you'll ALL see#I'll take so many pictures of my wives it won't even be funny

FreeSync 2 Monitor Vs. G-Sync Monitor: Information on Similarities

Adaptive sync technology first reached the market in 2014 and has come a long way since. Both AMD and NVIDIA offer their own version: FreeSync and G-Sync. Anyone who is into PC gaming will want to learn as much as possible about the two options to determine which one will provide the smoother, more seamless experience.

What Is Adaptive Sync?

The vertical refresh rate of a monitor has a big impact on how smoothly a game plays. If the refresh rate does not line up with the rate at which the graphics card delivers frames, visible horizontal lines can run across the screen where two frames meet.

While your graphics card is an important part of your gaming experience, it can only render images so fast, and its output varies from moment to moment. A traditional monitor has a fixed refresh rate. For instance, a 60Hz monitor refreshes every 1/60th of a second (about 16.7 milliseconds). Unfortunately, your graphics card may not deliver frames at that same rate, which can leave parts of two or more frames on the screen during a single refresh. This is called screen tearing and it is something every PC gamer wants to avoid.
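The timing mismatch described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions that are not from the article: no V-Sync, frames that arrive at a perfectly steady rate, and a scanout that takes the whole refresh interval. The function names are made up for this example.

```python
from fractions import Fraction


def refresh_interval_ms(refresh_hz: int) -> float:
    """Time between two refreshes of a fixed-rate monitor, in milliseconds."""
    return 1000.0 / refresh_hz


def tear_positions(gpu_fps: int, refresh_hz: int, frames: int = 4) -> list[float]:
    """Where on the screen each new frame lands (0.0 = top, 1.0 = bottom).

    A value of 0.0 means the frame arrived exactly at the start of a refresh,
    so no tear line is visible for that frame.
    """
    positions = []
    for n in range(1, frames + 1):
        # Refresh cycles elapsed when frame n is ready; exact fractions avoid
        # floating-point rounding in the modulo.
        cycles = Fraction(n * refresh_hz, gpu_fps)
        positions.append(float(cycles % 1))
    return positions


print(round(refresh_interval_ms(60), 1))  # 16.7
print(tear_positions(60, 60))             # [0.0, 0.0, 0.0, 0.0]
print(tear_positions(45, 60, frames=3))   # first two frames land mid-screen
```

When the graphics card and the monitor run at the same rate, every frame lands on a refresh boundary. At 45 frames per second on a 60Hz monitor, most frames arrive partway through a refresh, which is where a tear line would show up.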

Both FreeSync and G-Sync eliminate tearing and stuttering, making your graphics flow like melted butter. Adaptive sync monitors make a huge difference in your gaming experience on the PC. It is amazing to put a traditional monitor and an adaptive sync monitor side-by-side and see how they perform.

Without adaptive sync, stuttering and tearing can degrade image quality and make games less enjoyable. Most monitors marketed for gaming today include some form of adaptive sync. There are two main types of adaptive sync technology, and this guide breaks them both down so you understand how they are similar, how they differ, and how each is used for gaming.

How Do FreeSync and G-Sync Monitors Work?

As mentioned above, tearing and stuttering start when your graphics card and your monitor’s refresh rate are not synchronized. Both FreeSync and G-Sync let the monitor work with your graphics card to synchronize the refresh rate, so there is no tearing or stuttering even when a game is graphically intense. Within the range the monitor supports, whatever rate your graphics card generates images at, the monitor refreshes at that same rate for seamless play.
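As a rough sketch of that idea, the monitor’s refresh rate simply follows the graphics card’s frame rate, limited to whatever range the panel supports. The function name and the 48Hz to 144Hz range below are hypothetical example values, not figures from any particular monitor.

```python
def panel_refresh_hz(gpu_fps: float, vrr_min_hz: float, vrr_max_hz: float) -> float:
    """Refresh rate an adaptive sync panel would run at for a given frame rate.

    Inside the supported range the panel matches the graphics card exactly;
    outside it, the panel is pinned to the nearest limit.
    """
    return max(vrr_min_hz, min(gpu_fps, vrr_max_hz))


# Hypothetical monitor with a 48Hz to 144Hz adaptive sync range.
for fps in (30, 72, 200):
    print(fps, "fps ->", panel_refresh_hz(fps, 48, 144), "Hz")
```

Only the middle case (72 frames per second) is truly synchronized. Below or above the supported range the panel can no longer follow the graphics card, which is why the range listed in a monitor’s specs matters.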

FreeSync Vs. G-Sync: Application

Although FreeSync and G-Sync perform much the same, there are some major differences. Application is one of the areas where these two differ greatly.

Of the two, FreeSync is the easier one for monitor makers to adopt. It is built on the VESA Adaptive-Sync standard, which is part of DisplayPort 1.2a. Because AMD does not charge any royalties or fees for its use, there is very little cost for manufacturers to include FreeSync with their monitors. If you are looking for a gaming monitor, you are likely to find this technology in a variety of models and brands, even those on the low end of the cost spectrum.

G-Sync, on the other hand, requires manufacturers to use NVIDIA hardware modules, and NVIDIA remains in full control of quality. This makes it more expensive for manufacturers to build the technology into their gaming monitors. Because of the added cost, you are unlikely to find a low-cost monitor that features G-Sync. Most manufacturers consider it a premium add-on and charge more for it.

Everything You Need to Know About AMD FreeSync

Before you decide on any adaptive sync monitor, you need to know the pros and cons of each type. Being fully informed will help you choose the one that best meets your gaming needs and stops the screen tearing and stuttering that drive you crazy.

Although AMD was not the first to develop a product that addresses screen tearing and stuttering, its technology is currently the more widely used by gamers, likely because of cost and availability. As stated before, AMD does not charge royalties, which lowers costs for manufacturers.

Pros of AMD FreeSync

One of the biggest things AMD FreeSync has going for it is the cost. Monitors that feature AMD FreeSync are much more affordable than those with NVIDIA G-Sync technology. The lower cost means this type of monitor is available to gamers across a wide range of budgets.

Because FreeSync does not require a proprietary hardware module in the monitor, it is easier to obtain and does not add much to the price. You will find AMD FreeSync on budget monitors as well as high-end models.

Connectivity is another pro of AMD FreeSync. Monitors that feature FreeSync typically have more ports available. AMD has also introduced FreeSync over HDMI, which allows the technology to be used by many more monitors than NVIDIA G-Sync.

Cons of AMD FreeSync

Although it would certainly seem AMD FreeSync is the perfect choice because of its performance and price, there is a con to consider. At the time of writing, AMD FreeSync only works with AMD graphics cards. If your computer has an NVIDIA graphics card, FreeSync will not be able to synchronize the refresh rate.

AMD also has less strict standards, which can result in inconsistent experiences from one monitor to the next. AMD does not tightly control how its technology is implemented, so manufacturers have a lot of latitude in how they build FreeSync into their monitors. If you are choosing a gaming monitor with FreeSync, it is wise to research the manufacturer carefully and read reviews to make sure the monitor delivers the sync performance you expect.

If you are searching for a monitor with AMD FreeSync, make sure you carefully check the specs, especially the supported refresh rate range. AMD has released a Low Framerate Compensation (LFC) addition to FreeSync that keeps motion smooth when a game’s frame rate drops below the monitor’s minimum supported refresh rate, but not every FreeSync monitor supports it.
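One way to picture Low Framerate Compensation is as frame repetition: when the game runs slower than the panel’s minimum, each frame is shown two or more times so the panel stays inside its supported range. The sketch below illustrates that general idea only. It is not AMD’s actual driver logic, and the function name and refresh ranges are assumptions chosen for the example.

```python
import math


def lfc_refresh(gpu_fps: float, vrr_min_hz: float, vrr_max_hz: float) -> tuple[int, float]:
    """Return (times each frame is shown, resulting panel refresh rate in Hz).

    Illustrative only: repeats frames until the refresh rate is back inside
    the supported range. If the range is too narrow for that, the panel is
    left at its minimum with no repetition.
    """
    if gpu_fps <= 0:
        raise ValueError("gpu_fps must be positive")
    if gpu_fps >= vrr_min_hz:
        return 1, min(gpu_fps, vrr_max_hz)   # already in range (or capped)
    repeats = math.ceil(vrr_min_hz / gpu_fps)
    refresh = gpu_fps * repeats
    if refresh > vrr_max_hz:
        return 1, vrr_min_hz                 # range too narrow to compensate
    return repeats, refresh


print(lfc_refresh(30, 48, 144))  # (2, 60): each frame shown twice at 60Hz
print(lfc_refresh(47, 48, 75))   # (1, 48): doubling would overshoot 75Hz
```

The second call shows why the refresh range in the specs matters: on a hypothetical 48Hz to 75Hz panel, doubling 47 frames per second would need 94Hz, which the panel cannot reach.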

Pros and Cons: Everything You Need to Know About NVIDIA G-Sync

Having balanced information about both adaptive sync technologies will help you make the right decision. Both have their pros and cons, so it is not always easy to choose.

Pros of NVIDIA G-Sync

The biggest benefit of using NVIDIA G-Sync is consistent performance. Unlike AMD, NVIDIA retains complete control over quality. Every single monitor must pass NVIDIA’s stringent guidelines for extreme quality and performance. The certification process is so strict, NVIDIA has turned down many monitors.

As mentioned above, AMD has come out with Low Framerate Compensation, but every NVIDIA G-Sync monitor offers the equivalent. Any monitor with G-Sync also offers frequency-dependent variable overdrive, which makes these monitors far less prone to ghosting. Ghosting is what occurs when pixels change too slowly between frames, leaving behind a slightly blurred trail of the old image that fades as the new frame comes in.

When you purchase an NVIDIA G-Sync monitor, you can rest assured the quality and performance will be consistent among different manufacturers because NVIDIA ensures it will. No matter which monitor you purchase, if it includes NVIDIA technology, it will have met NVIDIA’s stringent certification standards before being put on the market.

Cons of NVIDIA G-Sync

As with any product, there are some cons to consider with NVIDIA G-Sync. One of the biggest is the expense. On average, you are going to spend much more on an NVIDIA G-Sync monitor than on an AMD FreeSync monitor. This limits NVIDIA technology to mostly high-end gamers. NVIDIA requires all manufacturers to use proprietary hardware modules, adding to the expense for manufacturers.

There is also the problem of limited ports. If you have a lot of gaming gear to connect, you may not be happy with the limited ports that are offered. In addition, just as FreeSync is tied to AMD cards, NVIDIA G-Sync does not work with AMD graphics cards. If your computer uses an AMD card, you will need to stick with AMD FreeSync.

AMD FreeSync Vs. NVIDIA G-Sync: Laptops

If you are looking for a gaming laptop, both AMD and NVIDIA have models that make gaming graphics smoother and more consistent than ever before. For the most part, AMD has been absent from the gaming laptop market, so NVIDIA dominates when it comes to laptop availability.

You will be able to find NVIDIA G-Sync laptops from almost every major manufacturer. The new laptops can now handle refresh rates close to 120Hz, where they were once limited to 75Hz or lower. This has made laptop gaming much more attractive to gamers who play graphically demanding PC games.

Although AMD is a little late to the party, ASUS recently released their ROG Strix GL702ZC which includes an AMD FreeSync display. It will be interesting to see how the competitive landscape changes as AMD FreeSync laptops begin being released in greater abundance.

AMD FreeSync Vs. NVIDIA G-Sync: What About HDR Monitors?

Demand for high dynamic range (HDR) and higher resolutions is increasing, and manufacturers are taking note. With ultra-high-resolution displays becoming more common, both AMD and NVIDIA are responding. New gaming monitors are now hitting retailers, and they bring higher resolution and richer images than PC gaming has seen before.

While this is exciting for gamers, it is likely going to be pricey. AMD has always been rather lax about the use of its technology, but with AMD FreeSync 2 it is committed to keeping more control. AMD will not give manufacturers the okay unless their monitors include Low Framerate Compensation. It has also set standards for low latency and for dynamic color, with displays expected to deliver about double the brightness and color richness of standard sRGB. One of the coolest things about these AMD displays is that they switch over to FreeSync automatically, as long as it is supported by the game you are playing.

NVIDIA G-Sync monitors have been announced in 4K and ultrawide models that reach as high as 200Hz. These displays offer amazing fluidity that AMD cannot yet match, even though FreeSync is certainly on the cusp of greatness. Playing a game on one of these models will amaze you, because it offers the highest level of brightness, color richness, and crispness of any gaming display you have ever seen.

It is clear these two are in a battle for gamers’ loyalty. For most people, AMD FreeSync products are the more affordable option, but can they measure up if cost is not a factor?

Here’s the Bottom Line

It is clear that screen tearing, ghosting, and stuttering are among the biggest irritations for PC gamers. Playing PC games like Mass Effect can quickly send you over the edge if screen tearing is constantly occurring. Screen tearing takes a beautiful game and turns it into a choppy mess that does not flow as it should. If you’ve experienced this, you know how annoying it can be. Sometimes, the tearing is so persistent it makes the game unplayable. Many people think their graphics card alone is to blame, but this is not always so.

Both AMD and NVIDIA have the same potential, but it seems NVIDIA, in most cases, holds manufacturers using its technology to a higher standard. Now that AMD has created FreeSync 2, it may give NVIDIA more of a run for its money.

AMD FreeSync is featured in many more gaming displays than G-Sync simply because of availability and price. When manufacturers are not held to stringent certifications, they are able to produce more affordable products. With this freedom comes the price of inconsistency.

If you can afford it, NVIDIA G-Sync is likely going to be your best bet. Although it is not superior in concept, NVIDIA keeping a tight rein on its products means consistency across the board with all manufacturers.

Just remember that the type of graphics card you have determines which technology will work. At the time of writing, neither AMD nor NVIDIA allows its adaptive sync hardware or software to work with a competitor’s graphics cards. There are some reported workarounds, but they are not widely recommended. In the end, only you can make the choice based on your budget and needs.

Freesync 2 Monitor Vs G Sync Monitor: Information on Similarities published first on https://gaminghelix.tumblr.com

Text

Freesync 2 Monitor Vs G Sync Monitor: Information on Similarities

Adaptive sync technology was first released in 2014 and has come a long way since its introduction. Both AMD and NVIDIA are in the market. Those who are into PC gaming will want to learn as much as they can about these two options to determine which one will be more intuitive and able to provide a seamless feel.

What Is Adaptive Sync?

The vertical refresh rate of a monitor can have a big impact on how well it is able to play a game. If the vertical refresh rate is too slow, there will be subtle lines running through the screen, causing interference.

While your graphics card is an important part of your gaming experience, it can only move images so fast. A traditional monitor is going to have a slow refresh rate. For instance, a 60Hz monitor will refresh every 1/60th of a second. Unfortunately, your graphics card may not be on the same rate which could end up causing multiple frames to be in the same window for a brief second or so. This is called screen tearing and it is something every PC gamer wants to avoid.

Both FreeSync and G-Sync eliminate tearing and stuttering, making your graphics flow like melted butter. Adaptive sync monitors make a huge difference in your gaming experience on the PC. It is amazing to put a traditional monitor and an adaptive sync monitor side-by-side and see how they perform.

Without adaptive sync monitors, there will be stuttering and tearing which can destroy your image play quality and lead to games being less enjoyable. Today’s gaming monitors must include adaptive sync to be labeled as a gaming monitor. There are two main types of adaptive sync technology and this guide will break them both down and help you understand how they are similar and how they are different. You will also learn how they are both used for gaming.

How Does FreeSync and G-Sync Work Monitors Work

How Does FreeSync and G-Sync Work Monitors Work?

As mentioned above, sometimes your graphics card and monitor refresh rate are not perfectly synchronized which is when you start getting stuttering and tearing. Both FreeSync and G-Sync work with your graphics card to synchronize the refresh rate so there is no tearing or stuttering when your game is graphically intense. Whatever rate your graphics card is generating images at, the monitor will refresh at the exact same rate for a seamless play.

FreeSync Vs. G-Sync: Application

Although FreeSync and G-Sync perform much the same, there are some major differences. Application is one of the areas where these two differ greatly.

Out of the two, FreeSync is the one that is most easily used by monitors. It affixes over the VESA adaptive sync standard which is placed on DisplayPort 1.2a. Because AMD does not charge any royalties or fees for use, there is very little cost for manufacturers to include FreeSync with their monitors. If you are looking for a gaming monitor, you are likely to find this technology in a variety of models and brands, even those on the low end of the cost spectrum.

G-Sync, on the other hand, requires manufacturers to use Nvidia hardware modules and they remain in full control of quality, making it more expensive for manufacturers to use this technology in the production of their gaming monitors. Because of the added cost for manufacturers, you will likely never find a low-cost monitor that features G-Sync. Most manufacturers consider this to be a premium add-on and they charge more for it.

Everything You Need to Know About AMD FreeSync

Before you decide on any adaptive sync monitor, you need to know the pros and cons of each type. Being fully informed on the pros and cons of each type will help you to choose the one that will best meet your gaming needs and stop the screen tearing and stuttering that make you crazy.

Although AMD is not the first to develop a product that addresses screen tearing and stuttering, they are currently the most widely used by gamers and that could be due to cost and availability. As stated before, AMD does not charge royalties, leading to lower costs for manufacturers.

Pros of AMD FreeSync

One of the biggest things AMD Freesync has going for it is the cost. Monitors that feature AMD FreeSync are much more affordable than those with NVIDIA G-Sync technology. The lowered cost means this type of monitor is more widely available to gamers with a range of budgets.

Because it is a software solution, it is easier to obtain and does not cost a tremendous amount of money. You will find AMD FreeSync is available on budget monitors as well as high-end models.

Connectivity is another pro of AMD FreeSync. Monitors that feature FreeSync typically have more ports available. AMD has introduced its FreeSync in HDMI which allows this technology to be used by many more monitors than NVIDIA G-Sync.

Cons of AMD FreeSync

Although it would certainly seem AMD FreeSync is the perfect choice because of its performance and price, there is a con to consider. Unfortunately, AMD FreeSync only works with AMD graphics cards. If your computer has an NVIDIA graphics card, FreeSync will not be able to synchronize the refresh rate.

AMD also has less strict standards which could result in inconsistent experiences with different monitors. AMD does not retain control over their technology which means manufacturers can take liberty in creating their monitors with AMD FreeSync. If choosing a gaming monitor with FreeSync, it is wise to carefully research the manufacturer and read reviews to ensure the right sync level is achieved.

If you are searching for a monitor with AMD FreeSync, make sure you carefully check the specs. AMD has released a Low Framerate Compensation addition to FreeSync that allows it to run smoother when it is being run in monitors with lower than the minimum supported refresh rate.

FreeSync Vs G-Sync Application

Pros and Cons: Everything You Need to Know About NVIDIA G-Sync

Having balanced information about both types of adaptive sync manufacturers will help you to make the right decision. Both top manufacturers have their pros and cons, so it is not always easy to make a choice.

Pros of NVIDIA G-Sync

The biggest benefit of using NVIDIA G-Sync is consistent performance. Unlike AMD, NVIDIA retains complete control over quality. Every single monitor must pass NVIDIA’s stringent guidelines for extreme quality and performance. The certification process is so strict, NVIDIA has turned down many monitors.

As mentioned above, AMD has come out with their Low Framerate Compensation, but every single NVIDIA monitor offers the equivalent. Any monitors with G-Sync will also offer frequency dependent variable overdrive. This simply means these monitors will not experience ghosting. Ghosting is what occurs as the frame slowly changes, leaving behind a slightly blurred image that fades as the new frame comes in.

When you purchase an NVIDIA G-Sync monitor, you can rest assured the quality and performance will be consistent among different manufacturers because NVIDIA ensures it will. It does not matter which monitor you purchase; if it includes NVIDIA technology, it will have met NVIDIA's stringent certification standards before being put on the market.

Cons of NVIDIA G-Sync

As with any product, there are some cons to consider with NVIDIA G-Sync. One of the biggest cons is the expense. On average, you are going to spend much more on an NVIDIA G-Sync monitor than on an AMD FreeSync one. This limits NVIDIA technology to mostly high-end gamers. NVIDIA requires all manufacturers to use proprietary hardware modules, adding to the expense for manufacturers.

There is also the problem of limited ports. If you have a lot of gaming gear to connect, you may not be happy with the limited ports that are offered. In addition, just as FreeSync is tied to AMD cards, NVIDIA G-Sync does not work with AMD graphics cards. If your computer uses an AMD card, you will be stuck with AMD FreeSync.

AMD FreeSync Vs. NVIDIA G-Sync: Laptops

If you are looking for a gaming laptop, both AMD and NVIDIA have models that make gaming graphics smoother and more consistent than ever before. For the most part, AMD has been out of the mobile technology industry, so NVIDIA has the market when it comes to laptop availability.

You will be able to find NVIDIA G-Sync laptops from almost every major manufacturer. The new laptops can now handle refresh rates close to 120Hz, where they were once limited to 75Hz or lower. This has made laptop gaming much more attractive to gamers who play graphically demanding PC games.

Although AMD is a little late to the party, ASUS recently released their ROG Strix GL702ZC which includes an AMD FreeSync display. It will be interesting to see how the competitive landscape changes as AMD FreeSync laptops begin being released in greater abundance.

AMD FreeSync Vs. NVIDIA G-Sync: What About HDR Monitors?

Demand for high-definition displays is increasing, and manufacturers are taking note. With ultra-high-resolution displays becoming more common, both AMD and NVIDIA seem to be responding. New gaming monitors are now hitting retailers, and they are bringing the highest resolutions that have ever been seen in PC gaming.

While this is exciting for gamers, it is likely going to be pricey. AMD has always remained rather lax about the use of their technology, but with AMD FreeSync 2, they are committed to remaining more in control. AMD will not give manufacturers the okay unless their monitors include Low Framerate Compensation. They have also put in certain standards for low latency and for dynamic color displays, which produce double the brightness and color richness of standard sRGB. One of the coolest things about these AMD displays is they automatically switch over to FreeSync as long as it is supported by the game you are playing.

There are announced versions of NVIDIA G-Sync monitors in 4k and ultrawide models that rise as high as 200Hz. These displays offer amazing fluidity which cannot be matched by AMD, even though FreeSync is certainly at the precipice of greatness. Playing a game on one of these models will amaze you because it offers the highest level of brightness, color richness, and crispness of any gaming display you have ever seen.

It is clear these two are in a battle for gamers’ loyalty. For most people, AMD FreeSync products are a more affordable option, but can they measure up if cost is not involved?

AMD FreeSync Vs NVIDIA G-Sync

Here’s the Bottom Line

It is clear that screen tearing, ghosting, and stuttering are the biggest irritations for PC gamers. Playing PC games like Mass Effect can quickly send you over the edge if screen tearing is constantly occurring. Screen tearing takes a beautifully exquisite game and turns it into a boxed mess that does not flow as it should. If you’ve experienced this, you know how annoying it can be. Sometimes, the tearing is so consistent it makes the game unplayable. Many people think it is their graphics card alone that is to blame, but this is not always so.

Both AMD and NVIDIA have the same potential, but it seems NVIDIA, in most cases, holds to a higher standard with manufacturers using their technology. Now that AMD has created FreeSync 2, they may be giving NVIDIA more of a run for their money.

AMD FreeSync is featured in many more gaming displays than G-Sync simply because of availability and price. When manufacturers are not held to stringent certifications, they are able to produce more affordable products. With this freedom comes the price of inconsistency.

If you can afford it, NVIDIA G-Sync is likely going to be your best bet. Although it is not superior in concept, NVIDIA keeping a tight rein on their products means consistency across the board with all manufacturers.

Just make sure to remember that the type of graphics card you have will determine which will work. Neither AMD nor NVIDIA allows their adaptive sync hardware or applications to work with competitors’ graphics cards. There are some reported workarounds for this problem, but they are not widely recommended. In the end, only you can make the choice based on your budget and needs.

Freesync 2 Monitor Vs G Sync Monitor: Information on Similarities published first on https://gaminghelix.wordpress.com

indie bundle cruft deathmatch volume 3: ex box

So normally, I wouldn’t do so many of these in such short order, but I’m moving at the end of the month, and I’m moving to a place where the internet’s gonna be wonky, so I’m trying to store as many games on my hard drives as I can. As a result, I’m going through my “definitely not gonna play these again” games as quickly as possible. So, hey, here’s another one.

LOVERS IN A DANGEROUS SPACETIME is a 2d metroidvania, I guess? Except you pilot a spaceship with a partner. It’s fun enough, but metroidvanias and I don’t get along super good. It looks nice and plays okay, but I feel like it’s meant to be played with multiple people, rather than solo. I played it on Xbox, since I got it free on Xbox Live at some point. NAH.

MAX: THE CURSE OF BROTHERHOOD is a sidescrolling platformer about a boy who wishes his brother away. Kinda like Labyrinth, but, as far as I’ve played, without the brilliance of Henson or Bowie present. This isn’t to say it’s a bad game at all, but it’s not really gripping me. But it is a platformer, and I’m someone who’s been trying to figure out why I don’t like platformers for a long time.

I think I’ve figured it out.

One of my least favorite things in a video game is the forced racing sequence. In these sequences, you mostly have to learn where everything is, memorize it, and repeat. I’m not one for memorization. It’s fundamentally uninteresting to me on a basic level. A good racing game involves some degree of skill; most races in games not meant to be racers rely more on simply memorizing the track.

Most platformers I’ve played don’t involve any kind of interesting or meaningful decision making. Sometimes you’ll get some puzzle solving, which can be enjoyable, but more often than not, platformers are about simply reacting to what’s there, and nothing more than that. See the path, follow the path. The enemies aren’t super intelligent; Goombas march back and forth, and that’s about it. These are games about memorizing the path and timing your button presses perfectly.

In a Sonic game, you might get a few choices, but that’s about it. Played a bit of Mario 3D Land lately and it’s the same thing; at its most difficult, it’s just a game about memorization.

I value a game like DOOM or Halo, where you get to look over the environment, plan your route, and act accordingly, reacting to changes in the situation as they arise. There’s a nice mix of “doing interesting things” at work in these kinds of games.

I value improvisation.

That’s why platformers aren’t very interesting to me.

Oh, and Max has a magic marker that changes the environment. It’s a rule that all platformers have to have A Gimmick, and that’s what Max’s gimmick is. SEE YOU IN ANOTHER LIFE, BROTHER.

SPEEDRUNNERS, because it’s a platformer (a racing platformer, but a platformer nonetheless), definitely can be SENT TO LIMBO.

SUPER DUNGEON BROS: claims, on its store page, that it is a rock-themed dungeon brawler. Basically it’s kinda like Diablo, I guess, except that I wasn’t really having much fun, and I forgot to take a screenshot. I DECIDED TO PASS ON IT.

BONEBONE: RISE OF THE DEATHLORD sure seems like a mobile port to me. You shoot arrows at skellingtons. YAWN.

BRINE seems like one of those Fallout mods you get from someone who offers “realistic nights,” but it’s so dark that you begin to wonder if the person making the claim suffers from severe night blindness. Seriously, the ground is just, like... totally black. It’s some kind of survival game with a 24% positive rating on Steam. I’M STEERING CLEAR.

BROADSWORD: AGE OF CHIVALRY is like, I don’t know, if Civilization were incredibly low-res? I’ve really struggled to enjoy hex-based 4X games. For some reason, Stellaris is my drug of choice, but even games like Civ are hard for me to enjoy. AWAY WITH YOU.

This is what screenshots from BULLET LIFE 2010 look like. Yes, they did misspell “Resume.” Reviews are about half and half positive/negative. I’m surprised there are positive reviews at all, but what do I know? It’s a 3D bullet hell shooter that doesn’t feel super... bullet... hell-y. But I didn’t spend a lot of time with it because the controls were a nightmare. Took way too long to discover that “W” was the interact button and “X” was fire. Oh, and by switching to full screen in the pre-load options menu, I booted up the game. Here I thought I’d be able to edit multiple settings. Silly me. DUMPED.

BULB BOY is an aesthetically wonderful point and click adventure game, which I may come back to some day. The problem is that, well, it’s a point and click adventure game, another one of those genres I really struggle with. If platformers bore me because it’s about reaction time and memorization, point and clicks bore me because they’re about clicking on everything you can until you figure out in what order a bunch of interactive objects are supposed to be placed. It’s a genre that can be brute forced in a way I personally find dissatisfying.

But.

Don’t let this dissuade you from checking out Bulb Boy, a game that has a 91% positive rating on Steam, which, last I checked, is the same as DOOM. So, if you’re into the genre, you’re probably in for a treat. DIFFERENT STROKES FOR DIFFERENT FOLKS.

What did I just say, CAPTAIN MORGANE AND THE GOLDEN TURTLE, about adventure games? Also, I have no idea what’s going on in the graphics here, but this sure doesn’t look like a three year old game. Also, it runs awfully on my GTX 1070, and there’s a review on Steam that makes the same complaint, but for a 1080. NOPE.

I was bummed out that Steam wouldn’t let me take screenshots of CARRIER COMMAND: GAEA MISSION. It’s not a great game from what I’ve played so far, but it’s interesting. Lots of little tweaks that Bohemia Interactive could do to make it play way better than it does. Maps feel huge. It’s neat. Obviously ARMA is their bread and butter, but... this has me intrigued. FINALLY, A GAME THAT STAYS.

Apparently there are earlier Deponia games, so I probably shouldn’t be testing CHAOS ON DEPONIA right now, but I went through the tutorial. Seems like an adventure game, made me chuckle. I’LL KEEP THIS ONE AROUND. FOR NOW.

CITIES XL PLATINUM is a city builder, so you’d think it would stay on my list, but it won’t, and here’s why: city builders come in two varieties. There’s the Extremely Popular SimCity style, where you just kinda build a town and keep things running and that’s that, and then there’s the Less Popular But The Kind I Like games, like Tropico, The Settlers, and Anno. These games take you to different maps where you have specific objectives you need to complete. I find operating within those constraints much more enjoyable than the open-ended sandbox creation aspect of things. That’s just not my thing.

Cities XL Platinum is in the former style, unfortunately, which means I’m... like, I’ll give it a shot, but I’m not super into it. The performance issues I experienced weren’t great either, and Cities Skylines, which is from the Cities in Motion developers, is a much better game in this specific city-building subgenre. Forgot to screenshot. KICK IT TO THE CURB.

CLOUD CHAMBER is an online game that was, as best I can tell, removed from Steam. If you have it, you can... like, click on stuff, but you can’t play it, because apparently it, whatever it was, was an online game. WHAT’S THE POINT?

CONTAGION sounds cool, but I didn’t really enjoy the time I spent with it. A review says “it’s like No More Room in Hell, but costs money, and is worse,” and that seems about right. PASS.

COGNITION: AN ERICA REED THRILLER is a game I took a screenshot of, because I hadn’t been doing it or it hadn’t been working in the past few games. Apparently I ended up in Episode 2 even though I told it to go to Episode One. As you can see, people’s faces look... odd.

It’s a point n’ click where you spend like ten minutes in three different camera angles (one shot of two people talking, two closeups of their faces) where they mostly just talk about things that could’ve been said in far fewer words. It has 199 reviews on Steam, and the reviews are mostly stellar, so... hey, make of it what you will. The genre’s not for me, so I’M BANISHING IT TO OBLIVION.

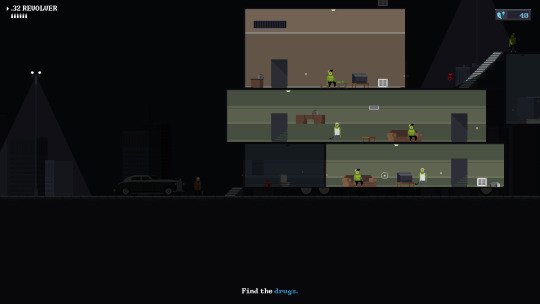

DEADBOLT reminds me of Gunpoint. Like... uncannily so. This is fine, though, because I really liked Gunpoint. Now, you might be going “hey, Doc, I thought you hated sidescrolling games as evidenced by your above remarks about platformers!” Well, kinda. Deadbolt, like Gunpoint, keeps me engaged by letting me plan things. Observe. Strategize. Engage. That’s what makes it such a fun game. Best way I can describe this is if Gunpoint and Hotline Miami had a baby. The game wears its influences on its sleeve. I LOVE IT.

Word of caution: it gave me a black screen on Windows 10. I had to edit the ini, then it worked properly. Solution was located in the Steam Discussions.

BROKEN DREAMS wouldn’t let me take screenshots of it, probably because it was running in flash. It’s a quirky indie puzzle platformer, with an art design that I find distinctly off-putting. NAH.

DRAKENSANG refused to boot up at first, which is a bad sign, but I finally got it to work, and I was rewarded with a nice classic 3D PC RPG. It feels great to play, very Dragon Agey, if you’ve played that, though it’s got less “AAA Bioware” vibe and more of a “mid-00s German RPG” thing going on. Cool stuff. I had to reinstall the DirectX executable that came with the game and add “-windowed” to the launch options to make it work. NEAT STUFF.

I’m genuinely unsure where or when I acquired CLOSE YOUR EYES, but it’s definitely not my thing. It’s one of those games where the default controls are Z/X and Arrow keys. Close Your Eyes claims to be a horror game, but this RPG Maker-esque game did nothing to evoke any response in me as I played. I have no idea if it’s good or bad, but the genre and gameplay did nothing to draw me in. I was bored. AWAY WITH YOU.

Well, that’s 20 games. Of those 20 games, 4 of them stayed on the backlog.

The main thing I’m getting here is that genre’s pretty important to me, especially when it comes to being able to make plans. Adventure games and platformers tend not to emphasize strategizing, which is something that’s important to me when playing games. So games where you don’t do that, where you just react to stuff, that’s not as interesting to me.

Until next time.
