This is my design journal for the courses "Physical prototyping", "Digital prototyping" and "Interactivity", which are part of my journey toward becoming an interaction designer.

Creating a new world

Everything in the universe moves, the Earth around the Sun, the Sun within the galaxy, the galaxy in clusters, all cycling around each other. There is no centre, no place where we can say “Here it all began, here it all stops”.

Dissolving Self 2 Maziar Ghaderi (2014)

DS2 offers the space for the performer to rethink her craft in unexpected ways through the incorporation of interactive technology, which holds the potential to convey her inner thoughts and/or motives. Live movements on stage serve as the kinetic form of creative articulation, and are digitally translated to trigger multimedia in real-time.

3+1

SPATIALITY AND TIME

(Warning: up-in-the-air) I realise more and more how knowledge about and theories of astrophysics, the universe(s) and multiverses influence my work. I come across the same questions in my project as I do when philosophising about life and the universe.

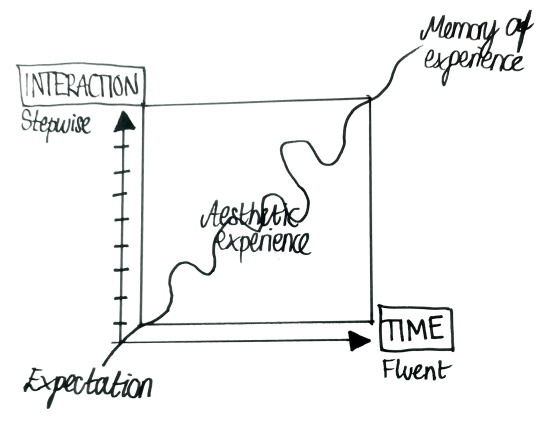

Time is one aspect that keeps popping up in my research. I came across a book called “The Routledge Companion to Research in the Arts”, edited by Michael Biggs and Henrik Karlsson. In chapter 16, they write about time and interaction. They argue that some art practices are embedded in time, which means that time has a fundamental role in their creation and in the experience of them. Dixon writes that ‘theory and criticism in digital arts and performance, as well as artist's own self-reflections, are replete with explanations and analyses of how works "explore", "challenge", "reconfigure", or "disrupt" notions of time’ (2007:522).

Biggs & Karlsson write that interactive music takes place in and through real time rather than over time, which makes it important to investigate its in-time properties. I’m thinking this is where the research on interaction becomes useful. As pointed out by Biggs & Karlsson, interactions in real-time art forms are subject to constant change. And experience emerges from interaction (Lenz, Diefenbach & Hassenzahl, 2013), which means that experiences of interactive artworks result from the dynamic interplay of interactions between the human and the artwork happening in-time.

In the use context of my artwork, the room will be important because the three spatial dimensions are the ones our bodies exist in, and we depend on our bodies to interact with and to experience things. Without the three spatial dimensions, our senses would be useless. What would we sense without a body? We would see, hear, smell, taste, and feel nothing. Even if we could, there would be nothing to sense. Not even light and sound would have anything to travel through, so there wouldn’t be anything for us to experience. Luckily for our bodies as we know them, we live in a world where the three spatial dimensions exist.

In addition to these dimensions, my artwork will just as much exist in the fourth: time. And the way we experience time is linear. Following this, it might be quite obvious that there is a difference between researching art in real time and outside time. In fact, Xenakis (1971) argues that an art piece can also exist outside time, as a snapshot encoded in our memories, which makes it something we can navigate through, jump back and forth in, and sustain at random access. This is what I have been trying to describe when questioning whether the experience of the artwork exists only in the situation of use, or also after it. Biggs & Karlsson (2010) suggest that this raises questions about whether or not real-time art can be represented independent of time, and how the spectrum of space and time can be calculated.

The art and the memory of the experience of it may be accessed retrospectively. But is it possible to relive the experience? If so, will it really be the same experience or will it be a copy of the first one, meaning that two similar experiences exist at different points in time? If not, how does experiencing something in real time differ from looking back at a memory? This makes me think of mindfulness practices and methods in cognitive behaviour therapy. Revisiting previous experiences, even if they were really emotionally strong ones, does not make me feel the same as I did when they happened. I may feel a lot of things, but those feelings are only my reaction to the memory of the experience and not the experience itself. Thinking back on a memory of something always gives me room to add new parameters to it that didn’t exist when the experience actually took place. Therefore, it seems unlikely that we can evoke the same feelings we once had, no matter how much we want to.

There is also this interesting notion of solipsism. It is possible that I am the only person alive in this universe, and that I am the very creator of it as well as everything existing in it. That all the people I interact with are just figments of my imagination. There is no way of proving it wrong. In a human-computer system, nothing would happen and nothing would be created without any input from the human. This makes the human not only the spectator, but also the creator of the art. To relate this to existential curiosities, I envision a world, consisting of the four (if not more) dimensions, being created when someone is interacting with my artwork. This is their world that they create together, in which only they exist and no one else can experience the same thing as they do. Even if someone stands beside them doing the exact same thing at the same time, they will see it slightly differently, react to color and sound differently, perceive symbolic messages differently, etc., meaning that no one can ever enter the world that you created.

In addition to this, there will be more than one spectator of my artwork. This means that another layer of those four dimensions, another world, will be created for each person interacting with it. Those worlds will exist in parallel, perhaps in different places in space and time, but they will exist in the same dimensions. In other words, there will exist a multiverse of universes created from interacting with the artwork. However, the artwork will travel between those and exist in all of them, making it a multiverse-travelling entity.

References

Biggs, M., & Karlsson, H. (Eds.). (2010). The Routledge companion to research in the arts. Routledge. Retrieved from https://www.transart.org/writing/files/2015/02/routledge-companion-to-research-in-the-arts.pdf

Dixon, S. (2007) A History of New Media in Theater, Dance, Performance Art, and Installation. Cambridge, MA: MIT Press.

Lenz, E., Diefenbach, S., & Hassenzahl, M. (2013, September). Exploring relationships between interaction attributes and experience. In Proceedings of the 6th International Conference on Designing Pleasurable Products and Interfaces (pp. 126-135). ACM.

Xenakis, I. (1971) Formalized Music: thoughts and mathematics in music. Bloomington, IN: Indiana University Press.

Aspects of interaction in art

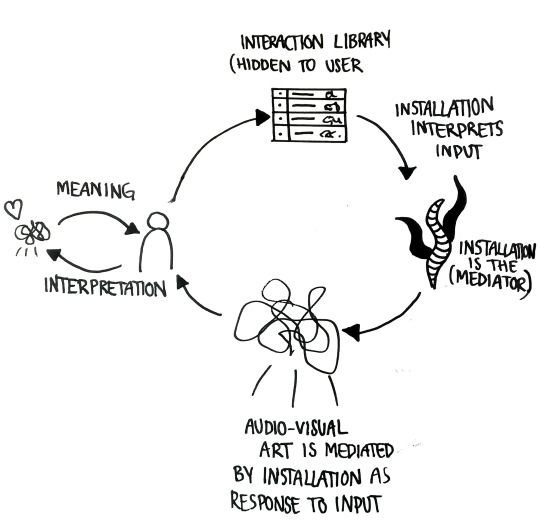

DIALOGUE

The experience of traditional art can be described as active as well as interactive, and consists of the interplay between environment, perception and the viewer’s creation of meaning of the art piece (Muller, Edmonds & Connell, 2006). Interaction consists of a dialogue between two parties, typically the user and a computer, user and user, or computer and computer. With interactive art, this dialogue results in a unique creation of artwork for each person encountering it (Muller et al., 2006).

Muller et al. (2006) argue that this dialogue is not only psychological but also material, in the exchange of input and output between the user and the computer behind the artwork. The dialogue is driven by the user’s curiosity or aim for satisfaction (Edmonds, 2010). In addition, Edmonds, Bilda & Muller (2009) describe other aspects involved in this dialogue: attractors, factors that call for the user’s attention, and sustainers, factors that keep the user interested in interacting with the artwork. This means that an interactive artwork can, without responding to user actions, invite the user to initiate a dialogue and manipulate them into wanting to keep the conversation going.

It has been argued that experience emerges from interaction (Lenz, Diefenbach & Hassenzahl, 2013), and that the meaning of interactive art lies in the interaction itself (Costello, Muller, Amitani & Edmonds, 2005). Because of this, I will conduct my project with an interaction-driven design approach to investigate aesthetic experiences emerging from interaction. This also makes sure that the focus lies on interaction rather than art.

To serve one of my main interests, I have chosen to manifest the dialogue in my artwork with a language of audio-visual art. I want to let the user have an experience of conversing with an art installation perceived as a character without using words as we are used to. I will therefore create a library of audial and visual elements used as a language to interact with the installation. Just as in a conversation with any other human, the user will be left with only memories of the experience and no physical manifestation of the dialogue that took place.

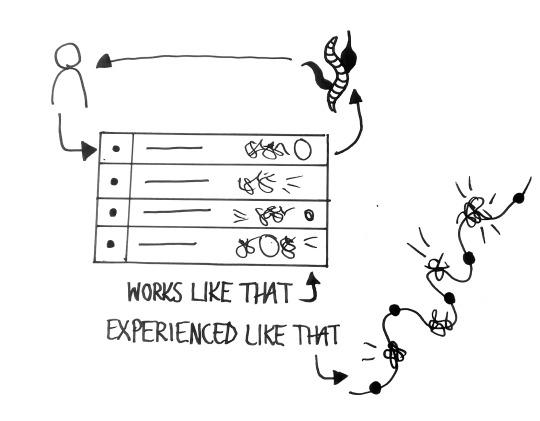

This is how the loop of interactions with my art piece will look. The user makes an input to the software, which is interpreted by the artwork, which in turn responds with an audio-visual output. The output is then interpreted by the human, who creates meaning from it and answers with a new input. Both parties sense and interpret the other’s contribution to the conversation, but obviously in different ways. The artwork responds according to a preprogrammed technical library and has no intention of its own, while the human interprets through bodily senses, emotions and intelligence.

This fact is used to fuel the experience of the artwork. It simplifies the behaviour of the installation, as no machine learning or artificial intelligence is required, while the user is unaware of the technical components behind it and only has their body and mind with which to create meaning from the experience.
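The loop described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the input names, the audio and visual element names, and the idle fallback are hypothetical and not taken from the actual installation. The point is only that the artwork's side of the dialogue can be a plain lookup in a preprogrammed library.

```python
# Hypothetical sketch: all names and values are illustrative assumptions,
# not the vocabulary of the actual installation.
from dataclasses import dataclass


@dataclass(frozen=True)
class Response:
    sound: str   # name of an audio element in the library
    visual: str  # name of a visual element in the library


# The preprogrammed "language": each recognised input maps to a fixed output.
LIBRARY = {
    "approach": Response(sound="rising_tone", visual="brighten"),
    "retreat": Response(sound="falling_tone", visual="dim"),
    "wave": Response(sound="chime", visual="ripple"),
}

# Fallback used when an input is not part of the library.
IDLE = Response(sound="ambient_hum", visual="slow_pulse")


def respond(user_input: str) -> Response:
    """Look up the output for an input; no learning or intention is involved."""
    return LIBRARY.get(user_input, IDLE)


def converse(inputs):
    """One dialogue: every user input is answered by exactly one output."""
    return [respond(i) for i in inputs]
```

Calling `converse(["approach", "wave"])` would return two responses in order. The meaning-making step stays entirely on the human side, which is why it does not appear in the code.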

TIME

Interactions happen over time (Löwgren, 2009). This means that the creation of my artwork is equal to designing the conditions for an aesthetic experience of a human-computer conversation, in which time will be a crucial component. It also means that the experience is dynamic and will change throughout the interaction, as well as from situation to situation and from person to person. There is no universal recipe for what will happen and what people will feel when interacting with it, and that is the very charm of art, as it leaves room for telling us something about how to design conditions for different experiences that are desirable for the context.

As mentioned earlier, experience emerges from interaction, which makes the dramaturgical structure of the dialogue very important. The user will enter the situated interaction with expectations based on, amongst other factors, the audio-visual attractors made by the artwork. Their experience will unfold and change over the course of the whole interaction period and last for an undetermined amount of time after it in the form of memory and emotions.

References

Costello, B., Muller, L., Amitani, S., & Edmonds, E. (2005, November). Understanding the experience of interactive art: Iamascope in Beta_space. In Proceedings of the second Australasian conference on Interactive entertainment (pp. 49-56). Creativity & Cognition Studios Press.

Edmonds, E. (2010). The art of interaction. Digital Creativity, 21(4), 257-264.

Edmonds, E., Bilda, Z., & Muller, L. (2009). Artist, evaluator and curator: three viewpoints on interactive art, evaluation and audience experience. Digital Creativity, 20(3), 141-151.

Lenz, E., Diefenbach, S., & Hassenzahl, M. (2013, September). Exploring relationships between interaction attributes and experience. In Proceedings of the 6th International Conference on Designing Pleasurable Products and Interfaces (pp. 126-135). ACM.

Löwgren, J. (2009). Toward an articulation of interaction esthetics. New Review of Hypermedia and Multimedia, 15(2), 129-146.

Muller, L., Edmonds, E., & Connell, M. (2006). Living laboratories for interactive art. CoDesign, 2(4), 195-207.

Inspiration

ANIMA Nick Verstand 2014

ANIMA (2014) is an immersive installation that investigates the emotional relationship between humans and artificial entities, through the use of movement, texture, light and sound. ANIMA interprets our behaviour and portrays its character by responding to the viewer with an array of audiovisual expressions. Through this behavioural process, the installation creates the illusion of being a sensory autonomous entity, thereby challenging us to reflect upon our position in relation to artificially created intelligence. ANIMA creates the context which allows us to investigate the extent to which an object can feel, acquire agency, or even possess a soul. http://www.nickverstand.com/projects/anima/

„SAY SUPERSTRINGS” dastrio x Ouchhh x Ars Electronica 2018

According to superstring theory, all matter in the world is made up of one thing: vibrating thin strings. At a concert which we visualize the changes in brain wave activities in real-time, we will also transform the Delta, Theta, Alpha, Beta and Gamma brain waves into a real-time concert experience that is wrapped around by generating the data about emotion, focus & attention, some auditory and neural mechanisms with electroencephalogram (EEG). http://cargocollective.com/hellyeee/SAY_SUPERSTRINGS

Box Bot & Dolly 2013

Box explores the synthesis of real and digital space through projection-mapping on moving surfaces. The short film documents a live performance, captured entirely in camera. https://www.youtube.com/watch?v=lX6JcybgDFo&t=12s

AVA_V2 Ouchhh 2017

AVA_V2 is the surface-volume shape coefficient. The main inspiration comes from monumental experimentations which focused on particle physics. http://www.ouchhh.tv/

Very Nervous System David Rokeby 1982-1991

Very Nervous System was the third generation of interactive sound installations which I have created. In these systems, I use video cameras, image processors, computers, synthesizers and a sound system to create a space in which the movements of one's body create sound and/or music. I created the work for many reasons, but perhaps the most pervasive reason was a simple impulse towards contrariness. The computer as a medium is strongly biased. And so my impulse while using the computer was to work solidly against these biases. Because the computer is purely logical, the language of interaction should strive to be intuitive. Because the computer removes you from your body, the body should be strongly engaged. Because the computer's activity takes place on the tiny playing fields of integrated circuits, the encounter with the computer should take place in human-scaled physical space. Because the computer is objective and disinterested, the experience should be intimate. https://vimeo.com/8120954

Interactive audio-visual art

For my bachelor’s thesis project, I will create an interactive audio-visual artwork that explores the interaction aesthetics in the dialogue between the user and interactive art systems. I want to create it in a way that it is perceived as a character and the audio-visual art becomes the language for you and the artwork to communicate to each other. I am interested in what emotional experiences emerge from this, and ultimately, how it could affect our own identity and self-perception.

My initial research questions are:

How do interaction attributes relate to the aesthetic experience of interactive audio-visual art systems?

To what degree is the artwork perceived as alive and how does that affect the interaction with it?

What relationship can emerge from the interaction between the user and the interactive audio-visual art system?

According to Lenz, Diefenbach & Hassenzahl (2013), experience emerges from interaction. We can also see that interest is shifting from intelligent to empathetic products (Bialoskorski, Westerink & van den Broek, 2009). In interactive art, interaction can be viewed as the very material of the artwork. Muller, Edmonds & Connell (2006) argue that studying the user experience of interactive art is fundamental for understanding interaction as a medium. Therefore, understanding the aesthetic experience of interactive art is important for future designing of desired experiences with interactive systems. The aim of my thesis is to open up for discussion around what they could be and broaden the idea of what we could use them for.

The initial plan of how to investigate this is with help from the following frameworks (although these might change during the project):

Aesthetic Interaction (Petersen, Iversen, Krogh & Ludvigsen, 2004). This framework promotes curiosity, engagement and imagination in the exploration of interactive systems and contains three aspects of interaction: socio-cultural history, bodily and intellectual experience, and instrumentality.

Interaction vocabulary (Lenz et al., 2013). Explains the how of interactions and can be used to identify as well as differentiate different types of interactions related to experience.

Aesthetic interaction qualities (Löwgren, 2009). Can be used to explore the temporal nature of aesthetics in interactions through four different dimensions: pliability, rhythm, dramaturgical structure and fluency.

As I will investigate art from an interaction design perspective, I will encounter complications. Research solely in art will not be sufficient, nor will research solely in interaction design. The design process I use will be crucial. The double diamond won’t cover my need for creating, learning from, and adapting my artwork, as I will go back and forth between designing and reframing my research questions throughout the whole design phase. I think that I will have to create my own type of design process, with inspiration drawn from the five phases of Design Thinking (empathise, define, ideate, prototype, test).

References

Bialoskorski, L. S., Westerink, J. H., & van den Broek, E. L. (2009, June). Mood Swings: An affective interactive art system. In International conference on intelligent technologies for interactive entertainment (pp. 181-186). Springer, Berlin, Heidelberg.

Lenz, E., Diefenbach, S., & Hassenzahl, M. (2013, September). Exploring relationships between interaction attributes and experience. In Proceedings of the 6th International Conference on Designing Pleasurable Products and Interfaces (pp. 126-135). ACM.

Löwgren, J. (2009). Toward an articulation of interaction esthetics. New Review of Hypermedia and Multimedia, 15(2), 129-146.

Muller, L., Edmonds, E., & Connell, M. (2006). Living laboratories for interactive art. CoDesign, 2(4), 195-207.

Petersen, M. G., Iversen, O. S., Krogh, P. G., & Ludvigsen, M. (2004, August). Aesthetic interaction: a pragmatist's aesthetics of interactive systems. In Proceedings of the 5th conference on Designing interactive systems: processes, practices, methods, and techniques (pp. 269-276). ACM.

Focus as an opportunity for implicit interaction

Interaction techniques are limited by the technology available, and since implicit interactions are based on perception and interpretation, they depend on devices that both have perceptual capabilities and can act on as well as react to the user’s action in a use context (Schmidt, 2000). According to Schmidt, a computer can do this with a sensor-based approach by itself, through the environment, or with another device that shares information about the context via a network. Ju, Lee & Klemmer (2008) seem to agree with this, as they describe an implicit interaction as the system’s ability to recognize and create a hypothesis of the user’s intended input in a context and to transform the representation of it. They define invisibility in relation to implicit interaction as accomplishing tasks seamlessly, which indicates that a computer must understand the user’s needs in a context and act accordingly without the user explicitly telling it what they want. If that fails, it would appear to increase the risk of misunderstanding between the two.

Schmidt (2000) claims that computerized systems will adapt to the context, which implies that it is important for them to make an appropriate interpretation of it. He proposes a mechanism to identify and model implicit interactions, in which the computer is required to read a number of components of the context, all of which must evaluate to true in order for the computer to execute the interaction. In the notion of context, he includes the way an artefact is used. Ju et al. (2008) also present a framework for describing different types of implicit interactions that consists of two axes: the balance of initiative taken between the system and the user, and the level of attentional demand. This suggests that users’ shift of attention between different aspects of or outside of the use situation, in other words what they do or don’t focus on, could affect the interaction, and that focus therefore may be considered one of the components the computer should take into account to make an accurate interpretation of the context.
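The idea that every context component must evaluate to true before the interaction is executed can be sketched in a few lines. This is a loose illustration rather than Schmidt's own notation: the context keys (`user_present`, `seconds_looking_away`) and the 5-second threshold are hypothetical values chosen for the example.

```python
# Illustrative sketch only: the context keys and thresholds are assumptions.
from typing import Callable, Dict, List

Context = Dict[str, float]
Condition = Callable[[Context], bool]


def should_trigger(context: Context, conditions: List[Condition]) -> bool:
    """Execute the implicit interaction only if every condition holds."""
    return all(condition(context) for condition in conditions)


# Hypothetical conditions, with focus treated as one component of the context.
conditions: List[Condition] = [
    lambda c: c["user_present"] == 1.0,          # someone is at the computer
    lambda c: c["seconds_looking_away"] >= 5.0,  # focus has left the screen
]
```

With these assumed conditions, a context of `{"user_present": 1.0, "seconds_looking_away": 6.0}` would trigger the interaction, while the same context with `2.0` seconds would not.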

In a conversation between two people, it is not only the words spoken that provide information, but also the way they interact with each other (Schmidt, 2000). Considering this, one might find focus to contribute to humans’ abilities to understand messages, as they can selectively pay attention to, or not pay attention to, various aspects of the information provided. Schmidt argues that information is exchanged not only between the two persons in the conversation, but also about the context in which the conversation takes place. This suggests that messages could be interpreted in slightly different ways depending on what aspects of the information the person focused on, and that a computer may affect the user’s experience by influencing the context in which they interact.

Two students at Malmö Högskola conducted a project called the Focus tracker, which identifies and logs the direction in which the user points their face (see Fig 1). The Focus tracker displays a text document on the screen to mimic a workspace for studying on the computer. When the user is sitting in front of the computer to read the text, it tracks distinctive points of their face, such as the tip of the nose and the eyebrows, through the computer camera, and from that knows in what directions the user turns their head. If it recognizes that the user is turning their head away from the screen for a certain amount of time, it interprets this as them having lost focus on the task and could then suggest that they may need a break from their work to regain energy.

Fig 1. Screenshot of the Focus tracker. The left image shows its default mode and the yellow fields in the right image show data of the directions in which the user has turned their head. The more saturated the color is, the more focus has been targeted in that direction.
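The rule the Focus tracker applies, suggesting a break once the user has faced away from the screen for a certain amount of time, can be sketched as follows. This is not the students' implementation: the sample format, the function name and the 5-second default are assumptions, and the face tracking itself is left out and represented only by a boolean per sample.

```python
# Hypothetical sketch: sample format and threshold are assumptions.
def lost_focus(samples, threshold_s=5.0):
    """Return True if the user has been facing away for at least threshold_s.

    samples: chronological list of (timestamp_seconds, facing_screen) pairs,
    where facing_screen is the (assumed) output of a face tracker.
    """
    away_since = None  # start time of the current facing-away streak
    for timestamp, facing_screen in samples:
        if facing_screen:
            away_since = None        # looking back resets the streak
        elif away_since is None:
            away_since = timestamp   # a new streak starts here
    if away_since is None:
        return False
    return samples[-1][0] - away_since >= threshold_s
```

Note that this rule treats every long look away identically, which is exactly the limitation discussed in the text: it cannot tell an unconscious loss of focus from a deliberate pause to reflect.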

Considering Schmidt’s (2000) mechanism of implicit interactions, the user’s focus is, within the Focus tracker, one of the components of the context that the computer identifies to interpret the use situation. Since the user would have to look at the screen to be able to read the text, the Focus tracker would only suggest that they take a break if it recognizes their focus to be targeted at something else. However, the attentional demand of users is complex (Ju et al., 2008). The purpose of the Focus tracker was to help the user make decisions about taking pauses if they appear to lose concentration, and by that increase the efficiency of reading a text. It assumes that its functionality is desirable every time the user is not facing the screen for a certain amount of time. The Focus tracker understands the notion of focus as equivalent to the direction in which the user turns their head, which in this particular situation is treated as an unconscious action. However, it seems like there could be situations where the context changes in a way that makes the interaction no longer helpful or desirable, such as if the user explicitly chooses to turn their head away from the screen to be able to reflect upon what they read in the text. In that case, the same input from the user (turning their head away from the screen) would be intentional instead of unconscious. Considering this, one might find it beneficial for the computer to be able to track the user’s focus in a way that lets it separate intentional actions from unintentional ones, to make better decisions about whether the following interaction is appropriate to execute or not.

Schmidt (2000) describes explicit interactions as those where the user directly indicates to the computer what they expect it to do, for example through a graphical user interface, gesture or speech input. Once the user becomes aware of how the Focus tracker understands and responds to their input, it seems like the interaction could be perceived as one that fits Schmidt’s description, because they could then manipulate the function by choosing where they turn their head to trigger or avoid triggering the interaction. Either consciously or unconsciously, the user themselves is in control of their shift of focus in these examples. As discussed by both Schmidt (2000) and Ju et al. (2008), implicit interactions can interrupt the user, which indicates that their shift of focus can also occur implicitly. Another experiment made by the students at Malmö Högskola was the Notification, which tracks a face in the same way as the Focus tracker but displays a text editor instead of a text to read (see Fig 2). Every 10 seconds, it also shows a notification on the screen that is displayed differently depending on where the user is focusing, to reduce the risk of them missing it.

Fig 2. Screenshot of the Notification. The image to the left shows how the notification displays if the user is facing the screen while the image to the right shows how it displays if the user turns their head to the right.
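How the Notification adapts its display to the user's focus can be illustrated with a small sketch. The source does not describe the actual rule, so this assumes that the notification is placed on the side the user is facing, that head direction is available as a yaw angle (negative for left, positive for right), and that a 15-degree dead zone counts as facing the screen. All of these are hypothetical choices.

```python
# Hypothetical sketch: yaw convention, dead zone and placement rule are assumptions.
def notification_position(yaw_degrees: float, dead_zone: float = 15.0) -> str:
    """Choose where to show the notification from the head's yaw angle.

    Assumed convention: negative yaw = head turned left, positive = right.
    """
    if yaw_degrees > dead_zone:
        return "right"
    if yaw_degrees < -dead_zone:
        return "left"
    return "center"  # the user is roughly facing the screen
```

A timer firing every 10 seconds, as in the sketch described in the text, would then call this function with the latest tracked yaw to decide the placement.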

Implicit interactions are those in which a computer understands the user’s action as an input even though they did not primarily mean to interact with it (Schmidt, 2000). This suggests that there could be layers of interactions happening at once and that the user’s focus could shift between them depending on which one they prioritize the highest at a particular point. The goal of the Notification was to grasp the user’s attention, and if it succeeds it interrupts any other ongoing interactions, such as concentrating on writing the text, and forces the user to focus on the notification. It is questionable whether a computer controlling the user’s focus in such a way is always appropriate. For such an interaction to be desirable, it seems like the computer would need to know that the user prioritizes the implicit interaction higher than their current one, for example, that they prioritize the information provided by the notification higher than not being interrupted while writing the text.

Schmidt (2000) claims that implicit interaction is mostly used in addition to explicit interaction, which is applicable to both the Focus tracker and the Notification, as the user’s primary focus with these sketches is to either read or write a text and not to trigger the notifications. The fact that the Focus tracker, without explicit input, could recognize if the user needed a break from their work indicates that the computer could identify needs in the use situation by tracking their focus and make the experience of an explicit interaction more effective by adding an implicit interaction to it. Ju et al. (2008) argue that it is important not only to understand the significance of explicit and implicit interactions, but also how to design transitions between the two so that users can, for instance, make requests and anticipate actions even if their ability to directly interact with a computer is limited. From this, it seems that tracking the user’s focus could help the computer to identify such requests or anticipations. In the case of the Notification, the computer not only performs an output that the user expects (sending a notification), but also makes additional use of the user’s input (adapting the display of the notification to the user’s focus to reduce the risk of it being overlooked). This suggests that considering the user’s focus as a component for the computer to interpret the use context could lead to opportunities for designing implicit interactions.

References

Ju, W., Lee, B. A., & Klemmer, S. R. (2008). Range: exploring implicit interaction through electronic whiteboard design. In Proceedings of the 2008 ACM conference on Computer supported cooperative work, 17-26. ACM. doi: 10.1145/1460563.1460569

Schmidt, A. (2000). Implicit human computer interaction through context. Personal technologies, 4(2), 191-199. doi:10.1007/BF01324126

Roundup

This module has been the most free for us so far since we got to choose topics ourselves. This has required us to be very open-minded to explore and experiment with different ideas. In some cases the freedom was difficult for us, since the design space got too big and we had trouble narrowing it down as well as creating a clear understanding of what we were actually exploring with our sketches. However, choosing our own topic to work with has made the design process fun, as we got to dig deeper into areas of interaction design that we find interesting. During our workshop we have seen a wide range of different approaches to working with the servo, which may be a result of just that. I am impressed by many things I’ve seen during these past weeks, such as the sketch that let the user play “tug of war” with a robot, a reverse thermometer that let them search for places by picking a temperature, and a sketch where the servo worked as an indicator of how close the user was to finding a hidden object on the screen.

I feel like working with the servo as both input and output created a whole new dimension of possible interactions. It also let us push the boundaries between the physical and the digital, which I think is an exciting way of working. It can sometimes be challenging to work in pairs when you don’t share the same view on things, but for us the Lenz et al. framework really helped us converse around our sketches and create a mutual understanding of them. I am happy with how our sketches turned out and what we learned from them, and also with how my work has differed across the three modules during this course. I feel like I have gained a lot of knowledge about important things in interaction design, such as how our focus can affect our interaction with things, how the balance between security and surprise can relate to good interactive experiences, and how losing control over the interaction can sometimes be exciting as long as we trust the computer. Now, I’m excited to take this knowledge into the next course to make new projects within the field of tangible and embodied interaction.

Deeper analysis of interaction attributes and trust

From this point, I will refer to our sketches as the Ball sizing (where the user changed the size of a ball with the servo), the Shape customization (where the user customized the visual appearance of shapes), the Ball spawning (where the user could change for how long balls would be displayed on the screen) and the Telescope (where the user moved around a physical cone to move around the viewport).

My partner and I have now applied to our sketches some of Lenz et al.’s Interaction Attributes (2013) that we think help us define different experiences of interaction and separate them from each other.

Stepwise/fluent

We had a long discussion where we talked about how the different attributes relate to each other, and that we think some of them can lead to a higher degree of others. For example, a fluent interaction can lead to a more precise interaction. The Ball sizing sketch we made can work as an example of this. The interaction with the ball is very fluent: every tiny movement of the servo within its 180-degree range changes the size of the ball in a direct, flowing way. If we wanted the ball to be 7 cm wide but could only use a stepwise interaction, we could maybe only make it 5 cm or 10 cm wide. That way we lose some level of control over the interaction and can’t make the object precisely the size we want. In the fluent interaction, we could feel that we have more control because the feedback is immediate. Every small step we rotate the servo has an impact on the ball. With the stepwise interaction in the Shape customization, the user needs to rotate the servo for quite some time before anything happens, which brings a short time of uncertainty before the output is visible and there is an “Aha” moment.
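As a rough illustration of the 7 cm example (not the code from the sketch itself), the two mappings could look like this. The function names, the 20 cm maximum width and the 5 cm step are assumptions made up for the example.

```python
def fluent_width_cm(angle_deg, max_width_cm=20.0):
    """Fluent mapping: every degree of rotation changes the width a little."""
    angle = min(max(angle_deg, 0), 180)  # keep the angle inside the servo range
    return max_width_cm * angle / 180


def stepwise_width_cm(angle_deg, step_cm=5.0, max_width_cm=20.0):
    """Stepwise mapping: snap the fluent width to the nearest multiple of step_cm."""
    return round(fluent_width_cm(angle_deg, max_width_cm) / step_cm) * step_cm


# With these assumed numbers, 63 degrees corresponds to 7 cm in the fluent version,
# while the stepwise version can only offer the nearest step.
print(fluent_width_cm(63))    # 7.0
print(stepwise_width_cm(63))  # 5.0
```

In the stepwise version the 7 cm ball is simply not reachable, which is the loss of precision described above.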

I think that which interaction attributes give us more control depends on what needs we have in a particular situation. In my last post, I mentioned a fluent zooming interaction versus a stepwise zooming. A stepwise zoom could be more consistent than a fluent one, because we can always zoom exactly 25%, 50%, 75% or 100% by just pressing a button, for example. This zooming creates a high level of control when we know beforehand how much we want to zoom in, because it is a fast way of getting an exact percentage of zoom. But at other times, for instance when wanting to zoom in on an image in Photoshop, we may prefer a fluent zoom because different images require different amounts of zoom, and a fluent zoom lets us be more precise about exactly where and how much to zoom in on one image. This can also be compared to scroll versus auto scroll: with an auto scroll we have a more stepwise interaction where we indicate that we want to scroll down by initiating that interaction, and so the page automatically jumps to the next section. With an auto scroll (or using the page up/page down keys) we jump between landmarks in one page, while with manual scroll (using the arrow keys), we experience a more fluent interaction that we can stop at any time we like.

I think that shifting attributes in interactions also makes us interact with things in different ways. In contrast to the fluent interaction of the Ball sizing, our Shape customization is stepwise because the shapes shift between a set of 5 different appearances while the user rotates the servo. The changes in shape are obvious demarcations of the steps of the interaction. Since we only reach these steps every 35 degrees the servo is rotated and nothing happens between those landmarks, we noticed that we tend to pause at them. We paused to recognize what had changed, and then to decide if that change was desirable before continuing the interaction to see what would happen next. So, the Shape customization showed that a stepwise output can also make the user interact with the servo in a more stepwise way. With stepwise interactions, we get something like a checkpoint: somewhere to pause and something to hold on to before continuing the interaction. From our sketches, we felt that pausing a fluent interaction breaks the flow, which is not desirable. We can’t pause a fluent interaction in a comfortable way because there is no natural break point in it like there is in stepwise interactions.

Constant/inconstant

When stripping it down to its core, fluent interactions also seem to consist of steps, but much smaller and shorter than those in stepwise interactions. That makes us experience them as a continuous flow instead of distinct steps. In the Shape customization, the user has control over the interaction in the sense that they are responsible for when the shape will change appearance and at what step they want to stop. But they can’t know what the next step will be the first time they use it, because each step means a new visual appearance for the shape that the user has not seen before. In contrast to this, the Ball sizing is constant in its output, which lets the user predict how it will behave because they quickly notice that it always reacts the same way to their input.

What makes us feel like we are in control over the interaction with the Shape customization is that the pattern in which the shapes change is constant: it always goes from a pink square to a purple square, a blue ball, a green square and finally a yellow square. We can imagine that if that pattern were random instead, it would make the interaction more approximate than precise because it would remove the aspect of consistency. We think that an inconstant and approximate interaction would prevent us from feeling like we are in control over it, because then we can never wrap our heads around what will happen next. But after having interacted with the Shape customization for a while, the user starts to learn its pattern and can then predict what will happen. Then it would be experienced as constant, and the kind of control would be the same as with the Ball sizing.
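To make the constant pattern concrete, here is a minimal sketch of a stepwise lookup, assuming the five appearances and the 35-degree steps described above. The names `APPEARANCES`, `STEP_DEG` and `appearance_for` are hypothetical and not taken from the sketch's code.

```python
# The fixed order of appearances described in the text.
APPEARANCES = ["pink square", "purple square", "blue ball", "green square", "yellow square"]
STEP_DEG = 35  # a new appearance is reached every 35 degrees


def appearance_for(angle_deg):
    """Return the appearance for a servo angle; nothing changes between steps."""
    angle = min(max(angle_deg, 0), 180)  # keep the angle inside the servo range
    index = min(int(angle // STEP_DEG), len(APPEARANCES) - 1)
    return APPEARANCES[index]


print(appearance_for(10))   # pink square
print(appearance_for(34))   # pink square (still inside the first step)
print(appearance_for(70))   # blue ball
print(appearance_for(180))  # yellow square
```

Because the lookup depends only on the current angle, the same angle always gives the same appearance, and rotating back returns to an earlier one.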

Another example is our Telescope. In the version where the cone could be rotated by clicking the screen, it is both inconsistent and covered. That makes us feel like we are not in control, because we don’t know what triggers its movement. What position it moves to actually depends on the size of the last ball spawned. It IS consistent in the sense that it always rotates by as many degrees as that ball is big. The difference is that we experience the sketch as being inconsistent, because we probably don’t figure out how it works while interacting with it.

Apparent/covered

Another set of attributes we have talked about is apparent versus covered. We think that as soon as something becomes apparent, we feel like we are in control over it because we understand how it works and can then predict its behaviour. An example is when our classmates tested our Ball spawning and didn’t understand the impact of their input, which made the interaction covered and hard to grasp. That also made them perceive the sketch as inconstant, as it seemed to them like it reacted differently to their input all the time. We think this is because the long-interval balls stay on the page just as long even if the servo is rotated to its minimum. If there had been no such delay and all the balls displayed on the page had reacted the same way to the input, it would have been more understandable and, by that, more apparent.

One more example we talked about regarding the apparent and covered attributes is the amount of information displayed in the Facebook chat compared to the Tinder chat. The Facebook chat is very apparent – we can see when all messages are sent, if the other person is online, if they have seen our message etc. In the Tinder chat, we can only see when the message was sent. The apparent interaction in the Facebook chat gives us more control, which I think we appreciate in that particular context because we typically talk to friends and family on Facebook - people we have a relation to and care about. Though, this is also something that can be used to gain control in social settings by for example not reading a message from someone we are mad at. However, in the Tinder chat we appreciate the fact that we don’t have total control because we are using it to get to know someone new that might be interesting to us and the covered interaction makes the encounter more mysterious and exciting.

The Ball sizing is quite apparent because we get direct feedback on our input, which helps us understand it more quickly. The Shape customization is more covered because the user gets surprised each time the shapes change, and there is no way of knowing how they will look in the next step before having used it at least once and memorized the pattern of the different steps.

One recurring aspect through all of our sketches has been the option for the user to go backwards. In our very first iteration of the Shape customization, the user could only browse through the different customizations once and was not allowed to go back. Human curiosity makes us want to see every possible option, so we rotate the servo to the last step, which is the yellow square. But maybe we liked the blue ball more and then couldn’t go back to that option. We think being able to go back contributes to the feeling of being in control: we know that it’s okay to make mistakes because we are able to correct them.

Trusting the system

I have previously described the interaction between a user and a computer as a dialogue, and I think that just like when we communicate with other people, there needs to be reciprocity between the two parties, a good relationship built on trust, to feel comfortable about the interaction. Do we always want control in interactions with computers or could we also be fine with handing it over? When we lose control, something else has to take it. I think that the user needs to trust the system to hold control for them, otherwise their relation to the system becomes uneasy and intimidating. If the system can prove to them that it understands and interprets their input in a way that makes sense, they are fine with handing over control to the system. This can be applied to the Ball spawning, in which people were uncertain if the system even understood what was going on or if it just lived its own life regardless of their input. However, in the Shape customization for example, the output was a logical answer to the input, which made the users comfortable with the system having partial control over how the shapes would appear on the screen.

Maybe an interaction is comfortable as long as we have control over our input and we understand its impact on the system. When we lose control over what we feel is “our part” of the interaction it can become uncomfortable and make us feel vulnerable, like in our Telescope when the cone would move without the user making an input themselves. What output we receive doesn’t matter in the same way. We can appreciate surprises because they keep the interaction interesting, like the different steps of the Shape customization.

I think that sometimes it could also be comfortable not to have complete control. As I wrote in the previous post, sometimes we appreciate watching a film on TV because we don’t have to bother about deciding what to watch. To apply this to our work we could try to imagine our Shape customization as a photo editor, like Photoshop. In a photo editor, it would be kind of annoying not to be able to customize the shapes exactly the way we want, because we want a high level of control, and freedom, in that context. However, if we imagined it as the TV in the example above, it would be kind of nice not to be in total control over what the content is. Sometimes it’s nice just to put on the TV and watch whatever is on because we would be exhausted by constantly making meaningful choices about everything we do.

What degree of control we wish for may relate to our area of expertise in different contexts. A pro user of Photoshop may want a full level of control because they know how to use the program to get the results they want. But for a beginner, all the options available in Photoshop can be overwhelming because they are not familiar with them. For them, pre-made filters would most likely be something useful and wanted. This can also be related to a project that Clint showed us about a new way of making a color palette called PlayfulPalette by Masha Shugrina (https://www.youtube.com/watch?v=bo5MM0gD6cM&feature=share). The PlayfulPalette lets the user affect all colors of a digital drawing by changing the default color on the palette. In that case, we can make use of the system partly being in control over what colors the image should have, because as Masha herself says, if we were in total control we would have to repaint the whole image rather than just changing one small detail in the palette. To use this, we have to trust the system to make choices for us that are aligned with what we want it to do. We need to know that the computer understands our needs and can behave accordingly. This may have to do with what I’ve written earlier about design making things easier for us. We strive to make the interaction with the design as effective as possible, because if it’s not effective it’s annoying and time consuming. Sometimes, like in this case, the most effective solution is handing some of our control over to the computer so that it can handle the situation for us.


Intimate interactions

Why is control important to us? Many elderly people don’t feel comfortable about interacting with technology because they don’t know how it works and they’re afraid of doing something wrong. Is this because they don’t feel like they are in control over the technology? My partner and I read up a bit on control as a psychological phenomenon and found a website that writes about control in relation to Abraham Maslow’s theory of the Hierarchy of Needs (a model of different levels of fundamental needs for our well-being). On this website, they claim that control becomes more important the farther down we go in the model, that is, the more fundamental the needs are (http://changingminds.org/explanations/needs/control.html). Since Maslow’s needs are always present for us, we think that being in control is important for experiencing pleasant and comfortable interactions.

In our earlier sketches, we have experimented with customizing elements visible in the viewport, in other words, being in control over elements’ visual appearance on a page. We thought that another way of creating a feeling of being in control over digital things on screens could be to determine what elements will be visible in the viewport. With computers, we typically do this by switching between different windows and programs as well as dragging things in and out of the screen. Now, we wanted to explore how the experience of being in control over what’s visible differs when we move the viewport itself rather than change the content within it. Just like we move a camera around to choose what we want to take pictures of, instead of mounting it on the wall and rearranging the objects in front of it, we could move a screen around to choose what to look at instead of manually dragging and dropping content in and out of it.

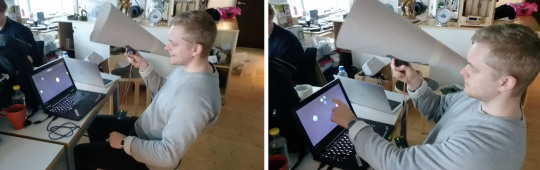

During this module, we have talked about designing environments to affect the experience of a whole room, but we did not really know how to realize that in a good way. Still, we wanted to incorporate that idea in our work somehow, so we made a sketch that absorbs the user in a one-on-one experience on a smaller scale. We did this by attaching a cone made out of paper onto the servo, and at the end of the cone, we mounted a smartphone on which we mirrored the computer screen with our sketch running. We tweaked our sketch with balls popping up at different intervals on the screen by adding a space background, making the balls static on the page and changing their appearance to look more like planets. And so, we had a screen inside a cone mounted on a servo that would work like a telescope to look at different planets in space.

The telescope under construction.

This sketch is a way of moving through a digital space, like we talked about in the last module. It lets the user pick out different elements of a page to look at by exploring their way through it. When the cone is physically moved in its 180-degree panorama motion, all the elements on the page move along with the position of the servo, in the opposite direction. This creates a feeling of having moved the viewport to access various parts of its content rather than moving objects in or out of it.
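The opposite-direction movement can be sketched roughly like this. It is only an illustration under assumed numbers: the function name and the pixel widths of the "space" and the viewport are hypothetical, not values from the Telescope sketch.

```python
def content_offset_px(angle_deg, world_width_px=3000, viewport_width_px=1000):
    """Horizontal offset to apply to all elements for a given servo angle.

    Turning the cone to the right (larger angle) shifts the content to the
    left (negative offset), so it feels like the viewport is what moves.
    """
    angle = min(max(angle_deg, 0), 180)            # keep inside the servo range
    travel = world_width_px - viewport_width_px    # how far the content can slide
    return -travel * angle / 180


print(content_offset_px(0))    # far left of the space, offset is zero
print(content_offset_px(90))   # -1000.0, halfway through the panorama
print(content_offset_px(180))  # -2000.0, far right of the space
```

Since the offset changes a little with every degree, the mapping stays fluent rather than jumping between fixed frames.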

The interaction with this sketch is very intimate. The rest of the world is literally shut out from the interaction and it can only be experienced by one person at a time. To mimic the feeling of actually being in space, we made the interaction very fluent so that with every millimeter the cone is moved, the content moves as well, just like it would if we looked through a real telescope out into real space. If the interaction had been more stepwise, for instance if we could only look at certain predetermined frames of space, it would have removed something organic and alive about it. It would be more like looking at pictures of space rather than moving through it. This could be related to what Jens talked about in his theory lesson about zooming. When we zoom in on something in a fluent way, like when dragging a slider, we have control over exactly what we zoom in to, whilst when we take a shortcut and press a button to zoom in exactly 25% or so, we can lose our grip on what we zoom in on. The same thing goes for this sketch: when we can rotate the telescope just the way we want to, we can quite easily orient ourselves in space because we keep track of where we started out. It would be hard to do that if it was more stepwise, since we can’t really know where we are in relation to where we were before if we just teleport from one place to another.

One thing we found interesting in our earlier sketches, which I wrote about in my previous post, was making the servo randomly snap back at the user, which could make them feel intimidated because they lost control over the interaction. We tried to incorporate that in this sketch as well, but with the output being more covered than in the last experiment. We explored what it would feel like to lose control over where the viewport moves, and made a version that would rotate the servo, and thereby move the cone, if the webpage was clicked with the computer mouse. Since the user does not interact with the computer while using this sketch, this let us do a Wizard of Oz setup where one of us could intrude on the interaction and click on the screen so that the telescope would suddenly move. By removing all control from the user and making the movement of the cone independent of their actions, it could create a feeling of helplessness because there was no way of knowing what triggered the movement.

We also did a test where the user could click the computer mouse themselves. In that case they thought it was exciting, like a pleasant surprise to look at something new. They knew that the telescope would move to another position, and they were in charge of when the movement would occur. That way they were prepared for the surprise and therefore they appreciated it. However, when another person was in control over the movement, they were taken by surprise and the movement didn’t make sense to them, which made the interaction illogical and intimidating rather than exciting. This could be related to the decision we make when we want to watch a movie: do we turn on the TV to watch whatever is on, or manually go to Netflix and browse through the library to choose for ourselves? Sometimes we appreciate watching TV because we don’t have to bother about making decisions about what to watch. When choosing what film to watch on Netflix there is no reason to choose one with a rating under 7 on IMDb, which can become a struggle if we have already browsed through all those films and watched the ones we find interesting. Then we spend a lot of time just browsing instead of watching a movie on the TV that was actually quite good as well.


Having (or lacking) control

My partner and I have talked a bit about what we find interesting in interaction design. We are both fascinated by how changes in a design affect our experience of interacting with things or other people, and by the idea that we can, in a sense, design experiences. I like the idea of creating something that is bigger than just looking at a poster or tapping a button on a smartphone screen, something you walk into that embraces you and engages more of your senses. Bringing interactivity into whole environments rather than one small artefact is very exciting and appealing to me.

So far, we have mostly talked about attributes relating to objects, like color, shape and size. But there are many other interesting qualities that emerge from interacting with the servo and our sketches, like tempo (as in our sketch with the balls popping in and out of the screen), rhythm, resistance, flow, etc. We want to try to do something that embraces us rather than something we look at from a distance, and got the idea that our sketches could be projected on all the walls in a room. How could we make the user “interact with the walls” in a sense, and would that somehow enlarge the experience to something greater? And could we use changes of visual aspects in a room to affect people’s mindset and behaviour there? We often link certain colors to different emotions, which could help make meetings in conference rooms more creative, for example. Then we also started to talk about control. The same way we can customize the desktop on our computer the way we like, we could customize the room around us to make us more focused, calm, creative or whatever. How would it be if everyone walking into a room had the opportunity to customize it to their own liking? And how does the experience of entering a room and just being made to feel something differ from being able to change the setting and create your own mood?

We discussed that there are different levels of control in interactions. While painting something in a program like Photoshop, we have a high level of control because we can determine the exact pixel size, color, pressure etc. of the brush, and of course how it moves on the canvas. But if we use premade filters on a photo we have a lower degree of control: we are in charge of what particular filter to add, but we have to settle for how it looks, or swap it for another filter. As for the servo, we could be in control over the speed of the servo’s rotating movement, but the system could be in control over what happens when it reaches a certain value.

To make an experiment of what it would be like to lose some of our control over the interaction with the servo, we tweaked our code from the sketch where the visual appearance of shapes could be customized by rotating the servo.


https://vimeo.com/240117423

The interaction with our original sketch felt very fluent. After having rotated the servo a couple of times you were familiar with its limits: you knew just about how much you could rotate it before you reached its maximum or minimum value. In that sketch the user was in total control over the servo. But with this tweak, that control was removed and the user would have no idea when they would reach those limits. The servo could start rotating back in the middle of your interaction with it. It can surprise you and maybe even intimidate you, as Axel’s facial expression shows in the video. It was almost like it answered “No, I don’t want to change appearance!!” when you tried to make it look like something else. We got kind of the same feeling from interacting with this as with that game where four people hold a thing in their hand and one of them will randomly get an electric shock: there are mixed feelings of both excitement and fear.

Our next step is to try to disguise the servo somehow, or make it look like something else so that it becomes more interesting to interact with. We could add a wheel, a lever or something else. We should also explore how the servo could be used as output in combination with the input that it has been so far.


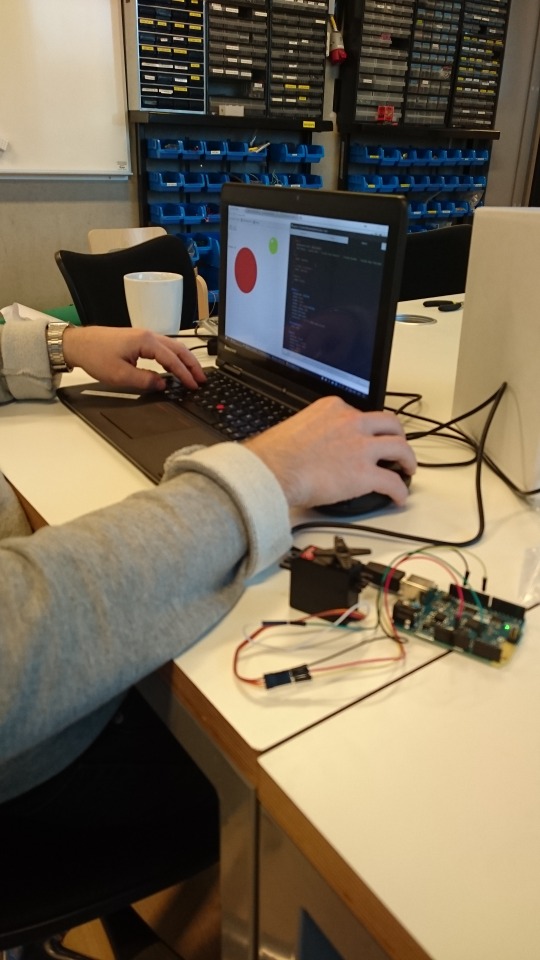

Module 3

Now we have begun the last module of our Interactivity course. This time we will use a technology-driven design approach; the theme for this module is of our choosing, but we have to include a specific material: a servo motor. The servo we are going to use rotates through a 180-degree angle and can both receive and send data, which means that it can be both an input and an output. Our mission is to work with this in an interesting way, to try to look beyond the idea of it being a potentiometer and get away from what it appears to be.

This is a task that crosses the boundary between the digital and the physical. We will work with the servo motor by connecting it to a computer, and the two can communicate by sending values to each other. The interaction between them can look like digital input and physical output (the user can control the servo by sending values from the computer that determine the servo’s position), but it could also be physical input and digital output (the user can control things on the computer by manually rotating the servo and capturing values of its position). This opens up a wider range of possible interactions, which is both interesting and different from what we have done before. It is also a challenge because we need to incorporate more functionality in one sketch to really make use of the material.

Initially, we decided to brainstorm around what we thought we could do with this material. We talked about existing interactions we know of that traverse between analog and digital input and output, and came up with examples like controlling a character in a video game with a remote control, pressing a remote control to increase or decrease the volume on the TV etc. We also discussed what things rotate 180 degrees or make use of that scope, like clocks, metronomes, and record players. To get some inspiration we also asked ourselves where else we can find interactions with a rotating movement, like opening a bottle, changing the volume on a speaker, dimming a light, turning on a stove, painting circular shapes, steering something like a car, cleaning (wiping), cooking (stirring the sauce), screwing or unscrewing a screw etc.

So, what could this thing do? We discussed that it could wave at someone or at something. It could paint something if we mounted a brush on it. It could push something or swipe something. It could move another thing. It could hit something or play an instrument like a gong or chimes. It could rotate something (within the scope of 0-180 degrees, or could it go beyond that?). It could turn something on or off, or it could increase or decrease something gradually. We brainstormed around some ideas of what we could make of all this, and some of the things we came up with were that it could maybe open a box so that it becomes like a treasure chest, rotate a camera so that it works like a burglar alarm around your house, or control the arm of a stuffed animal so that it waves at you when you come home. It could also work like a sound alarm: if something happens, play the chimes (like an analog notification), for example. We came to the conclusion that there are many possibilities.

To get to know the material, we got some example code to try out the servo in practice as both an input and an output. We started off by using the input value from rotating the servo to scale the size of a ball on the page. From this, we came up with the idea of using this to show others how much we approve of something. Then we remembered that Facebook has already done this in the Messenger app, as you can tap and hold the like button to choose how big a like you want to give something.


https://vimeo.com/239523016

While tinkering around with the code, we stumbled upon our first challenge. We noticed that the values we got from physically rotating the servo were not what we expected them to be. When rotating the servo from the far left to the far right, we expected the values to go from 0 to 180, but what we got was more random and could look like 0, 34, 92, 100, 0, 16 and so forth. To be able to make conscious and meaningful interactions, it seemed like constant values would be quite vital. Inconstant values prevented the output from being smooth, because it would jump between values with different margins between them, so that the ball first scaled up slowly and then suddenly much faster. We spent a lot of time troubleshooting this before we understood that there was a small code snippet causing the problem. When we removed that small piece of code it finally behaved like we wanted it to.

We continued on the color-and-shape theme and created a sketch that explored how we can customize visual aspects of digital content with physical input. If no input is given to this sketch, there are just six small white boxes floating around on a page. However, if the user rotates the servo, these boxes start to change shape, color and size. We thought of this as some kind of interactive screensaver, or like a stress ball. Here we come across some interesting questions: how does it feel to customize the visual appearance of digital objects with physical input? And what is the experience of traversing between the physical and digital world in such a way? If it was a stress ball, what aspects of this actually make us calmer? The interaction with the servo actually felt more natural and made us feel closer to the shapes than when changing them with the trackpad. It created a feeling that the interaction itself is central, and not the output of the interaction. Personally, I think this could have to do with its resistance: it takes more effort to rotate the servo than to swipe the trackpad, which made us handle the servo with more care, and that in turn created a feeling of a closer and more personal interaction. One interesting thing with this sketch was that we noticed that different qualities in designs can have different impact on different people. I am very affected by colors, and therefore I wanted to change the shapes according to what colors I found more appealing. But my partner thought that what shapes the elements had was more fundamental to his experience.


https://vimeo.com/239523700

We continued thinking about how we could make use of this interaction and made a sketch where we could control the movement of shapes on the screen. We made one with two pink lines that could be pushed up and down on the sides, which made us think of a pong game where two lines could be controlled by the servo to move up and down and prevent a moving ball from reaching the edge of the screen. However, since that is an already existing game, we decided not to continue working with that specifically.


https://vimeo.com/239523099

The next thing we wanted to try out was not changing the appearance of things, but exploring how changes in the way things are displayed can affect our experience of them. So we made another sketch that displays colorful balls on a page at different intervals. These balls pop up for a limited amount of time and then disappear again. The intervals are determined by how the user rotates the servo.


https://vimeo.com/239523141

If the user rotates the servo to its minimum limit, the balls will quickly pop up, stay on the screen for quite a short amount of time and then disappear just as quickly. If the servo is rotated to its maximum, the balls will fade in, show for a longer time and then slowly fade out. However, the number of balls popping up per minute is the same, which means that in the faster case there will only be one ball visible at a time, whilst in the slower case there will be plenty. It is possible to change the display of the balls to all stages in between these two extremes as well. Since we of course were aware of this when we made the sketch, it made perfect sense to us. But when we tested it on our classmates, they had trouble understanding what their interaction with the servo did, because there is a delay between when the input is made and when the shift starts to show on the screen. When the servo is rotated, all balls spawned from that point on will change. But when changing from maximum to minimum value, all balls with the slow animation are still visible on the screen until their "showtime" is over. Since our classmates just rotated the servo back and forth several times, the change in pace didn't really make sense to them. We noticed that the user needs to interact with the servo once and then wait for the change to show to really understand what's going on.
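The delay can be illustrated with a small simulation. This is only a sketch under assumed numbers (one ball per second, lifetimes between 1 and 10 seconds), and the names `lifetime_s` and `visible_balls` are hypothetical rather than taken from the sketch's code.

```python
def lifetime_s(angle_deg, min_life_s=1.0, max_life_s=10.0):
    """How long a ball stays on screen, decided by the servo angle at spawn time."""
    angle = min(max(angle_deg, 0), 180)
    return min_life_s + (max_life_s - min_life_s) * angle / 180


def visible_balls(spawn_log, now_s):
    """Count balls still on screen; spawn_log holds (spawn_time_s, angle_at_spawn)."""
    return sum(1 for t, angle in spawn_log if t <= now_s < t + lifetime_s(angle))


# One ball per second: servo at maximum for the first 5 s, then turned to minimum.
log = [(t, 180) for t in range(5)] + [(t, 0) for t in range(5, 20)]

print(visible_balls(log, 5.5))   # 6: the five slow balls are all still showing
print(visible_balls(log, 9.5))   # 6: still no visible effect of the new input
print(visible_balls(log, 14.5))  # 1: only now does the screen match the input
```

With these assumed values it takes about ten seconds before the screen reflects the new servo position, which is in line with the observation that the user has to make one input and then wait.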

We noticed the same thing with this sketch as with the first one: different aspects have different impacts on different people's experiences. I thought the version where the balls quickly pop up was more stressful because I never knew where the next one would appear. I thought that there were too many impressions I needed to register per second, and it was hard to keep track of them when everything happened so fast. The slower one made me feel calm because it has a longer delay, which means I have plenty of time to adapt to the change. My partner thought the opposite. He thought the fast one was systematic and easy to follow while the slow one was messy because there are more elements displayed at once. He thought that when they last longer on the screen it’s more like a cluster of balls, and that made it more stressful because we need to focus on many spots at one time.

So far, we have explored how visual aspects of digital objects can be manipulated by physical input. We seem to lean towards more abstract themes like interactive art, and what impact visual aspects of these things have on our interaction with them.

Conclusions of module 2

We have talked a lot about comfort during this module. We feel comfortable being in a space when we are familiar with how it works. This could mean that we can correctly predict the behaviour of a digital space because it has a consistent design. We asked ourselves whether making a digital space feel more like home is the same thing as making it more comfortable to be in. We often interact with things to adjust them until we get a grip on them, i.e. until they feel totally comfortable. This is also where we began to talk about trust: if we can trust a space, it feels comfortable to be in.

But maybe it’s not that simple. Comfort is nice because it makes us feel like we are in control and it promotes security, but we also need aspects of surprise to keep things interesting in the long run. We would not want to live in a city where every street looks the same because that would be boring, and in the same sense we would not want to live in a city that is so predictable that the same things happen every day. Now, we think that what actually makes us dwell in digital spaces could be a good balance between security and surprise.

We have tried to explore and find logical structure in digital spaces, i.e. the combination of consistency and predictability. A logical structure means that the design makes sense to us, which makes us trust it. Maybe the logical structure is what makes us feel secure within a design, and what is displayed within that structure is what should have aspects of surprise and be varied, interesting and fun. If the space is without change and moderate surprises, it can be perceived as dull and inorganic. On the other hand, changes in the structure could also be nice for the user, if the new structure feels better than the old one. Updates of programs can be a struggle at first because we have to get to know the space again, but they could be huge improvements of the design that make us feel even more at home in it.

We have come to the conclusion that embracing one of the qualities we have worked with comes at the cost of another. Too much consistency can make something boring. Customization is not always good because it can replace other qualities like security. Predictability can remove something fun about the design: if it is too predictable, it’s no longer an interesting interaction. We want some aspects of surprise, or variation, to keep it fun at the same time as we should feel secure in the space. We want the street we live on to be consistent so that we know how to orient ourselves in it, but we want different people to walk there or things to happen there so that each day is new and interesting. So maybe it’s not only about keeping something comfortable, because then it would be totally consistent. We also need to explore our boundaries of what is comfortable and try new things in a space to truly want to inhabit it.

At the show n’ tell we got the feedback that it was questionable whether some of our insights really brought something new to the table. I understand what that means and that it is important to go beyond the obvious, but this is of course a subjective matter and I feel quite troubled by that because to us, our insights were new. I think that space is a huge theme that could concern pretty much anything, and three weeks is a short time to try to wrap our heads around that. I feel like it has been hard for me to really get under the surface of things in this module. I have been surprised by some things and learned things I did not know before, but I still don’t feel satisfied or like I’ve really gotten to the core of what dwelling in digital spaces actually means. I wish I had gotten deeper into this by now, but I felt that it was a struggle to come up with appropriate experiments to get acceptable answers to the questions we had. Despite that, I am happy with what we have accomplished, and I hope that I will be able to really cut to the core of things in the next module.

To make use of our bodily assets

We have noticed from our previous sketches that the lack of consistency or predictability in digital spaces can cause false anticipation, discomfort and confusion in our interaction with them. To learn something more constructive about that, we continued by working with how we could make the navigation through digital spaces more effortless.

When I asked my boyfriend what it is about our home that makes it feel like his home, he answered that it is the colors, smells, sounds etc. he experiences there. All the things he described were things we can pick up on with our senses, things that we don’t actively have to pay attention to in order to register and recognize. I thought this could be something to work with and wanted to make a sketch that experiments with how things we can recognize with our senses can be used in navigating through digital spaces.

This sketch consists of two tasks: find the blue square and then find the yellow circle. The information provided in these two tasks is the same, but it is displayed in different ways. In the first task, the information is explained in text, and in the other one it’s represented by shapes and colors.

I asked my boyfriend to test this sketch out. He perceived it as a game and said that he liked the first one with the text more because it felt more professional and fun to him. When I asked him why he thought it was more fun, he said that it was more challenging. He said: “The one with colors and shapes was so easy that it felt more like a task for a three-year-old”. In other words, the second one was more effortless than the first one.

The belief that design should be easy and comfortable is one thing that has kept coming back to us during this module. In the beginning I wrote the post “To feel at home in space” in which I said: “A home is a place I know every corner of, because I’ve explored it over time. The fact that I know the space prevents me from having to think very much to get to a specific location in this place”. We seem to have embraced Krug’s words “Don’t make me think” in our sketches. We want to create effortless interactions in digital spaces to make them comfortable to be in, since we believe that could be one thing that makes us dwell in them.

I did not know what this experiment really was all about until I tested it out on people. Everyone who tested it thought that the one with colors and shapes was easier because they didn’t have to think as much to decide where to click as in the first one. Several people said that they sort of just “knew” where they should click as soon as they saw the different alternatives. It seems like even though the words in the text version were very concrete, we don’t pick up on them as easily as on shapes and colors, because words need to be read and analysed before they make sense. We don’t need to concentrate as hard on colors and shapes to understand their meaning, because their differences are obvious to us, even if we only see them for a short amount of time. We could call it implicit and explicit attention, if those words make sense, where the things we implicitly pick up on seem to be more effortless than the explicit ones.

I had an interesting conversation with my classmates about things in the digital space that imitate things from the physical. The physical world existed long before us and it is a place that we have inhabited and adapted to. The digital world is comparatively new and artificial; it is something that humans have created. But still, we call it a world of its own, in which we have implemented attributes from the physical space so that it feels more familiar to us. But can all aspects of what makes us dwell in a physical space really be applied to the digital? The sketch we made shows us that information we can pick up on with our visual perception helps us navigate through a digital space, but we cannot be sure that this also makes us feel more at home in it.

However, I have stated earlier that I think dwelling in a space may relate to how easily and comfortably we can navigate through it. I think there is something about trust in our interaction with digital things. If a design is consistent, so that my predictions about its behaviour turn out right, I feel like I can trust it to do what I expect it to, and that makes me comfortable about interacting with it. It may relate to what I wrote about our customization sketch, that we want some sort of security in a space to go back to or hold on to, and trust could be one form of security. But at the same time, I can trust things in my best friend’s home because I know their behaviour too, and that does not mean that I feel at home there. So, trust may not be equal to dwelling, but I think it at least plays a role in it.

Hot and cold interactions

Not being interrupted in the flow of interactions may also be one thing that makes us dwell in a space. As discussed in the previous post, we have acknowledged the possibility of applying the notion of focus and different layers of interactions in this theme as well. We already know that by focusing on one thing we push other things into the background. We think that if we have multiple interactions at once, the shift of focus between them should be seamless in order for us to not feel interrupted in our flow.

One situation where we think it is important to have a fluent flow of interactions is while writing a text in a text editor. In those situations, we have a lot of thoughts running through our head that need to be written down, while we also might have to read up on something or look up the meaning or spelling of a word. These different tasks require the use of different tools that we shift our focus between. This shift often means that we have to switch windows from the text editor to the browser etc. in a stepwise interaction, and while we focus on one of these tools, the other ones are hidden, which could easily interrupt the work flow.

Jens told us that our most frequent interactions can be referred to as hot interactions, while there are also warm interactions that have an intermediate level of attention, and cold interactions that are less used. In this case, the text editor would be the hot interaction, the reading would be warm and the dictionary cold. This is where we saw an opportunity for design as we asked ourselves: how can we improve the relationship and intimacy between hot and warm interactions in a workspace?

We made a sketch that we wanted to be perceived as one single tool containing all the functionality needed to write a text. The aim was to create a fluent work flow in the digital space, rather than separate snippets of space to switch between. The three different parts of the single space (text editor, text to read and spell checker) had to be arranged according to how much attention and room they need to be experienced as comfortable interactions. The thought behind the placement was that the top of the screen tends to be where we first pay attention, since we are used to reading information from top to bottom. This resulted in the text editor and the text to read being placed at the top left and right, since they are the hot and warm interactions. The spell checker is less frequently used and needs less height in relation to its width compared to the other two, which made the placement at the bottom appropriate.

In my last post I wrote about having a sense of where things are in the digital space. This sketch opposes the way things are typically placed in it – stacked on top of each other or hidden outside our visual scope whilst not used – and therefore it explores a new way of knowing digital space. This sketch makes all the tools we need visible at all times which prevents us from being forced to search for the different tools we need to work with a text. Instead, they are nicely served as one complete tool with all functionality at hand when needed. We actually found this sketch to be very useful and handy and it worked surprisingly well for preventing interruptions of the work flow. In the previous module I wrote that design seems to be about making things easier for us, and this sketch really strengthens that statement. If a space feels easy and comfortable to interact with, it seems like we are more likely to dwell in it as well, and I think that would be what a successful design is all about.

The human consciousness

In the last module we talked a lot about focus and different layers of interaction. I think that might be applicable in the field of dwelling in digital spaces as well.