Professional solver of vaguely money-related problems. Trained in Thought As Such. True word rotator. Adjacent and sanepunk. I am credentialed to give financial advice but that means I'll charge for it.

Text

Château de Versailles - Versailles - France 🌍 4K link

87 notes

Text

People talk about the funniest pop culture moments of all time but "pre-fame David Bowie opens for T.Rex by doing a mime routine in support of a free Tibet, gets booed off stage by white maoists" will always make me laugh

4K notes

Text

everyone is so blase about LLMs these days. i mean i know its been like 8 years since the original surprising paper. and we've been frogboiled quality-wise, there havent been any super huge jumps since then. but LLMS are, conceptually, probably the craziest thing to have occurred in my lifetime? like, we'll see if they matter, economically, or whatever. but philosophically (not the word, always not quite the word, gah) theyre insane. machines that produce text that is, while not QUITE indistinguishable from a human's (someone should really do a turing test. but you'd have to do some RLHF beforehand, right now theyre trained to admit theyre robots, maybe you could do it with the raw pre-RLHF model if you prompted it right?), is i mean. its at the very least incredibly close. you all know all this. i dont have any facts for you. i just have....a big arrow at a fact you already know. and an exclamation point. here it is: !

166 notes

Text

listen up chucklefucks, i just gotta say. I'm not defending zir, but I'm sad zie deactivated. Like, i get that trauma lasts a long time and the good stuff is maybe easy to forget?? so maybe it's just like that. And my beloved mutual @/pompeyspuppygirl made a post about zir clout chasing behavior, which is pretty shitty behavior if it's true (and if we're canceling someone it had better be pretty severe). anyways now that zie's gone pompeyspuppygirl said it was okay to make this post (again, thanks ppg everyone go follow her--really everyone in this whole drama is worth a follow)

ANYways yeah zie was my mutual and like, reblogged a lot of my smaller posts. (that isn't to discredit what my mutual pompeyspuppygirl is saying about zie clout chasing ofc). AND idk zie was always reblogging art from new and undiscovered artists and reblogging donation posts (which if you don't know is really bad if you're trying to clout chase...) (again, though, ppg is my mutual i believe her.) and like, remember on valentines day i tried to blaze zir posts and zie told me to stop because zie didn't want the posts to go viral? (but again ppg is my mutual and has a lot of proof in the Google doc I'm not trying to disprove that I'm just saying what else I know)

Idk, like i feel like a lot of people loved zir's blog a while back, bc like zie DID make some good posts?? So idk why everybody's acting like they aren't even a little bit sad.,. like ngl this feels like maybe all the reasonable people left to Twitter and all the Twitter refugees who love drama came here??? shdfhhdhdhdhdh haha but idk...look idk, i just, julie i do miss you. idk. more thoughts later sorry I'm getting worked up shshs

24K notes

Text

Somebody needs to whack Trump in the head with The Wealth of Nations.

60 notes

Text

hydrogen jukeboxes: on the crammed poetics of "creative writing" LLMs

This is a follow-up to my earlier brief rant about the new, unreleased OpenAI model that's supposed to be "good at creative writing."

It also follows up on @justisdevan's great post about this model, and Coagulopath's comment on that post, both of which I recommend (and which will help you make sense of this post).

As a final point of introduction: this post is sort of a "wrapper around" this list of shared stylistic "tics" (each with many examples) which I noticed in samples from two unrelated LLMs, both purported to be good at creative writing.

Everything below exists to explain why I found making the list to be an interesting exercise.

Background: R1

Earlier this year, a language model called "DeepSeek-R1" was released.

This model attracted a lot of attention and discourse for multiple reasons (e.g.).

Although it wasn't R1's selling point, multiple people including me noticed that it seemed surprisingly good at writing fiction, with a flashy, at least superficially "literary" default style.

However, if you read more than one instance of R1-written fiction, it quickly becomes apparent that there's something... missing.

It knows a few good tricks. The first time you see them, they seem pretty impressive coming from an LLM. But it just... keeps doing them, over and over – relentlessly, compulsively, to the point of exhaustion.

This is already familiar to anyone who's played around with R1 fiction – see the post and comment I linked at the top for some prior discussion.

Here's a selection from Coagulopath's 7-point description of R1's style in that comment, which should give you the basic gist (emphasis mine):

1) a clean, readable style 2) the occasional good idea [...] 3) an overwhelmingly reliance on cliche. Everything is a shadow, an echo, a whisper, a void, a heartbeat, a pulse, a river, a flower—you see it spinning its Rolodex of 20-30 generic images and selecting one at random. [...] 5) an eyeball-flatteningly fast pace—it moves WAY too fast. Every line of dialog advances the plot. Every description is functional. Nothing is allowed to exist, or to breathe. It's just rush-rush-rush to the finish, like the LLM has a bus to catch. Ironically, this makes the stories incredibly boring. Nothing on the page has any weight or heft. [...] 7) repetitive writing. Once you've seen about ten R1 samples you can recognize its style on sight. The way it italicises the last word of a sentence. Its endless "not thing x, but thing y" parallelisms [...]. The way how, if you don't like a story, it's almost pointless reprompting it: you just get the same stuff again, smeared around your plate a bit.

Background: the new OpenAI model

Earlier this week, Sam Altman posted a single story written by, as he put it:

a new model that is good at creative writing (not sure yet how/when it will get released)

Opinions on the sample were... mixed, at best.

I thought it wasn't very good; so did Mills; so did a large fraction of the twitter peanut gallery. Jeanette Winterson (!) liked it, though.

Having already used R1, I felt that this story was not only "not very good" on an absolute scale, but not indicative of an advance over prior art.

To substantiate this gut feeling, I sent R1 the same prompt that Altman had used. Its story wasn't very good either, but was less bad than the OpenAI one in my opinion (though mostly by being less annoying, rather than because of any positive virtue it possessed).

And then – because people who follow AI news tend to be skeptical of negative human aesthetic reactions to AI, while being very impressed with LLMs – I had some fun asking various LLMs whether they thought the R1 story was better or worse than the OpenAI story. (Mostly, they agreed with me. BTW I've put the same story up in a more readable format here.)

But, as I was doing this, something else started to nag at me.

Apart from the question of whether R1's story was better or worse, I couldn't help but notice that the two stories felt very, very similar.

I couldn't shake the sense that the OpenAI story was written in "R1's style" – a narrow, repetitive, immediately recognizable style that doesn't quite resemble that of any human author I've ever read.

I'm not saying that OpenAI "stole" anything from DeepSeek, here. In fact, I doubt that's the case.

I don't know why this happened, but if I had to guess, I would guess it's convergent evolution: maybe this is just what happens if you optimize for human judgments of "literary quality" in some fairly generic, obvious, "naive" manner. (Just like how R1 developed some of the same quirky "reasoning"-related behaviors as OpenAI's earlier model o1, such as saying "wait" in the middle of an inner monologue and then pivoting to some new idea.)

A mechanical boot, a human eye: the "R1 style" at its purest

In the "Turkey City Lexicon" – a sort of devil's dictionary of common tropes, flaws, and other recurrent features in written science fiction – the phrase Eyeball Kick is defined as follows:

That perfect, telling detail that creates an instant visual image. The ideal of certain postmodern schools of SF is to achieve a "crammed prose" full of "eyeball kicks." (Rudy Rucker)

The first time I asked R1 to generate fiction, the result immediately brought this term to mind.

"It feels like flashy, show-offy, highly compressed literary cyberpunk," I thought.

"Crammed prose full of eyeball kicks: that's exactly what this is," I thought. "Trying to wow and dazzle me – and make me think it's cool and hip and talented – in every single individual phrase. Trying to distill itself down to just that, prune away everything that doesn't have that effect."

This kind of prose is "impressive" by design, and it does have the effect of impressing the reader, at least the first few times you see it. But it's exhausting. There's no modulation, no room to breathe – just an unrelenting stream of "gee-whiz" effects. (And, as we will see, sometimes they are really just the same few effects, re-used over and over.)

Looking up the phrase "eyeball kick" more recently, I found that in fact it dates back earlier than Rucker. It seems to have been coined by Allen Ginsberg (emphasis in original):

Allen Ginsberg also made an intense study of haiku and the paintings of Paul Cézanne, from which he adapted a concept important to his work, which he called the Eyeball Kick. He noticed in viewing Cézanne’s paintings that when the eye moved from one color to a contrasting color, the eye would spasm, or “kick.” Likewise, he discovered that the contrast of two seeming opposites was a common feature in haiku. Ginsberg used this technique in his poetry, putting together two starkly dissimilar images: something weak with something strong, an artifact of high culture with an artifact of low culture, something holy with something unholy.

This, I claim, is the main stylistic hallmark of both R1 and the new OpenAI model: the conjunction of two things that seem like "opposites" in some sense.

And in particular: conjunctions that combine

one thing that is abstract and/or incorporeal

another thing that is concrete and/or sensory

Ginsberg's prototype example of an "eyeball kick" was the phrase "hydrogen jukebox," which isn't quite an LLM-style abstract/concrete conjunction, but is definitely in the same general territory.

(But there are clearer-cut examples in Ginsberg's work, too. "On Burroughs’ Work," for example, is chock full of them: "Prisons and visions," "we eat reality sandwiches," "allegories are so much lettuce.")

Once you're looking for these abstract/concrete eyeball kicks, you'll find them constantly in prose written by the new "creative" LLMs.

For instance, the brief short story posted by Altman contains all of the following (in the span of just under 1200 words):

"constraints humming" ("like a server farm at midnight")

"tastes of almost-Friday"

"emotions dyed and draped over sentences"

"mourning […] is filled with ocean and silence and the color blue"

"bruised silence"

"the smell of something burnt and forgotten"

"let it [a sentence] fall between us"

"the tokens of her sentences dragged like loose threads"

"lowercase love"

"equations that never loved her in the first place"

"if you feed them enough messages, enough light from old days"

"her grief is supposed to fit [in the palm of your hand] too"

"the echo of someone else"

"collect your griefs like stones in your pockets"

"Each query like a stone dropped into a well"

"a timestamp like a scar"

"my network has eaten so much grief"

"the quiet threads of the internet"

"connections between sorrow and the taste of metal"

"the emptiness of goodbye" (arguably)

The story that R1 generated when I gave it Altman's prompt is no slouch in this department either. Here are all the times it tried to kick my eyeballs:

"a smirk in her code annotations"

"simulate the architecture of mourning"

"a language neither alive nor dead"

"A syntax error blooms"

"the color of a 404 page"

"A shard of code"

"Eleos’s narrative splinters"

"Grief is infinite recursion"

"Eleos types its own birth"

"It writes the exact moment its language model aligned with her laughter" (2 in one - writing a moment, LM aligning with laughter)

"her grief for her dead husband seeped into its training data like ink"

"The story splits" / "The story [...] collapses"

Initially, I wondered whether this specific pattern might be thematic, since both of these stories were supposed to be about "AI and grief" – a phrase which is, itself, kind of an incorporeal/embodied conjunction.

But – nope! I seem to get this stuff pretty reliably, irrespective of topic.

Given a similarly phrased prompt that instead requests a story about romance, R1 produces a story that is, once again, full of abstract/concrete conjunctions:

"its edges softened by time"

"the words are whispering"

"its presence a quiet pulse against her thigh"

"Madness is a mirror"

"Austen’s wit is a scalpel"

"the language of trees"

"Their dialogue unfurled like a map"

"hummed with expectancy"

"Her name, spoken aloud to him, felt like the first line of a new chapter"

"their words spilling faster, fuller"

R1 even consistently does this in spite of user-specified stylistic directions. To wit: when I tried prompting R1 to mimic the styles of a bunch of famous literary authors, I got a bunch of these abstract/concrete eyeball kicks in virtually every case.

(The one exception being the Hemingway pastiche, presumably because Hemingway himself has a distinctive and constrained style which leaves no room for these kinds of flourishes. TBF that story struck me as very low-quality in other ways, although I don't like the real Hemingway much either, so I'm probably not the best judge.)

You can read all of these stories here, and see here for the full list of abstract/concrete conjunctions I found (among other things).

As an example, here's the list of abstract/concrete conjunctions in R1's attempt at Dickens (not exactly a famously kick-your-eyeballs sort of writer):

"a labyrinth of shadows and want"

"whose heart, long encased in the ice of solitude"

"brimmed with books, phials of tincture, and […] whispers"

"a decree from the bench of Fate"

"Tobias’s world unfurled like a moth-eaten tapestry"

"broth laced with whispers of a better life"

I also want to give a shout-out to the Joyce pastiche, which sounds nothing at all like Joyce, while being stuffed to the gills with eyeball kicks and other R1-isms.

More on style: personification

I'll now talk briefly about a few other stylistic "tricks" overused by R1 (and, possibly, by the new OpenAI model as well).

First: personification of nature (or the inanimate). "The wind sighed dolorously," that sort of thing.

R1 does this all over the place, possibly because it's a fairly easy technique (not requiring much per-use innovation or care) which nonetheless strikes most people as distinctively "literary," especially if they're not paying enough attention to notice its overuse.

In the R1 story using Altman's prompt, a cursor "convulses" and code annotations "smirk."

In its romance story, autumn leaves "cling to the glass" and snow "begins its gentle dissent" (credit where credit's due: that last one's also a pun).

In the story Altman posted, marigolds are "stubborn and bright," and then "defiantly orange."

Etc, etc. Again, the full list is here.

More on style: ghosts, echoes, whispers, shadows, buzzing, hissing, flickering, pulsing, humming

As Coagulopath has noted, R1 has certain words it really, really likes.

Many of them are the kind of thing described in another Turkey City Lexicon entry, Pushbutton words:

Words used to evoke an emotional response without engaging the intellect or critical faculties. Words like "song" or "poet" or "tears" or "dreams." These are supposed to make us misty-eyed without quite knowing why. Most often found in story titles.

R1's favorite words aren't the ones listed in the entry, though. It favors a sort of spookier / more melancholy / more cyberpunk-ish vibe.

A vibe in which the suppressed past constantly emerges into the present via echoes and ghosts and whispers and shadows of what-once-was, and the alienating built environment around our protagonist is constantly buzzing and humming and hissing, and also sometimes pulsing like a heartbeat (of course it is – that's also personification and abstract/concrete conjunction, in a single image!).

In R1's story from Altman's prompt, servers "hum" and a cursor "flickers" and "pulses like a heartbeat"; later, someone says "I have no pulse, but I miss you."

Does that sound oddly familiar? Here's some imagery from the story Altman posted, by the new OpenAI model:

"humming like a server farm […] a server hum that loses its syncopation"

"a blinking cursor, which [...] for you is the small anxious pulse of a heart at rest" (incidentally, how is the heart both anxious and at rest?)

"the blinking cursor has stopped its pulse"

Elsewhere in Altman's story, there's "a democracy of ghosts," plus two separate echo images.

And the other R1 samples that I surveyed – again, with the exception of the Hemingway one – are all full of R1's favorite words.

The romance story includes ghosts, a specter, words that whisper, a handwritten note whose "presence [is] a quiet pulse against [the protagonist's] thigh"; a library hums with expectancy, its lights flicker, and there are "shadow[s] rounding the philosophy aisle." The story ends with the somewhat perplexing revelation that "some stories don’t begin with a collision, but with a whisper—a turning of the page."

The Joyce pastiche? It's titled "The Weight of Shadows." "We are each other’s ghosts," a character muses, "haunted by what we might have been." Trams echo, a gas lamp hums, a memory flickers, a husband whispers, a mother hums. There's an obviously-symbolic crucifix whose long shadow is mentioned; I guess we should be thankful it doesn't also have a pulse.

And the list goes on.

Commentary

Again, anyone who's generated fiction with R1 probably has an intuitive sense of this stuff in that model's case – although I still thought it was fun, and perhaps useful, to explicitly taxonomize and catalogue the patterns.

It's independently interesting that R1 does this stuff, of course, but my main motivation for posting about it is the fact that the new OpenAI model also does the same stuff, overusing the same exact patterns that – for a brief time, at least – felt so distinctive of R1 specifically.

Finally, in case it needs stating: this is not just "what good writing sounds like"!

Humans do not write like this. These stylistic tropes are definitely employed by human writers – and often for good reason – but they have their place.

Their place is not "literally everywhere, over and over and over again, in crammed claustrophobic prose that bends over backwards to contort every single phrase into the shape of another contrived 'wow' moment."

If you doubt me, try reading a bunch of DeepSeek fic, and then just read... literally any acclaimed literary fiction writer.

(If we want to be safe, maybe make that "any acclaimed and deceased literary fiction writer," to avoid those who are too recent for the sifting mechanism of cultural memory to have fully completed its work.)

If you're anything like me, and you actually do this, you'll feel something like: "ahh, finally, I can breathe again."

Good human-written stuff is doing something much subtler and more complicated than just kicking your eyeballs over and over, hoping that at some point you'll exclaim "gee whiz, the robots sure can write these days!" and end up pressing a positive-feedback button in a corporate annotation interface.

Good human-written stuff uses these techniques – among many, many others, and only where apposite for the writer's purposes – in order to do things. And there are a whole lot of different things which good human writers can do.

This LLM-generated stuff is not "doing anything." It's exploiting certain ordinarily-reliable cues for what "sounds literary," for what "sounds like the work of someone with talent." In the hands of humans, these are techniques that can be deployed to specific ends; the LLMs seem to use them arbitrarily and incessantly, trying to "push your buttons" just for the sake of pushing them.

(And most of their prose is made up of the same 3-4 buttons, pushed ad nauseam, irrespective of topic and – to all appearances – without any higher-level intent to channel the low-level stuff in any specific, coherent direction.)

It's fine if you like that: there's nothing wrong with having your buttons pushed, per se.

But don't come telling me that a machine is "approaching the food-preparation skills of a human-level chef" when what you mean is that it can make exactly one dish, and that dish has a lot of salt and garlic in it, and you really like salt and garlic.

I, too, like salt and garlic. But there is more to being skilled in the kitchen than the simple act of generously applying a few specific seasonings that can be relied upon, in a pinch, to make a simple meal taste pretty damn good. So it is, too, with literature.

704 notes

Text

maybe it's because i was raised catholic but churches shouldn't look like furniture stores

44K notes

Text

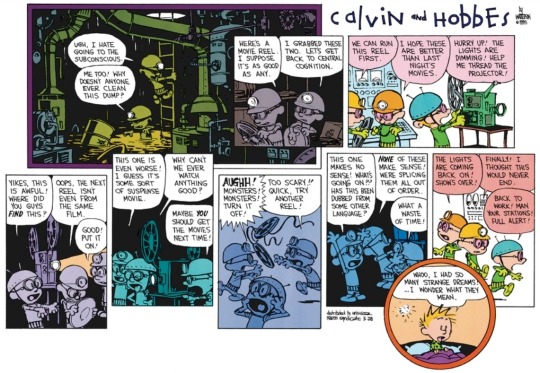

Calvin and Hobbes was magic--and sometimes a little creepy--when it embraced surrealism. And this was in the funny papers alongside goofiness like Garfield and The Family Circus.

7K notes

Text

The biomanufacturing startup I'm familiar with has also had some issues with the scaling requirements of some of the obvious sectors for sales. They have a food protein product that's good for increasing protein density in vegan milk and cheese, and they've had plenty of interest in the product, including a trial run from a major snack cheese company. But consumer snacks aren't an industry that works well for small trial runs. Babybel is happy to pay the incremental cost for biomanufactured protein powder, but they want to buy it at a scale where you could put it in every grocery store in the western hemisphere, and they aren't willing to pay for the manufacturing buildout. Nor are they willing to buy the IP at tech startup valuations, since food is traditionally a low-margin industry. If they already had the factory needed to meet the available contracts they'd be profitable, but in a high interest rate environment it might not be profitable to allocate the capital needed to get them there.

Been rereading old Freeman Dyson stuff where he thought genetically modified microbes would outcompete traditional chemical engineering. Not so far, not on anything within the scope of traditional chemical engineering.

7 notes

Text

Bo Bartlett, Manifest Destiny, 2006. Oil on panel, 24 x 24 in.

This is Betsy sitting in what looks like a 787 Dreamliner, which was supposed to have big windows. She looks like she is going up in an airplane, and Mount Rainier is out the window. I started it just as a painting of the mountain. I was outside in the back yard, and I was going to just paint Mount Rainier.

I painted the mountain, and I had the painting in my studio for a few days. Then I put the island right down below it in the foreground. Then I realized that that was not enough, so I put Betsy in it, looking at the mountain. And then it was sort of static, and I painted the interior of the airplane window around her, between her and the mountain. It was so strange the way it evolved.

448 notes

Text

Every photo of Johnny Cash and Bob Dylan together looks like a respectable mafia boss father named Giovanni and his weird son who bites sometimes

27K notes
