
Final Performance


My final instrument was a generative composition that can be controlled with light.

The instrument was made with found objects, and I attempted to create a mystical or alien aesthetic. I used a green flood light wired to a dimmer switch in order to control the instrument for this performance.

The composition is a large state machine created with Pure Data. State transitions are based on time, light level, or a combination of both. The concept behind the composition was to depict another world, let the audience become comfortable in this world, and then let the world consume itself. My external light represents the sole light source that exists in this alternate dimension, so when it is left off, the inhabitants can no longer survive.
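The actual state machine lives in the Pure Data patch, but the transition logic described above can be sketched in C++. This is only an illustration under assumptions: the `Transition` and `LightStateMachine` names, the integer state ids, and the normalised 0 to 1 light range are all hypothetical and not taken from the patch.

```cpp
#include <utility>
#include <vector>

// Hypothetical transition rule: fires when the machine has spent at least
// minSeconds in `from` AND the light level lies within [minLight, maxLight].
// Use minSeconds = 0 for a purely light-based rule, or the full light range
// for a purely time-based rule.
struct Transition {
    int from;
    int to;
    double minSeconds;
    double minLight;
    double maxLight;
};

class LightStateMachine {
public:
    LightStateMachine(int initialState, std::vector<Transition> transitions)
        : state_(initialState),
          secondsInState_(0.0),
          transitions_(std::move(transitions)) {}

    // Advances time by dtSeconds, then takes the first matching transition.
    // `light` is assumed to be normalised to the range 0..1.
    int update(double dtSeconds, double light) {
        secondsInState_ += dtSeconds;
        for (const Transition& t : transitions_) {
            if (t.from == state_ && secondsInState_ >= t.minSeconds &&
                light >= t.minLight && light <= t.maxLight) {
                state_ = t.to;
                secondsInState_ = 0.0;
                break;
            }
        }
        return state_;
    }

    int state() const { return state_; }

private:
    int state_;
    double secondsInState_;
    std::vector<Transition> transitions_;
};
```

With a table of rules like this, a "day" cycle is just a loop of transitions, and the ending chain can be entered by a rule that only fires when the light has stayed low for long enough.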

Most of the states in the composition can be traversed in a cycle, which represents the passing of days. Processed samples and various synthesis methods are used to create different moods for different states.

The composition also has a chain of starting states and a chain of ending states. The purpose of these is to really try to tell a story with the composition.

The patch is compiled to C++ code using Heavy Audio Tools, which I discussed in my last blog post. I found that the patch would not run smoothly using libPD. Although the compilation time became annoyingly long, it was very nice to be able to run the patch on the Bela with good quality and low latency. I also found that I needed to increase the Bela block size to 64 in order to avoid clicks and pops in my audio.

Both subpatches and abstractions were extremely important to making the patch easy to extend. One abstraction in particular, which produces a sort of wet percussive sound, can be heard throughout the entire composition; the sounds come from multiple instances of it. This abstraction makes heavy use of the drunk object to create sounds that meander around stereo space, pausing, changing rate, and changing tone. The state machine routes messages to these instances to turn individual ones on and off or to control their parameters. The most important parameter is probably the rate of the sound.

I enjoyed exploring how a single parameter could be used to control a relatively complex system. I feel that I could go even deeper with the composition, and create many more different kinds of sonic events. I also wanted to experiment with a progression that occurs over a number of light cycles, rather than having the cycle be similar every time.

Going beyond a single parameter, I think it would be interesting to try to extend an instrument like this out into a space with various controls spread around a larger area. Generative compositions have the potential to be explored by the audience rather than simply performed.


Using Heavy Audio Tools with Bela

After spending a great deal of effort trying to get good results with STK, I realized it was taking me too long to get a good sounding system with just C++ code. Performance was also a huge issue with using STK and I was not getting good results with sensor input. To be honest, a lot of this could be due to the fact that my code sucked. I don’t have a lot of experience with low level audio processing code.

I needed a way to use pd, but I wanted the option of using some custom C++ code.

The solution is Heavy Audio Tools

Heavy is an online compiler that will take your vanilla pd project and turn it into highly optimized C code which can then run on the Bela. However, Heavy only supports a subset of vanilla pd objects. They also provide a library of useful pd abstractions that can be used with heavy. You can also use abstractions that you and others create, but I don’t believe externals are supported.

Heavy also allows you to create hooks into your pd patches using special attributes on your objects. This means you can control parameters of your patch through any programming language that heavy supports.

Since this includes C and C++, you can run code compiled with heavy on the Bela.

Normally this would require some tinkering, but the Bela people provide a really easy way to get everything running. Check out the “Heavy” section of this page for details.

One caveat is that both Heavy and Bela are being updated so frequently that they are occasionally incompatible. If this is the case, you should check out the ```dev-heavy``` branch of the Bela repo and try using the ```build_pd_heavy.sh``` script there. It is often more up to date than the one on the master branch.

If all else fails you can always download the C code that heavy generates, upload it to the Bela IDE, and try to get it working manually.

In my experience, Heavy was a much better way to run pd patches on the Bela than libPD.

It was also far easier than using C++ and STK.

Some advantages I noticed:

You can still use custom C or C++ code to control your patches.

Heavy provides special attributes that generate an api for parameters to your patch and events created by your patch. I believe you could even interface between multiple heavy pd patches using C code.

It runs extremely fast

I noticed with libPD and even some C++ code that my sensors didn’t seem very responsive. When I tried compiling with heavy, the photoresistor I was using really felt like a great way to interact with the patch.

You can use the same patch on an impressive range of other platforms

In addition to compiling to C and C++, heavy gives you the option of creating VSTs, javascript code, and Unity3D plugins.


Using STK on Bela

When it was time to do some audio processing based on the output of my simulation, I realized I did not want to code up a lot of the basic DSP stuff that I wanted to do. I decided to try to use STK (the synthesis toolkit) on the Bela.

This was kind of a hassle to get up and running, but once everything was working it made my life a lot easier.

The steps I took to get STK working on the Bela are as follows:

1. Download STK and upload the tar file to the Bela through the Bela IDE.

2. The Bela IDE gives you access to a unix terminal at the bottom. I used this to compile STK on the Bela. Start by navigating to your project folder in the terminal.

3. Use the command ‘tar -zxvf stk-4.5.1.tar.gz’ on the tar file you just uploaded (ensure stk-4.5.1.tar.gz is the name of the file). This will create a folder in your project directory containing everything that has to do with STK.

4. Go into that folder and run the command ‘./configure’

5. Navigate into the src folder and run ‘make’

6. At this stage, I got an error related to the file RtAudio.cpp. The file calls a function snd_pcm_writei() with two arguments. Apparently the compiler is expecting 3 arguments. To fix the error you can simply add the following line before the function call:

int type = 0;

Finally add ‘&type’ as the last parameter to snd_pcm_writei()

7. If the make is successful, a file called libstk.a will be created

8. Create a directory in your project called lib and move libstk.a to that directory

9. Go to your project settings in the Bela IDE and add the following to make parameters:

CPPFLAGS=-I/root/Bela/projects/yourprojectdirectory/stk-4.5.1/include;LDFLAGS=-L/root/Bela/projects/yourprojectdirectory/lib;LDLIBS=-lstk;

10. That should allow you to include any classes you want to use from STK in your Bela project.


Hearing the simulation

Dealing with data and signals in the same loop

This week, the first thing I need to get done is translating the movement of the agents in my simulation into the audio world. This has been trickier than I originally anticipated because of the difference between the rate of signal processing and the desired rate of my simulation.

The Bela processes audio at a sample rate of 44100 Hz. As I understand it, this is the number of audio frames processed every second. Bela’s render function processes a block of audio frames each time it is called.

Running a simulation at this rate would be extremely resource intensive and also pretty pointless. The simulation really only needs to be updated about 25 to 30 times per second in order to run smoothly.

To run the simulation at a slower fixed rate we can keep track of the number of frames that have elapsed since the simulation last ran using context->audioFramesElapsed.

With 44100 frames per second, framesElapsedSinceLastUpdate/44100 gives the time in seconds since the last tick of the simulation, where framesElapsedSinceLastUpdate is the number of frames counted since that tick. If this number is greater than, say, 0.04 (1/25 of a second), then the simulation should be updated and framesElapsedSinceLastUpdate should be reset.
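Here is a minimal sketch of that frame-counting idea, written as a plain C++ helper so it can be tried outside of Bela. The `SimulationClock` class and its method names are hypothetical; on the Bela, the value passed in would be context->audioFramesElapsed, read once per call to render.

```cpp
#include <cstdint>

// Hypothetical helper that decides when the simulation should tick,
// given the running count of audio frames.
class SimulationClock {
public:
    SimulationClock(double sampleRate, double updatesPerSecond)
        : framesPerUpdate_(
              static_cast<std::uint64_t>(sampleRate / updatesPerSecond)),
          lastUpdateFrame_(0) {}

    // Returns true, and remembers the current frame, once at least one
    // simulation interval has elapsed since the previous update.
    bool shouldUpdate(std::uint64_t audioFramesElapsed) {
        if (audioFramesElapsed - lastUpdateFrame_ >= framesPerUpdate_) {
            lastUpdateFrame_ = audioFramesElapsed;
            return true;
        }
        return false;
    }

    std::uint64_t framesPerUpdate() const { return framesPerUpdate_; }

private:
    std::uint64_t framesPerUpdate_;  // e.g. 44100 / 25 = 1764 frames
    std::uint64_t lastUpdateFrame_;
};
```

Comparing frame counts avoids a floating point division on every block. Because the check only happens once per block, the real update rate ends up slightly lower than the target unless the interval is a multiple of the block size.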

Making sounds based on simulation updates

So the simulation is running at a proper rate, and I want to be able to play an audio sample every time an agent takes a step (i.e. every time the agent travels a certain fixed distance).

However, the simulation is only updated 25 times per second, and every second there are 44100 frames in which we can begin playing a sample. If we only play samples when the simulation gets updated, then that is not a very accurate representation of what is actually going on in the simulation.

To deal with this, the simulation must make a prediction based on the agent’s current velocity, acceleration, and distance traveled since the last step.

When the simulation is updated, it should find all the agents that are predicted to complete a step before the next update. It also predicts the time between the simulation update and the step, as well as the position of the agent when the step is complete.

This information goes back to the audio processing code, which waits until the step should actually happen to begin playing the appropriate sample for the step.
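The prediction can be sketched with basic kinematics. This is a rough version under assumptions, not the code from my project: it treats the agent as moving in a straight line with constant acceleration until the next update, assumes the speed is not negative, and the function names are made up for illustration. It solves d = v·t + ½·a·t² for the first positive t.

```cpp
#include <cmath>

// Time in seconds until the agent finishes its current step, or a negative
// value if the step will not be completed before the next simulation update.
// distanceRemaining = step length minus distance travelled since last step.
// speed is assumed to be >= 0; acceleration is along the direction of travel.
double timeUntilStep(double distanceRemaining, double speed,
                     double acceleration, double updateInterval) {
    if (distanceRemaining <= 0.0) return 0.0;  // step is already due
    double disc = speed * speed + 2.0 * acceleration * distanceRemaining;
    if (disc < 0.0) return -1.0;   // agent decelerates to a stop first
    double denom = speed + std::sqrt(disc);
    if (denom <= 0.0) return -1.0; // agent is not moving forward at all
    // Equivalent to (-v + sqrt(disc)) / a, but also valid when a == 0.
    double t = 2.0 * distanceRemaining / denom;
    return (t < updateInterval) ? t : -1.0;
}

// Converts a delay in seconds to an audio frame offset (nearest frame).
long framesUntilStep(double seconds, double sampleRate) {
    return std::lround(seconds * sampleRate);
}
```

The audio code can store the frame offset and start the sample when its own frame counter reaches it, so steps land with sample accuracy even though the simulation only ticks 25 times per second.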


Finishing on time

When taking on a large project like the one I’m doing this semester, I think it’s helpful to break it down into smaller bits that you can actually start working on.

Doing this can also help you get an idea of how much you need to do every week in order to be finished by your deadline.

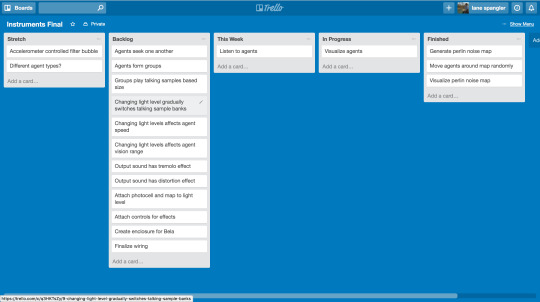

Trello is a pretty nice tool that can help you keep track of all this. Here’s what I’m doing:

I break my project down as much as I can and put individual features/tasks into a backlog list.

Every week, I pick items from the backlog and move them into the list for this week. Dividing the number of items in my backlog by the number of weeks until my deadline gives me the number of items I need to do every week. If it’s too much to do in a week, then I know I need to scale down my project in order to get it finished.

When I’m working on something I put it in the In Progress list just so when I open up the board I remember where I left off. When I finish something it goes into the Finished list because that just feels good to do.

This is essentially the same thing that many large teams do to keep track of projects, but as your projects grow larger you might find that it helps to do this just for yourself.


Prototype Week 1

As I began working on the prototype that I described in my last post, I realized that I was taking on a large project and needed to do some planning.

I decided to write my simulation in C++ because I know that I will be able to get it to run on the Bela.

I have started using this repository for my code: https://github.com/lane-s/musi4535final

I also wanted to be able to visualize the simulation on my computer. To do this, I could wrap the entire simulation in a class which can then be included by code that runs on the Bela to make sounds and code that runs on my computer to do visualization. Note that the visualization will not be a part of the final instrument, it is simply a development tool.

Another option is to make the simulation class part of an external for PureData. Then on the Bela I can simply use my external to map the simulation to audio. Another advantage of this option is that I may be able to simultaneously visualize and hear the simulation when it is running on my computer.

I’m going to talk this over with a few people before I decide which option to go with.

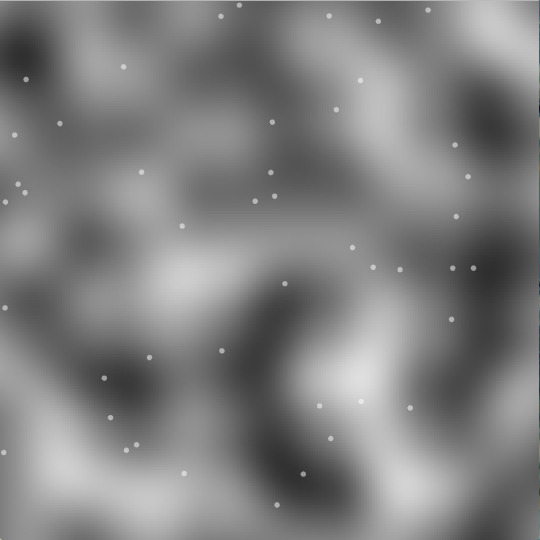

Here is a screenshot from my first visualization of the simulation:

The white dots are agents which move around randomly. They move at varying speeds, stop for varying lengths of time, and turn at random angles.


Multi-Agent System Prototype

To get started on this project I need to build a functional prototype so I can start to see how systems like this can sound.

I will first generate a perlin noise map. This will be the environment for the simulation.

The light level of the world will be controlled by a photoresistor connected to the Bela.

Agents will walk about randomly until they see another agent. They have a certain range of vision determined by their movement direction and the light level of the world. The agent makes a sound when moving based on the height of the map at their current position and their movement speed.

If an agent sees another agent, they will seek that agent out until they are touching. Once two agents are touching, they begin making whispering or quiet talking sounds.

There should be 3 banks of talking samples: Normal, worried, and hysterical.

At the full light level, only normal samples play. At the lowest light level, only hysterical samples play. Halfway in between, only worried samples play. In between each level there is a chance of playing two different sample types proportional to the light level.
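One way this bank selection could work is a linear crossfade between neighbouring banks. The sketch below is only a guess at the mapping: it assumes the light level is normalised to 0..1, that the blend between levels is linear, and all of the names are hypothetical.

```cpp
#include <algorithm>

enum class Bank { Normal, Worried, Hysterical };

// Probability of picking each bank; the three values always sum to 1.
struct BankWeights {
    double normal;
    double worried;
    double hysterical;
};

// light = 1 gives only normal, 0.5 only worried, 0 only hysterical.
// In between, the two neighbouring banks are mixed linearly.
BankWeights bankWeights(double light) {
    light = std::min(1.0, std::max(0.0, light));
    if (light >= 0.5) {
        double n = (light - 0.5) * 2.0;
        return {n, 1.0 - n, 0.0};
    }
    double w = light * 2.0;
    return {0.0, w, 1.0 - w};
}

// r is a uniform random number in [0, 1) supplied by the caller.
Bank chooseBank(double light, double r) {
    BankWeights w = bankWeights(light);
    if (r < w.normal) return Bank::Normal;
    if (r < w.normal + w.worried) return Bank::Worried;
    return Bank::Hysterical;
}
```

Passing the random number in as an argument keeps the function deterministic, which makes it easier to check the mapping before wiring it up to a real random source.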

Lower light levels also raise a panic level for the agents. This means disengaged agents will move faster and eventually play hysterical sounds at high panic levels. Very low light levels will cause agents to disengage and panic until the light level increases.

I think if I can implement this system and it is interesting to play with then there will be many more options to explore. A couple of ideas I’ve had while writing this are:

1. Using an accelerometer to control some physical object (like a large bubble) in the simulation. If the bubble is over an agent, it applies a filter to the audio output from that agent.

2. Have some controls that distort the sample sounds in order to make the sounds more abstract. This could be used to allow the performer to slowly reveal the true source of the sounds.


New Project Idea

After thinking hard about my previous project idea, I decided that it was not inspiring enough for me to move forward with. I tried to imagine myself using the tool that I designed and didn’t feel like it would really be such a great thing to play with.

However, I have a new idea that I am going to start working on immediately.

I want to explore how multi-agent systems can be used to create music and to come up with an interesting way to convey what is going on in the underlying system through the physical form of the instrument. I also want to provide interesting ways to interact with the system by changing the agents’ environments.

In order to complete this project I will need to:

1. Generate an environment for the simulation to take place

2. Decide what the properties of the agents are, how they interact with the environment and each other, what their motivations are

3. Determine how the simulation is mapped to audio

4. Determine how input to the Bela affects the agents/environment

5. Determine how input to the Bela affects how the simulation is mapped to audio


Photo

Pictured above is a cardboard prototype of one of my original ideas.

The device is a large FX pedal style live sampler/looper with a built in bank of effects.

The Pads

On the left side, there are 6 sample pads. I plan on implementing these using square FSRs.

The pads respond to input based on the state they are in, so in the final version there needs to be a light for each pad that changes color based on states. I plan on accomplishing this with an RGB light strip.

Tapping a pad starts recording, and tapping it again stops recording. Each pad can be set to play once or loop. Playback can be stopped by briefly holding down on a pad. The pad can be cleared by holding down on it when it is not playing.
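The pad behaviour above is a small state machine, sketched here in C++. A few things are assumptions on my part rather than decided design: the state and gesture names, that tapping a pad which already holds a sample starts playback, and that gestures not mentioned above are simply ignored.

```cpp
enum class PadState { Empty, Recording, Loaded, Playing };
enum class PadGesture { Tap, Hold };

// Returns the state a pad moves to after a gesture.
// Loaded means the pad holds a sample but is not playing it.
PadState nextPadState(PadState state, PadGesture gesture) {
    switch (state) {
    case PadState::Empty:
        // Tap starts recording; holding an empty pad does nothing.
        return gesture == PadGesture::Tap ? PadState::Recording
                                          : PadState::Empty;
    case PadState::Recording:
        // A second tap stops recording.
        return gesture == PadGesture::Tap ? PadState::Loaded
                                          : PadState::Recording;
    case PadState::Loaded:
        // Assumption: tap plays the sample. Hold clears the pad.
        return gesture == PadGesture::Tap ? PadState::Playing
                                          : PadState::Empty;
    case PadState::Playing:
        // A brief hold stops playback; taps are ignored in this sketch.
        return gesture == PadGesture::Hold ? PadState::Loaded
                                           : PadState::Playing;
    }
    return state;
}
```

Each state would also map to a light colour, which is why every pad needs its own light.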

Play and record functions can be quantized using the tap tempo and sync button in the bottom left.

The FX Bank

There are 8 large fx buttons that each represent one instance of an effect. So far I am thinking about having 4 feedback delays, 2 filters, some kind of distortion, and playback speed controls.

To select an effect you hit the corresponding button. The effect parameters can then be tweaked using the two rotary encoders and slider pots on the right. The effect can be applied to the pads by tapping them when the effect is selected. To go back to play mode, you hit the effect button again.

This system allows for multiple effects to be selected simultaneously.

Implementation Constraints

This device has a lot of controls, and with the Bela we are limited to 16 digital pins and 8 analog pins unless a multiplexer is used.

After using the following pins:

8 digital pins for the fx buttons

6 analog pins for the sample pads

2 analog pins for the slider pots

6 digital pins for the rotary encoders

2 digital pins for the light strip

I will be out of I/O. This means there’s no room for tap tempo, sync controls, or the loop button. The most expensive solution is to use a multiplexer to expand input, but there are other ways to solve this problem. The first thing that comes to mind is to ditch the rotary encoders in favor of two buttons for each parameter that increment and decrement the value. This would make fine adjustment of parameters harder but would free up 2 digital pins. It is also probably easier to operate buttons with your feet than it is to use rotary encoders.


Photo

Project #1

With this project I wanted to experiment with using L-systems for making music. I start with the string “1” and then each time the right side button is pushed, a number of rules are applied to the string. The rules are things like 1 -> 15, 5 -> 75, 7 -> 31, and 3 -> 7. If these rules are applied iteratively you get a sequence that grows with each iteration and does not repeat itself. In this case it would be 1, 15, 1575, 15753175, 157531757153175, etc. The numbers represent scale tones, and the instrument plays the sequence with a sine wave at a speed determined by the left knob. The right knob controls the root note of the sequence.
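The rewriting step can be sketched in a few lines of C++. This is not the exact code running on the Bela, just a minimal version of the idea; the function names are made up for illustration, and symbols without a rule are copied through unchanged.

```cpp
#include <map>
#include <string>

// Applies every production rule once, replacing each symbol in parallel.
std::string applyRules(const std::string& input,
                       const std::map<char, std::string>& rules) {
    std::string output;
    for (char symbol : input) {
        auto it = rules.find(symbol);
        if (it != rules.end()) {
            output += it->second;  // symbol has a rule: emit its expansion
        } else {
            output += symbol;      // no rule: keep the symbol as it is
        }
    }
    return output;
}

// Starts from the axiom and applies the rules `iterations` times,
// like pressing the button that many times.
std::string expand(std::string axiom,
                   const std::map<char, std::string>& rules,
                   int iterations) {
    for (int i = 0; i < iterations; ++i) {
        axiom = applyRules(axiom, rules);
    }
    return axiom;
}
```

With the rules 1 -> 15, 5 -> 75, 7 -> 31, and 3 -> 7 stored in the map, expanding “1” four times gives 157531757153175, matching the sequence listed above.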

This applies L-systems to music in a very simple way, but there are many more possibilities to experiment with. One idea is rules that are applied based on some probability or noise function. This would produce less predictable sequences that are still constrained by rules. It might also be interesting to apply L-systems to chord progressions.

There are other ways to translate L-systems to music besides having the string represent a sequence at a constant rhythm. For example, you might have a system where the character ‘A’ translates to ‘layer a new pad sound’ and this sound persists until some other character is encountered that means ‘remove a pad layer’. One L-system could also trigger the production of another L-system with different rules that is heard in a different way.

Another point is that while this project only lets the user control speed and base frequency of the sequence, it’s possible to build an instrument where the users can play with the production rules and play with adding and removing different L-systems that serve different roles.

Practical stuff:

I used C++ to program the Bela. The only problem I had in that area was bad readings from the buttons. I think they are just bad buttons.

I used Blender to model the enclosure and printed it with the Makerbot. I would advise anyone doing this to learn how to measure their non-3D printed components first and then model everything to scale in Blender rather than relying on the scaling in the Makerbot software.


Photo

I’ve thought more about idea #2 and have come up with a way to apply effects to each sample pad. There is a dial which selects the effect, then there are 2 knobs and 2 sliders that allow you to tweak the parameters of the selected effect. There is also an apply button. When this button is pressed all the pads that have the effect applied will light up. The next pad that is touched will have the effect applied if it is not already applied and removed if it is applied. I think this is a decent way to pack a lot of options into a fairly small amount of physical space.


Photo

Idea #5

This device consists of a number of water sources (8 in this sketch) and different sized cans. Each can should be tuned so that the sound of the water striking it corresponds to a scale note. Each water source is controlled by something like a solenoid valve. There is a sequencer interface that allows the user to specify the sequence of pitches they want. The user can also play, pause, and change the speed of the sequence.


Photo

Idea #4

This idea involves using contact mics to process audio from hand drums to create a background drone. The processed sound is meant to complement, not replace, the sound of the acoustic instrument. The player could have more control over the drone sound through pressure sensors on the floor that are operated with the player’s feet.


Photo

Idea #3

This idea is fairly straightforward. The device would be able to output 4 control voltages that can be used to control other devices. Each output has a selector for waveform, a frequency knob, and an amplitude knob. Although this is not pictured, I also think it may be interesting to process an audio input in different ways to output a control voltage based on the audio signal.


Photo

Idea #1

The idea is noise-cancelling headphones that have a microphone for each ear. The sounds that you would normally be hearing with your ears would be processed in realtime with effects that you control. Gesture-based controls could also be used. For example, maybe the openness of your hand could control the Q on a bandpass filter that is being applied to everything that you hear. Additionally, the device should provide some way to sample and play back the sounds that you’re hearing as well as load in outside sounds. The goal is to be able to play the sounds from your environment as an instrument.
