Don't wanna be here? Send us removal request.

Text

LIS 4317: Final Project

Context:

Since starting college, I've enjoyed watching live stream content on Twitch. The spontaneity, sense of community, culture, and overall interactivity that the medium affords have all contributed greatly to its increasing popularity and appeal.

As someone who has been on the viewer and spectator end of things- I've been curious about putting myself out there for a change and streaming for myself. Although I intend to do it for fun, I believe the data and visual analytics skills I've garnered throughout my coursework may afford me with some perspective into factors that could contribute to my success.

According to Ludwig, a streamer who's previously been the most subscribed to on Twitch as well as a recipient of a "Streamer of the Year" award- a poll of roughly 30 of his fellow streamers indicates that average viewer count is agreed to be the most important and prioritized statistic in a streamer's success (2024).

As such- I'd like to visually assess my personal streaming metrics from Twitch to see if there's any interesting or relevant correlations or patterns between the variety of metric variables provided by Twitch at the end of a stream (Stream Summary Metrics) and average viewer count. My research question (RQ) and the project's objectives are listed below:

Research Question and Objectives:

Research Question: How does each factor of the dataset correlate with average viewer count?

Objectives:

What are the correlation values for each factor to average viewer count?

What does each factor's relationship to the average viewer count look like?

How much change in average viewer count is accounted for by each factor?

Data:

In order to assess correlations between a variety of streaming metrics factors and average viewer count in a more convenient manner- I decided to first condense the multiple Stream Summary files provided by Twitch into a singular CSV file. First, I nested all my stream summaries within a "Stream Summaries" folder. I then inspected the structure of a singular file to see the type of variables I was working with- as reflected in figure 1.

Figure 1: Original structure and sample data from original Stream Summary CSV.

From this- I decided the next step would be to create functions to update and order stream summaries by date in an alternative directory "Ordered Stream Summaries". In order to assess what dates the data was from and to be able to evaluate the correlation between the amount of time broadcasted and average viewer count- I'd also be appending two additional columns (Date and Time.Live) to each CSV- as depicted in figure 2.

Figure 2: Revised structure and sample data from a formatted and Ordered Stream Summary CSV.

Finally, I created another function to compile all CSV files into a singular file. The resulting dataframe structure resembles that in figure 2- but with sum of all observations across all files.

Figure 3: Final compiled CSV file of all Stream Summaries- with observations ordered by date. Looking at the data alone- I am eager to see what type of correlations variables like time live/broadcasting and chat messages sent may have with average viewership. Next- it was time to begin visual analysis. Analysis:

Using R, RStudio, and the following libraries:

readxl

ggplot2

reshape2

shiny

I proceeded to further calculate correlations as well as visualize them using ggplot2 and the interactivity afforded by local Shiny app development.

Objective 1: What are the correlation values for each factor to average viewer count?

To begin, I intended to make use of a Pearson correlation heatmap to conduct some initial exploratory analysis on what factors in the Stream Summary metrics may have a linear relationship with the average viewership. While most factors may be discrete, the correlation heatmap should still provide a quick and convenient way to screen potential relationships.

Figure 4: Correlation heatmap of Stream Summary metrics factors

In order to reduce clutter- I avoided adding elements such as axis labels that felt otherwise redundant. I also chose purple and green to add some contrast and netter differentiate between a negative and positive correlation.

Analyzing the second column (or second row from the bottom), I am able to take note of a few observations regarding the factors' linear correlation to average viewership:

Time live is the most pigmented- indicating the greatest correlation (positive or negative).

There seem to be more factors with positive correlations than negative.

Looking at the calculated Pearson values themselves corroborates my observations:

Thanks to this analysis- I was encouraged to be particularly mindful of the relationship between time live and the average viewership.

Using the calculation and the heatmap collectively- I was able to deduce the following most relevant (|r| > 0.1) correlations.

Positive:

Time.Live

Chatters

Chat.Messages

Negative:

Live.views

Objective 2: What does each factor's relationship to the average viewer count look like?

In order to evaluate all factors in correlation to average viewers, I decided to write up an interactive Shiny App that would enable me to toggle between different independent variables as desired, as well as to change the factor determining the datapoints color. I also took the take to create a key describing the variables and their significance within the sidebar.

Figure 5: Local Shiny App Preview for Stream Summary Metrics analysis.

In order to keep the information and visuals organized, I leveraged alignment by making use of a sidebar for the selection of factors and the main panel for the plotting. I also continued the previous color palette as an homage to the Twitch palette and to keep the theme consistent. All this is demonstrated in figure 5.

Figure 6: Scatterplots of the four highest correlating factors to average viewership. Click on images to view them in closer detail.

Looking at these graphs- one can see how discrete values can be harder to for linear correlation against in the scatterplot form. While the line of best fits helps illustrate the previously determined correlations- the data points of the discrete factors themselves don't paint as vivid a picture of this relationship as the plot of the continuous factor (Time.Live) does.

Proceeding with the statistical analysis, the grey band around the line of best fit reflects the uncertainty or margin of error associated with the estimated line. One can also see here that the Time Live seems to have not only the most positive correlation, but also the least uncertainty. On the other hand- while Live Views seems to have the most negative correlation, its wide band indicates a significant margin of error.

Visually, in descending order, the scatterplots and lines indicate that the correlation to average viewers does indeed shift from Live.Views (most positive) to Chatters and Chat. Messages (weaker yet positive correlations) to Live.Views (negative correlation).

Objective 3: How much change in average viewer count is accounted for by each factor?

Finally, to gauge how much change or variability each factor accounts for to the average viewership- I decided to calculate and plot the R-squared value associated with a linear regression model between each factor and the dependent value. The result of this was the following:

Figure 7: Barplot of R-Squared values by factor

This visual, as depicted in figure 7 helped me understand how much each variable can affect or potentially even help predict average viewership. From this- I can see that despite factors like Chatters, Chat.Messages, and Live.Views having some correlation to the average viewership as depicted in the previous visuals, the amount of time live (Time.Live) may disproportionately account for the most change to viewership.

Conclusion: Thanks to this analysis, I was able to extract insights on the correlation between the varying factors, the relationships between them and average viewership, and the significance and merit of each factor as a predictor for average viewership. These visualizations helped me not only explore what factors may have the biggest weight and correlation to average viewership, but also encouraged me to question my choices and methods. While this project enabled me to experiment and put my understanding of visual analytics to the test- it has also provided me an opportunity to reflect and think about how I could chosen methods of analysis and visualization that would better suit the data types (continuous/discrete factors for example). Link to the R code and data for the entire project can be found here:

LIS 4317: Final Project · JocieRios References:

Ludwig. (2024, January 30). I asked 100 streamers how to be successful [Video]. YouTube. https://youtu.be/QtVkI21963k?si=VNl61yBAHUNAhaTQ

0 notes

Text

LIS 4317: Module #13 HW

This week- we were tasked with creating a simple animation using Xie's R "animation" package.

I decided to create an animation in the GIF format of stream summaries. This assignment encouraged me to do a lot of research, to understand the syntax of the package. I also had to take a lot of time and tinkering to figure out how to get the graphic to not be all over the place, and to read the data in an iterative manner! For example, it was first a challenge to read in all the files from each Stream Session and order them according to date. If you choose to want to test my code- consider taking the csv files I've shared and uploading them to a folder (I named mine "Stream Summaries- as is reflected in my code) within the same directory within which you run the code. Another challenge was that without explicitly stating the limits and breaks, the canvas of the scatterplots was changing often, making the animation jarring. It would be fun to continue working on this and exploring what alternative types of visuals may be more suitable as an animation, or what other elements (such as lines of best fit, adjustment of point sizes) would help make a more engaging or meaningful graphic.

Here's the "final" product (for now anyway):

Here's a link to the github commit for this module- containing the code and the data I used: Mod# 13 · JocieRios/visual-analytics2@1480d5f (github.com)

0 notes

Text

LIS 4317: Module #12 HW

This week we were introduced to social network analysis! Earlier on in this semester we were tasked with finding an interesting graphic related to visual analysis. Having been in awe of Prof. Hagen’s graphics in her own research involving social networks, I had actually shared one of her graphics!

As eager as I am to explore this- I found myself easily overwhelmed by the options and variety. In order to delve into this, I decided to just follow along with an example provided in ggnet2’s documentation.

First- I created the very first graph which was an undirected Bernoulli graph consisting of 10 nodes.

Then I decided to replicate the fourth example provided in ggnet2’s documentation! It was absolutely beautiful- and showed the potential customization for the nodes, edges, and even the addition of labels. However- it took significantly longer to process than the former (as expected!).

I appreciated the code as an opportunity to see just how edges and nodes can be set up for analysis. In this case- each node reflects a French Members of Parliament, their party respective to the color of the node, and the edges their relationships (as denoted by twitter followage).

Code for this assignment can be found here: https://github.com/JocieRios/visual-analytics2/blob/main/Rios_HW12.R

0 notes

Text

LIS 4317: Module #11 HW

I made a marginal histogram inspired by this week's reading. I focused on the relationship between time streamed and active chatters.

The code for this assignment can be found here: visual-analytics2/Rios_HW11.R at main · JocieRios/visual-analytics2 (github.com)

0 notes

Text

LIS 4317: Module #10 HW

For this week's assignment- I decided to continue to analyze stream statistics provided to me at the conclusion of a stream. As such- I used data on viewership and chat engagement over time to create a time series analysis.

The provided reference for R's ggplot2 package- "The Complete ggplot2 Tutorial" by Selva ( The Complete ggplot2 Tutorial - Part1 | Introduction To ggplot2 (Full R code) (r-statistics.co) ) inspired me to customize the graph's theme further. I changed the background and gridlines to help make the points easier to follow, as well as the color gradient to fit the colors used by the platform I use (Twitch).

Additionally, I mapped the size of the points to the number of chatters active, and the color to the amount of chat messages sent at a given minute. ggplot2 has proven to be a wonderful package for customization of graphs!

0 notes

Text

LIS 4370: Module #11 HW

Problem:

This assignment consisted of debugging the following code that creates a function tukey_multiple:

Caption: Code results in an error stating that there is an unexpected symbol in that specific line of code provided.

According to the error shown above- the bug appears to be that the return value appears immediately after an assignment.

Solution:

All I did was shift the return value, return(outlier.vec), to the next line. After this, the console no longer reported an error!

This assignment was pretty straightforward. Code for this assignment can also be accessed here:

intro-to-r2/Rios_HW11.R at main · JocieRios/intro-to-r2 (github.com)

0 notes

Text

LIS 4370: Module #10 HW

For my final project- I aim to create a package that will help evaluate the variety of metrics that the live streaming platform- Twitch affords its "streamers" with. I hope to be able to streamline helpful functions and visualizations.

An example of such metrics and data used are portrayed in this following csv:

Though it will be read into the package using read.csv- which converts the contents into a data frame.

-------

Additionally, I've written up a sample DESCRIPTION file for such a package and uploaded it to GitHub. Link to this here: It's been interesting to learn about the importance behind each line of such a file, and the conventions one needs to follow. For example, I hadn't previously considered the importance of licensing with packages. However- as an individual who greatly appreciates open-source resources, I'd love to provide a great basis with which to inspire others who are interested in improving such a package. Using the CC0 license is then appropriate for my case.

intro-to-r2/DESCRIPTION.txt at main · JocieRios/intro-to-r2 (github.com)

0 notes

Text

LIS 4317: Module #9 HW

This spring break I aspired to challenge myself by trying a few new things. One of these included streaming on Twitch, and as fun and nerve-wracking as it was, the platform does a wonderful job of providing statistics by which to gauge how well your stream may have performed over the course of time you were live. Of the 8 metrics included in the originally exported csv, I decided to focus on the average viewership, time live, and chat message count.

As average viewership over time was the focal point of the graphic, I decided to map the count of chat messages to the color of the scatter plot's points. I originally intended to use size, but provided there's a lot of data points (1 for each minute streamed)- I opted to use color to maintain balance, repetition, and a better overall alignment of all the points.

0 notes

Text

LIS 4370: Module #8 HW

For this week's module- we explored input/output, string manipulation, and the plyr package.

In doing the homework, I found that I needed to take additional time to review both string manipulation and the plyr package. As always, I found that there were different approaches to fulfill some tasks, such as sub setting using grep():

Or grepl():

I also experimented with saving a csv in different ways. For example, saving a csv using write.table() function with the manually assigned comma separator:

Or using write.csv():

They all resulted in identical results- so its just a reminder to experiment or not worry about taking one's on approach to these assignments.

GitHub Link: intro-to-r2/Rios_HW8.R at main · JocieRios/intro-to-r2 (github.com)

0 notes

Text

LIS4317: Module #8 HW

For this week's assignment- I practiced correlation analysis using ggplot2 and the native mtcars dataset.

I decided to plot the relationship between horsepower and miles per gallon. The scatter plot shows a negative correlation between the two variables, and the line of best fit created using linear regression only emphasizes this. Had I used lm() and summary() to create a model and investigate into the coefficients, I would've been curious to determine the actual correlation and if it was statistically significant.

Focusing on the lesson- I feel that Few's advice to make use of grid lines is important to scatterplots. They allow me to gauge what the values of the points are in a way that isn't overwhelming, helping maintain a minimal yet legible aesthetic.

The cheatsheet was also incredibly helpful and overwhelming at the same time. I'd need to take additional time to explore what ggplot2 graphs and its arguments can do!

GitHub Link here: visual-analytics2/M8_CorrelationAnalysis at main · JocieRios/visual-analytics2 (github.com)

0 notes

Text

LIS4370: Module # 7 HW

This week- we learned about the distinction between S3 and S4 in R.

Work for the first few questions here and also linked below:

Answers to discussion questions:

You can tell what OO system an object is associated with using the isS4() function.

You can determine the base type of an object using the class() function.

A generic function is a function that is determines the class or type of its arguments- and executes a correlating method. As a result- it consists of a collection of methods.

The main differences between S3 and S4 are that S3 is older, as well as more simple and therefore more dynamic. This includes allowing for the class of existing objects to be changed pretty easily- which is generally unsafe. Accordingly, S4 is newer and more formal and rigorous by association. It also revises upon the ability to change classes as its predecessor- making it more difficult to change the class of existing objects.

0 notes

Text

LIS 4317: Module #7 Assignment

For this assignment- I created visuals in R reflecting distribution analysis. 1. For the first plot, I created a scatterplot with an added grid as I thought it would be helpful to keep track of the points and their alignment to the y-axis in particular.

2. For the second plot, I created a barplot where I omitted the grid as I felt it unnecessary as it wasn’t much too difficult to read without it. (I like how the bar plot is chunked and provides some insight into the distribution of cylinders!)

Code and Plots below:

0 notes

Text

LIS 4370: Module #6 Assignment

For this assignment, we worked on math using R, specifically involving matrices.

I don't know if there's an appropriate or ideal way to complete question 3, but I tried my best with what I knew!

Link to the code is available here:

intro-to-r2/Rios_HW6.R at main · JocieRios/intro-to-r2 (github.com)

0 notes

Text

LIS 4317: Module #3 Assignment

I had a lot of fun trying to make a visual to present a worksheet I made on Chicago bike racks! May not be the most amusing information, but it is a very big convenience to be able to know where one can secure their bike when needed. Link to the worksheet here: https://bitly.com/chicagobikeracks I greatly suggest opening the image in a new tab as well!

0 notes

Text

LIS 4370: Module #3 Assignment

In this assignment, I was responsible for creating a data frame using some hypothetical data on polls from 2016's election.

Here's the compiled R report:

Creating a data frame was a pretty straightforward process. I decided to calculate the average of the results to estimate what the results looked like and gather which candidates polled the highest.

0 notes

Text

Module # 2 Google Table, Tableau and Geographic map- Chicago Bike Racks

For this assignment, I decided to use a dataset I found listing the locations and amounts of bike racks throughout the city of Chicago.

The dataset can be found here: Bike Racks - Catalog (data.gov)

Using Tableau, I was able to plot the coordinates of each bike rack on a map and differentiate the number of racks through a spectrum of color. I added a background map so that I could better distinguish the locations throughout the city and changed the opacity to help the points be more visible.

Although it's overwhelming from a distance, it becomes more useful as you zoom in. For example, zooming in to my neighborhood and its surrounding area allowed me to gauge where I can safely lock up my bike. I'd recommend you open these images in a new window/tab to ensure you can see the details properly.

Additionally, selecting and hovering over a point gives you more useful details and information regarding the bike rack's location thanks to Tableau's tooltip feature within the worksheet.

Or open the link here: Chicago Bike Racks | Tableau Public

0 notes

Text

LIS 4370: Module #2 Assignment

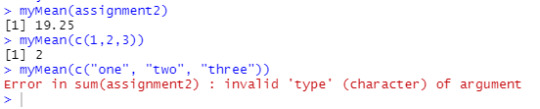

In this assignment, I tested a function called myMean that should calculate the mean of number values. The code for the assignment can be seen as follows:

Input:

assignment2 <- c(16, 18, 14, 22, 27, 17, 19, 17, 17, 22, 20, 22)

myMean <- function(assignment2) {

return(sum(assignment2)/length(assignment2))

}

myMean(assignment2)

myMean(c(1,2,3))

myMean(c(“one”, “two”, “three”))

In testing the function- I found that it worked as intended, as mean is accurately calculated as long as the right vector type is passed through.

If one didn’t know better however, they may believe the function would only work as long as the assignment2 object is passed through it- however- as long as an int vector is passed to the function- its mean will be calculated. I reinforce by testing numbers in string form, to no avail.

The Github link to the raw code is:

intro-to-r2/Rios_HW2.R at main · JocieRios/intro-to-r2 (github.com)

0 notes