
Text

Within the cloud ecosystem, SaaS is the biggest revenue generator, followed by IaaS and PaaS. Software publishers are pushing strongly to sell via SaaS rather than the perpetual licensing model, and the model has worked well for both vendors and users. For vendors, it has expanded their reach across large, mid-sized and small companies. It also gives them a steady revenue stream from a large customer base, which funds product updates that are passed on to those customers.

The benefits to enterprises are also well documented. After all, SaaS has been a runaway success because it was a win-win for both the stakeholders.

But like any piece of technology, SaaS applications come with management challenges. While most enterprise decisions are taken with a lot of due diligence, it is not always possible to foresee some of these challenges. In fact, in certain cases, even when an organization does foresee them, it is left with no choice but to go with a particular piece of software for lack of alternatives.

But to be fair, in spite of its dream run, SaaS is still a relatively new concept and many of the teething issues will hopefully subside over time.

In addition to the challenges mentioned, another issue that many large organizations face is Shadow IT, a phenomenon where employees buy SaaS services without the knowledge of their IT team. This can lead to inefficient processes and makes software assets harder to manage. Solutions like SAM (Software Asset Management) can help keep tabs on such scenarios, helping organizations manage their software footprint regardless of where it resides, i.e. on-premise or in the cloud.

Text

The retail sector has transformed over the last decade. An industry traditionally dominated by the unorganized sector is going through one of its most exciting phases. This tectonic shift can in large part be attributed to e-commerce, where technology is the backbone.

While the unorganized sector might have the larger share, the organized sector has made significant strides by leveraging technology across several business touch points.

When we speak about the organized sector, one generally tends to think only of e-commerce. Still, brick-and-mortar setups have also adopted technology in large measure.

Just as hybrid is the preferred route in the world of technology, a similar pattern is emerging in the retail sector. With the advent of e-commerce platforms, many feared this would mark the end of brick-and-mortar setups. However, recent studies offer interesting inferences. One data point suggests that many people search online but eventually buy from retail outlets. This indicates that retail’s future lies in “click and mortar”, a hybrid approach wherein a business has both offline and online operations.

Analytics has been an essential tool in the transformation of this sector. It would not be unfair to say that the best-known use cases of analytics have been in the retail industry.

Technology uptake is never the result of a push mechanism; only tangible business benefit has yielded business success. For instance, there is a reason why both offline and online retail platforms exist. While offline provides the customer with a complete shopping experience (also supported by the perception of getting authentic products), online platforms, by contrast, are popular because of convenience, range and price.

Both formats need a tight grip on parameters like supply chain, inventory control, and trend predictions, among other things. Analytics has helped immensely in these areas. From historical data referencing to forecasting, analytics has made its usefulness very evident for this sector. And with the industry poised to adopt new and emerging technologies like VR and Web 3.0, the possibilities are infinite.

Text

BI projects, if successfully implemented, can be a game changer, but it is not easy to get them right the first time. Moreover, when it comes to SMBs, every decision matters, as they like to avoid any wastage.

BI projects are perceived to be the preserve of large organizations, as they have the wherewithal and the budgets to undertake them. However, a BI project need not be expensive, and even if it is, a properly executed project tends to come out on top as far as ROI goes.

Almost all organizations these days start capturing data in some form from the day of their inception, as they know a critical piece to their success is synthesizing this information into a valuable strategic asset.

A well-articulated BI strategy can alter the growth trajectory of a start-up by providing evidence-based decision-making, reducing expenses and managing the supply chain, among other things. Since things are nascent, getting them right is easier than in an established organization.

While it is great to capture data and eventually churn out meaningful insights, doing so typically requires a dedicated workforce and infrastructure. This is where it gets a little complicated for SMBs, as they may not be in a position to provide either. A natural argument would be to outsource this entire function, but it is not as easy as it is made to sound.

And with data, there is always a concern around security. Therefore, it is essential to strike a balance between data agility and security & governance.

Some ways to manage issues around BI projects are:

Deploying a Data Warehouse to manage issues around data integration

Involving all stakeholders and educating them on data quality, as that holds the key to data management

Putting systems in place to capture the right data at the right time and in the right way

Managing storage can be a daunting task, especially for SMBs. Ideally, companies should look at cloud-based storage at the very outset (keeping in mind the regulatory compliance requirements of their industry).

Text

5G is seen as the enabler of the next phase of transformation for many sectors. Healthcare is no exception. While the concept of mobile health (m-health) has been there for a while, what 5G does is bring many potential use cases in this sector to fruition.

When one thinks of 5G, three things come to mind: reliability of connection, security and ultra-low latency. These are precisely why 5G can bring about a revolution in this sector that was not possible earlier.

As India gets ready for its first rollouts, it would be interesting to watch the use cases championed for this sector. The initial foray would be in Tier 1 towns, with connected ambulances emerging in initial trials.

However, the real value of 5G will be in its ability to bridge the enormous gap that currently exists between urban metros and the rural sector. Quality healthcare is a big problem in the hinterlands. India has fewer than two hospital beds per 1,000 people.

But building the infrastructure for such services comes at a cost, and it will be interesting to see how this is managed. The government will obviously have a significant role to play; the only concern is whether the operators will be willing to invest in the required infrastructure.

Every country will have its unique set of priorities and challenges, and ours is no different. While there are some great healthcare providers in the country, access to those services is confined to a few and comes at a steep price.

The possibilities of 5G are limitless in healthcare, but it needs to be backed with intent.

Text

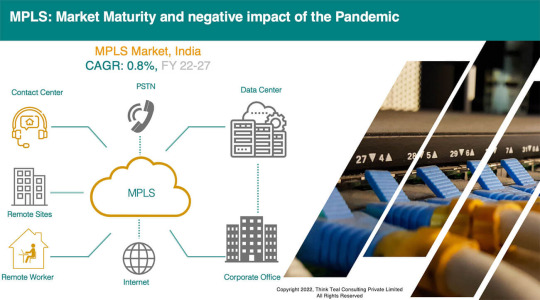

MPLS (Multiprotocol Label Switching) has been the network of choice for over two decades now for most enterprises. Reduced network congestion, increased uptime, and security are some of the reasons why MPLS became a quick favourite.

But the MPLS market has stagnated in terms of revenue growth and is expected to see a negligible CAGR over the next five years. The last two years have been particularly bad, as companies reduced their existing MPLS links and there were hardly any new deployments. In fact, due to the lockdown, many branches were also shut down. So, while work from home or hybrid work might have helped some technology markets, the same cannot be said for the MPLS business.

As a result, the overall enterprise data services business will see relatively tepid growth, as MPLS has had the largest share of this market (close to one-third of the overall enterprise data services market). Going by this trend, it would not be surprising if MPLS were upstaged by ILL (Internet Leased Line) connectivity.

Also, the MPLS market has been battling price erosion for some time now, affecting the profitability of the business line and eventually impacting growth rates. As MPLS is already the preferred connectivity option for most large enterprises (SMBs tend to prefer either leased line connectivity or broadband), the scope for new deployments is also limited.

For a telecom operator, while MPLS is too big a market to neglect and will remain a mainstay for the next couple of years, an increased focus on ILL and point-to-point connectivity (DLC or ethernet-based connectivity) can be expected.

Text

Challenges with RPA Implementation

Employee Resistance - 56

Lack of required skill set - 31

Managing privacy and security - 45

Challenge in automating end-to-end use cases - 23

Business and IT are not aligned - 52

Selecting the wrong business case - 41

Whenever any tech product or solution is launched, there are bound to be some challenges in market acceptance and implementation. These are teething issues which happen across all sectors. The quicker they get resolved, the better the prospects for growth.

RPA also has its fair share of challenges. The most significant is that many perceive it as a replacement for the human workforce, which results in employee resistance.

From the era of the industrial revolution to the modern world of the metaverse, there has always been the apprehension of how machines will replace human beings and rob them of their livelihood.

So rule number one for successful RPA deployment is to understand the people that work in an enterprise. It is vital to give them the reassurance that the time saved from doing repetitive tasks can actually be used for creative and strategic work.

Also, IT and the functional departments must drive such initiatives equally. And for that, it is important to choose a suitable business case for early success which paves the way for enterprise adoption. Fostering a culture of learning new skills to take advantage of new technology should not only be the purview of IT, but all the stakeholders involved.

Security and privacy are horizontals which are always critical to get right, no matter which technology deployment is suggested. Since RPA usually deals with process automation tied to business-critical data, all the necessary compliance measures, such as PCI compliance and GDPR, should be in place as per your industry requirements.

Clear communication of the processes involved, i.e. educating the end beneficiary, evaluating the solutions in the market, and facilitating the final deployment, helps smooth out the challenges that typically arise in such transformative processes.

Text

If data is the new oil, then content is the finished product from the refinery. In most knowledge-based industries, how content is managed can make or break the business. However, ECM solutions don’t come cheap, so due diligence is required before any purchase decision is taken.

One common theme which cuts across any tech conversation is digital transformation. While DT can mean different things to different organizations, the objective is to streamline, automate and increase business productivity, among other things.

One of the earliest forms of digital transformation that many organizations carried out was to either go paperless or enhance process visibility by digitizing many paper-driven processes. And ECM, to a large extent, provides these solutions. The goal of an ECM solution is to manage the entire life cycle of an enterprise’s content, which could include images, structured or unstructured data, to reduce the risk of data loss and thereby improve productivity.

But the cost of a typical ECM solution could be a deal breaker. Typically, in the perpetual software licensing model, there are many cost elements: license fees, implementation fees, hosting, maintenance and training, to name the broad ones.

Compare that with the SaaS-based model, where the cost elements you need to tackle are licensing fees (opex-based), implementation and training. The fundamental reason is that in legacy applications, you must manage both the front-end and the back-end infrastructure requirements (hence the term monolithic). This may seem like an oversimplified comparison in favour of cloud, and ideally, any enterprise-level decision should be based on a proper TCO analysis.
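To make the comparison concrete, here is a minimal sketch of a TCO calculation built from the cost elements named above. Every figure is a made-up placeholder (arbitrary currency units), and the split between one-time and recurring items is an assumption for illustration only; a real analysis would use actual vendor quotes.

```python
from dataclasses import dataclass, field


@dataclass
class CostModel:
    """Hypothetical cost model: one-time costs plus costs that recur yearly."""
    name: str
    one_time: dict = field(default_factory=dict)
    recurring_per_year: dict = field(default_factory=dict)

    def tco(self, years: int) -> float:
        # Total cost of ownership = all one-time costs + yearly costs * horizon
        return sum(self.one_time.values()) + years * sum(self.recurring_per_year.values())


# Placeholder figures, NOT real prices.
perpetual = CostModel(
    name="perpetual",
    one_time={"license": 100.0, "implementation": 40.0, "training": 10.0},
    recurring_per_year={"hosting": 15.0, "maintenance": 20.0},
)
saas = CostModel(
    name="saas",
    one_time={"implementation": 20.0, "training": 10.0},
    recurring_per_year={"subscription": 45.0},
)

for horizon in (5, 10, 15):
    print(horizon, perpetual.tco(horizon), saas.tco(horizon))
```

With these placeholder numbers SaaS is cheaper over 5 and 10 years but more expensive over 15, which is why the time horizon chosen for a TCO analysis matters as much as the individual line items.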


Text

The enterprise connectivity market has witnessed changing patterns when it comes to different kinds of connectivity services.

There was a time when this market was heavily dependent on MPLS services. While MPLS remains the biggest contributor in terms of revenue, its rate of growth has reduced considerably, primarily because of market saturation and price cuts. On the other hand, point-to-point connectivity services like DLC or ethernet services have suddenly picked up pace on account of OTTs. This market has seen the most considerable positive uptake in the last few years.

The Internet Leased Line (ILL) market has been steadily growing over the past few years. Going by the trends we have seen, in perhaps a year or two the ILL market will be the most significant revenue contributor in the enterprise connectivity services business, toppling MPLS services.

Cloud adoption and application proliferation have helped this steady growth path for ILL services, as the requirement for steady internet services is constantly increasing. The need for dedicated internet is highest amongst OTTs, banks, fintech, e-commerce and IT/ITeS companies.

In fact, in some cases, it has been observed that small to mid-market organizations prefer ILL services for branch connectivity to drive their digital transformation initiatives. This was earlier done primarily over MPLS.

The growth story is anticipated to continue across large enterprises and SMBs; however, how they use these services might differ. Large enterprises would look at ILL for branch connectivity as well as a backup link. On top of this, OTTs have suddenly spiked the growth of this sector, as their transaction volumes are enormous.

In the SMB market, many consider ILL their primary connectivity. Given the base of this segment, that augurs well for the market. With efforts around cloud and digital transformation on the rise, this market is expected to grow over the next 3-5 years.

Text

Cloud adoption in India has come a long way. From trepidation around security to initial adoption confined to non-critical applications, we have covered a lot of ground in the past decade.

When we speak about cloud, one must bear in mind what kind of application we plan to host, as there are typically three kinds: those which help run the business, those which manage certain aspects of the business, and those which support various non-critical functions.

Regarding the first kind, security, latency, and predictability become essential. So, while choosing the right service provider is necessary, the network fabric that delivers these infrastructure services is equally important.

The most common way of connecting to any cloud service is through an internet connection running on a secure network. But when it comes to mission-critical applications, the connection's security, performance and reliability also need to be looked into. This is where cloud interconnect comes in: a physical connection between an enterprise's core network and a cloud interconnect location (typically a colocation facility). This is the surest way to receive secure and reliable connectivity for your most critical workloads.

Since most enterprises these days have a Hybrid IT model, exposure to cyber risks is high. Point-to-point connectivity is a more secure approach.

Also, in many industries, latency matters. Thanks to a direct pathway, the latency is reduced in a cloud interconnect.

The present and future of business are based on a hybrid IT environment, but as we have seen in various deployments, it is fraught with complexities. Direct connectivity is an option enterprises can explore as the systems mature and the cloud becomes more integrated with our technology stack.

Text

In a world where microservices and containers occupy high mind share and are seen as the future of how applications should be built, legacy applications have acquired a negative perception. But the reality is that these legacy applications support most business-critical functions. Over the years, deep investments have gone into building, supporting and growing these applications. In many scenarios, they are hard-wired into the very functioning of an organization (take, for example, core banking solutions).

While the merits of microservices architecture need no retelling, it is also essential to understand that not all legacy applications can be shunted aside. Application modernization is a middle path which tries to bridge the gap that legacy has created by adopting more modern tools and infrastructure.

Typically, there are three ways of going about this process. In the first one, called “the lift and shift process”, as the name suggests, not many changes are done to the code, and the application is lifted “as is” and put into new infrastructure. In the second one, called “refactoring”, the process involves significant redevelopment of the code, to the extent of completely rewriting the code. Finally, you have a process called “replatforming”, which is somewhat of a middle path between the first two methods. While you don’t rewrite the entire code, you ensure that the application can take advantage of a modern cloud platform.

But the most crucial aspect remains identifying which application needs to be modernized. Usually, an application modernization process involves significant cost and effort. Often, an application is so strongly intertwined with the system infrastructure that decoupling it does not justify the ROI. Hence, choosing the right candidate matters!

Text

We have had several cloud outages in the last year. And in most of the cases, the issue was found to be around the network. Enterprise technology has taken giant strides, but managing the corresponding growth in complexities has not been easy.

Take, for instance, the data center. A massive transformation has taken place over the past decade. From having data centers across multiple locations to consolidation to virtualizing infrastructure to adopting the cloud, we have come a long way. Somewhere in this process, virtualization has played a pivotal role, so much so that one could call it the chassis on which this entire movement took place.

While server virtualization has been the front runner in adoption, network virtualization has also found a favourable response among enterprises. If you follow the pattern, the fundamental switch has been towards using software to manage your physical infrastructure, be it your servers, storage or network, the foundation of any data center fabric. As far as SDN goes, the reasons for adoption range from cost optimization to fast failover and network automation; essentially, most enterprises are trying to tackle the complexity of managing networks that carry data-intensive and bandwidth-hungry applications.

As a solution, SDN finds its application within the data center (over LAN) and has also found success in connecting geographically distributed environments through SD-WAN.

With everything being software-driven, SDN will witness higher adoption in time. And with multi-cloud emerging as an industry standard in the coming years, SDN will be a natural choice.

Text

As employees have evolved and learned to deal with new ways of work, enterprises are being compelled to implement new IT strategies to suit the work culture requirements of their employees. However, matching employee expectations sometimes becomes a challenging task for organizations as there is a constant risk of possible security breaches or possibilities of compromise on the company's sensitive information.

Striking the right balance between what employees want and the level of flexibility that organizations can provide without compromising on the company's data/resources becomes a key focus for most of the IT leaders of the organization.

When the pandemic hit, organizations did what they could to keep business running. IT teams supported their employees in every possible way, and IT decision-makers had to make swift decisions to accommodate remote working. However, now that work-from-anywhere is catching on and hybrid work is becoming a reality, businesses need to plan for the long term to create a sustainable modern workplace.

Organizations are revisiting their IT policies and implementing new strategies to create and support a sustained workplace transformation.

Businesses on the journey of digitization should ensure that workplace modernization becomes a core component of this ongoing quest to achieve business excellence. Having a clear workplace modernization roadmap helps organizations minimize the business disruption risks in the future. This can be achieved by assessing the current IT infrastructure, employees' changing needs, and how businesses can cater to these needs without compromising on business interests.

Text

As cloud adoption increases in India, there has been a corresponding increase in the complexity of the projects undertaken and the maturity of the services requested. More often than not, these requirements stem from cloud natives or enterprises with large IT teams who are equipped to manage such turn-key projects.

We have come a long way from teething issues during first deployments to managing multiple cloud environments. This growth trajectory has been matched by cloud service providers. Beyond the standard compute, storage and network offerings, the library of service offerings is astounding. The discerning customer who has been keeping a close track will also agree that all of the big players (AWS, Microsoft Azure, Google etc.) have their sweet spots. While there are multiple reasons organizations prefer a multi-cloud environment, the ones that come to mind are access to an array of feature-rich services and avoiding potential vendor lock-in.

Agreed, multi-cloud promises a multi-vendor federation but is not easy to manage, or so it seems. Cloud, in its infancy, promised that the future of IT would be agile and swift and that vision should not be compromised as the market matures and evolves.

One has to get multi-cloud management right to justify all the investments in the cloud and realize the promise that it has to offer. What is needed is a central console that gives you control over your disparate cloud environments.

An ideal framework for a multi-cloud management platform should be able to:

Help in service provisioning

Oversee service monitoring

Check on service performance

A successful multi-cloud framework should have a catalogue of services which are easy to provision by clearly articulating your cloud architecture.
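The three capabilities listed above (provisioning, monitoring and performance checks) can be sketched as a single console sitting on top of per-provider adapters. This is only an illustrative sketch: `CloudAdapter`, `InMemoryAdapter` and `MultiCloudConsole` are hypothetical names, the adapters are in-memory stand-ins rather than real provider SDK calls, and the latency figures are invented.

```python
from abc import ABC, abstractmethod


class CloudAdapter(ABC):
    """Hypothetical per-provider adapter; not a real SDK interface."""
    name: str

    @abstractmethod
    def provision(self, service: str) -> str: ...

    @abstractmethod
    def status(self, resource_id: str) -> str: ...

    @abstractmethod
    def latency_ms(self, resource_id: str) -> float: ...


class InMemoryAdapter(CloudAdapter):
    """Stand-in provider that keeps its state in a dict (illustration only)."""

    def __init__(self, name: str, latency_ms: float):
        self.name = name
        self._latency = latency_ms
        self._resources = {}

    def provision(self, service: str) -> str:
        resource_id = f"{self.name}-{service}-{len(self._resources) + 1}"
        self._resources[resource_id] = "running"
        return resource_id

    def status(self, resource_id: str) -> str:
        return self._resources.get(resource_id, "unknown")

    def latency_ms(self, resource_id: str) -> float:
        return self._latency


class MultiCloudConsole:
    """Central console: one entry point for provisioning, monitoring, performance."""

    def __init__(self, adapters):
        self._adapters = {a.name: a for a in adapters}
        self._owner = {}  # resource_id -> provider name

    def provision(self, provider: str, service: str) -> str:
        resource_id = self._adapters[provider].provision(service)
        self._owner[resource_id] = provider
        return resource_id

    def monitor(self) -> dict:
        return {rid: self._adapters[p].status(rid) for rid, p in self._owner.items()}

    def slow_resources(self, threshold_ms: float) -> list:
        return [rid for rid, p in self._owner.items()
                if self._adapters[p].latency_ms(rid) > threshold_ms]


console = MultiCloudConsole([InMemoryAdapter("cloud-a", 20.0),
                             InMemoryAdapter("cloud-b", 120.0)])
vm_id = console.provision("cloud-a", "compute")
bucket_id = console.provision("cloud-b", "storage")
print(console.monitor())
print(console.slow_resources(threshold_ms=100.0))
```

The design choice here is that the console never talks to a provider directly; adding another cloud means writing one more adapter, while the provisioning, monitoring and performance views stay the same.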


Text

The telecom sector is going through an exciting phase. With the upcoming 5G auction (26th July), a lot is at stake for the industry. So far, the fortunes of the sector have been decided by the consumer business, as it generates more than 80% of overall revenues (maybe even more). But that trend is changing, with the B2B or enterprise business picking up steam.

Think about it: when we speak or hear about 5G, how many times do we discuss consumer use cases? Compare that with the B2B sector. The opportunity clearly lies in this segment. That might be the big difference going forward, with established players having a much stronger footing amongst enterprises than newer entrants.

From a service breakdown perspective, mobile services are the most significant revenue contributor (upwards of 60%), but the growth levers in this segment are SMS services and M2M connectivity.

The future of enterprise mobile services will pivot around IoT. Most of the 5G use cases that we come across are around IoT. The growth of SMS also stems from a significant push in M2M connectivity or A2P (application to person) messaging.

The timing is right for the telecom sector as we step into the future. Advancements in AI, 5G and IoT, combined with telecom companies' brands and high customer trust, can help them emerge as Large System Integrators (LSI). They are equipped to provide consulting, advisory, and systems integration (SI) services. Telcos will have a significant coordination role in the value chain. Pivoting the legacy business will require capitalizing on dedicated core networks, developing the right set of ecosystem partners, and fostering IoT agility to build customer trust.

Text

The V-SAT connectivity market is one of the smallest (about 4-5%) among enterprise connectivity services. The V-SAT business took a beating in the past two years; in fact, the market reduced in size.

BFSI, Government and Media contribute more than three-fourths of V-SAT requirements in India, with BFSI leading the pack at 40%. In BFSI, the primary requirement comes from ATM installations, whereas in the Media & Entertainment sector, satellite cinema distribution is a key use case.

As far as the BFSI sector goes, a small percentage of the loss could be attributed to 4G replacing V-SAT broadband at various ATM sites.

Similarly, the shutdown of movie theatres also contributed to the contraction of this market.

Hopefully, with the worst behind us, the V-SAT market is expected to return to its growth trajectory, though growth will be tepid.

Over the last year, however, there has been a lot of movement on the provider side. Apart from existing players like Airtel, Reliance Jio and Tata Nelco, some global players like Amazon (Project Kuiper) and the Elon Musk-backed SpaceX see potential in this market.

Apart from the three industries which drive V-SAT uptake in India (BFSI, Government, Media & Entertainment), a prominent use case for satellite broadband would be to provide connectivity to remote locations. But a lot rides on how these operators price these services. Currently, there is a vast differential in pricing between connectivity through V-SAT and through 4G or fixed-line broadband. While V-SAT-based connectivity is priced at more than 1000/- per GB, 4G and fixed-line broadband in India are among the cheapest in the world (ranging from 2/- to 15/-).
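The size of that differential is easy to work out from the figures quoted above. The short sketch below assumes the 2/- to 15/- range is also a per-GB price (the text does not say so explicitly) and treats 1000/- as the V-SAT floor, so the multiples are lower bounds.

```python
# Figures quoted in the text; the per-GB unit for 4G/fixed-line is an assumption.
VSAT_PRICE_PER_GB = 1000.0          # "more than 1000/-", so this is a floor
TERRESTRIAL_RANGE_PER_GB = (2.0, 15.0)


def price_multiple(vsat_price: float, other_price: float) -> float:
    """How many times more expensive V-SAT is than the other option."""
    return vsat_price / other_price


cheapest, costliest = TERRESTRIAL_RANGE_PER_GB
smallest_gap = price_multiple(VSAT_PRICE_PER_GB, costliest)
largest_gap = price_multiple(VSAT_PRICE_PER_GB, cheapest)

print(f"V-SAT costs at least {smallest_gap:.0f}x to {largest_gap:.0f}x more per GB")
```

Even against the most expensive terrestrial option in that range, V-SAT works out to well over fifty times the price per GB, which is why operator pricing is the deciding factor for remote-connectivity use cases.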

Satellite Internet will need the government's support in simplifying the clearance process, creating a conducive ecosystem, and eventually tying it in with the larger aegis of the "Digital India" program.

Text

Any conversations around technology themes like Workspace Modernization, Digital Transformation or Cloudification are not complete unless the security aspects are discussed.

Not a single day goes by when we don’t hear about some security breach. Some are planned, while a few are accidental, but the loss incurred either way is significant.

The past two years have been significant in digital consumption worldwide, and India is no exception. There are hardly any companies which do not have a digital transformation mandate. Cloud adoption and penetration have hit an all-time high and will only grow in the next few years.

Over the last few years, a lot of emphasis was placed on securing the perimeter, as most of us used to work and access applications from within the corporate network. But with remote work on the rise, endpoint protection is once again a priority for most tech decision-makers.

As the volume and the levels of security breaches have grown, the solution coverage also has seen a strategic shift in how it is administered.

Until a few years ago, endpoint security meant anti-virus, which was seen as a stand-alone product designed to protect a single endpoint (laptop, mobile, desktop, IoT etc.). With the advent of endpoint protection platforms, one does not see the endpoint in isolation but as an integral part of the enterprise network, with visibility into all connected endpoints from a single location.

Further, features like automatic updates, behavioural analysis etc., make today’s platforms more holistic.

The endpoint is still the most vulnerable, with more than three out of four successful breaches originating at the endpoint. And with remote work becoming a standard practice, more and more employees are connecting to their corporate networks from devices outside their office, forcing many CISOs to rethink their security posture. With zero-day attacks becoming more common, managing endpoints is becoming more critical.

Text

Two topics which are doing the rounds in the enterprise tech space are Data Center Modernization and the confederation of multi-cloud and hybrid cloud. One technology which helps stitch these pieces together is Hyper-Converged Infrastructure (HCI).

Some big names hog the limelight, but it is important first to understand your requirement and then map it to the solution's capability framework.

While tech specifications are a critical element in the success of the deployment, one also needs to factor in the complexity of the entire process. One of the biggest challenges in managing a legacy three-tier infrastructure is manageability and coordination with multiple vendors to achieve the desired results. Hence, one of the foremost principles to keep in mind is that your HCI solution should be simple and easy to use. Please remember that this was one of the reasons why you went for it in the first place. The purpose of HCI is to simplify the management of IT. HCI should not add to your challenges but rather help you resolve them.

Given today’s IT posture for most large enterprises who have to deal with a multi-cloud hybrid IT environment, one must try looking for solutions which provide a single pane of glass view of your compute, storage, network fabric and the hypervisor which manages all of it.

When one speaks about HCI, more often than not, it is said in reference to Hybrid Cloud because HCI gives you the closest “cloud-like” experience in an on-premise deployment.

Another benefit of a simple interface is that it reduces the instances of risk, which in turn supports faster innovation and a quicker time to market.

And lastly, please bear in mind that regardless of which infrastructure we speak about (bare metal, public or private cloud), the heart of the entire tech juggernaut is the application. So it is vital that no matter which HCI solution you choose, it should allow all enterprise applications to run, regardless of the scale of operations.