
Microsoft Azure Online Data Engineering | Azure Databricks Training

Azure Databricks Connectivity with Power BI Cloud

Azure Databricks can be connected to Power BI to combine the strengths of both platforms: data processing in Databricks, and analysis and visualization in Power BI.

Here are the general steps to establish connectivity between Azure Databricks and Power BI Cloud:

1. Set up Azure Databricks:

- Make sure you have an Azure Databricks workspace provisioned in your Azure subscription.

- Create a cluster within Databricks to process your data.

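2. Locate the Databricks JDBC/ODBC Connection Details:

- Open your Azure Databricks workspace.

- Go to the "Compute" tab (labeled "Clusters" in older workspaces) and select your cluster.

- Under "Advanced Options," open the "JDBC/ODBC" tab. There is nothing to enable; the tab lists the connection details for the cluster.

3. Get JDBC/ODBC Connection Information:

- Note down the connection details shown there, in particular the Server Hostname and HTTP Path (both also appear inside the JDBC URL). For authentication, Power BI typically uses a Databricks personal access token or a Microsoft Entra ID (Azure Active Directory) sign-in rather than a separate database username and password.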

4. Power BI Desktop:

- Open Power BI Desktop.

5. Get Data:

- In Power BI Desktop, go to the "Home" tab and select "Get Data."

6. Choose Databricks Connector:

- In the "Get Data" menu, select "More..." to open the full list of connectors.

- Search for "Databricks" and select the "Azure Databricks" connector.

7. Enter Connection Details:

- Enter the Server Hostname and HTTP Path obtained from the Databricks workspace, and choose Import or DirectQuery as the data connectivity mode.

- Provide the necessary authentication details, such as a personal access token or a Microsoft Entra ID sign-in.

8. Access Data:

- After successful connection, you can access tables, views, or custom queries in Azure Databricks from Power BI.

9. Load Data into Power BI:

- Once connected, you can preview and select the data you want to import into Power BI.

10. Create Visualizations:

- Use Power BI to create visualizations, reports, and dashboards based on the data from Azure Databricks.

11. Refresh Data:

- Set up data refresh schedules in Power BI Service to keep your reports up to date.

Additional Considerations:

- Ensure that the network settings of the Databricks workspace (for example, IP access lists or private networking) allow connections from the Power BI service.

- The built-in Azure Databricks connector in Power BI generally works without a separately installed driver; other tools that connect over JDBC/ODBC may need the Databricks driver installed.

Keep in mind that the exact steps and options might vary based on updates to Azure Databricks, Power BI, or related components. Always refer to the official documentation for the most accurate and up-to-date information.
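The Power BI connector usually asks for a server hostname and an HTTP path, and both are embedded in the JDBC URL shown in the workspace. The sketch below pulls them out of such a URL. The function name `parse_databricks_jdbc_url` and the example URL are hypothetical, and the URL shape (`jdbc:databricks://host:port/...;key=value;...`) is an assumption that may differ between driver versions.

```python
from urllib.parse import urlparse


def parse_databricks_jdbc_url(jdbc_url: str) -> dict:
    """Split a Databricks-style JDBC URL into the fields Power BI asks for."""
    if not jdbc_url.startswith("jdbc:"):
        raise ValueError("expected a URL starting with 'jdbc:'")
    # Everything before the first ';' is scheme://host:port/path,
    # everything after it is a list of key=value settings.
    location, _, raw_props = jdbc_url[len("jdbc:"):].partition(";")
    parsed = urlparse(location)
    props = dict(
        item.split("=", 1) for item in raw_props.split(";") if "=" in item
    )
    return {
        "server_hostname": parsed.hostname,
        "port": parsed.port,
        "http_path": props.get("httpPath"),
    }


# Hypothetical URL in the assumed shape; replace it with the one from your workspace.
example_url = (
    "jdbc:databricks://adb-1234567890123456.7.azuredatabricks.net:443/default;"
    "transportMode=http;ssl=1;"
    "httpPath=sql/protocolv1/o/1234567890123456/0101-123456-abcdefgh;"
    "AuthMech=3"
)
details = parse_databricks_jdbc_url(example_url)
print(details["server_hostname"], details["http_path"])
```

The two printed values are what you would paste into the Server Hostname and HTTP Path fields of the connector dialog.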

Visualpath is the Leading and Best Institute for learning Azure Data Engineering Training. We provide Azure Databricks Training, you will get the best course at an affordable cost.

Attend Free Demo Call on - +91-9989971070.

Visit Our Blog: https://azuredatabricksonlinetraining.blogspot.com/

Visit: https://www.visualpath.in/azure-data-engineering-with-databricks-and-powerbi-training.html

#azuredataengineertraining#powerbionlinetraining#azuredataengineertraininghyderabad#azuredataengineer#azuredatabrickstraining


Azure Databricks Training | Data Engineering Training Hyderabad

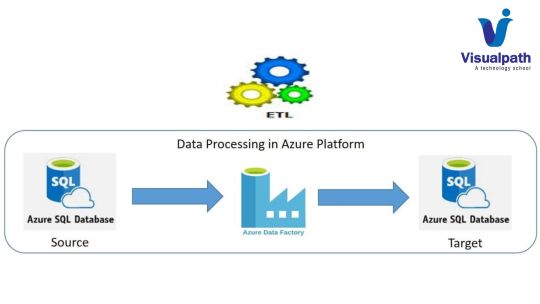

File Incremental Loads in ADF - Databricks & Powerbi

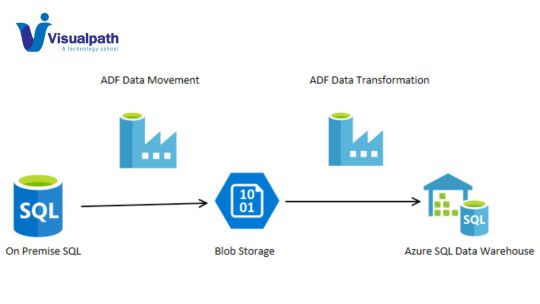

In Azure Data Factory (ADF), performing incremental loads is a common requirement when dealing with large datasets to minimize the amount of data transferred and improve overall performance. Incremental loads involve loading only the new or changed data since the last successful load.

Here are the general steps to implement file incremental loads in Azure Data Factory:

1. Identify the Incremental Key: Determine a column or set of columns in your data whose value increases as records are added or changed, such as a last-modified timestamp or an ever-increasing ID. This is often referred to as the incremental key. For files, the file's last-modified time can play the same role.

2. Maintain a Last Extracted Value: Store the last successfully extracted value for the incremental key. This can be stored in a database table, Azure Storage, or any other suitable location. A common practice is to use a watermark column to track the last extraction timestamp.

3. Source Data Query: In the source settings of your copy activity in ADF, filter the data based on the incremental key and the last extracted value.

For example, if you're using a SQL database, the query might look like:

```sql
SELECT *
FROM YourTable
WHERE IncrementalKey > @LastExtractedValue
```

4. Use Parameters: Define parameters in your ADF pipeline to hold values like the last extracted value. You can pass these parameters to your data flow or source query.

5. Data Flow or Copy Activity: Use a data flow or copy activity to move the filtered data from the source to the destination. Ensure that the destination data store supports efficient loading for incremental data.

6. Update Last Extracted Value: After a successful data transfer, update the last extracted value in your storage (e.g., a control table or Azure Storage).

7. Logging and Monitoring: Implement logging and monitoring within your pipeline to track the progress of incremental loads and identify any issues that may arise.

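Here's a simple example using a parameterized query in the source of a Copy activity. The query belongs to the activity's source rather than to the dataset definition. `LastExtractedValue` is assumed to be a pipeline parameter, and `YourSinkDataset` is a placeholder name:

```json
{
  "name": "CopyIncrementalRows",
  "type": "Copy",
  "inputs": [
    { "referenceName": "YourSourceDataset", "type": "DatasetReference" }
  ],
  "outputs": [
    { "referenceName": "YourSinkDataset", "type": "DatasetReference" }
  ],
  "typeProperties": {
    "source": {
      "type": "AzureSqlSource",
      "sqlReaderQuery": {
        "value": "SELECT * FROM YourTable WHERE IncrementalKey > '@{pipeline().parameters.LastExtractedValue}'",
        "type": "Expression"
      }
    },
    "sink": { "type": "AzureSqlSink" }
  }
}
```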

Remember that the specific implementation may vary based on your source and destination data stores. Always refer to the official Azure Data Factory documentation.
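The watermark pattern in the steps above can be tried end to end without any Azure resources. The following is a minimal sketch that uses Python's built-in `sqlite3` module in place of ADF and a real database; the table names (`source_rows`, `dest_rows`, `watermark`), the column names, and the function `incremental_copy` are all hypothetical, chosen only for illustration.

```python
import sqlite3


def incremental_copy(conn: sqlite3.Connection) -> int:
    """Copy rows newer than the stored watermark, then advance the watermark."""
    cur = conn.cursor()
    # Read the last successfully extracted value.
    (last_extracted,) = cur.execute(
        "SELECT last_extracted FROM watermark WHERE table_name = 'source_rows'"
    ).fetchone()
    # Filter the source on the incremental key.
    rows = cur.execute(
        "SELECT id, payload, modified_at FROM source_rows WHERE modified_at > ?",
        (last_extracted,),
    ).fetchall()
    if not rows:
        return 0
    # Load only the filtered rows into the destination.
    cur.executemany("INSERT INTO dest_rows VALUES (?, ?, ?)", rows)
    # Advance the watermark only after the load has succeeded.
    new_value = max(row[2] for row in rows)
    cur.execute(
        "UPDATE watermark SET last_extracted = ? WHERE table_name = 'source_rows'",
        (new_value,),
    )
    conn.commit()
    return len(rows)


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE source_rows (id INTEGER, payload TEXT, modified_at TEXT);
    CREATE TABLE dest_rows (id INTEGER, payload TEXT, modified_at TEXT);
    CREATE TABLE watermark (table_name TEXT PRIMARY KEY, last_extracted TEXT);
    INSERT INTO watermark VALUES ('source_rows', '2024-01-01T00:00:00');
    INSERT INTO source_rows VALUES (1, 'old', '2023-12-31T09:00:00');
    INSERT INTO source_rows VALUES (2, 'new', '2024-01-02T09:00:00');
    """
)
first_run = incremental_copy(conn)   # copies only the row newer than the watermark
second_run = incremental_copy(conn)  # nothing new since the watermark was advanced
print(first_run, second_run)
```

Updating the watermark after the copy, rather than before, means a failed load is simply retried from the old watermark on the next run.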


Azure Databricks Training | Power BI Online Training

Get started analyzing with Spark | Azure Synapse Analytics

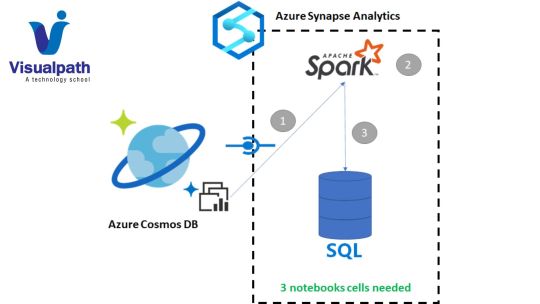

Azure Synapse Analytics (formerly Azure SQL Data Warehouse) is a cloud-based analytics service provided by Microsoft. It enables users to analyze large volumes of data using both on-demand and provisioned resources. A Spark connector for Azure Synapse lets Spark read from and write to data stored in Azure Synapse Analytics, making it easier to analyze and process large datasets.

Here are the general steps to use Spark with Azure Synapse Analytics:

1. Set up your Azure Synapse Analytics workspace:

- Create an Azure Synapse Analytics workspace in the Azure portal.

- Set up the necessary databases and tables where your data will be stored.

2. Set up Apache Spark:

- Use an environment that provides Apache Spark, such as an Azure Databricks cluster or a Synapse Spark pool.

- Configure Spark to work with your Azure Synapse Analytics workspace.

3. Use the Synapse Spark connector:

- The Synapse Spark connector allows Spark to read and write data to/from Azure Synapse Analytics.

- Include the connector in your Spark application by adding the necessary dependencies.

4. Read and write data with Spark:

- Use Spark to read data from Azure Synapse Analytics tables into DataFrames.

- Perform your data processing and analysis using Spark's capabilities.

- Write the results back to Azure Synapse Analytics.

Here is an example in Scala that uses the Azure Synapse connector available in Azure Databricks (`com.databricks.spark.sqldw`). The connector stages data in Azure storage, so it needs a `tempDir` location in addition to the JDBC connection details:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder.appName("SynapseSparkExample").getOrCreate()

// Define the Synapse connector options.
// tempDir is the staging location in Azure storage that the connector requires.
val options = Map(
  "url" -> "jdbc:sqlserver://<synapse-server-name>.database.windows.net:1433;database=<database-name>",
  "user" -> "<username>",
  "password" -> "<password>",
  "tempDir" -> "abfss://<container>@<storage-account>.dfs.core.windows.net/<temp-path>",
  "forwardSparkAzureStorageCredentials" -> "true"
)

// Read data from Azure Synapse Analytics into a DataFrame
val synapseData = spark.read
  .format("com.databricks.spark.sqldw")
  .options(options)
  .option("dbTable", "<schema-name>.<table-name>")
  .load()

// Perform Spark operations on the data

// Write the results to a separate table; the default save mode fails if the table already exists
synapseData.write
  .format("com.databricks.spark.sqldw")
  .options(options)
  .option("dbTable", "<schema-name>.<output-table-name>")
  .mode("overwrite")
  .save()
```

Make sure to replace placeholders such as `<synapse-server-name>`, `<database-name>`, `<schema-name>`, `<table-name>`, `<output-table-name>`, `<username>`, `<password>`, `<container>`, `<storage-account>`, and `<temp-path>` with your actual Synapse Analytics and storage details.

Keep in mind that connector options change over time, so it's advisable to check the latest documentation for Azure Synapse Analytics and the Synapse Spark connector for updates or additional features.
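When the same read is done from a Python notebook, it can help to assemble the connector options in one place and fail early if a setting is missing. The helper below is a sketch only: `build_synapse_options` is a hypothetical name, the option keys mirror the Scala example, and `tempDir` is the staging location in Azure storage that the Databricks Synapse connector expects.

```python
def build_synapse_options(server, database, table, user, password, temp_dir):
    """Assemble connector options and raise early if any setting is empty."""
    settings = {
        "server": server,
        "database": database,
        "table": table,
        "user": user,
        "password": password,
        "temp_dir": temp_dir,
    }
    missing = [name for name, value in settings.items() if not value]
    if missing:
        raise ValueError("missing connection settings: " + ", ".join(missing))
    return {
        "url": f"jdbc:sqlserver://{server}.database.windows.net:1433;database={database}",
        "dbtable": table,
        "user": user,
        "password": password,
        "tempDir": temp_dir,
    }


# Placeholder values for illustration; none of these resources exist.
options = build_synapse_options(
    server="my-synapse-server",
    database="sales",
    table="dbo.orders",
    user="loader",
    password="example-password",
    temp_dir="abfss://staging@mystorageaccount.dfs.core.windows.net/tmp",
)
print(options["url"])

# In a notebook with a live SparkSession named `spark`, the options could be used as:
# df = spark.read.format("com.databricks.spark.sqldw").options(**options).load()
```

In practice the password would come from a secret store rather than a literal string.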


Azure Databricks Training | Azure Data Engineering Training in Ameerpet

Power BI Online Training - Visualpath is an online Azure Data Engineering training institute in Hyderabad. Complete ADE training is available in Hyderabad, the USA, Canada, the UK, and Australia. You can schedule a free demo by calling +91-9989971070.

Google form: https://bit.ly/3tbtTFc

WhatsApp: https://www.whatsapp.com/catalog/919989971070/


Microsoft Power BI Training | Azure Data Engineering Training

Unlocking the Synergy of Databricks and PowerBI | Visualpath

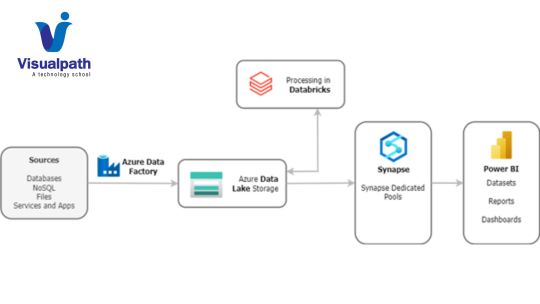

In the dynamic landscape of data management, organizations are constantly seeking innovative solutions to harness the power of their data. Azure Data Engineering, a comprehensive suite of tools and services, has emerged as a pivotal force in this journey. Within this ecosystem, the integration of Databricks and PowerBI stands out, offering a seamless and powerful solution.

What Are Azure Data Engineering, Databricks, and Power BI?

Azure Data Engineering refers to the set of services provided by Microsoft Azure to design, implement, and manage data workflows efficiently. At its core, it aims to streamline the process of collecting, storing, processing, and visualizing data. Databricks, a unified analytics platform, and PowerBI, a business intelligence tool, work in tandem to elevate the capabilities of Azure Data Engineering.

What Are the Key Aspects of Azure Data Engineering, Databricks, and Power BI?

Databricks Integration:

Azure Databricks enhances the data engineering process by providing a collaborative environment for data scientists, engineers, and analysts. It facilitates the development of scalable data pipelines and the execution of complex data transformations. The integration with Azure ensures seamless connectivity with various data sources, enabling a unified approach to data processing.

Powerful Data Transformation:

Databricks empowers data engineers to perform advanced data transformations using languages like Python and Scala. This capability is crucial for preparing raw data into a structured and usable format. PowerBI complements this by providing an intuitive interface to visualize and explore the transformed data, allowing for quick insights and informed decision-making.

Scalability and Performance:

Leveraging the cloud-native architecture of Azure, Databricks ensures scalability by dynamically allocating resources as per the workload. This enables organizations to handle large datasets and complex computations efficiently. PowerBI, with its ability to handle vast amounts of data, ensures that insights are delivered with speed and accuracy.

Real-time Analytics:

Azure Data Engineering with Databricks and PowerBI supports real-time analytics, allowing organizations to make data-driven decisions in near real-time. This is particularly crucial in today's fast-paced business environment.

Conclusion:

The synergy between Azure Databricks and PowerBI within the Azure Data Engineering framework offers organizations a powerful solution to unlock the true potential of their data. By seamlessly integrating data processing, transformation, and visualization, this combination not only enhances efficiency but also provides a foundation for informed decision-making. As organizations continue their data-driven journey, embracing these key aspects can pave the way for a more agile, scalable, and insightful future.


Power BI Online Training | Data Engineering Training Hyderabad

Synergy of Big Data | Databricks and PowerBi

In the dynamic landscape of data management, organizations are increasingly turning to powerful cloud platforms like Azure for their data engineering needs. Azure, with its comprehensive suite of services, offers an ecosystem that enables seamless data processing, storage, and analytics. Two key components of this ecosystem are Databricks and Power BI.

Databricks

Databricks, a unified analytics platform, is at the forefront of Azure's data engineering arsenal. Leveraging Apache Spark, Databricks empowers organizations to process large-scale data sets with lightning speed and efficiency. Its collaborative environment allows data engineers, data scientists, and business analysts to work seamlessly together, fostering a culture of innovation and agility.

Azure Databricks

Azure Databricks integrates seamlessly with various Azure services, enabling the creation of end-to-end data pipelines. From data ingestion to transformation and storage, Databricks streamlines the entire data engineering process. The platform supports multiple programming languages, making it accessible to a wide range of users, while its auto-scaling capabilities ensure optimal resource utilization.

Power BI

Power BI is Microsoft's business analytics tool that transforms raw data into insightful visualizations and interactive reports. Integrating Power BI with Azure Databricks unlocks the full potential of data engineering efforts. The Power BI connector for Azure Databricks allows users to pull in data directly from Databricks clusters, facilitating near-real-time analytics and reporting.

What Are the Advantages of Big Data Integration?

One of the key advantages of this integration is the ability to create data pipelines in Databricks and visualize the results in PowerBI dashboards. This end-to-end integration enhances decision-making processes by providing stakeholders with timely and actionable insights.

Moreover, the scalability of Azure allows organizations to handle increasing data volumes effortlessly. As data grows, Databricks and PowerBI seamlessly scale to meet the demands, ensuring that analytics and reporting remain robust and performant.

Collaboration between data engineers and business analysts becomes more meaningful with this integration. Data engineers can focus on optimizing data pipelines in Databricks, while business analysts leverage PowerBI's intuitive interface to derive insights and create impactful visualizations.

Conclusion:

The integration of Azure Databricks and PowerBI represents a powerful alliance. Organizations leveraging this combination benefit from a unified platform that streamlines data processing, analysis, and reporting. As the digital landscape continues to evolve, embracing such advanced tools becomes imperative for staying ahead in the data-driven era.


Azure Databricks Training | Power BI Online Training

Why Is the Integration of Big Data Paramount? - Visualpath

In the ever-evolving landscape of data analytics, the seamless integration of powerful tools is imperative for efficient data engineering. Apache Spark, a widely adopted open-source distributed computing system, finds a harmonious partnership with Microsoft Power BI when orchestrated through Databricks, creating a dynamic ecosystem for accelerated data processing and visualization.

Key Advantages of Azure Data Engineering (ADE) with Databricks and Power BI

One of the key advantages of using Databricks lies in its collaborative and interactive workspace, which facilitates seamless collaboration between data engineers, data scientists, and business analysts. The platform's support for various programming languages, such as Python, Scala, and SQL, ensures flexibility and caters to the diverse skill sets within a data team.

The integration of Databricks with Power BI brings forth a comprehensive solution for end-to-end data analytics. Power BI, a business intelligence tool by Microsoft, excels in data visualization and reporting. By connecting Power BI to Databricks, organizations can effortlessly tap into the processed data, creating visually appealing and insightful dashboards.

The integration process is streamlined, allowing users to connect Power BI directly to Databricks clusters. This direct connection ensures that the latest data is readily available for visualization, eliminating the need for manual data transfers and reducing the time-to-insight significantly.

Additionally, the Databricks and Power BI integration provides the flexibility to schedule and automate data refreshes, so that dashboards and reports reflect recent data without manual effort. For data that must always be current, DirectQuery mode queries Databricks when the report is viewed instead of relying on scheduled refreshes. This connectivity enhances the agility of data-driven decision-making processes within an organization.

Conclusion:

The integration of Databricks with Power BI creates a powerful synergy that accelerates data engineering processes. The combination of Databricks' distributed computing capabilities and Power BI's intuitive visualization tools provides a comprehensive solution for organizations seeking to extract actionable insights from their data. As businesses continue to prioritize data-driven strategies, the collaboration between Databricks and Power BI stands as a testament to that evolving landscape.


Power BI Online Training | Data Engineering Training Hyderabad

File Incremental Loads in ADF: Azure Data Engineering

Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create, schedule, and manage data pipelines. Incremental loading is a common scenario in data integration, where you only process and load the new or changed data since the last execution, instead of processing the entire dataset.

Below are the general steps to implement incremental loads in Azure Data Factory:

1. Source and Destination Setup: Ensure that your source and destination datasets are appropriately configured in your data factory. For incremental loads, you typically need a way to identify the new or changed data in the source. This might involve having a last modified timestamp or some kind of indicator for new records.

2. Staging Tables or Files: Create staging tables or files in your destination datastore to temporarily store the incoming data. These staging tables can be used to store the new or changed data before it is merged into the final destination.

3. Data Copy Activity: Use the "Copy Data" activity in your pipeline to copy data from the source to the staging area. Configure the copy activity to use the appropriate source and destination datasets.

4. Data Transformation (Optional): If you need to perform any data transformations, you can include a data transformation activity in your pipeline.

5. Merge or Upsert Operation: Use a database-specific operation (e.g., Merge statement in SQL Server, upsert operation in Azure Synapse Analytics) to merge the data from the staging area into the final destination. Ensure that you only insert or update records that are new or changed since the last execution.

6. Logging and Tracking: Implement logging and tracking mechanisms to keep a record of when the incremental load was last executed and what data was processed. This information can be useful for troubleshooting and monitoring the data integration process.

7. Scheduling: Schedule your pipeline to run at regular intervals based on your business requirements. Consider factors such as data volume, processing time, and business SLAs when determining the schedule.

8. Error Handling: Implement error handling mechanisms to capture and handle any errors that might occur during the pipeline execution. This could include retry policies, notifications, or logging detailed error information.

9. Testing: Thoroughly test your incremental load pipeline with various scenarios, including new records, updated records, and potential edge cases.

Remember that the specific implementation details may vary based on your source and destination systems. If you're using a database, understanding the capabilities of your database platform can help optimize the incremental load process.
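The staging and merge steps above can be illustrated locally. This sketch uses Python's built-in `sqlite3` module and SQLite's `ON CONFLICT ... DO UPDATE` upsert as a stand-in for a `MERGE` statement in SQL Server or Azure Synapse; the `customers` and `customers_staging` tables, their columns, and the function `merge_staging` are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, modified_at TEXT);
    CREATE TABLE customers_staging (id INTEGER, name TEXT, modified_at TEXT);
    INSERT INTO customers VALUES (1, 'Asha', '2024-01-01'), (2, 'Ravi', '2024-01-01');
    -- The staging table holds one changed row (id 2) and one new row (id 3).
    INSERT INTO customers_staging VALUES (2, 'Ravi K', '2024-01-05'), (3, 'Meena', '2024-01-05');
    """
)


def merge_staging(conn: sqlite3.Connection) -> None:
    """Upsert staged rows into the final table, then clear the staging table."""
    with conn:  # commits on success, rolls back if either statement fails
        conn.execute(
            """
            INSERT INTO customers (id, name, modified_at)
            SELECT id, name, modified_at FROM customers_staging WHERE true
            ON CONFLICT(id) DO UPDATE SET
                name = excluded.name,
                modified_at = excluded.modified_at
            """
        )
        conn.execute("DELETE FROM customers_staging")


merge_staging(conn)
final_rows = conn.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
print(final_rows)
```

Running the merge and the staging cleanup in one transaction keeps the final table and the staging table consistent if the pipeline is retried after an error.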


Azure Databricks Training | Azure Data Engineering Training in Ameerpet

Unveiling Insights with Databricks and Power BI - Azure Data Engineering

Accelerating Data Engineering (ADE) is a crucial aspect of modern business intelligence, enabling organizations to extract meaningful insights from their data quickly. Databricks and Power BI are two powerful tools that, when integrated seamlessly, can streamline the data engineering process and empower decision-makers with real-time analytics.

The Strengths of Databricks and Power BI

Understanding Databricks: Databricks is a unified analytics platform that simplifies the process of building big data and AI solutions. It provides an Apache Spark-based environment for data engineering, machine learning, and collaborative data science, where data is refined before it is integrated with Power BI.

Connecting Databricks with Power BI: You can export your refined data from Databricks in a format suitable for Power BI, such as CSV or Parquet, or, more directly, use Power BI's native Azure Databricks connector to establish a connection with Databricks. Once connected, the data can be shaped further in Power BI's Power Query Editor.

Optimizing Data Transformation in Power BI: Leverage Power Query Editor in Power BI to perform additional data transformations if needed. This includes cleaning, shaping, and enriching the data to meet specific reporting requirements. Utilize Power BI's M language for advanced data manipulations and custom transformations.

Creating Interactive Dashboards: Develop interactive dashboards in Power BI to visualize the processed data. Power BI provides a user-friendly interface for building insightful reports and dashboards without the need for extensive coding. Leverage Power BI's drag-and-drop capabilities.

Implementing Real-Time Analytics: Databricks allows for real-time data processing. Integrate real-time streaming data with Power BI to enable live dashboards that reflect the most up-to-date insights. Explore Power BI's streaming datasets and real-time dashboards to provide decision-makers with timely information.

Ensuring Data Security and Compliance: Implement appropriate security measures to protect sensitive data during the ADE process. Both Databricks and Power BI offer robust security features to ensure compliance with data protection regulations. Utilize Power BI's row-level security (RLS) and Databricks' access controls.

Automating Workflows with Azure Data Factory: Integrate Azure Data Factory into your ADE workflow for orchestrating and automating data pipelines. This enhances efficiency by automating repetitive tasks and ensuring timely data updates.

Conclusion:

The integration of Databricks and Power BI streamlines the ADE process, allowing organizations to turn raw data into actionable insights efficiently. By understanding and harnessing the capabilities of these powerful tools, businesses can empower decision-makers with real-time analytics, interactive dashboards, and the ability to make informed decisions based on accurate and up-to-date information.
