More on Cryptocurrency

(Information as of 19 September 2017)

Evolution of Currency

9000 BC: People bartered goods they had in surplus for goods they needed

600 BC: Introduction of coins allowed for trade to flourish

1661: Paper money was mass produced and did not rely on raw materials like gold and silver

1946: The first charge card was introduced, and it has since evolved into today’s cashless payment cards

2009: Bitcoin launched and became the first fully implemented decentralised currency

Bitcoin

Bitcoin is the original cryptocurrency and was released as open-source software in 2009.

Using a new distributed ledger known as the blockchain, the Bitcoin protocol allows users to make peer-to-peer transactions in digital currency while avoiding the “double spending” problem.

No central authority or server verifies transactions; instead, the legitimacy of a payment is determined by the decentralised network itself.
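The double-spending check described above can be sketched in Python. This is a minimal illustration under simplifying assumptions: the network keeps a set of unspent coins, and a payment is valid only if the coin it spends is still in that set. The `Ledger` class, coin IDs and names are hypothetical, and real Bitcoin nodes also verify signatures, amounts and scripts.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Toy ledger of unspent coins (hypothetical, not Bitcoin's real data model)."""
    # Maps a coin ID to (owner, amount).
    unspent: dict = field(default_factory=dict)

    def add_output(self, coin_id: str, owner: str, amount: int) -> None:
        self.unspent[coin_id] = (owner, amount)

    def spend(self, coin_id: str, new_id: str, recipient: str) -> bool:
        """Move an unspent coin to a recipient; refuse if it was already spent.

        Ownership and signature checks are deliberately omitted here.
        """
        if coin_id not in self.unspent:
            return False  # already spent (or unknown): reject the double spend
        _, amount = self.unspent.pop(coin_id)
        self.unspent[new_id] = (recipient, amount)
        return True


ledger = Ledger()
ledger.add_output("coin-1", "alice", 50)
print(ledger.spend("coin-1", "coin-2", "bob"))    # True: first spend accepted
print(ledger.spend("coin-1", "coin-3", "carol"))  # False: the same coin cannot be spent twice
```

Because every node applies the same rule to the same shared ledger, the second payment is rejected everywhere without any central server deciding.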

Features: Fast P2P payments worldwide, low processing fees, decentralised, available to anyone, (partial) anonymity, transparent

Market capitalisation: $71.8 billion

Litecoin

Litecoin was launched in 2011 as an early alternative to Bitcoin.

Around this time, increasingly specialised and expensive hardware was needed to mine bitcoins, making it hard for regular people to get in on the action. Litecoin’s algorithm was an attempt to even the playing field so that anyone with a regular computer could take part in the network.

Its creator is a former Google employee and MIT graduate named Charlie Lee who wanted to make cryptocurrency more accessible and for Litecoin to be the “silver” to Bitcoin’s “gold”.

Key differentiations from Bitcoin: a different hashing algorithm (scrypt), chosen to make mining with specialised hardware harder; 4x faster block generation; faster transaction processing; a maximum of 84 million coins, compared to Bitcoin’s 21 million
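The "4x" figures follow from two protocol parameters: Bitcoin targets one block every 10 minutes, Litecoin one every 2.5 minutes, and the supply caps differ by the same factor. A small check using those published figures:

```python
# Published protocol parameters: target block time (minutes) and maximum supply (coins).
BITCOIN = {"block_minutes": 10.0, "max_supply": 21_000_000}
LITECOIN = {"block_minutes": 2.5, "max_supply": 84_000_000}

MINUTES_PER_DAY = 24 * 60


def blocks_per_day(params: dict) -> float:
    """Expected number of blocks per day at the target block time."""
    return MINUTES_PER_DAY / params["block_minutes"]


print(blocks_per_day(BITCOIN))                             # 144.0
print(blocks_per_day(LITECOIN))                            # 576.0
print(blocks_per_day(LITECOIN) / blocks_per_day(BITCOIN))  # 4.0
print(LITECOIN["max_supply"] / BITCOIN["max_supply"])      # 4.0
```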

Market capitalisation: $3.5 billion

Ripple

Ripple is considerably different from Bitcoin as it is essentially a global settlement network for other currencies eg. USD, Bitcoin, EUR, GBP or any other units of value eg. frequent flyer miles and commodities.

To make any such settlement, however, a tiny fee must be paid in XRP (Ripple’s native tokens) and these are what trade on cryptocurrency markets.

Because Ripple is intended as a new global settlement system for the exchange of currencies and other assets, many banks are experimenting with the technology. Banks counted as early supporters include Royal Bank of Canada, Santander, UBS and UniCredit.

There is no “mining” on the Ripple network - instead, there is an existing supply of 100 billion ripples, many of which are still held by the company. In mid-2017, the company announced it would lock up billions of dollars’ worth of ripples in escrow smart contracts to ease fears of the market being “flooded”.

Market capitalisation: $7 billion

Ethereum

Ethereum is an open software platform based on blockchain technology that enables developers to build and deploy decentralised applications.

On the Ethereum blockchain, instead of mining for bitcoin, miners work to earn Ether, a type of crypto token that fuels the network. Beyond being a tradeable cryptocurrency, Ether is also used by application developers to pay for transaction fees and services on the Ethereum network.

Ethereum has skyrocketed in value since its introduction in 2015 and is now the second most valuable cryptocurrency by market cap. It has increased in value by 1880% in the last year - a huge boom for early investors.

Market capitalisation: $28 billion

Ethereum Classic

In 2016, the Ethereum community faced a difficult decision. The DAO, a venture capital firm built on top of the Ethereum platform, had $50 million in ether stolen from it through a security vulnerability.

The majority of the Ethereum community decided to help The DAO by “hard forking” the blockchain, changing it so that the stolen funds were returned to The DAO.

The minority thought this idea violated the key foundation of immutability that the blockchain was designed around, and kept the original Ethereum blockchain the way it was. Hence, the “Classic” label.

Market capitalisation: $1.5 billion

Documentary: Banking on Bitcoin

The first Bitcoin was created on January 3, 2009.

Money is an accounting system - it is a way of recording who owns what, who has what, and who owes what to whom. Traditionally, you needed somebody who could stand as the central issuer - a trusted third party who could guarantee that the money was real. For hundreds of years now, governments have issued money.

Bitcoin is also an accounting system. It is a way of recording transactions, recording value, and it does it digitally, so you and I can send it to each other directly and everything is recorded in the open ledger. By monitoring and updating that ledger in a collective, consensus-based system, you do away with the need for somebody in the middle (bank) having to be that repository of all the information. And that’s what gets away from the fees, the inefficiencies, and ultimately the potential for corruption and risk that come with centralising information in that way.

It takes that trusted third-party function and automates it. It puts it into an open ledger that is online for anybody to see, so that every Bitcoin is accounted for and you know you’re not getting a counterfeit Bitcoin. It is a peer-to-peer electronic cash system.

Bitcoin was launched a few months after Lehman Brothers went bankrupt and the whole system nearly collapsed. What the crisis showed is that the existing system had some major flaws.

Because it is not controlled by a central company or person, it cannot easily be shut down. As its value grows, people will find more and more uses for it. It is easily transferable and anonymous. By 2140, there will be 21 million Bitcoins, and that is the cap. There is only a fixed amount of gold, and there is only a fixed amount of Bitcoin.
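The 21 million cap and the 2140 date both fall out of the issuance schedule. A minimal sketch, assuming Bitcoin's published parameters (a 50 BTC block reward at launch, halved every 210,000 blocks, with one block roughly every 10 minutes) and treating every block as taking exactly 10 minutes:

```python
SATOSHIS_PER_BTC = 100_000_000
INITIAL_SUBSIDY = 50 * SATOSHIS_PER_BTC  # block reward at launch, in satoshis
HALVING_INTERVAL = 210_000               # blocks between reward halvings
BLOCK_MINUTES = 10                       # target time between blocks


def supply_schedule():
    """Return (total satoshis ever issued, number of reward eras)."""
    total = 0
    subsidy = INITIAL_SUBSIDY
    eras = 0
    while subsidy > 0:
        total += subsidy * HALVING_INTERVAL
        subsidy >>= 1  # integer halving: fractions of a satoshi are dropped
        eras += 1
    return total, eras


total, eras = supply_schedule()
print(total / SATOSHIS_PER_BTC)  # 20999999.9769
years = eras * HALVING_INTERVAL * BLOCK_MINUTES / (60 * 24 * 365.25)
print(2009 + int(years))         # 2140
```

Because the reward is halved with integer arithmetic, the total comes out fractionally below 21 million rather than exactly on it.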

Before, if you wanted to send something of value across the Internet, you had to get somebody else involved: a credit card company, PayPal, or maybe a bank. The promise of Bitcoin is that you send this currency directly to another person, and the Bitcoin network performs the function that PayPal or the other companies would normally perform.

Cryptocurrency is a system of money that uses digital encryption. Its origins lie in the Cypherpunk movement, a fringe subculture built around cryptography and secret messages, which grew out of a love of the Internet and its possibilities. Cryptography suggested that you could give birth to a new world out of the Internet, one that lives outside the nation-state and outside the structures of power and hierarchy associated with it. The Cypherpunks talked about the need for, and the possibility of, a digital currency that was anonymous or could be anonymised using cryptography.

The Cypherpunks who emerged in the early ‘90s were hyper-concerned about privacy and personal liberty, and a lot of people came up with their own systems - some of which came very close to happening. The one that probably came closest was DigiCash from David Chaum. He claimed privacy of payments was essential for democracy - not because you need to make private payments in order to express yourself, but because to inform yourself you may need to purchase information, and that is what allows you to have opinions worth expressing. Chaum inspired the Cypherpunk movement, and the break between them came when Chaum realised he would need existing institutions to help him with DigiCash and started talking to governments and banks. It came close to happening in the late ‘90s, but nobody was ready for it. Other than a few experiments here and there by Hal Finney and Nick Szabo, the conversation around this died down.

However, it came back to life after the financial crisis, with people going back to those 1990s experiments and looking at new ways of putting those ideas together. Hal Finney came up with his own system, Adam Back had Hashcash, Wei Dai had b-money, and Nick Szabo had Bit Gold. In 2008, Satoshi Nakamoto took a lot of these ideas and made them work. He created an encryption-based protocol (not really a currency) built on a ledger called the Blockchain, which allows many kinds of transactions to occur; contracts and all kinds of other things could be built into it. It does this through a system of consensus building in which multiple computers all participate in managing the Blockchain ledger - a digital document that keeps track of all the payments.

Bitcoin is really a money supply controlled by a computer. The key point is that this is a distributed ledger: there is no central server. All the other ledgers we have (banking ledgers, company ledgers) sit inside a single company, which means they have one point of attack and can be hacked, eg. JP Morgan, Home Depot, Target. The Bitcoin ledger resides on thousands of computers, so there is no single point to attack. Once a transaction is recorded on the Blockchain, it is permanent; it cannot be changed or altered. People are identified only by addresses rather than names, and wallets are secured with cryptography, so you don’t know who is spending the money. But every single Bitcoin out there has a history: you know where it’s been and the different addresses it has moved between.
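Why a recorded transaction "cannot be changed" can be illustrated with a hash-linked list of blocks. This is a simplified sketch, not Bitcoin's real block format (which hashes an 80-byte header containing a Merkle root of the transactions): each hypothetical block stores the SHA-256 hash of the block before it, so rewriting an old block breaks the link that follows it.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 of a block's contents, serialised deterministically."""
    encoded = json.dumps(block, sort_keys=True).encode()
    return hashlib.sha256(encoded).hexdigest()


def build_chain(transactions: list) -> list:
    """Create one block per transaction, each pointing at its predecessor's hash."""
    chain = []
    prev = "0" * 64  # placeholder hash for the first (genesis) block
    for tx in transactions:
        block = {"prev_hash": prev, "tx": tx}
        chain.append(block)
        prev = block_hash(block)
    return chain


def is_valid(chain: list) -> bool:
    """Check that every block still points at the true hash of its predecessor."""
    for earlier, later in zip(chain, chain[1:]):
        if later["prev_hash"] != block_hash(earlier):
            return False
    return True


chain = build_chain(["alice->bob 5", "bob->carol 2", "carol->dave 1"])
print(is_valid(chain))             # True
chain[0]["tx"] = "alice->bob 500"  # try to rewrite history in the first block
print(is_valid(chain))             # False
```

Tampering with the newest block is not caught by this check alone; in Bitcoin, the proof-of-work that would have to be redone for every later block is what makes rewriting history impractical.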

The most important pieces of the Bitcoin infrastructure are the miners. These are the computers tasked with maintaining the Blockchain ledger. They verify the information, update it and make sure that it is trustworthy. How are they incentivised to do so? As they go through the process of confirming transactions, they are simultaneously subjected to a very, very difficult computing test: the Bitcoin core protocol forces them to search for a number. All of these computers are ultimately competing to be the one that receives the payout roughly every ten minutes. But the payout is really the secondary component: they are rewarded with Bitcoin, but the more important task is validating and verifying transactions and maintaining the ledger.
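The "number" miners search for is a nonce that makes the block's hash fall below a target. A simplified proof-of-work sketch, assuming a single SHA-256 over a string (real Bitcoin applies SHA-256 twice to the block header and retargets the difficulty every 2,016 blocks to keep the ten-minute pace); `mine` and `difficulty_bits` are illustrative names:

```python
import hashlib


def mine(data: str, difficulty_bits: int):
    """Return the first nonce (and its hash) whose SHA-256 value is below the target."""
    target = 1 << (256 - difficulty_bits)  # fewer allowed values = harder puzzle
    nonce = 0
    while True:
        digest = hashlib.sha256(f"{data}{nonce}".encode()).hexdigest()
        if int(digest, 16) < target:
            return nonce, digest
        nonce += 1


nonce, digest = mine("block with pending transactions", difficulty_bits=16)
print(nonce, digest)  # digest begins with "0000": its top 16 bits are zero
```

Each extra difficulty bit doubles the expected number of attempts, which is why the test is trivial at 16 bits but enormously expensive at Bitcoin's real difficulty, while checking a winning answer still takes a single hash.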

Bitcoin, in being the first to achieve decentralised value exchange, transfers the process of trust to a collective agreement around a body of independent computers who are compelled by an incentive system to maintain that consensus and affirm the information to be correct. It is liberating because this can be done without intermediaries. The most important thing behind Bitcoin is not the currency, the key factor is the Blockchain. Bitcoin is cash with wings. It’s the ability to be able to take a local transaction and do it globally.

Charlie Shrem was the Vice Chairman of the Bitcoin Foundation and one of the Bitcoin millionaires who played an important role in the infrastructure of managing the movement of funds around the system in the early phase.

A live digital currency exchange was organised in New York - a fusion between Wall Street and Bitcoin. It would be hard for Bitcoin to develop in any way in which it wasn’t interacting with the regular economy of dollars and euros. The problem with exchanges is that it took one back to the old world Bitcoin was trying to get away from. As soon as you move back to an exchange, you’re moving back to a world where some third party has personal data on you, all of your money, the security vulnerabilities and none of the strengths Bitcoin was designed to provide.

Jed McCaleb created Mt. Gox because he couldn’t buy Bitcoins as quickly as he wanted to. He repurposed a URL he had previously used for a Magic: The Gathering card-trading site to buy and sell Bitcoin, and then sold the business when it took off quickly.

21 million Bitcoins are programmed to be released into the system. It’s a set number, but every single Bitcoin can be divided into a hundred million pieces, so there is room for it to expand as its use expands - especially in emerging markets in the developing world. A hundred-trillion-dollar note from the Reserve Bank of Zimbabwe, for example, is a reminder of what happens if governments screw up money. Zimbabwe is a good example of a government that lost the trust of its people by printing too much money and causing runaway hyperinflation.
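The smallest unit, one hundred-millionth of a bitcoin, is called a satoshi. A minimal conversion sketch, assuming amounts arrive as decimal strings (using `Decimal` avoids the rounding errors binary floats would introduce); `btc_to_satoshis` is a hypothetical helper:

```python
from decimal import Decimal

SATOSHIS_PER_BTC = 100_000_000  # each bitcoin divides into 100 million satoshis


def btc_to_satoshis(amount: str) -> int:
    """Convert a BTC amount given as a string to a whole number of satoshis."""
    sats = Decimal(amount) * SATOSHIS_PER_BTC
    if sats != sats.to_integral_value():
        raise ValueError("amounts smaller than one satoshi are not representable")
    return int(sats)


print(btc_to_satoshis("0.00000001"))  # 1, the smallest unit
print(btc_to_satoshis("0.015"))       # 1500000
print(21_000_000 * SATOSHIS_PER_BTC)  # 2100000000000000 units at the cap
```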

A lot of us take for granted the capacity to send, receive and store money electronically, because we are amongst the lucky 50% or so of the world who have bank accounts. 2.5 billion adults in the world do not have access to a bank account. A technology like Bitcoin has the capacity to bring those people into the financial system and give them the power to have a bank in their cellphone or pocket - opening up commerce to people currently excluded from it.

Someone who is working in another country and wants to send money back home currently uses places like Western Union to do remittances. Western Union typically serves an unbanked customer, either a migrant worker or an individual who doesn’t feel comfortable in the financial services sector. The problem with remittances is they’re really expensive. If I’m sending $100 back home, I may end up spending $5 or $10 just on fees to get that money back to my family, which is a huge percentage if you’re poor and working. Since Bitcoin doesn’t care about borders, I could send Bitcoin overseas, the people there could receive it and transfer it into local currency, making the overall process quicker, cheaper and more convenient.

At peak, Western Union handles around 750 transactions per second, and those happen instantly. On a Blockchain, you have to wait for verifications; in recent tests, it took about 15 minutes for each transaction to be validated by the network. This is a challenge as well.

In December 2010, Wikileaks (which solicits and publishes secrets, classified government documents and suppressed material from whistleblowers around the world) was under cyber attack from governments that wanted to shut it down. The Wikileaks scandal seemed to provide an opportunity for Bitcoin: the big banks and credit card companies had stopped processing payments for Wikileaks, and Bitcoin could provide a way to send it donations. However, Nakamoto was paranoid about Wikileaks drawing too much government attention to Bitcoin, aware that it was still young software with kinks that had not been worked out. Nakamoto eventually disappeared, and his identity has never been revealed. Bitcoin built on a small number of projects from the 1990s, eg. Hashcash and Bit Gold, which only a small group of people would have known about.

The first group was a bunch of more or less tech-minded coders. Then the Libertarians came on board and saw it through a political lens, embracing Bitcoin. Then the suits and venture capital money (Silicon Valley) came in. The more people got involved, the stronger the network got. In 2013 especially, Bitcoin mushroomed. Most Bitcoin companies at the time knew that a big part of the market for Bitcoin was people buying drugs on Silk Road, who needed Bitcoins to do so. What Ross Ulbricht did in creating Silk Road was take this uncommon but usable cryptocurrency and marry it with a streamlined, workable user interface for a free market that could sell anything. Ulbricht had an extremely Libertarian viewpoint and wanted to experiment with using technology to create free markets for people. It was the fruition of Cypherpunk dreams - the crypto-anarchist utopia they had imagined in the ‘90s: in theory, an anonymous website with anonymous money, used to buy any contraband imaginable. People sold drugs that were posted to customers all over the world.

Cyber-investigators can trace you back to your IP address if there is a crime: when you connect to another computer, your IP address can be tracked. On Tor, that link is removed because your traffic hops from computer to computer to computer, so there is no easy way to trace it back to your original one. Tor is an anonymising system, so if you use the Tor browser, you can browse with some degree of security. This is useful for plugging your banking information into a site so you don’t get hacked. The other component of Tor, which has similar uses, is called Tor Hidden Services. These are sites whose addresses end in “.onion” rather than “.com”, and that is its own little space where you can create websites and transact freely without oversight or regulation. People put websites there that are outside of law enforcement’s view: there are hackers for hire, malware, credit card dump services, etc.
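The "hopping" that makes Tor hard to trace is onion routing: the sender wraps a message in one encrypted layer per relay, and each relay can peel only its own layer, learning just the next hop. The sketch below is a toy under loud assumptions: the relay names are hypothetical, the keys are simply shared in a dictionary (real Tor negotiates a key with each relay in the circuit), and the XOR cipher is NOT secure and only stands in for real encryption.

```python
import hashlib
import json
import secrets


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream from SHA-256(key || counter). NOT secure; illustration only."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


def xor(data: bytes, key: bytes) -> bytes:
    """XOR data with the keystream; applying it twice with the same key restores the data."""
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def build_onion(message: str, path: list, keys: dict) -> bytes:
    """Wrap the message in one layer per relay; the first relay's layer ends up outermost."""
    next_hop, inner = "destination", message.encode()
    for relay in reversed(path):
        layer = json.dumps({"next": next_hop, "data": inner.hex()}).encode()
        inner = xor(layer, keys[relay])
        next_hop = relay
    return inner


def peel(onion: bytes, relay: str, keys: dict):
    """A relay removes its own layer and learns only the next hop and an opaque payload."""
    layer = json.loads(xor(onion, keys[relay]))
    return layer["next"], bytes.fromhex(layer["data"])


path = ["relay-a", "relay-b", "relay-c"]  # hypothetical three-hop circuit
keys = {relay: secrets.token_bytes(32) for relay in path}
onion = build_onion("hello", path, keys)

hop, data = path[0], onion
while hop != "destination":
    next_hop, data = peel(data, hop, keys)
    print(f"{hop} forwards to {next_hop}")
    hop = next_hop
print(data.decode())  # hello
```

No single relay sees both who sent the message and where it finally goes, which is the property the paragraph above relies on.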

BitInstant was born because if you want to move your dollars into a Bitcoin exchange, it takes at least three days. And since the major exchange, Mt. Gox, was in Tokyo, it could take a week or more. This was problematic, and Charlie Shrem (BitInstant founder) figured out that if you just held a balance of dollars at the exchange, you could go to him and say “Charlie, I’ll give you $100, will you give me $100 of credit at the exchange?” And you can then have your dollars at the exchange in ten seconds as opposed to a week. This was hugely helpful especially as Bitcoin is so volatile and you need to know exactly what price you’re going to get. At the time, aside from Mt. Gox, BitInstant was the way most Americans bought Bitcoins.

For the most part, BitInstant was taking steps to make sure that people weren’t using the service for illegal purposes. However, in dealing with BTCKing (Robert M. Faiella), Charlie made the mistake of acknowledging that he knew what BTCKing was doing: acquiring Bitcoins to sell them to Silk Road customers.

Ulbricht (Silk Road) was eventually convicted on seven charges, including narcotics and money laundering, in a Manhattan courtroom. There is a reason the case was tried in New York’s Southern District (where most financial regulation cases are dealt with, and where U.S. Attorney Preet Bharara and Senator Charles Schumer had been all over Bitcoin), and that was largely because of Bitcoin. Ulbricht was caught in San Francisco, and the Silk Road servers were outside the country. Ulbricht’s case was a Bitcoin case - Silk Road posed a huge threat to the marketplace and Wall Street. The idea that somebody could create a marketplace where you could transact freely without oversight or regulation had to be dealt a massive and public blow. Bitcoin monetised the anonymous part of the Internet and made it possible to trade in things that the Federal Government did not want you to trade in. Ulbricht was sentenced to life in prison. The Feds seized a large number of Bitcoins, and to sell those Bitcoins through an exchange, one had to comply with Federal and State law; at the time, no exchange met those regulations. The sale was therefore handled through an auction. This, in a way, legitimised Bitcoin’s use as a currency. The first live Bitcoin exchange thus happened on Wall Street.

In January 2014, Charlie Shrem was arrested and charged with money laundering for allowing people to move money between cash and Bitcoin via BitInstant. The day after Charlie’s arrest, a Bitcoin hearing was held in New York. Because criminal cases had brought virtual currency to light as a vehicle for money laundering and drug trafficking, New York aimed to propose a regulatory framework for virtual currency firms operating in the state within 2014. A BitLicense (a license for Bitcoin companies) was proposed, specifically tailored to virtual currencies. Benjamin Lawsky (the state’s financial regulator) was given the opportunity to write the rules for the industry from scratch.

Two weeks after the Bitcoin hearings, Mt. Gox suffered an irreversible collapse. Some estimate that nearly half a billion dollars of users’ Bitcoins went missing (stolen or lost) - about 6% of total circulation. It filed for bankruptcy, and Mark Karpeles (CEO) blamed hackers for the losses. The only reason it survived as long as it did was that the price of Bitcoin kept going up in 2013. The price peaked in late 2013 and started coming down in 2014. Mt. Gox’s failure was that it didn’t have the mainstream institutional controls required to take it to the next level. The important lesson was that you don’t leave money with a third party you don’t trust.

Lawsky claimed that if regulation were done right, it could create more confidence in Bitcoin and remove uncertainty. In the spring of 2015, Lawsky’s department handed down the final version of the BitLicense. A lot of companies complained that the regulations were too burdensome for them to operate in New York and still make money. The rules were created to ensure enough consumer protection, adequate cybersecurity, and adequate capitalisation so that companies wouldn’t collapse upon themselves. The Bitcoin Center (exchange) was eventually closed, unable to meet New York regulations. Lawsky left government (the Department of Financial Services) and opened a firm advising Bitcoin companies on navigating the BitLicense application process.

Banks and other companies have become interested in the Blockchain technology underlying Bitcoin - as it allows derivatives, securities and all manner of financial instruments to be transferred in complex and interesting ways. It seems as if banks are looking to create their own private Blockchain powered by their own computers rather than anonymous miners - giving them control over the system. These include ways to replace stock exchanges, finding new ways to originate loans, new ways to move money between countries - this goes back to the original idea of Bitcoin but it is now happening within these financial institutions rather than outside it, as Bitcoin had imagined.

Yet, the dynamics of the Blockchain do not change - it is still open source and control lies in the hands of its users.

Virtual Reality: The Future of Art

The Courtauld Institute - roles VR can play in today’s museum space

What does virtual reality mean?

Consumer technology - used en masse to increase volumes of people

Technology and art - Heidegger talked about technology and the question of art - a framework - art revealing peoples’ beings - meaning of what life is about - only becomes art if technology disappears and art comes through stronger - questioning the boundary of the real and virtual - seeing the world being made

In museums and galleries - creating worlds through works - interpretation, panels and displays to give meaning and context to work - auditory environment to experience works - architecture eg. Jean Nouvel creating sensory environments or realms

Where do we sit now? Collective encounters with object and art - bringing people together through virtual spaces.

Virtual reality as an artistic medium? A new material eg. the use of Google Tilt Brush - street artist’s natural extension - Art Basel use, interested in the experimentation eg. Robin Road - Playgroundless by a studio called Workflow was a collaboration at the Royal Academy where four people could plug into a virtual reality environment simultaneously - other industries also use VR eg. architecture

Disembodied eye in virtual space - similar to drones. New technology and ethical considerations, violence getting into a very “real” place

Using first person narrative to implicate viewers and present a certain position - whose voices are they? Recreating current positions? Representations of something?

“Moral panic” - difference between the media and the public, fear of AI - eg. Jordan Wolfson’s “Real Violence” at Sadie Coles - work that implicates the viewer as a spectator in a hyper-violent event - putting them into a complex and repellent subject position

Practice of creating VR is triangulated - Artists and galleries are involved, but also tech businesses eg. Google - who’s funding what and for what purpose? Given access to technologies through marketing agencies, therefore with restrictions eg. “no sex, no religion”

Google Arts and Culture - an “innovation partner” for the cultural sector, bringing art and technology together, serving as a mediator and not tracking or collecting hard data - facilitating

Zaha Hadid paintings and drawings - dynamic images exploring the possibilities of design - VR examining these designs in virtual spaces

How is curating in a VR space different from elsewhere? Need to consider where the audience’s bodies are in the exhibition space. How do you use the technology, make it a collective experience, and avoid the huge queues in museums and galleries? The RA used timed slots, booked and paid for in advance, to cap how many people could use it.

Immersive spaces and the power of visual projection - e.g. Media and Arts in Korea - projecting “Starry Night” by Van Gogh into a warehouse space in Seoul

When is the point when digital means you don’t need the museum anymore? Assuming VR is in a virtual world, why do you still need the gallery? Will VR supersede physical spaces and museums, or will these spaces still be needed as a point of access? Eg. Jon Rafman piece at Frieze

Role of curator becomes even more important - trusted voices to navigate through a wealth of information.

Technology puts the roles of curators, artists etc. in flux.

“Cultural value” - VR as a learning, discovery and creative tool

Tension in museum space - role of global museums (eg. British Museum) vs. small museums struggling for funding

Serpentine online digital commissions, called “software commissions” - utilising digital space as a place to commission artists

Idea of duplication with VR - multiple locations - challenge for museum collections: IP management - How do you put the Elgin Marbles back together again? Larger museums 3D-scan objects. How do regional museums become a part of this discourse?

Singapore is Asia’s best in attracting talent amid digital push: INSEAD report

http://www.todayonline.com/singapore/singapore-asias-best-attracting-talent-amid-digital-push-insead-report

18 April 2017, TODAY

Singapore ranks the highest in Asia in attracting and developing talent, reflecting not only its world-class education system but how it’s adapting skills in the digital era. The city-state took the No 2 spot behind Switzerland on the Global Talent Competitiveness Index, published on Tuesday (April 18) by the French business school, INSEAD. Australia was the only other Asia Pacific country ranked in the top 10.

The index assesses a country’s ability to enable, attract, grow and retain talent, as well as develop global knowledge and vocational and technical skills. High-ranking countries share some key advantages: Employment policies that favour flexibility, good education systems and technological competence.

Singapore’s government is seeking to build the economy into a regional high-tech hub. It’s helping small businesses adopt new technologies and supporting workers in getting re-skilled. With immigration curbs in place, the city state is pushing for automation of some low-skilled jobs, such as cleaners.

“Digital technologies will help small and exposed economies like Singapore punch above their weight by creating means for their businesses and talent to reach out to the global market,” said Ms Su-Yen Wong, chief executive officer of the Singapore-based Human Capital Leadership Institute, which helped compile the index.

Some of Asia’s biggest economies ranked much lower on the index. Japan dropped three spots to No 22 globally, while China was ranked at 54 and India at 92. Malaysia had the highest ranking of upper middle-income countries and came in at No 28 on the global index, beating wealthier nations such as South Korea, Spain and Italy. The South-east Asian nation scores high because of its vocational and technical skills and being open to foreign talent, according to the study.

Could digital detectives solve an ancient puzzle?

http://www.bbc.co.uk/news/magazine-39555462

18 April 2017, BBC News, Nick Holland

For more than two thousand years, people have believed that joint pain could be triggered by bad weather, but the link has never been proven. It has long been suspected by patients and medical professionals alike, and the theory dates back at least to Roman times, possibly earlier.

Each day Becky enters information about how she feels into an app on her phone, the phone's GPS pinpoints her location, pulls the latest weather information from the internet, and fires a package of data to a team of researchers. More than 13,000 volunteers have signed up for the same study, sending vast quantities of information into a database - more than four million data points so far. The app, called "Cloudy with a Chance of Pain" is part of a research project being run by Will Dixon. He is a consultant rheumatologist at Salford Royal Hospital and has spent years researching joint pain.

Digital epidemiology allows patients to send detailed information over the internet - which means they can do it more regularly, and of course you can get many more people to take part, thousands more; numbers that would be unthinkable using the old methods. By combing through that data, Professor Dixon hopes it will be possible to find correlations and clues that would have been hidden to doctors just a decade ago. His team will analyse the data over the coming year, and hope to find a definitive answer to the question.
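Looking for "correlations" in such data starts with something like a correlation coefficient. The sketch below computes Pearson's r between a weather variable and pain scores; the numbers are made up for illustration, the `pearson` helper is hypothetical, and the study's actual analysis methods are not described here.

```python
from math import sqrt


def pearson(xs: list, ys: list) -> float:
    """Pearson correlation coefficient between two equal-length series (-1 to 1)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up daily records for one volunteer: relative humidity (%) and self-reported pain (0-10).
humidity = [55, 62, 70, 81, 90, 66, 58]
pain = [3, 4, 4, 6, 7, 5, 3]
print(round(pearson(humidity, pain), 2))
```

A correlation on its own would not settle the question; a real analysis also has to account for differences between people, seasons and other confounders.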

Another study underway in the US has recruited more than 20,000 participants using an app that asks them to say "ahhhhhhh" into their phone. Named mPower, and built using technology developed by British academic Max Little, the project hopes to find out more about the onset and progression of Parkinson's disease. If the "ahhhhhhh" sound is smooth and unbroken, it has likely come from a healthy person. But if it breaks and wavers, it could suggest that the speaker may have Parkinson's. By monitoring the precise pattern and pitch of the sound, it may even be possible to determine how advanced the disease has become, or how strongly its symptoms are being felt at a given moment. That information could allow patients to take more precisely targeted doses of a drug to help manage the disease. The software is even being used in a clinical trial for a new drug. And again, it is the accumulation of vast amounts of data, volunteered by thousands of participants, that is making the study possible.
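One standard way to quantify how much a sustained "ahhhhhhh" wavers is jitter, the cycle-to-cycle variation in the voice's pitch period. This is a sketch of one common definition (local jitter), assuming the pitch periods have already been extracted from the recording; it is not a description of mPower's actual pipeline, and the period values are hypothetical.

```python
def local_jitter(periods: list) -> float:
    """Mean absolute difference between consecutive pitch periods, relative to the mean period."""
    if len(periods) < 2:
        raise ValueError("need at least two periods")
    diffs = [abs(a - b) for a, b in zip(periods, periods[1:])]
    return (sum(diffs) / len(diffs)) / (sum(periods) / len(periods))


steady = [8.0, 8.0, 8.0, 8.0, 8.0]    # hypothetical periods (ms) of a smooth, unbroken tone
wavering = [8.0, 8.6, 7.5, 8.9, 7.2]  # hypothetical periods of a tone that breaks and wavers
print(local_jitter(steady))    # 0.0
print(local_jitter(wavering))
```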

Another app, soon to be launched, will allow users to photograph their plate of food, and use artificial intelligence to work out what's on the plate. The technology could help people determine the nutritional content of their meal, and allow public health bodies to track how well any particular population is eating. It is being developed by Marcel Salathe, also a Professor of Digital Epidemiology and founder of what is likely the world's first lab dedicated to the field of study. He thinks the discipline could have particular benefits in parts of the world where basic medical infrastructure is lacking, but lots of people have smart-phones. Digital epidemiology could become the reporting network through which sickness outbreaks are initially detected, he says.

Rise of the Robots: What advances mean for workers

17 April 2017, BBC News, Tim Harford

http://www.bbc.co.uk/news/business-39296096

There are already 45,000 Kiva robots at work in Amazon warehouses. They carry the shelves to humans for them to select things - and improve efficiency up to fourfold.

Factories have had robots since 1961, when General Motors installed the first Unimate, a one-armed automaton that was used for tasks like welding.

But until recently, robots were strictly segregated from human workers - partly to protect the humans, and partly to stop them confusing the robots, whose working conditions had to be strictly controlled.

With some new robots, that’s no longer necessary. Rethink Robotics’ Baxter can generally avoid bumping into humans, or falling over if humans bump into it. Cartoon eyes indicate to human co-workers where it’s about to move. Historically, industrial robots needed specialist programming, but Baxter can learn new tasks from its co-workers.

The world’s robot population is expanding quickly - sales of industrial robots are growing by around 13% a year, meaning the robot “birth rate” is almost doubling every five years.

There has long been a trend to “offshore” manufacturing to cheaper workers in emerging markets. Now, robots are part of the “reshoring” trend that is returning production to established centres.

The progress that has happened is partly thanks to improved robot hardware, including better and cheaper sensors - essentially improving a robot’s eyes, the touch of its fingertips, and its balance. But it’s also about software: robots are getting better brains.

Attempts to invent artificial intelligence are generally dated to 1956, and a summer workshop at Dartmouth College for scientists with a pioneering interest in “machines that use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves”.

In the last few years, progress in AI has started to accelerate. Narrow AI involves algorithms that can do one thing very well, like playing Go, or filtering email spam, or recognising faces in your Facebook photos. Processors have become faster, data sets bigger, and programmers better at writing algorithms that can learn how to improve themselves. IBM’s Watson, which hit the headlines for beating human champions at the game show Jeopardy!, is already better than doctors at diagnosing lung cancer.

Erik Brynjolfsson and Andrew McAfee argue that there’s been a “great decoupling” between jobs and productivity - how efficiently an economy takes inputs, like people and capital, and turns them into useful stuff.

Historically, better productivity meant more jobs and higher wages. But they argue that this is no longer the case in the US, where productivity has been improving but jobs and wages haven't kept pace. Some economists worry that we're experiencing "secular stagnation" - where there's not enough demand to spur economies into growing, even with interest rates at or below zero.

Technology seems to be making more progress at thinking than doing: robots’ brains are improving faster than their bodies. Martin Ford, author of Rise of the Robots, points out that robots can land aeroplanes and trade shares on Wall Street, but still can’t clean toilets. For a glimpse of the future, we should look to another device now being used in warehouses: the Jennifer Unit. It’s a computerised headset that tells human workers what to do, down to the smallest detail. If you have to pick 19 identical items from a shelf, it’ll tell you to pick five, then five, then five, then four. That leads to fewer errors than saying “pick 19”.

In well-known pages from the Grundrisse, Marx spoke of a tendency, a limit point in the process of the valorisation of capital: the impossible possibility that capital might circulate "without circulation time," at an infinite velocity, such that the passage from one moment in the circulation of capital to the next would take place at the "speed of thought." Such a capital would return to itself even before taking leave of itself, passing through all of its phases in a process encountering no obstacles, in an ideal time without time - in the blinding flash of an instant without duration, a cycle contracting into a point. Bill Gates restaged this fantasy - a limit point of capital, towards which it strains, its vanishing point - in his "Business @ the Speed of Thought"...a contemporary formalisation of this threshold, summoning the possibility of the circulation of information that would, Gates fantasises, occur as "quickly and naturally as thought in a human being."

The Soul at Work - Franco “Bifo” Berardi, p.11

The Soul at Work - Franco “Bifo” Berardi

Preface

The soul is the clinamen of the body. It is how it falls, and what makes it fall in with other bodies. The soul is its gravity. This tendency for certain bodies to fall in with others is what constitutes a world.

The materialist tradition represented by Epicurus and Lucretius proposed a worldless time in which bodies rain down through the plumbless void, straight down and side-by-side, until a sudden, unpredictable deviation or swerve - clinamen - leans bodies toward one another, so that they come together in a lasting way. The soul does not lie beneath the skin. It is the angle of this swerve and what then holds these bodies together. It spaces bodies, rather than hiding within them; it is among them, their consistency, the affinity they have for one another. It is what they share in common: neither a form, nor some thing, but a rhythm, a certain way of vibrating, a resonance. Frequency, tuning or tone.

The All-you-can-eat Food Parcel, Wired Magazine

Nigel Gifford designs drones to deliver food aid in disaster zones. His humanitarian UAV, the Pouncer, is itself edible. He's the Somerset-based engineer behind Aquila, the Wi-Fi-beaming drone bought by Facebook in 2014 to connect 1.6 billion people to the Internet. In 2010, Gifford imagined Aquila (originally named Ascenta) as a high-altitude drone that could be used to beam Internet or mobile-phone connectivity to civilians below. The UAV was designed with solar panels that would give it enough power to stay airborne for 90 days, with a flexible central section that could adapt to securely carry any cargo. Facebook bought the drone for a reported $20 million. Now with its wingspan enlarged to that of a commercial airliner, Aquila made its first successful flight - a 96-minute cruise above Yuma, Arizona - on June 28, 2016. Since the sale, Gifford's new company Windhorse Aerospace has focused its energies on the Pouncer, a UAV whose three-metre-wide hull can enclose vacuum-packed foods. Its structure will be made from as yet unspecified baking components that can be consumed. It will have a 50kg payload that should feed 100 people for one day. GPS will guide it to within 8 metres of its target. Windhorse Aerospace will be testing its capabilities in the spring; by late 2017, it will be in production.

The Human in Google Assistant, Wired Magazine

Google Assistant is the chatty digital helper the company is using to turn search queries into conversations. Unveiled in the messaging app Allo in September 2016, then extended the following month to the Pixel phone and to Home, Google's rival to the Amazon Echo, Assistant is intended to be the character at the core of Google's products - its AI-powered answer to Siri. To construct Google Assistant's "easygoing, friendly" personality, a team within a division run by Google Doodle head Ryan Germick imagines likely questions, then comes up with a range of responses, which are then handed over to the developers to code (a low-tech method). Unlike in a film, this character isn't the hero. You, the person interacting with it, are the hero. That's why the Assistant can't be opinionated: it's there to be reliable, not to have depth. "If we gave it some dark conflict secret, that probably wouldn't be a great user experience." Humour is one of the most effective ways of building character...(It) can be used to deflect awkward questions, especially for an AI that is learning its way. In fact, the Assistant is learning to displace its human writers. As it takes requests and listens to the user's reactions, its machine-learning algorithm improves itself, a process Google refers to as "the transition".

Walter Benjamin by Esther Leslie

One of the poets who most fascinated him was Charles Baudelaire, who observed the twinning in modernity of the fugitive and eternal, the transitory and the immutable.

That Benjamin’s upbringing was comfortable in material terms is apparent from his own writings, the memories of a Berlin childhood that Benjamin wrote and rewrote, turned into verse, worked on and worked over for many years in adulthood.

When he was forty, only finally dislodged from dependence on the family home in Delbrückstrasse some three years earlier, he scuttled back into his childhood. This was not an act of self-indulgence, but rather part of his effort to understand how the impulses of the nineteenth century came to form the environments and the inhabitants of the present. Benjamin dredged up an enchanted world where the shock of sensual experiences impacts indelibly on mind and memory. He recorded the accoutrements of life in Berlin in 1900: the patterns on family dinnerware, the organic forms of garden chairs on the balconies…

From his own remembered childhood Benjamin extrapolated that a child’s senses are receptive, as a child’s world is new. The child receives the world so fully that the world forms the child. The world imprints itself, just as bodies do on the chemistry of photographs. The child is embedded in the materiality of its world.

Sound - newly recordable in Benjamin’s lifetime - also played a role in configuring experience.

For his first ten years Benjamin was formed in and by the rush of an imperial city, a mollusc constrained and protected by the city as it rapidly flung its energies into modernizing, with the introduction of new technologies of transportation and labour, leisure and war.

The child has an affinity for the world of objects, an openness to sensation, an ability to invent and transform, to play with waste scraps and turn them into treasures. Benjamin identified the ‘compulsion’ to adopt similarities to the world of objects as a primitive impulse, reaffirmed by childish mimesis and unacknowledged in the adult world.

In 1905, Benjamin was sent away to a progressive coeducational boarding school in Haubinda, Thuringia, for almost two years. While there, he was taught by the educational reformer Gustav Wyneken, who promoted the doctrine of Youth Culture…[which] held that the young were morally superior to the old. They were more spiritual or intellectual, and thus required exposure to the full panorama of artistic and scientific culture. Intellect was the aspect around which the school revolved…

The boys and girls under Wyneken’s influence were encouraged in their idealism. Granted autonomy, the young would find their way to spiritual teachers; the teachers, in turn, relinquished power in a system of ‘student self-administration’, and regarded their pupils as spiritual equals whom they aided in a quest for knowledge and absolute values.

In this setting, Benjamin learnt that youth, the coming humanity, could be educated as ‘knights’ protecting Geist, for whom the profoundest and most important experience is that of art. Benjamin’s core philosophy developed at the school…Wyneken was the first to lead him into the life of the intellect, and he maintained contact with his mentor for a decade.

1910 saw his first published writings. A follower of Wyneken had founded a magazine in 1908 called Der Anfang (The Beginning), with the subtitle ‘a magazine of future art and literature’…Benjamin published poems and prose, reflections on the possibility of a new religiosity and on the questions of youth. His pseudonym was Ardor. In March 1911, with the magazine now subtitled ‘Unified Magazines of the Youth’, Benjamin published ‘Sleeping Beauty’. The essay acknowledged the age as one of Socialism, Women’s Liberation, Communication and Individualism. It asked: might not the age of youth be on the horizon? Youth is the Sleeping Beauty who is unaware of the approaching Prince who comes to wake her. Youth possesses a Hamlet-like consciousness of the negativity of the world. Goethe’s Faust stood as a representative of youth’s ambitions and wishes. Carl Spitteler’s epic poems ‘Prometheus and Epimetheus’ and ‘Olympian Spring’ and his book Imago, ‘the most beautiful book for a young person’, portrayed the ‘dullness and cowardice of the average person’, and in his ‘universal ideal of humanity’ Spitteler presented a model for youth beyond pessimism. Benjamin’s essay picked out the possibilities of youth’s magnificence as depicted in the ‘greatest works of literature’. He and his comrades were to inject those ideal forms with living blood and energy.

Benjamin wrote...about an awakening commitment to Zionism and Zionist activity, which appeared to him for the first time as a possibility, and perhaps even a duty. He did not, however, intend to abandon his political efforts at school reform. Benjamin approved of a journal documenting Jewish spiritual life and dealing with such themes as Jews and luxury, Jews and the love of Germany, and Jews and friendship. He noted...that he and others did indeed possess a ‘two-sidedness’, a Jewish and a German side, and that, up till then, they had oriented themselves to the German side.

He agreed that Jewish forces in Germany would be lost by assimilation, though he noted that the entry of Russian Jews would postpone that. But he drew back from embracing a Zionism that involved the formation of Jewish enclaves or indeed the establishment of a Jewish state. Against a nationalist and political Zionism he advocated a cultural Zionism, a cultural Jewish state and not a territorial state. Such a Jewish state might provide a solution for Eastern Jews who were fleeing persecution, he conceded, but for Western Jews there was another solution. German Jews might reach the necessary ‘Selbstbewusstsein’, ‘self-consciousness’, a salvation of Jewish characteristics in the face of assimilation, through the organization of Jewish intellectual life in Germany.

John Berger - Ways of Seeing (1972)

EPISODE 1

The convention of perspective is unique to European art. Perspective centres everything on the eye of the beholder. With the invention of the camera, everything changed. We could see things that were not there in front of us.

A manifesto written in 1923 by Dziga Vertov, the Russian film director, set against images from a film made in 1928 called The Man With A Movie Camera. The invention of the camera changed not only what we see, but how we see it.

The camera reproduces a painting, making it available in any size, anywhere, for any purpose. It is no longer a unique image that can only be seen in one place. Originally, paintings were an integral part of the building for which they were designed.

The extreme example is the icon. Worshippers converge upon it. Behind its image is God, before it believers close their eyes. They do not need to keep looking at it as they know it marks a place of meaning.

It is the image of the painting which travels now. The meaning of a painting no longer resides in its unique painted surface. Its meaning has become transmittable…it has become information of a sort.

Art has become mysterious again because of its market value…which depends on it being genuine.

The camera, by making a work of art transmittable, has multiplied its possible meanings and destroyed its unique, original meaning. Have works of art gained anything by this? The most important thing about paintings themselves is that their images are silent, still. Occasionally, this uninterrupted silence and the stillness of a painting can be very striking. It’s as if the painting…becomes a corridor, connecting the moment it represents with the moment at which you are looking at it. Because paintings are silent and still, and because their meaning is no longer attached to them but has become transmittable, paintings lend themselves to easy manipulation. They can be used to make arguments or points, which may be very different from their original meaning…the most obvious way of manipulating them is to use movement and sound (zooming in on a detail). The meaning of a painting shown on film or television can be changed even more rapidly. The camera moves in to examine details, yet as soon as this happens, the comprehensive effect of the painting is changed. In a film sequence, the details have to be selected and re-arranged into a narrative, which depends on unfolding time…In paintings, there is no unfolding time. Paintings are also changed by the sounds (music and rhythm) you hear when you are looking at them.

Lastly, paintings can be changed in another way. When paintings are reproduced they become a form of information which is being continually transmitted. The meaning of an image can be changed according to what you see beside it or what comes after it.

What it means, in theory, is that the reproduction of works of art can be used by anybody for their own purposes. Reproduction should make it easier to connect our experience of art directly with other experiences.

What so often inhibits a spontaneous process is the false mystification which surrounds art. For instance, the art book depends upon reproductions yet often what the reproductions make accessible, a text begins to make inaccessible…kept within the narrow preserves of the art expert.

Children look at images and interpret them very directly…because they were really looking and relating what they saw to their own experiences.

“But remember that I am controlling and using for my own purposes the means of reproduction needed for these programs...there is no dialogue yet. You cannot reply to me. For that to become possible in the modern media of communication, access to television must be extended beyond its present narrow limits. Meanwhile, with this programme... you receive images and meanings which are arranged.”

“Many of the ideas in this programme were first outlined in an essay written in 1936 by the German writer Walter Benjamin.”

EPISODE 2

Men dream of women. Women dream of themselves being dreamt of. Men look at women. Women watch themselves being looked at. Women constantly meet glances that act like mirrors, reminding them of how they look or how they should look. Behind every glance is a judgment. Sometimes the glance they meet is their own, reflected back from a real mirror.

A woman is always accompanied, except when quite alone, and perhaps even then, by her own image of herself. While she is walking across a room, or weeping at the death of her father, she cannot avoid envisaging herself walking or weeping. From earliest childhood she is taught and persuaded to survey herself continually. She has to survey everything she is and everything she does, because how she appears to others, and particularly how she appears to men, is of crucial importance, for it is normally thought of as the success of her life.

In one category of painting, women were the principal, ever-recurring subject - that category was the nude...we can discover some of the criteria and conventions by which women were judged.

To be naked is to be oneself. To be nude is to be seen naked by others, and yet not recognised for oneself. A nude has to be seen as an object, in order to be recognised as a nude.

Nakedness is not taken for granted, as in archaic art. Nakedness is a sight for those who are dressed.

Always in the European tradition, nakedness implies an awareness of being seen by the spectator. They are not naked as they are, they are naked as you see them.

The mirror became a symbol of the vanity of women. The male hypocrisy in this is blatant. You paint a naked woman because you enjoy looking at her, you put a mirror in her hand, and you call the painting “Vanity.”

To be naked, is to be without disguise. Being on display, is to have the surface of one’s skin, the hairs on one’s body, turned into a disguise - a disguise that cannot be discarded...Most nudes in oil paintings have been lined up for the pleasure of their male spectator owner, who will assess and judge them as sights...With their clothes off, they are as formal as with their clothes on.

By contrast, in another tradition (Indian), nakedness is seen as a symbol of active, sexual love - the woman as active as the man. The actions of each absorb the other.

In an oil painting, the second person who matters is the stranger looking at the picture...sometimes the painting includes a male lover, but the woman’s attention is very rarely directed towards him.

The convention of not painting hair on a woman’s body...hair is associated with sexual passion...the woman’s passion needs to be minimised, so the spectator may feel that he has the monopoly of such passion...There were paintings that depicted male lovers, but these were mostly private, semi-pornographic pictures.

Women in the European art of painting are seldom shown dancing; they have to be languid, exhibiting minimum energy. They are there to feed an appetite, not to have any of their own.

The nude in European oil painting is usually presented as an ideal subject. It is said to be an expression of European humanist spirit.

Men and women are tremendously narcissistic and cut off from each other. Whereas a woman’s image of herself is derived directly from other people, a man’s image of himself is derived from the world - it’s the world that gives him back his image.

EPISODE 3

We look, we buy. It is ours. Ours to consume, to sell again, perhaps to give away. More often, ours to keep. We look, we buy, and we collect valuable objects. But the most valuable object of all has become the oil painting.

Oil paintings often depict things, things which in reality are buyable. To paint a thing, and put it on canvas, is not unlike buying something and putting it in your house. The objects within a painting often appear as tangible as those outside it.

If you buy a painting, you buy also the look of the thing it represents. Paintings often show treasures. But paintings have become treasures themselves. Art galleries are like palaces, but they are also like banks. When they shut for the night, they are guarded, lest the images of the things which are desirable are stolen.

The value of paintings has become mysterious. Where...does this value come from?

Those who write about art, or talk or teach about it, often raise art above life, turning it into a kind of religion. In the first programme, I tried to show how and why modern methods of reproduction and communication, like colour photography and television, have theoretically changed the meaning of visual art of the past, demystifying it and making it secular. But mostly those who use these new methods of reproduction and communication, those who write books or make TV programmes about art, tend to cling to the old approach. Art remains something sacred. A love of art seems, automatically, to be offered as a sublime human experience...perhaps we should be somewhat wary of a love of art.

You cannot explain anything in history, not even in art history, by a love of art.

The sort of man for whom painters painted their paintings. What are these paintings? Before they are anything else, they are objects which can be bought and owned. Unique objects. A patron cannot be surrounded by music or poems in the same way that he is surrounded by pictures. Does this special ownership of pictures engender a special pride?

From about 1500 to 1900, the visual arts of Europe were dominated by the oil painting, the easel picture. This kind of painting had never been used anywhere else in the world. The tradition of oil painting was made up of hundreds of thousands of unremarkable works, hung all over the walls of galleries and private houses.

If, as we are normally taught to do, we emphasise the genius of the few and concentrate only on the exceptional works, we misunderstand what the tradition was about. The European oil painting, unlike the art of other periods, placed a unique emphasis on the tangibility, the solidity, the texture, the weight, the graspability of what was depicted. What was real was what you could put your hands on.

The idea that a thing is only real if you can pick it up may be connected to the idea of taking a thing to pieces to see how it works. At the beginning of the tradition of oil painting, the emphasis on the real being solid was part of a scientific attitude. But the emphasis...became equally closely connected with a sense of ownership.

There is not a surface in this picture that does not denote wealth (Hans Holbein - The Ambassadors).

Works of art in other cultures and periods celebrated wealth and power...but these works were static, ritualistic, hierarchic, symbolic. They celebrated a social or divine order. The European oil painting served a different kind of wealth. It glorified not a static order of things, but the ability to buy and furnish and to own.

Before the invention of oil painting, medieval European painters often used gold leaf in their pictures. Afterwards, gold disappeared from their paintings and was only used for their frames. But sometimes the paintings themselves were simple demonstrations of what gold and money could buy. A certain kind of oil painting celebrated merchandise in a way that had never happened before in the history of art...it became the principal subject of these works.

Portraits...did not celebrate what was buyable. They were records of the confidence of those to whom ownership brought confidence...generations of portraits maintained to celebrate a continuity of power and worthiness...those they depicted represented an exceedingly small fraction of the population. The poor have neither annals nor portraits; their lives are unrecorded.

Everybody’s clothes indicate social status, but the clothes and feathers and jewellery of these women make exaggerated claims...seas of silk and satin.

There were also paintings whose subjects were taken from classical literature...Classical mythology was part of the specialised knowledge of the privileged minority and these paintings helped them to visualise themselves whilst displaying the classic virtues, making the classic gestures...the settings for charades in which they themselves would play.

Even there, in the development of landscape painting, the faculty of oil painting to celebrate property did play a certain role...the pleasure of seeing themselves as the owners of their own land...enhanced by the ability of oil painting to render this land in all its substantiality.

The painted landscape stands in for the real one.

(But this did not apply to all painters)...we should not confuse such exceptional works with the purpose of the general tradition.

Intermittently, the tradition can breed within itself a counter-tradition, but the basic values of the tradition win in the end. The painting itself has a fabulous price on its head. It has itself become a fabulous object of property.

...what I have tried to show is a fundamental part of the truth which is usually ignored.

Oil painting was...before everything else...a medium which celebrated private possessions. The tradition of oil painting has now been broken once and for all. In certain ways, publicity has now taken its place. The sight of it makes us want to possess it.

EPISODE 4

We are surrounded by images of an alternative way of life. We may remember, or forget these images, but briefly we take them in. And for a moment, they stimulate our imagination, either by way of memory or anticipation. But where is this other way of life? It’s a language of words and images which calls out to us wherever we go, whatever we read, wherever we are...we take them away in our minds. We see them in our dreams.

Publicity proposes to each of us in a consumer society that we change ourselves or our lives by buying something more. This “more”...will make us in some way richer, even though we will be poorer by having spent our money...[it] persuades us of this...by showing us people who have apparently been transformed and are, as a result, enviable. This state of being envied is what constitutes glamour and publicity is the process of manufacturing glamour. Glamour works through the eye and the mirror.

Glamour is a new idea. For those who...owned the classical oil paintings, the idea of glamour did not exist. Ideas of grace, elegance, authority amounted to something apparently similar but fundamentally very different. When everybody’s place in society is more or less determined by birth, personal envy is a less familiar emotion. Without social envy, glamour cannot exist.

Envy becomes a common emotion in a society which has moved towards democracy and then stopped halfway, where status is theoretically open to everyone but enjoyed by only a few. Glamour is new, society has changed.

But the oil painting and the publicity image have much in common, and we only fail to see this because we think of one as fine art and the other as commerce. Sometimes publicity impersonates a painting...sometimes, works of art are used to give prestige to a publicity scene.

Usually the references to art are less direct. It is a question of publicity echoing devices once used in oil painting, devices of atmosphere, settings, pleasures, objects, poses, symbols of prestige, gestures, signs of love.

Telematic Embrace: Visionary Theories of Art, Technology and Consciousness by Roy Ascott

University of California Press, Berkeley and Los Angeles, California, 2003

---

From Cybernetics to Telematics: The Art, Pedagogy, and Theory of Roy Ascott, by Edward A. Shanken

- Frank Popper, the foremost European historian of art and technology, recognises Roy Ascott as “the outstanding artist in the field of telematics”.
- Telematics integrates computers and telecommunications, enabling such familiar applications as electronic mail (e-mail) and automatic teller machines (ATMs).
- Ascott began developing an expanded theory of telematics decades ago and has applied it to all aspects of his artwork, writing and teaching. He defined telematics as “computer-mediated communications networking between geographically dispersed individuals and institutions...and between the human mind and artificial systems of intelligence and perception.”
- Telematic art challenges the traditional relationship between active viewing subjects and passive art objects by creating interactive, behavioural contexts for remote aesthetic encounters.
- Synthesising recent advances in science and technology with experimental art and ancient systems of knowledge, Ascott’s visionary theory and practice aspire to enhance human consciousness and to unite minds around the world in a global telematic embrace that is greater than the sum of its parts.
- By the term “visionary”, I mean to suggest a systematic method for envisioning the future. Ascott has described his own work as “visionary”, and the word itself emphasises that his theories emerge from, and focus on, the visual discourses of art.
- While the artist draws on mystical traditions, his work is more closely allied to the technological utopianism of Filippo Marinetti than to the ecstatic religiosity of William Blake. At the same time, the humanism, spirituality, and systematic methods that characterise his practice, teaching, and theorisation of art share affinities with those of the Bauhaus master Wassily Kandinsky.
- In the tradition of futurologists like Marshall McLuhan and Buckminster Fuller, Ascott’s prescience results from applying associative reasoning to the serendipitous conjunction, or network, of insights gained from a widely interdisciplinary professional practice.
- Ascott’s synthetic method for envisioning the future is exemplified both by his independent development of interactive art and by the parallel he subsequently drew between the aesthetic principle of interactivity and the scientific theory of cybernetics.
- His interactive Change Paintings, begun in 1959, joined together divergent discourses in the visual arts, along with philosophical and biological theories of duration and morphology. They featured a variable structure that enabled the composition to be rearranged interactively by viewers, who thereby became an integral part of the work.

- In 1961, Ascott began studying the science of cybernetics and recognised its congruence with his concepts of interactive art. The artist’s first publication, “The Construction of Change”, integrated these aesthetic and scientific concerns and proposed radical theories of art and education based on cybernetics. For Ascott and his students, individual artworks and the classroom alike came to be seen as creative systems whose behaviour could be altered and regulated by the interactive exchange of information via feedback loops.
- By the mid-1960s, Ascott had begun to consider the cultural implications of telecommunications. In “Behaviourist Art and the Cybernetic Vision”, he discussed the possibilities of artistic collaborations between participants in remote locations, interacting via electronic networks. At the same time that the initial formal concerns of conceptual art were being formulated under the rhetoric of “dematerialization”, Ascott was considering how the ethereal medium of electronic telecommunications could facilitate interactive and interdisciplinary exchanges.
- The sort of electronic exchanges that Ascott had envisioned in “Behaviourist Art and the Cybernetic Vision” were demonstrated in 1968 by the computer scientist Doug Engelbart’s NLS (“oN-Line System”). This computer network, based at the Stanford Research Institute (now SRI International), included “the remote participation of multiple people at various sites.”
- In 1969, ARPANET (precursor to the Internet) went into operation, sponsored by the U.S. government, but it remained the exclusive province of the defense and scientific communities for a decade.
- Ascott first went online in 1978, an encounter that turned his attention to organising his first international artists’ computer-conferencing project, “Terminal Art” (1980).
- Ascott’s early experiences of telematics resulted in the theories elaborated in his essays “Network as Artwork: The Future of Visual Arts Education” and “Art and Telematics: Towards a Network Consciousness”. In the latter essay, drawing on diverse sources, he discussed how his telematic project “La Plissure du Texte” (1983) exemplified Roland Barthes’ theories of nonlinear narrative and intertextuality. Moreover, noting parallels between neural networks in the brain and telematic computer networks, Ascott proposed that global telematic exchange could expand human consciousness.
- He tempered this utopian vision by citing Michel Foucault’s L’ordre du discours (1971), which discusses the inextricability of texts and meaning from the institutional powers that they reflect and to which they must capitulate. Consequently, the artist warned that in “the interwoven and shared text of telematics...meaning is negotiated - but it too can be the object of desire...We can expect a growing...interest in telematics on the part of controlling institutions”.
- Science and technology, for Ascott, can contribute to expanding global consciousness, but only with the help of alternative systems of knowledge, such as the I Ching (the sixth-century B.C. Taoist Book of Changes), parapsychology, Hopi and Gnostic cosmologies, and other modes of holistic thought that the artist has recognised as complementary to Western epistemological models.
- In 1982, Ascott’s telematic art project “Ten Wings” produced the first planetary throwing of the I Ching using computer conferencing. More recently, Ascott’s contact with Kuikuru pagés (shamans) and initiation into the Santo Daime community in Brazil resulted in his essay “Weaving the Shamanic Web”. Here the artist’s concept of “technoetics” again acknowledges the complementarity of technological and ritualistic methods for expanding consciousness and creating meaning.
- Ascott’s theories propose personal and social growth through technically mediated, collaborative interaction. They can be interpreted as aesthetic models for reordering cultural values and recreating the world.

- Throughout the late 20th century, corporations increasingly strategised how to use technology to expand markets and improve earnings, while academic theories of postmodernity became increasingly anti-utopian, multicultural, and cynical. During this time, Ascott remained committed to theorising how telematic technology could bring about a condition of psychical convergence throughout the world. He has cited the French philosopher Charles Fourier’s principle of “passionate attraction” as an important model for his theory of love in the telematic embrace. Passionate attraction constitutes a field that, like gravity, draws human beings together and bonds them. Ascott envisioned that telematic love would extend beyond the attraction of physical bodies. As an example of this dynamic force in telematic systems, in 1984 he described the feeling of “connection and...close community, almost intimacy...quite unlike...face-to-face meetings” that people have reported experiencing online.
- Telematics, the artist believed, would expand perception and awareness by merging human and technological forms of intelligence and consciousness through networked communications. He theorised that this global telematic embrace would constitute an “infrastructure for spiritual interchange that could lead to the harmonisation and creative development of the whole planet”.
- Joining his long-standing concerns with cybernetics, telematics, and art education, he founded the Centre for Advanced Inquiry in the Interactive Arts (CAiiA). In 1995, CAiiA became the first online Ph.D. program with an emphasis on interactive art.

CYBERNETICS

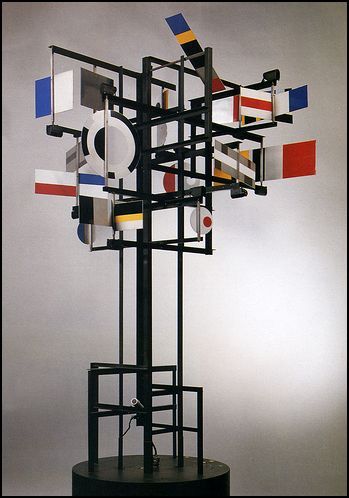

The Hungarian-born artist Nicolas Schöffer created his first cybernetic sculptures, CYSP 0 and CYSP 1 (the titles of which combine the first two letters of the words “cybernetic” and “spatio-dynamique”), in 1956.

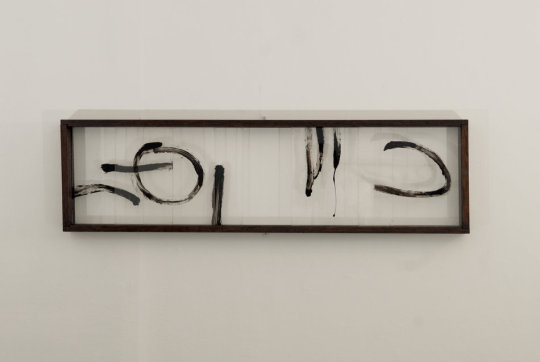

CYSP 0

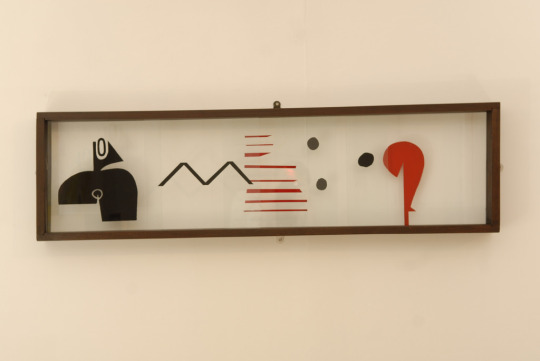

CYSP 1

In 1958, the scientist Abraham Moles published Théorie de l’information et perception esthétique, which outlined “the aesthetic conditions for channeling media”. Subsequently, “Cybernetic Serendipity”, an exhibition curated by Jasia Reichardt and shown in London (1968), Washington D.C. (1969), and San Francisco (1969-70), popularized the idea of joining cybernetics with art.

Art historian David Mellor writes of the cultural attitudes and ideas that cybernetics embodied at that time in Britain. “The wired, electronic outlines of a cybernetic society became apparent to the visual imagination - an immediate future...drastically modernised by the impact of computer science. It was a technologically utopian structure of feeling, positivistic and ‘scientistic’”.

Evidence of the sentiments described by Mellor could be observed in British painting of the 1960s, especially among a group of artists associated with Roy Ascott and the Ealing College of Art, such as his colleagues Bernard Cohen and R.B. Kitaj and his student Steve Willats, who founded the journal Control in 1966. Eduardo Paolozzi’s collage techniques of the early 1950s likewise “embodied the spirit of various total systems,” which may have been “partially stimulated by the cross-disciplinary investigations connected with the new field of cybernetics”. Cybernetics offered these and other artists a scientific model for constructing a system of visual signs and relationships, which they attempted to achieve by using diagrammatic and interactive elements to create works that functioned as information systems.

THE ORIGIN AND MEANING OF CYBERNETICS

The scientific discipline of cybernetics emerged out of attempts to regulate the flow of information in feedback loops in order to predict, control, and automate the behaviour of mechanical and biological systems. Between 1942 and 1954, the Macy Conferences provided an interdisciplinary forum in which various theories of the nascent field were discussed. The result was the integration of information theory, computer models of binary information processing, and neurophysiology in order to synthesize a totalizing theory of “control and communication in the animal and the machine”.

Cybernetics offered an explanation of phenomena in terms of the exchange of information in systems. It was derived, in part, from information theory, pioneered by the mathematician Claude Shannon. By reducing information to quantifiable probabilities, Shannon developed a method to predict the accuracy with which source information could be encoded, transmitted, received and decoded. Information theory provided a model for explaining how messages flowed through feedback loops in cybernetic systems. Moreover, by treating information as a generic substance, like the zeros and ones of computer code, it enabled cybernetics to theorise parallels between the exchange of signals in electro-mechanical systems and in neural networks of humans and other animals. Cybernetics thus held great promise for creating intelligent machines, as well as for helping to unlock the mysteries of the brain and consciousness. W. Ross Ashby’s Design for a Brain (1952) and F.H. George’s The Brain as Computer (1961) are important works in this regard and suggest the early alliance between cybernetics, information theory, and the field that would come to be known as artificial intelligence.
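To make concrete what it means to reduce information to quantifiable probabilities, here is a minimal Python sketch of Shannon's entropy measure, H = Σ p·log₂(1/p), in bits per symbol. The function name `shannon_entropy` is illustrative, and estimating the probabilities from the letter counts of a single message is a simplifying assumption: Shannon's theory concerns the statistics of a whole source, not of one string.

```python
import math
from collections import Counter


def shannon_entropy(message: str) -> float:
    """Average information per symbol, in bits, using symbol frequencies as probabilities."""
    if not message:
        return 0.0
    total = len(message)
    # Each symbol contributes p * log2(1/p): rarer symbols are more "surprising".
    return sum((n / total) * math.log2(total / n) for n in Counter(message).values())


print(shannon_entropy("0101"))      # two equally likely symbols
print(shannon_entropy("00000000"))  # a single, fully predictable symbol
```

A message made of two equally frequent symbols carries one bit per symbol, while a message whose next symbol is always certain carries no information at all.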

Information in a cybernetic system is dynamically transferred and fed back among its constituent elements, each informing the others of its status, thus enabling the whole to regulate itself in order to maintain a state of operational equilibrium, or homeostasis. Cybernetics could be applied not only to industrial systems, but to social, cultural, environmental, and biological systems as well.
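The self-regulating loop described above can be sketched as a thermostat-style negative-feedback system. This is a deliberately simplified illustration, not a model taken from Wiener or Ashby: the hypothetical `regulate` function assumes a single numeric state, a fixed setpoint, and a correction proportional to the measured error.

```python
from typing import List


def regulate(state: float, setpoint: float, gain: float, steps: int) -> List[float]:
    """Simulate a negative-feedback loop returning a disturbed system to equilibrium.

    Each step, the system's current state is measured and compared with the
    setpoint; a correction proportional to that error is fed back to the system.
    """
    history = [state]
    for _ in range(steps):
        error = setpoint - state   # feedback: how far the system is from its goal
        state += gain * error      # corrective action opposes the deviation
        history.append(state)
    return history


# A system knocked from 20 up to 30 settles back toward 20 (homeostasis).
trajectory = regulate(state=30.0, setpoint=20.0, gain=0.5, steps=10)
print([round(x, 2) for x in trajectory])
```

With a gain between 0 and 1 the deviation shrinks by a constant fraction each step; a gain above 2 would make each correction overshoot with growing amplitude, a reminder that feedback produces homeostasis only when it is suitably tuned.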

Much research leading to cybernetics, information theory, and computer decision-making was either explicitly or implicitly directed towards (or applicable to) military applications. During World War II, Norbert Wiener collaborated with Julian Bigelow on developing an anti-aircraft weapon that could predict the behaviour of enemy aircraft based on their prior behaviour. After the war, Wiener took an anti-militaristic stance and refused to work on defence projects.

Cybernetic research and development during the Cold War contributed to the ongoing buildup of the U.S. military-industrial complex. Indeed, the high-tech orchestration of information processing and computer-generated, telecommunicated strategies employed by the U.S. military suggests nothing short of a cybernetic war machine.

To summarize, cybernetics brings together several related propositions: (1) phenomena are fundamentally contingent; (2) the behaviour of a system can, nonetheless, be determined probabilistically; (3) animals and machines function in quite similar ways with regard to the transfer of information, so a unified theory of this process can be articulated; and (4) the behavior of humans and machines can be automated and controlled by regulating the transfer of information.

There is, in cybernetics, a fundamental shift away from the attempt to analyse the behaviour of either individual machines or individual humans as independent phenomena. What becomes the focus of inquiry is the dynamic process by which the transfer of information among machines and/or humans alters behaviour at the systems level.

CYBERNETICS AND AESTHETICS: COMPLEMENTARY DISCOURSES

The application of cybernetics to artistic concerns depended on the desire and ability of artists to draw conceptual correspondences that joined the scientific discipline with contemporary aesthetic discourses.

The merging of cybernetics and art must be understood in the context of ongoing aesthetic experiments with duration, movement and process. Although the roots of this tendency go back further, the French impressionist painters systematically explored the durational and perceptual limits of art in novel ways that undermined the physical integrity of matter and emphasised the fleetingness of ocular sensation. The cubists, reinforced by Henri Bergson’s theory of durée, developed a formal language that dissolved perspectival conventions and utilised found objects. (Durée is the consciousness linking past, present, and future, which dissolves the diachronic appearance of categorical time and provides a unified experience of the synchronic relatedness of continuous change.) Such disruptions of perceptual expectations and discontinuities in spatial relations, combined with juxtapositions of representations of things seen and things in themselves, all contributed to suggesting metaphorical wrinkles in time and space.

The spatio-temporal dimensions of consciousness were likewise fundamental to Italian futurist painting and sculpture, notably that of Giacomo Balla and Umberto Boccioni, who were also inspired by Bergson. Like that of the cubists, their work remained static and only implied movement. Some notable early 20th-century sculpture experimented with putting visual form into actual motion, such as Marcel Duchamp’s Bicycle Wheel (1913) and Precision Optics (1920), Naum Gabo’s Kinetic Construction (1920) and László Moholy-Nagy’s Light-Space Modulator (1923-30). Gabo’s work in particular, which produced a virtual volume only when activated, made motion an intrinsic quality of the art object, further emphasising temporality. In Moholy-Nagy’s kinetic work, light bounced off the gyrating object and reflected onto the floor and walls, not only pushing the temporal dimensions of sculpture but also expanding its spatial dimensions into the external environment.