Web developer & Code for Canada fellow working out of the Public Health Agency of Canada

7th Month Update: All The Things!

January was an odd month. Here is what happened:

The team attended a fellowship retreat (i.e., Code for Canada recalled all of cohort three back to the mother ship in Toronto)

I presented a two-hour workshop to Civic Hall Toronto, the themes of which were “Technical considerations for digitizing processes”, “What are APIs?”, and “An introduction to open source software development”

I poured ~20 hours into building a user interface for a conference tracker app, which was informed by 3 interviews with 6 users, and will be iterated on

We are now in the thick of things with SurveyMonkey Apply, as we started using the 90-day implementation service and are building in earnest with it

One: Story time

The main goal of the retreat was to ensure our stories are great going into the March 10th-11th Code for Canada Showcase. The showcase is intended to do as it sounds: showcase the work done by each of the four teams over the previous 9 months. As prior entries of this blog attest, we have a few stories baked into our team’s experience. We can talk about our user experience (UX) research journey. We can talk about a lack of clarity and digital capacity within our division, and how it cripples the goal of turning the current grants and contributions program (i.e., MSP) into an intelligible web app. We can talk about running workshops and building digital capacity and literacy within our government division. We can talk about the options analysis experience and our push to a software as a service (SaaS), public cloud solution (i.e., SurveyMonkey’s Apply). We can talk about how disjointed and opaque certain processes are within the government. We can talk about overcoming numerous blockers in our push to get Apply.

After some back and forth, the team decided to tell a story through the lens of a fictional character - a woman named Sara who applies to the current manifestation of the MSP program. The focus is human-centered design. In other words, the focus is on how a rigorous understanding of users is mandatory for a soundly built technological solution. The secondary theme is how digitizing a process introduces constraints to that process, and how those constraints can be virtuous. The following two slides show how working with a database to generate usable data forces us to reimagine how we’re asking questions:

Two: Presenting at Civic Hall Toronto

I was asked by Code for Canada to stay in Toronto after the retreat to host a learning session for members of Civic Hall Toronto. With only 5 participants in total, it was an intimate affair. Nonetheless, we got through all the material. I got some excellent feedback, and it seemed like the participants really took a thing or two away from the session. Here is a link to that slide deck.

Three: The Conference Tracker

I spent a lot of time on trains and buses, as well as at the office, adding to the conference tracker. Essentially, management wants me to build a solution to conference canvassing. Conference canvassing is a long process where conference information is disseminated to and through managers, and ends up with employees. From there, employees must flag an interest, and the manager and the employee must work out the logistics (e.g., times and expenses). Make that process happen multiple times a month with a handful of employees, and it can really become a hassle for managers. I learned the full depth of the problem through three 1-hour interviews, each with two employees who have a different relationship to the current process of conference canvassing. From there I formulated my MVP (minimum viable product): a directory that simply displays conference data (past and upcoming), and acts as a repository for conference reports generated by attendees. The app is currently up on Netlify, where the master version is rebuilt and hosted whenever I push a change to master.
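To make the MVP a little more concrete, here is a minimal sketch of the "past and upcoming" split such a directory needs. It is not the tracker's actual code: the record shape (`name`, `date`, `reports`), the sample entries, and the `partitionConferences` helper are all assumptions made for illustration.

```javascript
// Hypothetical conference records; names, dates, and fields are made up.
const conferences = [
  { name: "Conference A", date: "2019-11-05", reports: ["conference-a-report.pdf"] },
  { name: "Conference B", date: "2019-12-02", reports: [] },
  { name: "Conference C", date: "2020-03-10", reports: [] },
];

// Split the directory into past and upcoming events relative to `today`.
// ISO 8601 date strings (YYYY-MM-DD) compare correctly as plain strings.
function partitionConferences(list, today) {
  const byDate = (a, b) => a.date.localeCompare(b.date);
  // filter() returns a new array, so sorting does not mutate the input.
  const past = list.filter((c) => c.date < today).sort(byDate).reverse();
  const upcoming = list.filter((c) => c.date >= today).sort(byDate);
  return { past, upcoming };
}

const directory = partitionConferences(conferences, "2020-01-31");
console.log("Upcoming:", directory.upcoming.map((c) => c.name));
console.log("Past (most recent first):", directory.past.map((c) => c.name));
```

Keeping the dates as ISO strings means the same records could be stored as plain JSON and rendered by any front-end without a date library.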

Four: Building with SurveyMonkey Apply

We are concurrently building with a specialist from SurveyMonkey. I still have a lot to learn, and will do so as the need arises. I have helped with piping data, using hidden fields, formulating equations, and performing minor style changes. There will be a lot more work to be done, but I won’t know the exact nature of it until the time comes.

6th Month Update: ‘Tis The Season For Procurement

This story begins with one word: “impossible”. Yes, at the beginning of December we “learned” about yet another reason why what we are trying to do would not fly. (“But will it blend?”, I hear you ask.) We didn’t hang our heads in defeat. For the umpteenth time we braced ourselves, dug deep, had meetings, read policy, and committed ourselves to finding a way. Credit must be given to Glennys Egan, our product manager, for coordinating the meetings, informing all relevant actors, and getting clarity on the precise reason why we were being told “no”.

This latest hurdle was legal. Apparently someone believed that The Crown (i.e., the federal government) requires the ability to sue whatever company it contracts with for an unspecified amount, were things to go awry. The belief is that the above is a standard indemnity clause for the government. To be clear, the belief is that the company for which services are being contracted must open itself up to massive risk in the form of being sued to the ground. Interestingly, the policy I read on the matter suggested multiple avenues through which the above doesn’t have to be the case. There can be many exceptions, and certain actors have the ability to “go silent” on the indemnity clause. Here is another salient point: the nature of our project is such that the data that might be breached is not imperative to the safety of the nation, nor does it contain personal, sensitive data like citizens’ health records. In other words, the risks legal was trying to defend against were (and are) relatively minuscule.

Just before Christmas break, a miracle occurred. SurveyMonkey decided to go silent on their clause not to be indefinitely sued, and we were able to sign a contract with them and purchase their software. It cannot be stated strongly enough that such an event might not have happened for several more months. We have been playing whack-a-mole when it comes to getting this deal done. At no point did we know how long the game would last. Before procuring the product, there could have been 10 more moles to whack for all we knew. This was definitely a time to celebrate if there ever was one. Now, we stride into 2020 with the promise of good things to come.

Here is a summary of the hurdles this team has overcome since July 2019:

The options analysis for potential software solutions being performed in a very narrow, opaque fashion

Data localization/sovereignty concerns

Data sensitivity and data classification concerns

Cloud computing and software as a service (SaaS) security concerns

Other external departments owning parts of the process we are trying to digitize

General, yet prolific, confusion about recent cloud computing policy from the TBS (Treasury Board of Canada Secretariat) and SSC (Shared Services Canada)

Procurement limitations and processes (e.g., any purchase over 10k must go through a request for proposals, which in turn could take months)

Legal concerns, like the indemnity clause above

(The above is not a comprehensive list, for that would be too easy!)

5th Month Update: Two Conferences & One Report

FWD50

All the Code for Canada fellows were invited to FWD50, a three-day government innovation conference. I decided to spend the majority of my time in one-on-one conversations. Though initially I had no goal with these conversations, one of the first turned into a discussion on innovation and why it’s so tough in government. I decided I would dedicate the rest of my time at the conference to discussing this topic in one-on-one conversations with whoever would agree to talk with me. I took notes and told participants I would email them my report if they so desired. It was a nice way to make a connection and to keep the connection alive.

You can find the report here, and the organized raw data here.

VueConf Toronto 2019

I also attended VueConf Toronto 2019, which is an annual conference for the popular JavaScript library Vue.js. The talks were really for developers, which was great. I got some great insight into unit testing and using GraphQL, both of which are crucial for my future work in web development. What is more, I got to meet the team behind the Vue component library Vuetify. It was great to talk with them and put names to faces, especially after having communicated with them via Discord (a chat service) for almost a year.

Tool

Finally, I would like to add that I was able to attend a Tool concert on my last night in Toronto. Though not a huge Tool fan, I had a feeling it would be quite an experience, and the band did not let me down. Their massive songs and artistic, vague storytelling are reminiscent of what I have seen of Pink Floyd. In other words, it was great to get a piece of that before it's gone forever.

4th Month Tech Update: Slow & Steady

UX research was the primary focus

This month was extremely UX research heavy. So much so, that the team had to divvy up the work and tackle it over the span of weeks. A great deal of the month was spent on the following tasks:

Producing transcriptions of audio interviews with applicants to, and staff of, the MSP (multi-sectoral partnerships) program - thankfully all done via software

Manually reviewing the transcriptions and encoding key ideas into comments on the document, based on a pre-established legend of relevant themes

Writing out those comments on sticky notes

Organizing, reorganizing, and further reorganizing those sticky notes into sensible clusters that lucidly tell the story of staff and applicants regarding the MSP program

With the final research report yet to be written, our UX research journey continues into our fifth month. We anticipate the report coming out soon. It will be a massive milestone.

Moving forward, slowly

The path has been laid out. The lines are drawn. Out of a chasm of ambiguity a shape has formed. It looks like on November 14th we will be starting our 30-day trial license of a grant management software called Apply. This has been a long time coming. From a germinating possibility among many options, we now see a shoot forming where Apply was planted. To be sure, there are still plenty of potential barriers threatening to corrupt, uproot, or otherwise soil the seedling. We have, for our part, done our due diligence to plant it in the right soil, and to provide it with all the nutrients required. (Who knew botany would be part of our work?) As a team we have put forth a document explaining all the reasons why a public cloud, software as a service product like Apply is by far the ideal way to go. Here is a taster of reasons supporting our proposal for Apply: low cost, sustainability, high security, fully end-to-end, easily customized, feature-rich, and satisfying policy ideals.

Presenting at Adobe

After running a Vue.js workshop at Shopify Canada with the Meetup.com group “Ottawa Codes” in September, I followed with an October presentation at Adobe Canada via the Meetup.com group “Javascript Ottawa”. It was the first time I gave the presentation, and it was to a group of ~50 attendees. The slide deck was sparse, as intended. With only 20 minutes to present, I could not run the workshop, and instead opted to give a presentation on the making of the workshop - the what and the why. It went well. There were some awkward pauses and some laughs, most of which were intended.

The part of my presentation that resonated most with audience members was my reiteration of a story told by the behavioural economist Dan Ariely in one of his fantastic books. (I highly recommend them all.) He tells the following story (seen here from Psychology Today, and here from the BBC), which unfortunately might misrepresent the true causal effect involved. General Mills released Betty Crocker instant cake batter in the 1950s. Bakers were to just add water, place it in the oven, and voila - the cake was ready! Sales were horrible, however, so the company hired psychologist Ernest Dichter to uncover the cause. Dichter’s research led him to the main culprit: adding water was too easy. Bakers felt a certain moral guilt for not having actually baked the cake from scratch. In other words, you could bake this cake, but exclaiming “I baked this cake!” didn’t sit well with bakers. To resolve the issue - the issue of it being too easy - Dichter suggested making the addition of eggs mandatory in the baking process. Consequently, the new recipe required adding water and eggs. The addition of the extra step - i.e., the eggs - sufficed to remove the moral guilt stunting prior sales, and sales took off (or so the story goes).

Space Apps Ottawa 2019

From October 18th-20th, I participated in Space Apps Ottawa. Space Apps is a global NASA hackathon, with chapters in major cities all over the globe. There were over 200 similar events occurring simultaneously all over the world. Representatives from the Canadian Space Agency (CSA) were in attendance, and I chose to tackle one of their challenges as opposed to a NASA one. After networking on the Friday night, I came to understand the archival struggles of RADARSAT-1. RADARSAT-1 is a Canadian satellite that was in service from 1995 to 2013. It captured radar imagery in a variety (over 20) of beam modes. Images from this satellite have been used for a myriad of purposes: tracking and plotting shipping routes, surveying the military capabilities of other nation states, tracking ice coverage and ice melting, tracking urban development with a historical lens, and so forth. The Canadian government can even use it to verify whether or not farmers are planting the crops they say they are planting, and for which they are receiving certain subsidies.

Of the data captured, 50%, or roughly 800k images, currently resides in Canada. Of that 50%, only 2% of the data has been processed into images. The other 98% remains in its raw format. Processing the images is highly expensive, and many of the images may not be useful considering that many of them are only historically relevant (e.g., tracking melting ice in 1998). Still, the CSA believes there is a great wealth of useful data there, and with the primacy of big data and artificial intelligence within the private sector, the CSA is convinced that much of the data can be economically useful. There is a problem: they aren’t sure which of the 98% of the images are useful. Over the weekend, my team and I built a solution for the CSA. At the moment the public cannot easily express their interest in what data should be converted into images, because the current solution is lacking. It doesn’t let users easily see what data is available and where, and it quickly leads to frustration. Our solution is to plot all the metadata (the data about the raw images) on a map, so that users can easily see what data is available and where. Through the use of filters, users can quickly and easily narrow down the data to what is relevant to them. What is more, there is an analytics service that can automatically track users’ interactions with the map, such that the CSA can passively discover what is of interest to users. The solution built by the team was phenomenal. There is a prototype of the web app here. (Only the beam mode filters are working at the moment.) Although the hackathon was one of the most modest ones I have been to in terms of venue, I got the chance to work on a fascinating project with very smart and humble teammates. It was definitely a win. Also, we won our challenge space and will be participating in national judging soon. With any luck, work on this project will continue, and I will have updates. You can watch a two-minute video of the hackathon here.
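The filter-plus-analytics idea can be sketched in a few lines. This is only an illustration under stated assumptions, not the hackathon prototype: the metadata fields (`id`, `beamMode`, `lat`, `lon`, `year`), the sample records, and the helper names `filterByBeamMode` and `recordInterest` are all hypothetical.

```javascript
// Hypothetical metadata records describing raw (unprocessed) scenes.
const scenes = [
  { id: "scene-1", beamMode: "Fine", lat: 45.4, lon: -75.7, year: 1998 },
  { id: "scene-2", beamMode: "Standard", lat: 60.1, lon: -95.0, year: 2003 },
  { id: "scene-3", beamMode: "Fine", lat: 70.2, lon: -110.3, year: 2010 },
];

// Running tally of which filters people apply. A real deployment would
// send these events to an analytics service instead of keeping them in memory.
const filterCounts = new Map();

function recordInterest(beamMode) {
  filterCounts.set(beamMode, (filterCounts.get(beamMode) || 0) + 1);
}

// Return only the scenes captured in the requested beam mode, and note
// the request so popular beam modes can be discovered passively.
function filterByBeamMode(list, beamMode) {
  recordInterest(beamMode);
  return list.filter((scene) => scene.beamMode === beamMode);
}

const fineScenes = filterByBeamMode(scenes, "Fine");
console.log(fineScenes.map((scene) => scene.id));
console.log(Object.fromEntries(filterCounts));
```

The filtered records still carry their coordinates, so a map layer can plot them directly, while the tally hints at which raw data might be worth processing first.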

Milestones reached

Moved forward on UX research

Acquired trial license of Apply

Gave a talk at Ottawa Javascript

Built something at Space Apps Ottawa

3rd Month Tech Update: The Further You Go

“One must still have chaos in oneself to be able to give birth to a dancing star.”

Being proactive

The Code for Canada team built upon the energy and concerns driving them from the second month. After feelings of being left in the dark about certain processes, and after having core members at the Public Health Agency of Canada express their desire for us to be more proactive in the options analysis, we became more proactive. I posed a question to the team: “What is the most important thing we can focus on here during our tenure as fellows?” The answer was obvious to all of us: “Help this department choose the right solution upon which everything else will be built.” Consequently, I set myself the goal of surveying the land of grant management software, if only to see what is possible. Lo and behold, the private sector’s solutions to grant management are well-defined, robust, and impressive. I narrowed down the field to three solutions: Apply by SurveyMonkey, Fluxx.io, and Salesforce. I then got in touch with each vendor, explained our needs, and viewed multiple demonstrations from all three.

Meeting the monkey

After multiple conversations, both by email and through VoIP (voice over IP), I scheduled an in-person meeting/demo with Apply. (They just so happen to be based out of Ottawa.) Core team members of our program went to the meeting, as did our lead on the options analysis process. Questions were answered and participants left feeling enthused about the potential therein. I asked some good questions thanks to a lead here at the MSP (multi-sectoral partnership) program. She is very familiar with the ins-and-outs of the program in its current manifestation. Prior to the meeting, I showed her a recorded demo of Apply, received her feedback, and then conveyed that feedback at the meeting. This was especially useful because our UX designer Rosemarie, who is most familiar with how the product is currently being used, could not attend the meeting.

Value added

The team pushed hard to ensure that the OA process included the due-diligence that our team felt was necessary, and that we know the fantastic people at this department deserve. I thank my team members for supporting this research, for participating in the vendor meetings, and for pushing in tandem. Whether or not the research has made a difference is as yet unknown. All we can say is that we did what we felt was right, we added value, and we pushed for what seemed most beneficial for our department. And, after a recent meeting at IMSD (Information Management Services Directorate) with a few key players, it became even more apparent that a vendor solution is probably the way to go.

Cloud solutions are the way forward

I spent the last week of the month steeped in heavy research regarding the Government of Canada’s official policies on providing cloud solutions, and on security regarding cloud solutions. I thought, “Someone on our team eventually needs to become intimately knowledgeable about what getting a cloud solution entails”, so I preemptively began doing just that. What is more, since the policy is all relatively new (circa 2018), many players in the space are either a) unaware of the policies entirely, or b) know not how to enact them. One thing is clear, however: public cloud deployment of software as a service (SaaS) is the preferred method of digitizing government processes going forward. By this is meant paying an annual fee to get access to vendor-developed software on a server which also hosts other instances of the application for private companies and/or individual citizens. Due to the cost of producing custom in-house solutions, and the expectations Canadians have for receiving intuitive and modern digital solutions, the Government of Canada is slowly moving towards a model of vetting and procuring publicly hosted software from vendors. The ultimate goal is to create a marketplace of cloud solutions that government departments can then select from.

Milestones reached

expanded the scope and depth of the options analysis process

thoroughly compared multiple best-in-class grant management solutions

delved deeply into the Government of Canada’s cloud solution policy, in preparation for potential next steps

attended Health Canada’s Data and Digital Day, to promote Code for Canada and to give participants insight into the solution we are building

coordinated a meeting with Apply, which made it a real contender for the options analysis process

2nd Month Tech Update: Going Down The Rabbit Hole

Building capacity through building a farm

I was able to host a Vue.js workshop for full-time staff and co-op students within the building. It consists of hands-on development material I built at my last job. The workshop aims to provide participants with practical knowledge to get up and running building with Vue.js within 2 hours. Specifically, it consists of an online IDE (integrated development environment) where everyone gets full access to their own instance of the project repository; and a standalone web app built with the very same code found in the repository. In other words, while participants learn concepts from the lessons, they can also peer behind the content to see the actual code generating the examples. What is more, there are challenges throughout where participants must use the previous lessons to continually improve their project: designing a personal farm.

The results of the workshop were promising. Though all participants had web development experience, many were not very familiar with modern reactive front-end libraries. It was their first taste. They were introduced to modern JavaScript ideas such as reusable components, importing and exporting modules, and state. Further, some participants inquired as to how they could incorporate Vue.js into their current work, which I consider a win. It means they understand the value of the technology, and they are starting to see, and contemplate, ways it can be wielded in their own jobs.

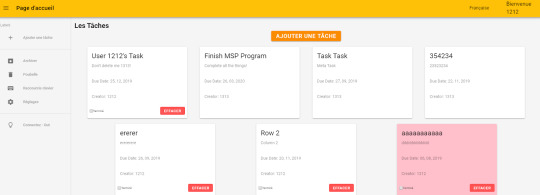

Adding internationalization

At the moment I am building the front-end with Vue.js and Vuetify, a Vue.js UI (user interface) framework. Vuetify is built using Google’s popular Material Design spec, and essentially provides me with a bunch of nifty pre-made components. I didn’t add in internationalization (also known as i18n, where 18 refers to the letters couched within the bookending “i” and “n” in the word “internationalization” - shout out to Wikipedia for that!) from the start of my project. I decided I would add it early on, and I did. However, even this slight delay in implementation cost me over a day of troubleshooting to get everything working as desired. I got a refresher on a lesson I have already learned many a time in IT: “Do fundamental stuff at the start, or else!”
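For readers new to i18n, the core idea is a lookup table of messages per language plus a fallback. The sketch below is a plain JavaScript illustration, not the Vue.js setup used in the project; the `messages` table, its keys, and the `translate` helper are hypothetical.

```javascript
// Hypothetical message catalogue: one table of strings per locale.
const messages = {
  en: { greeting: "Hello, {name}!", submit: "Submit" },
  fr: { greeting: "Bonjour, {name} !", submit: "Soumettre" },
};

// Look up `key` in the requested locale, fall back to `fallbackLocale`,
// and finally to the key itself so missing strings are easy to spot.
function translate(locale, key, params = {}, fallbackLocale = "en") {
  const table = messages[locale] || {};
  const template = table[key] ?? messages[fallbackLocale]?.[key] ?? key;
  // Replace {placeholders} with values from `params`; unknown ones stay as-is.
  return template.replace(/\{(\w+)\}/g, (match, name) =>
    name in params ? String(params[name]) : match
  );
}

console.log(translate("fr", "greeting", { name: "Sara" }));
console.log(translate("de", "submit")); // no German table, so English is used
```

Retrofitting this later means hunting down every hard-coded string in every component and replacing it with a lookup, which is where the lost day tends to go.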

Up in the air

Month two was an interesting one by the end of it. On the technical side, it feels like everything has bled into everything else. What it will congeal into I know not at this juncture. There have been two notable catalysts for this occurrence:

i) As a team, we have gone full-force into conversations, interviews, and discussions with teams & actors both internal and external to our department. Consequently, our expertise in certain domains (e.g., the staff expectations for the future system) has surpassed that of others, for whom this project is one of many on the go. We have spread our net wide.

ii) On the technical side, there is a sequence of events that needs to take place in a somewhat rigid order. For example, in order for us to produce a solid front-end, we need questions about the back-end answered, and before questions about the back-end can be answered a back-end solution must be chosen, and before a back-end solution is chosen there needs to be a formal options analysis (OA). We don’t have all the dominoes in place, yet. This leaves us in a tricky situation. We have a good deal of knowledge of some important things now, but we are also lacking knowledge of other critical things. We want to start building, but we aren’t certain what the back-end and its API will look like. We want to develop a web app, but we don’t know where the app will be hosted (and consequently what restrictions will apply to it). We want to use modern web technologies, but we don’t know what technologies are familiar to the team who will take over once we leave. We want to play with the possible back-end solutions that are being investigated, but we can’t gain access to a sandbox of those technologies.

Entering month three, our main focus - and consequently my main focus - will be on resolving the aforementioned dissonance.

Milestones Achieved

changed my Node.js code to target a cloud MongoDB database hosted by MongoDB Cloud Services, so now my app reads and writes data to a remote database.

added internationalization and localization to the app, so users can change languages (with the option of components like datepickers changing their format, too)

as a team we raised our level of confidence and knowledge to the point where we can contribute to the options analysis process & take a more active stance on the technology side of things

installed Windows 10 Pro on my work machine (for under $3 CAD on ebay.ca via an OEM license), in order to get access to Windows’ Hyper-V, which is required for Docker Desktop on Windows

finished introductory Docker course on Lynda.ca to learn about containerization, and how it can be utilized in my development process going forward

built workplace IT capacity through hosting a Vue.js workshop
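On the localization milestone above: the way a date is displayed can follow the user's language without any custom formatting code. Here is a minimal sketch using the standard `Intl.DateTimeFormat` API. The `formatDate` helper and the sample date are assumptions for illustration, and this is not how the app's datepickers are actually wired up.

```javascript
// Format a date for display in the given locale.
// `timeZone: "UTC"` keeps the result independent of the machine's time zone.
function formatDate(date, locale) {
  return new Intl.DateTimeFormat(locale, {
    dateStyle: "long",
    timeZone: "UTC",
  }).format(date);
}

// Hypothetical sample date: 15 January 2020 (months are zero-based).
const sampleDate = new Date(Date.UTC(2020, 0, 15));

console.log(formatDate(sampleDate, "en-CA"));
console.log(formatDate(sampleDate, "fr-CA"));
```

The same call with a different locale tag changes both the month name and the ordering of the date parts, which is the behaviour a language switcher would rely on.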

1st Month Tech Update: Getting Stacked

The product

At the moment, the product we will be building appears to be a web application wherein users (i.e., applicants to a federal grants and contributions program) can log in, submit various forms, and track the progress of their application. The current digital process for doing so is non-existent, begetting a slew of problems for both program applicants and program staff. (Program staff are the delightful people at the Public Health Agency of Canada who administer the program.) The current process is very manual and time consuming for both applicants and staff. It also leaves applicants in the dark as to the status of their application. The latter results in anxious applicants calling into the program for updates, which in turn further burdens the staff.

Current endeavour

As a front-end developer, I am limited regarding my capacity to work on such an application. This is why I am using my research time to learn how to make full-stack applications. Specifically, I am learning the MEVN (MongoDB, Express.js, Vue.js, & Node.js) stack. This stack is isomorphic, meaning the front-end and back-end use the same language: JavaScript. What is more, this stack features Vue.js, which is both fun to work with and gaining in popularity within the developer community. I will also need to learn about seamlessly deploying and updating an application on the cloud, which will likely happen on Microsoft’s Azure platform.

My reasoning

Irrespective of whether or not we use this stack, it’s important for me to learn how the front-end hooks into the back-end. Since sustainability is a large part of the project - we will hand off the application to another team once our 9-month tenure expires - it’s important for me to develop the front-end with a keen eye towards how it will integrate with a back-end. At the moment I am trying to build a simple back-end, which I am calling “the back-end scaffolding”. I am erecting a front-end around it. At any point in time this scaffolding may be expanded out and scaled, or fully discarded.
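One way to picture a disposable scaffolding is a small store with the four CRUD operations that the front-end talks to, so the store can later be swapped for a real database without touching the UI. The sketch below is an assumption about what such a stand-in could look like, not the project's code: `createInMemoryApplicationStore`, the record fields, and the `"submitted"` status are hypothetical, and the methods are synchronous for brevity where a real back-end client would be asynchronous.

```javascript
// Hypothetical in-memory stand-in for a back-end holding grant applications.
function createInMemoryApplicationStore() {
  const records = new Map();
  let nextId = 1;

  return {
    // Create: store a new application and return it with its generated id.
    create(data) {
      const id = String(nextId++);
      const record = { status: "submitted", ...data, id };
      records.set(id, record);
      return record;
    },
    // Read: return the application, or null when the id is unknown.
    read(id) {
      return records.get(id) ?? null;
    },
    // Update: merge changes into an existing application (the id is fixed).
    update(id, changes) {
      const existing = records.get(id);
      if (!existing) return null;
      const updated = { ...existing, ...changes, id };
      records.set(id, updated);
      return updated;
    },
    // Delete: report whether anything was actually removed.
    remove(id) {
      return records.delete(id);
    },
  };
}

const store = createInMemoryApplicationStore();
const application = store.create({ applicant: "Sara" });
store.update(application.id, { status: "under review" });
console.log(store.read(application.id));
```

Because the front-end only depends on the four method names, discarding the scaffolding later amounts to providing another object with the same shape.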

Milestones achieved so far

i) work with MongoDB for the first time, set it up, and learn its CLI (command line interface)

ii) work with Express.js & Node.js for the first time; learn how Express.js routing and middleware work to handle HTTP requests; and perform CRUD (create, read, update, and delete) operations on the database.

iii) connect various technologies together to create user authorization via JSON Web Tokens

iv) update our UI (user interface) library from Vuetify 1.5.5 to Vuetify 2.0, which will provide us with accessible material design components (e.g., buttons, navigation bars, dropdowns, and many more) right out of the box

v) use Vuex (an add on to Vue.js) to keep track of state across the entire application

vi) use “nodemon” to chain npm scripts together, enabling me to start my development environment in as few steps as possible, restart the development server automatically upon change, and to transfer the environment to other machines
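As background for milestone iii, a JSON Web Token is three base64url-encoded parts (header, payload, signature) joined by dots, where the signature lets the server detect tampering. The following is a minimal sketch of HS256 signing and verification using only Node's built-in `crypto` module. It is meant to show the mechanics, not the code used in this project; the helper names, the secret, and the payload are hypothetical, and a maintained library would normally be the safer choice in a real application.

```javascript
const nodeCrypto = require("node:crypto");

// Encode a string as base64url (the URL-safe alphabet JWTs use).
const toBase64Url = (text) => Buffer.from(text, "utf8").toString("base64url");

// Compute the HMAC-SHA256 signature over "header.payload".
function hmac(data, secret) {
  return nodeCrypto.createHmac("sha256", secret).update(data).digest();
}

// Build a signed token from a payload object.
function signToken(payload, secret) {
  const header = toBase64Url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const body = toBase64Url(JSON.stringify(payload));
  const signature = hmac(`${header}.${body}`, secret).toString("base64url");
  return `${header}.${body}.${signature}`;
}

// Return the payload if the token is well-formed, correctly signed, and
// not expired; otherwise return null.
function verifyToken(token, secret) {
  const parts = String(token).split(".");
  if (parts.length !== 3) return null;
  const [header, body, signature] = parts;
  try {
    const { alg } = JSON.parse(Buffer.from(header, "base64url").toString("utf8"));
    if (alg !== "HS256") return null; // only accept the algorithm we sign with
    const expected = hmac(`${header}.${body}`, secret);
    const received = Buffer.from(signature, "base64url");
    // timingSafeEqual requires equal lengths, so check that first.
    if (received.length !== expected.length) return null;
    if (!nodeCrypto.timingSafeEqual(received, expected)) return null;
    const payload = JSON.parse(Buffer.from(body, "base64url").toString("utf8"));
    // `exp` is expressed in seconds since the Unix epoch.
    if (typeof payload.exp === "number" && payload.exp * 1000 <= Date.now()) return null;
    return payload;
  } catch {
    return null; // malformed JSON in the header or payload
  }
}

// Hypothetical secret and payload, for illustration only.
const demoToken = signToken({ sub: "applicant-1" }, "demo-secret");
console.log(verifyToken(demoToken, "demo-secret"));
console.log(verifyToken(demoToken, "wrong-secret"));
```

In an Express.js app, a check like `verifyToken` would typically sit in middleware that runs before protected routes and rejects requests whose token does not verify.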

Thank you for reading :) - Adam Simonini, Web Developer
