
Photo

Above is a sample of the C# code that I wrote for the Kybalion project. This script handles raycasting from the user’s device camera, which is what allows the user to interact with the AR buttons on the image targets. I have added comments to the sample to explain the purpose of the code in more detail.
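The script itself only appears as an image, so here is a rough, engine-free sketch of the underlying idea. In Unity this is normally done with `Camera.ScreenPointToRay` and `Physics.Raycast`, which reports the closest collider hit. The plain C# below reproduces that logic with a ray/box (slab) test so that it can run outside the engine. The `ArButton` and `RayPicker` types and the button names are hypothetical.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for an AR button with an axis-aligned box collider.
public sealed class ArButton
{
    public string Name { get; }
    public Vector3 Min { get; }
    public Vector3 Max { get; }

    public ArButton(string name, Vector3 min, Vector3 max)
    {
        Name = name;
        Min = min;
        Max = max;
    }
}

public static class RayPicker
{
    // Slab test: distance along the ray to the box, or null if the ray misses it.
    public static float? Intersect(Vector3 origin, Vector3 dir, ArButton b)
    {
        float tMin = 0f, tMax = float.PositiveInfinity;
        for (int axis = 0; axis < 3; axis++)
        {
            float o = Axis(origin, axis), d = Axis(dir, axis);
            float lo = Axis(b.Min, axis), hi = Axis(b.Max, axis);
            if (Math.Abs(d) < 1e-8f)
            {
                // Ray runs parallel to this pair of faces: it must start between them.
                if (o < lo || o > hi) return null;
                continue;
            }
            float t1 = (lo - o) / d, t2 = (hi - o) / d;
            if (t1 > t2) { float tmp = t1; t1 = t2; t2 = tmp; }
            tMin = Math.Max(tMin, t1);
            tMax = Math.Min(tMax, t2);
            if (tMin > tMax) return null;
        }
        return tMin;
    }

    // Name of the closest button hit (mirroring how Physics.Raycast reports the closest hit), or null.
    public static string? Pick(Vector3 origin, Vector3 dir, IEnumerable<ArButton> buttons)
    {
        string? best = null;
        float bestT = float.PositiveInfinity;
        foreach (ArButton b in buttons)
        {
            float? t = Intersect(origin, dir, b);
            if (t.HasValue && t.Value < bestT)
            {
                bestT = t.Value;
                best = b.Name;
            }
        }
        return best;
    }

    static float Axis(Vector3 v, int axis) => axis == 0 ? v.X : axis == 1 ? v.Y : v.Z;
}
```

Picking the nearest hit matters when buttons overlap on screen. Without it, a button behind another could respond to the same tap.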


Text

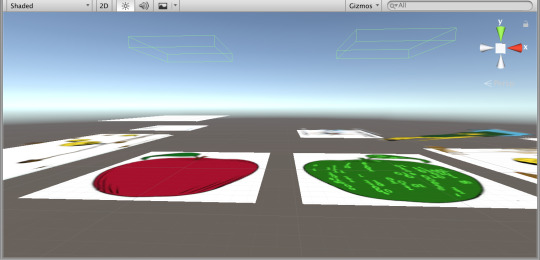

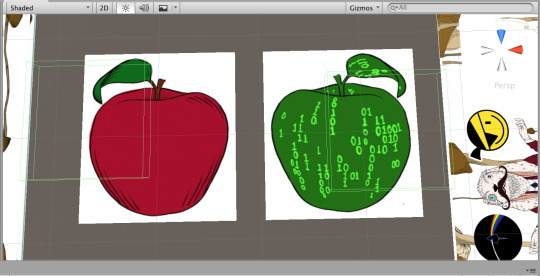

Cyberdelic Cardboard - AR Buttons

This is the layout of the image targets in the scene view. I will be adding interactive AR buttons to the tree of life and tree of knowledge areas so that users can access other scenes. Using similar logic and C# code to the Kybalion project, I created buttons positioned above the image targets in such a way that, from the user’s point of view, each button appears to cover the same physical area as its image, when in reality it is smaller and closer to the user.
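The effect relies on perspective. From a single viewpoint, an object scaled down in proportion to its distance from the camera covers exactly the same area of the screen (similar triangles). Below is a minimal sketch, assuming a pinhole camera with the button and the image both facing it and centred on the view axis. The `ApparentSize` names are illustrative, not from the project.

```csharp
using System;

public static class ApparentSize
{
    // Width a button needs at buttonDistance so that, from the camera, it spans the
    // same angle as an image of imageWidth at imageDistance (similar triangles).
    public static float MatchingWidth(float imageWidth, float imageDistance, float buttonDistance)
    {
        if (imageDistance <= 0f)
            throw new ArgumentOutOfRangeException(nameof(imageDistance));
        if (buttonDistance <= 0f)
            throw new ArgumentOutOfRangeException(nameof(buttonDistance));
        return imageWidth * buttonDistance / imageDistance;
    }

    // Angle in radians subtended by a centred object of the given width and distance.
    public static double SubtendedAngle(double width, double distance) =>
        2.0 * Math.Atan(width / (2.0 * distance));
}
```

For example, a 0.2 m image 0.5 m away is matched by a 0.1 m button 0.25 m away. The match only holds from one viewpoint, so the illusion weakens as the device moves to a steep angle relative to the artwork.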


Text

Revised Gantt Chart

Given that this project was always going to be an evolving process, it is unsurprising that the schedule of tasks and the time allocated to them have changed over the course of the project. Although the number of tasks has decreased, the overall volume of work has remained as originally expected. This is primarily due to the unexpected magnitude of the software and programming issues encountered throughout the project, namely C# scripting errors and incompatibility issues between Unity, Vuforia, and Xcode. These issues also changed a priority set at the start: rather than uploading the apps to both the iOS App Store and Google Play, the apps will only be uploaded to the iOS App Store (for as long as I am working on the Cyber Rabbit projects). This will allow other goals to be reached more quickly before the assignment hand-in date.


Photo

Goal: add an interactive VR feature where users can look at the green gatefold tablets via the viewer and activate a VR experience.

1. Currently, the only feature is that when the reticle moves over a specific section of the gatefold artwork, the camera zooms in.

2. What we need: a VR trigger for each tablet, which transitions the view into stereo VR mode and starts the corresponding animated sequence.

3. Problem: writing a script that reopens the scene in stereo VR view, but only when the user interacts with the tablets.

4. Downloaded the Vuforia Digital Eyewear sample project from the Unity Asset Store to compare its C# scripts with the ones that Cyber Rabbit and I have created for the Kybalion project.

5. I noticed in particular that in the sample project, the "Device Type" under "Digital Eyewear" in the Vuforia Config menu was set to Phone + Viewer, so I made the appropriate change to the Kybalion project.

6. This caused both the Cover scene and the AR/VR scene to open in a strange stereo view where the screen was directly split in two, down the middle.

7. Realising that VR had somehow not been enabled in the XR settings of the Kybalion project, I corrected this, and the stereo view then showed two screens as though viewed through a pair of concave lenses.

8. However, the problem remained that the Cover scene opened in VR view even though that scene only contains AR elements, and in the AR/VR scene the stereo view should only activate on a specific interaction, not when the scene opens.

9. My idea for a solution was to set a boolean in the C# script responsible for initiating the stereo view: if the Cover scene was opened, the stereo view would not be activated, and if the AR/VR scene was opened, the stereo view could be activated on interaction.

10. The first solution did not work, because I could not find a predefined method in Unity or Vuforia to activate or deactivate a stereo view at runtime. In response, I created a stereo view "mode" manually by setting up two separate cameras, one for the user's right eye and one for the left. Each camera has a reticle, and I used a simple "frame" image to contain the cameras so that a stereo view is simulated. To switch back from the stereo view to the mono view, I simply disable the camera element of the parent game object of the left and right cameras.

11. Now that the stereo view issue is resolved, I have shifted my focus towards implementing VR gaze interaction. The aim is for the reticle in stereo view to trigger a response when it passes over one of the tablets.
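One common way to build a gaze trigger is a dwell timer. This is assumed here, not taken from the project. Each frame, the app raycasts from the centre of the view, and if the same tablet stays under the reticle for a fixed time, the trigger fires once. In Unity, `Tick` could be called from `Update` with `Time.deltaTime` and the name of the object hit by that centre-of-view raycast. `GazeDwell` and the tablet names are hypothetical.

```csharp
#nullable enable
using System;

// Hypothetical dwell-time gaze trigger. Call Tick once per frame with the name of the
// object under the reticle (or null) and the frame time; it returns that name on the
// frame the reticle has rested on it for DwellSeconds, and only once per continuous gaze.
public sealed class GazeDwell
{
    public float DwellSeconds { get; }
    string? current;
    float elapsed;
    bool fired;

    public GazeDwell(float dwellSeconds)
    {
        if (dwellSeconds <= 0f) throw new ArgumentOutOfRangeException(nameof(dwellSeconds));
        DwellSeconds = dwellSeconds;
    }

    // 0..1 fill amount, e.g. for an animated reticle.
    public float Progress => current == null ? 0f : Math.Min(elapsed / DwellSeconds, 1f);

    public string? Tick(string? gazedTarget, float deltaTime)
    {
        if (gazedTarget != current)
        {
            // The reticle moved onto a different tablet, or off all of them: restart.
            current = gazedTarget;
            elapsed = 0f;
            fired = false;
        }
        if (current == null || fired) return null;

        elapsed += deltaTime;
        if (elapsed < DwellSeconds) return null;
        fired = true;
        return current;
    }
}
```

A dwell delay stops the VR sequence from starting just because the reticle sweeps across a tablet on its way somewhere else.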


Photo

When testing the app on iOS devices, I found that both scenes that use AR appear to enter a buggy version of the VR stereo mode. The desired behaviour is for the Cover scene never to enter this mode, and for the Gatefold scene to enter it only once the VR button is triggered.

In response, I rebuilt an older version of the app that did not have this error and replaced the “broken” version on the iOS App Store with it. I then resolved to implement a reliable, working stereo mode while setting up the VR gaze interactions.
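The desired behaviour can be written down as a small policy that the camera setup obeys. Every scene loads in mono, the Cover scene can never switch, and the Gatefold scene switches only when the VR button asks it to. This is a hypothetical sketch, and the scene names "Cover" and "Gatefold" are assumed. In Unity, `IsStereo` would drive enabling the left/right eye cameras and disabling the mono camera, for example through a Camera component's `enabled` flag or `GameObject.SetActive`.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical policy for the hand-built stereo rig. Every scene loads in mono, and
// only scenes listed here may switch to stereo, and only when asked to (VR button).
public sealed class StereoModeController
{
    static readonly HashSet<string> StereoCapableScenes =
        new HashSet<string>(StringComparer.Ordinal) { "Gatefold" };

    public string SceneName { get; }
    public bool IsStereo { get; private set; } // false on load in every scene

    public StereoModeController(string sceneName)
    {
        SceneName = sceneName ?? throw new ArgumentNullException(nameof(sceneName));
    }

    // Called by the VR button. Returns false (and stays mono) in scenes like the Cover scene.
    public bool RequestStereo()
    {
        if (!StereoCapableScenes.Contains(SceneName)) return false;
        IsStereo = true;
        return true;
    }

    public void ReturnToMono() => IsStereo = false;
}
```

Keeping the decision in one place means a scene cannot enter stereo simply because a device setting such as Phone + Viewer is on. It has to pass through this check.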


Photo

Occasionally, Batuhan and I organise a Skype call to work together on the project for a few hours. Here is what we achieved in one such session:

Visual assets were missing from the project, so they had to be re-imported from a backup on Unity Collab.

As a result, the links between game objects and the visual assets they use (such as shaders) had to be restored.

Buttons on cover artwork were not appearing correctly; this turned out to be because the shaders for the buttons re-imported as incorrect file types.

Buttons had lost interaction completely; this was due to the physical objects of the buttons being obstructed by other visual elements.

Once the buttons were interactive again, a new issue arose: the corresponding animations did not play when the buttons were pressed. This was because the animator components of the button game objects were not correctly linked to the scripts responsible for triggering them once a button was pressed. I used debug log messages to check which parts of the script were working, as shown in the final image, and this method proved useful in solving most of the problems we faced during the session.
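The same debugging approach can be built into a handler from the start. It logs each stage and checks that its animator link exists before using it. This is a hypothetical sketch in plain C#: `setTrigger` stands in for a linked Animator's `SetTrigger`, a null value models an unassigned Inspector reference, and `log` would be `Debug.Log` in Unity.

```csharp
#nullable enable
using System;

// Hypothetical button handler that checks its animator link and logs each stage.
public sealed class ArButtonHandler
{
    readonly Action<string>? setTrigger; // stands in for animator.SetTrigger
    readonly string triggerName;
    readonly Action<string> log;         // e.g. Debug.Log in Unity

    public ArButtonHandler(Action<string>? setTrigger, string triggerName, Action<string> log)
    {
        this.setTrigger = setTrigger;
        this.triggerName = triggerName;
        this.log = log;
    }

    public bool OnPressed()
    {
        log("Button pressed");
        if (setTrigger == null)
        {
            // Without this check, calling through a missing reference would throw here.
            log("Animator not linked; nothing to play");
            return false;
        }
        setTrigger(triggerName);
        log($"Trigger '{triggerName}' sent");
        return true;
    }
}
```

When a button "does nothing", the log then shows whether the press arrived and whether the animator link was missing, instead of failing silently.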


Photo

Since one of the primary aims for future versions of the app is to have functioning AR and VR elements for the gatefold artwork, I updated the UI to show that this scene is available. I also added text explaining that the back cover is now interactive, so that users know the feature exists.


Text

Shifting Focus

For the next week or two I’ll be moving my focus back towards the Kybalion project, as Cyber Rabbit have informed me that their “deadline” for completing the project for the Oresund Space Collective is sooner than that of the Stories project.


Photo

Once all of the Unity project's compiler errors were solved, I built the app for iOS, which produces an Xcode project folder. I then opened the app's .xcodeproj file in Xcode and, after adjusting the signing/provisioning and version settings, archived the build. From the archive, the build is validated and then uploaded to App Store Connect. I then logged into App Store Connect in my browser and, from the dashboard, added the app's details (including the build uploaded from Xcode) to prepare it for submission to Apple's review team.


Photo

Adding the alternate coloured cover (the back cover) as an image target option gave rise to an issue: the animated AR elements are only supposed to start in the AR scene once the Activate AR button is clicked. This worked correctly for the (red) front cover (shown in the first image). For the (lime) back cover, however, the animations began as soon as the image target was detected, even before the Activate AR button was clicked (shown in the second image).

The third and fourth images depict that the front and back covers work normally once the Activate AR button is clicked.

My solution to the back cover’s animations activating prematurely was to change the ButtonCanvas script to include the Vuforia elements of the back cover, so that it is handled separately from the front cover and behaves in the same way. The last three images highlight the additions to the script that achieved this.
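The intended rule is that a detected target may only animate once Activate AR has been pressed, with each cover tracked on its own. That rule can be modelled in a few lines. This is a hypothetical sketch, not the ButtonCanvas script. The target names are assumed, and in the app `OnTargetFound`/`OnTargetLost` would be called from each image target's tracking found/lost events.

```csharp
using System.Collections.Generic;

// Hypothetical model of the fix: each cover's image target is tracked separately, and
// finding a target never starts its animations until Activate AR has been pressed.
public sealed class CoverAnimationGate
{
    readonly HashSet<string> tracked = new HashSet<string>();
    readonly HashSet<string> playing = new HashSet<string>();

    public bool ArActivated { get; private set; }

    public bool IsPlaying(string target) => playing.Contains(target);

    // Called when a target is found, e.g. "FrontCover" or "BackCover".
    public void OnTargetFound(string target)
    {
        tracked.Add(target);
        if (ArActivated) playing.Add(target);
    }

    public void OnTargetLost(string target)
    {
        tracked.Remove(target);
        playing.Remove(target);
    }

    // Activate AR button: start animations for every cover currently in view.
    public void ActivateAr()
    {
        ArActivated = true;
        playing.UnionWith(tracked);
    }
}
```

The bug described above corresponds to a target whose found-event starts playback without checking `ArActivated`.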


Photo

After fixing the proportions of the menu, I realised that in the credits section, the button intended to link users to the Satalia website was not working. I concluded that the Satalia logo graphic was covering the button and preventing it from being interacted with. To solve this, I removed the obstructing graphic and replaced the default button image with the Satalia logo, as shown in the image.
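Why a picture can block a button: Unity's UI gives a click to the topmost graphic under the pointer that has "Raycast Target" enabled, even if that graphic is only decorative. Below is a simplified, hypothetical model of that routing in plain C#. The third test case corresponds to the fix described above (the logo becomes the button's own image). Unticking "Raycast Target" on the covering image is another commonly used option, not the one taken here.

```csharp
#nullable enable
using System.Collections.Generic;

// Simplified model of UI click routing: the topmost element under the pointer that
// accepts raycasts gets the click, even if it is only a decorative image.
public sealed class UiElement
{
    public string Name { get; }
    public float X { get; }
    public float Y { get; }
    public float Width { get; }
    public float Height { get; }
    public bool RaycastTarget { get; }

    public UiElement(string name, float x, float y, float width, float height, bool raycastTarget)
    {
        Name = name; X = x; Y = y; Width = width; Height = height; RaycastTarget = raycastTarget;
    }

    public bool Contains(float px, float py) =>
        px >= X && px <= X + Width && py >= Y && py <= Y + Height;
}

public static class UiHitTest
{
    // Elements are listed back to front, as later siblings in a Canvas draw on top.
    public static UiElement? TopmostHit(IReadOnlyList<UiElement> elements, float px, float py)
    {
        for (int i = elements.Count - 1; i >= 0; i--)
        {
            UiElement e = elements[i];
            if (e.RaycastTarget && e.Contains(px, py)) return e;
        }
        return null;
    }
}
```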


Photo

Upon downloading the Stories from 2045 project via Unity Collab, I immediately noticed that the main menu was too large in edit mode and too small in play mode. (Shown in Image 1 and Image 2)

When I looked at the hierarchy, I noticed that an AR Camera game object (courtesy of Vuforia) was present in the scene. I reasoned that this might be the source of some of the problems with the menus’ proportions, since this scene does not use any AR elements. So, I removed the AR Camera and replaced it with a Main Camera game object (the ‘normal’ camera that a new Unity scene starts with).

Once I made this change, the menus regained their proportions, but some of the background elements vanished. When I removed the AR Camera from the scene, the message “no cameras rendering” appeared, since there were no camera game objects in the hierarchy. Although I expected this to be remedied once I added the Main Camera game object, the message persisted. (Shown in Image 3 and Image 4)

I switched to the scene view and began re-scaling every UI element in the main menu scene so that they matched the size of the canvas, to see if this fixed the issue. Despite changes in the scene view, many UI elements remained invisible in the game view. (Shown in Image 5 and Image 6)

After I replaced the apparently faulty Main Camera game object with a simple “Camera” game object from the UI element menu, all 4 menu buttons were present and functional, but the text elements and the background image were still missing. (Shown in Image 7)

I realised that the remaining issue was the position of the elements on the canvas, and by adjusting these I finally fixed the proportions of the UI elements on both the main menu and the credits. (Shown in Image 8 and Image 9)
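This shows how a correctly sized element can still be missing from the Game view. With a point anchor (anchor min equal to anchor max), where a RectTransform lands depends on the anchor, anchored position, pivot and size together. An offset that looks right in one place can therefore push the element off the canvas. Below is a simplified sketch of that calculation in canvas coordinates with (0, 0) at the bottom-left. It assumes no scale, rotation or stretched anchors, and the `RectLayout` names are hypothetical.

```csharp
using System.Numerics;

// Simplified RectTransform maths for a child with a point anchor, in the parent canvas's
// coordinates with (0,0) at its bottom-left corner. Ignores scale and rotation.
public static class RectLayout
{
    public static (Vector2 Min, Vector2 Max) ChildRect(
        Vector2 canvasSize, Vector2 anchor, Vector2 anchoredPosition, Vector2 size, Vector2 pivot)
    {
        Vector2 anchorPoint = anchor * canvasSize;          // reference point on the canvas
        Vector2 pivotPos = anchorPoint + anchoredPosition;  // where the child's pivot lands
        Vector2 min = pivotPos - pivot * size;              // component-wise multiply
        return (min, min + size);
    }

    // True if any part of the child overlaps the canvas; otherwise it cannot be seen.
    public static bool OverlapsCanvas(Vector2 canvasSize, (Vector2 Min, Vector2 Max) r) =>
        r.Max.X > 0 && r.Min.X < canvasSize.X && r.Max.Y > 0 && r.Min.Y < canvasSize.Y;
}
```

On a 1920 × 1080 canvas, a 400 × 100 element with a centred anchor and pivot spans (760, 490) to (1160, 590). Giving it an anchored position of (1500, 0) moves it entirely off the right edge.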


Text

Task Reflection: Adding AR buttons to the back cover of the album

I feel that I carried out this task effectively and skilfully, as I was able to implement the desired feature to the client's exact specifications. The task also gave me practice in coding AR buttons, which is very beneficial to me, since implementing AR buttons differs significantly from implementing regular Unity UI buttons, particularly because it requires more complex scripting.

In hindsight, I could have realised sooner that, instead of placing the buttons above the logos, I could create the buttons using the logo textures.


Text

Using Unity Hub

By utilising Unity Hub, I'm able to choose which version of Unity a project opens in. This is useful because I'm working on multiple projects which have been created on different versions of Unity.

In the past, opening a project in a different version of Unity has sometimes produced compiler errors, usually because certain functions used in the C# scripts had become obsolete in the newer version. I will be wary of this possibility going forward.
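Unity records the editor version a project was last saved with in `ProjectSettings/ProjectVersion.txt`, on a line such as `m_EditorVersion: 2019.4.1f1`. A hypothetical helper could read that line and flag when the editor about to open the project is a different year.minor release. As a rough heuristic, that kind of upgrade is where obsolete-API compile errors are most likely. The helper's names are illustrative only.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper: parse the m_EditorVersion line and compare release lines.
public static class UnityVersionCheck
{
    // e.g. "m_EditorVersion: 2018.3.14f1" -> year 2018, minor 3, patch 14
    static readonly Regex VersionPattern =
        new Regex(@"m_EditorVersion:\s*(\d+)\.(\d+)\.(\d+)([abfp])(\d+)");

    public static (int Year, int Minor, int Patch)? ParseProjectVersion(string fileText)
    {
        Match m = VersionPattern.Match(fileText);
        if (!m.Success) return null;
        return (int.Parse(m.Groups[1].Value), int.Parse(m.Groups[2].Value), int.Parse(m.Groups[3].Value));
    }

    // Heuristic only: a different year or minor release is treated as a risky upgrade.
    public static bool IsRiskyUpgrade((int Year, int Minor, int Patch) project,
                                      (int Year, int Minor, int Patch) editor) =>
        project.Year != editor.Year || project.Minor != editor.Minor;
}
```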


Photo

The back cover of the Kybalion album can now be interacted with, specifically giving users access to the websites of the three parties who contributed to the album.

I programmed the AR buttons for this to work, and thereafter altered the visuals to match the physical images that are being projected as "buttons" (the white squares in the first image indicate where the buttons are).

The first two images show the Unity project open in the editor in play mode, simulating the app running on a device, utilising my laptop’s webcam to view the image target.

The latter two images show the C# script I wrote to program the buttons in Visual Studio.
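Once a raycast has identified which button was tapped, the remaining step is to map that button to a website. Below is a minimal, hypothetical sketch of that dispatch. The button names and URLs are placeholders, not the contributors' real sites. In Unity, the returned URL would be passed to `Application.OpenURL`.

```csharp
#nullable enable
using System.Collections.Generic;

// Hypothetical mapping from back-cover AR button names to the contributors' websites.
// Names and URLs are placeholders.
public static class BackCoverLinks
{
    static readonly Dictionary<string, string> Links = new Dictionary<string, string>
    {
        ["ContributorButton1"] = "https://example.com/contributor-1",
        ["ContributorButton2"] = "https://example.com/contributor-2",
        ["ContributorButton3"] = "https://example.com/contributor-3",
    };

    // URL for the tapped button, or null if the hit object is not one of the link buttons.
    public static string? UrlFor(string hitObjectName) =>
        Links.TryGetValue(hitObjectName, out string? url) ? url : null;
}
```

Keeping the links in one table means that changing a contributor's address does not require touching the raycasting code.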
