Blog following the progress of my Advanced Skills unit.



Rave Late Work It 29/11/17 - 360 video demo with ambisonics

This is my final video with ambisonic audio that pans with and matches visual sources. It is successful, and a good demonstration of what I’ve learnt, as well as demonstrating a common practical application that VR is being used for.

I found the 360 panner really interesting, and it took a lot of work to get things to sound more natural. It was also difficult to judge how much sound should come from the speakers, as it would at the event, and how much from the actual position of the sound source. I found that quite often the process was about finding a compromise between what worked creatively and what represented reality. I didn’t get to explore many of the other tools as they weren’t really applicable to this project; however, I found the HRTF and binaural simulation very impressive and powerful.

It took many attempts at uploading to make this work. The problem was that the ‘front’ of the video did not match the ‘front’ of the audio, so sounds seemed to come from the wrong direction. This happened even though they were clearly matched up in the editing software. I also think that the binaural effect is a lot cleaner, more impactful and more convincing when watching the video in Reaper, perhaps due to the compression the files undergo to be uploaded and played in real time.
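The post doesn't pin down why the audio and video fronts drifted apart after upload. One common way to realign them is to rotate the whole sound field about the vertical axis until the audio front matches the video front. This is a minimal sketch, assuming first-order AmbiX (ACN channel order W, Y, Z, X with SN3D normalisation) and the usual convention that azimuth is measured anticlockwise, so +90 degrees is hard left. The function name is hypothetical; in practice the rotation would be done with a plugin rather than by hand.

```python
import math


def rotate_yaw_ambix(frame, degrees):
    """Rotate one first-order AmbiX frame (W, Y, Z, X) about the vertical axis.

    Positive angles move sources anticlockwise (towards the left).
    """
    w, y, z, x = frame
    t = math.radians(degrees)
    x_rot = x * math.cos(t) - y * math.sin(t)
    y_rot = x * math.sin(t) + y * math.cos(t)
    # W (omni) and Z (height) are unchanged by a rotation about the vertical axis.
    return (w, y_rot, z, x_rot)
```

For example, a source dead ahead, `(1.0, 0.0, 0.0, 1.0)`, rotated by 90 degrees ends up hard left, at roughly `(1.0, 1.0, 0.0, 0.0)`. The same angle would be applied to every sample frame in the track.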

REFERENCE:

Hannah Williams (2018) Rave Late Work It 29/11/17 - 360 video demo with ambisonics Available at: https://www.youtube.com/watch?v=0cKAiTDpqlc (Accessed: 22nd March 2018)


28/02/18: Research

Rob France, Senior Product Manager – Dolby

Dave Tyler, EMEA Audio Manager – Avid

Andrew Lillywhite, Customer Development Engineer – Sennheiser

AL: Stereo – something that he finds he still has to define, because some people have grown up with stereo always being there. Records were released to show off stereo effects and what it could do with panning. We are in almost the same place with spatial audio – DTS, Atmos and Auro demos in his office. Stereo is represented by 2 loudspeakers: a 2-dimensional sound space. Binaural is listening through headphones to 2 channels, and can represent a 360 degree space including height, front and back.

RF: In theory we can go up to 64 speakers, but it varies. Challenge is working with new formats when it comes to scale: listening in a variety of different environments regardless of what that system is going to be. Can listen to 5.1, can listen to stereo. Can’t listen to all of the different variations of stereo. Stereo TV, high quality stereo speakers either side of TV, headphones, 2 speakers strangely arranged on 1 side of a tablet. Can’t listen to each single option, but can get a good idea of what each should sound like. Gets more complicated when it gets to spatial audio.

More and more channels of audio being recorded and coming into post, how is it being dealt with through workflow?

DT: ProTools started the year with a maximum of 7.1 surround channels; midway through the year came v.20, which supports Dolby Atmos. Worked with Dolby Atmos before that, but it was a workflow that required additional plugins. V.12.8 added extra complexity: an integrated Atmos panner with 3D which links to the Dolby hardware. Had film mixers asking for it because they wanted to see the final workflow smoothed out. More complex because there are 7.1/7.2 beds for static sounds and then objects to be placed anywhere. Lots of things to deal with, so wanted to simplify the basic workflow in the DAW. In October, 1st-, 2nd- and 3rd-order ambisonics with bussing. Allows you to work with information captured on an ambisonic microphone. Worked with Facebook.

DT: Challenges for mixers working in Atmos: the flexibility of being able to position an object anywhere within that environment, and finding ways to do that which are creative but are all blended with what’s going on with the rest of the sound. Temptation is to get gimmicky with all these things, but it’s better to do something natural. Get some amazing Atmos mixes now where you do feel immersed and you do feel a part of it, but you’re not aware of the speakers around you; it’s a very natural feeling. Certainly a challenge to get to that point because you’re dealing with lots of different sources. Managing all of the options, and delivery. Making sure you deliver all of the options of beds etc. and are ready to deliver to the next stage adds complexity to the process, not so much for the mixer but for the technical side.

RF: Fundamentally, end goal has been to allow people to create content the way they want to create it and get that as transparently as possible into people’s homes and movie theatres, and whatever the listening situation is. For now we’re trying to carry more immersive audio with Avid and Sennheiser. Looking at things like classic helicopters flying over as an object, and also dialogue - commentators separate for accessibility. Looking forward, there may be options to boost the commentator, for better accessibility. Whilst we’re talking about immersive audio, there are a few different strands. There is immersive, and there is personalization. When we look at all our devices, people are now used to personalizing their experience: choosing different camera angles, and 360 video is the ultimate personalization as you choose where you look. Let’s not forget the things of today that improve our accessibility, generally on the young market side with giving access to everything. Delivery mechanisms give you a lot more flexibility to provide people with a more flexible audio service.

How to bridge the gap between the amazing audio being produced and consumer inability to know how to listen to that audio?

DT: How are people listening? Best to make it easier for the consumer to get right: 5.1 and 7.1 sound bars are getting thinner and thinner, making it easier for consumers to have good sound without having to implement 5.1 systems with cables going all around the room. If we can get that down to something that is essentially a soundbar able to produce a full 3D effect from something that’s able to sit under the television, then that’s something that will progress the immersive experience as a whole. As discs become less popular in favour of streaming, soundbars can be sold as part of a whole package. People are used to personalization.

AL: HRTF and binaural: current processing works for some and not others. Future would maybe be personal HRTF tracking per person – on phones? Can make binaural and 3D audio an experience that is fantastic for everybody.

DT: Different rendering plugins from different manufacturers all use different encryption, so what the engineer who’s mixing it hears will sound slightly different from what any end user hears; it’s an issue. Important because if we get to any level of personalization, you’ll know that what you’re listening to will translate to end users. Some manufacturers tackle this by having options so you can choose what’s best for you, but sometimes it’s hard to know what’s best for you; other companies are looking at cost-effective ways of reproducing HRTF? Issue at the moment is that the mixer doesn’t know if it’s going to translate. Dolby Atmos = more carefully controlled environments: speakers are set up to Dolby’s specification, done to a certain standard, so you know it will translate to theatres.

Audience Q +A

Personalization: implemented at the service provider side or device?

RF: Sports, broadcast everything and then allow end user to decide in their device what they want to listen to.

Streaming is more tricky. Netflix already complain of the number of different versions they need to handle. Depends on broadcaster and scenario. A lot of times it will be broadcasters broadcasting everything and it will be up to the end user to determine what they want.
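RF's point about broadcasting everything and letting the device decide can be pictured as the end device doing the final mix. The sketch below is a hypothetical illustration, not how Dolby or any broadcaster actually implements it: a mono bed plus a separately delivered commentary object, with a listener-chosen commentary boost in decibels (using the standard amplitude convention, gain = 10^(dB/20)). The function names are made up for this example.

```python
def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)


def mix_on_device(bed, commentary, commentary_boost_db=0.0):
    """Hypothetical device-side mix of a mono bed and a commentary object.

    Both inputs are equal-length lists of samples; the listener's chosen
    boost (in dB) is applied to the commentary only.
    """
    if len(bed) != len(commentary):
        raise ValueError("bed and commentary must be the same length")
    gain = db_to_gain(commentary_boost_db)
    return [b + gain * c for b, c in zip(bed, commentary)]
```

With `commentary_boost_db=6.0` the commentary is roughly doubled in amplitude while the crowd bed is left alone, which is the kind of accessibility option described above.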

AL: with binaural audio for offline media, there is no choice but to render it in the device. If you’re looking at 360 video on a phone, the binaural rendering is taking place on the phone. The binaural renderer used in studio for monitoring is only for monitoring and getting a good idea of what it’s going to sound like at the end.
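As a rough picture of what a renderer does on the device, one common first step is to decode the ambisonic signal to a set of virtual loudspeakers. A binaural renderer would then convolve each virtual speaker feed with the HRTF for that direction, which is left out here. This is a minimal sketch, assuming first-order AmbiX (W, Y, Z, X with SN3D), virtual speakers at ear height, and a simple virtual-cardioid decode; real renderers use more refined decoders, and the function name is hypothetical.

```python
import math


def virtual_cardioid_decode(frame, speaker_azimuths_deg):
    """Decode one first-order AmbiX frame (W, Y, Z, X) to horizontal virtual
    speakers by pointing a virtual cardioid microphone at each one."""
    w, y, _z, x = frame  # Z (height) is ignored: all speakers are at ear level
    feeds = []
    for az in speaker_azimuths_deg:
        phi = math.radians(az)
        # Cardioid pointing at phi: half omni plus half figure-of-eight.
        feeds.append(0.5 * (w + x * math.cos(phi) + y * math.sin(phi)))
    return feeds
```

For a source straight ahead, `(1.0, 0.0, 0.0, 1.0)`, and a square of virtual speakers at 0, 90, 180 and 270 degrees, the feeds come out at about 1.0, 0.5, 0.0 and 0.5: full level in front, half at the sides and nothing behind.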

DT: Will often have the options with Avid to listen to how it will sound with different renderers.

Asked by me: Does Dolby Atmos, and spatial audio in general, better represent reality or is it about using these new techniques and new technology to create a new experience?

RF: Why can’t it be both?

What would be examples of both?

DT: ‘Whether it’s Dolby Atmos or another 3D audio process, we’re trying to create that immersive.. the idea is to capture an event, as it is, and to be able to put the listener/viewer in that space as a full event. But, it can be used in an artistic way to create an experience they could never have had in the real world, so there’s definitely scope for both.’

RF: ‘our aim is to build a toolkit that allows you to do whichever you want to do. The answer is that a lot of content is not real.. you look at things like Star Wars, Avatar he’s not real, and we’re listening to him somewhere totally different and allowing them to create a soundscape that is very believable. That’s what it’s always worth doing in a sound session, taking a step back you know, sound is really there to put you in that environment. Visuals need that supporting feeling, because it’s sound that makes your hairs stand up on the back of your neck, and gives you that feeling, and allows you to anticipate. What 3D sound does in addition to that is allow you to give people information about where their next visual content is going to come from. So things like the helicopter flying overhead, you know when the helicopter is going to appear on screen before it gets there. Or when you’re playing on your Xbox, and you’re walking and you hear gunshots from over here, you know that’s where the next person is going to attack you from. It depends on the content, where if you’re doing the latest documentary,( where you’re trying to put across or use …) then you’ll want to use the tools in a slightly different way. Ultimately it’s a creative decision, and if you’re creating an unreal world then you’ll probably want to create an unreal soundscape, whereas if you’re trying to create a very realistic, meaningful aspect to the content then you can use audio.

Sports is an interesting example where you want to get the best of both worlds. The reality is when you’re sat in a sports stadium there’s a lot of swearing around you. Now, we don’t want to bring that across into the home so we don’t want to create … that level of immersion but what we do want to bring across is the impression of the crowd, and the things like the PA are coming overhead for the impact. That’s an example where you have certain elements of realism but want to exclude certain others.’

DT: ‘I’ve got a couple of simple examples from things that I’ve seen that have struck me. One’s the recording of an Opera, with an Ambeo mic placed at the front of the stage with a 3D camera. When you put the headset on you’re standing on the stage with the orchestra pit behind you which is quite disconcerting and watching this opera, it just looks and sounds amazing. By contrast, there’s a fantastic piece in one of the demos for Dolby, it’s an animation with a score written for it and sound design, and I’ve used it a number of times in demonstrations. There’s something about the immersion, we’ve used that word a lot, there’s something about the feeling you get from the audio being all around you. Most people know nothing about immersive audio, about Atmos, about the speakers or so on, but they just sit down and they know that this is a very emotional experience that they probably wouldn’t have got if it was just a pair of stereo speakers.’

Make tools more accessible? Will we see YouTube stars and low budget filmmakers mixing Dolby Atmos into their material?

DT: ‘Now, the Facebook workstation tools are free. They work with ProTools and other DAWs. The tools for people to do that are already available through YouTube 360 channels and Facebook 360 channels; that’s something that’s a reality already.’

AL: 38 mins binaural and first order ambisonics.. new product.

Looking to the future?

AL: technology has to get easier to consume…

DT: ‘We’re moving away from channels into other paradigms about objects and other ways to think about these things. The ability to make things as simple as possible for the end user is where we need to go (sound bars and headphone delivery), to give them things that are simple to use. In video, VR headsets and systems are quite clunky.’

RF: ‘Certainly doesn’t involve more channels. The reality of the situation today is that it’s continuing to grow and buying the number of channels for the house, it’s gone from being a choice forced by manufacturers to being a choice that can be made by the consumer. I will go back to the idea that the key thing we’re going to start seeing is more advantage taken of the fact that now the device is the final point that mixes the audio together. It’s controlled by the consumer and the device that mixes all the audio together, and so I think we’re going to start to see much more blending of content from different sources, so the idea that your broadcast content is combined with some social content is the type of thing we’ll start to see, but it’s really going to take advantage of the fact that each of us has an individual end point that can be much more tailored for ourselves and we can have much more control over it.’

This shows the state of the industry and how it’s developing towards consumers having a better understanding of, and the means to play back, spatial audio, and appreciating the effect it gives. These are industry professionals who believe there is a future for the technology, which demonstrates its relevance.

REFERENCES:

France, Rob, Tyler, Dave and Lillywhite, Andrew (2018) Audio Tech: The Future of Sound. Lecture delivered at BVE Expo, 28th February 2018


REFERENCE:

YouTube (2018) Use spatial audio in 360-degree and VR videos - Computer - YouTube Help. Available at: https://support.google.com/youtube/answer/6395969?hl (Accessed: 8th January 2018)


Update: 06/01/2018

I have finished editing the 360 video and spatial audio. I started by spatialising the ambisonic audio track with the FB360 plugin, then worked through the mono feed to break it up into different sections with each of the speakers and put them on their own channels. I then spatialised all of those channels.
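Spatialising a mono feed amounts to encoding it into the ambisonic channels with gains that depend on the chosen direction. The FB360 plugin's own panner does more than this, and its internal format isn't described here; this is only a minimal first-order sketch, assuming AmbiX (ACN order W, Y, Z, X with SN3D normalisation), azimuth 0 straight ahead and +90 degrees hard left. The function name is hypothetical.

```python
import math


def encode_mono_ambix(samples, azimuth_deg, elevation_deg=0.0):
    """Pan a mono signal to a fixed direction as first-order AmbiX.

    Returns four lists of samples in ACN order: W, Y, Z, X (SN3D gains).
    Azimuth 0 is straight ahead, +90 is hard left; elevation +90 is overhead.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    gains = (
        1.0,                          # W: omnidirectional
        math.sin(az) * math.cos(el),  # Y: left/right
        math.sin(el),                 # Z: up/down
        math.cos(az) * math.cos(el),  # X: front/back
    )
    return [[g * s for s in samples] for g in gains]
```

A speaker sitting to the camera's left would be encoded with `azimuth_deg=90`. A panner that follows a moving source would simply recompute these gains as the direction changes.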

Panning tools for moving sounds about in 360:

I then cut down the video from 3 hours to less than 5 minutes. I chose parts where I could demonstrate the Ambeo mic on its own, the Ambeo and a single source, and the Ambeo and multiple sources.

Cutting down the 360 video with the native tools in Adobe Premiere, using the GoPro 360 viewer:

Reaper project, where the first 4-channel track is the Ambeo audio and the tracks below are the broken-up feeds from the speakers. Here the video is linked to the audio through the 360 player:

Panning linked to video:

The binaural effect of sound in 360 space is very effective when rotating the image on a screen within the program. I now need to encode and upload to YouTube.


07/12/17: Research

Primary Research: Mark Durham, Sound Design lecturer at Ravensbourne

I met with Mark after contacting the course leader for Sound Design. I hoped that he would talk me through some of the options that were available within Ravensbourne to help me edit my footage together. I took notes whilst we talked, and hopefully this will come across.

He started off by saying that he’s not an expert in 360 audio, but he has done research into the topic, and some tests. This is one of his tests that was shot in Ravensbourne: https://www.youtube.com/watch?v=bWSoxE7vDAI

It was created with a Ricoh Theta 360 camera and a Soundfield ambisonic microphone. The editing was done in Premiere, and the audio post and creation of the final video was done in the Facebook Spatial audio workstation, a plug-in that can be used with DAWs (digital audio workstations).

I explained the project and the deadlines, and he explained that there are a couple of options. I asked about using ProTools HD, as it would be a chance for me to learn the software, and I thought it would be the most widely-used tool in the industry. Mark told me that Ravensbourne only have a few licenses for the program, so it would be unlikely I would have enough time to get to learn and use the software in the way I wanted to. It would also be a very steep learning curve for me as I hadn’t used it before.

He said that in the research he had done, he found that the Facebook spatial audio workstation was being used by a lot of people in industry for professional delivery. I would be able to use the Facebook 360 player, or even plug in an Oculus or other headset to view the footage in VR. He said he couldn’t remember whether you can deliver the 360 video straight out of the FB workstation, so I would have to do some research into it.

He recommended that I use Reaper as the DAW, and I was happy with this as I have used it in the past.

The one piece of advice he wanted to give me was to be aware of the formats of things; the process is still very new and experimental so not everything will automatically work together.

I will take Mark’s advice and use the method he suggested, as he understands the constraints I have and what’s best for me. It doesn’t go against any of the research I have gained so far, as all programs and solutions do the same 360 mixing, just in slightly different ways.

REFERENCES:

Durham, Mark (2017) Sound Design lecturer, 7th December 2017

Durham, Mark (2017) 360 Sound Test 1 Available at: https://www.youtube.com/watch?v=bWSoxE7vDAI (Accessed: 7th December 2017)


04/12/17: Footage

Ambeo recordings:

Poly WAV files, opened in Adobe Audition, which opens all 4 channels in one track. The Ambeo A-B format converter plugin works: on the left you can see the A format in, and on the right the B format out in the form of W, X, Y and Z information. In a program that supports it, I would then be able to spatialise the channels.
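The core of an A-to-B conversion for a tetrahedral mic is a set of sums and differences of the four capsule signals. This sketch assumes the capsules arrive in the order front-left-up (FLU), front-right-down (FRD), back-left-down (BLD), back-right-up (BRU); the Ambeo documentation should be checked for the actual channel order. It leaves the outputs unscaled and omits the capsule-correction filtering that the real converter plugin applies, so it only shows where W, X, Y and Z come from. The function name is hypothetical.

```python
def a_to_b_first_order(flu, frd, bld, bru):
    """Sum-and-difference A-to-B conversion for one sample of a tetrahedral mic.

    Inputs are the four capsule samples (front-left-up, front-right-down,
    back-left-down, back-right-up). Returns unscaled (W, X, Y, Z); real
    converters also apply capsule-correction filters and normalisation.
    """
    w = flu + frd + bld + bru  # omnidirectional sum
    x = flu + frd - bld - bru  # front minus back
    y = flu - frd + bld - bru  # left minus right
    z = flu - frd - bld + bru  # up minus down
    return w, x, y, z
```

A sound reaching only the two front capsules, `a_to_b_first_order(1, 1, 0, 0)`, gives `(2, 2, 0, 0)`: energy in W and X, with nothing left/right or up/down.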

360 camera footage:

MP4 files that open in Premiere as stretched-out (equirectangular) 360 images. Linked with the GoPro VR Player, which allows simultaneous playback in a 360 viewer. The 2018 update of Premiere includes VR editing and transition effects. Below are the 360 rotation effects for changing the image.


01/12/17: Post-production Research and Test

Facebook 360 workstation offers some great tools at no cost, that work with many DAWs including the ones I use.

https://facebook360.fb.com/spatial-workstation/

The Dolby VR tools look very powerful, but are expensive.

https://www.dolby.com/us/en/professional/content-creation/vr.html

There are other plugins such as the Steam Audio tools, and 3DCeption in Unity, but both are used mainly for game production.

https://valvesoftware.github.io/steam-audio/ https://twobigearshelp.zendesk.com/hc/en-us/categories/200850199-3Dception-Spatial-Workstation

I downloaded the Facebook tools and experimented with Reaper, which turned out well. I took a test recording and made a test export with audio only.


Use headphones!

References:

Hannah Williams (2018) Audio only Ambeo Test Available at: https://www.youtube.com/watch?v=rd0hCBa13Yg (Accessed: 22nd March 2018)

3DCeption (2018) Two Big Ears Available at: https://twobigearshelp.zendesk.com/hc/en-us/categories/200850199-3Dception-Spatial-Workstation (Accessed: 1st December 2017)

Dolby (2018) Dolby Atmos for VR Available at: https://www.dolby.com/us/en/professional/content-creation/vr.html (Accessed: 1st December 2017)

Facebook 360 (2018) Facebook 360 Workstation Available at: https://facebook360.fb.com/spatial-workstation/ (Accessed: 1st December 2017)

Valve Corporation (2018) A BENCHMARK IN IMMERSIVE AUDIO SOLUTIONS FOR GAMES AND VR Available at: https://valvesoftware.github.io/steam-audio/ (Accessed: 1st December 2017)


30/11/17: Update

Tuesday:

On Tuesday 28th I picked up the equipment from Audio Department. It was nice to see the guys who worked there again, and they were interested in the project. I told them that I was planning on using the Sound Devices 633 to record the audio and they were concerned that it might not work. They helped me test the Ambeo mic as they had a 633 there, and we found that it wouldn’t work.

This is because all 4 of the capsules on the Ambeo need +48V phantom power to work, as is standard for a lot of microphones. Only the first 3 inputs on the 633 can supply phantom power, as the last 3 are mini 3-pin lemo XLR connections. This would have meant that I could only record 3 of the 4 microphones from the Ambeo, meaning the ambisonics would not have worked. Luckily, they were nice enough to lend me a Zoom F8 free of charge. This was perfect, as the Zoom F8 is natively compatible with the Ambeo due to a partnership between Zoom and Sennheiser.

I took the audio equipment back to Ravensbourne to test it. I got to grips with the audio settings on the Zoom F8, referring to the manual for general use, and the v4.1 supplementary manual for the Ambisonics settings:

Source: https://www.zoom.co.jp/sites/default/files/products/downloads/pdfs/E_F8v4.1.pdf

When set to Ambisonic mode, the gains on the first 4 inputs to the mixer are linked so that the mic capsules are always recorded at the same level. It also turns on phantom power to all the channels needed. It can be set to record in Ambisonics A format with stereo monitoring, FuMa (the ‘old’ style of B format), AmbiX (the ‘new’ style of B format), or a dual mode of FuMa or AmbiX with a backup recorded on the second set of 4 channels. You can also choose the positioning of the mic: upright, upside down or end-fire.
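At first order, the difference between FuMa and AmbiX comes down to channel order and the level of W, so converting between them is simple. A minimal sketch, working on one sample frame at a time: FuMa stores W, X, Y, Z with W 3 dB down (scaled by 1/√2), while AmbiX uses ACN order W, Y, Z, X with SN3D normalisation, where W is not attenuated. The function names are hypothetical; the code only illustrates the mapping.

```python
import math


def fuma_to_ambix(frame):
    """Convert one first-order FuMa frame (W, X, Y, Z) to AmbiX (W, Y, Z, X)."""
    w, x, y, z = frame
    # FuMa stores W 3 dB down (times 1/sqrt(2)); SN3D does not, so undo it.
    # X, Y and Z already match SN3D at first order; only their order changes.
    return (w * math.sqrt(2), y, z, x)


def ambix_to_fuma(frame):
    """Inverse mapping: AmbiX (W, Y, Z, X) back to FuMa (W, X, Y, Z)."""
    w, y, z, x = frame
    return (w / math.sqrt(2), x, y, z)
```

Getting this wrong typically shows up as a soundfield that is panned to the wrong place (swapped channels) or one that sounds too diffuse or too directional (wrong W level), which is why it's worth knowing which B format a recording is in.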

I also referred to the Sennheiser Ambeo Quick Guide for setup information.

Source: https://en-uk.sennheiser.com/global-downloads/file/7439/VRMIC_Quick_Guide_09_2016.pdf

Sennheiser has created a series of 6 videos explaining the process from recording through to editing, which I found incredibly helpful. https://www.youtube.com/watch?v=bLfQb8isuQo

I set up the microphone on a tripod and recorded ambience, and did a few things like talking into different sides of it and walking around it in a circle. I recorded in Ambisonics A format, as this option seemed to give me the most freedom since it can be converted later to either AmbiX or FuMa B format. I then looked at the recording on my computer to check that all four channels had recorded correctly. I did not convert it from A format to B format yet, as I wanted to be fully comfortable with the recording process before getting caught up with editing. In hindsight, I should have done a test edit then to give myself peace of mind.

Later in the day I collected the VR camera and set it up. It was fairly intuitive to use as it’s designed for fairly basic operation, and the documentation provided with it was very simple to understand. This is where Sam helped me a lot, as I wasn’t really confident in this. I won’t go into great detail about the camera but there are a few points to mention:

The camera settings are fully automatic: ISO, White balance and iris are all taken care of.

The camera connects to a stitching unit that stitches the image together in real time. This unit also powers the camera using Power over Ethernet (PoE). There is a slot for an SD card on this unit.

The unit creates its own 2.4/2.5 GHz wifi network; you join it from a laptop and then go to the unit’s IP address.

On the laptop you get a web app control screen with a preview of the image from the camera with a slight delay. This is where you access settings to live stream or record.

The recording can only be triggered via the web app.

https://support.orah.co/hc/en-us/articles/206494264-Orah-4i-User-Guide

Wednesday:

The event started at 6pm, and we got access to the Walker Space at 4pm. Normally this is more than enough time to set everything up, however because I also had the 360 setup to think about it was a little bit more stressful than usual. Because I was being paid to do the event sound first and foremost, I prioritised setting that up. This also meant that I couldn’t get any footage of me setting up the camera or microphone before the event.

The sound setup for this Rave Late was: 6 Sennheiser G3 radio lav mics and 2 Sennheiser G3 radio handheld mics, with the receivers plugged into the Yamaha 01v96 12 channel desk. I also took a feed from the laptop at the front, which was playing the presentations as there are often videos, and a feed from my laptop that I play music from. The 2 main outputs from the desk feed the speakers and, as a mono mixdown, an audio recorder. None of this affected or was affected by the addition of the 360 camera and audio.

The setup of the room was slightly different from what was planned. The stage was slightly left of centre, and the talkaoke was nearer to the back of the room. However, I still put the camera where I had planned to. I left Sam to set up the 360 camera, as he had tested it and got it working that morning after a few issues connecting via the wifi, and to test the sound for the event once it was set up. This meant that I could focus on getting the audio working and testing it.

Unfortunately we had to tape the mic in the shock mount to the camera tripod as there wasn’t enough space to safely have 2 tripods without obstructing the walkway, but this did work well. I used my studio quality monitoring headphones to check the quality of each mic capsule, and to set the linked gain for the recording level. I also made sure that I had enough space on the SD cards to record for the duration of the event, and tucked the Zoom F8 under the stage to safely record the event, with the breakout extension cable taped down on the floor between them. I set it to record then went to my position at the front of house desk at the back.
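Checking card space is simple arithmetic for uncompressed WAV. The post doesn't give the F8's sample rate or bit depth, so 48 kHz / 24-bit is an assumption here, as is a 3.5-hour duration; file headers are ignored and the function name is hypothetical.

```python
def pcm_bytes(sample_rate_hz, bit_depth, channels, hours):
    """Size in bytes of uncompressed PCM audio (file headers ignored)."""
    seconds = hours * 3600
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * seconds
```

Under those assumptions, `pcm_bytes(48_000, 24, 4, 3.5)` comes to about 7.26 GB for the four Ambeo channels (roughly 2.07 GB per hour), and a dual mode recording 8 channels would need twice that.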

The event sound system was all set up and ready to go, so I plugged in the usual Roland 4-channel recorder that we use. Due to time constraints, I didn’t have time to set up the desk to route each presenter’s microphone to the recorder on its own channel. This was unfortunate, but the mono recording I did get actually worked well as no one really spoke over each other, so it should be fine to use in the edit. I did get a feed from the Talkaoke table into it, so I do have that clean to add in.

I checked that the 360 camera was working properly, and that it was ready to record onto the media. We had to set the quality to a 1080 overall image (the camera is capable of 4k) because the SD card wouldn’t have had enough space to record the whole event otherwise. I was fine with this, as my main focus is obviously the sound.

I set both the camera and the Roland to record; then all I could do was leave everything recording for the show, check it during the break, and mix the sound for the event.

The event went fine, and all of the media recorded perfectly. It went on for over 3 hours, meaning I have a lot of footage. We finished late, but I managed to record some footage of the equipment whilst it was still set up.

Over the next few days I will look at the footage and make sure I understand the formats and see what it will look like in the edit. In the next few days I will make contact with a sound editor who works primarily with ambisonics to learn more about editing, and on 7th December I am meeting with Mark Durham to discuss what facilities within Ravensbourne specifically are available to me.

https://wavreport.com/2017/06/28/review-sennheiser-ambeo-zoom-f8/

REFERENCES:

Sennheiser (2017) AMBEO VR Mic for 360 and VR videos (1/6) - The Principle | Sennheiser Available at: https://www.youtube.com/watch?v=bLfQb8isuQo (Accessed: 30th November 2017)

Jones, A (2017) Review: Sennheiser Ambeo + Zoom F8 Available at: https://wavreport.com/2017/06/28/review-sennheiser-ambeo-zoom-f8/ (Accessed: 30th November 2017)

Orah (2018) Orah 4i User Guide Available at: https://support.orah.co/hc/en-us/articles/206494264-Orah-4i-User-Guide (Accessed: 30th November 2017)


27/11/17: Update

I’m feeling a lot more confident about my project after working on the planning of the video I’m going to film for the rest of last week.

I’m going to film the Rave Late on the 29/11, an event that consists of talks centred around an industry theme. I already work on the Lates doing front of house sound mixing, so I have an understanding of how I can film.

I met with Pippa Hogg today; she is the person who organises the Rave Lates for Ravensbourne, and I have worked with her before. The theme of the Rave Late will be ‘Work It’ and it will feature people who work in the creative industries who run agencies giving advice on how to get into the industry. We talked through what I want to do technically: Record a 360 video of the Rave Late with full ambisonic audio. She understood that I am prioritising audio over visual quality as it’s for a university project, and we agreed that it would act as a test recording as she was very interested in how it would work. If the test goes well, there might be more opportunities to film in 360 in the future.

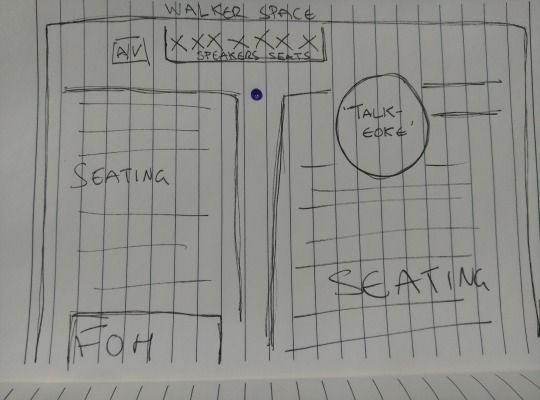

The Rave Late is going to be in the Walker Space on the ground floor and consist of this set up: 1 host and 5 guest speakers who will be based on a stage, giving presentations across 2 parts with a break in the middle and a group Q&A at the end. A talkaoke table - a pop up talk show/discussion table. Audience seating filling the rest of the space.

I am based at the front of house desk at the back of the room, mixing the audio live to 2 speakers at the front of the room. I will be mixing the music that we play before, after and during the event, the sound from the laptop playing the videos at the front, and all of the radio mics on the talent.

To capture the event in 360 I will place the 360 camera and ambisonic microphone at the front of the room. The blue dot on the picture above marks the ideal positioning. I will use an Orah 4i camera which I am borrowing from DoubleMe at Ravensbourne. This camera is designed for live broadcasting 360 video, and as a result it live stitches the footage together. This is perfect for me as it means I don’t have to worry about doing anything with the camera footage, so that I can focus on the audio.

Source: https://www.orah.co/

For the Ambisonic microphone, I am hiring the Sennheiser Ambeo from Audio Department. It will cost me £18 for one day’s hire with a student discount. I emailed the manager checking that it will work with the Sound Devices 633 mixer that I have access to at Ravensbourne, and he confirmed that it would. I will place it as close to the camera as possible, and leave the mixer recording under the stage.

Source: http://hire.audiodept.co.uk/shop/products/wired-mics/sennheiser/sennheiser-ambeo-vr-mic/

As I’m live mixing the event, I will also record one or more mono feeds of the event sound which I can cut up in post production and place around the 360 field.

As I will be solely responsible for both the front of house sound and the 360 video I have asked Sam Hill, a broadcast engineer who specialises in audio, to help me out. He will help with the event sound so that I can focus on the 360 production as much as possible. I’ve worked with him a lot in the past and he is the perfect person to help me with this.

I will be collecting the equipment tomorrow so that I can check it all works before Wednesday.


21/11/17: Update

Unfortunately due to circumstances outside of my control, I have got an extension on my deadline which is as yet undetermined. I have been unable to work on my project for the last 2 weeks, save contacting Laurence Chater.

I have spent today researching ambisonics, and how I’m going to record audio for 360 video.

Ambisonics:

The established conventions of ambisonics are based around B format:

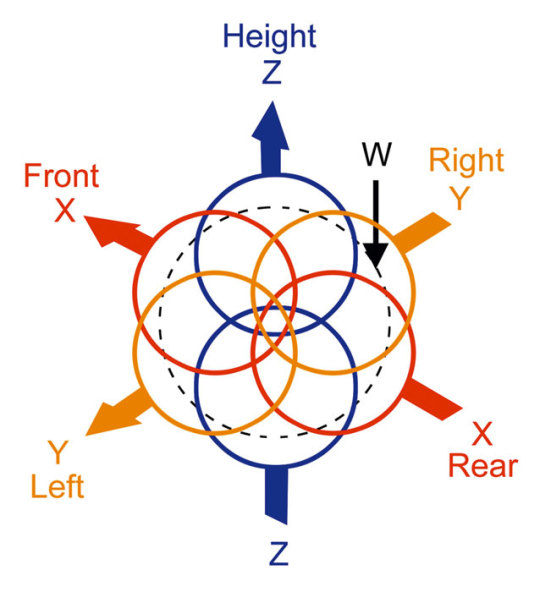

This is made up of W, X, Y and Z information. W is a sum of all information, X forward/backwards, Y left/right, Z up/down.

The WXYZ formation is the basis of 0th- and 1st-order ambisonics. Higher orders add more channels, labelled RSTUV (2nd order) and KLMNOPQ (3rd order). Below I have summarised the orders that produce full-sphere audio, omitting the horizontal-only formats, where the Z (up/down) channel is missing, and mixed-order formats, which combine two orders. These all come under the definition of B format.

0th-Order: W information only, 1 channel. Mono.
1st-Order: WXYZ, 4 channels. Full sphere.
2nd-Order: WXYZRSTUV, 9 channels. Full sphere with greater spatial resolution.
3rd-Order: WXYZRSTUVKLMNOPQ, 16 channels. Full sphere with greater spatial resolution again.
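
The channel counts summarised above follow one rule: a full-sphere signal of order N needs (N + 1)² channels. A minimal Python sketch that reproduces the list; the function names are hypothetical, chosen for illustration, and the letters follow the traditional FuMa naming used above, which only defines names up to 3rd order:

```python
# Traditional FuMa channel letters, in order, up to 3rd order (16 channels).
FUMA_LETTERS = "WXYZRSTUVKLMNOPQ"


def channel_count(order: int) -> int:
    """Channels needed for a full-sphere ambisonic signal of the given order: (N + 1) ** 2."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def fuma_channels(order: int) -> str:
    """FuMa channel letters for orders 0 to 3 (FuMa names stop at 3rd order)."""
    if order > 3:
        raise ValueError("FuMa channel names are only defined up to 3rd order")
    return FUMA_LETTERS[:channel_count(order)]


# One line per order: order, channel count, channel letters.
for n in range(4):
    print(n, channel_count(n), fuma_channels(n))
```

Running it prints one line per order, matching the four orders listed: 1, 4, 9 and 16 channels.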

For my 360 video I obviously want to avoid 0th order, as it’s a single mono track. 1st order is fine for what I want to do; the 9 or more channels of 2nd- and 3rd-order ambisonics are too many to keep track of, with little real benefit for this project. Most of the presentation options available to me (YouTube, Oculus etc.) only support ambiX (short for Ambisonics eXchangeable) or similar. In practice this means first-order ambiX: a 4-channel B format, which is the standard number of channels supported.
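
Because YouTube and similar platforms expect ambiX, a recording or mix in the traditional FuMa WXYZ convention has to be converted before upload. As I understand the ambiX proposal (Nachbar et al., 2011), ambiX uses ACN channel order (W, Y, Z, X) and SN3D normalisation, whereas FuMa stores W 3 dB lower, so at first order the conversion is a re-ordering plus one gain change. A minimal sketch, assuming samples are plain floats; in practice a converter plug-in would do this on whole audio buffers:

```python
import math


def fuma_to_ambix(w, x, y, z):
    """Convert one first-order FuMa sample (W, X, Y, Z) to ambiX order (W, Y, Z, X).

    FuMa stores W multiplied by 1/sqrt(2) (-3 dB), so W is scaled back up.
    At first order X, Y and Z only need re-ordering, not a gain change.
    """
    return (w * math.sqrt(2), y, z, x)


def fuma_to_ambix_frames(frames):
    """Apply the conversion to a list of (W, X, Y, Z) sample tuples."""
    return [fuma_to_ambix(*frame) for frame in frames]


# A source straight ahead, encoded in FuMa: W = 1/sqrt(2), X = 1, Y = Z = 0.
# After conversion W comes back to (approximately) 1.0 and X moves to the last slot.
print(fuma_to_ambix(1 / math.sqrt(2), 1.0, 0.0, 0.0))
```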

Based on this, I have researched my recording options:

Zoom H2N Handy Recorder

Small recorder with 5 internal microphones that can be recorded in multiple combinations. It is capable of recording 2- and 4-channel surround sound and makes for a very simple solution to recording 360 audio. It’s worth around £100-£120, so it is an excellent entry-level option.

Sennheiser Ambeo

A specially designed microphone with four identical capsules in a tetrahedral configuration. It records in A format and comes with software that easily converts it to B format. It was only recently released, but it’s already widely regarded as an industry standard due to its ease of use and functionality. It’s worth approx. £1,500 but is widely available for hire. Sennheiser made a partnership with Zoom to make the Zoom F8 mixer and recorder directly compatible with this microphone. I gained practical experience with this microphone during work experience at Audio Department. I also spoke to some location recordists, who believe that this is one of the best options, if not the best, for 360 audio in the field.
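
At its core, converting A format (the raw capsule signals) to B format is a sum-and-difference matrix across the four capsules. A simplified sketch, assuming the common tetrahedral layout named in the comments (front-left-up, front-right-down, back-left-down, back-right-up) and an arbitrary overall gain of 0.5; this is not Sennheiser's actual converter, which also corrects for capsule spacing and frequency response:

```python
def a_to_b_format(flu, frd, bld, bru):
    """Textbook A-format to first-order B-format matrix (W, X, Y, Z), up to an overall gain.

    Assumed capsule layout: FLU = front-left-up, FRD = front-right-down,
    BLD = back-left-down, BRU = back-right-up. Real converters also apply
    calibration and frequency-dependent correction filters, omitted here.
    """
    w = 0.5 * (flu + frd + bld + bru)  # omnidirectional: sum of all capsules
    x = 0.5 * (flu + frd - bld - bru)  # front minus back
    y = 0.5 * (flu - frd + bld - bru)  # left minus right
    z = 0.5 * (flu - frd - bld + bru)  # up minus down
    return w, x, y, z
```

Sound arriving equally at all four capsules ends up only in W: `a_to_b_format(1.0, 1.0, 1.0, 1.0)` gives `(2.0, 0.0, 0.0, 0.0)`.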

SoundField SPS200

Tetrahedral microphone that records in A format. It is designed mostly for studio use, or for location work when used with a laptop running a DAW (Digital Audio Workstation), and comes with its own software and plug-ins. It contains 4 identical capsules, with variable polar pattern and width, offering vast creative possibility and freedom. It is available for about £2,700.
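
Variable polar patterns like these can be understood as a "virtual microphone": once the signal is in B format, mixing W with the directional channels lets you point any first-order pattern in any direction after the recording. A minimal sketch of that principle, assuming first-order ambiX (SN3D) input in W, Y, Z, X order and azimuth measured anticlockwise from the front; it is an illustration, not SoundField's own software:

```python
import math


def virtual_mic(w, y, z, x, azimuth_deg, elevation_deg=0.0, pattern=0.5):
    """Point a virtual first-order microphone into a first-order ambiX (SN3D) signal.

    pattern: 1.0 = omni, 0.5 = cardioid, 0.0 = figure-of-eight.
    Azimuth is anticlockwise from straight ahead (90 = left); elevation is up from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # Component of the sound field along the chosen look direction.
    directional = (x * math.cos(az) * math.cos(el)
                   + y * math.sin(az) * math.cos(el)
                   + z * math.sin(el))
    return pattern * w + (1.0 - pattern) * directional
```

For a source straight ahead (W = 1, Y = 0, Z = 0, X = 1), a cardioid pointed forward returns 1.0 and one pointed backwards returns 0.0.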

Core Sound Tetra Mic

It works in a similar way to the Sennheiser and SoundField microphones, with 4 capsules in a tetrahedral configuration, and is made by an independent American company. It is the only self-sufficient ambisonic microphone available for under $1000. It’s not really an option for me as I’m looking for something that I can borrow or hire.

Recording without using an ambisonics microphone

As most of the work in 360 sound happens in the edit, any combination of stereo and mono sources can be combined and panned around to create the effect of ambisonics. This means I could capture a stereo atmos track of whatever I film, capture mono sources, and combine them into a 360 soundscape.
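
Panning a mono source around the 360 field amounts to encoding it into B format at a chosen direction, and mixing several sources is then just adding their channels together. A minimal sketch, assuming first-order ambiX output (W, Y, Z, X order, SN3D) and working one sample at a time for clarity; a 360 panner plug-in does the equivalent on whole audio buffers, and the sources in the example are hypothetical:

```python
import math


def encode_mono(sample, azimuth_deg, elevation_deg=0.0):
    """Encode one mono sample as a first-order ambiX frame (W, Y, Z, X), SN3D normalisation.

    Azimuth is anticlockwise from straight ahead (90 = hard left, -90 = hard right);
    elevation is measured up from the horizon.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample
    y = sample * math.sin(az) * math.cos(el)
    z = sample * math.sin(el)
    x = sample * math.cos(az) * math.cos(el)
    return (w, y, z, x)


def mix_sources(sources):
    """Sum several (sample, azimuth_deg, elevation_deg) mono sources into one ambiX frame."""
    total = [0.0, 0.0, 0.0, 0.0]
    for sample, azimuth, elevation in sources:
        for i, value in enumerate(encode_mono(sample, azimuth, elevation)):
            total[i] += value
    return tuple(total)


# Hypothetical example: a presenter's radio mic straight ahead,
# plus a PA speaker 30 degrees to the right.
frame = mix_sources([(0.8, 0.0, 0.0), (0.3, -30.0, 0.0)])
```

An ambisonic atmos recording already in ambiX could simply be added to the same four channels.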

After this research, I know I want to capture an ambisonic recording as it will provide me with a full ambisonic atmosphere. I can then combine this with mono or stereo recordings to flesh out a 360 soundscape. I want to use the Ambeo microphone as it’s quite accessible to me and I think it’s simple to use. The next thing to do is research its use properly, and figure out what exactly I’m going to record with it for my demonstration video.

REFERENCES:

Audio Dept (2018) home page Available at: https://audiodept.co.uk/ (Accessed: 21st November 2017)

Core Sound (2017) Introduction: TetraMic Single Point Stereo & Surround Sound Microphone Available at: http://www.core-sound.com/TetraMic/1.php (Accessed: 21st November 2017)

Facebook 360 (2018) Facebook 360 Workstation Available at: https://facebook360.fb.com/spatial-workstation/ (Accessed: 1st December 2017)

Leese, M. (unknown date) Spherical Harmonic Components Available at: http://members.tripod.com/martin_leese/Ambisonic/Harmonic.html (Accessed: 21st November 2017)

Leese, M. (2005) Ambisonic file formats Available at: http://www.ambisonic.net/fileformats.html (Accessed: 21st November 2017)

Nachbar, C., Zotter, F., Deleflie, E. and Sontacchi, A. (2011) AMBIX - A SUGGESTED AMBISONICS FORMAT Available at: https://iem.kug.ac.at/fileadmin/media/iem/projects/2011/ambisonics11_nachbar_zotter_sontacchi_deleflie.pdf (Accessed: 21st November 2017)

Oculus (unknown) Oculus Features Available at: https://developer.oculus.com/documentation/audiosdk/latest/concepts/audiosdk-features/#audiosdk-features-supported (Accessed: 21st November 2017)

Sennheiser (2018) Ambeo VR Mic Available at: https://en-uk.sennheiser.com/microphone-3d-audio-ambeo-vr-mic (Accessed: 21st November 2017)

Soundfield (2018) SoundField SPS200 Software Controlled Microphone Available at: SoundField SPS200 Software Controlled Microphone (Accessed: 21st November 2017)

Zoom (2018) Zoom H2n Available at: https://www.zoom-na.com/products/field-video-recording/field-recording/zoom-h2n-handy-recorder (Accessed: 21st November 2017)

Video

Ambisonic recording example

REFERENCE:

resonate (2017) Sennheiser AMBEO VR recording - Coldplay - Speed Of Sound (Quartet) Available at: https://www.youtube.com/watch?v=1GR15BdheLM (Accessed: 20th November 2017)

Text

15/11/17: Research

TONEBENDERS 060 – VR WITH GORDON MCGLADDERY AND CARLYE NYTE

HRTF (head-related transfer function): how your ears filter and process audio in the real world, and how you use that information to work out where sounds are coming from.
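
One of the cues an HRTF captures is the interaural time difference (ITD): sound from one side reaches the far ear slightly later. As a rough illustration (not something covered in the podcast), Woodworth's classic spherical-head approximation, ITD ≈ (r / c)(θ + sin θ), can be sketched as below. The head radius and speed of sound are assumed typical values, and a real HRTF also includes level and spectral (filtering) cues that this ignores:

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, air at roughly 20 degrees C (assumed)
HEAD_RADIUS = 0.0875    # m, a commonly used average head radius (assumed)


def woodworth_itd(azimuth_deg, head_radius=HEAD_RADIUS, c=SPEED_OF_SOUND):
    """Interaural time difference in seconds from Woodworth's spherical-head formula.

    Intended for azimuths from 0 (straight ahead) to 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return (head_radius / c) * (theta + math.sin(theta))


# A source directly to one side arrives roughly 656 microseconds later at the far ear.
print(round(woodworth_itd(90) * 1e6))
```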

Ambisonics: 1st order, 4 channels. It’s a format that’s been around since the 1970s, using four mic capsules set up in a way that allows you to rotate the direction that the mic array hears in 360 degrees. It’s only since VR and 360 video have been around that it’s started to come into play as a delivery format. You don’t have to work entirely in ambisonics from capture to delivery; you can work with only mono sources.

The Facebook 360 Workstation: evolved from Two Big Ears, it gives sound designers a whole load of tools to take mono, stereo and ambisonic tracks and create an 8-channel output, from which you can encode a 4-channel ambisonics mix. It does all the HRTF processing inside the plugins. There is a 360 panner on each track to work with mono, stereo and ambisonic sounds, with sliders to control height and tilt. Tracks are routed through a control channel, which creates the 8-channel mix that you send to a standalone encoder.

Differences between mixing a linear (2D, 5.1, narrative) mix and mixing for VR: you have to deal with HRTF, and be able to rotate the sound sphere in real time based on the observer’s head movement. The mix is always heard through headphones, not speakers. You lose control over the mix, as it depends on user input.
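
Real-time rotation is cheap for first-order ambisonics: turning the listener's head left or right only mixes the X and Y channels, leaving W and Z untouched. A minimal sketch of yaw-only rotation, assuming ambiX channel order (W, Y, Z, X) and a hypothetical head tracker that reports yaw in degrees; real players also handle pitch and roll and then render binaurally with an HRTF:

```python
import math


def rotate_yaw(w, y, z, x, yaw_deg):
    """Rotate a first-order ambiX frame (W, Y, Z, X) about the vertical axis.

    A source at azimuth a ends up at azimuth a + yaw_deg (anticlockwise positive).
    W (omni) and Z (up/down) are unchanged by a rotation about the vertical axis.
    """
    t = math.radians(yaw_deg)
    x_rot = x * math.cos(t) - y * math.sin(t)
    y_rot = x * math.sin(t) + y * math.cos(t)
    return (w, y_rot, z, x_rot)


def compensate_head_turn(frame, head_yaw_deg):
    """When the listener turns left by head_yaw_deg, turn the scene right by the same amount."""
    return rotate_yaw(*frame, -head_yaw_deg)
```

For example, a source straight ahead, `(1.0, 0.0, 0.0, 1.0)`, ends up with Y close to -1 (hard right) after `compensate_head_turn(frame, 90.0)`, which is where it should be once the listener has turned 90 degrees to the left.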

Event/conference with speakers: they took four ambient recordings placed around the room, and a mono mix of the event sound. This raises the question: when someone at a talk speaks from the back of the room, should that source be panned from the speakers that their voice would come from, or from the back of the room?

It’s useful to map sound to the location of the person in the room, so the user can turn their head to look at them. Creative use rather than realistic.

FB360 has convincing binaural rendering that doesn’t just abandon one ear; it has smooth panning, so it feels more like sound is going around you. Using a binaural mic isn’t always the way, or the most important thing; it’s about understanding how to mix it.

VR problems: there is no clear dialogue/backgrounds/foley/music department hierarchy in bigger sound design projects, and you can’t control the listener’s focus. You have to design differently, as sounds may become the main focus where they wouldn’t have been before. It is more time-consuming to break things up and pan them perfectly. In VR, things come from single point-source emitters, but in real life sound comes from wider, bigger sources; adding a soundscape made it seem fuller. You also have to take into account the final delivery method, as platforms have subtle differences, e.g. Oculus not having the room-scale tracking built in that the Vive does.

Ultimately, designing sound for VR is finding a balance between user expectation and reality.

This has given me a better understanding of the post-production process and how to approach the edit. I will now look at the Facebook 360 Tools as a method of post-production.

REFERENCE:

Coronado, R. (2017) 060 - VR WITH GORDON MCGLADDERY AND CARLYE NYTE. [Podcast] July 14th. Available at: http://tonebenderspodcast.com/060-vr-with-gordon-mcgladdery-and-carlye-nyte/ (Accessed: 15th November 2017)

Text

15/11/17: Primary Research, Laurence Chater

On 23/10/17 I sent an email to Laurence Chater, who works in industry as an Online Video Producer making regular and 360 videos. I asked him some questions to get a starting point for the rest of my research. These are the things that I asked, and his responses:

What kind of VR projects are you/have you been involved in?

Mostly 360 filming (delivering a mono or stereo 360 video to the client) but recently have started developing interactive VR apps. Most recently did a VR tour of a Tattoo studio.

What are the most popular and successful applications for VR?

Event recording/streaming in 360 is popular. Advertisers were big into creating branded VR experiences in 2016/early 2017, although this seems to be dropping off now. There’s also a huge gaming market now for CG VR games on the Vive, Oculus Rift and PSVR headsets. I would say this is probably the biggest application of VR right now. Check out this industry report for more: https://greenlightinsights.com/industry-analysis/2017-virtual-reality-industry-report/

What do clients normally expect/ask for in terms of audio for VR?

Most of my clients know nothing about audio, so I tend to push them towards having ambisonics (360 sound) in the final delivery. However, it tends to be expensive to produce, so lots of them don’t go in for it and just end up with a mono sound mix/music.

What techniques are there for recording? Mics and equipment etc.

You’d usually record separate sound from all actors/sources as you usually would on set, and you’d also take ‘spatial’ sound using a multi-directional or binaural mic like this one: https://en-uk.sennheiser.com/microphone-3d-audio-ambeo-vr-mic

What's the process like for editing?

Video edit process looks roughly like this: http://speedvr.co.uk/wp-content/uploads/2017/07/19143032_1698527400176842_6406300080156654019_o.jpg Audio editing: http://speedvr.co.uk/vr-audio/spatial-audio-workflow-for-360-video/

After this research, combined with my own knowledge of sound recording, I feel like I have a better understanding of how audio is recorded for 360. I want to speak to more people about 360 video production, both generally and specifically to sound designers working in 360. Then I want to figure out the techniques for editing, as this is still a grey area.

This will help me plan how I’m going to make my own 360 video with ambisonic audio!

REFERENCE:

Chater, Laurence (2017) Email to the author, 15th November 2017

Text

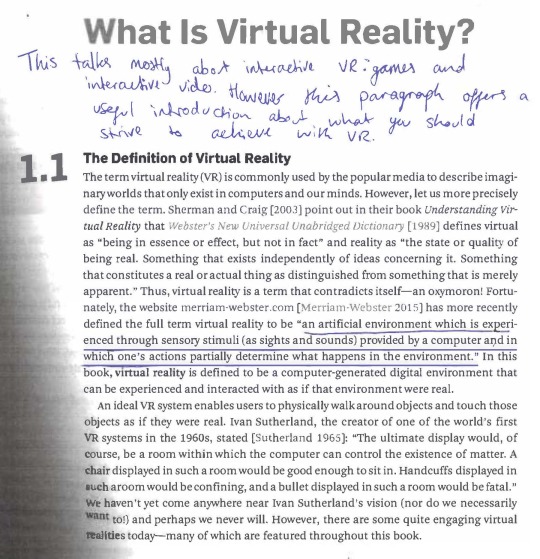

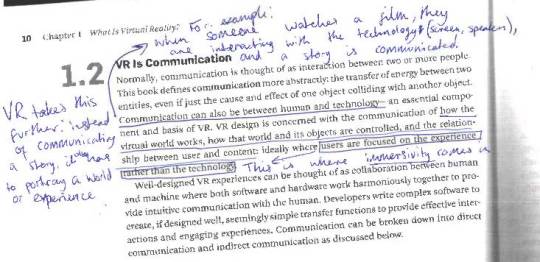

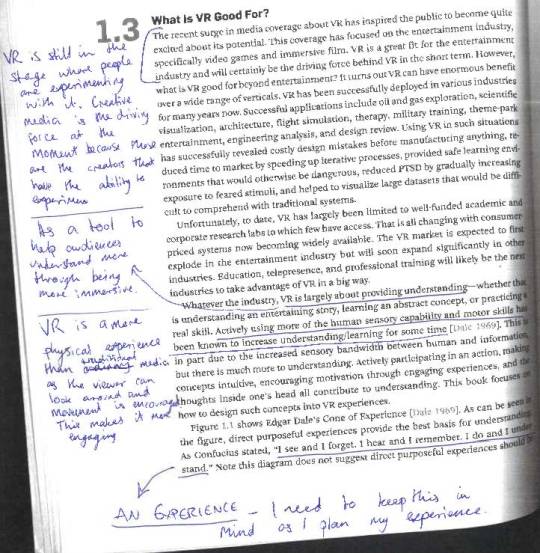

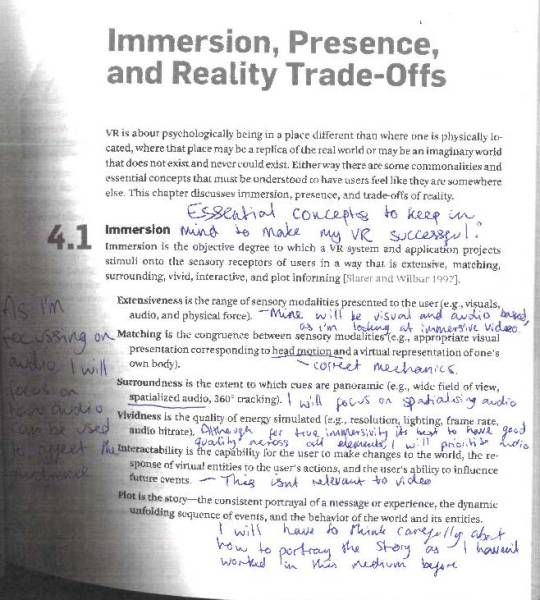

27/10/17 Research: The VR Book

The VR Book: Human-Centered Design for Virtual Reality by Jason Jerald, Ph.D.

This book was an excellent source of information about Virtual Reality production. It goes through the fundamental theories that need to be considered to make effective virtual reality content, and there were some sections on audio.

Story: Virtual reality is a tool for storytelling, but also a way of portraying an experience. I need to think about what I make in terms of how it will work as an immersive experience that tells a story. As I’m focusing on audio, this means carefully planning what I capture and include in the final edit. This should be an interesting challenge as I haven’t worked in VR before.

Immersivity:

The number of senses included in the experience, in my case 2 - visuals and audio.

Surroundness in audio is how spatialised and panoramic the audio is; the more it surrounds you, the more engaging it is.

Vividness is the technical quality of the experience. As it’s a digital medium, compression is essential, so it’s important to keep quality as high as possible, with minimal compression, for a more life-like experience.
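
To give a sense of why compression is unavoidable, here is the arithmetic for uncompressed first-order ambisonic audio on its own, before any 360 video is added. A small sketch; the 4-channel, 48 kHz, 24-bit settings are assumed typical recording settings, not figures from the book:

```python
def pcm_bitrate_kbps(channels: int, sample_rate_hz: int, bit_depth: int) -> float:
    """Uncompressed PCM bitrate in kilobits per second."""
    return channels * sample_rate_hz * bit_depth / 1000


def megabytes_per_minute(kbps: float) -> float:
    """Convert a bitrate in kbit/s to megabytes of data per minute."""
    return kbps * 1000 * 60 / 8 / 1_000_000


# Assumed settings: first-order ambisonics (4 channels) at 48 kHz / 24-bit.
raw = pcm_bitrate_kbps(4, 48_000, 24)
print(raw)                                  # 4608.0
print(round(megabytes_per_minute(raw), 2))  # 34.56
```

That is over 4.6 Mbit/s of audio alone, which has to be streamed alongside the 360 video, so some loss of quality to compression is hard to avoid.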

REFERENCE:

Jerald, Jason (2016) The VR Book: Human-Centered Design for Virtual Reality, Illinois: Association for Computing Machinery and Morgan and Claypool

Video

25/10/17:

This video really helped me to wrap my head around binaural audio. It demonstrates how effective binaural/directional audio is in making an experience more immersive. This video obviously isn’t a 360 video, but you can see how the effect (or similar) would complement a 360 video.

The difference with 360 video is that the direction the viewer is looking in is variable, so a single fixed binaural track would be extremely disorientating, as it wouldn’t change when the viewer changed their point of view. This means that the full 360º of audio needs to be captured and then manipulated so that it matches the visuals.

Incidentally, I also found it extremely relaxing and have since started listening to binaural recordings of cities and environments in the background whilst I work.

REFERENCE:

The Verge (2015) Hear New York City in 3d Audio Available at: https://www.youtube.com/watch?v=Yd5i7TlpzCk (Accessed: 25th October 2017)

Video

Test live stream with a 360 camera by Holoportal at Ravensbourne. The audio is a mono track recorded in camera.

REFERENCE:

DoubleMe, Inc. (2017) Mixed Reality Experience Playground 1 Available at: https://www.youtube.com/watch?v=ob6aLFaD0Hc (Accessed: 23rd October 2017)

Link

REFERENCE:

Inglis, S. (2017) Steve Pardo: Creating Rock Band VR Available at: https://www.soundonsound.com/techniques/rock-band-vr (Accessed: 22nd October 2017)
