#like WHO cares it's just a poll and the opinions of some randos on the internet.

Explore tagged Tumblr posts

Text

the evil dead franchise is not good you're delusional. get well soon.

#like WHO cares it's just a poll and the opinions of some randos on the internet.#but well I CARE!!!!! SO MUCH!!!!!!#like evil dead 2 is NOT the comedy horror masterpiece people pretend it is it's men thinking they're so smart and funny#and jerking themselves off in the writer's room and fine people can find merit in it WHATEVER#but do not act like army of darkness is not OBJECTIVELY terrible. worse than the worst freddy worse than the worst scream worse than the#worst final destination etc etc#it's BAD. and it's misogynistic as shit#and i saw a post like oh i hate that they made ash williams a male chauvinist asshole he wouldn't fucking say that#he kind of would. lol. you just don't care because you'd rather babygirlify some man with blood on his face than admit#a piece of work fucking hates women.#whatever 😐

4 notes

·

View notes

Text

I'm actually genuinely so sorry that besttship poll got hate for reblogging a post about why Cecilos is great. Shipping is supposed to be fun.

Yeah, sometimes ships mean a lot to people especially if they're canon. Especially if they're queer. Especially if they represent groups who don't usually see a lot of themselves. But it's still just fiction. It's supposed to be relaxing and fun.

I've nothing bad to say about Lumity. I don't know a lot about it, but it's new-ish, it's by a popular company. Those of it's intended demographic have likely never seen anything like it before. If you're a wlw especially, it probably means more to you.

But that doesn't make the impact of Cecilos smaller? It doesn't make any good points about it untrue? Who cares if the poll maker reblogged those good points?

The poll maker showing an opinion did fair less damage to the image of Lumity than its fans did by reacting badly enough to send hate at the idea of some internet rando supporting good points about another ship.

Side note, y'all need to not give Disney so much credit or importance especially about stuff like queer rep.

7 notes

·

View notes

Text

"Hey Jelliclebrawl, you're being really cavalier and showing a lot of favoritism on this poll. :/"

Couple reasons for that.

I have in fact not shown favoritism, because thus far I have implied that I want Tugger to win. This is not true! I'm expecting that likely either he and/or Misto will win, and I'm okay with that because I like them, but I am also nerfing them as much as I fairly can so that at least they have to work for it. However, I actually want Etcetera to win, because she's my baby. I just don't think she stands a chance against, you know, everyone else. Maybe against Pouncival. I think she could take him.

On a less jokey level, this is my fourth tournament (and will be the third that has started voting once it does). In the first I was accused of favoritism because I said in an offmic conversation that I would like the two mascots of the bracket to make it to the finals; in the second, I ended up going off on one of my voters because my determination to remain impartial meant that I couldn't just say "I do not actually like this option and here is why" to the person who kept pushing me regarding an offhand and extremely neutral remark I made. So, I'm trying something different, and seeing what happens if I'm just really blatant about which options I'm backing. Especially since my choice is likely to change at the drop of a hat, especially with the groupies and young toms, because I really do love every single character and would genuinely love any of them to win. But especially Etcetera, because she's my baby.

In the two weeks before I changed up this tournament, I got exactly two votes. One of them was from my best friend submitting John Partridge as Tugger because they knew I really wanted to run him but had already selected the two autoentries and left him up to chance. It was really discouraging but in the interim while I watched that number stay at two I decided to just run a bracket about the 1999 proshot, because I had all those gifs anyway so why not? And since this is effectively a tournament where all of the options are my own submissions, I realized I can just do whatever I want to. Would I have really liked to do my original idea instead? Absolutely, and maybe if this tournament builds enough of a follower base I can run it (and my original original idea) in future. But in the meantime... I make the rules babyyyy. If you wanted impartiality you should have submitted to my tournament when you had the chance.

I want to be able to talk about my opinions and headcanons and the stuff I'm putting into my fanfictions and the little background things I've spotted and having to remain impartial gets in the way of that. Having to do it for the Discworld poll is hard enough; doing it for the thing I am actively hyperfixating on would be torture. I want to be able to chat with you guys in the comments. I want to have fun engaging with fellow fans. I can't do any of those things if I have to sit on my hands and pretend I don't care who wins or loses and don't have any favorites while I'm in character. I'm still in character here, just this time the character I'm inhabiting is a guy with 27 cats and some dogs and I really want you to have opinions about them with me while you decide amongst yourselves which ones you like best.

And, because it bears saying: regardless of my own impartiality, vote your own heart. If something I say makes you decide that you like that option, then by all means! But I am one man and I have one vote (well, two if I feel like going to the trouble of logging into the old account), and all of my propaganda should carry the same weight as any other rando who propagandas about the polls.

#nobody has actually said anything i just want to be upfront about what i'm about#impartiality on the part of the pollrunner really is important to me and in a way i am still maintaining it on some level#i think if i had one clear favorite maybe i would be making more of an attempt#but the more i thought about it the more i realized my opinions were all based on who i would be sad to see get voted out and like#all of them#honestly#i love all of them!#they are so fun for me!#like if pressed i'd say that i'd like grizabella not to win but that's only because she already won the ball#so someone else should get a chance to win the brawl!#but even that is more like... so what if she does win?#she's a really good character and mrs. paige put her whole p-#no i shan't say it#not because it's inappropriate it's just a really low hanging fruit of a pun#anyway even with the cat that i least want to win it's still a case of 'this would still be a satisfying conclusion'#but anyway the point is#impartiality is very important to me and so if i'm going to be partial and show blatant favoritism#i'd at least like us all to be on the same page about the what my favoritism manifests itself as and why i'm allowing it

1 note

·

View note

Text

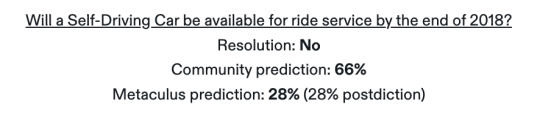

more on metaculus' badness

@dubreus replied to your post “IDK who needs to hear this, but Metaculus is...”:

yes, but because the rewards are fairly immaterial, it selects for a community who wants to forecast for its own sake. the randos on metaculus are not your usual randos

I don't get what you mean by "because the rewards are fairly immaterial."

Isn't that also true of twitter polls? Yet they attract randos.

It wouldn't be true of a real prediction market, but if anything, I'd expect fewer "randos" on account of it.

That aside, I don't find this kind of defense reassuring w/r/t the trustworthiness of Metaculus.

A lot of my beef with Metaculus is, in fact, precisely this -- that if you ask "why would I trust it?", the answer is some contingent fact about the demographics of the userbase at some point in time, rather than any structural feature of the platform itself.

Making good forecasts is hard. If you want to say you can make good forecasts, you should be able to tell me what mechanism you're using to make the forecasts good. You should have a hypothesis: "if I do X, I'll get good forecasts," and then when I ask, you point to how you're doing X.

Real prediction markets have such a hypothesis. Their X mechanism may have flaws, but it's perfectly clear what it is and why someone might expect it to help.

But what is Metaculus' hypothesis? Its "X"? Based on what the platform actually does as a piece of software, one might guess:

"We will put polls about the future on the internet. The polls are opt-in and anyone can vote on whichever ones they feel like at any time. This will produce good forecasts."

But this is obvious nonsense -- consider the case of twitter polls -- so that can't be it.

We can then observe that "the randos on metaculus are not your usual randos," which gets us something like

"We will put polls about the future on the internet. The polls are opt-in and anyone can vote on whichever ones they feel like at any time. We will advertise these polls to the kind of people we think are smart. This will produce good forecasts."

But this still isn't a trustworthy mechanism! Even if the users on Metaculus right now happen to be smart on average, or good at forecasting on average, that's a historical accident -- there is no feature of the Metaculus platform that guarantees this, or even makes it more likely.

I am asked to take it on faith that the savviness of the userbase has not gotten worse this year than it was last year -- or that the users voting on some particular question are about as savvy as those voting on a typical question. If one of these things weren't true, I would have no way to tell until after I've used the forecast and gotten burned.

Among other things, this means Metaculus is exactly the kind of thing that the original formulation of Goodhart's Law was about:

Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.

The "good demographics" of Metaculus are reliable only so long as no one cares too much about the answers. Once the answers start to have an effect on anything, there is an incentive to vote so as to steer that effect in a desired direction.

And Metaculus has no immune system against this. If a bunch of newbies come in and vote on one question in a politically motivated manner, this is the system working as intended. The "Metaculus community" is just whoever shows up. The people who, in actual fact, show up -- savvy or not, politically motivated or not -- are the Metaculus community, by definition.

----

The opt-in nature of the individual polls exacerbates this problem.

Things would be very different if being a "Metaculus forecaster" were a sort of membership with conditions, where you had to vote on every question to stay in the group. That would impose a kind of coherence across questions: if many users feel unqualified to voice opinions on a particular question, that will show up as an unusually broad forecast, and the fact that it is broader than the others will mean the thing it appears to mean. You would be comparing fractions with the same denominator.

But as it is, people can vote on just whatever. Sometimes 1000 people have voted on one question, and only 100 on a very similar question (or a question conditional on the other). These are not two opinions voiced by one "community," and nothing forces them to cohere. So it is very hard to generalize from facts about one question (or the aggregate over questions) to some other question. Knowing that "Metaculus" got one thing right, or got things right on average, tells me little about any other one of its opinions, because "Metaculus" is a different person every time they say something.

----

One particular form of this pathology involves changes in the forecasts over time.

Not only is there a different "community" for every question, there is a different "community" for each question at each point in time.

Changes in a forecast can reflect the same forecasters registering new predictions, but they can also reflect new people coming in and registering first-time predictions with different statistics.

It's tempting to look at those graphs and think "Metaculus changed its mind, it must have done so on the basis of new evidence" (and I see people do this all the time). But it also changes the graph if different people respond to the same evidence.

So these moves have no useful interpretation whatsoever: all they tell you is that that there was a change in the average over whoever showed up. If the change happened because the question got on Hacker News and that drove first-time votes from a bunch of relatively low-information users . . . then, again, this is the intended behavior of the system. The Metaculus "community" is whoever showed up, at each place and time, and never anything besides.

----

@dubreus also said:

i also think you're underselling the part where there's a commitment to *checking* whether the question ended up happening, a 6-year 1,000 question track record of doing so, and the part where even the top ranked forecasters have similar accuracy to the "dumb community median of randos"

Again, the "anything goes" methodology really limits my willingness to draw any conclusions from this.

Metaculus does have a page where you can review how well it did, in aggregate, over . . . the questions it decided to ask itself.

���But "the questions Metaculus decides to ask itself" is not a natural category, and the questions are chosen so whimsically and unsystematically that an average over them is not very informative.

Compare this to, say, 538. When people talk about 538's Senate forecasts and ask how good they are, this discussion is grounded in the fact that, if 538 predicts the Senate in a given year, they predict the whole Senate. It's not like Nate Silver can just pick his favorite subset of the races happening in 2020, and only predict on those, and then pick some other subset in 2022.

This means that aggregates over 538 Senate forecasts are aggregates over the category of Senate races, which is a natural category. Whereas if Nate Silver got to pick which states to predict, then you'd have aggregates over "Senate races conditional on Nate Silver deciding he wanted to predict them."

In some sense, this is an appropriate category to use when you want to evaluate a current 538 prediction, since it is (by hypothesis) a case where they decided to predict . . . except that you have to take on faith that older 538 selections are representative of newer ones.

Suppose 538 used to only select states where they thought their model would work well (whatever that means). Then, later, they relax this rule and predict on some states where they don't expect this. The calibration properties (etc) of their earlier forecasts would not transfer to the new round of predictions -- but nothing in their "numerical track record" would give you a hint that this is true.

I'm not going to say this kind of error is unquantifiable, but it is not something people typically quantify (certainly Metaculus doesn't). Usually people avoid the issue entirely by using transparent and systematic selection rules. (But Metaculus doesn't.)

----

In concrete terms, well, people are talking about Metaculus AI forecasts a lot. So I ask, "how good is their track record on AI?"

I go onto that track record page, and . . . hmm, yes, I can drill down to specific categories, that's good . . . so I put in a few AI-related ones and see something like:

Okay, cool! So . . . what does that Brier score actually mean? Like, what is this an average over?

One thing that quickly becomes clear is that these categories are noisy. I'm largely getting stuff about the future of AI, but there are some weird ones mixed in:

Will Trump finish the year without mentioning the phrase "Artificial Intelligence"? [Short-Fuse] Will AbstractSpyTreeBot win the Darwin Game on Lesswrong?

Okay, fine, I add some more filters to weed those out. Now what am I averaging over?

Well, something akin to "Nate Silver's favorite 17 states" . . . except here it's more like "Nate Silver's favorite 1-2 states, and also 538 only predicted the U.S. Senate once in a single year, and they also predicted the House but only once in some other year, and this year they're predicting the Israeli parliament."

Here's a question that caught my interest:

The "community" kinda thought this would happen in 2018, but it didn't. Did they think it was going to happen in 2019, then? In 2020 (I think that's when it really happened)? We don't know, because they only asked themselves this question once, in 2016-17.

I have no way to assess Metaculus' quality systematically on any topic area, because it never asks itself questions in a systematic way. Someone could look at the above and think, "maybe Metaculus is overly bullish on self-driving cars." Are they? I have no idea, because they just ask themselves whichever random self-driving car questions they feel like, with no natural group like "Senate races" over which it would be informative to take an average.

This issue, together with the fluid nature of "the community," mean I don't trust any summary statistic about Metaculus to tell me much of anything.

There's no glue holding this together, giving one of its parts predictive value for the properties of the others. It's just whatever. Whatever questions people felt like putting on the site, whatever people felt like voting on them at any point in time.

Computing a Brier score over "just whatever" does not give me information about some other "just whatever," both of them totally whimsical and arbitrary. These statistical tools were made for doing statistics. This is not statistics.

51 notes

·

View notes

Note

Fox News and CNN and Twitter don't get to decide who the President is. YOU do. Don't let them take your power away.

I’m not entirely sure how to respond to this because I don’t know what prompted it. Is this about me not voting? Pal.... even my own dad couldnt convince me. Some rando on the internet surely ain’t gonna convince me. Or is this some sort of attempt to rally me behind trump? Okay, then we come back to.... I didn’t vote. You’re looking at the wrong person if you’re expecting me to get riled up about the election.

Look, republicans definitely have reason to be suspicious right now. There’s a lot that doesn’t add up. There’s a lot of “typos” that have happened conveniently in Biden’s favor. They’ve been prematurely calling states in Biden’s favor. All this talk about stopping counting and boarding up windows at polling places and stuff.... it’s definitely reason to look into fraud, in my opinion. But republicans (specifically the die hard trumpers) are no better than the left in a lot of ways. I still keep seeing the registration vs voter numbers floating around on twitter, even though it’s already been disproven. Most of those registration numbers are from previous elections. Those are also states that have same day registration. I also saw trumpers sharing a video of a guy unloading something by a polling place, claiming they were tampering with ballots... turned out, it was just camera equipment. But trumpers jumped on that video and assumed the worst. They want the worst. They want this to be fraud. They care more about trump winning than they do about honesty or integrity.

I’m a political junkie. Analyzing trends and human interaction and how people respond to certain events is my thing. I live for it. Back when I was in high school, I knew I wasn’t going to college. But if someone somehow had the capability of forcing me to go, there were three subjects I would have considered, and political science was one of them (the other two were criminal justice and history). I love this stuff. And why this election is going this way currently is a whole rant I could do, but I’ll spare you. Regardless, Congress is more important than the presidency anyways. And there are way worse and/or more important things in life than who wins this election. There are far greater evils people face every day. This ain’t something to get work up over.

I’m sorry this kind of went off on a tangent, but I deeply annoyed right now. By everyone. Just sit tight. Vote your conscience. Approach all information with a critical mind. Don’t cling to false information without verification just because it’s convenient for you. And, if your guy loses, accept it like an adult and understand that things could be worse.

I’m not sure if you’re a Biden or trump supporter or third party. I guess I assumed trump just based on the attitude of the message and the implication behind it. That, plus I have mostly trump supporters following me. Regardless, all this information applies.

4 notes

·

View notes