#azure virtual network diagram

How to Build a Cloud Computing Infrastructure

Cloud infrastructure consists of the hardware and software components, such as servers, storage, networking, and virtualization software, that are needed to support the computing requirements of a cloud computing model. It allows businesses to scale resources up or down as needed, which keeps costs efficient and operations flexible.

Choose a Cloud Service Provider (CSP)

Popular options include Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and IBM Cloud.

Evaluate factors such as cost, scalability, security, and compliance to select the best CSP for your needs.

Plan Your Architecture

Define Objectives

Identify your business goals and technical requirements.

Design Architecture

Create a detailed architecture diagram that includes network design, data flow, and component interactions.

Set Up Accounts and Permissions

Create accounts with your chosen CSP.

Configure Identity and Access Management (IAM) to ensure secure and controlled access to resources.

Provision Resources

Compute

Storage

Choose appropriate storage solutions like block storage, object storage, or file storage.

Networking

Configure Virtual Private Clouds (VPCs), subnets, and networking rules.
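As a rough illustration of the subnet planning this step involves, the sketch below uses Python's standard ipaddress module to carve a VPC address range into equally sized subnets. The 10.0.0.0/16 range, the /24 subnet size, and the tier names are assumptions made for the example, not values taken from any provider.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, new_prefix: int, names: list[str]) -> dict[str, ipaddress.IPv4Network]:
    """Assign one equally sized subnet of the VPC range to each name, in order."""
    vpc = ipaddress.ip_network(vpc_cidr)
    blocks = vpc.subnets(new_prefix=new_prefix)  # generator of candidate subnets
    plan = {}
    for name in names:
        try:
            plan[name] = next(blocks)
        except StopIteration:
            raise ValueError(f"{vpc_cidr} has no room left for subnet '{name}'") from None
    return plan


# Hypothetical three-tier layout inside an assumed 10.0.0.0/16 VPC.
plan = plan_subnets("10.0.0.0/16", 24, ["public", "app", "data"])
for name, net in plan.items():
    print(f"{name}: {net} ({net.num_addresses} addresses)")
```

Providers typically reserve a few addresses in every subnet, so the usable count is somewhat lower than the number printed here.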

Deploy Services

Databases

Deploy and configure databases (e.g., SQL, NoSQL) based on your application needs.

Application Services

Deploy application services, serverless functions, and microservices architecture as required.

Implement Security Measures

Network Security

Set up firewalls, VPNs, and security groups.

Data Security

Implement encryption for data at rest and in transit.

Compliance

Ensure your setup adheres to industry regulations and standards.

Monitor and Manage

Use cloud monitoring tools to keep track of performance, availability, and security.

Implement logging and alerting to proactively manage potential issues.
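One common way to keep alerts from firing on a single noisy reading is to require several consecutive breaches of a threshold. The sketch below shows that idea in plain Python; the class name, the 80% threshold, and the sample readings are all hypothetical, and a real setup would use the alerting rules of the chosen monitoring tool instead.

```python
from collections import deque


class ThresholdAlert:
    """Fire only after `breaches_required` consecutive readings exceed the threshold."""

    def __init__(self, threshold: float, breaches_required: int = 3):
        self.threshold = threshold
        # Sliding window holding the breach status of the most recent readings.
        self.recent = deque(maxlen=breaches_required)

    def observe(self, value: float) -> bool:
        self.recent.append(value > self.threshold)
        return len(self.recent) == self.recent.maxlen and all(self.recent)


# Hypothetical CPU readings in percent.
alert = ThresholdAlert(threshold=80.0, breaches_required=3)
readings = [70, 85, 90, 95, 60]
fired = [alert.observe(r) for r in readings]
print(fired)  # [False, False, False, True, False]
```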

Optimize and Scale

Regularly review resource usage and optimize for cost and performance.

Use auto-scaling features to handle varying workloads efficiently.
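To make the auto-scaling idea concrete, here is a minimal sketch of a proportional scaling rule: the instance count is scaled by the ratio of the observed metric to its target, then clamped to a minimum and maximum. The function name, the default limits, and the CPU figures are assumptions for illustration; each provider's auto-scaling feature has its own policies and settings.

```python
import math


def desired_instances(current: int, metric: float, target: float,
                      minimum: int = 1, maximum: int = 10) -> int:
    """Scale the instance count in proportion to metric/target, within limits."""
    if target <= 0:
        raise ValueError("target must be positive")
    raw = math.ceil(current * metric / target)
    return max(minimum, min(maximum, raw))


# 4 instances at 90% average CPU with a 60% target: scale out.
print(desired_instances(4, 90.0, 60.0))  # 6
# 4 instances at 20% average CPU with a 60% target: scale in.
print(desired_instances(4, 20.0, 60.0))  # 2
# The maximum caps the result even under heavy load.
print(desired_instances(8, 95.0, 50.0))  # 10
```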

Benefits of Cloud Infra Setup

Scalability

Easily scale your resources up or down based on your business needs. This means you can handle peak loads efficiently without over-investing in hardware.

Cost Efficiency

Pay only for the resources you use. Cloud infrastructure eliminates the need for large upfront capital expenditures on hardware and reduces ongoing maintenance costs.

Flexibility

Security

Cloud providers invest heavily in security measures to protect your data. Features like encryption, identity and access management, and regular security updates help keep your information safe.

Disaster Recovery

Implement robust disaster recovery solutions without the need for a secondary data center. Cloud-based backup and recovery solutions ensure business continuity.

How We Can Help

Our team specializes in setting up cloud infrastructure tailored to your business needs. Whether you’re looking to migrate your existing applications to the cloud or build a new cloud-native application, we provide comprehensive services, including:

Cloud Strategy and Consulting: Assess your current infrastructure and develop a cloud migration strategy.

Migration Services: Smoothly transition your applications and data to the cloud with minimal downtime.

Cloud Management: Monitor, manage, and optimize your cloud resources to ensure peak performance and cost-efficiency.

Security and Compliance: Implement security best practices and ensure compliance with industry standards.

Conclusion

Setting up cloud infrastructure is a critical step towards leveraging the full potential of cloud computing. By following a structured approach, you can build a secure, scalable, and efficient cloud environment that meets your business needs.

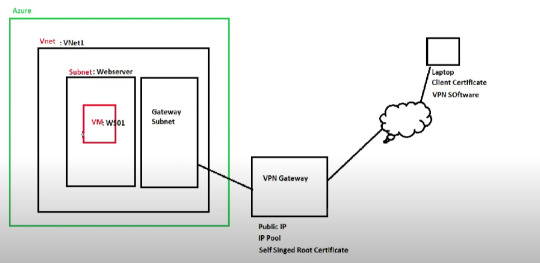

Point-to-Site Connections Using Azure VPN Gateways

What is a Point-to-Site connection?

A Point-to-Site (P2S) VPN gateway connection lets you create a secure connection to your virtual network from an individual client computer in a remote location, such as your home. P2S VPN is also a useful alternative to a Site-to-Site (S2S) VPN when only a few clients need to connect to a virtual network.

See https://learn.microsoft.com/en-us/azure/vpn-gateway/point-to-site-about for more detail.

What is a VPN gateway?

A VPN gateway is a specific type of virtual network gateway that sends encrypted traffic between an Azure virtual network and an on-premises location, such as a home or a conference venue, over the public internet.

Conceptual diagram of P2S

Steps for the P2S connection.

1. Create a resource group in the Azure portal.

2. Create a virtual network.

3. Create the subnets, including a gateway subnet. The gateway uses IP addresses assigned from this subnet.

4. Once the steps above are complete, create a virtual network gateway.

5. Create a self-signed root certificate and a client certificate. The procedure is described at the link below.

https://learn.microsoft.com/en-us/azure/vpn-gateway/vpn-gateway-certificates-point-to-site

6. Configure the point-to-site connection. This step also defines the client IP address pool, the range from which VPN clients receive their addresses. See https://learn.microsoft.com/en-us/azure/vpn-gateway/vpn-gateway-howto-point-to-site-resource-manager-portal for the procedure.

7. Test the VPN connection. With the configuration finished, log in to the same PC where you generated the certificates. If you are going to use a different PC, first import the root certificate and the client certificate you exported. Then:

Log in to the Azure portal from that machine and go to the VPN gateway configuration page.

On that page, click Point-to-site configuration.

Then click Download VPN client.

8. Unzip the VPN client package and install it according to your PC. A new connection then appears on the Windows VPN settings page. Connect to it, then open a command prompt and run ipconfig to verify that the PC received an IP address from the VPN address pool. Cross-check the connection in the Azure portal as well.
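Two checks from the steps above can be sketched with Python's standard ipaddress module: that the client address pool chosen in step 6 does not overlap the virtual network's address space, and that the address reported by ipconfig in step 8 really comes from that pool. All address ranges and the function names below are made-up values for illustration.

```python
import ipaddress


def pool_conflicts(vnet_cidr: str, pool_cidr: str) -> bool:
    """Return True if the VPN client pool overlaps the virtual network range."""
    return ipaddress.ip_network(vnet_cidr).overlaps(ipaddress.ip_network(pool_cidr))


def assigned_from_pool(client_ip: str, pool_cidr: str) -> bool:
    """Return True if the address seen on the client belongs to the VPN pool."""
    return ipaddress.ip_address(client_ip) in ipaddress.ip_network(pool_cidr)


# Assumed example ranges, not values from the original post.
VNET = "10.1.0.0/16"
POOL = "172.16.201.0/24"

print(pool_conflicts(VNET, POOL))                # False: the pool is safe to use
print(assigned_from_pool("172.16.201.2", POOL))  # True: address came from the pool
print(assigned_from_pool("192.168.1.20", POOL))  # False: this is a local LAN address
```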

Thanks!!!.

Upgrade your skill by Microsoft Azure Certification Training Online

Whether you're interested in gaining hands-on skills in cloud computing or arranging Microsoft Azure Certification Training Online for your business, Finsliq Tech Academy has specially designed its Azure courses to help you achieve your professional goals. Our Microsoft Azure training classes prepare you for the roles of Administrator, Developer, and Architect by implementing Microsoft Azure projects in real-world scenarios.

Finsliq Tech Academy follows a well-planned and structured approach to help you gain complete knowledge of cloud computing. For that reason, it offers three Microsoft Azure training modules based on your experience and interest. With our instructor-led, real-time training, you will master core Microsoft Azure concepts on your schedule and at your pace. Our industry-expert trainers will make sure no stone is left unturned in taking your Azure skills to the next level.

Our Azure training enables you to gain the following Microsoft Azure skills from scratch:

● Basics of Microsoft Azure

● Deploying and configuring Azure infrastructure

● Integrating the on-premises environment with Azure services

● Managing Azure resources with Azure CLI

● Monitoring and managing Azure security on Azure VMs

● Customizing Azure VM networking

● Creating an Azure Storage account

● Overview of Azure Virtual Network, Azure traffic routing, and Virtual Network Peering

● Scaling applications with Azure Load Balancer

● Hosting web applications on the Azure platform

● Working with Azure Storage Queues and Azure Service Bus

● Creating and managing Azure SQL Database

● Working with Azure Monitor and Azure Active Directory

Our Microsoft Azure training classes are available for a variety of cloud roles, a few of which are:

● Azure Developers/Administrators

● Operations Engineers

● Professionals with knowledge of DevOps

● IT professionals with knowledge of cloud basics

● Cloud Architects

The prerequisites for taking this Microsoft Azure training course:

● For AZ-103, there are no prerequisites.

● For AZ-203, knowledge of any programming language is desirable.

● AZ-300 training is technical, so you should have prior knowledge of cloud computing fundamentals and of any programming language.

The job roles offered to professionals certified in the Microsoft Azure cloud platform are:

Azure Developer

Azure Solutions Architect

Azure Cloud Administrator

Azure Consultant

Business Analyst

Azure AI Engineer

Azure Data Engineer

Azure DevOps Engineer

Azure Security Engineer

If you want a career in this digital era, certification with a leading cloud service provider like Azure is a must. Our hands-on Microsoft Azure training imparts all the skills needed to clear the Azure certifications for the Azure Administrator, Azure Developer, and Azure Solutions Architect roles.

The Power of the Clouds Above - by Maurice Finley

The rise of cloud computing has reshaped jobs and businesses over the past decade, yet Forbes reported that over 80% of Americans had no idea what it was, with nearly 30% thinking it had to do with the weather. This blog explores the advantages and disadvantages of cloud computing.

Cloud computing is the delivery of computing services, from servers and storage to software and analytics (and much more), over the Internet (referred to as “the cloud” because the internet was often drawn as a fluffy cloud in network diagrams). Cloud computing has many advantages (as well as reasons for a company to stay away from it) and comes in various forms, all of which I will discuss in this blog post.

Many of today’s cloud computing services fall into three different categories, often called the “cloud computing stack” because each category builds on top of the previous one. The first is infrastructure as a service (IaaS), which refers to renting IT infrastructure. This includes servers and virtual machines, storage, networks, and operating systems. Examples of this kind of service are Amazon EC2, Microsoft Azure, and Google Compute Engine. The second category is platform as a service (PaaS), which refers to services that supply an on-demand environment for developing, testing, deploying, and managing software. This service is especially important because it allows developers to create applications without having to worry about scaling and managing the underlying infrastructure. Heroku, Force.com, and AWS Elastic Beanstalk are all examples of this type of service. Lastly, software as a service (SaaS) refers to the method of delivering software applications over the Internet, on demand and typically on a subscription basis. The cloud providers host and manage the software and handle any maintenance, like software upgrades and security patches. Users of the software applications connect over the Internet. Salesforce, G Suite, and Cisco WebEx are notable examples of this category of cloud computing.

Cloud computing has changed the way many businesses think about their IT resources. To understand the “revolution,” it is important to look at the advantages it gives businesses. Cloud computing saves companies a lot of money because it eliminates the expense of buying software, setting up and running data centers, paying for electricity for power and cooling, and hiring additional IT experts to manage these things. Cloud computing services are typically provided on demand and allow users to provision vast amounts of computing resources in minutes. This gives businesses a lot of flexibility and eliminates the pressure of capacity planning. With the vast majority of IT management responsibilities covered by the cloud provider, IT teams can spend more time on more important responsibilities. The largest and most popular cloud computing services have a worldwide network of data centers equipped with the latest generation of hardware, which allows lower network latency. Cloud providers’ data centers also back up data and support disaster recovery, because they can store mirrored data at multiple sites on their network.

Given these advantages, cloud computing may seem like the logical next step for many businesses today. However, it is believed that only about 7% of enterprises have moved to the cloud. To understand why, it is important to understand the disadvantages of the cloud. Because cloud computing services are internet based, access is fully dependent on the ability to connect to them via the Internet. As with any hardware, the cloud service can fail at any time, which can be detrimental to many businesses. Using a cloud computing service also adds to the risk that a business’s data is vulnerable to attack: the business has to make sure its own side is secure and also trust that the cloud service is secure. Exposure to the internet adds further vulnerability because it makes attacks easier to attempt. There have been many successful attacks on cloud services, with the iCloud hack in 2014 being the most infamous. Lastly, many businesses are not particularly fond of sharing their proprietary information with companies like Amazon and Google.

https://azure.microsoft.com/en-us/overview/what-is-cloud-computing/

https://searchcloudcomputing.techtarget.com/definition/cloud-computing

https://aws.amazon.com/what-is-cloud-computing/

Livescribe desktop send to computer problem

Technical Overview of Media Optimization for Microsoft Teams

Making a video call from a virtual desktop can be tricky. When the call is initiated in the virtual desktop, the user’s microphone and camera send the user’s voice and image to the virtual desktop. VMware Horizon® sends that data compressed, using our real-time audio-video (RTAV) feature. But the RTAV feature still sends a lot of data across the wire, and the virtual desktop has to process the data and send it out over the network to complete the call. At the same time, the virtual desktop is capturing the video feed and sending it back over the network, using the VMware Blast display protocol, to the endpoint so that the end user can see the video feed.

VMware, working closely with Microsoft, supports Media Optimization for Microsoft Teams with Horizon 8 (2006 and later) and Horizon 7 version 7.13. With the supported Horizon Agent and VMware Horizon® Client versions, when a user starts a call inside the virtual desktop, a channel to the local physical device is opened and the call is started there. Horizon Client draws over the Microsoft Teams window in the virtual desktop VM, giving users the impression that they are still in the VM, but the media is actually traveling directly between the local endpoint and the remote peer (as shown in Figure 1). The load disappears from the network, and the processing moves from the data center to the endpoint. Often the end-user experience improves as well because the data has one less hop to make. Using this mechanism, this process avoids using RTAV altogether.

The Media Optimization for Microsoft Teams feature is a boon for organizations using Microsoft Teams for audio and video calls. This document helps you plan for deploying it.

The following diagram describes the flow of data between the various components of the Microsoft Teams optimization feature in Horizon.

Figure 1: Microsoft Teams Optimization Flow

Minimum Supported Horizon Client Versions

Horizon supports audio, video, and screen-sharing offload to the local endpoint on Windows, Mac, and Linux platforms. Below is a list of the minimum supported versions for each platform. For a complete list of features supported on each platform, see the product documentation topic for the version of Horizon that you are using.

● Horizon 7.13 and Horizon 8 (2006 and later): Media Optimization for Microsoft Teams on VDI desktops for Windows clients.

● Horizon 7.13 and Horizon 8.1 (2012 and later): Media Optimization for Microsoft Teams on RDSH remote desktops and Microsoft Teams as a published application for Windows clients.

● Horizon 8.2 (2103 and later): Media Optimization for Microsoft Teams on Mac clients.

● Horizon 8.3 (2106 and later): Media Optimization for Microsoft Teams on Linux clients.

● VMware Horizon® Cloud Service™ on Microsoft Azure: Support of Media Optimization for Microsoft Teams.

Note: For Horizon documentation references, we will be using Horizon 8 (2106) product documentation links throughout this guide.

Installation and Configuration of Media Optimization for Microsoft Teams

VMware supports Media Optimization for Microsoft Teams as part of Horizon 8 (2006 and later) and Horizon 7 version 7.13 and later. The Media Optimization for Microsoft Teams feature is also available with Horizon Cloud on Microsoft Azure, Pod Manifest version 2298.X and later. Microsoft also turned on the service on their side on August 11, 2020. To take advantage of the offload capability, you must use Horizon Client for Windows 2006 or later, Horizon Client for Mac 2103 or later, or Horizon Client for Linux 2106 or later.

The Media Optimization for Microsoft Teams group policy setting must be enabled in the virtual desktop.

Note: The Microsoft Teams web app (browser client) is not supported via the Teams Optimization Pack. Web browser media offload is supported with the Browser Redirection feature. See Configuring Browser Redirection for support details.

This section briefly lists the system requirements for both the client and the virtual desktop when using the Media Optimization for Microsoft Teams feature. For more details, see Configuring Media Optimization for Microsoft Teams (for Horizon 8) or Configuring Media Optimization for Microsoft Teams (for Horizon 7.13).

Note: System requirements for RTAV are different and more substantial than those for Media Optimization for Microsoft Teams. RTAV is used if Media Optimization is not turned on via GPO or if the endpoint does not support Media Optimization (fallback mode). For more information about using Microsoft Teams with RTAV, see the Horizon documentation for the appropriate version: Configuring Microsoft Teams with Real-Time Audio-Video and System Requirements for Real-Time Audio-Video.

Client system (for OSes supported with a specific release, see the appropriate Horizon Client release notes)

● Windows 7 SP1 and 8.1 (supported only with Horizon 7).

Dbschema license key

DbSchema is a diagram-oriented database tool compatible with all relational and many NoSQL databases, such as MySQL, Cassandra, PostgreSQL, MongoDB, Redshift, SQL Server, Azure, Oracle, and Teradata. Using DbSchema, you can design the schema with or without a database, save the design as a project file, deploy the schema on multiple databases, and share the design in a team. Built-in tools let you visually explore the database data, build and execute queries, generate random data, build forms applications or reports, and more. It can be evaluated free for 15 days, and no registration is required. DbSchema is built for large schemas and complex databases.

Build meaningful relations between tables and document the schema. Discover DbSchema layouts, virtual foreign keys, Relational Data Browse, interactive PDF documentation, and many other features.

DbSchema is a design tool for relational databases, with interactive diagrams, HTML documentation, Relational Data Browse and Editor, SQL Editor, Query Builder, Random Data Generator, Schema Compare and Synchronization, teamwork, and more. Powerful yet easy to use, it helps you design, document, and manage databases without having to be a SQL pro. Easily design new tables, generate HTML5 documentation, explore and edit the database data, compare and synchronize the schema over multiple databases, edit and execute SQL, and generate random data.

At first launch, you can choose whether to start a new project from scratch, import existing files, or connect to your local database via your network. Somewhere along the way, you notice the number and diversity of database types it supports, with entries like MySQL, Access, Firebird, Oracle, Teradata, and a lot more. With the help of context menu entries, most of the effort on your part goes into gathering data to import, because the creation options are as easy as can be. With a few mouse clicks and several dedicated windows, you can create tables, views, callouts, and groups of tables, or bring up editors for SQL, relational data, the query builder, or the random data generator.

DbSchema works on all major operating systems, including Windows, Linux, and Mac, and because it is based on Java it can run on a wide variety of configurations and machines. It lives up to expectations and is a powerful alternative if you are considering trying something new or starting from scratch. Practicality is one of its main advantages: the application supports various database types, and the intuitive design gets you up and running quickly.

Architecture cloud computing diagram Architecture asset management digital cloud aws based diagram solution infrastructure software

If you are searching for Cloud computing – SMR, you've come to the right page. We have 9 images about Cloud computing – SMR, such as Cloud Computing Architecture: Front-end & Back-end and Atlassian cloud architecture | Atlassian Cloud | Atlassian Documentation. Here you go:

Azure stack offers hybrid cloud on your terms

Cloud Computing – SMR

www.smrinfo.co.in

Azure Stack Offers Hybrid Cloud On Your Terms | CIO

www.cio.com

azure stack microsoft cloud architecture hybrid paas data computing services service iaas offers terms web windows vs development platform network

Cloud Infrastructure Overview | UPenn ISC

www.isc.upenn.edu

cloud infrastructure overview diagram isc architecture application upenn user case applications use path native

Atlassian Cloud Architecture | Atlassian Cloud | Atlassian Documentation

confluence.atlassian.com

atlassian cloud architecture confluence jira footprint distribution global

What Is Selenium Grid ? | TestingDocs

www.testingdocs.com

selenium grid diagram nodes testingdocs architecture below example

What Is Private Cloud Computing? - InspirationSeek.com

inspirationseek.com

vmware cloud private virtualization computing structure using architecture server infrastructure inspirationseek via management

Cloud Computing Is An Opportunity For Your Business - Sarv Blog

blog.sarv.com

cloud computing services service benefits infrastructure business benefit software data sarv advantages iaas security flexibility storage automatic maintenance meaning need

Cloud Based Reference Architecture, Cloud Based Digital Asset

www.pinterest.com

architecture asset management digital cloud aws based diagram solution infrastructure software

Cloud Computing Architecture: Front-end & Back-end

www.simplilearn.com

cloud computing architecture saas vs premise end front simplilearn based software does understanding jul updated last

What is Cloud Computing? Everything You Need to Know

Cloud computing is often treated as synonymous with the internet. More precisely, it is the on-demand supply of IT resources over the internet with pay-per-use pricing. At the consumer level, it usually refers to photo, video, or document storage services such as iCloud and OneDrive, which can be accessed from anywhere. Cloud computing is in high demand in El Paso and everywhere else in the world. It covers anything that involves providing hosted services over the internet. The ability to save attachments in your email inbox and view them on another computer was one of the first elements of cloud computing, and that is cloud storage in a nutshell.

What is cloud computing?

The supply of various services, like data storage, servers, databases, networking, and software, over the Internet is known as cloud computing. Almost all of the IT companies in El Paso use cloud computing in their day-to-day work. Cloud storage allows them to save files to a remote database and retrieve them whenever they need them. So, to answer the question of what cloud computing is: anything that involves offering hosted services via the internet is referred to as cloud computing.

The hardware and software components needed to establish a cloud computing model are referred to as cloud infrastructure. Utility computing or on-demand computing are other terms for cloud computing. The cloud symbol, which is frequently used to symbolize the Internet in flowcharts and diagrams, inspired the phrase cloud computing.

Not only IT firms but also marketing companies in El Paso make good use of cloud computing.

How does cloud computing work?

Cloud computing services in El Paso allow client devices to access data and cloud applications from faraway physical servers, databases, and computers via the internet.

The front end, which consists of the accessing client device, browser, network, and cloud software applications, and the back end, which consists of databases, servers, and computers, are linked by an internet network connection. The back end acts as a repository, storing data that the front end can access.

A central server controls communication between the front and back ends. Protocols are used by the central server to simplify data sharing. To manage the connectivity between multiple client devices and cloud servers, the central server employs both software and middleware. In most cases, each application or task has its dedicated server.

Types of cloud computing services

Cloud computing can be divided into three types of service delivery or cloud computing categories:

1. IaaS - Infrastructure as a service. A model in which a third-party provider hosts servers, storage, and other virtualized compute resources and makes them available to consumers via the internet. Microsoft Azure, for example.

2. PaaS - Platform as a service. A model in which a third party maintains an application development platform and tools on its infrastructure and makes them available to consumers via the internet. Consider Google App Engine.

3. SaaS - Software as a service. A software distribution approach in which a third-party supplier hosts and distributes applications via the internet to consumers. Salesforce, for example.

Whether for an IT firm, a marketing agency, or personal use, cloud computing services in El Paso serve all of them. Cloud computing is now as essential to our lives as the internet itself.

0 notes

Text

Transform your corporate critical data with Databricks

Systems are working with massive amounts of data in petabytes or even more and it is still growing at an exponential rate. Big data is present everywhere around us and comes in from different sources like social media sites, sales, customer data, transactional data, etc. And I firmly believe, this data holds its value only if we can process it both interactively and faster.

Simply put, Azure Databricks is a managed implementation of Apache Spark on Azure. With fully managed Spark clusters, it is used to process large data workloads and also helps with data engineering, data exploration, and data visualization, including with machine learning.

While working on Databricks, I found the analytics platform to be extremely developer-friendly and flexible, with easy-to-use APIs for languages like Python, R, etc. To explain this a little more: say you have created a data frame in Python. With Azure Databricks, you can load this data into a temporary view and then use Scala, R or SQL with a pointer referring to this temporary view. This allows you to code in multiple languages in the same notebook. This is just one of its cool features.

Evidently, the adoption of Databricks is gaining importance and relevance in a big data world for a couple of reasons. Apart from multiple language support, this service allows us to integrate easily with many Azure services like Blob Storage, Data Lake Store, SQL Database and BI tools like Power BI, Tableau, etc. It is a great collaborative platform letting data professionals share clusters and workspaces, which leads to higher productivity.

Before we start digging into Databricks in Azure, I would like to take a minute to describe how this article series is going to be structured. I intend to cover the following aspects of Databricks in Azure in this series. Please note – this outline may vary here and there when I actually start writing on them.

How to access Azure Blob Storage from Azure Databricks

Processing and exploring data in Azure Databricks

Connecting Azure SQL Databases with Azure Databricks

Load data into Azure SQL Data Warehouse using Azure Databricks

Integrating Azure Databricks with Power BI

Run an Azure Databricks Notebook in Azure Data Factory and many more…

In this article, we will talk about the components of Databricks in Azure and will create a Databricks service in the Azure portal. Moving further, we will create a Spark cluster in this service, followed by the creation of a notebook in the Spark cluster.

The diagram below, published by Microsoft, explains the Databricks components on Azure:

There are a few features worth mentioning here:

Databricks Workspace – It offers an interactive workspace that enables data scientists, data engineers and businesses to collaborate and work closely together on notebooks and dashboards

Databricks Runtime – Built around Apache Spark, this is an additional set of components and updates that improve the performance and security of big data workloads and analytics. New versions are released on a regular basis

As mentioned earlier, it integrates deeply with other services like Azure services, Apache Kafka and Hadoop Storage and you can further publish the data into machine learning, stream analytics, Power BI, etc.

Since it is a fully managed service, various resources like storage, virtual network, etc. are deployed to a locked resource group. You can also deploy this service in your own virtual network. We are going to see this later in the article

Databricks File System (DBFS) – This is an abstraction layer on top of object storage. It allows you to mount storage objects like Azure Blob Storage so that you can access data as if it were on the local file system. I will be demonstrating this in detail in my next article in this series

As a Microsoft Gold certified partner and certified Azure consultants in Sydney, Canberra & Melbourne, we have extensive experience in delivering database solutions on the Azure platform. For more information, please contact us at [email protected]

0 notes

Text

Learn How to Build a Cloud Computing Infrastructure

Introduction

What is Cloud Infrastructure?

Cloud Infra Setup consists of the hardware and software components — such as servers, storage, networking, and virtualization software — that are needed to support the computing requirements of a cloud computing model. It allows businesses to scale resources up or down as needed, ensuring cost efficiency and flexibility.

Steps to Set Up Cloud Infrastructure

1. Choose a Cloud Service Provider (CSP)

- Popular options include Amazon Web Services (AWS), Microsoft Azure, Google Cloud Platform (GCP), and IBM Cloud.

- Evaluate factors such as cost, scalability, security, and compliance to select the best CSP for your needs.

2. Plan Your Architecture

- Define Objectives: Identify your business goals and technical requirements.

- Design Architecture: Create a detailed architecture diagram that includes network design, data flow, and component interactions.

3. Set Up Accounts and Permissions

- Create accounts with your chosen CSP.

- Configure Identity and Access Management (IAM) to ensure secure and controlled access to resources.

4. Provision Resources

- Compute: Set up virtual machines or container services (e.g., Kubernetes).

- Storage: Choose appropriate storage solutions like block storage, object storage, or file storage.

- Networking: Configure Virtual Private Clouds (VPCs), subnets, and networking rules.

5. Deploy Services

- Databases: Deploy and configure databases (e.g., SQL, NoSQL) based on your application needs.

6. Implement Security Measures

- Network Security: Set up firewalls, VPNs, and security groups.

- Data Security: Implement encryption for data at rest and in transit.

- Compliance: Ensure your setup adheres to industry regulations and standards.

7. Monitor and Manage

- Use cloud monitoring tools to keep track of performance, availability, and security.

- Implement logging and alerting to proactively manage potential issues.

8. Optimize and Scale

- Regularly review resource usage and optimize for cost and performance.

- Use auto-scaling features to handle varying workloads efficiently.
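To make the auto-scaling step more concrete, here is a minimal sketch of a target-tracking rule: scale the instance count in proportion to how far observed utilization is from a target. The function name, parameters, and default limits are hypothetical, chosen only for illustration; real CSP auto-scalers add cooldowns, smoothing, and provider-specific settings that this sketch does not model.

```python
import math


def desired_instances(current, observed_util, target_util, min_n=1, max_n=10):
    """Return how many instances to run under a simple proportional rule.

    current       -- instances running now (must be >= 1)
    observed_util -- measured average utilization, e.g. CPU percent
    target_util   -- utilization we want each instance to sit at
    min_n, max_n  -- hypothetical lower and upper bounds on fleet size
    """
    if current < 1 or target_util <= 0:
        raise ValueError("current must be >= 1 and target_util must be > 0")
    # Proportional rule: the same total load spread so each instance hits the target.
    raw = math.ceil(current * observed_util / target_util)
    # Clamp so a metric spike cannot scale past the configured limits.
    return max(min_n, min(max_n, raw))


if __name__ == "__main__":
    print(desired_instances(4, 90, 60))  # load above target: scale out
    print(desired_instances(4, 30, 60))  # load below target: scale in
```

The clamp matters as much as the formula: it puts a hard ceiling on the fleet size, which keeps the cost side of "optimize and scale" predictable.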

Benefits of Cloud Infra Setup

Scalability: Easily scale your resources up or down based on your business needs. This means you can handle peak loads efficiently without over-investing in hardware.

Cost Efficiency: Pay only for the resources you use. Cloud Infrastructure Setup and Maintenance eliminates the need for large upfront capital expenditures on hardware and reduces ongoing maintenance costs.

Flexibility: Access your applications and data from anywhere in the world. This is particularly beneficial in the era of remote work.

Security: Cloud providers invest heavily in security measures to protect your data. Features like encryption, identity and access management, and regular security updates help keep your information safe.

Disaster Recovery: Implement robust disaster recovery solutions without the need for a secondary data center. Cloud-based backup and recovery solutions ensure business continuity.

How We Can Help

Our team specializes in setting up Cloud Infra Setup tailored to your business needs. Whether you’re looking to migrate your existing applications to the cloud or build a new cloud-native application, we provide comprehensive services, including:

Cloud Strategy and Consulting: Assess your current infrastructure and develop a cloud migration strategy.

Migration Services: Smoothly transition your applications and data to the cloud with minimal downtime.

Cloud Management: Monitor, manage, and optimize your cloud resources to ensure peak performance and cost-efficiency.

Security and Compliance: Implement security best practices and ensure compliance with industry standards.

Conclusion

Setting up cloud infrastructure is a critical step towards leveraging the full potential of cloud computing. By following a structured approach, you can build a secure, scalable, and efficient cloud environment that meets your business needs.

0 notes

Text

How to use the Oracle MySQL Database Service with applications that run in AWS

This post was written by:

Nicolas De Rico: Oracle Cloud Solutions Engineer - MySQL

Sergio J Castro: Oracle Cloud Solutions Engineer - Networking

From RDS to MDS

When an application reaches the maximum capabilities offered by Amazon Web Services (AWS) RDS, it is often recommended to migrate to the AWS Aurora service. However, Aurora is based on an older version of MySQL. Aurora still does not support MySQL 8.0, which was released in April 2018. Therefore, it may be difficult to port applications to Aurora, and later, out of Aurora because of its proprietary aspects.

Defy conventional wisdom by upgrading from RDS to MDS and access many benefits

Instead of migrating from RDS to Aurora and traveling back in time, developers can use the Oracle MySQL Database Service (MDS) to lower costs and improve performance, without foregoing the latest MySQL innovations. This video describes how this migration can be accomplished. The latest benchmarks using the newly released MySQL Database Service with HeatWave show significant performance improvements compared to Aurora and Redshift. In addition, at the time of writing this article, MDS costs between a third and half the cost of RDS for a similar configuration. The MySQL Database Service, which runs on Oracle Cloud Infrastructure (OCI), can be used with applications in either OCI or AWS. This article discusses how to integrate the MySQL Database Service running on Oracle Cloud with applications running in AWS.

Scale your MySQL Database Service up to 64 OCPUs and 1TB RAM

But first, a quick introduction to MDS. The Oracle MySQL Database Service is a fully managed DBaaS based on the latest release of MySQL 8.0 Enterprise Edition. This service is 100% developed and supported by the MySQL engineering team. MDS is available on compute instances that range from 1 OCPU (2 threads) and 1GB RAM all the way up to 64 OCPUs (128 threads) and 1024GB RAM.
Oracle does not take the approach of a two-tiered segmented MySQL product line, unlike AWS RDS and Aurora. Just select the right MDS shape for the next level of performance.

Light-speed analytics query execution with MySQL + HeatWave

For applications that execute analytical queries against MySQL and would benefit from extreme query performance, MDS offers an option called MySQL Database Service with HeatWave to greatly accelerate those queries. In order to always ensure best performance, MySQL analyzes each incoming query to determine whether to execute it with its traditional execution engine or with HeatWave. This process is fully transparent to the application and delivers light-speed execution. Benchmarking of TPC-H queries (400GB data set) shows a median 400x query execution speed improvement with no changes to any of the queries. So, how to get applications running in AWS to work with this wonderful OCI MDS? Read on for the details…

Configuring the OCI-AWS cloud interconnection

We will set up a high-speed private Intercloud connection using a 1 Gbps FastConnect link between OCI and AWS. We will start by linking the two cloud providers using OCI’s FastConnect and AWS’ DirectConnect via Equinix, which is a Telecom partner to both OCI and AWS. Setting up FastConnect has been documented many times. Mr. Castro co-wrote a blog post with an Equinix engineer; you can find the step-by-step post here. And you can find documentation on DirectConnect on the Equinix website here. In the next paragraphs, we will build the following architecture diagram with OCI and AWS:

Configuring the OCI FastConnect

We will be using the Ashburn region for OCI, and the US-East-1 region (N. Virginia) for AWS. We will start by reviewing the Networking and FastConnect settings in OCI. We have configured a Virtual Cloud Network with two subnets in different availability domains, one private and one public. The MySQL Database Service (MDS) will reside in the private one.
The VCN CIDR range is 10.20.0.0/16 and the CIDR ranges for the subnets are 10.20.30.0/24 for the public one, and 10.20.40.0/24 for the private one. For this configuration, we are only using one route table. The AWS side is using a 192.168.0.0/16 CIDR range; the private IP address of the demonstration MySQL client console in AWS falls in this range. All traffic going to that range will get there via the Dynamic Routing Gateway (DRG). The DRG, of course, hosts the FastConnect 1Gbps Circuit to AWS’s Direct Connect. If you click on the circuit, you can view the BGP State:

Configuring AWS DirectConnect

Now let us view the AWS Networking and Direct Connect settings. As previously mentioned, the CIDR range for the Virtual Private Cloud (VPC) in Amazon Web Services is 192.168.0.0/16. This VPC also has two subnets, one for layered services and another demonstration subnet for a potential RDS database candidate for migration to MDS. Their CIDR ranges are 192.168.0.0/24 and 192.168.199.0/24 respectively. They are both associated with the same route table. The routes in the Route Table were advertised by OCI via the BGP session. The Virtual Private Gateway (VGW) is the AWS equivalent to the DRG in OCI. In the above image you can see that the VGW is attached to the VPC that we’re using to interconnect to OCI, and to Direct Connect. The ID string for this VPC is vpc-0fd882a938ce2c040. If you click on the Direct Connect Gateway link, it will take you to the Virtual Interface that details the Direct Connect configuration.

Viewing the OCI-AWS cloud interconnection

Now that we’ve seen the FastConnect / DirectConnect details, let us see the latency between an OCI Compute Instance on OCI and an EC2 Instance on AWS. Below you will find the details for both compute instances.

Latency between OCI and AWS (only 1.65ms)

We can determine the network latency between the two public clouds. Pinging from 10.20.30.10 (OCI) to 192.168.0.101 (AWS) the average ping response time is 1.65 milliseconds!
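Before routing traffic between two clouds, it can help to sanity-check the address plan. The following is a minimal sketch, using Python's standard ipaddress module, that checks the subnets quoted in this article against their parent networks and confirms the two sides do not overlap. The dictionary keys and helper function names are illustrative labels, not OCI or AWS identifiers.

```python
import ipaddress

# CIDR ranges as quoted in the article; the dictionary keys are illustrative labels.
OCI_VCN = ipaddress.ip_network("10.20.0.0/16")
OCI_SUBNETS = {
    "public": ipaddress.ip_network("10.20.30.0/24"),
    "private": ipaddress.ip_network("10.20.40.0/24"),
}
AWS_VPC = ipaddress.ip_network("192.168.0.0/16")
AWS_SUBNETS = {
    "layered-services": ipaddress.ip_network("192.168.0.0/24"),
    "rds-demo": ipaddress.ip_network("192.168.199.0/24"),
}


def all_inside(parent, subnets):
    """True if every subnet is contained in the parent network."""
    return all(net.subnet_of(parent) for net in subnets.values())


def find_subnet(address, subnets):
    """Return the label of the subnet containing the address, or None."""
    ip = ipaddress.ip_address(address)
    for name, net in subnets.items():
        if ip in net:
            return name
    return None


if __name__ == "__main__":
    print("OCI subnets inside VCN:", all_inside(OCI_VCN, OCI_SUBNETS))
    print("AWS subnets inside VPC:", all_inside(AWS_VPC, AWS_SUBNETS))
    # Overlapping ranges would make cross-cloud routes ambiguous.
    print("Ranges overlap:", OCI_VCN.overlaps(AWS_VPC))
    print("10.20.30.10 is in:", find_subnet("10.20.30.10", OCI_SUBNETS))
    print("192.168.0.101 is in:", find_subnet("192.168.0.101", AWS_SUBNETS))
```

The two ping endpoints mentioned above sit in the OCI public subnet and the first AWS subnet respectively, which the last two lines make visible.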
Using the MySQL Database Service (MDS) with AWS

Now that we have configured the Intercloud connectivity, all that remains for today is to create an MDS instance in OCI and connect to it from the AWS EC2 instance. The MySQL Database Service is a top-level item in the OCI Cloud Portal menu. A configured MDS instance will look like this:

Seamless connection from AWS to MDS

We can see that MDS uses MySQL version 8.0.22. Newly created MDS instances will use the latest version of MySQL. We will connect to this MDS instance in OCI from our EC2 instance in AWS:

As we can see, it is very easy and convenient to connect applications from AWS to OCI and vice-versa when the Intercloud connectivity has been established. An application in AWS can point to an MDS instance in OCI by simply changing the IP address of the connection. It’s as if the OCI subnet resides in the AWS VPC!

Keep the networking costs under control

But what about networking costs between the two clouds? Ingress is free both ways and, in OCI, the first 10TB/month egress is free. Applications running in AWS that cause high egress costs could simply be moved to OCI for costs savings and still be accessible from AWS. Additionally, MySQL has network compression options to minimize the network usage. The bandwidth of the Intercloud connection can be adjusted up or down to match the real-life workload, but the low latency should remain unchanged regardless of the bandwidth setting. With all these points, the Intercloud networking cost can be easily optimized and kept under control.

Why choose MySQL Database Service (MDS)

The MySQL Database service is developed and supported directly by the MySQL developers. It is always up-to-date and contains all the MySQL innovation and functionality. Built from the MySQL Enterprise Edition and on the Oracle Cloud Infrastructure, the MySQL Database Service offers unparalleled high performance and security for any critical data.
Avoid the lock-in by choosing MDS

MySQL is a great cloud database technology because of its prevalence in public clouds and also on-premises. Applications can be written once for all deployment models! Although not covered in this present article, migrating databases from RDS to MDS should be quite simple because of the compatibility between these database systems. Database system choices can be made based on performance, criticality of support, costs, and so on, while avoiding hard lock-ins by using popular open source technologies.

Cost comparison

We hope with this article that users of RDS who want more than RDS is capable of will defy conventional wisdom and consider using the MySQL Database Service in OCI instead of other legacy and proprietary services in AWS. For an idea of the costs of the MySQL Database Service, here is a comparison with other public cloud MySQL services.

Annual cost for 100 OCPUs, 1TB Storage configuration

Configuration: 100 OCPUs, 1 TB Storage

- MySQL Database Service: Standard E3 AMD 16GB/Core, all regions have the same price.

- Amazon RDS: Intel R5 16GB/Core, AWS US East.

- Azure: Memory Optimized Intel 20GB/Core, MS Azure US-East.

- Google: High Memory N1 Standard Intel 13GB/Core, GCP Northern Virginia.

Thank you for visiting and for using MySQL!

Nicolas & Sergio

https://blogs.oracle.com/mysql/how-to-use-the-oracle-mysql-database-service-with-applications-that-run-in-aws

0 notes

Link

The cloud services industry is a fast-paced, ever-growing, and innovative field where multiple service types now exist, for instance IaaS (Infrastructure-as-a-Service), PaaS (Platform-as-a-Service), SaaS (Software-as-a-Service), and FaaS (Function-as-a-Service). With it comes an ever-growing demand for more capacity and cloud computing resources. One such innovation in cloud computing is serverless architecture. With serverless cloud computing, businesses can focus more on developing, deploying, and managing their applications and software. It is a much-needed paradigm shift in cloud computing that alleviates the mundane tasks of system administration. With the advent of serverless cloud architecture, gone are the days when a system administrator had to deal with the housekeeping, maintenance, and administration of the cloud infrastructure, which frees businesses to create high-quality applications.

What is cloud computing?

To understand the concept of serverless technology, it is essential to understand how cloud computing works. Cloud computing refers to the use of dynamically scalable computing resources accessible via a public network like the Internet. These resources, often referred to as a ‘cloud,’ provide customers with one or more services. Cloud hosting companies organize their services by type, including applications/software, platforms, infrastructure, virtualization, servers and data storage. Cloud-based services, or simply cloud services, are services provided by a cloud resource. It is also a type of service provisioning in which cloud customers contract with cloud service providers for the online delivery of services offered by the cloud. Cloud service providers manage a public, private, or hybrid cloud to facilitate the online distribution of cloud services to one or more cloud customers.

How does serverless technology work on the cloud?

Serverless functions are an example of a cloud service in which one or more code functions execute in the cloud. The serverless model removes the need for the user to deploy and manage the physical servers on which these functions execute. Also, users are billed only for the actual execution time of each serverless function instance and not for the idle time of physical servers and VM instances. Another benefit is the continuous scaling of function instances to address varying traffic load.

Adoption of cloud-native approaches that orchestrate serverless technologies varies significantly across global regions. However, serverless is maturing, with annual growth of 75% making it the fastest-growing public-cloud service.
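As a concrete illustration of what a "function" means here, below is a minimal sketch in the style of an AWS Lambda handler written in Python, where the platform calls a function with an event and a context. The event shape (a "name" key) and the response fields are assumptions made for this example; real events depend on the trigger that invokes the function.

```python
import json


def handler(event, context):
    """Entry point the platform would invoke once per request.

    event   -- dict carrying the request payload (shape assumed for this sketch)
    context -- runtime metadata supplied by the platform; unused here
    """
    name = event.get("name", "world")
    # No server setup anywhere: the code only describes the work for one invocation.
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local stand-in for an invocation; in the cloud the platform makes this call.
    print(handler({"name": "cloud"}, None))
```

Because the function holds no state between calls, the provider can run as many copies as traffic requires and bill only for the time each call spends executing.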

The five key players dominating the serverless market post-pandemic are:

Amazon Web Services Inc.

Microsoft Corp.

Google LLC

Alibaba Group Holding Limited

IBM Corp

Benefits of serverless cloud computing

Let us take a closer look at actual case studies of US companies taking advantage of serverless functions and technologies on their cloud premises.

Faster deployment time – Netflix

Serverless architecture simplifies the process of developing scalable applications rapidly and cost-effectively.

Netflix, Amazon, and Uber are some big names that are winning hearts through their cloud-native strategies. Startups and enterprises can develop and deploy applications effectively with cloud services, while developers can use their time to concentrate on delivering functionality and driving innovation.

Serverless is considered to be the next trend in cloud-native app development. Serverless removes the infrastructure challenges from the application. The cloud provider supplies the entire instance for running code and provisions all the servers. It is responsible for the entire backend infrastructure behind serverless functions.

Netflix depends on serverless code. AWS Lambda, Azure Functions, and Google Cloud Functions are some of the serverless offerings that the major cloud providers are investing in. As a business, you need to ensure that your cloud vendors are solid on security. If you are planning to move to serverless, you need strong security prerequisites: end-to-end encryption, efficient code, access control measures, and monitoring tools like Amazon CloudWatch.

Cut costs – Netflix, Coca-Cola, Heavywater

Thanks to serverless cloud computing, costs per streaming start ended up being a fraction of those in the data center — a welcome side benefit for Netflix. It is made possible due to the cloud’s elasticity, enabling them to continuously optimize, grow, and shrink their cloud footprint near-instantaneously. We can also benefit from the economies of scale that are only possible in a massive cloud ecosystem.

Thanks to serverless technology, Coca-Cola cut costs from $13,000 to $4,490 by migrating its previous AWS EC2 instances to Lambda serverless instances for its vending machines. Coca-Cola now mandates serverless solutions or requires a justification for why you won’t use serverless. With Lambda, all this happens in under a second, and Amazon charges 1/1,000 of a penny. Plus, Coca-Cola is only billed if a transaction executes successfully. Otherwise, Coca-Cola pays nothing. For Lambda, at about 30 million calls a month, the cost was $4,490 per year.

Heavywater Inc saw its AWS bill drop to $4,000, far below its previous $30k invoice. After switching to serverless architecture, the benefits Heavywater Inc witnessed are:
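The figures in this paragraph can be checked with some quick arithmetic. The sketch below assumes a flat price of 1/1,000 of a penny per call and treats both the $13,000 and $4,490 figures as annual costs; the function and constant names are hypothetical. A flat per-call price alone gives a lower total than the quoted $4,490, so the quoted figure may include charges this sketch does not model.

```python
CALLS_PER_MONTH = 30_000_000
# 1/1,000 of a penny per call, kept as an integer count of thousandths of a cent
# so the arithmetic stays exact.
PRICE_PER_CALL_MILLICENTS = 1

QUOTED_EC2_COST = 13_000      # dollars, assumed to be an annual figure
QUOTED_LAMBDA_COST = 4_490    # dollars per year, as quoted


def annual_cost_dollars(calls_per_month, price_millicents):
    """Yearly cost in dollars for a flat per-invocation price."""
    total_millicents = calls_per_month * 12 * price_millicents
    # 1,000 millicents per cent and 100 cents per dollar.
    return total_millicents / 100_000


def savings(before, after):
    """Return (absolute saving in dollars, saving as a percentage of 'before')."""
    saved = before - after
    return saved, round(saved / before * 100, 1)


if __name__ == "__main__":
    print("Per-call charges only:", annual_cost_dollars(CALLS_PER_MONTH, PRICE_PER_CALL_MILLICENTS))
    print("Quoted saving:", savings(QUOTED_EC2_COST, QUOTED_LAMBDA_COST))
```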

1. Decreased costs.

2. Decreased human intervention with a decrease in batch processing from 24 hours to 16 hours.

3. A decrease in EC2 instances to 211.

4. A decrease in errors in SWF.

The team focused more on converting the services to Lambda and the workflows into Step Functions, and continues to leverage the benefits of serverless architecture.

More focus on customers – T-Mobile

According to Satish Malireddi – Principal Cloud Architect, T-Mobile, “Jazz and serverless computing on AWS has cut out many time-consuming development and deployment steps. That’s time we can spend innovating and iterating on the solutions customers want to see. Jazz and serverless computing on AWS are major accelerators in how quickly we can give our customers what they want (Amazon, n.d.).”

Figure 1. T-Mobile / Jazz architecture diagram (Amazon, n.d.)

Serverless – Not perfect

Despite all these benefits, serverless is still not perfect. It does have some drawbacks. The technology and the market are very much in the early stages. Also, the software it uses for monitoring, security and optimization has much room for improvement. Besides, it requires a technical team that knows how to implement the serverless architecture and write code in a whole different way, which means much unlearning and relearning.

Fortunately, some of the significant disadvantages of serverless technology are expected to be addressed in the coming years, if not months. The big cloud companies will automate and innovate so that functions can run for longer, support more languages, and allow deeper customizations. Companies are starting to leverage the serverless cloud platform to improve their business operations through seamless, automated, and regular functions.

Serverless – A forward-thinking approach

With the ever-increasing demand to scale, leverage, and spin applications and services on the fly, Cloud technology has to catch up, and serverless architecture is the way to go. Cloud services are moving forward to deliver real-time demand, cost-effective and readily deployable services and applications.

Without a doubt, serverless functions are the next-generation technology for cloud services. Software architecture and existing server diagrams will be expected to change to accommodate serverless processes. Numerous use cases across small, medium and enterprise businesses show that adopting serverless results in faster deployments, lower costs and operational gains.

0 notes

Text

VMware announces intent to buy Avi Networks, startup that raised $115M

VMware has been trying to reinvent itself from a company that helps you build and manage virtual machines in your data center to one that helps you manage your virtual machines wherever they live, whether that’s on prem or the public cloud. Today, the company announced it was buying Avi Networks, a 6-year old startup that helps companies balance application delivery in the cloud or on prem in an acquisition that sounds like a pretty good match. The companies did not reveal the purchase price.

Avi claims to be the modern alternative to load balancing appliances designed for another age when applications didn’t change much and lived on prem in the company data center. As companies move more workloads to public clouds like AWS, Azure and Google Cloud Platform, Avi is providing a more modern load balancing tool, that not only balances software resource requirements based on location or need, but also tracks the data behind these requirements.

Diagram: Avi Networks

VMware has been trying to find ways to help companies manage their infrastructure, whether it is in the cloud or on prem, in a consistent way, and Avi is another step in helping them do that on the monitoring and load balancing side of things, at least.

Tom Gillis, senior vice president and general manager for the networking and security business unit at VMware sees this acquisition as fitting nicely into that vision. “This acquisition will further advance our Virtual Cloud Network vision, where a software-defined distributed network architecture spans all infrastructure and ties all pieces together with the automation and programmability found in the public cloud. Combining Avi Networks with VMware NSX will further enable organizations to respond to new opportunities and threats, create new business models, and deliver services to all applications and data, wherever they are located,” Gillis explained in a statement.

In a blog post, Avi’s co-founders expressed a similar sentiment, seeing a company where it would fit well moving forward. “The decision to join forces with VMware represents a perfect alignment of vision, products, technology, go-to-market, and culture. We will continue to deliver on our mission to help our customers modernize application services by accelerating multi-cloud deployments with automation and self-service,” they wrote. Whether that’s the case, time will tell.

Among Avi’s customers, which will now become part of VMware, are Deutsche Bank, Telegraph Media Group, Hulu and Cisco. The company was founded in 2012 and raised $115 million, according to Crunchbase data. Investors included Greylock, Lightspeed Venture Partners and Menlo Ventures, among others.

Cloud Migration – Is it too late to adopt?

“Action is the foundational key to all success.” – Pablo Picasso

No technology gets adopted overnight. An on-premises environment gives you full ownership, along with full responsibility for maintenance. You pay for the physical machines and virtualization needed to meet your demands. Lately, such on-premises environments have become less reliable and carry a high total cost of ownership (TCO), creating frustration and a desire to move away from them.

If you or your company are facing such frustrations, it is time to act and think about cloud migration.

1. What is Cloud Migration?

A cloud environment contains the virtualization platforms and application resources that a company or business needs to run its operations efficiently, and that previously ran on-premises.

Cloud Migration, according to Amazon Web Services, is the process of assessing, mobilizing, migrating, and modernizing your current infrastructure, application or process over to a cloud environment.

At Syntelli, while we believe the principles of cloud are standard across all platforms, we are proud to partner with Microsoft’s Azure, Amazon’s AWS and Google’s GCP.

2. How to plan a successful Cloud Migration?

“Do the hard jobs first. The easy jobs will take care of themselves.” – Dale Carnegie

Figure 1. General Cloud Migration Strategy

Phase One: Assess

Identify and involve the stakeholders

Early engagement and support will lead to a smoother, faster migration process.

Create a strategic plan

Establish objectives and priorities for migration. Identify major business events for the company, which may be affected by this transition.

Calculate total cost of ownership

This is a very important step for estimating your potential savings.

Discover and assess on-premise applications

Some cloud providers offer assessment tools, but having a subject matter expert review these applications and resources helps ease the migration.

Get support for your implementation

Syntelli Solutions to the rescue!!

Train your team on cloud environments, applications, and resources

Know your migration options

“Lift and Shift”, “Complete Refactor”, “Hybrid approach”
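
The total-cost-of-ownership step in Phase One comes down to simple arithmetic. As a rough sketch, the Python below compares multi-year totals for the two environments. The cost categories (`OnPremCosts`, `CloudCosts`) and every figure in the example are hypothetical placeholders chosen for illustration, not numbers from a real migration; a real estimate would use your own invoices and your provider's pricing.

```python
from dataclasses import dataclass


@dataclass
class OnPremCosts:
    """Hypothetical on-premises cost categories (illustrative only)."""
    hardware_purchase: float   # one-off purchase of servers and storage
    annual_licenses: float     # proprietary software licenses per year
    annual_maintenance: float  # power, cooling, support contracts per year
    annual_staff: float        # staff time spent operating the systems


@dataclass
class CloudCosts:
    """Hypothetical cloud cost categories (illustrative only)."""
    migration_one_off: float     # one-off cost of the migration project
    monthly_subscription: float  # recurring provider bill
    annual_staff: float          # staff time spent operating in the cloud


def on_prem_tco(costs: OnPremCosts, years: int) -> float:
    """Total on-premises cost over the given number of years."""
    yearly = costs.annual_licenses + costs.annual_maintenance + costs.annual_staff
    return costs.hardware_purchase + years * yearly


def cloud_tco(costs: CloudCosts, years: int) -> float:
    """Total cloud cost over the given number of years."""
    yearly = 12 * costs.monthly_subscription + costs.annual_staff
    return costs.migration_one_off + years * yearly


def estimated_savings(on_prem: OnPremCosts, cloud: CloudCosts, years: int) -> float:
    """Positive result means the cloud option is cheaper over the period."""
    return on_prem_tco(on_prem, years) - cloud_tco(cloud, years)


if __name__ == "__main__":
    # Placeholder figures, not taken from any real project.
    on_prem = OnPremCosts(100_000, 20_000, 10_000, 50_000)
    cloud = CloudCosts(30_000, 2_000, 40_000)
    print(f"Estimated 3-year savings: {estimated_savings(on_prem, cloud, 3):,.0f}")
```

Keeping the one-off and recurring costs separate makes it easy to see how the comparison shifts as the time horizon changes.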

Phase Two: Mobilize & Migrate

Migration governance

If you plan on migrating more than one application or service which could impact more than one team, then you need to plan your projects and track them in order to ensure success.

Security, Risk and Compliance

You can evaluate your migration options by performing a few tests. Use this opportunity to identify any scaling limitations or access control issues that may exist in your current application.

Document and review scenarios where several teams may interact with your applications in the cloud. This tracing helps mitigate any dependencies and reduce potential impact.

Pilot your migration with a few workloads

Learn more about your new cloud environment by migrating low-complexity workloads. This could be a simple migration of a DEV database, or a test of a data pipeline required to build a modeling dataset for a data science model.

Migrate the remaining applications

With a successful pilot and the lessons learned from it, you can complete your migration to the cloud.

Decommission existing on-premises infrastructure

After a successful migration, it is time to pull the plug on your existing on-prem systems. This removes the overhead of maintaining outdated infrastructure or applications.

Phase Three: Run and Modernize

Run and monitor

It is time to leverage the tools offered by your cloud provider to monitor your applications and track your resources and the efficiency of your applications. At this stage the applications may be in a fragile state, so take care to review all the steps to confirm a successful migration.

Timely upgrades

With the new infrastructure set up in the cloud, you can identify scope for code and feature improvements and for resource updates.

Cloud Service Training

It is important to work with the customer and train their team members, until the team feels comfortable with the transition. Many questions still tend to linger after a successful migration.

3. Use Case: On-prem Data Science Model Migration to Cloud

Approach

Cloud migration projects vary from customer to customer. In this case, our customer’s goal was to migrate an existing data science model that made use of IBM SPSS Modeler.

The goal was to recreate and redesign the existing data pipelines, decouple dataset creation from data modeling, and make the model production-ready for various deployment demands.

Syntelli approached the migration process as depicted in Figure 2 below.

The steps shown in the diagram follow the phases described in the previous section.

Challenges

Each customer can choose the cloud service provider that suits them. Options vary by cost, infrastructure setup, compliance and security, and migration timeline.

In this case, the cloud service provider managed all the resources, and Syntelli made use of those resources and the infrastructure. This kind of service is generally termed Infrastructure as a Service (IaaS).

Network and Connectivity

Network topology was the first challenge to overcome. Each company or customer has different levels of firewalls, packet filters and network hops. These can cause packet loss and slow data transfers, to name a few problems.

Having a subject matter expert who can understand and test connectivity to various resources, such as databases and virtual machines, is very important. Running ping and traceroute tests and explaining the resulting reports helped resolve many network barriers.
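
Connectivity checks like these can be partly scripted. The sketch below is an assumption about how such a check might look, not the tooling used in this project. Instead of ICMP ping or traceroute, it times a plain TCP connection to each resource, which needs no special privileges and also confirms that the service port itself is reachable through the firewalls. The function names and any host names or ports you pass in are hypothetical.

```python
import socket
import time
from typing import Dict, Optional, Tuple


def tcp_check(host: str, port: int, timeout: float = 3.0) -> Optional[float]:
    """Return the TCP connect time in milliseconds, or None if unreachable."""
    start = time.perf_counter()
    try:
        # create_connection resolves the host and opens a TCP connection.
        with socket.create_connection((host, port), timeout=timeout):
            return (time.perf_counter() - start) * 1000.0
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return None


def check_all(
    targets: Dict[str, Tuple[str, int]], timeout: float = 3.0
) -> Dict[str, Optional[float]]:
    """Check every named (host, port) target and collect the results."""
    return {
        name: tcp_check(host, port, timeout)
        for name, (host, port) in targets.items()
    }
```

For example, calling `check_all` with a dictionary such as `{"database": ("db.example.internal", 5432)}` (a made-up host) returns a connect time for each reachable target and `None` for each one that is blocked or down. A `None` result tells you where to point ping and traceroute next.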

Security and Compliance

The next challenge was security, risk and compliance. Bigger businesses and organizations have many applications and resources that house Personally Identifiable Information (PII). At each point of the migration, Syntelli worked closely with the IT Risk and Compliance team and the IaaS cloud provider to make sure that the customer’s information was not compromised.

In this case, it was important to leverage security approaches such as Active Directory, Kerberos for the big data environment, Single Sign-On, Ranger, SSH keys, and two-factor authentication, to name a few.

While this can extend your migration timeline by a few days, you will be more secure in the future.

Figure 2. On-Prem Data Science Model Migration Strategy

Success

Once the data science models were migrated successfully, the next steps were to document the migration and track all incidents which were resolved.

This not only helped the customer understand challenges, but as a consulting team, Syntelli learnt from the experience while still applying standard cloud practices.

Return on Investment (ROI) proved to be a huge success. The company saved the thousands of dollars it had been paying for proprietary licensing of the on-prem application, which only a few users had been using.

Another important point: the company did not have an in-house team to handle, monitor, or use the resources. It was important for the Syntelli consulting team to guide them through this transition, from coding and utilizing infrastructure to reporting and communicating with other teams across the customer’s organization.

“Strive not to be a success, but rather to be of value.” – Albert Einstein

Today, this company has grown in team size and technical capacity. It can scale its data science work and focus on creating improved models for the business, in order to predict and forecast results more accurately and to track growth and retention.

4. On-Prem vs Cloud Infrastructure

While cloud migration has helped reduce the customer’s stress on many topics, there is still room for inconsistencies or challenges ahead.

| Discussion Topic | On-Prem Application | Cloud Migrated Application | Migration Story |
| --- | --- | --- | --- |
| Cost | Expensive | Depends on subscription | Significantly reduced |
| Maintenance | Resources managed by the customer | Resources managed by cloud service provider | Less man hours |
| Application Interaction | High human interaction | Low human interaction | Reduced greatly |
| Open Source Libraries | Limited access to Data Science libraries | Ability to leverage open source libraries | Still a challenge of tracking open source library changes |
| Data Source Flexibility | Limited to certain formats | More options | Various formats from different sources leveraged |
| Scalability | Limited | Exponential | Access across various teams in the organization |
| Data Accessibility | Limited to one team | More teams interact | Access across organization |
| Security | More secured | Vulnerable | Security, Risk and Compliance team need to be more vigilant and audit at regular intervals |
| Application Downtime | Problem can be isolated to on-premise infrastructure or proprietary application | Multiple points to trace | Leverage monitoring and tools and reports to avoid this scenario |
| ROI | Minimal | Significant Impact | Saved thousands of dollars spent on licenses, which benefited a few |

More topics could be added to the table above, such as SLAs, resource upgrades, and interoperability with other environments, but infrastructure networking, data compliance, downtime, and support are some of the key points for a successful cloud migration. It is important for a business or organization to have these key topics ironed out before migration. This helps alleviate some of the challenges.

5. Conclusion

We are currently in a state of uncertainty, with the recent spread of the COVID-19 virus all over the world. We hope this situation eases and lets individuals and businesses resume their operations.

Some businesses have been able to withstand this impact because most of their infrastructure is in the cloud, so they can keep working and supporting their communities during these worrying times. We are not sure whether our economy would have survived such an impact ten years ago.

Individuals and companies across the world have accepted and adopted cloud computing. If you are on the fence, come talk to Syntelli. We can help answer your questions and guide you through the process.

“Intelligence is the ability to adapt to change.” – Stephen Hawking

The post Cloud Migration – Is it too late to adopt? appeared first on Syntelli Solutions Inc..

https://www.syntelli.com/cloud-migration-is-it-too-late-to-adopt

Cloud computing : sarahsag

Cloud computing is the on-demand availability of computer system resources, especially data storage and computing power, without direct active management by the user. The term is generally used to describe data centers available to many users over the Internet. Large clouds, predominant today, often have functions distributed over multiple locations from central servers. If the connection to the user is relatively close, it may be designated an edge server.

Clouds may be limited to a single organization (enterprise clouds), or be available to many organizations (public cloud).

Cloud computing relies on the sharing of resources to achieve coherence and economies of scale.

Advocates of public and hybrid clouds note that cloud computing allows companies to avoid or minimize up-front IT infrastructure costs. Proponents also claim that cloud computing allows enterprises to get their applications up and running faster, with improved manageability and less maintenance, and that it enables IT teams to adjust resources more rapidly to meet fluctuating and unpredictable demand. Cloud providers typically use a "pay-as-you-go" model, which can lead to unexpected operating expenses if administrators are not familiar with cloud-pricing models.