#and it went in chronological order of being published which was so smart????


hey! i stumbled across you on ao3 through genshin (i think? that was in september i have no idea at this point), went to check out your profile and saw my hero academia works there. i am currently very much into it, so i was like let's gooo sooo I found B♭ and that has been a wild journey.

firstly, i don't have any experience with the american school system, so a lot of worldbuilding was new for me. moreover, marching band is something from another universe (aka music lover but never got educated on the matter), so the fic constantly challenged me with new details-concepts-vocabulary. stepping outside of your comfort zone while reading? great idea! i think i never learned so much from a fic while enjoying it so much ^^

secondly, i am simply amazed by the sheer amount of effort you put into it. i decided to read in publishing order, so the non-chronological structure really impressed me. you're honestly a mastermind being able to pull that off. also, having a song for every chapter with specifically picked out lyrics relevant to the content is so, so cool! the diversity of your playlists must be astonishing, i'm jealous :)

thirdly, the characters are just so real. i love all the canon references, i love the reactions that don't feel exaggerated or too mild. they are acting...exactly as i would expect them to in those circumstances and setting. i just accepted the leads' ways of thinking and reflecting so naturally

i also read the extra notes when they were available and just...how much thought is put in is mesmerising. for some reason i never thought of pulling directly from your life experiences when writing? but it actually makes a lot of sense and it brought me some ideas to try out so hehe ;)

as i am very smart and hadn't scrolled down on the order post, i didn't see until quite late in the reading that the end of perfect harmony is published as notes, so that was a surprise. i understand your reasons and the fact that you're not even in the fandom anymore, but you mentioned in some extra notes that it's ok to ask for them even if years passed so...here i am three years after, complimenting B♭ :D

anyway, i finished it a couple of days ago, and even the notes are quite detailed. images of described shenanigans popped into my head just like that, and i really appreciate that you published them and i got to know what happened next!!

i actually wondered why the comments were disabled since i really wanted to comment on a few chapters bc your work deserves it so much...but yeah, that's what led me here so i guess congrats, you get my thoughts all nicely packed in one place ^_^

there's probably a lot of specific pieces, details, ideas i liked about B♭, so that is merely a summary of exciting things i remember!

i'll say goodbye using my favourite oneshot title:

thank you for the music ✩°。⋆⸜(ू。•ω•。)

not gonna lie i'm kind of obsessed w/the way you just glossed over the fact that you (probably) found me through my (anonymous) genshin fics, which means you jumped through the (minimum three) hoops required to get here, my (named) fandom blog, and then proceed to gush abt a bnha series i did. like i would assume that if someone put in the effort to find my other fandom fics from my genshin stuff, then there must've been smth really worth looking into w/the genshin stuff lmao

for the sake of my mutuals' dashboards, since this ask is so long i'm just gonna chuck the whole (long) answer under a cut lol

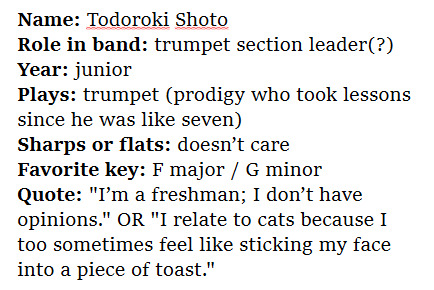

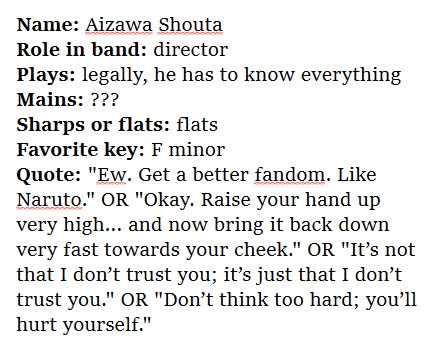

anyway yes Bb!! the amt of effort n planning i put into that series was legitimately insane. i made school schedules for EVERY SINGLE BNHA CHARACTER and PUT IT ON A SPREADSHEET so that i could PLAN WHO COULD WALK WITH WHOM TO THEIR NEXT CLASSES n have PLOT-RELEVANT CONVERSATIONS LIKE THAT. i made little profiles for each of the characters, where i chose their favorite musical key (and why), how many years/instruments they play, and gave them each a funny little quote/catchphrase!!!

what possessed me to do this for ~20 different characters i honestly could not tell you

i definitely loved working on Bb a lot. i remember sitting down three years ago, practically to the day by this point, n hashing out the events of every single chapter to the epilogue, then reorganizing them into a proper timeline (i also kept a calendar in my notes with the chapters in order), all while occasionally looking out my bedroom window n thinking how wonderfully bright n warm n sunny the world was becoming again. bc really, 2019 was a very struggle year for me, n i didn't take the time to appreciate the sunlight then the way i have every year since. from there, i worked off that very strict outline, and most of the note-chapters that were eventually put up are primarily just copy-pasted straight from there.

i remember being on youtube a lot for music recs when working on perfect harmony too!! a bunch of them changed in the years btwn walking away from the series n actually publishing the notes (which were actually published mid-december last year, then backdated to 2020 a few days later ahaha), with a number of the tour arc alternate chapter title songs coming from songs that didn't even exist at the time of the fic's original planning. my mp3 collection grew a lot during the planning phases of Bb lmao.

i'm glad the characters felt so real!!! while no one character was based entirely off one single person i knew irl, one could say that writing Bb was a bit of a love letter to my time in high school band in some places, both the events i partook in n the people i knew there. it was a very "write what you know" type of fic.

anyway haha yeah the end of my bnha days were not fun, but i still loved Bb enough to hold onto the idea of returning to it Soon(tm) that i put off publishing the chapter notes for almost two years. even then, that was a difficult decision for me to make bc a part of me wasn't ready to close that chapter of my life. i think ultimately it was the best decision to make though, since the fics are p heavily tied up in a much sadder part of my life that i'd just rather not return to.

the main reason comments were turned off of Bb (and indeed, the majority of my bnha fics) is most simply described as "resentment". it's different from how i feel abt my old snk fics (where i turned comments off of them so that i could pretend no one's really reading them anymore), which is more impersonal "oh my god i was so young back then and i give fewer than negative shits abt any mistakes i might've made on them or what anyone thinks of them" bc in bnha it's kind of hard to avoid the fact that i had a Name in the circles i typically traversed for a while. it wasn't that big of a name, but it's certainly more than nothing.

it's not really a feeling i like to dwell on, so i just archive-locked the responsible works n turned off comments for the most heinous culprits (mostly sparklers, but even tho i love Bb as a story, i do not love Bb as a publishing experience, if that makes sense), and for the most part, that keeps the resentment contained.

still, i'm genuinely happy that you enjoyed the au so much!!! i honestly love love love how goddamn SPECIFIC the premises are for this fic. the world was truly built with love, and the music puns for every title were always such a joy to come up with c':

thank you for the ask!!!! :D

#asks#kid-of-yesterday#long post#if you really did come here from my genshin fics last SEPTEMBER then boy howdy do i know Exactly which fic you came from#(if it was in september then it Must have been the saucy xv fic abt the sharp teeth bc pure identity didn't come out til oct)#i have a lot of Feelings abt my time in the bnha fandom that i just don't rlly like to talk abt publicly tbh#mostly bc (most of those Feelings are 'resentment' lmao) i try actively to not be a bitter person anymore#but also i hate admitting that people Knew me bc it feels like vanity or bragging#(bc if my name had ever been ''worth anything'' then why did Bb not garner the attention i'd hoped?)#i know that those thoughts aren't true n all but they're overall very complicated feelings#regarding how fandom at large treats fan creations and creators that ultimately led to my current decision to publish anonymously#ofc my feelings towards fandom n the fan-fan creator relationship have shifted w/time again n i do often consider just de-anoning#but it's... Tricky.... to say the least#haha sorry for unloading a little gloomily onto your lovely ask but i also think you deserve my honest thoughts#and not a saccharine falsehood / partial truth (oh hey that's the main thesis of rhythm lol)#ALSO to have an izch fic as your fave is exemplary taste when combined w/krbk#i am handing you a plaque that says 'certified good taste in ships'


Dino Watches Anime (Nov 28)

Obviously, I’m not going to list the ongoing anime that I’m still watching as that hasn’t changed much. I will put the ones that I recently completed though!

Recently Completed!

Youkoso Jitsuryoku Shijou Shugi no Kyoushitsu e

I was going to put this in chronological order until I realized that I just wanted to get this piece of crap out of the way. Seriously, I regret watching this show. I HATE how it’s the highest rated out of all of them! It’s almost an 8/10! I gave it a 4! Here’s why:

This anime started out okay. I liked the sound of its premise. I liked the idea of teenage psychology being pushed but not as life-or-death but more of status. Because believe it or not, sometimes a person values their image and status more than their life. That plot was... kind of there? I don’t know. It was mostly boobs and ass. Those jiggle physics don’t stop here. They make sure to remind you that every character in this anime has large assets and asses every two seconds.

The characters are probably the most deplorable part of this show. They were so bad. Seriously, we just took the worst parts of every trope and threw them together! The “I don’t talk to anyone. I don’t have any friends. I’m EDGY and don’t belong here. I’m this close to selling myself to Orochimaru for power”, the “cardboard houseplant that’s so monotone that it hurts”, the “double-sided dipstick that will take out a person’s intestines and use them as a jump rope”, and the “arrogant older brother who is way more accomplished than his sister”. We also have more assorted bastards, but those are the main ones. The characters ruined everything. Their interactions were so coarse, forced, hard to watch, and everything is executed so poorly that it made me wonder whether people rated this for ulterior motives or not. Everyone here is an asshole.

Let’s look at the first three characters:

“cardboard houseplant that’s so monotone that it hurts” - Shoya Chiba isn’t even a bad voice actor. He does give me Hiroshi Kamiya vibes though (not a bad thing), but his voice acting in this show was hard to listen to because his expression didn’t change and neither did his voice. Seriously, over 12 episodes, he has that same expression. Someone threatened to harm him, and he’s still looking like a dead fish. I can’t describe how much worse it is to have a main character whose facial muscles don’t move. He has no personality.

“I don’t talk to anyone. I don’t have any friends. I’m EDGY and don’t belong here. I’m this close to selling myself to Orochimaru for power” - I like her design, but what else is going for her? How many times does she need to say, “I don’t need friends. I just want to move up in school.” Bitch, I get it. You can calm down. You keep doing things for other people but you say you don’t care? She arguably gets the most growth. Akari Kito voiced her and it was just like how any other person on earth would voice this character.

“double-sided dipstick that will take out a person’s intestines and use them as a jump rope” - She’s exactly what she sounds like. She’s in that gif. She’s sweet and nice until you catch her being not that. Yurika Kubo did a pretty alright job voicing her. Nothing really to say here besides I hated her with a burning passion.

Music was alright. Animation was... Lerche standard. Nothing special. It looks nice until you are flashed so many times that you can’t tell what this show is even about anymore.

This is one of the worst shows I’ve watched in a while. It wastes a perfectly good premise and voice cast.

Kekkaishi

2006 was a good year for anime, and this probably got swept over because Code Geass took the fall season by storm. But this anime was genuinely good. I wanted a good shonen/comedy with action and this filled that void and more. I even read some of the manga before realizing that I just don’t like reading manga that much.

I genuinely like the cast of characters and find them amusing. I also like how they incorporate a stay-at-home dad who wears an apron and no one judges him because it’s what they see as normal. We have a female character who’s not being sexualized every few seconds. Sunrise did cheat a little with other female characters though because the manga made their proportions okay while the anime decided to make them look more like a Barbie than a human. The animation was pretty okay too. For 52 episodes, it did some pretty okay stuff but with today’s technology, it’s probably not as “wow” as it was back in the day.

I’m just mad that they developed a character only to kill him a couple of episodes later. That’s sad.

The soundtrack was pretty standard, but I was impressed by the fact that I liked the voice acting. I originally wasn’t as much of a fan of Hiroyuki Yoshino’s works because I found his voice annoying, but when he finds the right character (like Yoshimori or Present Mic), he works really well. It’s unfortunate that a lot of the main cast aren’t as prolific as they once were, but I guess that’s life.

No one was hurt in the making of that gif.

I rated this a 9/10 because it was for pure enjoyment. I didn’t have this much fun watching an anime in a while. This is the anime that got me binge-watching again.

Nobunaga Concerto

This anime has a glaring problem. It’s not the story, it’s not the writing, it’s not the characters, and it’s not the music. It’s the art. Watch any clip and it will give you some Berserk flashbacks.

The writing was pretty good too. The story was genuinely interesting, but in the end, it didn’t feel like it did enough. It didn’t cover enough. The dialogue and the incorporation of modern culture with the historic parts were smart. Saburou was really likeable and oddly adaptive. The characters around him (the historic ones) are pretty cut and dry. The music was pretty good too! The art and lack of adaptation are the only things truly holding this show back.

Mamoru Miyano plays the main character and obviously makes him charming and funny, Yuki Kaji plays Nobunaga Oda, and Nana Mizuki plays Oda’s betrothed. I actually didn’t know anything about Oda’s tale prior to this anime so don’t think that’s required.

I rated it a 7/10

*Another important note is that they get suddenly racist in the last episode. A black guy appears, and people scream that it’s a monkey like they’ve never seen a darker-skinned human before. It was honestly disappointing.

Ookami-san to Shichinin no Nakama-tachi

Okay, this anime surprised me because of how much I liked it. It wasn’t even anything special. They took the same JC Staff rom-com tropes and put them into another anime combined with some fairy tale lore. But this anime was so entertaining and charming with its cast that I genuinely didn’t hate any of the characters. There were a few moments that made me go, “okay, that’s a bit too much”, but a girl going around punching people with neko boxing gloves? That’s pretty cool. Ookami was a really funny character who I actually found a bit interesting which is weird for a story that’s supposed to be superficial and comedic. Ryoushi is practically a spitting image of my anxiety and personality but in a charming way? He has some cool moments. He’s almost a little like Zenitsu. Courageous when push comes to shove but he’s actually awake. Ringo was the innocent loli until she wasn’t because if you mess with her friend, she will poison you. Again, they made these references to regular rom-com anime and fairy tales that completely roll together nicely. JC Staff didn’t mess this one up, and as always, there’s a tsundere Rie Kugimiya role in there somewhere.

Because I enjoyed it so much, I gave it a 9/10.

Inari, Konkon, Koi Iroha

I literally finished this one an hour ago, read the last chapter of the manga, and went “what the heck?” Because... I enjoyed this, but I also didn’t? Bitter-sweetness at its best. Houko Kuwashima is a really underrated voice actress because she hasn’t taken that many big roles recently, but she has incredible range. The characters of this are incredibly plain, but I don’t mind that because they aren’t painful to watch unlike the first anime I mentioned (seriously, I watched the last three shows on this list to wash that bad anime out of my brain). Everyone in this anime seems to be perfect in one way or another because they don’t really wish ill on anyone. Not gonna lie, characters like that aren’t for everyone because “everyone is a scum at some point in their lives”. I definitely respect the need for balance. The story is pretty simple and plain and so is the art. The music was nice and pleasant. Basically, it’s a palate-cleanser of an anime after watching some bad anime. It’s about developing middle school romance and this... “teenage” couple on the side. It’s about friendship! And discovering yourself, and yes, one character found out she was gay, and I was rooting for that character so hard only to find out that she didn’t get her conclusive ending. Everyone else gets some bullshit ending one way or another! This is published in the same publication as Bungou Stray Dogs, and I wouldn’t have been able to tell if I didn’t look it up.

I rated this one an 8/10 because I enjoyed it still despite the ending being a little idealistic, sad, and far-fetched (seriously, someone becomes a god and gets their existence erased).


#5yrsago Greenwald's "No Place to Hide": a compelling, vital narrative about official criminality

Cory Doctorow reviews Glenn Greenwald's long-awaited No Place to Hide: Edward Snowden, the NSA, and the U.S. Surveillance State. More than a summary of the Snowden leaks, it's a compelling narrative that puts the most explosive revelations about official criminality into vital context.

Glenn Greenwald's long-awaited No Place to Hide: Edward Snowden, the NSA, and the U.S. Surveillance State is more than a summary of the Snowden leaks: it's a compelling narrative that puts the most explosive revelations about official criminality into vital context.

No Place has something for everyone. It opens like a spy-thriller as Greenwald takes us through his adventures establishing contact with Snowden and flying out to meet him -- thanks to the technological savvy and tireless efforts of Laura Poitras, and those opening chapters are real you-are-there nailbiters as we get the inside story on the play between Poitras and Greenwald, Snowden, the Guardian, Bart Gellman and the Washington Post.

Greenwald offers us some insight into Snowden's character, which has been something of a cipher until now, as the spy sought to keep the spotlight on the story instead of the person. This turns out to have been a very canny move, as it has made it difficult for NSA apologists to muddy the waters with personal smears about Snowden and his life. But the character Greenwald introduces us to isn't a lurking embarrassment -- rather, he's a quick-witted, well-spoken, technologically masterful idealist. Exactly the kind of person you'd hope he'd be, more or less: someone with principles and smarts, and the ability to articulate a coherent and ultimately unassailable argument about surveillance and privacy. The world Snowden wants isn't one that's totally free of spying: it's one of well-defined laws, backed by an effective set of checks and balances that ensure that spies are servants to democracy, and not the other way around. The spies have acted as if the law allows them to do just about anything to anyone. Snowden insists that if they want that law, they have to ask for it -- announce their intentions, get Congress on side, get a law passed and follow it. Making it up as you go along and lying to Congress and the public doesn't make freedom safe, because freedom starts with the state and its agents following their own rules.

From here, Greenwald shifts gears, diving into the substance of the leaks. There have been other leakers and whistleblowers before Snowden, but no story about leaks has stayed alive in the public's imagination and on the front page for as long as the Snowden files; in part that's thanks to a canny release strategy that has put out stories that follow a dramatic arc. Sometimes, the press will publish a leak just in time to reveal that the last round of NSA and government denials were lies. Sometimes, they'll be a you-ain't-seen-nothing-yet topper for the last round of stories. Whether deliberate or accidental, the order of publication has finally managed to give staying power to the mass-spying story that's been around since Mark Klein's 2005 bombshell.

But for all that, the leaks haven't been coherent. Even if you follow them closely -- as I do -- it's sometimes hard to figure out what, exactly, we have learned about the NSA. In part, that's because so much of the NSA's "collect-it-all" strategy involves overlapping ways of getting the same data (often for the purposes of a plausibly deniable parallel construction) so you hear about a new leak and can't figure out how it differs from the last one.

No Place's middle act is a very welcome and well-executed framing of all the leaks to date (some new ones were revealed in the book), putting them in a logical, rather than dramatic or chronological, order. If you can't figure out what the deal is with NSA spying, this section will put you straight, with brief, clear, non-technical explanations that anyone can follow.

The final third is where Greenwald really puts himself back into the story -- specifically, he discusses how the establishment press reacted to his reporting of the story. He characterizes himself as a long-time thorn in the journalistic establishment's side, a gadfly who relentlessly picked at the press's cowardice and laziness. So when famous journalists started dismissing his work as mere "blogging" and called for him to be arrested for reporting on the Snowden story, he wasn't surprised.

But what could have been an unseemly score-settling rebuttal to his critics quickly becomes something more significant: a comprehensive critique of the press's financialization as media empires swelled to the size of defense contractors or oil companies. Once these companies became the establishment, and their star journalists likewise became millionaire plutocrats whose children went to the same private schools as the politicians they were meant to be holding to account, they became tame handmaidens to the state and its worst excesses.

The Klein NSA surveillance story broke in 2005 and quickly sank, having made a ripple not much larger than that of Janet Jackson's wardrobe malfunction or the business of Obama's birth certificate. For nearly a decade, the evidence of breathtaking, lawless, endless surveillance has mounted, without any real pushback from the press. There has been no urgency to this story, despite its obvious gravity, no banner headlines that read ONE YEAR IN, THE CRIMES GO ON. The story -- the government spies on your merest social interaction in a way that would freak you the fuck out if you thought about it for ten seconds -- has become wonkish and complicated, buried in silly arguments about whether "metadata collection" is spying, about the fine parsing of Keith Alexander's denials, and, always, in Soviet-style scaremongering about the terrorists lurking within.

Greenwald doesn't blame the press for creating this situation, but he does place responsibility for allowing it square in their laps. He may linger a little over the personal slights he's received at the hands of establishment journalists, but it's hard to fault him for wanting to point out that calling yourself a journalist and then asking to have another journalist put in prison for reporting on a massive criminal conspiracy undertaken at the highest level of government makes you a colossal asshole.

The book ends with a beautiful, barn-burning coda in which Greenwald sets out his case for a free society as being free from surveillance. It reads like the transcript of a particularly memorable speech -- an "I have a dream" speech; a "Blood, sweat, toil and tears" speech. It's the kind of speech I could have imagined a young senator named Barack Obama delivering in 2006, back when he had a colorable claim to being someone with a shred of respect for the Constitution and the rule of law. It's a speech I hope to hear Greenwald deliver himself someday.

No Place to Hide: Edward Snowden, the NSA, and the U.S. Surveillance State

https://boingboing.net/2014/05/28/greenwalds-no-place-to-hid.html


Rick Riordan's books just keep getting better (and more diverse!) Transcript

Part 1

Part 2

Transcript Below the Cut:

Rick Riordan. So, I've wanted to make a video about Rick Riordan for a while and with the new Trials of Apollo book just coming out, I’m really hyped about it. So I wanted to talk about why I like his books, or at least some of the things that impress me about them and keep me consistently excited about them.

Rick Riordan, if you don’t know, is the author of the wildly popular Percy Jackson series, and today I want to talk about his books, especially how his representation of minorities has improved over time.

So, a few quick things: First, I’m not going to talk about ALL of Rick Riordan’s work, especially his ancillary and tie-in material like The Demigod Files or all the crossover stories, mostly because I haven't read all of them.

And second: Spoilers. Just, big old spoilers for basically everything. I’m not going to go into big plot points much, but I will be talking about some of the characters in depth. I’m going to move through his oeuvre in roughly chronological order. So, you are warned.

Lastly, this video hinges on the premise that well done, well executed, fully fledged representations of minority characters in children’s and young adult media are good and important. I’m not really going to argue this point. It is the assumption we are beginning with. Diverse media with diverse characters is good and important.

And this point is, weirdly, kind of controversial. In fact, in the vast majority of children’s and young adult media most of the cast will be white, straight, cis, able bodied, neurotypical children or young adults with an unstated or vague religious affiliation. This last bit, about the unstated or vague religious affiliation, is one we don’t often think about, but really, having a character with ANY stated religion is really rare. Most will, maybe, practice a sort of secularized Christmas maybe? But that’s about it.

The rationale you’ll hear for this is that this makes books more accessible and thus marketable. I would counter that if you really want your book to appeal to as many different people as possible, wouldn’t you want to have as many different types of characters as possible? But that comes with the assumption that outright bigots wouldn’t refuse a book because one of the secondary characters is in a wheelchair, I guess.

So, yeah. Most children's lit and young adult lit will be white, straight, cis, able bodied, neurotypical children or young adults with an unstated or vague religious affiliation, even if it gets absurdly, massively popular. Popular enough to take risks and work outside the box. I’m looking at you, JK Rowling. Looking at you.

This fact, this lack of diversity, does not bother some people. And we are not going to argue this point in this video. We are beginning with the assertion that this situation is not ideal, and that added quality, well written diversity is a positive. And we are going to look specifically at how Rick Riordan improves in this specific aspect of his writing over time.

--

Ok, so, Uncle Rick is a San Antonio, Texas native, and as someone who was also born and raised in central Texas, I love this fact. He went to MY alma mater, UT, and became a middle school teacher. We’re basically the same person.

Now, Percy Jackson isn’t actually his first book series. In the 90s he wrote a detective series set in San Antonio called Big Red Tequila. There’s like 7 books in this series and I have read none of them. I’m sure they’re great though. How could they not be with a name like that?

Our story really begins in 2004-ish. The story goes that he was telling his son Greek myths as bedtime stories, and when he ran out of myths (or at least child friendly myths I assume), he started to make one up. He invented a story about a boy named Percy, a son of Poseidon, who goes on an adventure to return Zeus's missing lightning bolts. His son told him that he should turn it into a book, his dad had published books before after all. So, Rick did just that. He then took his rough draft to his middle school students and used their feedback to revise.

He then sold this book to Miramax Books for enough money to retire from teaching and focus on writing. God damn. Rick was living the dream here. Life goals.

So, yeah. If you’ve never read the first Percy Jackson books they are...fine. They’re ok, good even. Definitely like, children’s books. But if you like bad puns and Greek myths they are fun. I read all 5 in like...one weekend when I was in high school. I personally think the books really pick up in the third one: The Titan’s Curse, mostly because we meet my favorite character, Nico. There’s some good world building in that book, and it really feels like Rick had figured out how he wanted to end the series by that point, so the plot feels more focused. Maybe that’s just me.

So, remember how I said that Children’s lit will tend to be filled with white, straight, cis, able bodied, neurotypical children with an unstated or vague religious affiliation? Well, Percy Jackson and all his friends are...mostly, white, straight, cis, able bodied, children with an unstated or vague religious affiliation who have ADHD and Dyslexia.

Because Rick Riordan’s son has ADHD and Dyslexia, and Rick wanted these heroes to be like him. So, yeah. The diversity isn’t AMAZING here, but the intent to provide representation for minority children was present from the very beginning. And ADHD and Dyslexia are, like, super powers here, proof the children are demi-gods, are side effects of their brains and bodies being ready for amazing quests. And there’s this great diversity in the characters with ADHD and Dyslexia and how it impacts them. Annabeth is depicted as super smart and studious. You have Percy who has always struggled in school. And so on.

Now, how you feel about this representation of ADHD and Dyslexia will vary. Some people really like it, others think it isn’t very well done or plays into some iffy tropes. I think we can safely say that the intent was very positive, but your mileage may vary on the execution.

There’s also a movie adaptation of the first 2 books, and they are…..bad. Logan Lerman was 18 when he played Percy- who should be like...12? And they made Hades the bad guy? And like..Persephone? Is? In? The? Underworld? In? Summer? Which….ugh. Like, they made Grover black, which was a cool choice, an attempt to address the lack of racial diversity it seems. But still these movies are not good….maybe if you haven't read the books, you’ll like them. I don’t like them. I didn't even watch the second one honestly.

Alright, so we will look at the rest of Rick Riordan’s books in part 2 of this video. I wanted to cut it here to keep it from getting super super long. So I will see you guys over on Part 2 to finish up.

CUT --

Welcome back to my look at all of Rick Riordan’s books and how they have improved over time. We are going to jump right in where we left off at the end of Percy Jackson and the Olympians.

Ok, so after Percy Jackson, Rick Riordan started work on the Kane Chronicles. If you haven’t read the Kane Chronicles, I don’t blame you. They are kind of the forgotten half-siblings of the Percy Jackson universe, but you should read them. They are really good, and they feel like a really experimental time for Rick. Not only is this the first time we see him play with a split First Person narrator, where different chapters are from different characters’ Points of View, but he also really tackles race in these books.

Carter and Sadie are biracial, and deal with all kinds of race issues- Sadie being white passing and Carter not, the books look at how that impacts them and their experiences with others, their family, and their heritage. Plus all the Egyptian shit is really cool.

But even if you skipped this book series (seriously, go back and read them), you can see this evolution in Rick’s writing in his sequel to Percy Jackson- Heroes of Olympus. These books actually came out at the same time as the Kane Chronicles, with the first Kane Chronicles book coming out in May 2010, then the first Heroes of Olympus book coming out in October 2010, and back and forth. And it’s clear that the new skills he picked up in Red Pyramid were influential on Heroes of Olympus.

Not only do we see the return of the shifting narrator, now a Third Person Limited Point of View that follows different characters in different chapters, but where the first series was overwhelmingly white, these books seem to make a real effort to avoid that. The first two books- The Lost Hero and Son of Neptune- take place more or less concurrently and independently of each other. It’s not until the 3rd book that all the new characters meet up. But in those first 2 books, we get 5 new MAIN characters- Jason, a white boy; Piper, a Native American girl, specifically Cherokee if I remember; Leo, a Mexican American boy; Frank, a Chinese American boy; and Hazel, an African American girl. We also get Reyna, who isn’t a main character at first, but I would argue becomes one in House of Hades, and she is Puerto Rican.

And ALL of these characters and their racial identities are handled really well. Like, they are fully fleshed out and genuine characters. This doesn’t feel like shallow, lazy tokenism. Their heritage plays a part in who they are, but is not the ONLY thing about them. Piper, for example, has a father who refuses to play Native American roles in movies because he wants to avoid being stereotyped or type cast and Piper carries that struggle to connect with her heritage with her. Hazel’s experience as a black girl, and a black girl from the 1930s at that, impacted how she was treated growing up and makes up a big part of her backstory. But they aren’t solely defined by these experiences like shallow stereotypes.

It’s well done, is what I’m saying.

-

So at this point, we could say that while Rick had a good grasp on racial diversity and neuro-divergence representation, most of his characters were still straight, cis, able bodied children with an unstated or vague religious affiliation. (Seriously, did none of these kids have like..faith in a religion before?)

Now, here is a true, fun fact. On June 30th, 2013, 3 months before the release of House of Hades, I went on Tumblr and wrote an Open Letter to Rick Riordan about how he should really include LGBT+ characters in his books. He had written, like, 11 children’s books at this point, and despite my headcanons, every character had been portrayed as assumed straight and cis. So I wrote a letter about how much I liked his books, but really, could we have some LGBT+ characters, this IS Greek mythology after all. I don’t think he ever saw this letter, despite me tweeting it at him.

Among other things in this letter, I go on to list several possibilities for LGBT+ representation in his books, including, quote: maybe Nico feels an unrequited crush on Percy. A headcanon I had had since book 4, Battle of the Labyrinth.

And so, I want my moment, just to say: I. Was. Right. And I told you so.

House of Hades came out in 2013, and well, so did Nico. My favorite character came out of the closet, or, well, was outed and it was heart wrenching. The fandom kind of lost its shit over this. Anyone who had shipped Percy and Nico was throwing a party, homophobes were throwing a fit, it was very emotional. I was gloating a lot.

And let’s be clear- Nico’s sexuality in House of Hades is not...handled the best. It’s better than nothing certainly, and it’s better than Word of God reveals post publication. Rowling. But, by itself, it’s...well...single sad cis gay boy pines over unrequited straight crush hits some stereotypes. None of this is malicious, but it by itself is only so-so representation.

But Rick wasn’t done there, because we still had one more book- Blood of Olympus. Nico gets a super cute boyfriend in the form of Will Solace, and gets some closure with Percy. Now, your mileage may vary with that particular scene. Nico smugly telling Percy he “isn't his type” feels, well, a little out of character and, I dunno, corny. But it’s nice to see Nico get this happy relationship with Will, and I’ll forgive Rick for any stumbles in the exact execution to avert that sad-single-gay trope.

- -

Ok. So, now at last, we get to the 2 series that are still in publication: Gods of Asgard and Trials of Apollo. These two series are publishing concurrently, and because the Gods of Asgard started publishing first, let’s talk about it first.

I love Gods of Asgard. Truly. These might be my favorite of Riordan’s books. Part of that might just be that after 10 Greek and Roman books, a focus on Norse is refreshing, but I just love it. I love Magnus, I love the Annabeth cameos, I love Sam. Ok, so, the first Gods of Asgard book: Sword of Summer hits two important notes when it comes to minority representation.

Hearth is deaf and mute and uses sign language. This is the first time we’ve had a main character with a clear disability other than ADHD and Dyslexia. Which is really cool. And the consistent use of sign language throughout is neat.

Our second is Sam. Who is Muslim and wears a hijab. Like, truly, how many stories do you know about a hijabi Muslim valkyrie girl kicking all the ass?

Book 2, Hammer of Thor…well. Remember when I said Nico is my favorite character? Nico might have to fight Alex Fierro for my heart. Alex Fierro. A trans gender fluid child of Loki. I love Alex. Some people cried SJW Gay-Agenda bullshit over Alex like, being trans and gender fluid and, actually mentioning it more than once, but those people are unhappy assholes and I ignore them. I like Alex. Alex is an interesting, complicated character and I can’t wait for the next book.

Also, am I the only one who thinks Magnus and Alex are being set up for some romance? Just wishful thinking? Feels like romance. I ship it. I’ve been right before.

So ok, so we now have racial diversity, representation of multiple kinds of disabilities, a gay character, a gender fluid trans character, and a Muslim character.

Let’s talk about Trials of Apollo.

These books are really fun. If for no other reason than Apollo might actually be the most loud, entertaining narrator we’ve had yet. He’s funny, he’s an asshole. He’s also very loudly and clearly bisexual. Which, duh. How else would you even write Apollo if you have any understanding of Greek mythology? It’s mentioned a couple of times in the first book, and then even more in the second, where his prior relationships have plot relevance.

The second book also introduced us to Jo and Emmie, a biracial lesbian couple who used to be hunters of Artemis who are now raising a daughter together.

And this is kind of the joy of really GOOD diverse representation. Like, Apollo has faced hardship because of his relationships, with both men and women, but his sexuality itself isn’t a problem with him. Alex is very secure with who they are, but has clearly faced a lot of transphobia. Nico was very closeted and seemed to have a lot of pain tied up in his sexuality and is only just now healing from that with Will. Jo and Emmie clearly faced issues with their relationship, having to leave the hunters, but have built a new life together. We get this great array of experiences, rather than just one prevailing narrative.

I love it, and we’ve come so far from that first bedtime story about a boy trying to find some stolen lightning bolts.

So, what’s in the future for Rick Riordan? Well, he hasn’t announced any new book series for after Gods of Asgard and Trials of Apollo wrap up. However, we do know that he is starting his own Publishing Imprint with Disney Hyperion. Rick will only work as a curator it seems, focusing on having minority authors write fantasy/mythology based books from their native cultures. There are 3 books signed right now:

Jennifer Cervantes’s Storm Runner, which is about a boy having to save the world from a Mayan prophecy.

Roshani Chokshi’s Aru Shah and the End of Time, about a 12-year-old Indian-American girl who unwittingly frees a demon intent on awakening the God of Destruction.

And Yoon Ha Lee’s Dragon Pearl, about a teenage fox spirit on a space colony.

All of which sound AMAZING and I will preorder as soon as Amazon lets me.

Look, Rick Riordan is not a perfect person or a perfect writer. Some people take issue with him because he has said some rather insulting things about the small number of people who still worship the Greek gods. That he took these stories and was dismissive of the people who still value them religiously. Now, the majority of those comments seem to come from blog posts back in 2006, and he did have a brief apology for offending Hellenists on his Facebook back in February. And one would hope that this interest in letting minority authors tell stories from their own cultures in the future is evidence that he has learned and grown since then.

And not everyone will like Rick Riordan’s books no matter what. They are for kids. They are corny and have bad puns and sometimes meander or forget about important characters for long stretches of time. Sometimes the ideas he has are better than the execution. It happens.

But when I look at his books as a whole, I see a middle school teacher from San Antonio who started with a fun idea and never stopped growing as an author with a dedication to minority representation in his novels. And I certainly appreciate that, and look forward to more of his work for as long as he decides to produce it.


Digital Immigrants Helping to Build a Digital Nation

A rather silly fixation has developed in our 'digital' world today: that there is some kind of divide between those who are 'digital natives' and those who are not.

Worse, some feel that there is no higher compliment to pay someone than to describe them as a digital native ... and that it's perfectly acceptable to dismiss 'non-natives' as somehow outré.

With the possible exception of Nicolas Sarkozy, that language is no longer acceptable in the real world of building real nations. And a good thing, too.

Immigrant Nation

Little Italy...

Rocco Rossi, a former Toronto mayoral candidate and former President of the Liberal Party of Canada, recently told a touching story about his uncle's difficult beginnings as an 18-year-old Italian immigrant to Canada in 1951, landing at Pier 21 in Halifax, Nova Scotia. Later, after the uncle had broken this fresh ground, others from his family and the poor farming community he had emigrated from made the journey across. Today, 350 people from that community call Greater Toronto home.

What struck me about the story wasn't how different we all are, but rather the similarities. Outside of First Nations and (now) a fairly small percentage of those descended from people who arrived early, in the 16th and 17th centuries, the majority of Canadians are first-, second-, and third-generation immigrants. And many have parents or grandparents who can relate stories of what life was like trying to get established 'off the boat.'

Yet we often deny these similarities. Established immigrant groups aren't as welcoming as they could be of the newer ones. The newest ones can't understand the staid culture of the established group. And the established, but still relatively new, groups like the Italians, as immortalized by novelist Nino Ricci, want to convey a Goldilocksian quality of being not too fresh and not too stale, not enjoying the full benefits of the establishment but not destitute anymore.

Everyone is jockeying for position - and lobbing subtle putdowns at groups that arrived on a different boat. When in fact, we're all in the same boat.

The Digital Nation

That got me thinking about the "Digital Nation."

In Digital Nation, the chronology is reversed: the newer generations are the 'natives,' the older generations supposedly the awkward, uncertain 'immigrants.'

The Digital Nation...

In this version of reality, there is a surprising amount of posturing around who is qualified to work and thrive in the sector. If you didn't just get here, maybe you're too set in your ways to really 'get it.' (As Dilbert once aptly put it, the older tech worker could be replaced by the younger tech worker, who could in turn be replaced by a 'fetus.')

Or on the other hand, if you arrive too late, maybe all the great get-rich-quick opportunities (Microsoft millionaires, Google employee #76, got a great gig at Facebook three years ago) will be gone!

All of it is nonsense…

The Digital Generation Gap

At best, this division serves to remind us of how younger people think, or how to shed old baggage from our business practices in order to gauge the scale, networks, speed, and power of the information revolution.

Crossing the divide...

At its worst, it assigns too much credit to anyone who merely shows comfort with using their new tablet, and who can string a few buzzwords together from Silicon Valley startup culture.

And this underestimates just how strong the digital divide still is, even among young, educated people under 25. There are those who use Facebook and slang, and then there are those who can master advanced programming languages (and follow formal academic research, at least for a while) to solve hard problems. The vast majority of 'digital natives' are passive followers of the creations and innovations led by a driven, accomplished few.

It's unsurprising to this Gen-Xer that it's often baby boomers who seem obsessed with digital natives, and who even want to be seen as digital natives. These are the ones who write books on how to understand those who are born digital, or tweet constantly about this app or that.

How do they ever get anything done? Some of it's downright weird, when you look closely.

Faking It Till You Make It

Most accomplished people can take advantage of digital technology and digital culture - regardless of whether they are in the right age bracket or directly able to code in the latest languages. And they do so in interesting ways.

Most notably, if they fake-it-till-they-make-it hard enough, they're left with legions of followers who casually throw around mentions of their use of those platforms as a way of faking-it-even-harder. Perhaps a few examples will help clarify.

Take Matt Drudge, publisher of a page of links I don't know what to make of, called the Drudge Report:

Drudge has often been lauded as a pioneer of the fast-moving digital politics press. Judging only by his age, he could have had a Commodore PET in school, and he can probably do a mean pantomime of a rotary dial phone. Drudge's dad is a digital pioneer, having started an online research shop called refdesk. Drudge is an unlikely hero, given that the design of his site was in fact ripped off from his dad's.

But then again, Techmeme's aggregation style was ripped off from Drudge. It seems our heroes get unlikelier and unlikelier with each passing generation.

And what about Arianna Huffington? The queen of the vaguely progressive soft-scraper empire known as the Huffington Post is a very well-off baby boomer. I'm sure she goes to great effort to look casual when she uses her smartphone (without reading glasses).

Then there's Nick Denton, founder of Gawker Media. He's a wonderfully creative entrepreneur and an always accurate commentator on the state of our industry. Denton is an authority on breathless, shameless, bawdy blogging.

Today's gossip is tomorrow's news

But did you know that Denton left his job as a financial journalist to launch what was essentially a kind of enterprise search engine technology? A news aggregator called Moreover, one of a pack of early services intended to turn the archaic practice of 'news clipping services' on its head. Denton acts all casual, but it takes a lot of deep understanding of the information revolution to build a successful startup that changes how business works.

New York Mayor Michael Bloomberg started up something not so dissimilar to Denton's company; unlike the mildly wealthy Denton, Bloomberg got very rich off it. Bloomberg is still a global information empire, majority-owned by Michael Bloomberg, despite being pre-Web in its genesis. It was started in 1981, around the same time Microsoft created MS-DOS.

A fellow named Alan Meckler was part of a group who started up conferences with names like Internet World back in the early 1990s. He later went on to own companies with names like Internet.com. Before all that, he was involved with 'information revolutions' on media like CD-ROMs. He is around 70 years old.

Google is stocked with young, smart coders. They're also filled with experienced Ph.D.s, guided by many Silicon Valley elders, and have often had their butts kicked by a seasoned business mentor named Bill Campbell, who not only holds a Master's degree, but was coach of the Columbia University football team in the 1970s, VP of Marketing at Apple, and more recently, CEO and Chairman of Intuit. For all of these reasons, Googlers dubbed him 'Coach.'

Sheryl Sandberg, also a key figure in the early days of Google's operations and the moral conscience of its advertising program, is now COO of Facebook. She came to Google with a background in consulting at McKinsey, and as a high-level official in the Treasury Department under the Clinton Administration. Her role at Facebook has been so crucial to the company's survival and profitability that her total (mostly stock-based) compensation (so far) is valued at more than $1B.

Turning to non-media companies.

Amazon.com, led by the irrepressible Jeff Bezos, is today an $83 billion company. Bezos started Amazon in 1994. He is a true digital pioneer and visionary. Yet he is not a 'digital native' by today's definition, nor was he seen as a particularly accomplished techie.

Like Steve Jobs, Bezos learned a lot on the job, though he knew enough in 1994 to write job descriptions for accomplished coders. He came over from Wall Street with a vision and executed it with an outlandish degree of attention to detail. Amazon.com is so influential that its ease of use became a shadow under which all ecommerce vendors lived for years.

Groupon is a 'laughable' creation by a cabal of tech-agnostic investors who happen to have made quite a dent in the market. It's a digital company, sort of. Critics of the company seem to feel that by slamming the company for 'not being really digital,' they can somehow talk down its valuation. Good luck with that! Current valuation: $11.4 billion. I'm not a fan of Groupon myself, but it's very real.

How many other examples would you like?

In cloud computing and SaaS, middle-aged to older conglomerates like IBM, Xerox, Oracle, etc. make up a substantial part of the value of the US stock exchanges. Even Salesforce.com, the 'startup,' is too mature to be cool to the cool kids. But it's worth $20.7 billion. Its 47-year-old founder, Marc Benioff, cut his teeth in business at Apple and Oracle after founding a software company in high school, selling games for machines like the Atari.

Digital Elders

'It's the wood that should fear your hand, not the other way around.' -Pai Mei

What's my conclusion? Well, I don't mean to deny the obvious: that it can be a great advantage to be born into the digital revolution. Many new and important services will be started by those who come to the table with many of the right prerequisites in terms of knowledge and disposition.

But 'digital immigrants' like Jeff Bezos, Michael Bloomberg, Marc Benioff, and Arianna Huffington bring something special to the table as well:

They build ventures and solve problems self-consciously rather than intuitively. Perhaps it's 'you say tomato, and I say tomahto,' but those who can bring conscious, organized effort to a field often reach heights that the mere virtuoso cannot.

More profoundly, they understand what's truly powerful and game-changing about a trend or technology, and can evangelize that change to those who aren't sure.

They may be willing to work harder, be more obsessed, and stay the course seemingly forever on a long march to boring greatness.

And one more thing. Because they're not caught up in the 'social scene' of 'being digital,' the 'digital immigrants' are apt to tell the truth.

Alan Meckler, who somehow does not have a Wikipedia entry (though his company does), posted simply and directly: 'Wikipedia is a Farce' and 'Wikipedia is Dishonest.' And what did he have to lose? Lunch with Jimmy Wales?

Digital Nation Building

There is going to be much value and an extraordinary amount of fresh cultural output emerging from Digital Nation in the coming years. But Digital Nation needs - desperately needs - the balance, formal academic backgrounds, structure, greed, fears, planning experience, bridging skills, and irreverence of its 'digital immigrants.' ('Immigrants' who represent, paradoxically, the older generations of innovators and economic go-getters from worlds far, far away - namely, previous decades like the 1990s, 1980s, and in the case of IBM, long before that.)

Were you aware that good ol' Microsoft (MSFT, at $273B) is still valued higher than Google to this day? Crazy, right? Sitting comfortably in the list of top 10 companies by market capitalization in the Standard & Poor's 500: IBM, at $238B. Google's holding its own at $203B. Facebook, after it goes public, is expected to be valued at $100B. We'll see if they have what it takes to keep up. It's really too early to say.

Or to sum it up most briefly: Zuckerberg, Schmuckerberg.

#business#business owner#marketing#marketing agency#search engine marketing#social media#social media management#social media news#social media strategy


This Much I Know: Byron Reese on Conscious Computers and the Future of Humanity

Recently GigaOm publisher and CEO, Byron Reese, sat down for a chat with Seedcamp’s Carlos Espinal on their podcast ‘This Much I Know.’ It’s an illuminating 80-minute conversation about the future of technology, the future of humanity, Star Trek, and much, much more.

You can listen to the podcast at Seedcamp or Soundcloud, or read the full transcript here.

Carlos Espinal: Hi everyone, welcome to ‘This Much I Know,’ the Seedcamp podcast with me, your host Carlos Espinal bringing you the inside story from founders, investors, and leading tech voices. Tune in to hear from the people who built businesses and products scaled globally, failed fantastically, and learned massively. Welcome everyone! On today’s podcast we have Byron Reese, the author of a new book called The Fourth Age: Smart Robots, Conscious Computers and the Future of Humanity. Not only is Byron an author, he’s also the CEO of publisher GigaOm, and he’s also been a founder of several high-tech companies, but I won’t steal his thunder by saying every great thing he’s done. I want to hear from the man himself. So welcome, Byron.

Byron Reese: Thank you so much for having me. I’m so glad to be here.

Excellent. Well, I think I mentioned this before: one of the key things that we like to do in this podcast is get to the origins of the person; in this case, the origins of the author. Where did you start your career and what did you study in college?

I grew up on a farm in east Texas, a small farm. And when I left high school I went to Rice University, which is in Houston. And I studied Economics and Business, a pretty standard general thing to study. When I graduated, I realized that it seemed to me that like every generation had… something that was ‘it’ at that time, the Zeitgeist of that time, and I knew I wanted to get into technology. I’d always been a tinkerer, I built my first computer, blah, blah, blah, all of that normal kind of nerdy stuff that I did.

But I knew I wanted to get into technology. So, I ended up moving out to the Bay Area and that was in the early 90s, and I worked for a technology company and that one was successful, and we sold it and it was good. And I worked for another technology company, got an idea and spun out a company and raised the financing for that. And we sold that company. And then I started another one and after 7 hard years, we sold that one to a company and it went public and so forth. So, from my mother’s perspective, I can’t seem to hold a job; but from another view, it’s kind of like the thing of our time instead. We’re in this industry that changes so rapidly. There are more opportunities that always come along and I find that whole feeling intoxicating.

That’s great. That’s a very illustrious career with that many companies having been built and sold. And now you’re running GigaOm. Do you want to share a little bit for people who may not be as familiar with GigaOm and what it is and what you do?

Certainly. And I hasten to add that I’ve been fortunate that I’ve never had a failure in any of my companies, but they’ve always had harder times. They’ve always had these great periods of like, ‘Boy, I don’t know how we’re going to pull this through,’ and they always end up [okay]. I think tenacity is a great trait in the startup world, because they’re all very hard. And I don’t feel like I figured it all out or anything. Every one is a struggle.

GigaOm is a technology research company. So, if you’re familiar with companies like Forrester or Gartner or those kinds of companies, what we are is a company that tries to help enterprises, help businesses deal with all of the rapidly changing technology that happens. So, you can imagine if you’re a CIO of a large company and there are so many technologies, and it all moves so quickly and how does anybody keep up with all of that? And so, what we have are a bunch of analysts who are each subject matter experts in some area, and we produce reports that try to orient somebody in this world we’re in, and say ‘These kinds of solutions work here, and these work there’ and so forth.

And that’s GigaOm’s mission. It’s a big, big challenge, because you can never rest. Big new companies I find almost every day that I’ve never even heard of and I think, ‘How did I miss this?’ and you have to dive into that, and so it’s a relentless, nonstop effort to stay current on these technologies.

On that note, one of the things that describes you on your LinkedIn page is the word ‘futurist.’ Do you want to walk us through what that means in the context of a label and how does the futurist really look at industries and how they change?

Well, it’s a lower case ‘f’ futurist, so anybody who seriously thinks about how the future might unfold, is to one degree or another, a futurist. I think what makes it into a discipline is to try to understand how change itself happens, how does technology drive changes and to do that, you almost by definition, have to be a historian as well. And so, I think to be a futurist is to be deliberate and reflective on how it is that we came from where we were, in savagery and low tech and all of that, to this world we are in today and can you in fact look forward.

The interesting thing about the future is it always progresses very neatly and linearly until it doesn’t, until something comes along so profound that it changes it. And that’s why you hear all of these things about one prediction in the 19th Century was that, by some year in the future, London would be unnavigable because of all the horse manure or the number of horses that would be needed to support the population, and that maybe would have happened, except you had the car, and like that. So, everything’s a straight-line, until one day it isn’t. And I think the challenge of the futurist is to figure out ‘When does it [move in] a line and when is it a hockey stick?’

So, on that definition of line versus hockey stick, your background as having been CEO of various companies, a couple of which were media centric, what is it that drew you to artificial intelligence specifically to futurize on?

Well, that is a fantastic question. Artificial intelligence is first of all, a technology that people widely differ on its impact, and that’s usually like a marker that something may be going on there. There are people who think it’s just oversold hype. It’s just data mining, big data renamed. It’s just the tool for raising money better. Then there are people who say this is going to be the end of humanity, as we know it. And philosophically the idea that a machine can think, maybe, is a fantastically interesting one, because we know that when you can teach a machine to do something, you can usually double and double and double and double and double its ability to do that over time. And if you could ever get it to reason, and then it could double and double and double and double, well that could potentially be very interesting.

Humans only evolve, computers are able to evolve kind of at the speed of light, they get better and humans evolve at the speed of life. It takes generations. And so, if a machine can think, a question famously posed by Alan Turing, if a machine could think then that could potentially be a game changer. Likewise, I have a similar fascination for robots because it’s a machine that can act, that can move and can interact physically in the world. And I got to thinking what would happen, what is it a human in a world where machines can think better and act better, then what are we? What is uniquely human at that point?

And so, when you start asking those kinds of questions about a technology, that gets very interesting. You can take something like air conditioning and you can say, wow, air conditioning. Think of the impact that had. It meant that in the evenings people wouldn’t… in warm areas, people don’t go out on their front porch anymore. They close the house up and air condition it, and therefore they have less interaction with their neighbors. And you can take some technology as simple as that and say that had all these ripples throughout the world.

The discovery of the New World ended the Italian Renaissance effectively, because it changed the focus of Europe to a whole different direction. So, when those sorts of things had those kinds of ripples through history, you can only imagine what if the machine could think, like that’s a big deal. Twenty-five years ago, we made the first browser, the Mosaic browser, and if you had an enormous amount of foresight and somebody said to you, in 25 years, 2 billion people are going to be using this, what do you think’s going to happen?

If you had an enormous amount of foresight, you might’ve said, well, the Yellow Pages are going to have it rough and the newspapers are, and travel agents are, and stock brokers are going to have a hard time, and you would have been right about everything, but nobody would have guessed there would be Google, or eBay, or Etsy, or Airbnb, or Amazon, or $25 trillion worth of a million new companies. And all that was, was computers being able to talk to each other. Imagine if they could think. That is a big question.

You’re right and I think that there is…I was joking and I said ‘Tinder’ in the background just because that’s a social transformation. Not even like a utility, but rather the social expectation of where certain things happen that was brought about by that. So, you’re right… and we’re going to get into some of those [new platforms] as we review your book. In order to do that, let’s go through the table of contents. So, for those of you that don’t have the book yet, because hopefully you will after this chat, the book is broken up into five parts and in some ways these parts are arguably chronological in their stage of development.

The first one I would label as the historical, and it’s broken out into the four ages that we’ve had as humans, the first age being language and fire, the second one being agriculture and cities, the third one being writing and wheels, and the fourth one being the one that we’re currently in, which is robots and AI. And we’re left with three questions, which are: what is the composition of the universe, what are we, and what is your self? And those are big, deep philosophical ones that will manifest themselves in the book a little bit later as we get into consciousness.

Part two of the book is about narrow AI and robots. Arguably I would say this is where we are today, and Seedcamp as an investor in AI companies has broadly invested in narrow AI through different companies. And this is I think the cutting edge of AI, as far as we understand it. Part three in the book covers artificial general intelligence, which is everything we’ve always wanted to see, and which science fiction represents quite well, everything from that movie AI, with the little robot boy, to Bicentennial Man with Robin Williams, and sort of the ethical implications of that.

Then part four of the book is computer consciousness, which is a huge debate, because as Byron articulates in the book, there’s a whole debate on what consciousness is, and there’s a distinction between a monist and a dualist and how they experience consciousness and how they define it. And hopefully Byron will walk us through that in more detail. And lastly, the road from here is the future, as far as we can see it in the futurist portion of the book. I mean, parts three, four and five are all futurist portions of the book, but this one is where I think, Byron, you go to the ‘nth’ degree possible with a few exceptions. So maybe we can kick off with your commentary on why you have broken up the book into these five parts.

Well you’re right that they’re chronological, and you may have noticed each one opens with what you could call a parable, and the parables themselves are chronological as well. The first one is about Prometheus and it’s about technology, and about how the technology changed and all the rest. And like you said, that’s where you want to kind of lay the groundwork of the last 100,000 years and that’s why it’s named something like ‘the road to here,’ it’s like how we got to where we are today.

And then I think there are three big questions that everywhere I go I hear one variant of them or another. The first one is around narrow AI and like you said, it’s a real technology that’s going to impact us, what’s it going to do with jobs, what’s this going to do in warfare, what will it do with income? All of these things we are certainly going to deal with. And then we’re unfortunate with the term ‘artificial intelligence,’ because it can mean many different things. It can be narrow AI, it can be a Nest thermostat that can adjust the temperature, but it can also be Commander Data of Star Trek. It can be C-3PO out of Star Wars. It can be something as versatile as a human, and unfortunately those two things share the same name, but they’re different technologies, so it has to kind of be drawn out on its own, and to say, “Is this very different thing that shares the same name likely? Possible? What are its implications and whatnot?”

Interestingly, the people who believe we’re going to build [an AGI] vary immensely on when: some say as soon as five years, and some say as long away as five hundred. And it’s very telling that these people have such widely differing viewpoints on when we’ll get it. And then to people who believe we’re going to build one, the question then becomes, ‘well is it alive? Can it feel pain? Does it experience the world? And therefore, by that basis does it have rights?’ And if it does, does that mean you can no longer order it to plunge your toilet when it gets stopped up, because all you’ve made is a sentient being that you can control, and is that possible?

And why is it that we don’t even know this? The only real thing any of us know is our own consciousness and we don’t even know how that comes about. And then finally, the book starts 100,000 years ago, so I wanted to look 100,000 years out or something like that. I wanted to start thinking about, no matter how these other issues shake out, what is the long trajectory of the human race? Like how did we get here and what does that tell us about where we’re going? Is human history a story of things getting better or things getting worse, and how do they get better or worse and all of the rest. So that was a structure that I made for the book before I wrote a single word.

Yeah, and it makes sense. Maybe for the sake of not stealing the thunder of those that want to read it, we’ll skip a few of those, but before we go straight into questions about the book itself, maybe you can explain who you want this book to be read by. Who is the customer?

There are two customers for the book. The first is people who are in the orbit of technology one way or the other, like it’s their job, or their day to day, and these questions are things they deal with and think about constantly. The value of the book, the value prop of the book is that it never actually tells you what I think on any of these issues. Now, let me clarify that ever so slightly because the book isn’t just another guy with another opinion telling you what I think is going to happen. That isn’t what I was writing it for at all.

What I was really intrigued by is how people have so many different views on what’s going to happen. Like with the jobs question, which I’m sure we’ll come to. Are we going to have universal unemployment or are we going to have too few humans? These are very different outcomes, all predicted by very technically minded, informed people. So, what I’ve written or tried to write is a guidebook that says I will help you get to the bottom of all the assumptions underlying these opinions and do so in a way that you can take your own values, your own beliefs, and project them onto these issues and have a lot of clarity. So, it’s a book about how to get organized and understand why the debate exists about these things.

And then the second group are people who, they just see headlines every now and then where Elon Musk says, “Hey, I hope we’re not just the boot loaders for the AI, but it seems to be the case,” or “There’s very little chance we’re going to survive this.” And Stephen Hawking would say, “This may be the last invention we’re permitted to make.” Bill Gates says he’s worried about AI as well. And the people who see these headlines, they’re bound to think, “Wow, if Bill Gates and Elon Musk and Stephen Hawking are worried about this, then I guess I should be worried as well.” Just on the basis of that, there’s a lot of fear and angst about these technologies.

The book actually isn’t about technology. It’s about what you believe and what that means for your beliefs about technology. And so, I think after reading the book, you may still be afraid of AI, you may not, but you will be able to say, ‘I know why Elon Musk, or whoever, thinks what they think. It isn’t that they know something I don’t know, they don’t have some special knowledge I don’t have, it’s that they believe something. They believe something very specific about what people are, what the brain is. They have a certain view of the world as completely mechanistic and all these other things.’ You may agree with them, you may not, but I tried to get at all of the assumptions that live underneath those headlines you see. And so why would Stephen Hawking say that, why would he? Well, there are certain assumptions that you would have to believe to come to that same conclusion.

Do you believe that’s the main reason that very intelligent people disagree with respect to how optimistic they are about what artificial intelligence will do? You mentioned Elon Musk who is pretty pessimistic about what AI might do, whereas there are others like Mark Zuckerberg from Facebook, who is pretty optimistic, comparatively speaking. Do you think it’s this different account of what we are that’s explaining the difference?

Absolutely. The basic rules that govern the universe and what our self is, what is that voice you hear in your head?

The three big questions.

Exactly. I think the answers to all these questions boil down to those three questions, which as I pointed out, are very old questions. They go back as far as we have writing, and presumably therefore they go back before that, way beyond that.

So we’ll try to answer some of those questions and maybe I can prod you. I know that you’ve mentioned in the past that you’re not necessarily expressing your specific views, you’re just laying out the groundwork for people to have a debate, but maybe we can tease some of your opinions.

I make no effort to hide them. I have beliefs about all those questions as well, and I’m happy to share them, but the reason they don’t have a place in the book is: it doesn’t matter whether I think I’m a machine or not. Who cares whether I think I’m a machine? The reader already has an opinion of whether a human being is a machine. The fact that I’m just one more person who says ‘yay’ or ‘nay,’ that doesn’t have any bearing on the book.

True. Although, in all fairness, you are a highly qualified person to give an opinion.

I know, but to your point, if Elon Musk says one thing and Mark Zuckerberg says another, and they’re diametrically opposed, they are both eminently qualified to have an opinion and so these people who are eminently qualified to have opinions have no consensus, and that means something.

That does mean something. So, one thing I would like to comment on about the general spirit of your book is that I generally felt like the book was built from a position of optimism. Even towards the very end of the book, towards the 100,000 years in the future, there was always this underlying tone of, we will be better off because of this entire revolution, no matter how it plays out versus not. And I think that maybe I can tease out of you the fact that you are telegraphing your view on ‘what are we?’ Effectively, are we a benevolent race in a benevolent existence, or are we something that’s more destructive in nature? So, I don’t know if you would agree with that statement about the spirit of the book or whether…

Absolutely. I am unequivocally, undeniably optimistic about the future, for a very simple reason, which is, there was a time in the past, maybe 70,000 years ago, that humans were down to something like maybe 1,000 breeding pairs. We were an endangered species and we were one epidemic, one famine away from total annihilation and somehow, we got past that. And then 10,000 years ago, we got agriculture and we learned to regularly produce food, but it took us 90 percent of our people for 10,000 years to make our food.

But then we learned a trick and the trick is technology, because what technology does is it multiplies what you are able to do. And what we saw is that all of a sudden, it didn’t take 90 percent. It took 80 percent, 70, 60, all the way down, in the West, to 2 percent. And furthermore, we learned all of these other tricks we could do with technology. It’s almost magic that what it does is it multiplies human ability. And we know of no upward limit of what technology can do and therefore, there is no end to how it can multiply what we can do.

And so, one has to ask the question, “Are we on balance going to use that for good or ill?” And the answer obviously is for good. I know maybe it doesn’t seem obvious if you caught the news this morning, but the simple fact of the matter is by any standard you choose today, life is better than it was in the past, by that same standard anywhere in the world. And so, we have an unending story of 10,000 years of human progress.

And what has marred humanity for the longest time is the concept of scarcity. There was never enough good stuff for everybody, not enough food, not enough medicine, not enough education, not enough leisure, and technology lets us overcome scarcity. And so, I think if you keep that at the core, that on balance, there have been more people who wanted to build than destroy, we know that, because we have been building for 10,000 years. On balance, on net, we use technology for good, always, without fail.