#and if you clicked through to the source website you WOULD see that same list... under the heading ''do NOT do the following''


Text

we never should have let programmers (or programmers' bosses more likely) get away with calling AI fuck-ups "hallucinations". that makes it sound like the poor innocent machine is sick, oh no, give him another chance, it's not his fault.

but in reality the program is wrong. it has given you the wrong answer because it is incorrect and needs more work. it's not "the definitely real and smart computer brain made a mistake", it's the people behind the AI abdicating responsibility.

#i am pissed about the google ai summary shit#they were already having problems with summaries#u ever tried googling what to do if someone is having a seizure???#the old classic style summary would show you a list of actions saying ''do these things''#and if you clicked through to the source website you WOULD see that same list... under the heading ''do NOT do the following''#and they really thought automating that process further was a good idea huh????

15 notes


Text

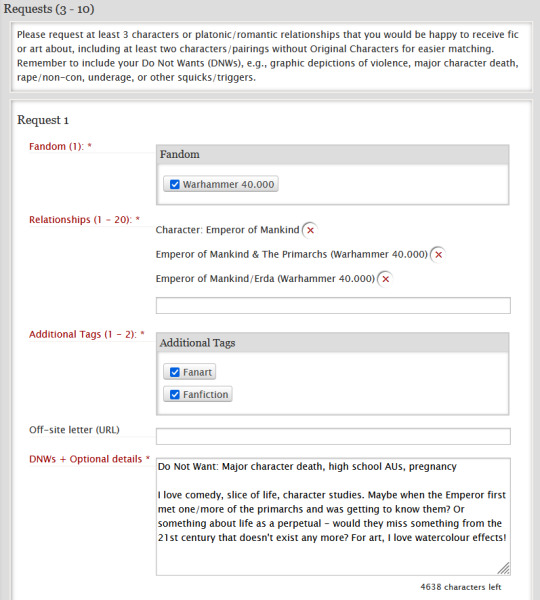

Sign-up is open!

Sign-up for the WH40K Summer Fest 2024 is open now!

You need to request and offer at least 3 characters/relationships to sign up; you are welcome to request/offer more. You can choose to create and receive either fanfiction, fanart, or both.

Helpful links:

Collection profile with full rules/info.

Request Summary shows other participants' requests.

The sign-up deadline is Sunday, June 16, 11:59am CET/5:59am EDT (countdown).

Signing up: Important info and step-by-step guide 👇

You must be 18+ and have an AO3 account to participate in the exchange. If you do not have an account, you can request an invitation to join the Archive here.

Note: In case the mod team needs to reach out to you, AO3 shows us the e-mail address you use to log in. If you end up unmatchable or if your creator sends us a question to forward to you, we will use this e-mail address. Be sure to be able to access it, check it regularly while the exchange is running—and, if your legal name is in it, to change it if you don’t want the mod team to see it! All e-mails from the mod team will be sent from [email protected] using the name Roboute Guilliman (he handles our paperwork) or through AO3.

How to sign up

Click on 'Sign Up'/'Sign-up Form' on the exchange's profile page (or click here) to fill out your requests and offers.

You must submit at least 3 requests (at least one per box) for characters and/or relationships that you would like to receive fanfiction and/or fanart about. You can add additional request boxes, up to 10.

For each request box, choose 'Warhammer 40.000' as the fandom.

Add 1-20 characters or relationships from the exchange tag set. You can search for nominated characters/relationships using the drop-down menu. We allow up to 20 character/relationship tags per request, but please be mindful of the person receiving your request and try not to go overboard. You have to fill out Request 1, 2 and 3 with at least one character/relationship in each; if possible, group together tags that share the same character(s) or canon source, DNWs, or other characteristics into a single request.

Choose whether you'd like to receive only fanfiction, only fanart, or both. You can choose different media(s) for each request.

Linking to a 'dear creator' letter on an external website, i.e., a tumblr blog post, Google Doc, Dreamwidth page, etc. going into further details about your preferences, is optional. The person assigned to you is not obligated to read it, but may find it helpful for inspiration. Please make sure your page is publicly available; some tumblr links can only be accessed by tumblr users.

Use the text box for suggestions, optional prompts and preferences. Keep in mind that we have all sorts of participants, so be as broad as possible to inspire others without restricting their creativity — optional details are optional and your creator is not required to stick to your suggestions, but having a general idea of whether you like fluff/action/comedy/smut, the Great Crusade/Horus Heresy/M40/M42-era, your favourite tropes, art styles, etc. is very helpful. You MUST list anything you Do Not Want (DNW) to see in a gift, such as squicks and triggers. The mods will check your gift to make sure it doesn't contain any of your DNWs, but we only check for the specific ones listed in this text box. Your listed DNWs should, as a minimum, state whether you wish to avoid any of the four specific AO3 Archive Warnings: 1) Graphic depictions of violence, 2) Major Character Death, 3) Rape/Non-con, 4) Underage. If you would be uncomfortable receiving Mature or Explicit gifts, please also mention this in your DNWs. You can have different DNWs for different requests.

If you are unsure about what to include in your DNWs or optional details, check out other people's requests for ideas.

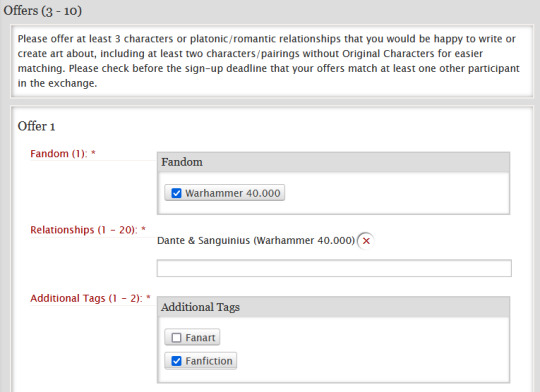

You must submit at least 3 offers (at least one per box) for characters and/or relationships that you would like to create fanfiction and/or fanart about. Your offered characters/relationships do not have to be identical to your requests.

For each offer, choose 'Warhammer 40.000' as the fandom.

Add 1-20 characters or relationships from the exchange tag set. You can search for nominated characters/relationships using the drop-down menu. You have to fill out Offer 1, 2 and 3 with at least one character/relationship in each, but it doesn't matter how you group your offers otherwise. No one will see your offers except you and the mods. You can make a total of 10 offers with up to 20 tags each = 200 characters/relationships. You are very welcome to do so, but make sure you are able and willing to create a gift if you match on any one of them! You will only be matched with one other participant and required to create a single gift regardless of how many offers you submit.

Choose whether you'd like to create only fanfiction, only fanart, or both. You'll only be required to make one gift for your assignment even if you offer both.

Please return to check before the sign-up deadline (June 16, 11:59am CET/5:59am EDT) that your offers match at least one request by another participant in the exchange.

If your offers don't match anyone else's requests by the time of the deadline, the mods will e-mail you to ask if you would like to add additional offers. If you do not respond to this e-mail within 12 hours or decline to add additional offers, your sign-up will be deleted.

Once you have filled out at least 3 requests and offers, hit 'Submit' at the bottom of the page. You have now signed up to participate in the exchange and your requests will be visible to other participants in the Request Summary.

You can edit/add to your requests and offers by clicking 'My Sign-up' on the exchange profile page. Note: After the sign-up deadline, only the mods will be able to edit your sign-up details.

"What if there is someone I don't want to be matched with?"

The best way to ensure a fun and enjoyable exchange experience is to keep an eye on the Request Summary and adjust your offers based on other participants' requests. Maybe you'll see something that inspires you to offer characters or relationships you didn't consider before — or maybe someone requests something for one of your offers that you would rather not create, in which case it's perfectly fine to edit your offers so you won't risk matching on that particular character/relationship.

If, however, there is another participant that you do not want to be matched with, please e-mail us at [email protected] before the sign-up deadline and let us know:

Your AO3 username;

the AO3 username of the participant you do not want to be matched with;

whether your Do Not Match request is for creating, receiving, or both;

what you would like us to do if that participant offers to pick up your pinch hit;

what you would like us to do if that participant creates a treat for you.

Thank you — see you in the sign-ups!

28 notes


Note

hi there! ive always loved your history posts, and was wondering if you had any insights/directions you could point me in to learn more about a specific area of queer history?

im personally most well-read on the history of gay men in britain between the labouchere amendment and ww2, so a pretty specific area. i dont know very much at all about the history of queer women, though id think its harder to get primary sources for them as the legal records wouldnt exist in the same way?

anyway, my main question is actually about cross-gender solidarity. i notice that a lot of the men i read about operated in very gender segregated spaces, which was typical for the era anyway and ofc there are plenty of spaces today where gay men want to be with other gay men exclusively. i suppose im wondering if there's any way of investigating how much queer men in the past would have felt solidarity with queer women, and how to look at whether/how those sentiments changed over time?

i realise this is a bit more of a "how to do history" question than about history proper - any insight or thoughts you'd like to share about this would also be really appreciated! and in either case, hope you're having a great week xx

First, thanks! Glad to help.

Second, the difficulty of doing premodern queer history largely or exclusively from legal documents is that a) it gives us a distorted picture of the degree of prejudice that actually existed in society, on a practical and not just theoretical level, and b) it makes it even more difficult (if not impossible) to speculate on what people "really" felt or thought, or how they personally identified. (I currently have a book chapter about John/Eleanor Rykener in the peer-review process, which touches on some of this difficulty, since our only major source in that case is also a legal record.) We are very rarely granted direct access to the actual voices or perspectives of premodern queer people, and when what we do have is filtered through a hostile framework that has an interest in minimizing that person's existence and/or social experience, it provides a more excessively or solely negative picture than was probably the case.

However, I would gently challenge your idea that it's harder to get primary sources on queer women, since the assumption is that premodern queer men are only memorialized by their (presumably punitive) encounters with the legal system, and that society cared less about female homosexuality and thus did not attempt to police it in the same way. Both ideas have some degree of truth, but not entirely and certainly not categorically, and there are many ways to access premodern queer experience and memorialization. I don't know what particular time/region you're interested in, but since you said Britain, I will once more recommend checking out the website of gay British historian Rictor Norton. He has everything, and I mean everything, you could possibly want as a resource/starting point (his specialty is 18th-20th century British LGBT history):

He has a wildly extensive list of links to subject, region, and chronological-specific LGBT history:

He has an equally comprehensive list of links sorted by region, methodology, art/music history, thematic approaches, etc:

Now, obviously I haven't combed through all of these to see if there is something that specifically addresses the question of premodern mlm/wlw solidarity, but since queer spaces have often been a lot more gender-mixed than people tend to think, there are certainly more than enough resources to get you clicking and searching.

43 notes


Note

hey! I have been meaning to get xkit for a while now and you seem to be well versed, any advice, pointers, or places where to look? the new layout is hell for my brain to navigate

hey! and yeah, i've been using xkit since at least 2014

it's been through a lot of updates and changing hands as people have stopped working on it and new people have started, but they've kept it called xkit for convenience's sake

the most recent version, the one that's still updating, is xkit rewritten, this is a fairly bare bones version in that it's solely about fixing things staff have done to the website + adding accessibility options - this extension will show up in your browser extension toolbar and can be modified from there. this doesn't have a fix yet to fix the twitter layout (i'll get to that), but its most recent update was fixing the fact that you can't click on urls to go to specific posts anymore - if you look under tweaks, and "restore links to posts in the post header" (or worded along those lines, on mobile atm), that'll fix that. and feel free to have a playaround with the other stuff it can do!

new xkit is the version that existed before that - this shows up as an additional icon in the tumblr header (or now the sidebar for the twitter layout). a lot of people will say don't get this, i honestly don't see a problem with it, but this was the xkit that existed for a long time and used to have extensions that modified the website just for fun, rather than solely to fix it. this version is no longer updating and it lost about half its extensions in the process, but the ones that remain still work fine, and if you care for something more whimsical, there's a couple fun extensions left, plus i just find the customisability of extensions easier with this

(new xkit and xkit rewritten can be used at the same time, and i have been using both since i got xkit rewritten - but if you only want one, xkit rewritten is definitely the way to go)

now, if you want to actually fix the twitter layout, xkit isn't what you need, there's two options for that

i use stylus, and this extension, which has so far been working fine for me (minus one slight issue in menu sizing that i fixed with a single line of code, i'll throw details to that in under the cut if it happens to you)

i'm also aware of dashboard-unfucker, which uses a different browser extension, i haven't used this personally, but i know some people have had better luck with it than stylus, so feel free to have a playaround and use what works for you!

(and the author does have a note added in that this version of dashboard unfucker only works with xkit rewritten, it has problems with new xkit, so if you wanna go this path keep that in mind)

but yeah, you should be able to find some combination of extensions that works for you, feel free to get back in touch if you're struggling to install anything, and good luck in your journey of modifying tumblr!

okay so the issue i was having is i think this extension was designed on a very specific screen size, and i'm assuming one smaller than my desktop screen? so it ended up making the menus up the top super wide when opened

i'm not a css expert (honestly if anyone who is knows how to make the other new icons that i don't care about disappear, and realign what's left back over to the right, would love to know how to do that), but i researched enough to add this line in

if you open stylus once this is installed, click manage, and then select tumblr.com, you should be taken to the source code and be able to edit it

around line 281 (after you install it, the preview version has more stuff added in) there's a section titled "Moves the menu so it appears over the top and not in the header", and several lines of code underneath that

somewhere in that list (doesn't matter where as long as it's in the brackets, i've put it under max height for organisation) add "max-width: calc(20vw);" in on a new line (minus the quotation marks, but make sure you keep the semicolon)

basically that just checks the width of your screen and tells the menu it can't be wider than 20% of the screen, which works for me, feel free to change that number according to what works for you, i find stylus very easy to modify, you just hit the save button (i don't think you even have to reload the page, but try doing that if it doesn't work) and it'll make the changes
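if you want to sanity-check what number to use, here's a rough sketch of the arithmetic in python (not part of the stylus theme; the function name and the example widths are made up for illustration). 1vw is 1% of the browser viewport's width, so 20vw on a 1920px-wide window caps the menu at 384px:

```python
def vw_to_px(vw: float, viewport_width_px: float) -> float:
    """Convert a CSS length in vw units to pixels for a given viewport width.

    1vw is defined as 1% of the viewport width.
    """
    return viewport_width_px * vw / 100


# Hypothetical window widths, just to compare the resulting caps.
for width in (1280, 1920, 2560):
    cap = vw_to_px(20, width)
    print(f"{width}px wide window: menu capped at {cap:.0f}px")
```

the cap follows the browser window rather than the physical screen, so a narrower window gives a narrower menu.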

4 notes


Text

Reblogging to add things I've learnt transcribing images of text (only on laptop though) and describing images:

If you right-click on an onscreen image while on Google Chrome – you can search for that image, and Google will automatically recognize the text contained in it (including other language fonts, like Thai, Chinese, Tamil, Korean, Japanese, etc.). You can select the text and copy it directly using Google, which means you can also tidy it up and format it in MS Word, or even in Tumblr directly before posting.

If you right-click somewhere on the screen that is not part of the image, you can draw a window over the part of the image (or anything else onscreen) to let Google know you want it to search for what's specifically in the window, which is useful for transcribing only the text part of an image. (To draw the window, click and hold down the mouse button, then drag to open a window over any text you want Google to search for and/or transcribe.)

Doing either of the above means you can go directly from screen to text without needing to save the image and upload it to an OCR website.

If a post has many reblogs, I often try scanning through the reblog list to see if anyone else has already described it. If there is already a described version, I'll leave a like for the person who reblogged the (undescribed) post on my dash, but then go on to reblog the described version instead. The time this saves can be put to use describing other posts. Of course, scanning the reblogs in the notes is tedious, so I'll only spend a minute or so doing that, less if time is pressing.

If you can't find an already described version of a post by scanning the reblogs, you can also search for it by pasting any transcribed and copied text into a Google search. You can then reblog any described version that Google finds. It's surprising how successful Google can be at this. (This only saves formatting time since you already have the text transcribed and copied, but to me that's the tedious bit and I try to avoid it if I can.)

Transcribing and searching for textposts this way is especially useful for tracking down Twitter posts that were put on Tumblr as images. You can then copy the text directly from the source (useful for long threads on Twitter). If I'm not up to it, I will link directly to Twitter by pasting the tweet's URL into Tumblr, whereupon the link will automatically appear as an image with ALT text (in dashboard view). This also attributes the source of the text, which is the correct thing to do anyway.

If I can find the Twitter post, I like to add the UTC time and date of the post as well, so that it is anchored in time. The time and date on a Twitter post is your local time and date, so I usually ask Google what the time and date would be in UTC.
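If you would rather not ask Google, the conversion can also be done locally. The following is only a minimal sketch using Python's standard library; the function name, the input format, and the example offset are my own assumptions, and you have to supply your current UTC offset yourself (for example -4 for US Eastern time during daylight saving).

```python
from datetime import datetime, timedelta, timezone

MONTHS = ["January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December"]


def to_utc_label(local_str: str, utc_offset_hours: float) -> str:
    """Turn a local 'YYYY-MM-DD HH:MM' timestamp into a UTC label.

    utc_offset_hours is the local zone's offset from UTC at that moment
    (an assumption supplied by the caller; daylight saving is not detected).
    """
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local = datetime.strptime(local_str, "%Y-%m-%d %H:%M").replace(tzinfo=local_tz)
    utc = local.astimezone(timezone.utc)
    hour12 = utc.hour % 12 or 12          # 0 and 12 both display as 12
    ampm = "AM" if utc.hour < 12 else "PM"
    return f"{hour12}:{utc.minute:02d}{ampm} UTC, {utc.day} {MONTHS[utc.month - 1]} {utc.year}"


# A tweet shown as 11:00 AM on 5 April 2024 to someone at UTC-4:
print(to_utc_label("2024-04-05 11:00", -4))
```

Note that the date can roll over as well as the time: a post made late in the evening at UTC-4 falls on the following day in UTC.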

When typing out a Twitter handle, I use a zero width non-joiner after the '@' so that Tumblr does not automatically turn it into a link, which sometimes happens.

When typing out timestamps and dates, it's better to keep certain blocks of text together for better legibility. For example, you don't want Tumblr to break up '3:00PM UTC', with '3:00PM' at the end of a line and 'UTC' at the beginning of the next line. Nor do you want '5 April 2024' to be broken up as '5 April' on a different line from '2024'. To prevent this, replace the original spaces with no-break spaces.

To keep hyphenated words together, use the non-breaking hyphen instead.
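The three special characters mentioned above are U+200C (zero width non-joiner), U+00A0 (no-break space), and U+2011 (non-breaking hyphen). As a rough sketch of how they could be inserted automatically before pasting text into a post, assuming Python and two helper names I made up for illustration (the handle is hypothetical too):

```python
ZWNJ = "\u200c"       # ZERO WIDTH NON-JOINER
NBSP = "\u00a0"       # NO-BREAK SPACE
NB_HYPHEN = "\u2011"  # NON-BREAKING HYPHEN


def unlinked_handle(handle: str) -> str:
    """Insert a zero width non-joiner after the first '@' in a handle."""
    return handle.replace("@", "@" + ZWNJ, 1)


def keep_together(text: str) -> str:
    """Swap spaces and hyphens for their non-breaking equivalents."""
    return text.replace(" ", NBSP).replace("-", NB_HYPHEN)


handle = unlinked_handle("@example_user")   # hypothetical handle
timestamp = keep_together("3:00PM UTC")
date = keep_together("5 April 2024")
word = keep_together("text-to-speech")
print(repr(handle), repr(timestamp), repr(date), repr(word))
```

Only run keep_together over the short blocks that must stay on one line; applying it to a whole paragraph would stop the paragraph from wrapping at all.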

I think the Internet may be divided on this, but if an image already has ALT text I prefer to leave it undescribed in the blog text. I read somewhere that folks who use a text-to-speech reader find having both ALT text and the image description in the blog text to be highly annoying, because they have to listen to essentially the same thing twice.

How to Keep Doing Descriptions (from someone who does a fuckton)

Plain text: How to Keep Doing Descriptions (from someone who does a fuckton)

This is a list aimed mostly at helping people who already write IDs; for guides on learning how to do them yourself, check my accessibility and image description tags! I write this with close to two years of experience with IDs and chronic pain :)

Get used to writing some IDs by using both your phone and your computer, if you can! I find it easier to type long-form on my laptop, so I set up videos and long comics on my phone, which I then prop up against my laptop screen so I can easily reference the post without constantly scrolling or turning my head

I will never stop plugging onlineocr.net. I use it to ID everything from six-word tags to screenshots of long posts to even comic dialogue! On that last note, convertcase.net can convert text between all-caps, lowercase, sentence case, and title case, which is super helpful

Limit the number of drafts/posts-to-be-described you save. No, seriously. I never go above 10 undescribed drafts on any of my four blogs. It doesn’t have to be that low, but this has done wonders (italics: wonders) for my productivity and willingness to write IDs. If I ever get above that limit, even if it’s two or three more, I immediately either describe the lowest-effort post or purge some, and if I can’t do that then I stop saving things to drafts no matter what. No exceptions! Sticking to this will make your life so much easier and less stressful

My pinned post has a link to a community doc of meme description templates!

Ask! For! Help! Please welcome to the stage the People’s Accessibility Server! It’s full of lovely people and organized into channels where you can request/volunteer descriptions and ask/answer questions

I make great use of voice-to-text and glide typing on my phone to save my hands some effort!

Something is always better than nothing!!! A short two-sentence or one-sentence ID is better than no ID at all. Take it easy :)

If you feel guilty about being unable to reblog amazing but undescribed art, try getting into the habit of replying to OP’s post to let them know you liked it! This makes me feel less pressured to ID absolutely everything I see

This is a sillier one, but I tag posts I describe as “described” and “described by me.” When saving to drafts, I never preemptively tag with “described by me,” since for some reason that always makes me feel extra pressure and extra stress. Consider doing something similar for yourself if that applies!

I frequently find myself looking at pieces of art which feel like they need to be considered for a bit before I can write an ID for them, and those usually get thrown into drafts, where the dread for writing a comprehensive ID just builds. Don’t do that! Instead, try just staying in the reblog field for a bit and focus on the most relevant aspects of the piece. Marinate on them for a little; don’t rush, but don’t spend more than a handful of seconds either. I find after that the art becomes way easier to describe than it initially seemed!

On that note, look for shortcuts that make IDs less taxing for you to do! For example, I only ever describe clothes in art if they’re relevant to the piece; not doing that every time saves a lot of time and energy for me personally

Building off of that, consider excusing yourself from a particular kind of ID if you want to. Give yourself a free pass for 4chan posts, or fanart by an artist who does really good but really complex comics, whatever. Let it be someone else’s responsibility and feel twice as proud about the work that you can now allot more energy to!

As always, make an effort to find and follow fellow describers! It’s always encouraging to get described posts on your dash, and I find that sometimes I’m happier to ID an undescribed post when the person who put it on my dash is a friend who tagged it with “no ID”

TL;DR: To make ID-writing less stressful and more low-effort, use different devices and software like onlineocr.net and voice-to-text, limit the amount of work you expect yourself to do, and reach out to artists and other describers!

1K notes


Text

The SIFT Method - Evaluating Resources and Misinformation - Library Guides at UChicago

S - Stop

Before you read or share an article or video, STOP! Be aware of your emotional response to the headline or information in the article. Headlines are often meant to get clicks, and will do so by causing the reader to have a strong emotional response. Before sharing, consider:

What you already know about the topic.

What you know about the source. Do you know its reputation?

Before moving forward or sharing, use the other three moves: Investigate the Source, Find Better Coverage, and Trace Claims, Quotes, and Media back to the Original Context.

I - Investigate the Source

The next step before sharing is to Investigate the Source. Take a moment to look up the author and source publishing the information.

What can you find about the author/website creators?

What is their mission?

Do they have vested interests?

Would their assessment be biased?

Do they have authority in the area?

Use lateral reading. Go beyond the 'About Us' section on the organization's website and see what other, trusted sources say about the source. You can use Google or Wikipedia to investigate the source. Hovering is another technique to learn more about who is sharing information, especially on social media platforms such as Twitter.

F - Find Better Coverage

The next step is to Find Better Coverage or other sources that may or may not support the original claim. Again, use lateral reading to see if you can find other sources corroborating the same information or disputing it. What coverage is available on the topic? Keep track of trusted news sources. Many times, fact checkers have already looked into the claims. These fact-checkers are often nonpartisan, nonprofit websites that try to increase public knowledge and understanding by fact checking claims to see if they are based on fact or if they are biased/not supported by evidence.

FactCheck.org

Snopes.com

Washington Post Fact Checker

PolitiFact

T - Trace Claims, Quotes, and Media to their Original Context

The final step is to Trace Claims, Quotes, and Media to their Original Context. When an article references a quote from an expert, or results of a research study, it is good practice to attempt to locate the original source of the information. Click through the links to follow the claims to the original source of information. Open up the original reporting sources listed in a bibliography, if present.

Was the claim, quote, or media fairly represented?

Does the extracted information support the original claims in the research?

Is information being cherry-picked to support an agenda or a bias?

Is information being taken out of context?

Remember, headlines, blog posts, or tweets may sensationalize facts to get more attention or clicks. Re-reporting may omit, misinterpret, or select certain facts to support biased claims. If the claim is taken from a source who took it from another source, important facts and contextual information can be left out. Make sure to read the claims in the original context in which they were presented. When in doubt, contact an expert, like a librarian!

0 notes

Text

Digital Marketing for Small Businesses in the UK: Budget-Friendly Strategies to Boost Growth

Small businesses have limited resources, but they can still market effectively by watching the budget closely. Here are actionable tips for maximizing reach, engagement, and conversions in the UK market.

1. Use Local SEO to Attract Nearby Customers

Optimize Your Google Business Profile: Sign up for a Google Business Profile and optimize it. Add accurate information, your operating hours, and photographs, and invite customers to leave reviews.

Use Geo-Specific Keywords: Target keywords such as "best [product/service] in [city/town]" to attract local searches. Tools like Google Keyword Planner and Ubersuggest can help you find them.

List on UK-Based Directories: Listing your business on sites like Yell, Thomson Local, and 192.com, for free or at very low cost, enhances local SEO.
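As a small illustration of the "best [product/service] in [city/town]" keyword pattern, the sketch below (in Python, with a made-up function name and example services and towns) expands a list of services and places into candidate phrases that could then be checked in a keyword research tool. It is only a starting point, not a way of measuring search volume.

```python
from itertools import product


def local_keywords(services: list[str], places: list[str]) -> list[str]:
    """Build 'best <service> in <place>' phrases for every combination."""
    return [f"best {service} in {place}" for service, place in product(services, places)]


# Hypothetical examples for a small café.
services = ["coffee shop", "brunch"]
places = ["Leeds", "York"]

for phrase in local_keywords(services, places):
    print(phrase)
```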

2. Run Targeted Social Media Ads with a Small Budget

Start Small with Facebook and Instagram Ads: You can set daily limits of just £1 for ads on Facebook and Instagram. Focus your ad spend on a specific UK audience, targeted by location, interest, or behavior.

Test Your Content and Focus on Engagement: Test short video clips, customer testimonials, and local imagery to see what works best for you. Use the A/B testing feature to keep refining your approach based on your engagement rates.

Consider Seasonal and Local Events: Align your ads with UK events, holidays, and trends. For example, use the summer festival season or Bonfire Night to run timely adverts.

3. Create Valuable Content to Build Authority

Start a Blog with Local Content: Write about whatever is topical in your community or about current UK industry trends. If you run a small café, for example, blog about the best locally sourced ingredients available to you.

Share Success Stories: Provide testimonials and case studies that focus on the local benefit or unique value of your products to the community. This appeals to UK consumers who are keen on local involvement.

Use Free Tools to Create Visual Content: Create attractive content for blogs and social media using free design tools like Canva and Unsplash without hiring a designer.

4. Email Marketing with Personalization

Build a Quality Email List: You can encourage customers to subscribe to a newsletter either on your website or even in-store. Consider an incentive for them to sign up, like a discount for their first purchase or something free.

Segment Your List: Organize your email list by customer interest or by previous purchases. Tailored messages tend to get more opens and clicks, which makes your campaign more effective.

Use Free Email Software: Mailchimp, Moosend, and Sendinblue all offer free or low-cost plans which are perfect for small businesses. Use one of these to create visually appealing emails and monitor your campaign performance.

5. Encourage User-Generated Content (UGC)

Customer Reviews and Testimonials: Encourage satisfied customers to leave reviews on Google, Facebook, or Yelp. Social proof is often highly influential for UK consumers.

Run a UGC Campaign on Social Media: Challenge your customers to take photos or shoot videos featuring your product. Designate a hashtag for your brand so that all the posts appear in the same feed and are trackable. You could also offer participants a small prize, such as a chance to win gift vouchers.

Share UGC on Your Website and Social Channels: Resharing customer photos or reviews on your social media platforms and website builds trust and adds a human touch to your marketing.

6. Use Influencer Marketing on a Budget

Partner with Micro-Influencers: Micro-influencers have between 1,000 and 10,000 followers. They are often much cheaper than influencers with millions of followers and can have a higher level of engagement. Find UK influencers who have a niche audience that fits your brand.

Offer Free Products or Services: If you cannot pay in cash, offer free products or services in exchange for posts or mentions of your brand.

Create Affiliate Partnerships: Offer the influencer a small commission on each sale made through their link. This way, influencers promote the brand without requesting an up-front payment.

7. Engage Consumers through Online Communities and Forums

Join Relevant Facebook and LinkedIn Groups: Join UK-focused groups in your sector, engage authentically, answer questions, and contribute insights. Avoid the hard sell; focus on adding value to the discussion.

Use Reddit and Quora: Find relevant business threads and topics on either Reddit or Quora, paying special attention to UK-based Reddit boards (like r/AskUK or local threads). Helpful responses to questions can create brand awareness and credibility.

8. Monitor Your Performance with Free or Low-Cost Analytics Tools

Configure Google Analytics and Search Console: These tools give you insight into where your website's visitors come from, which pages they view most, and how the site performs overall, so you can adjust your strategy accordingly.

Use Social Media Analytics: Facebook, Twitter, and Instagram include free analytics. Regularly check engagement rate, reach, and audience demographics to understand which content reaches your target audience.

Trial Other Low-Cost Tools: Tools such as Ubersuggest and SEMrush offer affordable SEO and keyword research insights to inform your content strategy, and let you track competitors in the UK.

Conclusion

With the right strategies, small businesses in the UK can achieve significant growth through digital marketing without blowing their budget. Whether you choose local SEO, social media advertising, or email marketing, the tactic should focus on maximizing engagement and conversions to boost visibility and customer loyalty. Tracking and adjusting regularly will keep your campaigns cost-effective and moving steadily forward.

#digital marketing agency#digital marketing agency in uk#digital marketing services#digital marketing company in uk#digita marketing

0 notes

Text

Read Laterally

While browsing X (Twitter), I came across a post from someone named Bo Loudon (someone I had never heard of). In the post, Loudon mentions a recent Fox News discussion in which RFK Jr. talked about how Trump still allowed him to travel with him even though RFK Jr. was suing Trump at the time. After this, Loudon states "The FAKE NEWS has done EVERYTHING to hide Trump's true, loving side."

After seeing this, I wanted to look into Bo Loudon by 'reading laterally' to see if he is a reliable person to get information from.

First, I clicked on his profile. Right away, I noticed his banner: a photo of him standing next to Trump, both giving a thumbs up. This made me think right away that this is a very pro-Trump account and that his posts would reflect that. Looking through his posts, this turned out to be very true.

Looking through all of his posts, with a great example shown above, a lot if not all of them are far-right-leaning posts that are extremely anti-Democrat. A lot of these posts are also rage bait, drawing comparisons and contrasts between real-world issues/topics and using them to make Republicans look better and Democrats look worse. The post above, which says that Obama complimented Trump before he eventually ran for president and treats this as an example of 'people loving him before running for president', is a great example of using a comparison to get a certain reaction from readers.

Next, I clicked on a link in his X bio that directed me to his personal website. On the website, no past experience or credentials are listed. A YouTube page is linked, which does show videos of Bo appearing on news shows (again, all very right-leaning) like Fox News. All the videos, however, were posted in the last 3 months, which means this kid may have only recently started posting.

Looking at his personal website more, there is a section showing that Donald Trump has acknowledged him on his platform, Truth Social. However, especially after Trump repeated a false claim that illegal immigrants were eating pets as if it were fact during his debate with Kamala Harris, even approval from a former president does not mean that the person in question is reliable. Because of this, I decided to dig further and threw Bo's name into Google.

After typing his name into Google, a few things stood out. One: it lists his followers on Instagram. As an earlier picture showed, Bo has 147.6 thousand followers on X. On Instagram, however, Bo has over 253 thousand followers. This is interesting to me, as Instagram is known to be less politically driven than X, and political accounts usually have more followers and engagement on X than on Instagram.

His Instagram page, however, is basically the exact same type of content as his X page, so this did not help anything at all.

The one notable thing about his Instagram page compared to his X page, however, is that the link on Instagram is completely different from his X link. On Instagram, the link posted brings you to a page where you can buy a Trump sticker pack (note: Not joking, between writing this post and going back to grab these links only a few hours later, the link on his Instagram changed sites. Originally, it was the sticker pack site shown above. Now, it is a link to buy this book on Amazon). To me, having a link like this on a politically focused social media account comes off as caring more about selling merchandise than about actual politics, so this does not start Bo off on a good note, in my opinion.

When looking at the articles that come up just by typing in Bo Loudon's name, the results already carry negative implications for his reliability as a source. The sources shown above, an article from a website called Hola.com and another from Hindustan Times, are both pretty unknown. A lot of these smaller sources were the only ones really talking about or acknowledging Bo, which brings Bo's credibility down. Both articles focus on Bo's friendship with Trump's son Barron Trump and on him being a part of Trump's Gen Z influencer team. Even with this, I still do not know what specific experience Bo has that would make him a reliable source on politics.

After this, I searched a little deeper and found an important reason why Bo focuses on right-leaning politics online, and also something that leads me to believe that Bo is not only an unreliable source but is also connected to someone who is known to have lied about important information. I found the Wikipedia page of his mother, Gina Loudon. According to this page, she was a member of Trump's media advisory board for his 2020 campaign, as well as a member of Mar-a-Lago and Citizens Voters Inc. Looking further into the Wikipedia page, however, a troubling piece of information shows up. Under the 'personal life' section, it is stated that "The Daily Beast reported that Loudon's book falsely claimed that she has a PhD in psychology. Loudon does have a PhD in human and organization systems from Fielding Graduate Institute (now the Fielding Graduate University), an online school. A personal assistant for Loudon responded to the reports, saying that Loudon's PhD was in the field of psychology. The publishing company, Regnery, told the outlet that they were responsible for several misleading statements about Loudon's qualifications, including referring to her as 'America's favorite psychological expert'" (Wikipedia). Although this is stated to be a mistake on the publisher's part, she is still responsible for the false claim about her degree.

Getting to this point, the main thing that frustrated me about researching Bo is that there was almost no information on him. I even went to his LinkedIn page and, even there, nothing is listed regarding his degrees, general education, or what experience he has to talk about politics. He barely even had any followers on that page. I was struggling to come up with a conclusion. Then, I was scrolling through his X page more, and found this piece of information:

According to his own X post from April of this year, he is a Trump activist (which was already obvious) and he was only 17 years old as of last April. Knowing his age explains why there is not much information on him outside of his social media posts. Being only 17, he is probably still a high school student or has just started college (his friend, Barron Trump, just started college in the last month). Due to this, he does not have an actual job in government or politics, as you must be at least 18, sometimes even older, to hold an actual working position in politics. This makes Bo an unreliable source for information about politics: he is only just getting his feet wet (his posts across X, Instagram and YouTube show that he has only started talking about politics in the last 6 months), has no known degree in the political field, and does not have enough experience to be considered a reliable person to listen to and take as fact in the world of politics.

0 notes

Text

NTX Keto Gummies Review - Scam Or Legit FitScience Keto ACV Gummy Brand

If you're here, you're probably hoping we have something that will help you lose weight. Good news: we do! It's called NXT Keto + BHB Salts, and it's an easy formula. Because this weight loss product has been so successful, the company that makes it has an A+ grade with the Better Business Bureau. Thanks to their 100% money-back guarantee, you can try this product without any danger. We can also offer that deal because we have a temporary relationship with them. They gave us a small amount of the formula, which you can get at an NXT Keto Price deal that you can only get here. Are you ready to stop worrying about getting fat and having heart problems? Then you need to do something! If you click on any of the buttons on this page, you'll go right to our buy page!

►Visit The Official Website To Get Your Bottle Now◄

Why do we choose to talk about NXT Keto BHB Gummies?

It's simple: we want to be the ones to tell you about a way to lose weight that really works! Worrisomely, there are more overweight people now than ever before. It's happened because society makes it almost impossible for people to burn fat well. What does this mean? Well, think about how many carbohydrates are in the things we eat today. Then think about how, the more carbs you eat, the less energy you get from fat and more from carbs. You won't lose a single ounce of fat if you eat enough of them to meet your energy needs. This is why you need NXT Nutrition Keto Gummies. Using a new method based on the science of Keto, they teach your body's factories to burn fat instead of sugar. It's a low-effort plan with a lot of value that works! Click on the picture below to get your bottle!

►Visit The Official Website To Get Your Bottle Now◄

How Does NXT Work?

Keto Ingredients work because they take advantage of your body's natural ability to burn fat. They don't change anything about you. They just use BHB chemicals that are real. When your liver is in ketosis, it makes the same chemicals. What does ketosis mean? It's a chemical state that only happens when the body has no carbs left. This is what the Keto Diet says you should do. In this way, you make the chemicals that help you lose weight. They send messages to every part of your body that tell your factories to use fat as your main source of energy. In this way, you lose weight quickly, and you can see the results in as little as four weeks. You'd be right if you thought that sounded too good to be true. That's because following the Keto Diet will help you lose weight, but it also comes with a number of risks, some of which can kill you.

It's not worth putting your life at risk to lose weight, especially if your main goal is to get healthier. But even if you just want to look sexier, there's no reason not to try NXT Keto Ingredients since it comes with a 180-day money-back promise. Most people can see changes in less than a month when they use this treatment. That means it will take you half as long as that to decide if they are right for you. If you aren't happy, you can get your money back, no questions asked. So, why are you still waiting? You only need to tap one of the blue buttons up there!

Benefits of Keto: Burns fat as well as ketosis

All of the ingredients are 100% safe. Based on organic beta hydroxybutyrate, it gives you more energy than carbs.

Get a toned body in less than a month? You have almost six months to see if that's true.

►Visit The Official Website To Get Your Bottle Now◄

The Side Effects of NXT Keto

If you hate capitalism, you should hate Big Pharma even more, because so many people believe its goods will help them, even though they are usually useless at best. In the worst case, they are actually bad for your health. It would be bad enough if they just used fake or untested chemicals, but they often don't list these ingredients. To get full and true information, you have to go through the trouble of getting in touch with the manufacturer. They are counting on you not going to this trouble. But there's nothing to worry about with NXT Nutrition Keto Gummies. Before we agreed to spread this formula, we wanted to do the tests that its creators were said to have done. We were very happy to find that there were no cases at all of bad NXT Keto Side Effects happening. So, there you have it: a tool that helps you lose weight, works quickly, and is safe to use. Get yours right now!

►Visit The Official Website To Get Your Bottle Now◄

Time to give NTX Keto a try!

We hope that after reading our NXT Keto Review, you'll be convinced to try it. What do you have to lose by trying? If it doesn't work, you will get your money back in full. If it works, you've saved the health of your body for a small fraction of what you'd pay elsewhere. We don't have very much left, though. So, you only have a short time to save money with our NXT Keto Cost deal. But it's not too late! Order yours today and find out why the Better Business Bureau gave this method a great review.

Official Website : https://ntxketogummies.com

Tags:

#NTXKetoGummies

#NTXKetoGummiesUses

#NTXKetoGummiesReviews

#NTXKetoGummiesSideEffects

#NTXKetoGummiesCost

#NTXKetoGummiesPrice

#NTXKetoGummiesIngredients

#NTXKetoGummiesHowToUse

#NTXKetoGummiesBuy

#NTXKetoGummies300Mg

#NTXKetoGummies1000Mg

#NTXKetoGummiesOrder

#NTXKetoGummiesResults

#NTXKetoGummiesBenefits

#NTXKetoGummiesWhereToBuy

#NTXKetoGummiesHowToOrder

#NTXKetoGummiesWork

#NTXKetoGummiesFatBurnar

#NTXKetoGummiesWeightloss

☘📣Facebook Pages😍😍👇

☘📣Site Google😍😍👇

☘📣More Refrences😍😍👇

0 notes

Text

Introducing Miriam Guido: A Premier Realtor in Alaska's 5-Star Real Estate Market, Specializing in Wasilla and Nearby Areas!

SELL WITH MIRIAM

For most people there are only a few really big decisions in life, ones that are life changing, and selling a property is one of them. With spring and summer right around the corner, now is the best time to put your property on the market. Choosing a realtor that is competent and intuitive, one that is good at their job and knows the market well is an important first step.

I’ve helped sell many local properties, helping my clients navigate the process smoothly and always being available for questions. Getting you the price for your home that will put a smile on your face is what I’m here for, so give me a call!

How I Put More Money In Your Pocket. Not Ours.

Any real estate professional can list your property. Some charge extra fees. Selling real estate involves much more than just listing your property. What needs to happen is getting your home in front of the right buyers, and that’s what I’ll do to help you get your property sold at the highest price possible, hassle and worry free.

I can promise you I will sell your home fast, and charge no extra fees to put more money in your pocket.

When You List with Me, I Do Not Charge: A Transaction, Administration, or Photography Fee!

What I can do for you:

I will be vigilant and dedicated in the selling process to ensure a quick sale at a strong price that maximizes your return on investment. I stand ready to assist you in all aspects of the sale! Free professional photos of your listing! Professional marketing package that includes professional photography, a video tour, online syndication to over 500 search engines, a personal website for your home and full disclosure of your property available for public review. Sell your home or property Quickly and for Top Dollar!

BUY WITH MIRIAM!

Buying a property is a massive investment, not only in money, but time and emotional resources as well. In this market, good properties tend to zip right off into a pending sale faster than you can click next on one of the many real estate websites out there on the web. You could sit for months waiting for the right property to pop up in front of you, or you could utilize the correct resources that can maximize your reach in order to find the dream home you’ve been looking for.

This is where the right realtor comes in. With access to over five-hundred search engines through the Multiple Listing Service, effective realtors can put you in front of the right property faster, and without the tedious hassle of doing anything besides showing up.

An agent that knows the right home inspectors and other professionals in the industry can save you tens of thousands of dollars in missed structural, or health and safety issues with your prospective home.

Contrary to what some home buyers think, not all real estate agents are the same. Any agent can show you a property, but when it comes to negotiation with other realtors, or even brokers, having a realtor with the right experience can make or break a sale.

But many later find out that the wrong buyer’s agent could cause you to…

Lose the home you really want to another buyer because of an ill-prepared offer.

Pay too much for a house based on emotion vs. solid facts.

Waste hours and hours seeing homes that aren’t what you’re looking for.

Miss out on an amazing house because it was a “For Sale By Owner” or Foreclosure and that agent isn’t experienced dealing with those types of sellers (or finding those properties).

Overlook issues with the house that could cost you tens of thousands of dollars and would have been noticed by an agent with years of experience working with the right inspectors and professionals.

ORIGINALLY FOUND ON - Source: Miriam Guido - REALTOR LLC (https://homesbymiriam.com/)

1 note

·

View note

Photo

HOW TO: Make a Pantone “Color of the Year” Gif

A few people have asked about my Pantone sets which use the “Color of the Year” swatch design. So, here’s a full tutorial with a downloadable template of my exact overlay! Disclaimer: This tutorial assumes you have a basic understanding of gif-making in Photoshop.

PHASE 1: PICKING A SCENE + PANTONE COLOR(S)

I’m starting with this because it’s crucial for planning your gifset as well as making sure the execution is smooth sailing. The steps in this phase won’t necessarily be literal steps but some tips for how I usually go about making a Pantone set:

1.1 – Picking a scene. Scene selection is everything. To make things easy on yourself, I suggest choosing scenes where the background is mainly ONE color — for example, a scene where the subject has a clear blue sky behind them. To make things even easier, choose a color that isn’t the same color as the subject of your gif. Like, if your subject is a human, I’d avoid using a gif with a red or yellow background unless you want to do a lot more work to mask their skin.

Rip me using a scene of green lil Grogu in green grass lmao. But I guess that goes to show you could really do this with any scene (I just did lots of masking and keyframe animations to perfect this green shade). BUT selecting your scenes wisely = a lot less work.

1.2 – Picking Pantone colors. People often ask me how I choose my colors and there are a few methods which I’ll go over below.

But note that not all Pantone colors have a cute name, or any name (fun fact: only Pantone textiles have official names and they end with TCX, TPX, or TPG).

METHOD A: Google Search “Pantone [Color]”

Source: Google

Easy but not always fruitful, all you do for this method is open Google and type “Pantone [insert color here].” For example, when searching for teal colors, I searched several things including: Pantone Teal, Pantone Turquoise, Pantone Blue, Pantone Green, Pantone Blue Green, etc. Then, just sift through the Google results and click on whatever comes up from the official Pantone website! Since Pantone’s site blocks some info behind a paywall, you won’t be able to get a hex code from them. But you can just screenshot the swatch from their site, put it in Photoshop, and use the eyedropper tool to figure out the color.

METHOD B: Color-Name Site

Source: https://www.color-name.com/

This handy website lets you search by colors using the upper navigation bar. Or you can just type something like "magenta" or "blue pantone" or even “frog” and see what comes up lol. Color-name can put together palettes too! I like that this site also tells you the hex code of a color, which is really helpful for getting the right code to put in my overlay. Note: Not every color on this site is a Pantone textile, so not all of these names are Pantone-official names. You can tell it’s official if, in the Pantone row of the Color Codes table in the middle of the page, it has a code that’s 2 numbers, a dash, 4 numbers, and either TCX, TPX, or TPG.
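As a rough illustration of that code format (two digits, a dash, four digits, then TCX, TPX, or TPG), here is a minimal Python sketch that checks whether a string looks like a Pantone textile code. The function name `is_textile_code` and the optional space before the suffix are my own assumptions, not anything taken from Pantone or color-name.com. Matching the pattern only tells you the format is right, not that the color actually exists.

```python
import re

# Assumed format: two digits, a dash, four digits, then a TCX/TPX/TPG suffix.
# Allowing an optional space before the suffix is a guess about how sites print it.
PANTONE_TEXTILE_CODE = re.compile(r"^\d{2}-\d{4}\s?(TCX|TPX|TPG)$")


def is_textile_code(code: str) -> bool:
    """Return True if `code` has the shape of a Pantone textile code."""
    # Trim surrounding whitespace and ignore letter case before matching.
    return PANTONE_TEXTILE_CODE.match(code.strip().upper()) is not None


if __name__ == "__main__":
    for candidate in ["17-3938 TCX", "17-3938", "#6667AB"]:
        print(candidate, is_textile_code(candidate))
```

A hex code or a bare number fails the check, which mirrors the rule of thumb above: no TCX, TPX, or TPG suffix means the name probably isn't an official textile name.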

METHOD C: User-Made Pantone Colors Archive

Source: https://margaret2.github.io/pantone-colors/

For my Wednesday characters as Pantone colors set, it was all about matching the color name to the character’s vibe. So, before looking at the actual colors themselves, I wanted to find the perfect color names. I stumbled upon this page. The pros = it lists pretty much all of the current official Pantone names. The cons = it’s not convenient since there’s no filtering tool. You can do Command+F and search for keywords, but that’s it. I literally scrolled through this whole page for my Wednesday set and read every single name, which... I think means... something’s wrong with me /lh /hj

METHOD D: Official Pantone Color Finder

Source: https://www.pantone.com/pantone-connect

This is last on my list because I don’t actually recommend it. Unless you already have access to this resource from your school or work or something, I would never pay for it and it is a paid feature only. Boooo 👎 But there is a free trial (which I’ve never used), so if you want to see what it’s about, you can definitely go for it.

PHASE 2: MAKING THE BASE GIF

Again, just some super quick tips for making a gif that, I think, looks best with this kind of set — but if you’re still learning how to gif, I do have a basic gif-making tutorial here for extra guidance!

2.1 – Uncheck “Delete Cropped Pixels” before cropping your gif. When you use the crop tool, this checkbox appears in the top toolbar. Unchecking it allows you to move the positioning of your gif later on, which is handy in this case when you want to choose which part of your gif will be underneath the Pantone swatch. You can read more about this tip in my basic gif-making tutorial (linked above; Step 1.5 – Tip B).

2.2 – Make your gif 540px wide. My gifs for these sets are usually 540x540px but I think 540x500px will also look good. I think it’s more impactful though to make a big gif to show off your coloring.

PHASE 3: ADDING THE PANTONE OVERLAY

3.1 – Download my template. I made this template myself, so all I ask is that you don’t claim it as your own and that you give me proper insp or template credit in your caption if you decide to use it! Get the PSD with the transparent background here!

3.2 – Download the font Helvetica Neue Bold. The font I use (and I’m pretty sure it’s the same font Pantone uses) is Helvetica Neue Bold, with some very specific letter spacing (which I determined by studying Pantone’s official Color of the Year Very Peri design). It’s already set in my .psd but here are specs in case: color name spacing = -40, color code spacing = -75 (sometimes I’ll do -25 for the numbers after the dash if I don’t like how tightly they’re packed together).

I uploaded Helvetica Neue Bold to my dropbox here!

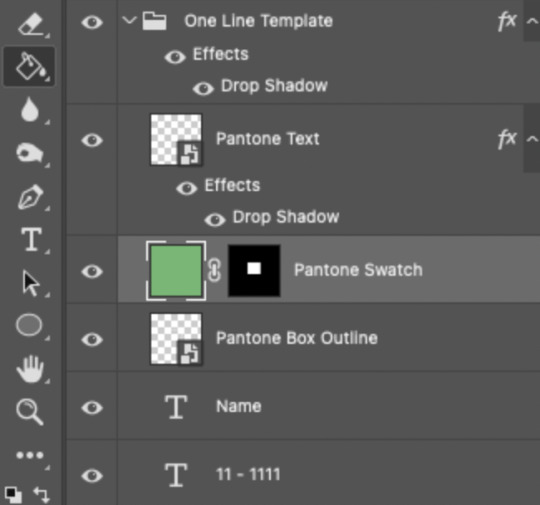

3.3 – Import my overlay. You can either drag the whole folder onto your gif from tab to tab or right-click the folder, select Duplicate Group, and select your gif as the destination document. Just make sure this overlay group is above your base gif!

3.4 – Fill the color swatch. In my .psd, on the layer labeled “Pantone Swatch,” just grab the hex code of your chosen Pantone color and fill that layer using the Paint Bucket tool! I’ve already put a layer mask on the layer for you so it fits perfectly inside the square outline.

If you’re using my .psd, all the blend mode settings are already in place! I usually set the colored square behind the Pantone logo to the Color blend mode, but sometimes, I prefer the way Hue looks. It’s up to you!

You can also adjust the drop shadow settings to make your text more visible as needed. The layers are arranged in this order so the drop shadows don’t interfere with the semi-transparent part of the colored swatch.

3.5 – Insert the color name and code. My .psd has two versions to choose from: (1) a color name that fits on one line and (2) a color name that requires two lines. Use the one that applies to your color name and simply type that and the color code into the corresponding text layers!

Note 1: Pantone doesn’t keep their font size uniform for every color of the year. They’ll sometimes shrink the text to fit longer names, but I like being consistent. So, I use this one font size for all my colors.

Note 2: My template has all the text left-justified and matching the starting point of the P in Pantone. BUT, sometimes the gif looks better if you nudge the text a bit so it looks more centered. Use your discretion when aligning the text!

Note 3: Btw, you definitely don’t have to use the TCX/TPG codes like me. (I’m a nut and there’s no way I’ll ever do a Pantone set and not use those types of codes to maintain uniformity across this series lol.) I’ve seen others do sets inspired by mine using different color codes or even just the hex code itself!

PHASE 4: COLORING THE BASE GIF

The key here is to make a majority of your gif feature your chosen Pantone swatch. If you’re really smart with your scene selections, this should be a breeze! If you’re stubborn like me and want to use specific scenes with the opposite color of your chosen Pantone swatch, there will be a bit more color manipulation involved... However, this isn’t a coloring tutorial, so again, I’m going to give some tips and resources that will hopefully help you out!

4.1 – Color matching. Now that you have the Pantone swatch on your gif, you should be able to reference that center square set to Color/Hue to match the rest of your gif to that color. Feel free to paint a little blob of your color onto another layer anywhere on your gif so you can refer to it closer over a specific part of your gif. For example, I put a little circle over Grogu’s head to see how closely I matched Pantone’s Peapod color, then I tweaked my adjustment layers a bit more until the colors matched near perfectly and I couldn’t tell where that blob begins or ends. The left is the solid color and the right is set to the blending mode Color (like the square):

4.2 – Moving the base gif. This isn’t really about coloring... but remember when I said to uncheck “Delete Cropped Pixels” in Step 2.1? Well, here’s your chance to adjust your canvas and move the gif around so the exact part you want under the color swatch is in the right position. I personally think these kinds of sets are more impactful when you put a differently colored part of your gif under the swatch so you can see through it and the difference is clearer. In my example, I put Grogu in the center so the green box would cover some of his brown potato sack robe.

4.3 – Color manipulation. Color manipulation is when you transform your media’s original color grading into a completely different color. The Grogu gif isn’t a great example because the original scene was already a green-yellow color:

I mean, the difference is still pretty drastic but that’s mostly because my file was HDR and washed out as a result.

So, here’s an example I made using a gif from my first Pantone set for ITSV (I’m not doing this demo to the Grogu gif because it’d be too much work to manipulate a green background with a green subject. This ITSV scene is perfect bc the majority of it is blue while the subjects are mostly red.)

For the “basic coloring,” I did everything as I normally would: mostly levels and selective color layers.

For color manipulation, my fav adjustment layer is Hue/Saturation (those are the screenshots that are on the gif above). When you’re smart with your scene selection, it’s pretty easy to manipulate colors with one Hue/Sat layer because you usually only need to tamper with 1-2 colors and, hopefully, they shouldn’t interfere with skin tones (obviously you’ll do other layers to further enhance your gif’s brightness, contrast, etc. — but I just mean the heavy lifting usually only takes me one layer with a good scene choice).

All you have to do is figure out what color the majority of your gif is, toggle to that color’s channel, and fiddle with the hue slider. In the gif above, you can see that I played with both the Blue and Cyan channels. Here’s why:

If I only adjust the Blue hue slider, I get those speckles of cyan peeking through in the gif above. Unless you’re working with completely flat colors (like 2D animation with zero shading/highlights), a color is never just one, solid color. Blue isn’t just blue, it may have some cyan. Purple isn’t just purple, I often have to toggle the Blue channel too. So, yeah, be mindful of that!
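To see why one channel isn't enough, here is a small Python sketch of the idea using the standard `colorsys` module. It is only an approximation under my own assumptions: the `shift_hue` helper and the degree ranges I've labelled BLUE and CYAN are made up for illustration, and Photoshop's Hue/Saturation layer uses its own ranges with soft falloff that this doesn't reproduce.

```python
import colorsys

# Hypothetical hue ranges in degrees; Photoshop's real channel ranges differ
# and blend softly at the edges.
CYAN = (150, 210)
BLUE = (210, 270)


def shift_hue(rgb, degrees, hue_ranges):
    """Rotate the hue of one 0-255 RGB pixel, but only if its hue
    falls inside one of the (low, high) degree ranges given."""
    r, g, b = (channel / 255 for channel in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    hue_deg = h * 360
    if any(low <= hue_deg < high for low, high in hue_ranges):
        h = ((hue_deg + degrees) % 360) / 360
    shifted = colorsys.hsv_to_rgb(h, s, v)
    return tuple(round(channel * 255) for channel in shifted)


if __name__ == "__main__":
    cyan_speckle = (0, 255, 255)
    # Only the Blue range selected: the cyan pixel is left untouched.
    print(shift_hue(cyan_speckle, -120, [BLUE]))
    # Blue and Cyan both selected: the cyan pixel moves with the rest.
    print(shift_hue(cyan_speckle, -120, [BLUE, CYAN]))
```

With only the Blue range selected, the cyan pixel keeps its original color, which is the speckle effect described above. Adding the Cyan range moves it along with the blues.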

I’ll sometimes go in with the brush tool and paint over some areas of my gif to really smooth out the color and make it uniform. When I do that, I just set that painted layer to the Color blend mode. Some of the resources below go into that technique a bit more!

4.4 – Coloring resources. While not all of these tutorials cover the same type of color manipulation I did in my gifs, I think the principles are similar and would be helpful to anyone who’s a beginner:

– color manipulation tutorial by usergif/me: I go a bit more in depth here (I think lol)

– how to change the background of any gif by usergif/fionagallaqher: a great tutorial for using keyframes so you can manipulate the background of a gif with lots of motion

– bea’s color isolation gif tutorial by nina-zcnik: this tutorial has more tips about hue/saturation layers as well as masking your subject

– elio’s colouring tutorial by djarin: this tutorial shows a lot of examples of first manipulating the colors then brushing over the gif with a matching color for extra coverage

And just one last note on coloring, I always try to appreciate gifs with the mentality that “good” coloring is 100% subjective. One of the only things I would classify as “bad” coloring is when you whitewash or [color]wash someone’s skin tone. So, as long as you keep the integrity of your subjects’ natural skin — especially if they’re a POC — you should feel good about your coloring, because it’s yours and you worked hard! <3

PHASE 5: EXPORT

That’s it!! If you work in Video Timeline like me, just convert from Timeline back to Frames, export your gif, and voila!

Easy PEAsy. 🥁

If you have specific questions about this tutorial, my ask box is open <3

Also, check out these other Pantone-inspired sets by my friends @nobodynocrime (Mulan set) and @wakandasforever (Ponyo set)! There are so many ways to use Pantone colors in your set, so I hope this inspires you to create something beautifully colorful <3

#gif tutorial#completeresources#usershreyu#useryoshi#userelio#userannalise#userzaynab#userives#usermarsy#usertreena#usercim#userrobin#userkosmos#usersalty#usermills#userhella#alielook#resource*#gfx*#pantone*


Text

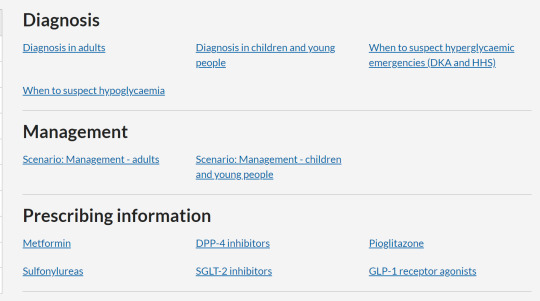

Resources that got me through (UK) med school

Hello everyone! As we are at the start of a new year, I’ve collated this list of online resources/apps that saved me during my revision at various points of med school. I’ll put the links and why I think they are good. These are UK-based resources, as I often found that when I searched for things, US results came to the top of the list, which wasn’t always helpful for learning management because guidelines etc. differ.

1. TeachMe Series

These are developing all the time, and they have added Obs and Gynae, Paeds and Physiology, which are new and which I haven’t used. They are kept up to date and written by current health care professionals, and edited to ensure they are correct. The topics have really good diagrams, clinical relevance and key points.

a) TeachMe Anatomy

When: Preclinical years

Pros: colour-coordinated diagrams, each topic has a clear structure, including vasculature, nerves etc. Clinically relevant links

Cons: detail can be a little overwhelming, I would use this to make notes that I then revised from (definitely not for last minute revision!)

b) TeachMe Surgery

When: Clinical years - especially final year when we had a specific surgery placement

Pros: there are two ways you can approach things - by presentation or by diagnosis which really helped me for linking differentials. Also it has topics on the practicalities of surgery and perioperative care which I will probably continue to use as reminders for my surgical job next year

Cons: If you need to know exactly how a surgery works it might not go into specific details about the operation, but it is aimed at med students and we didn’t really need to know that. Also, it is kept up to date but check the date the topic was written and consider triangulating sources for management because guidelines change

2. Geeky Medics

These are again all topics written by healthcare professionals and then edited. They cover a wide range of topics but focus less on the diagnosis and management of conditions and more on practical OSCE guides or how to be a doctor, with videos and checklists. There is also an app, but you have to pay for it.

When: Throughout medical school (and beyond!), particularly for practical or data interpretation exams (I go back to their interpreting LFTs and also death verification and certification posts a lot)

Pros: good for demonstrating how to do various examinations and for revision because you can test other people and use a checklist. Also have lots of free quiz questions on geekyquiz and also loads of cases to take histories from each other without having to make it up yourself

Cons: not comprehensive when it comes to revising, and the search function, I find, can be a little...erratic with what it shows you.

3. BNF

There are two places you can find the BNF - NICE or Medicines Complete. The information is the same on both, so try them out and see which you like better in terms of layout. Also download the app, because the interactions checker is a lot quicker to use than on the web.

When: whenever you’re learning about management of conditions and in the run up to the PSA you’re going to need to be very familiar with this website, so may as well start early (and you will carry on using it beyond med school)

Pros: The treatment summaries are up to date and based on NICE guidelines so are UK specific. I liked to refer to them in revision because it’s a good single reference point when doctors give you conflicting treatment ‘preferences’. The pages for the drugs are set out in a uniform format and it’s really easy to find the information you need.

Cons: the website is not always great for the pharmacology of drugs/saying which class a drug is part of. The treatment summaries are wordy and you have to sift through them sometimes, so not for last minute revision.

4. Almostadoctor

Again, articles are written by doctors and then edited to ensure they are up to date. They have references at the bottom of the page for more detail but are a very good summary of conditions.

When: all through med school. Has basic clinical skills, diagnosis and management

Pros: The drugs section is very helpful for common drugs and has some simple pharmacology in there, and has flashcards for each topic if you find it helpful revising off those

Cons: tends to be split by condition rather than symptoms. There are some ways of comparing differentials, for example for chest pain, but it makes a big table which I find confusing

5. NICE CKS

Oh boy is this site my saviour in GP, but I did use it in final year for some core conditions. It has referral criteria and management decisions, but it does allow for plenty of your own clinical judgement.

When: definitely more for finals to aid your revision, but after that if you end up on a GP rotation this is like a bible.

Pros: It’s really good for reminding you of red flag symptoms to check and also gives contraindications to certain management options

Cons: Not necessarily good for telling you what examination findings to expect and the site can involve clicking on lots of links taking you to other pages and then you can’t remember which part you came from.

If anyone has any others that I’ve not mentioned, feel free to add! Sorry for the long post, but I hope these are helpful and good luck everyone starting a new year of med school!

#med student#med school#medblr#uk medblr#medicine#revision#revision tips#med school resources#med school tips#medicine reference


Note

Gloating about being an insider during a time of sadness is DISGUSTING

I'm not gloating, I'm posting INFO and FACTS like I always do...and showing restraint and discretion in not posting it sooner, and not posting the details, which I haven’t and won’t.

But you know what IS disgusting? Here’s a LONG list, and by no means, a comprehensive one, of what Extreme Shippers, Former Extreme Shippers, and Assorted Haters have done that is VERY DISGUSTING. I’ll write it stream of consciousness-like and not in order. Put your feet up and grab a tall drink. Here we go...


Extreme Shippers found Cait’s condo when she used to live in Los Angeles and sat outside for hours waiting to see if they saw her with Sam. ES blackmailed and coerced a minor, a 14 year old girl who was a super fan of Abbie’s sister, Charlotte Salt, into giving them info regarding Abbie and Sam. The girl was following Abbie’s locked Instagram account and could see the Sam related stuff Abbie was posting. ES won her trust, she gave them info about Abbie and Sam, they then told her if she didn’t screencap and give them the Sam related pics on Abbie’s IG account, they would tell Abbie and Charlotte that she had been giving them info. Sick doesn’t begin to describe it. ES tried to dox and did dox anyone and everyone who got in the way of their SamCait ship. Doxed, as in PUBLICLY posted, the names, addresses, pictures of their houses, professions, husbands’ and children’s names, employer names of ANYONE and EVERYONE who posted something to contradict the ship. They even posted pictures of their children. Again, messing with minors is a big no no, and usually a crime. ES created fake Ashley Madison accounts (that’s the website for married people who want to meet people to cheat on their spouses with) and pretended to be non-shippers’ husbands to try to make it seem like the husband was cheating. It got so bad, that in some cases, non-shippers had to get restraining orders, cease and desist orders, get the police, lawyers, and in TWO cases, the F B I involved. Yes, the F B I has come a knocking on a couple of Extreme Shippers’ doors because of their ILLEGAL actions. ES lured some of Sam’s girlfriends into believing they had their best interest at heart, gained their trust, and they PUBLICLY posted their PRIVATE messages. Luckily, in the case of one of Sam’s exes, Abbie Salt, she later did confirm she and Sam dated, which totally negated everything that shipper had said Abbie told her. 
ES directly BULLIED and HARASSED fans, Outlander cast, crew, journalists, reporters, family and friends of Sam and Cait. ES contacted people’s employers to try to get them fired...literally messed with people’s livelihoods. They tried to get the Outlander drivers fired because they started posting stuff against shippers AFTER shippers turned on them. ES waited outside Sam and Cait’s residences in whatever location they were in to try to “catch them together.” Taking pics at someone’s private residence is very different than getting pics or video in PUBLIC places. For years, ES have manipulated pictures, gifs, video to sell the SamCait LIE to their gullible shipper friends. They’ve made money off selling these lies. ES have ostracized and banished any shipper friends who acknowledged the ship wasn’t real. They sent their best friend to Tony’s bar in London to try to prove he and Cait weren’t together, and when she unwittingly found out they were, they then bullied her and kicked her out of shipperville. ES created multiple hate sock accounts for the SOLE purpose of CYBERBULLYING Sam’s girlfriends and dates. Any time Sam dates a woman, ES follow the same pattern. They contact the women’s employers, parents, siblings, other family members, friends, ex-boyfriends trying to malign the women. Some examples: They pretended to have gone to high school with Mackenzie Mauzy and spread lies that she had a bad reputation in high school. They spread lies that Gia was a paid escort. ES contacted social media outlets to spread LIES about Sam and Cait and their significant others. Contacted anyone associated with Cait and Tony’s wedding trying to intimidate them into saying there was no wedding. They posted the picture of a waiter at one of the Outlander premieres and tried to pass him off as Tony to prove Tony didn’t go with Cait. 
ES have continuously posted pics of Cait with her naturally poochy belly trying to prove that she’s been pregnant with Sam’s children for the last 7 years. ES publicly asked her if she was pregnant. Sam haters and disgruntled ex-shippers have spread rumors that Sam is gay. Nothing wrong with being gay, but what is wrong is spreading LIES. ES have badmouthed Cait’s HUSBAND, Tony McGill, saying he was: her assistant, gay, her gay assistant, a loser, broke, boring, ugly, her purse holder, etc. And trust me, what I’ve posted above is the SHORT list.

And that’s not even mentioning what they’ve done to ME. Ever since I committed the unforgivable sin of posting source info CONFIRMING Sam and Cait were never a couple, and Cait was dating Tony, way back in 2014, this is what SamCait Extreme Shippers have done to me. Tagged me endlessly when I had my Twitter account telling me things like “Die, b*tch,” “Die, c*nt,” “You should be gang rap*d,” “Drop a house on her,” “You’re worse than AIDS,” and those are the “nice” comments. They literally BULLIED me every day, all day for YEARS. They also created hate accounts on Twitter and Instagram to mock me, parody me, and post lies about me. They were convinced they’d found my real identity (based on circumstantial evidence, which I’ve countered and can counter with the actual truth), and proceeded to post THAT woman’s FULL NAME, city where she lived, profession, reported her to her licensing board, and created a fake Twitter account pretending to be her. She got a lawyer and was able to get everything taken down, but they basically tried to ruin her life. They’ve spread LIES about me being the one harassing THEM and managed to convince over 60 dopes with disposable incomes to give them money for a GoFundMe campaign where they hired a Private Investigator to try to find me. They started a witchhunt letter writing campaign, hashtagged it on Twitter, #takebackourfandom, or some such bullsh*t, tagged everyone in Outlander cast and crew “telling” on me and even sent letters and e-mails to Starz and Sony executives trying to...I don’t know what. Hahahaha. It’s so ridiculous, my brain is scrambling as I write this. They told their followers not to believe anything I say and that I’m evil personified. ALL of that and more because they couldn’t face the FACT that their SamCait ship NEVER EXISTED and I was the one that confirmed it. When I think about it, I can’t believe I lived through all that. 
But I stayed because I knew I had the TRUTH on my side and that eventually it would all come out, which of course it did. And because I’m a bad bitch who doesn’t scare easily. EVERYTHING I’m referring to here is well DOCUMENTED with screencap proof. Or just ask anyone who’s been in the fandom long enough, they’ll attest that what I’m saying did actually happen, and that Extreme Shippers, Former Shippers, and Haters did do all of that.

So, Anon, when you come at me with “disgusting” things in this fandom, please refer to the above before you start pointing fingers at me.

PS. “Anon,” I’ve got your Los Angeles/Anaheim Samsung Galaxy S10e IP address tagged. So, send me another hate Ask and you’ll get blocked. And don’t bother using a VPN...once the tag is on, it follows the user no matter what IP they use. Now you know.

#extremeshippers#haters#samheughan#caitrionabalfe#disgusting#trolls#bullies#cybercrimes#abbiesalt#mackenziemauzy#missgiamarie#witchhunt#tonymcgill#charlottesalt


Text

Aspiration. Yandere Chrollo x Reader [COMM]

click here for part 2!

Watching others has always been a hobby of yours.

There’s a lot to be learned from observing and watching how people behave and interact. Whether it be for your own simple amusement, or for the sake of gathering information. While some may find it creepy to keep such a keen eye out for others, you don’t look at it that way. Understanding human nature has an endless list of advantages, after all.