#MediaPipe

Text

New Webcam Based Full Body Tracking!

Made a short demo to show off some of the potential of this new software! You can find the creator's site here, and the application has a browser version and a Windows version (the Windows one is needed for the VMC protocol).

#vtuber uprising#3d vtuber#3d#mmd#full body tracking#media pipe#mediapipe#3d model#3d modeling#mocap#xr animator#small streamer#indie vtuber#vtuber#3dvtuber#seadragonaniciavt

13 notes

Text

I made a website. Please take a look.

3 notes

Text

I'm so bad at focusing on stuff, it's unreal. I wanted to try reading a new book I received, but it took less than a minute before I got distracted and started playing with pose detection software (the MediaPipe online pose landmark demo)... It's a pretty cool tech demo though :D

0 notes

Video

ControlNet - Control facial expressions using Blender

7 notes

Video

[EP #15] Assassin's Creed III / Assassin's Creed 3 -- No Commentary -- Xbox One https://youtu.be/7sfVMi6SeTA Check out the latest gaming video on YouTube, and support us for more gaming content.

2 notes

Text

Augmented Reality Bubble Pop Tutorial Part 1: Setting Up the Development Environment

Welcome to this tutorial series on how to build a simple game using Python, OpenCV, and MediaPipe! In this first tutorial, we will prepare the development environment our project needs. This tutorial is very important because it ensures you have all the required tools and libraries before we start writing code. Let's get started! Step 1:…

#Augmented Reality#Augmented Reality Tutorial#AR bubble pop game#installing anaconda#creating a python environment#opencv mediapipe pygame#python game setup#python tutorial for beginners

0 notes

Text

so vtubestudio's new google mediapipe tracking is pretty decent, and it also lets me have hand tracking, so that's a plus

5 notes

Text

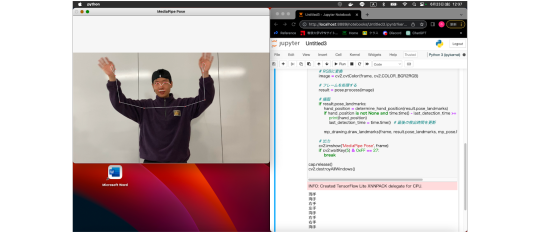

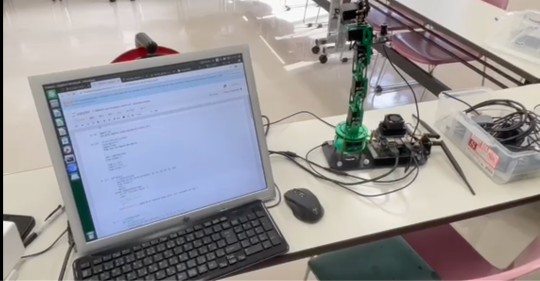

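2023/6/30 (Fri), Session 9

Prepared by: 郭

Today's agenda

Everyone continues working on their own tasks

(media)

・After struggling for three days straight, I finally got MediaPipe running on the Raspberry Pi.

The Raspberry Pi's initial setup, the camera configuration, and running OpenCV in Jupyter are now all complete.

Next, I'll use a program to test the motor's operation. Let's see whether we can make something interesting.

Current issue: when running skeleton (pose) recognition on the Raspberry Pi 3B, there is visibly noticeable stuttering.

(robot)

We succeeded in moving the robot through programming.

(design)

Posters 3 and 4 are in progress

Goals and challenges

・Combining the robot with MediaPipe

・Creating the posters

References

Dofbot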

5 notes

Text

Uhh. MediaPipe V-Tuber tracking is kinda ableist/racist. Good job, Google.

So, my V-Tuber model is rigged for ARKit, right?

I tried MediaPipe. I can't use it. I have a facial musculoskeletal deformity, and what happens is my model's face ends up skewed to match the deformity. iPhone tracking works fine and doesn't do this.

Apparently MediaPipe also has similar issues tracking Asian faces, where it makes their models squint all the time.

Good job Google training your AI exclusively on white non-disabled people. Just…good job, guys.

Thaaat's not great.

2 notes

Text

Wow this has reached even Tumblr!?

I don't know if me saying this even matters, BUT this girl's project is a total rip-off of YouTuber Nicholas Renotte's project.

And you might say, of course you can refer to people's projects and make your own stuff. I agree, except he's given a step-by-step tutorial and SHE HASN'T EVEN BOTHERED TO CHANGE OR ADD A DIFFERENT SIGN.

How do I know this? I'm from the same college, a year senior, and did a similar project. Except we had all the letters of the alphabet, so you could spell out and write a paragraph, with an erase option. We also tried to bring in Hindi, as well as converting the whole thing to speech. (Which is only a couple of lines of code, but still.)

And the reason it makes me so mad is IT DOESN’T CREDIT THE ORIGINAL PERSON.

AND MORE IMPORTANTLY we should be paying attention to this:

There's also news I saw about another Chennai-based student (who was also deaf) who worked on an end-to-end learning solution for herself. Can't find the link at the moment.

And there’s this

And there’s LOADS more people and students working on such projects

My basic point is: don't copy code from YouTube and make it news, for God's sake!! Especially when you don't have the knowledge to actually scale it.

But yes, AI is useful for that. The model used here is from MediaPipe, which you can use to build datasets for hand, head, and shoulder position recognition, and it's a pretty decent pipeline, way more robust than MATLAB ones.
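As a rough illustration of the dataset-building idea (an assumed preprocessing step, not the code of either project discussed here), 2-D hand landmarks can be flattened into a feature row that ignores where the hand sits in the frame and how large it appears. The function name `landmarks_to_features` is hypothetical; index 0 is treated as the wrist, matching MediaPipe Hands' 21-point layout, and the z coordinate is ignored for simplicity.

```python
from typing import List, Sequence, Tuple


def landmarks_to_features(points: Sequence[Tuple[float, float]]) -> List[float]:
    """Flatten 2-D landmarks into a translation- and scale-invariant row.

    Hypothetical helper: points[0] is assumed to be the wrist.
    """
    wx, wy = points[0]
    # Express every landmark relative to the wrist (removes translation)
    rel = [(x - wx, y - wy) for x, y in points]
    # Divide by the largest offset (removes scale); guard against all-zero input
    scale = max(max(abs(dx), abs(dy)) for dx, dy in rel) or 1.0
    return [c / scale for dx, dy in rel for c in (dx, dy)]
```

A classifier trained on rows like these, one per labelled sign, is one common way such sign-recognition datasets are used.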

Edit:

However, for something like this to work smoothly there's a LOT of work to be done, and these projects very rarely take disabled people's views into account, which is why I stopped working on it. For example, this helps people who use sign language communicate with people who don't know it, but not the other way around.

A computer science student named Priyanjali Gupta, studying in her third year at Vellore Institute of Technology, has developed an AI-based model that can translate sign language into English.

#posting anonymously because I don't want personal info on my fandom main#ASL#engineering#I was working on it in 2022 btw#the youtube video was made a year before that#I have no idea how no one has flagged it yet

67K notes

Text

OpenPose vs. MediaPipe: In-Depth Comparison for Human Pose Estimation

Developing programs that comprehend their environments is a complex task. Developers must choose and design applicable machine learning models and algorithms, build prototypes and demos, balance resource usage against solution quality, and ultimately optimize performance. Frameworks and libraries address these challenges by providing tools that streamline the development process. This article examines the differences between OpenPose and MediaPipe, two prominent frameworks for human pose estimation, and their respective functions. We'll go through their features, limitations, and use cases to help you decide which framework is best suited for your project.

Understanding OpenPose: Features, Working Mechanism, and Limitations

OpenPose is a real-time multi-person human pose detection library developed by researchers at Carnegie Mellon University. It has made significant strides in accurately identifying human body, foot, hand, and facial keypoints in single images. This capability is crucial for applications in various fields, including action recognition, security, and sports analytics. OpenPose stands out as a cutting-edge approach to real-time human pose estimation, and its open-source code base is well documented and available on GitHub. The implementation uses Caffe, a deep learning framework, to construct its neural networks.

Key Features of OpenPose

OpenPose boasts several noteworthy features, including:

- 3D Single-Person Keypoint Detection in Real-Time: Enables precise tracking of individual movements.

- 2D Multi-Person Keypoint Detections in Real-Time: Allows simultaneous tracking of multiple people.

- Single-Person Tracking: Speeds up recognition and smooths the visual output by maintaining continuity between frames.

- Calibration Toolkit: Provides tools for estimating extrinsic, intrinsic, and distortion camera parameters.

How OpenPose Works: A Technical Overview

OpenPose combines several techniques to analyze human poses, which opens the door to numerous practical applications. First, the framework extracts features from an image using the first few layers of a convolutional backbone (VGG-19 in the original paper). These features are then fed into two parallel convolutional network branches.

- First Branch: Predicts 18 confidence maps corresponding to unique parts of the human skeleton.

- Second Branch: Predicts 38 Part Affinity Field (PAF) channels, an x and a y component for each of 19 limbs, which encode how pairs of parts are associated.

Further steps parse these estimates into skeletons. Peaks in the confidence maps give candidate keypoints; for each pair of connected parts, the candidates form a bipartite graph, and the PAF values score each possible connection so that weaker linkages can be removed from the graph.
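To make the parsing step concrete, here is a simplified sketch for a single limb type, assuming NumPy. It scores a candidate connection by sampling the PAF along the segment between two keypoint candidates and averaging the dot product with the segment's unit direction (a discrete approximation of the line integral), then greedily keeps the strongest non-conflicting pairs. The function names, `num_samples`, and `min_score` are illustrative choices, not OpenPose's API, and the actual implementation differs in detail.

```python
import numpy as np


def paf_score(paf_x, paf_y, p1, p2, num_samples=10):
    """Average alignment between one limb's PAF and the segment p1 -> p2.

    paf_x, paf_y: H x W arrays with the x and y components of the field.
    p1, p2: (x, y) pixel coordinates of two candidate keypoints.
    """
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    d = p2 - p1
    norm = np.linalg.norm(d)
    if norm == 0:
        return 0.0
    u = d / norm  # unit vector pointing from p1 to p2
    # Evenly spaced samples along the segment approximate the line integral
    ts = np.linspace(0.0, 1.0, num_samples)
    pts = p1 + ts[:, None] * d
    xs = np.clip(np.round(pts[:, 0]).astype(int), 0, paf_x.shape[1] - 1)
    ys = np.clip(np.round(pts[:, 1]).astype(int), 0, paf_x.shape[0] - 1)
    # Dot product of the field vector with the limb direction at each sample
    dots = paf_x[ys, xs] * u[0] + paf_y[ys, xs] * u[1]
    return float(dots.mean())


def greedy_match(cands_a, cands_b, paf_x, paf_y, min_score=0.05):
    """Pair part-A candidates with part-B candidates, strongest score first."""
    scored = [
        (paf_score(paf_x, paf_y, a, b), i, j)
        for i, a in enumerate(cands_a)
        for j, b in enumerate(cands_b)
    ]
    scored.sort(reverse=True)
    used_a, used_b, pairs = set(), set(), []
    for s, i, j in scored:
        # Drop weak links and candidates that are already matched
        if s < min_score or i in used_a or j in used_b:
            continue
        used_a.add(i)
        used_b.add(j)
        pairs.append((i, j, s))
    return pairs
```

With a synthetic field that points along +x only on row 5, candidates `[(2, 5), (2, 15)]` and `[(10, 5), (10, 15)]` yield a single pair, `(0, 0)`, with a score of 1.0; the diagonal pairings are blocked because their endpoints are already used.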

Limitations of OpenPose

Despite its capabilities, OpenPose has some limitations:

- Low-Resolution Outputs: Limits the detail level in keypoint estimates, making OpenPose less suitable for applications requiring high precision, such as elite sports and medical evaluations.

- High Computational Cost: Each inference costs 160 billion floating-point operations (160 GFLOPs), making OpenPose highly inefficient in terms of resource usage.
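As a back-of-the-envelope check on that figure (the 30 frames-per-second target is an assumption chosen for illustration), sustaining real-time video would require roughly:

```latex
160 \times 10^{9}\ \frac{\text{FLOP}}{\text{frame}} \times 30\ \frac{\text{frames}}{\text{s}}
  = 4.8 \times 10^{12}\ \frac{\text{FLOP}}{\text{s}} \approx 4.8\ \text{TFLOP/s}
```

of sustained compute for the network alone, before accounting for hardware utilization or the keypoint-grouping post-processing.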

Exploring MediaPipe: Features, Working Mechanism, and Advantages

MediaPipe is a cross-platform pipeline framework developed by Google for creating custom machine-learning solutions. Initially designed to analyze YouTube videos and audio in real-time, MediaPipe has been open-sourced and is now in the alpha stage. It supports Android, iOS, and embedded devices like the Raspberry Pi and Jetson Nano.

Key Features of MediaPipe

MediaPipe is divided into three primary parts:

1. A Framework for Inference from Sensory Input: Facilitates real-time processing of various data types.

2. Tools for Performance Evaluation: Helps in assessing and optimizing system performance.

3. A Library of Reusable Inference and Processing Components: Provides building blocks for developing vision pipelines.

How MediaPipe Works: A Technical Overview

MediaPipe allows developers to prototype a vision pipeline incrementally. The pipeline is described as a directed graph of components, where each component, known as a "Calculator," is a node. Data "Streams" connect these calculators, and each stream carries a time series of data "Packets." Together, the calculators and streams define a data-flow graph; each input stream maintains its own queue, so the receiving node can consume packets at its own rate. Calculators can be added or removed to refine the pipeline gradually. Developers can also create custom calculators, and MediaPipe provides sample code and demos for Python and JavaScript.
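The graph model described above can be illustrated with a toy Python sketch. This is not MediaPipe's API (real graphs are configured as `CalculatorGraphConfig` protos and executed by the C++ framework); the `Graph` and `Packet` classes here are hypothetical. Each node reads one named stream and writes another, and every stream has its own FIFO queue, so nodes can be added or removed without touching the others.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Callable, Deque, Dict, List, Tuple


@dataclass
class Packet:
    timestamp: int  # packets in a stream are ordered by timestamp
    payload: Any


class Graph:
    """Toy data-flow graph: nodes are callables, streams are named queues."""

    def __init__(self) -> None:
        self.streams: Dict[str, Deque[Packet]] = {}
        self.nodes: List[Tuple[Callable[[Any], Any], str, str]] = []

    def add_node(self, fn: Callable[[Any], Any], inp: str, out: str) -> None:
        # Each stream gets its own queue the first time it is referenced
        self.streams.setdefault(inp, deque())
        self.streams.setdefault(out, deque())
        self.nodes.append((fn, inp, out))

    def send(self, stream: str, packet: Packet) -> None:
        self.streams[stream].append(packet)

    def run_until_idle(self) -> None:
        progressed = True
        while progressed:
            progressed = False
            for fn, inp, out in self.nodes:
                queue = self.streams[inp]
                if queue:
                    pkt = queue.popleft()
                    # The output packet keeps the input packet's timestamp
                    self.streams[out].append(Packet(pkt.timestamp, fn(pkt.payload)))
                    progressed = True
```

For example, a two-node graph that doubles each "frame" and then sums it turns inputs `[1, 2]` and `[3, 4]` on `input_video` into packets `(0, 6)` and `(1, 14)` on `output`. Keeping one queue per stream is what lets a slow node fall behind without blocking its producer.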

MediaPipe Calculators: Core Components and Functionality

Calculators in MediaPipe are C++ computing units, each assigned a specific task. Data packets, such as video frames or audio segments, enter and exit through calculator ports. For each graph run, the framework calls a calculator's Open method once at the start, Process whenever new input is available, and Close once at the end. For example, the ImageTransform calculator receives an image as input and outputs a transformed version, while the ImageToTensor calculator accepts an image and produces a tensor.
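The Open/Process/Close contract can be sketched in Python as follows. In MediaPipe itself, calculators are C++ subclasses of `CalculatorBase` that override `Open`, `Process`, and `Close`; the Python classes below (`FlipImageCalculator`, `ToTensorCalculator`, `run_graph`) are hypothetical stand-ins for the ImageTransform and ImageToTensor examples, using nested lists in place of real images and tensors.

```python
class CalculatorSketch:
    """Mirrors the Open -> Process (per packet) -> Close lifecycle of one run."""

    def open(self) -> None: ...

    def process(self, packet): ...

    def close(self) -> None: ...


class FlipImageCalculator(CalculatorSketch):
    """Hypothetical image-transform step: mirrors each row horizontally."""

    def open(self):
        self.frames_seen = 0  # per-run state is initialized in open()

    def process(self, image):
        self.frames_seen += 1
        return [list(reversed(row)) for row in image]

    def close(self):
        self.summary = f"processed {self.frames_seen} frames"


class ToTensorCalculator(CalculatorSketch):
    """Hypothetical image-to-tensor step: scales 0..255 pixels to 0..1 floats."""

    def process(self, image):
        return [[px / 255.0 for px in row] for row in image]


def run_graph(calculators, frames):
    for calc in calculators:
        calc.open()  # called once before any packet arrives
    outputs = []
    for frame in frames:
        for calc in calculators:  # process() runs once per input packet
            frame = calc.process(frame)
        outputs.append(frame)
    for calc in calculators:
        calc.close()  # called once after the last packet
    return outputs
```

Running `run_graph([FlipImageCalculator(), ToTensorCalculator()], [[[0, 255]]])` flips the single-row image to `[[255, 0]]` and then normalizes it to `[[1.0, 0.0]]`.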

MediaPipe vs. OpenPose: A Comprehensive Comparison

When comparing MediaPipe and OpenPose, several factors must be considered, including performance, compatibility, and application suitability.

Performance: Efficiency and Real-Time Capabilities

MediaPipe offers end-to-end acceleration for ML inference and video processing, utilizing standard hardware like GPU, CPU, or TPU. It supports real-time performance and can handle complex, dynamic behavior and streaming processing. OpenPose, while powerful, is less efficient in terms of computational cost and may not perform as well in resource-constrained environments.

Compatibility: Cross-Platform Support and Integration

MediaPipe supports a wide range of platforms, including Android, iOS, desktop, edge, cloud, web, and IoT, making it a versatile choice for various applications. Its integration with Google's ecosystem, particularly on Android, enhances its compatibility. OpenPose, though also cross-platform, may appeal more to developers seeking strong GPU acceleration capabilities.

Application Suitability: Use Cases and Industry Applications

- Real-Time Human Pose Estimation: Both frameworks excel in this area, but MediaPipe's efficiency and versatility make it a better choice for applications requiring real-time performance.

- Fitness Tracking and Sports Analytics: MediaPipe offers accurate and efficient tracking, making it ideal for fitness and sports applications. OpenPose's lower-resolution outputs might not provide the precision needed for detailed movement analysis.

- Augmented Reality (AR): MediaPipe's ability to handle complex, dynamic behavior and its support for various platforms make it suitable for AR applications.

- Human-Computer Interaction: MediaPipe's versatility and efficiency in processing streaming time-series data make it a strong contender for applications in human-computer interaction and gesture recognition.

Conclusion: Making an Informed Choice Between MediaPipe and OpenPose

Choosing between MediaPipe and OpenPose depends on the specific needs of your project. Both frameworks offer unique advantages, but MediaPipe stands out for its efficiency, versatility, and wide platform support. OpenPose, with its strong GPU acceleration capabilities, remains a popular choice for projects that can accommodate its computational demands.

By assessing your project's requirements, including the intended deployment environment, hardware preferences, and desired level of customization, you can make an informed decision on which framework to use. Both MediaPipe and OpenPose represent significant advancements in human pose estimation technology, empowering a wide range of applications and experiences in computer vision.

1 note

Text

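June 7, Activity Log No. 9

Hello! This is the Ogata Project (尾形プロジェクト).

To get movements recognized, we decided to use something called "MediaPipe Model Maker". Lots of difficult terms came up, but we worked hard to keep up.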

0 notes

Text

I've been trying to install some stuff for our project so I can integrate MediaPipe with Unity, but the only online tutorial for it is confusing. Nevertheless, I must keep trying.

0 notes

Video

[EP #23] Assassin's Creed III / Assassin's Creed 3 -- No Commentary -- Xbox One https://youtu.be/_2P-BNIlcqI Check out the latest gaming video on YouTube, and support us for more gaming content.

0 notes

Text

Creating an Augmented Reality Bubble Pop Game with Python

Have you ever wanted to make a simple Augmented Reality (AR) game with Python? In this series of tutorials, I will guide you through the steps to create an AR-based Bubble Pop game, in which players pop the bubbles that appear on the screen using only their hand movements. This project is not only fun but also an effective way to…
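The core mechanic, popping a bubble when the hand touches it, comes down to a hit test. The sketch below is an assumption about how such a game could work, not the tutorial's own code: it converts a normalized fingertip landmark to pixel coordinates and removes any bubble whose centre lies within its radius of the fingertip. Index 8 is the index fingertip in MediaPipe Hands' landmark layout; with the legacy `mp.solutions.hands` API the normalized point would typically be read from `results.multi_hand_landmarks[0].landmark[8]`. The `Bubble` class and helper names are hypothetical.

```python
import math
from dataclasses import dataclass

INDEX_FINGER_TIP = 8  # MediaPipe Hands landmark index for the index fingertip


@dataclass
class Bubble:
    x: float  # centre x, in pixels
    y: float  # centre y, in pixels
    radius: float  # in pixels


def fingertip_to_pixels(landmark_xy, width, height):
    """Convert a normalized (0..1) landmark coordinate to pixel coordinates."""
    nx, ny = landmark_xy
    return nx * width, ny * height


def pop_bubbles(bubbles, fingertip_px):
    """Return (remaining bubbles, number popped) for one frame."""
    fx, fy = fingertip_px
    # A bubble survives only if the fingertip is outside its circle
    remaining = [b for b in bubbles if math.hypot(b.x - fx, b.y - fy) > b.radius]
    return remaining, len(bubbles) - len(remaining)
```

On a 640x480 frame, a fingertip at normalized `(0.5, 0.5)` maps to `(320.0, 240.0)`, so a bubble centred at `(330, 240)` with radius 20 is popped while one at `(100, 100)` survives. Calling `pop_bubbles` once per camera frame keeps the game loop simple.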

#Augmented Reality game with Python#Bubble Pop game Python#OpenCV and MediaPipe#Python AR game tutorial#hand detection tutorial

0 notes