#FPGA Accelerators Market

Agilex 3 FPGAs: Next-Gen Edge-To-Cloud Technology At Altera

Today, Altera, an Intel company, launched a line of FPGA hardware, software, and development tools to expand the market and use cases for its programmable solutions. At its annual developer conference, Altera unveiled new development kits and software support for its Agilex 5 FPGAs, along with new details on its next-generation, cost- and power-optimized Agilex 3 FPGA.

Why It Matters

Altera, which describes itself as the sole independent provider of FPGAs, offers complete stack solutions designed for next-generation communications infrastructure, intelligent edge applications, and high-performance accelerated computing systems. Its extensive FPGA portfolio gives customers adaptable hardware that can quickly respond to the shifting market demands of the intelligent computing era. With Agilex FPGAs equipped with AI Tensor Blocks, and with the Altera FPGA AI Suite, which speeds up FPGA development for AI inference using popular frameworks such as TensorFlow, PyTorch, and the OpenVINO toolkit along with tested FPGA development flows, Altera is leading the industry in using FPGAs for AI inference workloads.

What Agilex 3 FPGAs Offer

Altera today revealed additional product details for its Agilex 3 FPGA, which is designed to meet the power, performance, and size needs of embedded and intelligent edge applications. With densities ranging from 25K to 135K logic elements, Agilex 3 FPGAs offer faster performance, improved security, and higher integration in a smaller package than their predecessors.

The FPGA family features an on-chip dual-core Arm Cortex-A55 hard processor subsystem alongside a programmable fabric enhanced with AI capabilities. For intelligent edge applications, the FPGA enables real-time computation in time-sensitive uses such as the industrial Internet of Things (IoT) and driverless cars. In smart factory automation, including robotics and machine vision, Agilex 3 FPGAs provide smooth integration of sensors, drivers, actuators, and machine learning algorithms.

Agilex 3 FPGAs provide several major security advancements over the previous generation, such as bitstream encryption, authentication, and physical anti-tamper detection, to meet the needs of both defense and commercial projects. These capabilities help ensure dependable, secure performance for critical applications in industrial automation and other fields.

By using Altera’s HyperFlex architecture, Agilex 3 FPGAs deliver a 1.9× performance boost over the previous generation. Extending the HyperFlex architecture to Agilex 3 FPGAs allows high clock frequencies in an FPGA optimized for both cost and power. Added support for LPDDR4X memory and integrated high-speed transceivers of up to 12.5 Gbps further increase system performance.

Agilex 3 FPGA software support is scheduled to begin in Q1 2025, with development kits and production shipments following in the middle of the year.

How FPGA Software Tools Speed Market Entry

Quartus Prime Pro

The latest features of Altera’s Quartus Prime Pro software, which offers industry-leading compilation times, enhanced designer productivity, and faster time-to-market, are another way FPGA software tools speed market entry. The upcoming Quartus Prime Pro 24.3 release brings enhanced support for embedded applications and access to additional Agilex devices.

With this forthcoming release, customers can design with the Agilex 5 FPGA D-series, which targets an even wider range of use cases than the Agilex 5 FPGA E-series, a line optimized for efficient computing in edge applications. To help lower entry barriers for its mid-range FPGA family, Altera offers software support for the Agilex 5 FPGA E-series through a free license in the Quartus Prime software.

This release also supports embedded applications built on either Altera’s RISC-V solution, the Nios V soft-core processor, which can be instantiated in the FPGA fabric, or an integrated hard processor subsystem. Agilex 5 FPGA design examples highlighting Nios V features such as lockstep, full ECC, and branch prediction are now available to customers. The latest versions of Linux, VxWorks, and Zephyr add OS and RTOS support for the Agilex 5 SoC FPGA hard processor subsystem.

How Developers Can Get Started

In addition to the extensive range of Agilex 5 and Agilex 7 FPGA-based solutions already available to help developers get started, Altera and its ecosystem partners announced 11 new Agilex 5 FPGA-based development kits and system-on-modules (SoMs).

FPGA development kits give developers easy, affordable access to Altera hardware, firsthand experience with the features and advantages of Agilex FPGAs, and a quick path to full-volume production.

Kits are available for a wide range of use cases and in all regions. To find out how to buy, visit Altera’s Partner Showcase website.

Read more on govindhtech.com

Pulsewave Semiconductor is a leading provider of semiconductor design and verification services specializing in ASIC, FPGA, SoC, and IP core development. Our expert team delivers high-performance, low-power solutions using cutting-edge EDA tools and industry best practices. From RTL design to functional verification, we ensure robust, scalable, and reliable silicon solutions for a wide range of applications. Partner with us to accelerate your product development cycle and meet time-to-market goals with confidence.

AI Accelerators for Automotive Market Analysis and Key Developments to 2033

Introduction

The automotive industry is experiencing a paradigm shift with the integration of artificial intelligence (AI). AI is driving innovations across vehicle safety, automation, connectivity, and performance. However, implementing AI in automobiles requires high computational power, low latency, and energy efficiency. This demand has led to the emergence of AI accelerators—specialized hardware designed to optimize AI workloads in automotive applications.

AI accelerators enhance the capabilities of automotive systems by improving real-time decision-making, enabling advanced driver-assistance systems (ADAS), and facilitating autonomous driving. This article explores the role, types, benefits, and challenges of AI accelerators in the automotive market and their future potential.

Download a free sample report: https://tinyurl.com/ybxj6dp2

The Role of AI Accelerators in the Automotive Industry

AI accelerators are specialized processors designed to handle AI tasks efficiently. They optimize the execution of machine learning (ML) and deep learning (DL) models, reducing power consumption while enhancing computational performance. The automotive sector leverages AI accelerators for multiple applications, including:

Autonomous Driving: AI accelerators enable real-time processing of sensor data (LiDAR, radar, cameras) to make instantaneous driving decisions.

Advanced Driver-Assistance Systems (ADAS): Features such as adaptive cruise control, lane departure warning, and automatic emergency braking rely on AI accelerators for rapid processing.

Infotainment Systems: AI accelerators support voice recognition, gesture controls, and personalized in-car experiences.

Predictive Maintenance: AI-driven analytics help detect potential mechanical failures before they occur, improving vehicle longevity and reducing maintenance costs.

Energy Management in Electric Vehicles (EVs): AI accelerators optimize battery management systems to improve efficiency and extend battery life.

Types of AI Accelerators in Automotive Applications

There are various types of AI accelerators used in automotive applications, each catering to specific processing needs.

Graphics Processing Units (GPUs)

GPUs are widely used in automotive AI applications due to their parallel processing capabilities. Companies like NVIDIA have developed automotive-grade GPUs such as the NVIDIA Drive series, which power autonomous vehicles and ADAS.

Field-Programmable Gate Arrays (FPGAs)

FPGAs offer flexibility and power efficiency, allowing manufacturers to optimize AI models for specific tasks. They are widely used for in-vehicle sensor processing and real-time decision-making.

Application-Specific Integrated Circuits (ASICs)

ASICs are custom-designed chips optimized for specific AI workloads. Tesla's Full Self-Driving (FSD) chip is a prime example of an ASIC developed to support autonomous driving capabilities.

Neural Processing Units (NPUs)

NPUs are specialized AI accelerators designed for deep learning tasks. They provide efficient computation for tasks such as object detection, scene understanding, and natural language processing in automotive applications.

System-on-Chip (SoC)

SoCs integrate multiple processing units, including GPUs, CPUs, NPUs, and memory controllers, into a single chip. Leading automotive AI SoCs include Qualcomm’s Snapdragon Ride and NVIDIA’s Drive AGX platforms.

Benefits of AI Accelerators in the Automotive Sector

AI accelerators provide several advantages in automotive applications, including:

Enhanced Real-Time Processing

AI accelerators process vast amounts of sensor data in real time, allowing vehicles to make rapid and accurate decisions, which is crucial for autonomous driving and ADAS.

Energy Efficiency

AI accelerators are designed to maximize computational efficiency while minimizing power consumption, which is critical for electric and hybrid vehicles.

Improved Safety and Reliability

By processing complex AI algorithms quickly, AI accelerators enhance vehicle safety through advanced features such as pedestrian detection, collision avoidance, and driver monitoring systems.

Optimized Connectivity and Infotainment

AI accelerators enable smart voice assistants, real-time traffic navigation, and personalized infotainment experiences, improving the overall in-vehicle experience.

Reduced Latency

With dedicated AI processing units, accelerators minimize the delay in executing AI-driven tasks, ensuring seamless vehicle operations.

Challenges in Implementing AI Accelerators in Automotive Applications

Despite their advantages, AI accelerators face several challenges in the automotive market:

High Development Costs

The design and production of AI accelerators require significant investment, making them expensive for automakers and suppliers.

Heat Dissipation and Power Consumption

AI accelerators generate heat due to their intensive processing requirements, necessitating efficient cooling solutions and power management techniques.

Complex Integration

Integrating AI accelerators into existing automotive architectures requires robust software-hardware compatibility, which can be challenging for automakers.

Regulatory and Safety Compliance

AI-powered vehicles must comply with stringent safety and regulatory standards, which can slow down the adoption of AI accelerators.

Data Privacy and Security Concerns

Connected vehicles generate massive amounts of data, raising concerns about cybersecurity and data protection.

Future Trends in AI Accelerators for Automotive Applications

The automotive AI accelerator market is rapidly evolving, with several trends shaping its future.

Edge AI Computing

AI accelerators are enabling edge AI computing, reducing the dependency on cloud-based processing by handling AI tasks directly within the vehicle. This enhances real-time decision-making and reduces latency.

AI-Driven Sensor Fusion

AI accelerators will play a key role in sensor fusion, integrating data from multiple sensors (LiDAR, radar, cameras) to enhance autonomous vehicle perception and decision-making.
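As a minimal illustration of what fusing two sensors means in practice, the sketch below combines a radar and a LiDAR range estimate by inverse-variance weighting. This is equivalent to a single Kalman-filter measurement update. The readings, variances, and names are hypothetical, and production perception stacks use far richer models. The sketch only shows why a fused estimate is more certain than either input.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    """A single range reading with its noise variance (hypothetical values)."""
    value_m: float   # measured distance in metres
    variance: float  # sensor noise variance in m^2


def fuse(a: Measurement, b: Measurement) -> Measurement:
    """Inverse-variance weighted fusion of two independent measurements.

    Each reading is weighted by 1/variance, so the less noisy sensor
    dominates; the fused variance is always smaller than either input.
    """
    w_a = 1.0 / a.variance
    w_b = 1.0 / b.variance
    fused_value = (w_a * a.value_m + w_b * b.value_m) / (w_a + w_b)
    fused_variance = 1.0 / (w_a + w_b)
    return Measurement(fused_value, fused_variance)


if __name__ == "__main__":
    radar = Measurement(value_m=25.4, variance=0.25)  # hypothetical radar reading
    lidar = Measurement(value_m=25.0, variance=0.04)  # hypothetical LiDAR reading
    result = fuse(radar, lidar)
    print(f"fused range: {result.value_m:.3f} m, variance: {result.variance:.4f} m^2")
```

Because the LiDAR reading is assumed to be less noisy, the fused range lands much closer to it. The fused variance is also lower than either sensor's variance.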

Advancements in AI Chips

Major semiconductor companies are investing in next-generation AI chips with higher processing power and lower energy consumption. Companies like NVIDIA, Intel, Qualcomm, and Tesla are leading innovations in this space.

Expansion of AI in EVs

With the rise of electric vehicles, AI accelerators will be instrumental in optimizing battery management, energy efficiency, and predictive maintenance.

5G and V2X Connectivity

AI accelerators will enable enhanced vehicle-to-everything (V2X) communication, leveraging 5G networks for real-time data exchange between vehicles, infrastructure, and the cloud.

Conclusion

AI accelerators are transforming the automotive industry by enhancing vehicle intelligence, safety, and efficiency. With advancements in AI chip technology, the integration of AI accelerators will continue to grow, enabling fully autonomous vehicles and smarter transportation systems. While challenges remain, the future of AI accelerators in the automotive market is promising, paving the way for safer, more efficient, and intelligent mobility solutions.

Read full report: https://www.uniprismmarketresearch.com/verticals/automotive-transportation/ai-accelerators-for-automotive

Zesty Zero‑Knowledge: Proofs Market Hits $10.132 B by ’35

In the data privacy coliseum, zero‑knowledge proofs (ZKPs) are the undisputed gladiators—propelling the market to $10.132 billion by 2035. By letting parties validate facts without revealing underlying data, ZKPs are rewriting trust in blockchain, finance, healthcare, and beyond.

Today’s champions are zk‑SNARKs (succinct, with small proof sizes) and zk‑STARKs (transparent setup and quantum‑resistance). Developers leverage Circom and Halo2 toolkits to build modular circuits, while hardware accelerators—ASICs and FPGAs—slash proof‑generation times from minutes to milliseconds.
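The zk-SNARK and zk-STARK systems named above are too involved for a short listing. The core idea of proving a fact without revealing the data behind it can instead be sketched with a much older and simpler protocol: a Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. This is a swapped-in illustration and not how Circom or Halo2 circuits work. The group parameters are toy values chosen so the example runs instantly, and they offer no real security.

```python
import hashlib
import secrets

# Toy group parameters (assumption, illustration only; far too small to be
# secure): P = 2Q + 1 is a safe prime and G = 4 generates the subgroup of
# prime order Q.
P = 2039
Q = 1019
G = 4


def challenge(*values: int) -> int:
    """Fiat-Shamir challenge: hash the public transcript into Z_Q."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret_x: int) -> tuple[int, int, int]:
    """Prove knowledge of x with y = G^x mod P without revealing x."""
    y = pow(G, secret_x, P)     # public value the claim is about
    r = secrets.randbelow(Q)    # fresh random nonce
    t = pow(G, r, P)            # commitment
    c = challenge(G, y, t)      # non-interactive challenge
    s = (r + c * secret_x) % Q  # response; the random r masks x
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    """Accept iff G^s == t * y^c (mod P), which holds only for a valid response."""
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    x = secrets.randbelow(Q)  # the prover's secret
    y, t, s = prove(x)
    print("valid proof accepted:", verify(y, t, s))
    print("tampered proof accepted:", verify(y, t, (s + 1) % Q))
```

The verifier learns only that the prover knows some x behind y. The transcript (t, s) reveals nothing further about x, because s is blinded by the random nonce r.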

In DeFi, ZKPs cloak transaction amounts and counterparties, soothing regulatory concerns around AML and KYC. Enterprises in healthcare deploy ZKPs to audit pharmacovigilance data without exposing patient details. Governments experiment with e‑voting, using ZKPs to confirm vote integrity while preserving ballot secrecy.

Adoption hurdles remain: complex math intimidates newcomers, and proving costs can spike under heavy computation. That’s why ZKP‑as‑a‑Service startups are booming—abstracting cryptography behind RESTful APIs and low‑code SDKs, letting dev teams integrate privacy‑by‑default in weeks, not years.

Funding is flowing from VCs chasing blockchain’s next frontier: circuit‑compiler platforms, proof‑optimizing middleware, and educational hubs offering zero‑knowledge bootcamps. Standardization bodies (W3C, ISO) are drafting ZKP guidelines, while consortiums like the Enterprise Ethereum Alliance incubate cross‑industry pilots.

For product leads, the playbook is two‑fold: prototype a ZKP module for your most sensitive workflow (e.g., salary audits, supply‑chain provenance), and partner with ZKP middleware providers to minimize build time. Early wins—reduced data‑breach liability, faster compliance cycles—will cement ZKPs as non‑negotiable infrastructure.

The zesty future of zero‑knowledge isn’t hype—it’s the bedrock of a privacy‑first digital economy. Stake your claim now, or watch your competitors build unbreakable trust boundaries without you.

Source: DataStringConsulting

Servotech’s Edge in Embedded Control Software Systems

Introduction

In today’s fast-evolving technological landscape, embedded control software systems play a pivotal role in driving efficiency, automation, and precision across industries. Servotech has established itself as a leader in this domain, offering cutting-edge solutions tailored to meet the dynamic needs of automotive, industrial automation, healthcare, and IoT sectors. By leveraging advanced algorithms, real-time processing, and robust hardware integration, Servotech delivers superior embedded control software systems that enhance performance and reliability.

Understanding Embedded Control Software Systems

Embedded control software systems are specialized programs designed to manage and control hardware devices efficiently. These systems are integrated into microcontrollers and processors, ensuring seamless operation and real-time decision-making for various applications. They are widely used in automotive systems, smart appliances, industrial machines, medical devices, and more.

Key Features of Embedded Control Software Systems

Real-time Processing: Ensures rapid response and seamless execution of commands.

Scalability: Adapts to different hardware configurations and application requirements.

Power Efficiency: Optimized to consume minimal energy while maintaining high performance.

Robust Security: Implements encryption and access control measures to prevent unauthorized access.

Customizability: Designed to meet specific industry standards and functional needs.

Servotech’s Expertise in Embedded Control Software Systems

Servotech has distinguished itself in the embedded software industry by integrating state-of-the-art technology, innovative engineering approaches, and industry-specific solutions. The company focuses on delivering high-quality software that optimizes hardware functionality and ensures seamless interoperability.

Advanced Hardware-Software Integration

Servotech specializes in creating embedded solutions that efficiently bridge the gap between hardware and software. Its software seamlessly integrates with microcontrollers, FPGAs, and DSPs, enabling real-time operations and enhanced control across multiple domains.

Industry-Specific Solutions

Servotech provides tailor-made embedded control solutions for various industries, ensuring optimal performance and compliance with regulatory standards.

Automotive: ECU software for engine management, ADAS (Advanced Driver Assistance Systems), and infotainment control.

Industrial Automation: PLCs, SCADA systems, and motion control software for manufacturing and process automation.

Healthcare: Embedded software for medical imaging devices, diagnostic tools, and wearable health monitors.

IoT and Smart Devices: Connectivity solutions for smart home devices, industrial IoT systems, and wireless communication networks.

The Competitive Edge of Servotech

Servotech differentiates itself from competitors by emphasizing innovation, reliability, and efficiency. Here are some of the key factors that give Servotech an edge in the embedded control software domain:

1. Cutting-Edge Software Development

Servotech employs modern development methodologies, including Agile and DevOps, to ensure the rapid deployment of embedded solutions. Its use of model-based design (MBD) and software-in-the-loop (SIL) testing enhances software quality and accelerates time-to-market.

2. High-Performance Real-Time Operating Systems (RTOS)

The integration of real-time operating systems (RTOS) in Servotech’s embedded solutions ensures deterministic behavior, efficient multitasking, and optimal resource utilization. These systems are crucial for applications requiring millisecond-level precision, such as automotive safety systems and industrial automation.
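Deterministic RTOS behavior is usually checked with a schedulability analysis. The Liu and Layland test below is one classic example, though not necessarily what Servotech uses. It checks whether a set of periodic tasks is guaranteed to meet its deadlines under rate-monotonic priority scheduling. The task set and names are hypothetical, and deadlines are assumed equal to periods.

```python
from dataclasses import dataclass


@dataclass
class PeriodicTask:
    name: str
    wcet_ms: float    # worst-case execution time C_i
    period_ms: float  # period T_i (deadline assumed equal to period)


def utilization(tasks: list[PeriodicTask]) -> float:
    """Total processor utilization U = sum(C_i / T_i)."""
    return sum(t.wcet_ms / t.period_ms for t in tasks)


def liu_layland_bound(n: int) -> float:
    """Rate-monotonic utilization bound n * (2^(1/n) - 1)."""
    return n * (2 ** (1.0 / n) - 1)


def rm_schedulable(tasks: list[PeriodicTask]) -> bool:
    """Sufficient (not necessary) test: U <= bound guarantees all deadlines are met.

    A task set that fails this test may still be schedulable; an exact
    response-time analysis would be needed to decide.
    """
    return utilization(tasks) <= liu_layland_bound(len(tasks))


if __name__ == "__main__":
    # Hypothetical control-loop tasks for illustration only.
    tasks = [
        PeriodicTask("brake_control", wcet_ms=1.0, period_ms=5.0),
        PeriodicTask("sensor_read", wcet_ms=2.0, period_ms=10.0),
        PeriodicTask("telemetry", wcet_ms=5.0, period_ms=50.0),
    ]
    u = utilization(tasks)
    print(f"U = {u:.3f}, bound = {liu_layland_bound(len(tasks)):.3f}, "
          f"schedulable: {rm_schedulable(tasks)}")
```

The bound is conservative: it approaches about 69% utilization as the number of tasks grows. Exact response-time analysis is the usual next step for task sets that exceed it.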

3. AI-Driven Embedded Systems

Servotech is at the forefront of integrating artificial intelligence (AI) and machine learning (ML) into embedded control software. AI-driven embedded systems enhance predictive maintenance, adaptive control, and autonomous decision-making, leading to improved efficiency and reduced operational costs.

4. Cybersecurity and Data Protection

With increasing cybersecurity threats, Servotech implements advanced encryption techniques, secure boot mechanisms, and anomaly detection algorithms to safeguard embedded systems from cyber-attacks and data breaches.
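Secure boot generally works as a chain of trust: each stage measures the next image and refuses to hand over control if the image does not match a trusted reference. The sketch below shows that control flow using SHA-256 digests from Python's hashlib. The stage names and images are hypothetical. Real implementations typically verify digital signatures anchored in a hardware root of trust rather than comparing against a plain digest table.

```python
import hashlib
import hmac


def digest(image: bytes) -> str:
    """SHA-256 fingerprint of a firmware image."""
    return hashlib.sha256(image).hexdigest()


def verify_boot_chain(stages: list[tuple[str, bytes]], trusted: dict[str, str]) -> list[str]:
    """Boot stages in order, halting at the first image that fails verification.

    Returns the names of the stages that were allowed to run.
    """
    booted = []
    for name, image in stages:
        expected = trusted.get(name)
        # compare_digest avoids leaking timing information during comparison
        if expected is None or not hmac.compare_digest(digest(image), expected):
            print(f"verification failed at {name}; halting boot")
            break
        booted.append(name)
    return booted


if __name__ == "__main__":
    bootloader = b"hypothetical bootloader image"
    kernel = b"hypothetical RTOS kernel image"
    app = b"hypothetical control application"
    # Reference digests provisioned at manufacturing time (illustrative only).
    trusted = {"bootloader": digest(bootloader), "kernel": digest(kernel), "app": digest(app)}

    print(verify_boot_chain([("bootloader", bootloader), ("kernel", kernel), ("app", app)], trusted))
    tampered_kernel = kernel + b"\x00"  # a single appended byte changes the digest
    print(verify_boot_chain([("bootloader", bootloader), ("kernel", tampered_kernel), ("app", app)], trusted))
```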

5. Compliance with Industry Standards

Servotech ensures that all its embedded solutions comply with industry regulations such as ISO 26262 (automotive safety), IEC 62304 (medical device software), and IEC 61508 (industrial functional safety). Compliance helps ensure the reliability, safety, and interoperability of the systems.

Applications of Servotech’s Embedded Control Software

Servotech's embedded solutions are deployed in a wide range of applications across different industries:

Automotive Sector

Electronic Control Units (ECUs) for engine, transmission, and braking systems.

ADAS software for collision avoidance, lane departure warnings, and adaptive cruise control.

Infotainment and navigation systems for enhanced user experience.

Industrial Automation

Robotics control software for precision manufacturing.

SCADA and PLC software for monitoring and automating industrial processes.

Smart sensors and actuators for predictive maintenance and real-time analytics.

Healthcare and Medical Devices

Embedded control software for pacemakers, MRI machines, and blood pressure monitors.

Software for remote patient monitoring and telemedicine applications.

AI-driven diagnostic tools for medical imaging and analysis.

IoT and Smart Devices

Embedded firmware for smart home automation systems.

Secure IoT communication protocols for data transmission.

AI-enhanced edge computing solutions for real-time decision-making.

Future Prospects and Innovations

Servotech continues to push the boundaries of embedded control software systems with ongoing research and development initiatives. Some of the upcoming trends and innovations include:

1. Edge Computing for Real-Time Processing

Servotech is investing in edge computing technologies to reduce latency and improve real-time decision-making in embedded systems. This approach enhances the efficiency of IoT devices and industrial automation systems.

2. 5G-Enabled Embedded Systems

With the advent of 5G networks, Servotech is developing embedded solutions that leverage high-speed, low-latency communication for applications such as connected cars, remote surgery, and industrial automation.

3. Blockchain for Secure Embedded Systems

To enhance data integrity and security, Servotech is exploring blockchain-based authentication and encryption methods for embedded systems, particularly in IoT and financial technology applications.

4. AI-Driven Predictive Analytics

Machine learning algorithms integrated into embedded control systems will enable predictive maintenance, self-learning automation, and autonomous decision-making, reducing downtime and increasing efficiency.

Conclusion

Servotech stands out as a leader in embedded control software systems by delivering high-performance, secure, and innovative solutions across industries. With a focus on real-time processing, AI integration, cybersecurity, and compliance with industry standards, Servotech continues to drive advancements in embedded technology. As industries evolve towards greater automation and connectivity, Servotech’s expertise in embedded systems will remain crucial in shaping the future of smart, efficient, and intelligent systems.

AI Chips = The Future! Market Skyrocketing to $230B by 2034 🚀

The Artificial Intelligence (AI) Chip Market covers high-performance semiconductor chips tailored for AI computations, including machine learning, deep learning, and predictive analytics. AI chips, such as GPUs, TPUs, ASICs, and FPGAs, enhance processing efficiency, enabling autonomous systems, intelligent automation, and real-time analytics across industries.

To request a sample report: https://www.globalinsightservices.com/request-sample/?id=GIS25086&utm_source=SnehaPatil&utm_medium=Article

Market Trends & Growth:

GPUs (45% market share) lead, driven by parallel processing capabilities for AI workloads.

ASICs (30%) gain traction for customized AI applications and energy efficiency.

FPGAs (25%) are increasingly used for flexible AI model acceleration.

Inference chips dominate, optimizing real-time AI decision-making at the edge and cloud.

Regional Insights:

North America dominates the AI chip market, with strong R&D and tech leadership.

Asia-Pacific follows, led by China’s semiconductor growth and India’s emerging AI ecosystem.

Europe invests in AI chips for automotive, robotics, and edge computing applications.

Future Outlook:

With advancements in 7nm and 5nm fabrication technologies, AI-driven cloud computing, and edge AI innovations, the AI chip market is set for exponential expansion. Key players like NVIDIA, Intel, AMD, and Qualcomm are shaping the future with next-gen AI architectures and strategic collaborations.

How Electronic Design Services Boost Innovation in the Tech Industry

Alcove Electronic Services plays a pivotal role in driving innovation within the tech industry. By offering specialized electronic design services, such as PCB design, embedded systems, and FPGA programming, they help companies accelerate time-to-market, improve product quality, and create custom solutions. Their expertise enables businesses to stay ahead of the competition while optimizing costs and resources for maximum efficiency.

Intel Agilex 7 FPGA and SoC Improve Hardware Acceleration

Synchronising Wireless RAN Timing with Altera Agilex 7 SoC FPGAs Using AI

FPGA intelligent holdover and adaptive clock correction reduce GNSS reliance.

Modern Radio Access Networks (RAN) require precise timing for performance and stability. Low-latency scheduling, base station synchronisation, and coordinated multi-point (CoMP) broadcasts need precise frequency and phase alignment in wireless infrastructure.

Synchronisation usually relies on GNSS, PTP, and SyncE. When GNSS signals are lost because of urban canyon effects, indoor deployment, jamming, or spoofing, devices must switch to holdover, which often reduces accuracy, increases jitter, and interrupts service.

Clock Drift Prediction with Machine Learning | AI-Enhanced Holdover

Altera's technology provides AI-driven timing holdover using MLP and LSTM neural networks trained to recognise and anticipate clock drift trends in real time. Implementing these models directly on Agilex 7 SoC FPGAs enables ultra-low-latency adaptation to GNSS signal loss.

This method dynamically adjusts the Digital Phase-Locked Loop (DPLL) based on learned environmental behaviour.

Maintains frequency synchronisation without GNSS.

Up to 10 times lower power consumption and maintenance.

Compensates for oscillator drift caused by ageing, temperature, and voltage.

Guarantees real-time clock correction for next-generation RANs.

Resilient Open and Edge RAN

This solution was developed in MATLAB using the Altera FPGA AI Suite, Quartus Prime, and PTP Servo IP. It was stress-tested in various environments and validated with multi-day drift simulations. It provides timing robustness even in poor deployment conditions, making it suitable for Open RAN, private 5G, and remote edge deployments without GNSS.

We Value Intelligence at FPGAi

FPGAi lets system builders build hardware with intelligence that adapts to more complicated timing challenges as networks approach the edge. This AI-native synchronisation solution shows how neural inference and programmable logic cut TCO and improve RAN dependability.

SoC with Intel Agilex 7 FPGA

These high-end FPGAs offer industry-leading fabric and I/O speeds for the most bandwidth-, compute-, and memory-intensive applications.

Agilex 7 devices deliver better fabric performance per watt than 7 nm FPGAs. Available features include 32 GB of HBM2e memory, PCIe 5.0, CXL, integrated Arm-based CPUs, and 116 Gbps transceivers. These qualities make them well suited to broadcast, data centre, networking, industrial, and defence applications.

Agilex 7 SoC FPGA F-Series

F-Series FPGAs use Intel's 10 nm SuperFin fabrication process. They are ideal for many applications in many markets due to their high-performance crypto blocks, strong digital signal processing (DSP) blocks that enable various precisions of fixed-point and floating-point operations, and transceiver speeds up to 58 Gbps.

I-Series Agilex 7 FPGA and SoC

I-Series devices provide the highest-performance I/O interfaces for bandwidth-intensive applications. Built on Intel's 10 nm SuperFin manufacturing technology, this series extends the F-Series with PCIe 5.0, cache- and memory-coherent connection to CPUs via CXL, and transceiver speeds of up to 116 Gbps.

Agilex 7 SoC FPGA M-Series

M-Series devices are ideal for memory- and compute-intensive applications. This series uses Intel 7 process technology and builds on I-Series features by adding integrated high-bandwidth memory (HBM) alongside digital signal processing (DSP) blocks, plus high-efficiency DDR5 memory interfaces with a hard memory Network-on-Chip (NoC) to maximise memory bandwidth.

Advantages

Design Optimisation Benefits from Core Architecture

The second-generation Intel Hyperflex FPGA Architecture improves performance, power consumption, design capabilities, and designer productivity, enabling design optimisation.

Increase DSP speed and performance

As the first FPGA with hardened half-precision floating point (FP16) and BFLOAT16, Agilex 7 delivers up to 38 tera floating point operations per second (TFLOPS) of DSP performance for AI and other compute-intensive applications.
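The two 16-bit formats differ as follows. FP16 uses 1 sign bit, 5 exponent bits, and 10 mantissa bits. BFLOAT16 keeps float32's 8 exponent bits and cuts the mantissa to 7 bits, trading precision for range. The sketch below emulates both in software with Python's struct module. BFLOAT16 is modelled here by truncating a float32 to its upper 16 bits, a simplification, since hardware commonly rounds to nearest even instead. Nothing here describes how the Agilex DSP blocks implement either format.

```python
import struct


def to_fp16(x: float) -> float:
    """Round-trip x through IEEE 754 half precision (struct format 'e').

    Raises OverflowError for magnitudes beyond FP16's range (max 65504).
    """
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16_truncated(x: float) -> float:
    """Emulate BFLOAT16 by keeping only the upper 16 bits of the float32 encoding."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


if __name__ == "__main__":
    for value in (3.14159265, 1.0e-6, 70000.0):
        try:
            fp16 = to_fp16(value)
        except OverflowError:
            fp16 = float("inf")  # out of FP16 range
        print(f"{value:>12g}  fp16={fp16:<12g} bf16={to_bf16_truncated(value):g}")
```

The 70000.0 case shows the trade-off: it overflows FP16 but remains representable, at reduced precision, in BFLOAT16.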

Maintain Integrity and Privacy with Strong Security Features

The dedicated Secure Device Manager (SDM) manages configuration, authentication, bitstream encryption, key protection, tamper sensors, and active tamper detection and response. You may pick the functionality you need to meet your security requirements.

Application and Use Cases

Build Advanced Networking Solutions using Agilex 7 FPGAs and F-Tiles

Silicon and chiplet technologies provide scalability, flexibility, power economy, and hardened function performance, making them essential for FPGA system-level design.

Agilex 7 FPGAs Create Affordable and Effective mMIMO Solutions

Mobile communications demand is rising exponentially with the number of users and their data consumption. To meet this demand, mobile network operators (MNOs) are moving to 5G networks and higher-frequency RF bands.

Agilex 7 FPGAs Target 5G, SmartNICs, IPUs

As networks get faster, edge-to-cloud cyberattacks and data breaches are increasing, which makes encrypted communications increasingly important. Target applications include 5G networks, Open vSwitch (OvS), and network storage.

Key Features

Second-generation Intel Hyperflex FPGA Architecture: The Intel Hyperflex FPGA architecture adds Hyper-Registers, which are bypassable registers, throughout the FPGA fabric at the inputs of functional blocks and interconnect routing segments.
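The benefit of registers in the routing fabric comes down to simple timing arithmetic. The maximum clock frequency is set by the slowest register-to-register path, so splitting a long path with a pipeline register raises fmax at the cost of one cycle of latency. The sketch below works through that arithmetic with made-up delays. It ignores clock skew, setup time, and clock-to-out time, and it is not a model of how Quartus actually retimes a design.

```python
def fmax_mhz(path_delays_ns: list[float]) -> float:
    """fmax is limited by the longest register-to-register delay (1000 / ns -> MHz)."""
    return 1000.0 / max(path_delays_ns)


def split_path(delay_ns: float, stages: int) -> list[float]:
    """Idealised retiming: insert stages-1 registers that divide a path evenly."""
    return [delay_ns / stages] * stages


if __name__ == "__main__":
    # Hypothetical critical path (logic plus long routing) and other paths.
    critical_ns = 4.0
    other_paths_ns = [1.8, 2.2, 1.5]

    before = fmax_mhz([critical_ns] + other_paths_ns)
    # After splitting, the next-slowest path (2.2 ns) becomes the limit.
    after = fmax_mhz(split_path(critical_ns, 2) + other_paths_ns)
    print(f"before: {before:.0f} MHz, after one extra register: {after:.0f} MHz")
```

Once the original critical path is split, another path becomes the limit. This is why registers available throughout the routing, rather than only at block boundaries, give the tools more places to rebalance delays.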

Variable-Precision DSP: The unique DSP design allows DSP blocks to do multiplication, multiply-add, multiply-accumulate, floating point and integer addition, and variable-precision signal processing.

Interface for DDR4: Hardened memory controllers solve memory system constraints in high-performance computers and data centres with performance, density, low power, and control.

Hard processor system: Hardened quad-core Arm Cortex-A53 SoC.

#technology#technews#govindhtech#news#technologynews#Intel Agilex 7 FPGA#Agilex 7 FPGA#Agilex 7#Agilex 7 F series#Agilex 7 M Series#Agilex 7 I Series#Intel Agilex 7


How AI and Defense Initiatives Are Shaping the Semiconductor IP Market

The global semiconductor intellectual property (IP) market was valued at US$ 7.1 billion in 2023 and is expected to grow at a compound annual growth rate (CAGR) of 5.9%, reaching US$ 13.5 billion by 2034. The market is being propelled by the rising demand for AI-based applications, government initiatives to modernize defense technologies, and advancements in semiconductor IP commercialization strategies.
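These figures can be sanity-checked with the standard compound-growth formula, end = start * (1 + r)^n. This is a minimal sketch that assumes n = 11 compounding years from the 2023 base value to 2034. The post does not state the exact forecast window, and the helper names are hypothetical.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate implied by growing `start` to `end` over `years`."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value reached by compounding `start` at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


if __name__ == "__main__":
    # Assumption: 11 compounding years between the 2023 base and 2034.
    print(f"{project(7.1, 0.059, 11):.1f}")   # 13.3 (US$ billion)
    print(f"{cagr(7.1, 13.5, 11):.1%}")       # 6.0%
```

Compounding US$ 7.1 billion at 5.9% for 11 years gives about US$ 13.3 billion. The rate needed to reach US$ 13.5 billion is about 6.0%. The small gap is consistent with rounding in the published figures or a slightly different forecast window; the arithmetic alone cannot say which.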

Discover valuable insights and findings from our Report in this sample - https://www.transparencymarketresearch.com/sample/sample.php?flag=S&rep_id=15791

Top Market Trends

AI-Driven Growth: AI-based applications, particularly deep learning (DL) neural networks, are significantly influencing the demand for robust semiconductor IP solutions. AI systems rely on highly efficient and customizable IP cores to enhance processing power, reduce latency, and improve energy efficiency.

Security and Encryption Technologies: As digital threats grow, Hardware Root of Trust (HRoT) and encryption/decryption solutions are becoming critical in semiconductor IP, particularly in defense, IoT, automotive, and industrial applications.

Commercialization of Captive Semiconductor IP: Key players in the industry are developing new business models to commercialize in-house semiconductor IP. This trend is driving innovation and enabling companies to unlock additional revenue streams.

Regional Market Expansion: While North America leads in semiconductor IP due to investments in semiconductor manufacturing and security measures, Asia Pacific is rapidly expanding, with China dominating global semiconductor production and consumption.

Government Investments in Semiconductor Manufacturing: Policies such as the U.S. CHIPS and Science Act are catalyzing semiconductor manufacturing and IP development, ensuring a steady market growth trajectory.

Analysis of Key Players

Key players operating in the global semiconductor IP market are focusing on licensing ASIC and FPGA semiconductor IP solutions as well as patents. Some license broad portfolios of memory interface patents to semiconductor and systems companies.

Arm Limited, Rambus, Synopsys, Inc., CEVA, Inc., Maven Silicon, Cadence Design Systems, Inc., Microchip Technology Inc., Achronix Semiconductor Corporation, Marvell, Imagination Technologies, Lattice Semiconductor, Menta, Taiwan Semiconductor Manufacturing Company Limited, Movellus, and Allegro DVT are key players operating in the semiconductor IP industry.

Key Market Drivers

Demand for AI-based Applications: AI, DL, and machine learning (ML) applications are accelerating the demand for high-performance semiconductor IP solutions.

Government Initiatives in Defense Technologies: Defense organizations globally are integrating semiconductor IP to enhance security and performance in military-grade applications.

Increase in Semiconductor Manufacturing Investments: Significant investments in semiconductor fabs and advanced chip manufacturing, particularly in North America and Asia Pacific, are driving market expansion.

Visit our report to discover essential insights and analysis - https://www.transparencymarketresearch.com/semiconductor-ip-market.html

Market Challenges

Intellectual Property Theft and Security Risks: As semiconductor IP gains more prominence, issues related to unauthorized access and IP theft are increasing, posing challenges for industry players.

High R&D Costs: Developing new semiconductor IP solutions requires significant investments in research and development, which can be a barrier for new entrants.

Regulatory and Compliance Issues: The semiconductor IP industry is subject to stringent regulations, especially concerning data security and export controls, which can affect market growth.

Market Segmentation

By Type

Processor IP

Memory IP

Interface IP

ASIC

Verification IP

By Architecture Design

Hard IP Core

Soft IP Core

By IP Source

Licensing

Royalty

By End-user

Integrated Device Manufacturer (IDM)

Foundry

Others

By Industry Vertical

Consumer Electronics

Telecommunications & Data Center

Industrial

Automotive

Commercial

Healthcare

Others

Future Outlook

The semiconductor IP market is expected to witness steady growth due to the increasing integration of AI into consumer electronics, automotive, and industrial applications. Furthermore, as AI-driven systems require more advanced SoC architectures, semiconductor IP providers will continue innovating to meet market demands. Investments in security solutions, particularly encryption and HRoT, will remain a focal point for market growth.

Future Prospects: What’s Next for the Industry?

Advancements in Neural Network Processing (NNP): AI-driven processing requirements will continue to shape semiconductor IP, leading to more sophisticated and efficient chip architectures.

Integration of Physical Unclonable Functions (PUF): Security technologies such as PUF are expected to play a significant role in ensuring semiconductor IP integrity.

Expansion of IP Licensing and Royalty Models: Companies will increasingly focus on licensing and royalty-based revenue models to optimize IP monetization.

Strategic Collaborations and Acquisitions: Key players will engage in partnerships and acquisitions to strengthen their semiconductor IP portfolios.

About Transparency Market Research

Transparency Market Research, a global market research company registered in Wilmington, Delaware, United States, provides custom research and consulting services. Our exclusive blend of quantitative forecasting and trend analysis provides forward-looking insights for thousands of decision makers. Our experienced team of analysts, researchers, and consultants uses proprietary data sources and various tools and techniques to gather and analyze information. A team of research experts continuously updates and revises our data repository so that it always reflects the latest trends and information. With a broad research and analysis capability, Transparency Market Research employs rigorous primary and secondary research techniques to develop distinctive data sets and research material for business reports.

Contact:

Transparency Market Research Inc.

Corporate Headquarters Downtown, 1000 N. West Street, Suite 1200, Wilmington, Delaware 19801 USA

Tel: +1-518-618-1030

USA - Canada Toll Free: 866-552-3453


AI Accelerators for Automotive Market Investment Trends and Market Expansion to 2033

Introduction

The automotive industry is experiencing a paradigm shift with the integration of artificial intelligence (AI). AI is driving innovations across vehicle safety, automation, connectivity, and performance. However, implementing AI in automobiles requires high computational power, low latency, and energy efficiency. This demand has led to the emergence of AI accelerators—specialized hardware designed to optimize AI workloads in automotive applications.

AI accelerators enhance the capabilities of automotive systems by improving real-time decision-making, enabling advanced driver-assistance systems (ADAS), and facilitating autonomous driving. This article explores the role, types, benefits, and challenges of AI accelerators in the automotive market and their future potential.

Download a Free Sample Report: https://tinyurl.com/ybxj6dp2

The Role of AI Accelerators in the Automotive Industry

AI accelerators are specialized processors designed to handle AI tasks efficiently. They optimize the execution of machine learning (ML) and deep learning (DL) models, reducing power consumption while enhancing computational performance. The automotive sector leverages AI accelerators for multiple applications, including:

Autonomous Driving: AI accelerators enable real-time processing of sensor data (LiDAR, radar, cameras) to make instantaneous driving decisions.

Advanced Driver-Assistance Systems (ADAS): Features such as adaptive cruise control, lane departure warning, and automatic emergency braking rely on AI accelerators for rapid processing.

Infotainment Systems: AI accelerators support voice recognition, gesture controls, and personalized in-car experiences.

Predictive Maintenance: AI-driven analytics help detect potential mechanical failures before they occur, improving vehicle longevity and reducing maintenance costs.

Energy Management in Electric Vehicles (EVs): AI accelerators optimize battery management systems to improve efficiency and extend battery life.

Types of AI Accelerators in Automotive Applications

There are various types of AI accelerators used in automotive applications, each catering to specific processing needs.

1. Graphics Processing Units (GPUs)

GPUs are widely used in automotive AI applications due to their parallel processing capabilities. Companies like NVIDIA have developed automotive-grade GPUs such as the NVIDIA Drive series, which power autonomous vehicles and ADAS.

2. Field-Programmable Gate Arrays (FPGAs)

FPGAs offer flexibility and power efficiency, allowing manufacturers to optimize AI models for specific tasks. They are widely used for in-vehicle sensor processing and real-time decision-making.

3. Application-Specific Integrated Circuits (ASICs)

ASICs are custom-designed chips optimized for specific AI workloads. Tesla's Full Self-Driving (FSD) chip is a prime example of an ASIC developed to support autonomous driving capabilities.

4. Neural Processing Units (NPUs)

NPUs are specialized AI accelerators designed for deep learning tasks. They provide efficient computation for tasks such as object detection, scene understanding, and natural language processing in automotive applications.

5. System-on-Chip (SoC)

SoCs integrate multiple processing units, including GPUs, CPUs, NPUs, and memory controllers, into a single chip. Leading automotive AI SoCs include Qualcomm’s Snapdragon Ride and NVIDIA’s Drive AGX platforms.

Benefits of AI Accelerators in the Automotive Sector

AI accelerators provide several advantages in automotive applications, including:

1. Enhanced Real-Time Processing

AI accelerators process vast amounts of sensor data in real time, allowing vehicles to make rapid and accurate decisions, which is crucial for autonomous driving and ADAS.

2. Energy Efficiency

AI accelerators are designed to maximize computational efficiency while minimizing power consumption, which is critical for electric and hybrid vehicles.

3. Improved Safety and Reliability

By processing complex AI algorithms quickly, AI accelerators enhance vehicle safety through advanced features such as pedestrian detection, collision avoidance, and driver monitoring systems.

4. Optimized Connectivity and Infotainment

AI accelerators enable smart voice assistants, real-time traffic navigation, and personalized infotainment experiences, improving the overall in-vehicle experience.

5. Reduced Latency

With dedicated AI processing units, accelerators minimize the delay in executing AI-driven tasks, ensuring seamless vehicle operations.

Challenges in Implementing AI Accelerators in Automotive Applications

Despite their advantages, AI accelerators face several challenges in the automotive market:

1. High Development Costs

The design and production of AI accelerators require significant investment, making them expensive for automakers and suppliers.

2. Heat Dissipation and Power Consumption

AI accelerators generate heat due to their intensive processing requirements, necessitating efficient cooling solutions and power management techniques.

3. Complex Integration

Integrating AI accelerators into existing automotive architectures requires robust software-hardware compatibility, which can be challenging for automakers.

4. Regulatory and Safety Compliance

AI-powered vehicles must comply with stringent safety and regulatory standards, which can slow down the adoption of AI accelerators.

5. Data Privacy and Security Concerns

Connected vehicles generate massive amounts of data, raising concerns about cybersecurity and data protection.

Future Trends in AI Accelerators for Automotive Applications

The automotive AI accelerator market is rapidly evolving, with several trends shaping its future.

1. Edge AI Computing

AI accelerators are enabling edge AI computing, reducing the dependency on cloud-based processing by handling AI tasks directly within the vehicle. This enhances real-time decision-making and reduces latency.

2. AI-Driven Sensor Fusion

AI accelerators will play a key role in sensor fusion, integrating data from multiple sensors (LiDAR, radar, cameras) to enhance autonomous vehicle perception and decision-making.

3. Advancements in AI Chips

Major semiconductor companies are investing in next-generation AI chips with higher processing power and lower energy consumption. Companies like NVIDIA, Intel, Qualcomm, and Tesla are leading innovations in this space.

4. Expansion of AI in EVs

With the rise of electric vehicles, AI accelerators will be instrumental in optimizing battery management, energy efficiency, and predictive maintenance.

5. 5G and V2X Connectivity

AI accelerators will enable enhanced vehicle-to-everything (V2X) communication, leveraging 5G networks for real-time data exchange between vehicles, infrastructure, and the cloud.

Conclusion

AI accelerators are transforming the automotive industry by enhancing vehicle intelligence, safety, and efficiency. With advancements in AI chip technology, the integration of AI accelerators will continue to grow, enabling fully autonomous vehicles and smarter transportation systems. While challenges remain, the future of AI accelerators in the automotive market is promising, paving the way for safer, more efficient, and intelligent mobility solutions.

Read Full Report: https://www.uniprismmarketresearch.com/verticals/automotive-transportation/ai-accelerators-for-automotive.html


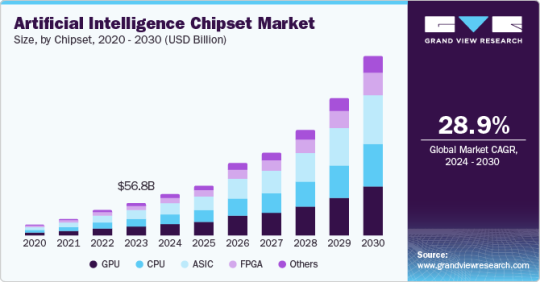

Artificial Intelligence Chipset Market Size To Reach USD 323.14 Billion By 2030

Artificial Intelligence Chipset Market Growth & Trends

The global artificial intelligence chipset market size is expected to reach USD 323.14 billion by 2030, registering a CAGR of 28.9% from 2024 to 2030, according to a new report by Grand View Research, Inc. The artificial intelligence (AI) chipset market is anticipated to expand at a CAGR of 33.6% from 2019 to 2025. An artificial intelligence chipset is built around a dedicated component in an electronic device that executes machine learning tasks. In addition, growing volumes of data have created a need for high-speed processors and faster computing, which is addressed by incorporating artificial intelligence into electronic components. For instance, Apple built a dedicated Neural Engine into its A11 Bionic chip to accelerate machine learning tasks. The growing use of AI in smartphones and other smart devices has boosted market growth.

With the rise in artificial intelligence applications ranging from smartphones to the automobile industry, adoption of AI-enabled chipsets has grown considerably. In addition, the adoption of smart homes and smart cities is directly influencing the AI chipset market's growth by creating numerous opportunities. High demand for high-speed processors due to increased data complexity is one of the major factors driving the growth of the market.

Artificial intelligence chipsets are being adopted rapidly in the consumer electronics industry owing to the rising demand for faster processors. New types of hardware and semiconductor accelerators are being introduced as artificial intelligence evolves rapidly through machine learning and deep learning. AI has been introduced to almost every industry, most notably consumer electronics, creating numerous opportunities in the semiconductor industry. Growing demand for faster computing processors with reduced operational and maintenance costs has boosted AI chipset market growth.

Request a free sample copy or view report summary: https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-chipset-market

Artificial Intelligence Chipset Market Report Highlights

GPU chipsets dominated the market with a revenue share of 30.9% in 2023. This is attributed to the exceptional parallel processing capabilities of GPUs, making them an ideal choice for compute-intensive AI workloads such as deep learning and neural networks.

The inference segment accounted for the largest share of the market in 2023. This is attributed to the growing demand for AI-powered applications in various industries, such as healthcare, finance, and automotive, which rely heavily on inference workloads to interpret and make decisions based on learned models.

Cloud AI computing held the largest market share in 2023. This is owing to a high demand for flexible and cost-effective AI infrastructure.

The consumer electronics segment accounted for the largest market share in 2023. The steadily rising adoption of smart home devices, smartphones, laptops, and tablets has created a vast market for AI chipsets that can enable features such as voice recognition, image processing, and predictive maintenance.

North America dominated the artificial intelligence chipset market with a revenue share of 43.8% in 2023. The region's well-developed IT infrastructure, including data centers and cloud computing services, has supported the growth of this market.

Artificial Intelligence Chipset Market Segmentation

Grand View Research has segmented the global artificial intelligence chipset market based on chipset, workload domain, computing technology, vertical, and region:

Artificial Intelligence Chipset Chipset Outlook (Revenue, USD Million, 2018 - 2030)

CPU

GPU

FPGA

ASIC

Others

Artificial Intelligence Chipset Workload Domain Outlook (Revenue, USD Million, 2018 - 2030)

Training

Inference

Artificial Intelligence Chipset Computing Technology Outlook (Revenue, USD Million, 2018 - 2030)

Cloud AI Computing

Edge AI Computing

Artificial Intelligence Chipset Vertical Outlook (Revenue, USD Million, 2018 - 2030)

Consumer Electronics

Marketing

Healthcare

Manufacturing

Automotive

Retail and E-commerce

BFSI

Others

Artificial Intelligence Chipset Regional Outlook (Revenue, USD Million, 2018 - 2030)

North America

U.S.

Canada

Mexico

Europe

UK

Germany

France

Asia Pacific

Japan

India

China

Australia

South Korea

Latin America

Brazil

Middle East & Africa

South Africa

Saudi Arabia

UAE

List of Key Players of Artificial Intelligence Chipset Market

Advanced Micro Devices, Inc.

Apple Inc.

Baidu, Inc.

Google LLC

Graphcore

Huawei Technologies Co., Ltd.

Intel Corporation

NVIDIA Corporation

Qualcomm Technologies, Inc.

SK HYNIX INC.

Browse Full Report: https://www.grandviewresearch.com/industry-analysis/artificial-intelligence-chipset-market

#Artificial Intelligence Chipset Market#Artificial Intelligence Chipset Market Size#Artificial Intelligence Chipset Market Share#Artificial Intelligence Chipset Market Trends


Enhancing High-Speed Data Transfers with AXI DMA Scatter-Gather: A Complete Guide!

Efficient data movement is crucial in high-performance computing and embedded systems. AXI DMA Scatter-Gather plays a vital role in optimizing high-speed data transfers, reducing CPU workload, and enhancing overall system efficiency. In this guide, we will explore the fundamentals of AXI DMA Scatter-Gather, its advantages, and how Digital Blocks provides industry-leading solutions to meet your design needs.

Understanding AXI DMA Scatter-Gather

AXI DMA Scatter-Gather is a Direct Memory Access (DMA) mode that transfers data to and from multiple, non-contiguous memory locations in a single operation. In simple DMA, the CPU programs one contiguous buffer transfer at a time. In scatter-gather mode, the DMA engine works through a chain of descriptors on its own, which improves system flexibility and performance.

Key Benefits of AXI DMA Scatter-Gather:

Reduced CPU Overhead: By offloading data movement tasks, it frees up CPU resources for other critical operations.

Increased Data Transfer Efficiency: It moves data to and from fragmented, non-contiguous buffers directly, avoiding intermediate copies and keeping throughput high.

Low-Latency Performance: Ideal for real-time applications requiring high-speed data processing.

Scalability: Supports high-bandwidth applications in networking, video processing, and AI/ML systems.

How AXI DMA Scatter-Gather Works

The Scatter-Gather mechanism in AXI DMA utilizes a descriptor-based architecture, where each descriptor defines the source, destination, and transfer parameters. This enables seamless handling of multiple data chunks without CPU intervention.

The Process Includes:

Descriptor Preparation: The CPU prepares a linked list of descriptors for data movement.

DMA Controller Execution: The AXI DMA engine reads the descriptors and executes transfers autonomously.

Completion Notification: The system receives an interrupt when the transfer is complete, ensuring seamless data flow.
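The three steps above can be modeled in software to show how a descriptor chain lets the engine move scattered buffers without per-transfer CPU work. This is a minimal sketch under stated assumptions. The `Descriptor` fields, `build_chain`, and `run_engine` are hypothetical names. A `bytearray` stands in for system memory, and a Python callback stands in for the completion interrupt. The sketch does not reproduce the descriptor layout of Digital Blocks' IP or any other vendor's AXI DMA core.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Descriptor:
    """One entry in a scatter-gather chain (hypothetical field layout)."""
    src: int                             # source offset in simulated memory
    dst: int                             # destination offset
    length: int                          # bytes to move
    next: Optional["Descriptor"] = None  # link to the next descriptor
    done: bool = False                   # status flag written back by the engine


def build_chain(segments: List[Tuple[int, int]], dst_base: int) -> Optional[Descriptor]:
    """CPU side (descriptor preparation): one descriptor per (src, length)
    segment, gathering scattered sources into a contiguous destination."""
    head: Optional[Descriptor] = None
    tail: Optional[Descriptor] = None
    dst = dst_base
    for src, length in segments:
        desc = Descriptor(src=src, dst=dst, length=length)
        if tail is None:
            head = desc
        else:
            tail.next = desc
        tail = desc
        dst += length
    return head


def run_engine(head: Optional[Descriptor], memory: bytearray,
               irq: Callable[[int], None]) -> None:
    """Engine side: walk the chain without CPU help, then signal completion once."""
    desc, count = head, 0
    while desc is not None:
        memory[desc.dst:desc.dst + desc.length] = memory[desc.src:desc.src + desc.length]
        desc.done = True
        count += 1
        desc = desc.next
    irq(count)  # completion notification for the whole chain


if __name__ == "__main__":
    mem = bytearray(48)
    mem[4:7], mem[12:14], mem[20:24] = b"abc", b"de", b"fghi"  # scattered buffers
    chain = build_chain([(4, 3), (12, 2), (20, 4)], dst_base=32)
    run_engine(chain, mem, lambda n: print(f"IRQ: {n} descriptors complete"))
    print(mem[32:41])  # bytearray(b'abcdefghi')
```

Keeping the chain as linked descriptors means the CPU's work ends once the chain is built. Real engines typically also let software choose whether to interrupt per descriptor or once per chain, which this sketch simplifies to a single callback.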

Digital Blocks: Leading the Way in AXI DMA Scatter-Gather Solutions

At Digital Blocks, we specialize in providing silicon-proven AXI DMA Scatter-Gather IP cores designed to enhance the performance of your FPGA, ASIC, and SoC designs. Our high-performance DMA controllers enable efficient, low-latency data movement, making them ideal for applications in networking, automotive, video processing, and artificial intelligence.

Why Choose Digital Blocks?

Proven Expertise: Over two decades of experience in developing high-speed IP cores.

Optimized Performance: Designed for high-throughput, low-latency applications.

Seamless Integration: Fully compatible with industry-standard AXI architectures.

Customizable Solutions: Tailored to meet specific project requirements.

Conclusion

AXI DMA Scatter-Gather is a game-changer in high-speed data transfers, enabling efficient memory management and reducing CPU workload. With Digital Blocks' industry-leading AXI DMA solutions, engineers can accelerate their designs, improve system performance, and achieve faster time-to-market.

For More Details: https://www.digitalblocks.com/axi-dma-verilog-ip-core/


AI Infrastructure Companies - NVIDIA Corporation (US) and Advanced Micro Devices, Inc. (US) are the Key Players

The global AI infrastructure market is expected to be valued at USD 135.81 billion in 2024 and is projected to reach USD 394.46 billion by 2030, growing at a CAGR of 19.4% from 2024 to 2030. NVIDIA Corporation (US), Advanced Micro Devices, Inc. (US), SK HYNIX INC. (South Korea), SAMSUNG (South Korea), and Micron Technology, Inc. (US) are the major players in the AI infrastructure market. Market participants have diversified their offerings and expanded their global reach through strategic growth approaches such as new product launches, collaborations, alliances, and partnerships.
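As a quick consistency check on these figures, the implied growth rate can be computed directly. This assumes six compounding years between the 2024 value and the 2030 projection:

```latex
\text{CAGR} = \left(\frac{394.46}{135.81}\right)^{1/6} - 1 \approx (2.9045)^{1/6} - 1 \approx 0.194 = 19.4\%
```

This agrees with the stated 19.4%.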

For instance, in April 2024, SK HYNIX announced an investment in Indiana to build an advanced packaging facility for next-generation high-bandwidth memory. The company also collaborated with Purdue University (US) to build an R&D facility for AI products.

In March 2024, NVIDIA Corporation introduced the NVIDIA Blackwell platform, which enables organizations to build and run real-time generative AI and features six transformative technologies for accelerated computing. It supports AI training and real-time LLM inference for models of up to 10 trillion parameters.

Major AI Infrastructure companies include:

NVIDIA Corporation (US)

Advanced Micro Devices, Inc. (US)

SK HYNIX INC. (South Korea)

SAMSUNG (South Korea)

Micron Technology, Inc. (US)

Intel Corporation (US)

Google (US)

Amazon Web Services, Inc. (US)

Tesla (US)

Microsoft (US)

Meta (US)

Graphcore (UK)

Groq, Inc. (US)

Shanghai BiRen Technology Co., Ltd. (China)

Cerebras (US)

NVIDIA Corporation:

NVIDIA Corporation (US) is a multinational technology company that designs Graphics Processing Units (GPUs) and Systems-on-Chips (SoCs), as well as artificial intelligence (AI) infrastructure products. The company has transformed the gaming, data center, AI, and professional visualization markets with its GPU technology. Its deep learning and AI platforms are recognized as key enablers of AI computing and ML applications. NVIDIA is positioned as a leader in AI infrastructure, providing a comprehensive stack of hardware, software, and services. It operates through two reportable segments: Compute & Networking and Graphics. The Graphics segment includes GeForce GPUs for gamers, game streaming services, NVIDIA RTX/Quadro for enterprise workstation graphics, and virtual GPU for computing, automotive, and 3D internet applications. The Compute & Networking segment includes computing platforms for data centers, automotive AI and solutions, networking, NVIDIA AI Enterprise software, and DGX Cloud. The computing platform integrates an entire computer onto a single chip, combining multi-core CPUs and GPUs to drive supercomputing for drones, autonomous robots, consoles, cars, and entertainment and mobile gaming devices.

Download PDF Brochure @ https://www.marketsandmarkets.com/pdfdownloadNew.asp?id=38254348

Advanced Micro Devices, Inc.:

Advanced Micro Devices, Inc. (US) is a provider of semiconductor solutions that designs and integrates technology for graphics and computing. The company offers many products, including accelerated processing units, processors, graphics, and systems-on-chips. It operates through four reportable segments: Data Center, Gaming, Client, and Embedded. The Data Center segment's portfolio includes server CPUs, FPGAs, DPUs, GPUs, and Adaptive SoC products for data centers, and the company offers its AI infrastructure under this segment. The Client segment comprises chipsets, CPUs, and APUs for desktop and notebook personal computers. The Gaming segment focuses on discrete GPUs, semi-custom SoC products, and development services for entertainment platforms and computing devices. The Embedded segment covers embedded FPGAs, GPUs, CPUs, APUs, and Adaptive SoC products. Advanced Micro Devices, Inc. (US) supports a wide range of applications, including automotive, defense, industrial, networking, data center and computing, and consumer electronics.


The Future of Digital Circuits: Voler Systems' FPGA Solutions at the Forefront

In the ever-evolving world of technology, where speed, efficiency, and flexibility are paramount, FPGA design has emerged as a game-changer in digital circuit performance. At Voler Systems, we take pride in delivering cutting-edge FPGA solutions that power a diverse array of applications, from medical devices to high-speed data processing systems. Our expertise in FPGA design empowers businesses to create customized, high-performance digital solutions that drive innovation and accelerate time to market.

FPGAs offer unparalleled versatility, allowing for the development of digital circuits that can be reprogrammed and optimized to meet evolving needs. Whether you're designing a next-generation medical device or integrating complex video and image processing systems, FPGA technology provides the agility and performance required to stay ahead in competitive markets.

At Voler Systems, our embedded systems digital circuit design engineers specialize in developing FPGA designs with industry-leading platforms such as Xilinx, Intel (formerly Altera), and Microchip. Our breadth of experience enables us to tackle complex design challenges, ensuring seamless system integration, optimized architecture development, and robust design performance.

One of the key advantages of FPGA design is the ability to customize hardware to specific application requirements. Unlike fixed-function ASICs, FPGAs allow for reconfiguration, enabling engineers to adapt and refine designs without costly and time-consuming hardware revisions. This flexibility not only accelerates product development cycles but also reduces risk, making FPGA an attractive solution for industries that demand rapid innovation and adaptability.

Voler Systems has successfully developed FPGA solutions for a variety of high-demand applications. In the medical sector, our FPGA designs enable advanced signal processing for diagnostic and therapeutic devices, ensuring accuracy and reliability. For image and video processing applications, our designs facilitate real-time data handling and high-speed memory interfaces, delivering seamless performance for critical visual systems. Our expertise extends to industrial automation, telecommunications, and consumer electronics, where FPGA technology enhances performance, scalability, and efficiency.

Our collaborative approach ensures that each project benefits from comprehensive system analysis and meticulous design optimization. We work closely with clients to understand their unique requirements, crafting FPGA solutions that align with their goals and operational demands. By leveraging our extensive knowledge of FPGA platforms and design tools, we deliver solutions that maximize performance while minimizing power consumption and footprint.

In addition to our technical expertise, Voler Systems is committed to providing exceptional support throughout the design and development process. From initial concept and feasibility studies to prototyping and final deployment, our team is dedicated to ensuring that each FPGA design meets the highest standards of quality and reliability. Our rigorous testing and validation procedures guarantee that our solutions perform flawlessly under real-world conditions, giving clients the confidence to launch products that exceed expectations.

In today's fast-paced technological landscape, the ability to innovate and adapt is crucial. With Voler Systems' FPGA solutions, businesses gain a competitive edge, harnessing the power of FPGA technology to redefine digital circuit performance. Whether you're developing the next breakthrough medical device or optimizing data processing systems, our FPGA design expertise positions you for success in a dynamic and demanding market.

#electronic product design#electronic design services#wearable medical device#medical device design#Medical Device Design Consultant#Medical Devices Development
