#Even some (VERY LIMITED) dev work can be automated but needs oversight

The future of mobile growth teams

Last week I wrote about why ad creative has become such an important part of the mobile marketing workflow and provided a general framework for producing performant mobile ad creative at scale (the content is an adaptation of what will be presented in the forthcoming Modern Mobile Marketing at Scale workshop series taking place in October). In that article, I provided some background on the tectonic shift that has taken place within mobile marketing over the past two years as ad platforms have prioritized algorithmic campaign management, and while the piece explores the role that ad creative plays in rapid experimentation of both audiences and the ad creatives that best resonate with them, it doesn’t explain the role that experimentation itself plays in this modern mobile growth paradigm.

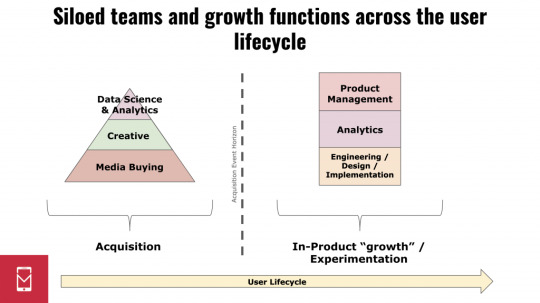

The process of experimentation generally appears to be siloed within different specializations at many companies: with ad creative, or with event testing, or with in-app content personalization, etc. In other words, while experimentation is at the very heart of what growth teams do, many teams see it not as a functional process in and of itself but rather as an abstract exercise that they apply to specific aspects of growth. For many developers, this results in various components of “growth” being broken out into disparate teams that operate independently across the user lifecycle:

This arrangement — a functional separation between acquisition and in-product “growth” teams — is not efficient, and it will become extinct as more and more companies adapt to the operating standards of the new mobile distribution environment. But before exploring that, two notes about the above diagram:

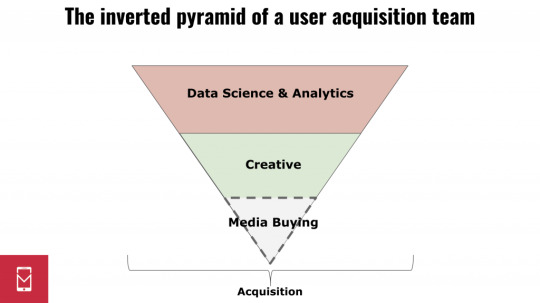

The acquisition team pyramid as depicted here represents how many advertisers today may think about team composition (with the size of each layer corresponding to the amount of resources / headcount allocated to it), but that is rapidly changing, too. Media buying as a distinct role is becoming almost obsolete as analysis and creative experimentation become the high-impact levers available to marketing teams. The acquisition team of the future looks more like the below, with data science and analytics teams driving marketing spend and with media buying being potentially completely automated away:

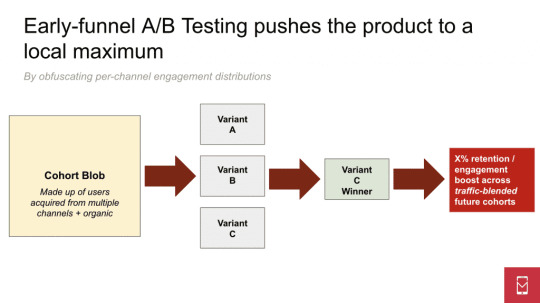

The impact of A/B testing-oriented “growth” teams is, I believe, generally exaggerated. My belief is that endless A/B testing, when it is constrained entirely within the product and not connected to marketing, can actually destroy value as it pushes users into local maxima and ignores opportunities for personalization. I have seen growth teams thrash on product iterations so aggressively that experiments confounded each other and almost certainly subverted the work of acquisition teams in terms of finding high-potential users.

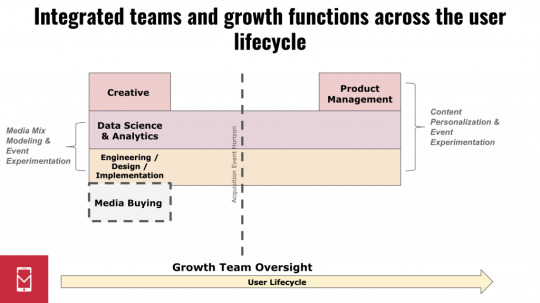

Those points out of the way, it’s useful to consider how the siloed teams depicted in the diagram amalgamate and evolve collectively into the growth team of the future. In order to do so, it’s important to identify what I believe to be the critical modern functional components of growth:

Media mix modeling. The increasing opacity of placements on Facebook and Google as well as the general growth of non-direct response budgets for mobile advertisers (which is in part a reaction to the aforementioned opacity) is creating a situation where per-user marketing attribution is impossible or impractical. As mobile moves into a post-attribution era, media mix modeling is becoming the means by which mobile advertisers make budgeting decisions. Media mix modeling is mostly a data science exercise that informs media buying, but it also has a tremendous impact on product metrics. Media mix modeling and marketing incrementality are at the frontier of exciting marketing science at the moment, and these functional skillsets will sit at the core of the growth team of the future;

Content personalization. A/B testing is a blunt instrument and, as noted earlier, tends to not work well or even be counterproductive when it isn’t aligned with what an acquisition team is doing. Rather than optimizing the entire user base as a monolithic cohort, I am seeing the most sophisticated advertisers now personalize the user experience on the basis of many demographic features that include the user’s acquisition channel. With this approach, signal from the source of acquisition flows through the app install and results in a personalized experience for everyone based on data that is gathered throughout the entire user lifecycle (and not just the portion that is spent in-app, after acquisition);

Event signal measurement. As algorithmic campaign management obfuscates audience development and targeting, advertisers increasingly rely on in-app events sent back to campaigns in campaign strategies like Google UAC and Facebook AEO and VO to help ad platforms identify high-value users and target them disproportionately. Part of scaling advertising campaigns is experimenting with these events to find the ones that deliver the best, most meaningful signal to the ad platforms, which requires tight coordination between the user acquisition and product teams;

Creative experimentation. As the second set of fundamental inputs (along with in-app events) of modern mobile advertising campaigns, creative experimentation is a critical aspect of scaling mobile growth. I cover this in great detail in this post.

The first two practices, media mix modeling and content personalization, are obviously inter-related: as media mix modeling informs budget allocations across channels and media formats based on overall, top-line revenue, content personalization optimizes the experience at the user level on the basis of information that is generated in the acquisition process (e.g. users from acquisition channel X are initially placed on content path Y within the FTUE). In an article titled A/B testing can kill product growth, I visualized the approach that most growth teams take in A/B testing product features and user flows:

With content personalization that includes acquisition information, users are put on content paths on the basis of their source of acquisition from the time of install. But what’s more, the A/B testing mechanic, which is very manual and prone to superfluous specificity, is replaced in most cases of modern personalization by online bandit mechanics, which are easier to manage and scale. Implementing this kind of personalization is clearly not so much a product or marketing exercise but rather a data science activity: building, measuring, and tuning these models is at the heart of the process. And media mix modeling is no different: a model tests budget allocations for incrementality and overall return and adjusts them accordingly.
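To make the bandit idea concrete, here is a minimal sketch of Thompson sampling keyed on acquisition channel. It is illustrative only and not a description of any particular team's system: the `ChannelBandit` class, the `simulate` helper, the channel and path names, and the conversion rates are all hypothetical, and a real implementation would likely use richer context than the channel alone.

```python
import random
from collections import defaultdict


class ChannelBandit:
    """Beta-Bernoulli Thompson sampling with separate state per acquisition channel."""

    def __init__(self, content_paths, seed=None):
        self.content_paths = list(content_paths)
        self.rng = random.Random(seed)
        # (channel, path) -> [alpha, beta]; [1, 1] is a uniform Beta(1, 1) prior.
        self.posteriors = defaultdict(lambda: [1, 1])

    def choose_path(self, channel):
        """Draw a plausible conversion rate for each path and pick the highest draw."""
        draws = {
            path: self.rng.betavariate(*self.posteriors[(channel, path)])
            for path in self.content_paths
        }
        return max(draws, key=draws.get)

    def record(self, channel, path, converted):
        """Update the posterior for (channel, path) with one observed outcome."""
        index = 0 if converted else 1
        self.posteriors[(channel, path)][index] += 1


def simulate(bandit, channel, true_rates, rounds):
    """Run the bandit against hypothetical conversion rates; return how often each path was served."""
    counts = {path: 0 for path in bandit.content_paths}
    for _ in range(rounds):
        path = bandit.choose_path(channel)
        converted = bandit.rng.random() < true_rates[path]
        bandit.record(channel, path, converted)
        counts[path] += 1
    return counts
```

Unlike a fixed-split A/B test, traffic here shifts toward the better-performing content path as evidence accumulates, and each acquisition channel can converge on a different path without anyone having to define and monitor a separate experiment per channel.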

So what does this mean for the growth team of the future? It means three things:

The team has oversight of both acquisition and in-app behavioral optimization;

The team is focused on building systems that determine optimal content paths for each user;

The team recognizes that it has opportunities to drive improvements in product LTV not just by optimizing acquisition but also by optimizing the product experience at the user level and finding the best in-app signals of value to transmit to ad platforms.

In practice, this means that the growth team — as a singular, integrated entity — has broad oversight over both acquisition and in-app content optimization that benefits from visibility into acquisition source:

So just as ad creative has grown in importance to necessitate a fundamental change in the way growth teams operate, so has experimentation: it has extended into the app, requiring a growth team’s purview to be enlarged past the “acquisition event horizon,” or the point after which a user downloads an app.

One question that the above analysis raises is: why is this the future of mobile growth and not the present, if these systems are already in place? One reason is that, generally, teams are slow to adapt to significant ecosystem changes and they do so very gradually. A mobile-first company just being formed right now might build its growth team in a way that facilitates this modern reality, but existing teams must re-tool and re-hire.

A second reason is that there is a limited talent pool of growth marketers with the skills needed to excel in this environment, and they are in great demand. Hiring a competent growth marketer with a background in scaling mobile products into millions of dollars of advertising spend per month (or millions of DAU) is difficult; advertisers struggle to build growth teams and often run into the chicken-and-egg problem of mobile advertising. But this new paradigm undoubtedly is the future of mobile growth, and whether slowly or wholesale, teams must prepare for and adapt to it.

Photo by Ross Findon on Unsplash

The post The future of mobile growth teams appeared first on Mobile Dev Memo.

The future of mobile growth teams published first on https://leolarsonblog.tumblr.com/

Key Takeaways

Edge cloud systems face security issues when it comes to fragmenting data, locking down physical access, and the tendency of edge cloud systems to grow beyond the boundaries of what they were originally designed to operate in.

Most security teams are stuck with edge cloud systems, and need to figure out how to harden them against attack rather than abandon them.

Overcoming the security challenges of edge cloud systems will really come down to decentralization, encryption, and utilizing full spectrum security measures.

A big determining factor for the security of edge cloud systems will be the speed at which businesses deploy them.

Edge cloud was a major topic of debate at RSA this year. Multiple panels were devoted to the subject, and even in those that weren’t, the utility of edge solutions was often raised. At the same time, however, a tension was apparent: operations and dev staff were quick to stress the performance gains of edge cloud infrastructures, while cybersecurity pros raised concerns about the security implications of these same architectures.

This tension has been apparent for a while. In their 2020 Outlook report, Carbon Black pointed to a bit of a rift between IT and security teams regarding resource allocation in cloud edge structures.

Edge, Cloud, and Edge Cloud

In order to see the challenges involved in deploying edge cloud solutions whilst retaining strong security, it’s worth reminding yourself why edge cloud solutions such as Software-as-a-Service (SaaS) were initially developed. Such solutions are becoming virtually commonplace, to the point that SaaS in particular is projected to account for almost all of the software needs for 86% of companies within the next two years (it already is being used to a lesser extent by 90% of companies right now).

Some security firms will tell you that their edge cloud SaaS solutions are only designed to ensure security, but that's not quite true. In reality, edge cloud systems were developed with one simple factor in mind: bandwidth. That's why, for instance, the Open Glossary of Edge Computing, an open source effort led by the Linux Foundation's LF Edge group, defines edge cloud systems primarily in terms of performance: "by shortening the distance between devices and the cloud resources that serve them," the glossary explains, "and also reducing network hops, edge computing mitigates the latency and bandwidth constraints of today's Internet, ushering in new classes of applications."

In other words, as the number of IoT devices connected to networks began to increase exponentially around five or so years ago, many systems engineers found that their cloud providers were not keeping up with the increased computing load. The solution was to insert another level of processing between devices and cloud storage providers, and thereby reduce the data loads that cloud services had to process. Only later, in fact, were edge cloud systems thought about as a tool to secure the devices they interact with. And that's primarily why cybersecurity pros don't trust them.

These concerns are well illustrated by the ongoing worry that the most high-profile example of edge cloud systems, automated vehicles, can be easily hacked. The ease with which data used by autonomous vehicles can be accessed and manipulated has been a concern for years, and as a result many of the security protocols used in edge cloud systems have been designed, primarily, to protect autonomous vehicles.

The Challenges

There are a few problems with edge cloud solutions from a security perspective. Some are technical, and some relate to the way in which these services are used within a typical organization.

Architecture

First, let's think about the structure of edge cloud systems. In most implementations, edges are within organizations' computing boundaries, and so they will be protected by a wide variety of tools that focus on perimeter scanning and intrusion detection. However, that's not quite the whole story: in most systems, there will also be a tunnel between the edge straight to cloud storage.

Sending data from the edge to the cloud in a secure way is fairly straightforward, because organizations will control the infrastructure that is used to encrypt and verify it. The problem arises when the cloud needs to send data back to the edge for processing. The challenge here is to ensure that this data is authenticated and verified, and is therefore safe to enter into an organization's internal systems.
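One common way to approach the return path is to have the cloud attach a keyed message authentication code that the edge checks before accepting anything. The sketch below uses HMAC-SHA256 from Python's standard library. It is a simplified illustration under stated assumptions: the function names, the message layout, the pre-shared key, and the `MAX_AGE_SECONDS` freshness window are all hypothetical, and real deployments usually layer this under TLS with proper key management.

```python
import hashlib
import hmac
import json
import time
from typing import Optional

MAX_AGE_SECONDS = 300  # hypothetical freshness window to limit replayed messages


def sign_message(key: bytes, payload: dict, now: Optional[float] = None) -> dict:
    """Cloud side: serialize the payload with a timestamp and attach an HMAC tag."""
    timestamp = time.time() if now is None else now
    body = json.dumps({"payload": payload, "ts": timestamp}, sort_keys=True)
    tag = hmac.new(key, body.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_message(key: bytes, message: dict, now: Optional[float] = None) -> dict:
    """Edge side: reject the message unless the tag matches and the timestamp is fresh."""
    expected = hmac.new(key, message["body"].encode("utf-8"), hashlib.sha256).hexdigest()
    # compare_digest avoids leaking how many leading characters matched.
    if not hmac.compare_digest(expected, message["tag"]):
        raise ValueError("authentication tag does not match")
    data = json.loads(message["body"])
    current = time.time() if now is None else now
    if abs(current - data["ts"]) > MAX_AGE_SECONDS:
        raise ValueError("message is outside the freshness window")
    return data["payload"]
```

The edge only parses the body after the tag has been verified, so data that was altered in transit, or signed with the wrong key, is rejected before it reaches internal systems.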

Fragmentation

Second, and most obviously, edge cloud systems fragment data. Having each device connected directly to cloud services might incur a performance loss, but at least this data is centralized, and can be covered by a single cloud security policy. Because edge cloud servers, almost by definition, need to be connected to many different devices, they represent a nightmare when it comes to securing these same connections.

Fragmentation is not only a problem when it comes to protecting data, though. With a growing number of IoT devices running via edge processing, each needs to be authenticated and follow a privacy policy that allows network admins to keep control of their data. The edge cloud model makes it inherently difficult to apply global privacy policies to each device, since each is communicating independently.

Physical Security

A third issue with edge cloud systems is that locking down physical access to these devices can be a challenge. The devices typically used in edge cloud infrastructures are designed, after all, to be portable, and as such are more susceptible to physical tampering than standard data devices.

An example of this is the "micro data centers" that many telecommunications providers are now making use of. These centers sometimes sit at the base of cell towers, and pre-process data before feeding it either back to consumer devices or into corporate data systems. Micro data centers like this can dramatically improve the performance of cell networks, but they are also vulnerable to physical tampering.

Sprawl

All of these issues are compounded by the tendency of edge cloud systems to grow beyond the boundaries they were originally designed to operate within. In large organizations, building edge cloud functionality can be an invitation for other engineers, from other parts of your organization, to shift their computing demands to your edge cloud system.

Overcoming this challenge requires a dual approach. On the one hand, management needs to be made aware of the limitations of edge cloud systems, both in terms of computing power and security, in order to prevent too many new devices being connected to them. On the other hand, engineers should design edge cloud systems with a view to the future, and make sure that the security that is built into these systems is easily understandable for other employees working with them.

User Error

The problem of "sprawl" is related to another: that many IT professionals simply don't take IoT device security seriously. Despite the well-documented security issues that these devices present, many people simply don't realize the level of connectivity – and the level of cybersecurity risk – that they provide.

In this context, it is all too common for IoT devices to be connected to networks (and connected together) in poorly secured horizontal structures. Not only does this make them more susceptible to attack, but it also allows intruders a huge degree of lateral movement once they are inside IoT networks.

So Why Use Edge Cloud?

Given all these security risks, and given that a recent study by Tech Republic found that two-thirds of IT teams considered edge computing as more of a threat than an opportunity, it's worth wondering why we need edge cloud solutions at all. This is, in fact, a very pertinent question, because some analysts have argued that the advent of 5G networks, coupled with the increased computing power of contemporary IoT devices, means that most of the processing currently done by edge cloud systems can now be done by devices themselves.

That doesn't seem to be borne out by the facts, though. In its recent report “5G, IoT and Edge Compute Trends,” Futuriom writes that 5G will actually be a catalyst for edge-compute technology. “Applications using 5G technology will change traffic demand patterns, providing the biggest driver for edge computing in mobile cellular networks,” the report states.

In other words: whilst connection and cloud technologies are developing rapidly, demand for them is increasing even faster. This is a particular problem when it comes to managing online backup services, because without proper oversight a cloud edge system can end up undermining the integrity of backup policies implemented by individual teams.

Put simply, companies can’t afford to give up their cloud-based systems or devices. Cloud connected VoIP systems can save businesses 70% of their total phone bills on average, and companies that turn to cloud computing to fulfill their software needs have seen major increases in productivity to help drive business growth. At the same time, the bandwidth available to these same companies lags far behind the amount of data they need to process on the cloud. Edge cloud computing, in this context, seems like an obvious choice.

Ensuring Security

This means that, for now, security teams are stuck with edge cloud solutions, and will have to work out how to harden them further against cyberattack. Crucial to this attempt will be the deployment of perimeter scanning systems that are able to analyse not just standard network data, but a huge variety of other forms of data such as that produced by embedded IoT devices. Overcoming the security challenges posed by edge cloud systems can be broken into a number of interconnected processes.

Decentralization and Resilience

First, it's worth pointing out that one of the features that makes edge cloud infrastructures so hard to secure – the fragmentation of data – can also make them more resilient. This is because, as Proteus Duxbury, a transformation expert at PA Consulting, said recently, "instead of one or two or even three data centers, where if they're close enough together that, say, a big storm could impact them all, you have distributed data and compute on the edge, which makes it much more resilient to malicious and nonmalicious events."

In some ways, then, pushing data to the edge can mean that attacks on organizations are less effective, because they are not able to compromise a centralized data storage system which holds every piece of sensitive data. On the other hand, and as seen above, this fragmentation can make the application of global security measures more difficult.

The IoT and Encryption

Another issue that is raised by the widespread adoption of edge cloud systems is the security of the IoT itself. Concerns about the security of IoT devices are not new, of course: it has long been noted that the design of these devices prioritizes connectivity over security. However, in traditional cloud systems, the processing required to run these devices can be managed centrally. As IoT devices begin to utilize edge cloud solutions, this exposes them to increased threats.

The most commonly suggested solution to this problem is to increase the security of IoT devices themselves. However, at the moment many embedded devices lack the computing power to encrypt data before sending it to either cloud or edge cloud systems. As a result, network engineers have been forced to rely on other forms of security.

Full Spectrum Security

Securing edge cloud systems is ultimately a problem of scale rather than of essence. Security professionals already have access to many of the tools that are required to protect these systems, but will need to hugely extend their reach in order to protect data on the edge.

In fact, in many ways securing edge cloud systems requires network engineers to return to the basic principles of network security, but then to apply them outside the systems that they directly manage. These elements include:

Perimeter scanning techniques that use encrypted tunnels, firewalls, and access control policies to protect data held in edge cloud systems.

Securing applications running on the edge in the same ways that applications running within your organization are already secured.

Upgrading threat detection capabilities so that intrusion can be detected not just in relation to cloud or in-house systems, but also for the edge.

Automated patching that allows network managers to trust that both software and firmware automatically receive security updates.

Secure Access Service Edge (SASE)

All of these approaches and tools have been combined by Gartner into a new category of hardware and services that are specifically designed to improve edge cloud security. In 2019, the firm coined a new term – Secure Access Service Edge (SASE) – to define these systems.

Gartner has defined SASE as a combination of multiple existing technologies. The new paradigm, they say, "combines network security functions (such as SWG, CASB, FWaaS and ZTNA), with WAN capabilities (i.e., SDWAN) to support the dynamic secure access needs of organizations. These capabilities are delivered primarily aaS and based upon the identity of the entity, real time context and security/compliance policies." At a basic level, SASE combines SD-WAN, SWG, CASB, ZTNA and FWaaS as core abilities, with the ability to identify sensitive data or malware and the ability to decrypt content at line speed, with continuous monitoring of sessions for risk and trust levels.

Though SASE is still a new approach, Gartner has high hopes for the new technology. They predict that, by 2024, at least 40% of enterprises will have in place strategies to adopt this approach.

The End Of Zero Trust?

Securing edge cloud systems also involves overturning some basic misconceptions about threat hunting. Namely, it might be that the increased popularity of edge cloud solutions overturns another piece of received wisdom, the superiority of the zero trust model. Many of these new systems, or at least the devices that they interface with, will be extremely difficult to bring into single sign-on and user access control processes.

Instead, ensuring security in edge cloud solutions might require a more pragmatic approach, in which individual networks are segmented and protected individually. This, in turn, requires that networks be configured to automatically perform authentication and verification steps on every connected device, at a frequency that ensures that the data being handled stays secure whilst not affecting global network performance.
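As a rough illustration of what per-segment re-verification could look like, the sketch below tracks when each device was last verified and flags those that are overdue under their segment's policy. Everything here is an assumption made for the example: the `Device` and `SegmentPolicy` types, the `devices_due` function, and the interval values are hypothetical, and the actual authentication step is left out.

```python
from dataclasses import dataclass


@dataclass
class Device:
    device_id: str
    segment: str
    last_verified: float  # Unix timestamp of the last successful check


@dataclass
class SegmentPolicy:
    reauth_interval: float  # seconds allowed between verification checks


def devices_due(devices, policies, now):
    """Return IDs of devices whose last verification is older than their segment allows."""
    due = []
    for device in devices:
        policy = policies.get(device.segment)
        # A device in a segment with no policy is treated as due (fail closed).
        if policy is None or now - device.last_verified >= policy.reauth_interval:
            due.append(device.device_id)
    return due
```

Tuning `reauth_interval` per segment is where the trade-off described above shows up: sensitive segments can be checked often, while low-risk ones are checked less frequently so that verification traffic does not weigh on overall network performance.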

Pushing The Edge

Whilst cloud edge computing offers many opportunities, it also comes with challenges. To make matters worse, these challenges come at a time when security teams are struggling to keep up with other developments – the necessity to go multi-cloud whilst still using cloud-native tools, and becoming involved in DevSecOps migrations.

For that reason, a major determining factor in the security of edge cloud systems will be the speed at which they are deployed by businesses. Though edge cloud can offer significant gains in terms of performance, it will not replace traditional cloud models where these are currently working well.

In some ways, this removes some of the pressure on security teams, who can afford to design each edge cloud system with security in mind at the earliest possible stage. On the other hand, as edge cloud systems grow in importance, security professionals will be in the unenviable position of having to secure cloud, edge cloud, and in-house systems simultaneously.

As with any piece of new technology, the level of security of cloud edge solutions is unlikely to become apparent anytime soon. But that doesn’t mean that we shouldn’t put in place tools and processes to protect these systems as far as is practical.
