Text

Apologies

when we say, “can i please have another?”

let’s instead say, “i’d like another”

there’s enormous power in the smallest words

let’s stop asking permission to live


Text

How to actually connect two monitors to a MacBook Air and live

It took a ridiculously long time to get two monitors working on my MacBook Air. Why? Because I’m ridiculous. I have a 13″ MacBook circa 2014 that serves me very well, but I could only connect one external non-Apple monitor to it. I had business reasons (2 big monitors for analytics work), and I had vanity reasons - I have a lovely white Samsung S27D360 and wanted to match it closely with a second white Samsung monitor.

Challenge: MacBook Air models from before 2018 have one Thunderbolt 2 port and two USB 3 ports. The monitors in question (Samsung S27 series) have an HDMI port and a VGA port.

What I did was buy a couple of connectors. One is a Thunderbolt to VGA, and another is a USB 3 to HDMI. I also purchased a male/female VGA cable, and a male to male HDMI. These worked with the connectors, and with the monitors I purchased - the M/F endpoints can differ by monitor so be aware of this when searching for connectors and cables.

What I bought:

Connectors:

HDMI to USB 3 from CableCreations

Belkin 4K Mini DisplayPort to HDMI Adapter

Cables:

VGA

HDMI

I plugged everything in, turned on the monitors. Rebooted a few times.

What happened:

Nothing: A dark screen. No cursor.

Iterated. Same.

What I did:

Looked up the manufacturer website and found drivers at CableCreation, linking to their vendor DisplayLink.

The drivers on DisplayLink came with little or no documentation, either online or in their installation instructions.

It’s very important to download the drivers that are a match for your Mac OS version and model. Read this page carefully.

Special note: the CD that comes with the CableCreation connector contains outdated drivers. Also, like all MacBook Airs, mine has no CD reader. I have an external HP CD reader, so I was able to open the CD in order to note that the drivers were invalid (one of my steps in this process).

I tried every driver I could find that was remotely related, whether or not it matched my OS version.

This didn’t work.

I repeated the process of installing, rebooting, uninstalling on each of 3 driver sets.

Searched online for help. Found many sources. None helped.

Submitted a help ticket to CableCreation.

Did not wait for the help ticket response (holiday weekend).

Stuck with it. I’m a product manager, and an analytics professional. I had to remember that, and not allow my impatience to prevent the solution from presenting itself.

After staying with it, I decided to dive into the Troubleshooting pages on the DisplayLink website. Far down the page - voilà.

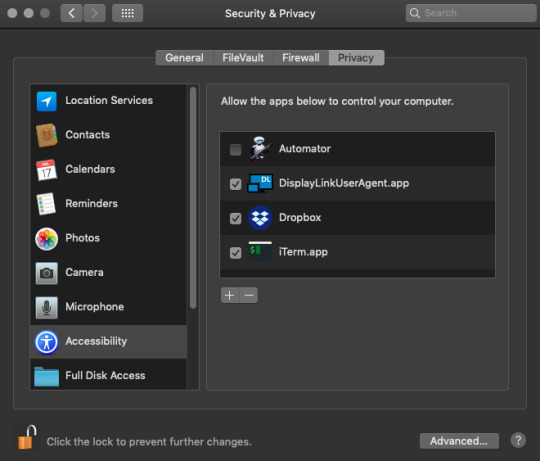

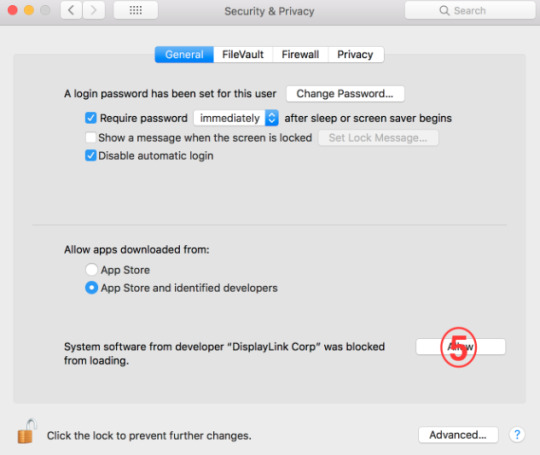

This article described the issue where the DisplayLink driver was not automatically enabled in the macOS Security and Privacy settings.

There are a few steps included here that are not a part of the installation steps, and I’ve outlined them below for convenience:

The Security and Privacy settings need to be opened and changed to enable the DisplayLink driver within 30 minutes of its installation - after the reboot. While Security and Privacy is sometimes opened automatically, this is not always the case.

Once open, in the Privacy tab, click on the Lock icon on the bottom left.

Enter your admin name and password. Then click on the checkbox next to the DisplayLinkUserAgent.app on the right side window.

Navigate back to the “General” tab, and, this is the critical step, note the text below and click on the “Allow” button. “System software from developer ‘DisplayLink Corp’ was blocked from loading.” - Bingo.

(Please ignore the “5” below - I screen-grabbed from the DisplayLink page).

You’re almost done. Connect and restart the monitors before rebooting (if not connected).

Reboot.

This did the trick for me.

Christopher

#macbookair#macbook#twomonitors#dualmonitors#usb3#hdmi#cablecreation#displaylink#apple#analytics#mac#diy


Text

Reblogging this because it comes up again, and again, and...

One update on the size of utm_content: it can extend to the size limit of the URL, which is roughly 255 characters. Above that, there is normally loss in attribution. (e.g. if the rest of the URI and the parameters take up 200 characters, then utm_content should be limited to 55 characters.)
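The arithmetic in that update can be sketched in a few lines of Python. This is only an illustration: the 255-character figure is the rough limit quoted above (not a documented constant), and the function names are made up for the example.

```python
# Rough URL size limit quoted in the update above; an assumption, not a documented constant.
URL_LIMIT = 255


def utm_content_budget(url_without_content: str, limit: int = URL_LIMIT) -> int:
    """Characters left for utm_content once the rest of the URL is counted."""
    return max(0, limit - len(url_without_content))


def fits(url: str, limit: int = URL_LIMIT) -> bool:
    """True when the fully tagged URL stays within the assumed limit."""
    return len(url) <= limit
```

If everything except utm_content already takes 200 characters, `utm_content_budget` reports 55, matching the example in the update.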

Campaign Tracking with UTM Parameters

Distinguishing between Paid and Non-Paid traffic in Google Analytics is more of an art-form than a science. It’s difficult to find online help or literature that adds insight into this issue, and that’s the motivation behind this blog entry: I want to make things easier for you, and me.

There are a few tricks that make it easy to ensure that the inbound URLs you have tagged with Google Analytics tracking parameters actually contribute to your “Paid Traffic” segmentation.

In review, Google Analytics offers the following URL parameters that are termed to be for Campaign tracking. These provide extremely valuable insight to you when combing through inbound traffic to your website for meaningful traffic metrics. By default, Google Analytics does not differentiate between Paid and Non-Paid traffic sources. Campaign tagging is required to accomplish this but Google doesn’t seem to document this very clearly.

utm_medium = [Required] the how or channel or type of marketing, important to differentiate because behaviors tend to vary by type, i.e. banner visitors usually behave very differently from email visitors

utm_source = [Required] the place from whence it comes, i.e. website, email marketing list or distributor, partner, more…

utm_campaign = [Required] name of marketing initiative

utm_term = used for paid search. Keywords for this ad, or another term to distinguish this traffic source by its most basic atomic element.

utm_content = identifier for the type of marketing/advertising creative, the message, the ad itself, etc… good to slice across other dimensions to see patterns that stand regardless of medium, source, campaign, more…

For a reference on Google campaign tagging click here. Google utm parameters can appear in any order in the URL after the “?” or “&”.

Example URL with Google Analytics campaign tagging parameters:

www.site.com?utm_source=yahoo&utm_medium=cpc&utm_term=newkeyword&utm_campaign=mycampaign&utm_content=adtext
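If you tag a lot of URLs by hand, a small helper keeps them consistent. The sketch below uses Python's standard `urllib.parse`; the `tag_url` name and the `https://www.site.com/` base are assumptions for illustration, and the "required" list simply mirrors the three parameters marked [Required] above.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# The three parameters marked [Required] in the list above.
REQUIRED = ("utm_source", "utm_medium", "utm_campaign")


def tag_url(base_url: str, **utm: str) -> str:
    """Append utm_* parameters to base_url, keeping any existing query string."""
    missing = [name for name in REQUIRED if not utm.get(name)]
    if missing:
        raise ValueError("missing required parameters: " + ", ".join(missing))
    parts = urlsplit(base_url)
    # Existing query pairs first, then the campaign tags in the order given.
    query = parse_qsl(parts.query, keep_blank_values=True) + list(utm.items())
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tag_url(
    "https://www.site.com/",
    utm_source="yahoo",
    utm_medium="cpc",
    utm_campaign="mycampaign",
    utm_term="newkeyword",
    utm_content="adtext",
))
```

`urlencode` also percent-encodes spaces and other unsafe characters, which avoids stray whitespace sneaking into a hand-typed URL.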

The parameter “utm_medium” is used by Google Analytics to classify inbound traffic to your site as Paid or Non-Paid. No other parameter is taken into account in this determination. When Auto-tagging is turned ON in AdWords, URL tagging is replaced with the AdWords “gclid=xxxxxxx…” parameter. The parameter is read by GA in a handshake between GA and AdWords where a complex attribution to AdWords keywords and campaigns is accomplished, yielding cost and click data for Google. No other paid traffic partner but Google receives this treatment – only with Google AdWords auto-tagging will your site receive cost data associated with normal campaign tagging traffic.

Here is a cheat sheet for campaign tagging to ensure that your incoming traffic is categorized as Paid by Google Analytics.

The following values for utm_medium are supported and resolve as “Paid” in GA (as of this article’s date):

cpc, ppc, cpa, cpv, cpp, cpm

Tip: make them lower case only, or they will NOT be included in “Paid” reports/segments.

The “cpm” value in utm_medium has a conditional quality to its attribution as paid.

It attributes if the Paid segment is selected.

It does not attribute in a custom report showing Paid traffic.

Screen grab of Paid Segment testing in GA:

Update: if utm_medium = referral, then the tagged inbound URL will be listed as a Referral, not a Campaign visit.
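The cheat sheet above can be turned into a quick pre-flight check for tagged URLs before they go out. This is a sketch of the rules as described in this post, not of Google Analytics' internal logic; the function name and the returned labels are hypothetical.

```python
# Values of utm_medium that resolved as "Paid" per the cheat sheet above.
PAID_MEDIUMS = frozenset({"cpc", "ppc", "cpa", "cpv", "cpp", "cpm"})


def classify_medium(utm_medium: str) -> str:
    """Classify a utm_medium value using the rules listed in this post."""
    # Deliberately case-sensitive: per the tip above, "CPC" does not count as paid.
    if utm_medium in PAID_MEDIUMS:
        return "paid"
    if utm_medium == "referral":
        return "referral"
    return "non-paid"


for medium in ("cpc", "CPC", "referral", "email"):
    print(medium, "->", classify_medium(medium))
```

Note that this treats "cpm" as paid unconditionally, while the post observes that it only attributes in the Paid segment and not in a custom report.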

#analytics#google tag manager#GoogleAnalytics#analytics 360#utm#socialmedia#facebook#seo#sem#paid marketing


Text

Ten Security Assists from GTM

I first started using GTM in about 2013. Before that, I was prevented.

Even though I was Director of Analytics for a super duper big company with an $X00M advertising spend under my supervision, I couldn’t get the approval for the developer time needed to convert the sites and apps from analytics.js to the GTM snippet. Also there was a degree of fear associated with the concept of the “remote control” nature of a TMS for our websites.

Could malicious users/bots get control of our tagging and take our data?

Worse yet, could someone do js writes to our site and take over content?!

Would someone try to take away donut Friday?! (that was real)

Sometimes it comes down to fear. In my 25+ years in the software engineering, semiconductor automation, internet engineering, and analytics of everything industries, decision-making in tech hasn’t changed. We’re primates at heart and all of us have basic reactions to the unknown.

While our ancestors quaked in fear when they first saw fire, we now shudder during the technology review process. Our gut tightens. Heart-rate elevates. Cerebral cortex gets flooded with blood while our left and right hemispheres have less, weakening reasoning but peaking our fight or flight reactions.

Except, we’re in office chairs, maybe in Lulu Lemon yoga garb, and we’re barely listening to a boring powerpoint preso. A little “nam-myoho-renge-kyo” might have nailed it.

In my company, I saw it as an opportunity to raise the level of consciousness.

After many powerpoints, lively discussions, and then a repeat of the exact same powerpoints (yup, that does work), we all won by forever adopting GTM. From that point on we never looked back.

In the review process, security was a real concern for my president. This was primarily because he read several articles outlining conceptual security issues in tag management systems. I’m not proposing that TMS’s are free from security risk. But when comparing them to including all analytics and other tagging on the page in plain sight, it becomes clearer. On-page tagging means that anyone with a laptop, 60 seconds, a coffee, and Chrome developer tools can see, copy, hack and control your content or data.

TMSs like GTM offer a layer of off-page obfuscation by design. The heart of GTM tagging and its logic doesn’t reside in the code at all. It’s in the hands of only a select few who have access in the cloud. By default, more security.

The Google GTM team is vigilant about security, and has created some amazing security features that also add to ease of use.

10 Key GTM Security Features

In-page tag whitelist/blacklist

This feature gives control back to site owners, the release process, or to developers to determine the types of Tags, Triggers, and Variables that are allowed to run on your site. They are whitelisted or blacklisted based upon ID numbers associated to each element type, preset in GTM.

For example, Custom HTML tags can be simply disallowed. Some of my clients have chosen this exclusion as it provides not only security comfort, but high performance by excluding customization outside of standard tags.

It enables a secure set of “keys” that the company can use to approve / disapprove assets, making it possible for a business to nimbly change security allowances.

https://developers.google.com/tag-manager/devguide#restricting-tag-deployment

Ability to require 2FA - Two Factor Approval for Changes:

My new favorite feature. Two-Factor Approval can be set up so that no one can do any of the following without 2FA.

Publish

Create/Edit JavaScript variables

Create/Edit custom HTML tags

Anything User Settings

https://support.google.com/tagmanager/answer/4525539?hl=en

User Permissions

At the container level, users can be granted or revoked access.

Read, Edit, Approve, and Publish are all separate levels to be approved.

https://support.google.com/tagmanager/answer/6107011?hl=en

Approval Workflow

For 360 account holders, the approval workflow using GTM Workspaces makes it possible to allow multiple groups or users to simultaneously make changes in isolation.

These workspaces can then enter an approval flow, enforced by User Permissions in #3, and a process flow.

See Simo’s article: https://www.simoahava.com/analytics/new-approval-workflow-gtm-360/#comments

Testing and Release: Preview Mode & Environments

Preview Mode:

Preview mode is a super great option for debug testing container changes.

Simply turn on Preview mode, and the debugger window will pop up on your site when you launch it. I especially feel gratitude for the ability to dive into the dataLayer and variables at any event.

Preview mode was updated a bit ago to let you simply “refresh” it with a click, allowing you to do live edits while still in preview

https://support.google.com/tagmanager/answer/6107056?hl=en

Environments

This capability is an amazing way to do what we used to do in the olden days by creating a test container that resided in a test environment on our site, and then was manually disabled and enabled, etc.

Instead, we now have the ability to create multiple server environments, configured in GTM at the container. Great for isolating Dev, Test, and Prod without impacting any of them outside of our release process. Bravo!

https://support.google.com/tagmanager/answer/6311518?hl=en

Change History

Versioning of containers is a simple, great process for clearly identifying releases by name, purpose of the release, name of creator, and creation date. It creates a permanent tag audit trail.

Also, version history provides a side by side comparison of before and after changes, even highlighting the changes in a different color. It’s awesome, and saves hours of time debugging, and in identifying potentially unwelcome changes to the container.

Versions can be rolled back or removed entirely.

Programmatic API

Welcome to remote control for remote control. With the GTM APIs, you can build secure tools to monitor and take actions on your container via 2FA scripts. Simo has created a pretty amazing set of GTM Tools using the GTM APIs.

Here’s the list of what you can view or change from an authorized account: Accounts, Containers, Workspaces, Tags, Triggers, Folders, Built-In Variables, Variables, Container Versions, Container Version Headers, User Permissions, Environments.

https://developers.google.com/tag-manager/api/v2/

Vendor Tag Template Program

GTM has created a huge, and ever-growing list of pre-baked integrations with key vendors called the “Vendor Tag Template Program”.

For security, this is a huge win. The code behind these integrations is buried deep in GTM-land. Only Google has access and it’s bullet-proofed with each vendor in the acceptance program.

It removes the need to create Custom HTML tags or Custom JavaScript variables for each vendor tag that gets created. This by itself removes the risk of malware and security issues involved with custom tags. TMS’s that rely on custom HTML tags (several) are most exposed to security risks. GTM is actively working to make those undesirable by providing excellent vendor integrations.

See here for an updated List of GTM Vendor Tags on Wonkydata.com (will update as they are released).

Malware Scanning

Without us knowing, GTM automatically scans your containers for malware. If any is found, it blocks the container from firing and signals issues.

It’s totally in Google’s interest to always make sure your containers fire, and that they fire completely free of malware and malicious code. Continual update of their methods for identifying will ensure persistence and accuracy in KTBR (keeping the business running).

https://support.google.com/tagmanager/answer/6328489?hl=en&ref_topic=3441532

Contextual Auto-escaping

Another behind-closed-doors GTM security precaution that helps to remove and prevent unsafe HTML and XSS from running in custom tags.

Custom tags are analyzed, “sanitized” and escaped by a robust engine.

Check in for updates on security items for GTM.

Google likes making things secure. It keeps business going, makes ads render, and makes it possible for content and user experiences to grow like fields of wild flowers.

GTM is an incredible tool. It has become a ubiquitous tag management system: deeply featured for full-path staging and production releases, equipped with excellent security precautions, scalable to any enterprise, constantly growing, and totally free.

#analytics#google tag manager#gtm#google analytics#GoogleAnalytics#Googletagmanager#google360#GA#analytis consulting#cebridges#wonkydata#google


Text

Ya... OK, but what 3 calls can I make because of this data?

* Photo credit -Entertainment.ie

This was what I was asked after an hour-long meeting with one of the top entertainment agencies in the world, talking with them about working on analytics projects together.

What does this get me?

I’ve spent a career in the creation of data - rather, creating data, and translating it into information through lots of hours and money and teams of people.

Some companies consider data to be an asset. I agree and disagree. Chairs are assets, as are computers, buildings, and material goods.

If data is an asset, then it should be easy to see its worth.

In order to be an asset, it needs to be used, like cement to build a building, or programming to build the OS for a phone, or decision criteria helping you know when to launch your new electronic vehicle and at what pricepoint.

In the case of the agency who posed this challenge to me, it was the kind of question that you dream of hearing from a company. It means that they possess the keen understanding that everything in their business is designed to either enhance or detract from making goals move forward for the company.

I’ve only heard this question asked a handful of times, in 30 years.

As an analytics and business intelligence creator of data systems for nearly 30 years, I’ve seen data stores grow to exceed the capabilities of CPUs, Memory, and Storage again and again and... In each company, we huddled together and vowed to reduce our overall data storage, reviewed the types and volumes of information being gathered and reduced them to needs only, and analyzed reporting requirements precisely before agreeing to future data-increasing projects. Then, we’d eventually forget about it for another few years after buying the next amazing, eclipsing computers and storage systems. Massive, fast, a little bit pricier per unit, but soooo powerful... (RAID, SAN, Parallel servers (Hadoop clusters), now many splendored flavors of Cloud).

Behavior like this can lead to a misuse of cloud computing too. Nothing prevents bad design except for great design practices.

“If fashion were easy, wouldn’t everyone look great?” - Tim Gunn

When storage and CPU and overall scalability are no longer huge concerns because you don’t have to build your own data center any more, there are far fewer barriers to over-creating data stores and even less encouragement for using them for something results-oriented.

I warn about this, because it’s a sine-wave pattern that follows Moore’s Law absolutely.

“...the number of transistors in a dense integrated circuit doubles approximately every two years.”

My time at Intel cemented this, since Gordon Moore, who gave the law its name, co-founded Intel after co-founding Fairchild Semiconductor.

Computing capability follows the same pattern, and it’s because the inventiveness of humanity and our desire for more collide and complement in an arm wrestle for dominance. In the early days it was led by hardware concerns. Now, it’s the internet, and our expectation of continuous, high speed, and richer service and experience through networks and computers, TVs and phones that have graduated to the role of appliances to purvey our information, communication and entertainment needs. What was a novelty in the 1980s and 1990s is now something that barely generates a shrug, unless service is down.

Today, hardware is still key and core, but the struggle to build faster, better, cheaper to fuel the rate of growth in the internet is a hidden one unless you’re Intel, AMD, Samsung, etc.

Technology grows. Data sizes grow. Investment grows. The singular best question remains, and if it is unanswered, then every single bit of what is considered a “data asset” should be questioned, and in some cases, radically shut down.

When I was at Intel during the 1990s and 2000s, I personally managed large budget projects and teams whose end results were enormous, well-organized, strategically devised data stores, with excellent reporting. In the balance of things, the data was often used in a handful of reports that were printed on the FAB (factory) floor on clean room paper, and handed out manually to changing shifts of production engineers. Fancy dashboards gave way to a one page printout, backed by hundreds, even thousands, of person-hours to create the statistical models and simple algebraic equations that resulted in informative numbers that told them to do a few simple tasks, but in the right way, at the right time, and with the right product. The data also categorized CPUs into their proper “Bins” of MHz quality. Translation: how fast the CPU was, and what to charge for it.

That’s the environment I came from when I moved into the internet world during my baptism at MySpace for a brief but exciting time. Then as I decided to become an analytics practitioner and director, I realized that analytics were back in the earlier days of creating tons of information, and not always knowing what to do with it, but wasn’t Google Analytics, or Adobe creating pretty reports? More events maybe? Dimensions? We can do that, with some hours of work.

Today, the right questions are emerging, and what I’m encouraged about is that while in L.A., the top of Hollywood has been asking them for years.

“What three (things) phone calls can I (do) make because of this data?”

What three decisions can you make from your data today, that result in increased revenue, or decreased cost, or an informative stance regarding the launch or removal of a major project and service shift for your company?

What information doesn’t boil up from the vats in the basement, to distill into a fine digestif to steady our stomachs so engorged with data?

The question is easy, but it has to be asked at the right levels in a company, and led into action by the top-most leader. Centralized embrace of analytics and business information direction is paramount, and that’s why this simple example question was so interesting.

The right question was asked, at the right level in the company, and it’s being led and directed by that person. The CEO.

One of the key tenets of the ground-making and breaking book on analytics called Competing on Analytics says (paraphrasing) that it’s vitally important for the analytics competitor to have that process of creating analytics greatness led by the top of the company. It has to be their passion, and to be taken seriously and enforced at every level down in the company.

Is our information a revenue driver, or a revenue detractor? Are the costs for creating and storing our information today, tomorrow higher than the benefits we are currently accruing from our information resources? Are we still paying for past mistakes by keeping huge or costly information assets afloat? What are we going to get from this project, and when?

If you’re building a building, hopefully the costs don’t exceed the projected net revenue you will get from selling it, or renting out the space over the next century after it’s built. Hope isn’t a factor. Financials are, and buildings are generally built on clear targets relative to cost and time.

Since information is wobbly, and easy to hide, and also easy to talk-up as an asset, it’s super important to keep the blinders on about its data-greatness by itself, and remain focused on answering the question of the ages, which is just a restatement of the main driver question of this article.

Why does it have value?

If your CEO doesn’t believe in your information resource, and you can’t identify its value in a few sentences, then it doesn’t have value.

If you buy a million gallons of gasoline and store it underground in case you might need it someday, there are two main outcomes that can be easily anticipated with a little thought:

It goes bad. Stale gasoline isn’t usable.

It will someday be worthless. Electricity is going to win.

Data resources are similar. Don’t create it, buy it, or store it unless it’s possible to clearly identify a direct through-line from its existence, to even a singular use where it enhances your business. It could have value in a couple of years, and that’s ok, as long as it can be clearly traced to an absolute use case that achieves well articulated business objectives.

CEOs, what three things can you do with your data today that improve business?

Assets can be sold. If you can’t sell your data, it isn’t an asset.

#google analytics#googleanalytics#business intelligence#data#information#analytics#data science#machine learning#google cloud services#cebridges#wonkydata#business analysis#forbes#google


Text

Google Tag Manager Vendor Tag Template List

This is a super basic but functional post to act as a reference. I refer to it in other writing, and want to put it here as a fixture for copy/paste for anyone to share.

The GTM Vendor Tag Template Program is a pretty amazing set of pre-baked tags for use with a large and growing list of vendors. It replaces the need to do custom html and some custom js in GTM. By doing so, it increases performance and reduces potential security risks.

Without further ado, the list:

AB TASTY Generic Tag

Adometry

AdRoll Smart Pixel

Audience Center 360

AWIN Conversion

AWIN Journey

Bizrate Insights Buyer Survey Solution

Bizrate Insights Site Abandonment Survey Solution

ClickTale Standard Tracking

comScore Unified Digital Measurement

Crazy Egg

Criteo OneTag

Dstillery Universal Pixel

Eulerian Analytics

Google Trusted Stores

Hotjar Tracking Code

Infinity Call Tracking Tag

Intent Media - Search Compare Ads

K50 tracking tag

LeadLab

LinkedIn Insight

Marin Software

Mediaplex - IFRAME MCT Tag

Mediaplex - Standard IMG ROI Tag

Bing Ads Universal Event Tracking

Mouseflow

AdAdvisor

DCR Static Lite

Nudge Content Analytics

Optimise Conversion Tag

Message Mate

Perfect Audience Pixel

Personali Canvas

Placed

Pulse Insights Voice of Customer Platform

Quantcast Measure

SaleCycle JavaScript Tag

SaleCycle Pixel Tag

SearchForce JavaScript Tracking for Conversion Page

SearchForce JavaScript Tracking for Landing Page

SearchForce Redirection Tracking

Shareaholic

Survicate Widget

Tradedoubler Lead Conversion

Tradedoubler Sale Conversion

Turn Conversion Tracking

Turn Data Collection

Twitter Universal Website Tag

Upsellit Confirmation Tag

Upsellit Global Footer Tag

Ve Interactive JavaScript

Ve Interactive Pixel

VisualDNA Conversion Tag

Yieldify

#analytics#gtm#google tag manager#google analytics#analytics 360#google#tms#tag management#html#javascript#security#computers#software#web#internet#cebridges


Text

Why it’s OK to Click on Ads You Like

It's annoying to get ads. We don't like them. Interestingly, that's what makes the internet exist. Ads. It pays my salary and maybe yours if you're reading this.

How to use Demographics in GA360 as targets for DFP Ads -- but were afraid to ask

BTW: If you're in Marketing, Publishing, or Analytics, the meat of this how to article is down below under "Now to Get Nerdy".

Buying things. Sharing stories, tweeting, texting, learning. Everything boils down to ads that move us toward or away from eventually buying something or doing something that gets someone else to buy something. The internet functions like a billboard on a highway exit ramp.

How those internet ads are relevant to us or not is up to a subtle and complicated art-form called "Ad Ops" - short for ad operations.

These magicians of ad content convince us to buy things by making them relevant, timely, and available. Also, by showing us cool things we really might want.

Specifically below, I'm geeking out, and talking only about how to target using DoubleClick for Publishers, and Google Analytics 360. The rest of this article is really for those people... my people... who are in the business of delivering and measuring ads to all of the rest of us.

It's a tough and very competitive business. Keeping our attention is only more difficult with the choices we have that redirect our attention away from a page on a website or app within a second or two max. So... What do they do?

They make sure that the ads that we DO see actually matter to us. We don't want to see ads for a dentist when we're buying candy. We don't want to see ads for office furniture when we're planning our vacation to Kiwi.

How do they target us? By knowing who we are... or really, who we might be. "They" don't really know unless they know our demographics - info that helps to classify us and determine what we might want to see, read, and buy. And it's difficult to get precise data around it.

It's a Conspiracy

The conspiracy theories about advertising are all a bit wrong: yes, anyone can be tracked very closely based upon their internet and phone use. No, we are usually not. Not because it's not possible (just confirmed it is), it's because it's expensive. Like one of my longest friends who is a Colonel in the Air Force told me, "It's not that the NSA can't find you, it's that they don't care to... you're not important". Wiping my tears I realized that my not being a "person of interest" to the NSA also means that I'm safe to travel, won't have a Black Hawk helicopter circling my apartment today, and the companies who want me to buy their products and services don't always know who I am or where I'm going, or what I want just based upon my existence alone. I'm too expensive to track consistently. Also because I am like anyone else. I'm inconsistent in my behavior and habits.

If you walked into a Walmart, and it was able to know you are a male between the ages of 24 and 30, and that you are interested in hiking, jeeping, and reading poetry, you probably would have aisles lit up near you as you walked, and devices showing off products you should be buying. That doesn't happen yet. Thus, when we're on a website just looking for a shirt, it doesn't know our demographics either, unless we've opted into providing them.

I would like my demographics and interests available, because when they are, I get better ads coming my way and not aggravating ones that are either insulting or have no meaning. Gone are the days of jumping to a new channel because a commercial came on during my show. I use Apple TV, and I don't have commercials. So why would I want junky ads on a web page I'm only visiting to see if I'm interested? It's an unfair question :).

Now to get nerdy

Below is an explanation of how to connect opted-in demographics back to DoubleClick for Publishers, through Google Analytics 360. It's only possible via an indirect method, and breaks no terms of service for either DFP or GA360.

This is just about connecting Audience data from GA360 to DFP, by using the opted-in user demographics from a website. It's straightforward, and works.

Why is it important to get audiences in GA as targets for DFP? Because you can craft the audience list around users' demographics, PLUS include for flavor what they did on the site, in every nuanced way conceivable, and that audience becomes usable inside of DFP as a "target". Not possible without Google Analytics 360 and its native connection to DFP.

It basically rules. It's why we can sit back and wait for ads that sell us stuff we want.

Publishers: please read below.

Google Analytics doesn’t automatically pass demographic data from an Audience to DFP, but it will pass it to AdWords. It’s the nature of things, and it makes a lot of sense.

There are many reasons why demographics aren’t connected through GA360 to DFP. I’m not pretending to know them all. Partly it’s about the fact that Google is being a good steward of people’s data, and of the internet. It can also be a tough nut to crack since the GDN connects all of those worlds together in very different ways. One thing is certain: this is the way things are set up right now, and there is a means to get the job done anyway.

Below I outline a simple way to achieve the result of targeting DFP ads with demographics from your site without having to use GA360’s demographic data specifically. But it requires some specific work.

Caveat: nothing gets around the need for every company to be mindful of how they are using their client data. This solution below is only possible if the client opts in to using their data in this way.

Why would you want to use demographics as part of your target for DFP ads? Answer: to find the elusive groups that are most or least active on your site, and even more specifically, to meet their desires to have meaningful ads appear that are beneficial to them in their information journey. This is also the sweet spot for money making for a business that relies on ad revenue.

Solution:

User Sign-on recorded

Demographics questions answered

Custom Dimensions set

Audiences created

DFP targeted

Components:

If there is a user sign-in environment, and the user has been asked their age, gender, interests, shoe size, etc., this can be exposed as custom dimensions

write a procedure to expose the needed demographic / info to a dataLayer variable on the webpage.

This should be set on every single page

The value would be filled after a user logged in (the logged in state would be used to fill the variable)

GTM reads this variable, and sets it as a custom dimension in GA

In GA, use this Custom Dimension as part of the Audience definition

Notes:

For Age, and other demographics do NOT name the Custom Dimensions “age”, “gender”, etc. Name them differently so that they don’t expose what they are to random crawlers of the site who are hunting for marketing information and PII

Numeric values like age are generally most valuable when used in Audiences with comparison operators like =, <, >, etc. This means they need to be stored in Custom Metrics too. It can still be useful to store them in both CDs and CMs: a CD can be used as a dimension in reports, whereas a CM is always just a metric/quantity.

Example:

User logs in

site grabs their shoe size from the backend, and puts it into the front end (web page) in a dataLayer variable named “custShoe”

a dataLayer.push statement is used to set that value at the Page level, up high, above the GTM container code, just after the open <body> tag

GTM grabs the “custShoe” and puts it into a variable

GTM then sets the variable as a value for a Custom Dimension on the pageview tag, and on every other UA tag so that it works with every customer interaction

Also set as a Custom Metric since this is a number, and you might want to do a comparison test when creating an audience or using as a filter or segment in GA

GA: create an audience based upon users having a Custom Metric for custShoe > 7

Set it to be used in DFP or Adwords. DFP is possible because you aren’t using GA’s demographics or user information. It’s yours, and you aren’t passing PII
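Here is a rough sketch of the page-level piece of this example, assuming a backend that hands the page a small user object. The name "custShoe" comes from the example above; the helper name, the shape of the user object, and the opted-in flag are assumptions for illustration only, and your site will look different.

```javascript
// Hypothetical helper: builds the dataLayer payload for the current user.
// Returns null unless the user is logged in AND has opted in.
function buildDemographicsPayload(user) {
  if (!user || !user.loggedIn || !user.optedIn) {
    return null;
  }
  var size = Number(user.shoeSize);
  if (!isFinite(size) || size <= 0) {
    return null; // no usable value, so expose nothing
  }
  // Deliberately neutral variable name; never put PII in here.
  return { custShoe: size };
}

// On the page this runs just after the opening <body> tag,
// above the GTM container snippet.
var dataLayer = (typeof window !== 'undefined' && window.dataLayer) || [];

// Assumed sample user object, as it might arrive from the backend.
var payload = buildDemographicsPayload({ loggedIn: true, optedIn: true, shoeSize: '9.5' });
if (payload) {
  dataLayer.push(payload);
}
```

GTM would then read "custShoe" with a Data Layer Variable and hand it to the Custom Dimension and Custom Metric on each tag, as described in the steps above.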

Warning:

Do not pass or store PII in custom dimensions or metrics, send it into GA, or try to use it in audiences or segments. Google, under its Terms of Use, will rightly delete your analytics data. And they should. It’s not good for your people.

Conclusion:

It’s possible. Use Custom Dimensions and Metrics, and user Opted-In data. Do not pass PII or anything the user didn’t allow. Do not name it so that it looks visible to site crawlers or your users will be jeopardized, as will your company.

Enjoy your new powers

#analytics#google analytics#gtm#google tag manager#dfp#doubleclickforpublishers#doubleClick#advertising#sem#marketing#seo#linkedin#GoogleAnalytics


Text

Making Money with GA360 / DFP Publisher Reports

Being in an analytics consultancy with lots of publishing clients of all sizes, we joined the crowds of excited analytics people when the GA-DFP integration for Analytics 360 came out last year. This amazing addition came in the form of Publisher Reports, and DFP Audiences, and has proven to be a powerful enhancement to Analytics 360, offering 10 new metrics giving insights into impressions, clicks, and revenue for DFP and Backfill Ads, and the ability to create Audiences to target DFP ads.

One Client Call

We have loved this integration, and during an initial call with a prospective Analytics 360 client about a month ago, we had an awakening that helped us make its true value clear and explainable. We were asked the simple question, “I like Analytics 360 but why should I care if DFP is integrated?”. I thought about it, and then just blurted out:

“The reason this [GA-DFP integration] is really awesome is because it’s not just great to have more metrics in GA, it’s because when you analyze your page lift, or create Audiences for DFP, Analytics 360 will pay for itself, and you’ll make more money”.

It seemed really obvious when we all looked at each other in our meeting room at Analytics Pros, but after bringing up the Mac calculator and doing some quick grade-school math, it was also explainable. The estimation is explained further below, but to get there, we’ll need to outline the two uses of Analytics 360 that generate the value-add:

Finding opportunities for lift in content based upon Publisher Reports

Creating GA Audiences for DFP, and DFP Targets

First Things First:

Verify the DFP integration in GA is enabled

In GA verify that Remarketing, and Advertising Reporting Features are enabled in the property settings

In DFP audiences as targets must be switched on

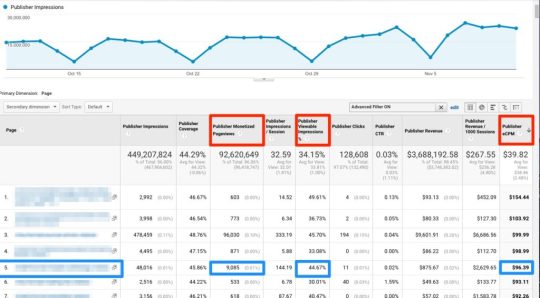

Finding opportunities for lift in content:

Start by bringing up the report: Behavior / Publisher / Publisher Pages. Pay particular attention to three of the Metrics in this report (Google's definitions are quoted below):

Publisher Monetized Pageviews: “Monetized Pageviews measures the total number of pageviews on your property that were shown with an ad from one of your linked publisher accounts (AdSense, AdX, DFP). Note - a single page can have multiple ad units.”

Viewable Impressions: “The percentage of ad impressions that were viewable. An impression is considered a viewable impression when it has appeared within a user’s browser and had the opportunity to be seen.”

Publisher eCPM: “The effective cost per thousand pageviews. It is your total estimated revenue from all linked publisher accounts (AdSense, AdX, DFP) per 1000 pageviews.”

The two goals of analyzing this report are to uncover the following:

Pages that have Low Publisher Monetized Pageviews (PMP) but high eCPM

Pages with a High PMP and Low eCPM
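If you export the Publisher Pages report, sorting pages into these two buckets can be sketched in a few lines. This is only an illustration: the threshold values, the row field names (pmp, ecpm), and the sample rows are all made up for the example, apart from the 1,000 PMP floor, which is the "eligible for a real eCPM" cutoff used in this post.

```javascript
// Placeholder thresholds; tune them to your own site's report.
var THRESHOLDS = { minPmp: 1000, highPmp: 50000, highEcpm: 15, lowEcpm: 5 };

// Labels one report row with the action it suggests.
function classifyPage(row, t) {
  if (row.pmp < t.minPmp) {
    return 'too few pageviews for a real eCPM';
  }
  if (row.pmp < t.highPmp && row.ecpm >= t.highEcpm) {
    return 'grow pageviews'; // low PMP, high eCPM
  }
  if (row.pmp >= t.highPmp && row.ecpm <= t.lowEcpm) {
    return 'lift eCPM'; // high PMP, low eCPM
  }
  return 'no action';
}

// Hypothetical rows, not real report data.
var rows = [
  { page: '/article-a', pmp: 1200, ecpm: 20 },
  { page: '/article-b', pmp: 90000, ecpm: 2 },
  { page: '/article-c', pmp: 300, ecpm: 40 }
];

var labeled = rows.map(function (row) {
  return { page: row.page, action: classifyPage(row, THRESHOLDS) };
});
```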

Pages with Low PMP and high eCPM:

In the example below the PMP for this page is low, but above 1,000 making it eligible for a real eCPM

eCPM is decent, and about average for well performing pages with higher PMP

The Viewable Impression % is reasonable but if much lower you should consider the viability of the ad vendor’s ads, or technical aspects of the page for low rendering (low % ad renders mean lower payout for those pages)

The page boxed in blue is a perfect case for alerting the publisher to the potential for earning more money by improving the user experience with this content or similar content, or simply by getting more people to know about it and read this type of content now or in the future

Recommendations for publisher to increase PMPs:

Rewrite the content toward the actual targeted audience

Improve quality of the article

Change the content type entirely

Consider the author and their likability - how do their other articles fare?

Send more Marketing traffic to this page at a bid that pays out at this eCPM

Pages with High PMP’s but low eCPM:

In the example below the PMPs for this page are pretty great, but the eCPM is very low

Recommendations to lift eCPM:

This page in blue has low eCPM for a high amount of PMPs. People are reading, but the payout is low for each impression.

The publisher Ad Monetization folks need to take a look at the Line Item Targets for the ads rendering on this page, the networks / ad vendors represented, and increase the average eCPM on this page, and pages of similar content category across the site (using custom dimensions).

Take a look at articles by this Author, on similar Categories or Tags on the content, and determine if this is endemic to these variables, or that the ads truly need to be rethought to increase eCPM.

Page Lift Estimation - Back of a napkin calculation does wonders:

Demonstrating value to the analytics user with examples on their website is crucial, and super powerful because it somehow causes the synapses to fire about making money, instead of saving money or finding more and better data. We’re sometimes so caught up in those other activities that it takes stepping back, doing basic arithmetic and just simply estimating to visualize how GA-DFP integration makes us money. This example below is far from correct accounting, but it’s a super valuable tool:

Increasing pageviews and finding more money:

Let’s say our website has 5mm pv/m

Low pageview page has 1k pv/m but eCPM of $20 {ideal state of impressions/pv}

Increase pageviews 10x = 10,000 pv/m

10,000/1,000 = 10 x eCPM $20 == $200/mo

$200 X 12 mo’s ⇒ $2,400 / year increase on one page

Repeat on 100 pages and you make a $240,000 increase in a year!

Result: We just paid for Analytics 360, and bought a Tesla

Now on top of increasing pageviews, let’s play with eCPM to find more money:

For the same article, if eCPM were increased from $20 to $80 by doing some targeting or network changes, then this happens:

Pageviews 10x = 10k pv/m

10,000/1,000 = 10 x eCPM $80 == $800/mo

$800 X 12 mo’s ⇒ $9,600 / year increase on one page

Repeat on 100 pages and you make a $960,000 increase in a year!

Result: We just paid for Analytics 360, and hired a new engineering staff!

While these numbers above are only examples, they are a powerful visual to demonstrate how even small tweaks across a site’s content or eCPM can lead to huge wins.
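The napkin math above is easy to turn into a tiny calculator so you can plug in a client's own numbers. This is a sketch of the same arithmetic, nothing more; the function names are mine. Note that the figures in this post are the yearly revenue at the new traffic level, so the last line also shows the baseline you would subtract to get a strict increase.

```javascript
// Yearly revenue for one page: (pageviews / 1000) * eCPM * 12 months.
function annualRevenue(pageviewsPerMonth, ecpm) {
  return (pageviewsPerMonth / 1000) * ecpm * 12;
}

// Same estimate for one page and for a number of similar pages.
function estimateLift(scenario) {
  var perPage = annualRevenue(scenario.pageviewsPerMonth, scenario.ecpm);
  return { perPage: perPage, allPages: perPage * scenario.pages };
}

// Scenario 1: 10x the pageviews at a $20 eCPM, repeated on 100 pages.
var pageviewLift = estimateLift({ pageviewsPerMonth: 10000, ecpm: 20, pages: 100 });
// pageviewLift.perPage === 2400, pageviewLift.allPages === 240000

// Scenario 2: same pageviews, eCPM raised to $80.
var ecpmLift = estimateLift({ pageviewsPerMonth: 10000, ecpm: 80, pages: 100 });
// ecpmLift.perPage === 9600, ecpmLift.allPages === 960000

// The starting point (1k pv/m at $20) already earns this much per year.
var baseline = annualRevenue(1000, 20); // 240
```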

Creating GA Audiences for DFP, and DFP Targets

Audiences in Analytics 360 can be used in DFP as Line Item Targets. This isn’t well documented all in one place, but below are the steps to make it happen. This is the other amazing use of GA-DFP integration that is ONLY available in Analytics 360. Retargeted ads on article pages will increase eCPM for that page, and all other pages that are tied to any DFP Line Item.

Caveat: in this context, DFP means the whole interface that is used to create Placements, set networks to run within them (whether direct sold DFP, AdX, or straight up Adsense) and set Line Items to run these ads on specific pages.

Create the GA Audience for DFP -

Warning: demographics are not enabled for GA → DFP audiences, but they are for use in GA → Adwords audiences

Open Audience Definitions / Audiences in Admin for the property that has been linked to DFP. I already created some audiences, but will walk through creating another.

Type on the far right indicates the Destination Account that will receive this audience. In this case, only Adwords and DFP are integrated. Notice how the Female 18-24 Audience is only available for Adwords - the demographics limitation.

To Create an Audience click “+ NEW AUDIENCE”. Then click on Destination Account, and choose a DFP account. Note: multiple DFP accounts can be linked and will show up here as Destination Account options.

We’re creating an Audience for All Users, which should be your first audience if you’ve never created one. Save it, and it will appear in the Audience list.

Select All Users from the list -

Notice the “Eligibility” list on the bottom right of the Define Audience selector. For All Users it shows all accounts eligible for connection, including Adwords and DFP.

In the DFP interface assign the DFP Audience we just created to a Line Item

This is the step that can be confusing because online documentation didn’t reference it together with GA Audience creation steps.

Steps:

Open a “Line Item” in DFP and navigate to “Add targeting” (DFP interface, bottom left)

Click on “Key-values and Audience” on left

Then select “Audience segment” in the dropdown (below)

Search for an existing target audience by name, or for the GA audience in the search box. In this case, we’ll use the “All Users Test” audience we created in the previous steps. Other audiences are greyed out but this is where they all would be seen (those imported into DFP from third party providers, and all GA audiences)

Check the checkbox next to All Users Test

After selecting, and hitting return in the search box, the Audience is listed in the right under “Selected Criteria”, “Key-values and Audiences”

We’re done adding the GA Audience we created called “All Users Test” to DFP, and once this Line Item is launched, it will be targeted based upon our Audience.

Trick: But there is one more trick up our sleeves as analytics people -- we can combine audiences in DFP. Audiences can be purchased from third-party lead gen providers and they would appear in DFP under Key-values and Audiences alongside GA Audiences. We saw that in the smudged out audience lists in previous examples. Any of these can be added together in Selected criteria as components of the Line Item target. There are two ways to do this.

A. The “OR” method:

Click on “+ Add Set”, and search in the “Audience segment is” search box, selecting another audience from the list. This will add it to the Selected criteria list, and it will be “ORed” with any other target already associated. It will operate as follows: “All Users Test” OR “People from Nebraska”. So ads will target a user who belongs to either population available in the ad impression from the Ad Network; the user doesn’t need to be in both.

B. The “AND” method:

Detailed below, click the “Add key” link just below the “Audience segment is” box

This brings up another selection/search for additional Audiences

Select an audience, and it will be used in a logical “AND” with the audience(s) you’ve previously selected. This is more desirable in most cases since it makes your target more specific, instead of broadening it with the “OR” option in A. One particular use case is when it would be desirable to use third party demographic information alongside another audience from GA. It’s not perfect since GA is 1st party cookie and the other audiences are 3rd party, but it’s a useful workaround for approximate targeting, and still more specific than general audience targets

Example of the “AND” method using “Add key” to enable multiple audience targets in the Line Item. Note on the right side there are two sets of targets under Key-values and Audiences, with an “and” separating.
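If the two methods are hard to keep straight, it can help to think of the Line Item target as a list of sets. The sketch below is only a mental model, not anything DFP exposes: it assumes that sets created with “+ Add Set” are ORed together and that audiences added with “Add key” inside one set are ANDed. The function name and the visitor data are made up for illustration.

```javascript
// targetSets is an array of sets; each set is an array of audience names.
// A user matches when they belong to EVERY audience in AT LEAST ONE set.
function matchesTarget(userAudiences, targetSets) {
  return targetSets.some(function (set) {
    return set.every(function (name) {
      return userAudiences.indexOf(name) !== -1;
    });
  });
}

// A. "OR" method: two sets with one audience each.
var orTarget = [['All Users Test'], ['People from Nebraska']];

// B. "AND" method: one set holding both audiences.
var andTarget = [['All Users Test', 'People from Nebraska']];

// Hypothetical visitor who is only in the GA audience.
var visitor = ['All Users Test'];

var matchesOr = matchesTarget(visitor, orTarget);   // true
var matchesAnd = matchesTarget(visitor, andTarget); // false
```

The same visitor qualifies under the OR target but not under the AND target, which is why AND gives the tighter, more specific audience.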

Summary:

The DFP-GA integration makes Analytics 360 users more money if they know how to see it

Analytics 360 pays for itself with the DFP-GA integration and a little work

It also can provide huge dividends

This is best demonstrated through:

GA Publisher Reports and content engagement analysis

Audiences from GA used in DFP to target ads

For anyone doing analytics as a living whether as a practitioner, or in sales of analytics solutions, it’s easy to ignore the DFP Ad Monetization side of a business. Traditionally it doesn’t get a lot of analytics attention, and is sometimes owned by one or two very busy people. It’s also complicated, and involves spending some quality time learning how the business leverages DFP Line Item targeting, and this is very specific to the company. Get to know these people, buy them lunch, and make friends. They work hard and are responsible for most of the money for that company. They also are pros at using DFP.

The benefits of the GA-DFP integration are not limited to publishers.

Many business verticals use DFP for everything from house-ads to product placements, or for its core use as a container for housing Ad Exchange networks to monetize any page regardless of site content.

Every business that uses DFP will benefit the same way from the GA-DFP integration, but the analysis for Finding Lift might deviate into custom dimensions from enhanced ecommerce hits, or deep diving into event data to more thoroughly understand what to tweak on a given page to increase per page revenue.

Automate reports that show lift or lack thereof on major content categories

Use BigQuery extracts and analysis in Python to mine for ad lift opportunities

Publishers and Analytics Professionals: Work directly with the Ad Monetization side of the publishing business, and optimize GA Audiences for use in DFP Line Item Targets.

Also determine other target audiences (GA or 3rd party) to use in combination

Enjoy!

* A special thanks to Nick Lascheck for being instrumental in the DFP analysis

#googleanalytics#google360#googletagmanager#gtm#dfp#doubleclick#analytics#roi#monetization#publishing#publishers#marketing#sem#seo#repost#doubleclickforpublishers#money


Photo

Brilliant

Artist Combines Passion for Math, Nature, and Art to Create Incredible Topographical Art


Text

Campaign Tracking with UTM Parameters

Distinguishing between Paid and Non-Paid traffic in Google Analytics is more of an art-form than a science. It's difficult to find online help or literature that adds insight into this issue, and that's the motivation behind this blog entry: I want to make things easier for you, and me.

There are a few tricks that make it easy to ensure that the inbound URLs you have tagged with Google Analytics tracking parameters actually contribute to your "Paid Traffic" segmentation.

In review, Google Analytics offers the following URL parameters that are termed to be for Campaign tracking. These provide extremely valuable insight to you when combing through inbound traffic to your website for meaningful traffic metrics. By default, Google Analytics does not differentiate between Paid and Non-Paid traffic sources. Campaign tagging is required to accomplish this but Google doesn't seem to document this very clearly.

utm_medium = [Required] the how or channel or type of marketing, important to differentiate because behaviors tend to vary by type, e.g. banner visitors usually behave very differently than email visitors

utm_source = [Required] the place from whence it comes, e.g. website, email marketing list or distributor, partner, more...

utm_campaign = [Required] name of marketing initiative

utm_term = used for paid search. keywords for this ad, or another term to distinguish this traffic source by its most basic atomic element.

utm_content = identifier for the type of marketing/advertising creative, the message, the ad itself, etc... good to slice across other dimensions to see patterns that stand regardless of medium, source, campaign, more...

For a reference on Google campaign tagging click here. Google utm parameters can appear in any order in the URL after the "?" or "&".

Example URL with Google Analytics campaign tagging parameters:

www.site.com?utm_source=yahoo&utm_medium=cpc&utm_term=newkeyword&utm_campaign=mycampaign&utm_content=adtext
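If you tag a lot of URLs, building them by hand invites typos. Here is a minimal sketch of a builder for the example URL; the function name is made up, the "http://" prefix is an assumption added so the result is a complete URL, and lower-casing utm_medium is my own choice to keep the value consistent.

```javascript
// Order only matters for readability; GA accepts the parameters in any order.
var UTM_KEYS = ['utm_source', 'utm_medium', 'utm_term', 'utm_campaign', 'utm_content'];
var REQUIRED_KEYS = ['utm_source', 'utm_medium', 'utm_campaign'];

function buildCampaignUrl(baseUrl, params) {
  REQUIRED_KEYS.forEach(function (key) {
    if (!params[key]) {
      throw new Error('Missing required parameter: ' + key);
    }
  });
  var query = new URLSearchParams();
  UTM_KEYS.forEach(function (key) {
    if (params[key]) {
      var value = String(params[key]).trim();
      // Keep the medium lower case so it is tagged consistently.
      query.append(key, key === 'utm_medium' ? value.toLowerCase() : value);
    }
  });
  var joiner = baseUrl.indexOf('?') === -1 ? '?' : '&';
  return baseUrl + joiner + query.toString();
}

var taggedUrl = buildCampaignUrl('http://www.site.com', {
  utm_source: 'yahoo',
  utm_medium: 'CPC',
  utm_term: 'newkeyword',
  utm_campaign: 'mycampaign',
  utm_content: 'adtext'
});
```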

The parameter "utm_medium" is used by Google Analytics to classify inbound traffic to your site as Paid or Non-Paid. No other parameter is taken into account in this determination. When Auto-tagging is turned ON in AdWords, URL tagging is replaced with the AdWords "gclid=xxxxxxx..." parameter. The parameter is read by GA in a handshake between GA and AdWords where a complex attribution to AdWords keywords and campaigns is accomplished, yielding cost and click data for Google. No other paid traffic partner but Google receives this treatment -- only with Google AdWords auto-tagging will your site receive cost data associated with normal campaign tagging traffic.

Here is a cheat sheet for campaign tagging to ensure that your incoming traffic is categorized as Paid by Google Analytics.

The following values for utm_medium are supported and resolve as "Paid" in GA (as of this article's date):

cpc, ppc, cpa, cpv, cpp, cpm

Tip: make them lower case only, or they will NOT be included in "Paid" reports/segments.

The "cpm" value in utm_medium has a conditional quality to its attribution as paid.

It attributes if the Paid segment is selected.

It does not attribute in a custom report showing Paid traffic.
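A quick way to audit your own tagged URLs against this cheat sheet is a small check like the one below. It is a sketch that only mirrors the list in this post (it is not how GA itself is implemented), the function names are mine, and it assumes each URL includes its scheme.

```javascript
// Values from the cheat sheet above. Note "cpm" is on the list even though
// its attribution is conditional, as described in this post.
var PAID_MEDIUMS = ['cpc', 'ppc', 'cpa', 'cpv', 'cpp', 'cpm'];

// Case-sensitive on purpose: per the tip above, "CPC" does not count as Paid.
function isPaidMedium(medium) {
  return PAID_MEDIUMS.indexOf(medium) !== -1;
}

// Pulls utm_medium out of a full URL (scheme included).
function getMedium(url) {
  return new URL(url).searchParams.get('utm_medium');
}

var paid = isPaidMedium(getMedium('http://www.site.com?utm_source=yahoo&utm_medium=cpc'));
var upperCase = isPaidMedium(getMedium('http://www.site.com?utm_source=yahoo&utm_medium=CPC'));
var referral = isPaidMedium(getMedium('http://www.site.com?utm_source=blog&utm_medium=referral'));
```

Running every outbound campaign link through a check like this before launch catches the upper-case and misspelled mediums that would otherwise quietly land in Non-Paid.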

Screen grab of Paid Segment testing in GA:

Update: if utm_medium = referral, then the tagged inbound URL will be listed as a Referral, not a Campaign visit.


Text

Our Culture of "Mine"

Just a month after I was hired in the summer of 2008, I sat in the office of the SVP of Technology at MySpace. He was an authentic guy. Full of ideas. Undoubtedly brilliant. I was hired as Director of Engineering over what was then called the “Tools” group. The obvious jokes were not spared me.

In my visit with the SVP, whom I’ll call “James”, we talked for what must have been two hours while we shared dreamscape concepts, and he asked me plenty about processes and technology at Intel, where I spent nearly 2 decades. Just near the end, he asked me a final question. “Chris, where do YOU think the next social explosion will happen… what will be that next social media company?”

I paused, and gave him my most complete, best answer. “None”, I said.

"The evolution of social media is not toward a company, it’s away from branding, and moving toward what’s "mine". My notes, my music, my videos, my friends… my stuff. All together. Available. People want what’s theirs, and eventually the brand of the information becomes cumbersome, unless only one company owns all of it and then branding is ubiquitous… unimportant. It’s just about your stuff."

Some of the above is paraphrased because I don’t have total recall. But his response was etched in my brain. His smile turned to a flat-line, and our meeting was over. I never had a repeat long-talk meeting again.

What I was trying to say is that when I go for my music collection, it used to be a bunch of records, tapes, and CD’s tilted on the floor against the cabinet. I didn’t have a separate copy of them for every room in the house, or for each friend who might come over, or a separate copy bought and then labeled with some brand name arbitrarily placed on them to describe my association with some faceless organization. One set. Available. Mine. Multiples are pricey, and a ridiculous concept.

Six months later, James put in his resignation. Two months before that, the crash of the economy hit in November, 2008. One year, and two months to the day I was hired, I was escorted out, along with nearly 500 others that summer.

What I learned that day was not that I was wrong, it’s that I was right. But being right has a cost. I haven’t made money in the social media industry since MySpace. Haven’t really wanted to. And now we do have one ubiquitous General Motors of Social Media, called Facebook. While at MySpace I participated in the Safety and Security meetings that highlighted how Facebook had evaded responsibility for image review, because they weren’t owned by Rupert Murdoch. The Attorney General of the US wasn’t interested in the little growing social media company; they wanted to make a name for themselves by crushing Rupert’s unrealized social dream. It happened. The pressure from content review and constant barrage of legal matters conjoined with the already slowing market for MySpace’s style. The Google deal was infamous at that point, and its golden shackles made MySpace’s demise even more imminent. It was a great company, and an amazing memory.

Today we have the same basic social media engines we had then, except for the late entry, Google+, which is great for Hangouts but not so popular for sharing cat pictures.

Cloud storage has boomed. Cloud itself is seen as a location name-space. My things are in “The Cloud”. Companies have a built-in detachment from the consumer. They put your stuff in “the cloud”. You get your stuff from there, and buy more space for it. Apple, Amazon, Google all have clouds.

Where is my stuff? My stuff, and your stuff, my Mom’s stuff for her Apple TV, and her iPad. All of this is on bays of servers that we now call “the cloud”. We don’t know exactly where our data is situated physically, and that’s what makes it almost euphorically detached from our psyche. When I started programming for a living in 1988, I entered ice cold computer rooms that had row upon row of computers within. Intel’s western distribution center computers were downstairs from my desk where I spilled coffee. An entire building with rows of racks, humming, dim green and blue lights, cable management underneath floor tiles, and invariably some field service person working on his or her knees laying new network connects for a full day.

My Stuff has been dispersed into several Clouds. But they are all accessible on my iPhone, Apple TV, Mac, and iPad. Steve Jobs and the amazing Apple teams knew that possession requires accessibility. Seventy year old parents, and seventeen year old kids aren’t in love with the concept of a computer as my generation was when we were teenagers. They want their stuff to be accessible, friendly, available, accurate, and cool. And today, I want my stuff too. When I was speaking to James, I was talking about myself. I put myself into the mind of every consumer who has ever seen an innovation, and then asked “what’s next?” Telegraph, Trains, Automobiles, the Airplane, Radio, FM Radio, AC power, the Computer, the PC, the Mac, the Tesla car — all were blessed by the furnace that faces all innovation and greatness. Doubters. People with power who lack vision, and see change as a threat.

Great spirits often encounter violent opposition from mediocre minds - Einstein

The cloud makes this world invisible to my Mom with her iPad. At the age of 75 my adept Mom can sit on her patio and read the New York Times. But she thinks it’s actually resident on her iPad. Unbeknownst to her, her stuff isn’t her stuff. She’s simply signed up for the right to look at it for a period of time that has no immediate ending, or beginning. She can download past articles for a fee, and enjoy “receiving” the paper every morning. Her “stuff” is everyone’s stuff, with an invisible little concept of ownership attached: in the ether-sphere somewhere deep inside of Amazon’s cloud stores, her user id appears in an account table, and since she’s paid up, her subscription is labeled “active”. If someone set off an EMP (electro-magnetic pulse) weapon over the corn fields in Kansas where Amazon happens to have some server stores buried in an old salt mine, then Mom’s papers will stop coming. And she’ll have to call them to get her stuff. My Mom, for all of her distrust of online banking, and insisting upon extracting money manually by a written slip inside of her banking branch, is dependent upon the cloud and the faith built into it, for her newspaper.

Ownership is really just a matter of dates.

There was a house in my old neighborhood in Brookfield, Illinois that scared me as a child. I would go by it on my bike, and hurry past without looking because I thought ghosts lived there. It’s because there were newspapers stacked up in heaps on the lawn, and the shades were drawn, and it was spooky and dark. At age 7 I was still wondering why they hadn’t stopped receiving the paper. Why did the paperboy still throw them a paper every day? I talked with my Mom about that just last year, and she told me that the woman there was troubled. She wasn’t mean, but found it difficult to go outside. Like Boo Radley. I wonder if The Cloud will ever make people afraid of going outside. Will there be stacks upon stacks of our data on our lawns some day, waiting for us to pick them up and make use of them? Will the rain make the data run like ink lines into the soil? Will we have a use for our collected sets of independent Clouds where My Stuff is stored, or will we simply forget, and find a new form of weather within which to pour our identities?


Text

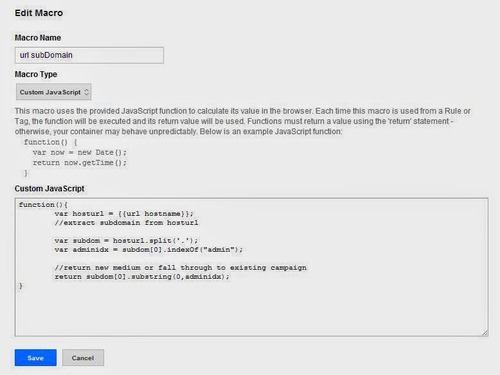

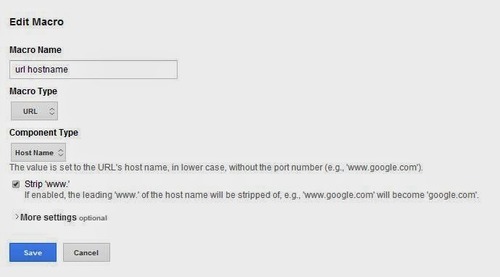

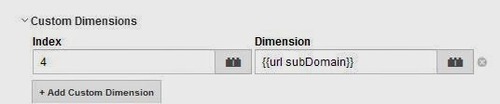

Google Tag Manager: Custom Javascript Macro - simple and awesome

Making a Custom JavaScript macro in Google Tag Manager is a very effective way to get some tricky little tasks done. Sometimes you may need to parse something out of a longer string, and then put that sub-string into a Custom Dimension, or use as a macro in other Tags. The way to do this is to create a Custom JavaScript macro. In the example below, I'll take you through the step by step process for creating a Custom Dimension to hold the domain or sub-domain name for any page. In this example, I'm extracting it from the "hostname url" via another macro.

Getting Started

Required: Universal Analytics, and GTM.

Start by creating a Custom JavaScript Macro. From the NEW button, select "Macro". Macro Type: select "Custom JavaScript".

Diving In

- A JavaScript function needs to be created to parse the element you're interested in.

- In this case, I'm referencing another macro called "{{url hostname}}", which, as you've surmised, holds the value of the hostname url for the current page being viewed on your website.

Components of the JS:

- First, store the "url hostname" value in a var (called 'hosturl' here), then parse the var in three steps (easier to break into steps than nest): var subdom = hosturl.split('.');

- This uses the '.split' function to extract each piece of a string by using the '.' as a separator between words, and then stuffs each word into a separate index in the array you provided (in this case, 'subdom').

e.g. If the url hostname was 'jello.coolfoods.com', then after the .split command ran, 'subdom' looks like:

subdom[0] == "jello", subdom[1] == "coolfoods", subdom[2] == "com"

Our New Function

Whew: all we're interested in is the first word, "jello". That's what we'll be returning at the end of the function. In the case above, I'm doing an extra step because I'm removing the word "admin" from the subdom[0] element through some other code. You can skip this, or include it in case you have a bit of the subdomain / domain that you want to strip off. In that case, replace "admin" below with your unwanted string bits.

- var adminidx = subdom[0].indexOf("admin");

- The index number 'adminidx' is the position of the word admin within the first array element (it is -1 when the word isn't there)

Now return only the first part of the 'subdom' as marked by the adminidx above: return subdom[0].substring(0,adminidx);

This returns only the first piece of the subdomain by using the substring feature to extract the characters of subdom[0] from position 0 up to the 'adminidx' position. Note that when "admin" isn't present, adminidx is -1 and the substring comes back empty, so return subdom[0] as-is in that case.

Cool. The function is done. SAVE
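Pulled together, the pieces above look roughly like this. It is a sketch rather than the exact macro: I've written it as a named function with the hostname passed in so it can be run outside GTM, and added a guard for hostnames that don't contain the unwanted string. Inside GTM the macro body would be an anonymous function that reads {{url hostname}} instead.

```javascript
// Returns the first label of a hostname, with an optional unwanted
// suffix (e.g. "admin") stripped off.
function extractSubdomain(hosturl, unwanted) {
  var subdom = hosturl.split('.');       // 'jello.coolfoods.com' -> ['jello', 'coolfoods', 'com']
  var first = subdom[0];
  if (!unwanted) {
    return first;
  }
  var adminidx = first.indexOf(unwanted); // -1 when the string isn't present
  if (adminidx === -1) {
    return first;                         // nothing to strip; avoid substring(0, -1)
  }
  return first.substring(0, adminidx);
}

// In GTM, the Custom JavaScript macro would wrap it like this:
//   function() { return extractSubdomain({{url hostname}}, 'admin'); }
// (with extractSubdomain's body inlined, since the macro must be one function)

var plain = extractSubdomain('jello.coolfoods.com', 'admin');         // 'jello'
var stripped = extractSubdomain('jelloadmin.coolfoods.com', 'admin'); // 'jello'
```

Without the guard, 'jello'.substring(0, -1) returns an empty string, and the Custom Dimension would come through blank for every hostname that has nothing to strip.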

Next - A Macro

The url subDomain macro is dependent upon the url hostname macro that extracts the hostname from the DOM

- Create a macro of type "URL"

- Set component type to "Host Name"

- Check the check-box for "Strip WWW"

- SAVE

Custom Dimensions Please

- Next, go into Admin in GA, and create a Custom Dimension in your web Property under "Custom Definitions --> Custom Dimensions".

- In our example, it's named "{{url subDomain}}"

- Now that we have a Custom Javascript Macro called 'url subDomain', we need to use it to create a Custom Dimension in your Page View tag -- a firing rule must trigger this for ALL pages

We're Done!

Save, Debug, and Publish!


Text

What is the best bid management tool for several popular platforms?

Search Engine Marketing: What is the best bid management tool for several popular platforms? Answer by Christopher Bridges:

Kenshoo and Marin are the top two tools I'd recommend. All bid management tools are geared toward the top three paid search partners: Google, Bing, and Yahoo. Yahoo is only differentiated from Bing in Asia. All are also expensive, and take a portion of your CPC/CPA, but try to guarantee higher bidding returns and upward bid optimizations -- as opposed to many in-house bidding methodologies that optimize on lowering bidding costs. Kenshoo is my top pick due to its much more agreeable technical support, and deep back end that will support a wide variety of custom tagging and intricate attribution situations, including reading any in-house parameters you provide them. Marin has similar overall bidding offerings, but where they are tall in salesmanship, they are short in technology when it's time to deal with custom tagging. Both offer social tracking solutions. When you stray away from paid search or paid social, you'll be in no man's land like everyone else. DFS - DoubleClick for Search is another contender, but as the name states, it only operates on paid search. Its social integration is in development. There are no successful tools on the market at this point that can interface with the host of in-text, email, display, and other contextual traffic partners that compete with paid search today. These all require customized API or UI scrapes to upload their cost data into a bidding system, and very few have bidding upload systems that are sophisticated. My choice for you is Kenshoo. As stated below, your daily budget is on the low end in comparison to the types of businesses that are served by Kenshoo and Marin. Let me know what you choose to do.

View Answer on Quora

Text

Google Analytics Secret of Paid SEM Traffic Attribution through Campaign Parameters

Distinguishing between Paid and Non-Paid traffic in Google Analytics is more of an art form than a science. It's difficult to find online help or literature that adds insight into this issue, and that's the motivation behind this blog entry: I want to make things easier for you, and me. There are a few tricks that make it easy to ensure that the inbound URLs you have tagged with Google Analytics tracking parameters actually contribute to your "Paid Traffic" segmentation.

In review, Google Analytics offers the following URL parameters for Campaign tracking. These provide extremely valuable insight when you comb through inbound traffic to your website for meaningful traffic metrics. By default, Google Analytics does not differentiate between Paid and Non-Paid traffic sources. Campaign tagging is required to accomplish this, but Google doesn't seem to document this very clearly.

Definitions of the utm parameters [by AnalyticsPros]:

utm_medium = [Required] the how, channel, or type of marketing. Important to differentiate because behaviors tend to vary by type, e.g. banner visitors usually behave very differently than email visitors.

utm_source = [Required] the place the traffic comes from, e.g. a website, an email marketing list or distributor, a partner, and more.

utm_campaign = [Required] name of the marketing initiative.

utm_term = used for paid search: the keywords for this ad, or another term to distinguish this traffic source by its most basic atomic element.

utm_content = identifier for the type of marketing/advertising creative: the message, the ad itself, etc. Good to slice across other dimensions to see patterns that hold regardless of medium, source, campaign, and more.
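
As a rough illustration of how these parameters end up on a landing page URL, here is a minimal Python sketch. The helper name tag_url and the example values are hypothetical, not part of any Google tooling; it only relies on the standard library's urllib.parse.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def tag_url(url, source, medium, campaign, term=None, content=None):
    """Append Google Analytics campaign parameters to a landing page URL.

    Hypothetical helper for illustration only.
    """
    params = {
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
    }
    # The three parameters marked [Required] above must not be empty.
    for name, value in params.items():
        if not value:
            raise ValueError(f"{name} is required")
    # utm_term and utm_content are optional.
    if term:
        params["utm_term"] = term
    if content:
        params["utm_content"] = content

    parts = urlsplit(url)
    # Keep any query parameters the URL already carries.
    query = parse_qsl(parts.query, keep_blank_values=True) + list(params.items())
    return urlunsplit(parts._replace(query=urlencode(query)))


print(tag_url("http://www.site.com/", "yahoo", "cpc", "mycampaign",
              term="newkeyword", content="adtext"))
```

Building the URL through a helper like this avoids hand-typed mistakes such as stray spaces or a missing "&" between parameters.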

For a reference on Google campaign tagging click here. Google utm parameters can appear in any order in the URL after the "?" or "&".

Example URL with Google Analytics campaign tagging parameters:

www.site.com?utm_source=yahoo&utm_medium=cpc&utm_term=newkeyword&utm_campaign=mycampaign&utm_content=adtext

The parameter "utm_medium" is used by Google Analytics to classify inbound traffic to your site as Paid or Non-Paid. No other parameter is taken into account in this determination.

When Auto-tagging is turned ON in AdWords, URL tagging is replaced with the AdWords "gclid=xxxxxxx..." parameter. GA reads this parameter in a handshake between GA and AdWords that attributes the visit to AdWords keywords and campaigns, yielding cost and click data for Google. No other paid traffic partner receives this treatment: only with Google AdWords auto-tagging will your site receive cost data associated with campaign-tagged traffic.

Here is a cheat sheet for campaign tagging to ensure that your incoming traffic is categorized as Paid by Google Analytics. The following values for utm_medium are supported and resolve as "Paid" in GA (as of this article's date):

cpc, ppc, cpa, cpv, cpp, cpm

Tip: use lower case only, or they will NOT be included in "Paid" reports/segments. The "cpm" value in utm_medium is only conditionally attributed as Paid:

It is attributed as Paid when the Paid segment is selected.

It is not attributed as Paid in a custom report showing Paid traffic.
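
The cheat sheet above can be turned into a quick pre-launch check on tagged URLs. This is a sketch of the behavior described in this post, not Google Analytics' actual logic; the names PAID_MEDIUMS, utm_medium_of, and is_paid are made up for illustration, and the in_custom_report flag simply mirrors the "cpm" observation above.

```python
from urllib.parse import parse_qs, urlsplit

# utm_medium values listed in this post as resolving to "Paid" (lower case only).
PAID_MEDIUMS = {"cpc", "ppc", "cpa", "cpv", "cpp", "cpm"}


def utm_medium_of(url):
    """Return the utm_medium value of a URL, or None if it is not tagged."""
    values = parse_qs(urlsplit(url).query).get("utm_medium")
    return values[0] if values else None


def is_paid(url, in_custom_report=False):
    """Guess whether a tagged URL would be counted as Paid.

    Assumption: follows the observations in this post, including the
    case-sensitive match and the conditional treatment of "cpm".
    """
    medium = utm_medium_of(url)
    if medium == "cpm":
        # Per the post: counted in the Paid segment, not in a custom report.
        return not in_custom_report
    # No lower-casing here on purpose: "CPC" is not treated as Paid.
    return medium in PAID_MEDIUMS


base = "http://www.site.com/?utm_source=yahoo&utm_campaign=mycampaign&utm_medium="
for medium in ("cpc", "CPC", "cpm", "referral"):
    print(medium, is_paid(base + medium))
```

Running a list of planned landing page URLs through a check like this before launch catches upper-case or misspelled medium values that would otherwise fall out of the Paid reports.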

[Screen grab of Paid Segment testing in GA]

Update: if utm_medium = referral, then the tagged inbound URL will be listed as a Referral, not a Campaign visit.

#google analytics#sem#marketing attribution#marketing#campaign tracking#campaign parameters#utm_medium#paid attribution#ga#utm#gtm#googletagmanager#wonkydata#analytics#googleanalytics
