#deepfake political abuse

The Perils of Unbridled AI: A Deepfake Dystopia

The world had long debated the ethics of artificial intelligence, but never had the consequences been as tangible—or as grotesque—as the controversy that erupted inside the headquarters of the U.S. Department of Housing and Urban Development (HUD). It began with a single, shocking deepfake: a hyper-realistic AI-generated video of U.S. President Donald Trump sucking the toes of billionaire entrepreneur Elon Musk. It wasn’t just an internet prank or a piece of satirical art; it was broadcast inside a government facility, weaponized as a political statement, and designed to manipulate public perception.

For years, experts had warned about the unchecked growth of AI, but policymakers, seduced by its economic and strategic advantages, failed to implement meaningful safeguards. What followed was a world where reality blurred with fiction, and the consequences of digital deception reached far beyond a mere moment of embarrassment.

#AI deepfake dangers#unregulated AI risks#deepfake political abuse#AI misinformation#deepfake technology impact#AI regulation#digital deception#truth manipulation#AI-generated propaganda

1 note

Trolls Used Her Face to Make Fake Porn. There Was Nothing She Could Do.

Sabrina Javellana was a rising star in local politics — until deepfakes derailed her life.

https://www.nytimes.com/2024/07/31/magazine/sabrina-javellana-florida-politics-ai-porn.html

Most mornings, before walking into City Hall in Hallandale Beach, Fla., a small city north of Miami, Sabrina Javellana would sit in the parking lot and monitor her Twitter and Instagram accounts. After winning a seat on the Hallandale Beach city commission in 2018, at age 21, she became one of the youngest elected officials in Florida’s history. Her progressive political positions had sometimes earned her enemies: After proposing a name change for a state thoroughfare called Dixie Highway in late 2019, she regularly received vitriolic and violent threats on social media; her condemnation of police brutality and calls for criminal-justice reform prompted aggressive rhetoric from members of local law enforcement. Disturbing messages were nothing new to her.

The morning of Feb. 5, 2021, though, she noticed an unusual one. “Hi, just wanted to let you know that somebody is sharing pictures of you online and discussing you in quite a grotesque manner,” it began. “He claims that he’s one of your ‘guy friends.’”

Javellana froze. Who could have sent this message? She asked for evidence, and the sender responded with pixelated screenshots of a forum thread that included photos of her. There were comments that mentioned her political career. Had her work drawn these people’s ire? Eventually, with a friend’s help, she found a set of archived pages from the notorious forum site 4chan. Most of the images were pulled from her social media and annotated with obscene, misogynistic remarks: “not thicc enough”; “I would breed her”; “no sane person would date such a stupid creature.” But one image further down the thread stopped her short. She was standing in front of a full-length mirror with her head tilted to the side, smiling playfully. She had posted an almost identical selfie, in which she wore a brown crew-neck top and matching skirt, to her Instagram account back in 2015. “It was the exact same picture,” Javellana said of the doctored image. “But I wasn’t wearing any clothes.”

There were several more. These were deepfakes: A.I.-generated images that manipulate a person’s likeness, fusing it with others to create a false picture or video, sometimes pornographic, in a way that looks authentic. Although fake explicit material has existed for decades thanks to image-editing software, deepfakes stand out for their striking believability. Even Javellana was shaken by their apparent authenticity.

“I didn’t know that this was something that happened to everyday people,” Javellana told me when I visited her earlier this year in Florida. She wondered if anyone else had seen the photos or the abusive comments online. Several of the threads even implied that people on the forum knew her. “I live in Broward County,” one comment read. “She just graduated from FIU.” Other users threatened sexual violence. In the days that followed, Javellana became increasingly fearful and paranoid. She stopped walking alone at night and started triple-checking that her doors and windows were locked before she slept. In an effort to protect her personal life, she made her Instagram private and removed photographs of herself in a bathing suit.

Discovering the images changed how Javellana operated professionally. Attending press events was part of her job, but now she felt anxious every time someone lifted their camera. She worried that public images of her would be turned into pornography, so she covered as much of her body as she could, favoring high-cut blouses and blazers. She knew she wasn’t acting rationally — people could create new deepfakes regardless of how much skin she showed in the real world — but changing her style made her feel a sense of control. If the deepfakes went viral, no one could look at how she dressed and think that she had invited this harassment.

148 notes

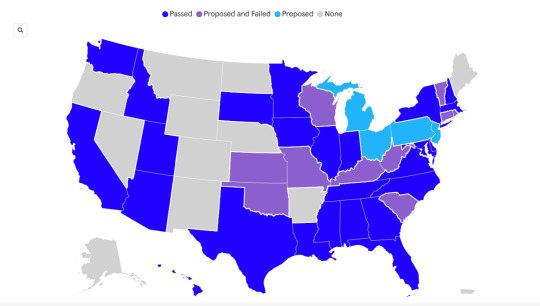

As national legislation on deepfake pornography crawls its way through Congress, states across the country are trying to take matters into their own hands. Thirty-nine states have introduced a hodgepodge of laws designed to deter the creation of nonconsensual deepfakes and punish those who make and share them.

Earlier this year, Democratic congresswoman Alexandria Ocasio-Cortez, herself a victim of nonconsensual deepfakes, introduced the Disrupt Explicit Forged Images and Non-Consensual Edits Act, or Defiance Act. If passed, the bill would allow victims of deepfake pornography to sue as long as they could prove the deepfakes had been made without their consent. In June, Republican senator Ted Cruz introduced the Take It Down Act, which would require platforms to remove both revenge porn and nonconsensual deepfake porn.

Though there’s bipartisan support for many of these measures, federal legislation can take years to make it through both houses of Congress before being signed into law. But state legislatures and local politicians can move faster, and they’re trying to.

Last month, San Francisco City Attorney David Chiu’s office announced a lawsuit against 16 of the most visited websites that allow users to create AI-generated pornography. “Generative AI has enormous promise, but as with all new technologies, there are unintended consequences and criminals seeking to exploit the new technology. We have to be very clear that this is not innovation—this is sexual abuse,” Chiu said in a statement released by his office at the time.

The suit was just the latest attempt to try to curtail the ever-growing issue of nonconsensual deepfake pornography.

“I think there's a misconception that it's just celebrities that are being affected by this,” says Ilana Beller, organizing manager at Public Citizen, which has been tracking nonconsensual deepfake legislation and shared their findings with WIRED. “It's a lot of everyday people who are having this experience.”

Data from Public Citizen shows that 23 states have passed some form of nonconsensual deepfake law. “This is such a pervasive issue, and so state legislators are seeing this as a problem,” says Beller. “I also think that legislators are interested in passing AI legislation right now because we are seeing how fast the technology is developing.”

Last year, WIRED reported that deepfake pornography is only increasing, and researchers estimate that 90 percent of deepfake videos are of porn, the vast majority of which is nonconsensual porn of women. But despite how pervasive the issue is, Kaylee Williams, a researcher at Columbia University who has been tracking nonconsensual deepfake legislation, says she has seen legislators more focused on political deepfakes.

“More states are interested in protecting electoral integrity in that way than they are in dealing with the intimate image question,” she says.

Matthew Bierlein, a Republican state representative in Michigan, who cosponsored the state’s package of nonconsensual deepfake bills, says that he initially came to the issue after exploring legislation on political deepfakes. “Our plan was to make [political deepfakes] a campaign finance violation if you didn’t put disclaimers on them to notify the public.” Through his work on political deepfakes, Bierlein says, he began working with Democratic representative Penelope Tsernoglou, who helped spearhead the nonconsensual deepfake bills.

At the time in January, nonconsensual deepfakes of Taylor Swift had just gone viral, and the subject was widely covered in the news. “We thought that the opportunity was the right time to be able to do something,” Bierlein says. And Bierlein says that he felt Michigan was in the position to be a regional leader in the Midwest, because, unlike some of its neighbors, it has a full-time legislature with well-paid staffers (most states don’t). “We understand that it's a bigger issue than just a Michigan issue. But a lot of things can start at the state level,” he says. “If we get this done, then maybe Ohio adopts this in their legislative session, maybe Indiana adopts something similar, or Illinois, and that can make enforcement easier.”

But what the penalties for creating and sharing nonconsensual deepfakes are—and who is protected—can vary widely from state to state. “The US landscape is just wildly inconsistent on this issue,” says Williams. “I think there's been this misconception lately that all these laws are being passed all over the country. I think what people are seeing is that there have been a lot of laws proposed.”

Some states allow for civil and criminal cases to be brought against perpetrators, while others might only provide for one of the two. Laws like the one that recently took effect in Mississippi, for instance, focus on minors. Over the past year or so, there has been a spate of instances of middle and high schoolers using generative AI to make explicit images and videos of classmates, particularly girls. Other laws focus on adults, with legislators essentially updating existing laws banning revenge porn.

Unlike laws that focus on nonconsensual deepfakes of minors, on which Williams says there is a broad consensus that they are an “inherent moral wrong,” legislation around what is “ethical” when it comes to nonconsensual deepfakes of adults is “squishier.” In many cases, laws and proposed legislation require proving intent, that the goal of the person making and sharing the nonconsensual deepfake was to harm its subject.

But online, says Sara Jodka, an attorney who specializes in privacy and cybersecurity, this patchwork of state-based legislation can be particularly difficult. “If you can't find a person behind an IP address, how can you prove who the person is, let alone show their intent?”

Williams also notes that in the case of nonconsensual deepfakes of celebrities or other public figures, many of the creators don’t necessarily see themselves as doing harm. “They’ll say, ‘This is fan content,’ that they admire this person and are attracted to them,” she says.

State laws, Jodka says, while a good start, are likely to have limited power to actually deal with the issue, and only a federal law against nonconsensual deepfakes would allow for the kind of interstate investigations and prosecutions that could really force justice and accountability. “States don't really have a lot of ability to track down across state lines internationally,” she says. “So it's going to be very rare, and it's going to be very specific scenarios where the laws are going to be able to even be enforced.”

But Michigan’s Bierlein says that many state representatives are not content to wait for the federal government to address the issue. Bierlein expressed particular concern about the role nonconsensual deepfakes could play in sextortion scams, which the FBI says have been on the rise. In 2023, a Michigan teen died by suicide after scammers threatened to post his (real) intimate photos online. “Things move really slow on a federal level, and if we waited for them to do something, we could be waiting a lot longer,” he says.

96 notes

The extent of "activism" coming from most "leftist" men is literally just them making deepfake porn videos of women whose political opinions they don't agree with, and calling them whores, bitches and cunts.

They can't otherwise be openly misogynistic while still being considered a performative nice-guy marxist leftie, so they save all their hate for the women they don't like.

But once there's an "acceptable class" of woman that can be targeted, it's only a matter of time before the criteria narrow regarding what it takes to be considered a "good" woman. As in, a woman who shuts up and doesn't have unpopular opinions and has never done or said anything that could be perceived as "problematic" ever, and is thus granted the privilege of being exempt from harassment and abuse.

#gender critical#radfem friendly#terfblr#feminism#radical feminists do interact#dropthet#terf#lgb#radical feminism#radblr

59 notes

https://www.nytimes.com/2024/07/31/magazine/sabrina-javellana-florida-politics-ai-porn.html

-- Trolls Used Her Face to Make Fake Porn. There Was Nothing She Could Do. --

Another reason why I am no free speech absolutist, given how easy it is to destroy someone's life with abusive and harassing speech, with no practical recourse. As we can see, these deepfakes are themselves being used to stifle speech through intimidation and pain and suffering.

I have slowly been moving towards supporting exempting social media from Section 230 protections, thus incentivizing platforms to remove such material. Making them potential defendants in defamation cases stemming from users' pornographic deepfakes would do much to inspire more responsible corporate citizenship from them.

3 notes

Blog Post Week 2

Should AI be a commodity?

AI should not be a commodity, because companies making AI products are driven by profit incentives rather than the interests of the public. Currently, companies are able to take data from the public and use it in any way they want. Because these companies hold the power in the producer-consumer relationship they inhabit, similar to the relationship described on page 20 of Fuchs’ 2014 book, Social Media: A Critical Introduction, consumers would not be truly equal with them. This unequal relationship will allow companies to extend their power over the public and influence them however they want.

Should AI be legal?

While AI has many negative aspects, such as the ability of companies to steal data through user input and the possible use of AI deepfake technology in criminal activities, it should still be kept legal because of its benefits: easy data organization, such as that used by search engines, and especially its applications in free speech. The ability to create deepfakes of political officials in order to parody them must be preserved, not only because it serves as a strong method of protest but also because it has begun to ingrain itself in society through presidential deepfakes, marking deepfakes as an emerging historical method of protest that should be preserved.

If AI should be kept legal, how should it be controlled?

To control AI, the government should have clear and easy access to AI based websites in order to monitor the usage of AI in illegal activities. This would also prevent companies from misusing AI in a similar manner to government actions mentioned in the pdf document, Gonzalez and Torres’ News For All The People, as only with government interference were media companies willing to focus on integration, and only through government interference will companies be prevented from abusing consumers. While this would put extreme power over the people in the hands of the government, it is necessary so that deepfake and AI technology is not used to harm the public or used for nefarious purposes.

How will we keep free speech available for AI?

However, if AI is controlled by the government how will the free speech potentialities of AI be protected? It would become necessary for the government to publish uniform reports of their activities surveilling government monitored AI websites along with all arrests and activities. A legal precedent would also need to be created through legislation in order to preserve the parody aspects of deep fakes and AI to ensure this method of free speech can’t be muted by the government or private parties.

References:

Fuchs, C. (2014). Social media: A critical introduction (3rd ed.). Sage.

González, J., & Torres, J. (2011). News for all the people: The epic story of race and the American media. Verso.

6 notes

We Looked at 78 Election Deepfakes. Political Misinformation is not an AI Problem.

New Post has been published on https://thedigitalinsider.com/we-looked-at-78-election-deepfakes-political-misinformation-is-not-an-ai-problem/

AI-generated misinformation was one of the top concerns during the 2024 U.S. presidential election. In January 2024, the World Economic Forum claimed that “misinformation and disinformation is the most severe short-term risk the world faces” and that “AI is amplifying manipulated and distorted information that could destabilize societies.” News headlines about elections in 2024 tell a similar story.

In contrast, in our past writing, we predicted that AI would not lead to a misinformation apocalypse. When Meta released its open-weight large language model (called LLaMA), we argued that it would not lead to a tidal wave of misinformation. And in a follow-up essay, we pointed out that the distribution of misinformation is the key bottleneck for influence operations, and while generative AI reduces the cost of creating misinformation, it does not reduce the cost of distributing it. A few other researchers have made similar arguments.

Which of these two perspectives better fits the facts?

Fortunately, we have the evidence of AI use in elections that took place around the globe in 2024 to help answer this question. Many news outlets and research projects have compiled known instances of AI-generated text and media and their impact. Instead of speculating about AI’s potential, we can look at its real-world impact to date.

We analyzed every instance of AI use in elections collected by the WIRED AI Elections Project, which tracked known uses of AI for creating political content during elections taking place in 2024 worldwide. In each case, we identified what AI was used for and estimated the cost of creating similar content without AI.

We find that (1) most AI use isn’t deceptive, (2) deceptive content produced using AI is nevertheless cheap to replicate without AI, and (3) focusing on the demand for misinformation rather than the supply is a much more effective way to diagnose problems and identify interventions.

To be clear, AI-generated synthetic content poses many real dangers: the creation of non-consensual images of people and child sexual abuse material and the enabling of the liar’s dividend, which allows those in power to brush away real but embarrassing or controversial media content about them as AI-generated. These are all important challenges. This essay is focused on a different problem: political misinformation.

Improving the information environment is a difficult and ongoing challenge. It’s understandable why people might think AI is making the problem worse: AI does make it possible to fabricate false content. But that has not fundamentally changed the landscape of political misinformation.

Paradoxically, the alarm about AI might be comforting because it positions concerns about the information environment as a discrete problem with a discrete solution. But fixes to the information environment depend on structural and institutional changes rather than on curbing AI-generated content.

We analyzed all 78 instances of AI use in the WIRED AI Elections Project (source for our analysis). We categorized each instance based on whether there was deceptive intent. For example, if AI was used to generate false media depicting a political candidate saying something they didn’t, we classified it as deceptive. On the other hand, if a chatbot gave an incorrect response to a genuine user query, a deepfake was created for parody or satire, or a candidate transparently used AI to improve their campaigning materials (such as by translating a speech into a language they don’t speak), we classified it as non-deceptive.

To our surprise, there was no deceptive intent in 39 of the 78 cases in the database.

The most common non-deceptive use of AI was for campaigning. When candidates or supporters used AI for campaigning, in most cases (19 out of 22), the apparent intent was to improve campaigning materials rather than mislead voters with false information.
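As a rough check on the counts reported above, the shares can be computed directly. The following is a minimal Python sketch, not part of the original analysis: the constant and function names are hypothetical, and the counts are taken from the text (78 cases in total, 39 without deceptive intent, and 19 of 22 campaigning uses judged non-deceptive).

```python
# Counts as reported in the essay. All names here are hypothetical
# and chosen for illustration only.
TOTAL_CASES = 78
NON_DECEPTIVE_CASES = 39
CAMPAIGNING_CASES = 22
CAMPAIGNING_NON_DECEPTIVE = 19


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return round(100 * part / whole, 1)


# Cases with deceptive intent are whatever remains of the total.
deceptive_cases = TOTAL_CASES - NON_DECEPTIVE_CASES

non_deceptive_share = percent(NON_DECEPTIVE_CASES, TOTAL_CASES)
campaigning_share = percent(CAMPAIGNING_NON_DECEPTIVE, CAMPAIGNING_CASES)

print(f"Cases with deceptive intent: {deceptive_cases}")
print(f"Non-deceptive share of all cases: {non_deceptive_share}%")
print(f"Non-deceptive share of campaigning uses: {campaigning_share}%")
```

Under these counts, half of the database shows no deceptive intent, and the large majority of campaigning uses fall in that half.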

We even found examples of deepfakes that we think helped improve the information environment. In Venezuela, journalists used AI avatars to avoid government retribution when covering news adversarial to the government. In the U.S., a local news organization from Arizona, Arizona Agenda, used deepfakes to educate viewers about how easy it is to manipulate videos. In California, a candidate with laryngitis lost his voice, so he transparently used AI voice cloning to read out typed messages in his voice during meet-and-greets with voters.

Reasonable people can disagree on whether using AI in campaigning materials is legitimate or what the appropriate guardrails need to be. But using AI for campaign materials in non-deceptive ways (for example, when AI is used as a tool to improve voter outreach) is much less problematic than deploying AI-generated fake news to sway voters.

Of course, not all non-deceptive AI-generated political content is benign. Chatbots often incorrectly answer election-related questions. Rather than deceptive intent, this results from the limitations of chatbots, such as hallucinations and lack of factuality. Unfortunately, these limitations are not made clear to users, leading to an overreliance on flawed large language models (LLMs).

For each of the 39 examples of deceptive intent, where AI use was intended to make viewers believe outright false information, we estimated the cost of creating similar content without AI—for example, by hiring Photoshop experts, video editors, or voice actors. In each case, the cost of creating similar content without AI was modest—no more than a few hundred dollars. (We even found that a video involving a hired stage actor was incorrectly marked as being AI-generated in WIRED’s election database.)

In fact, it has long been possible to create media with outright false information without using AI or other fancy tools. One video used stage actors to falsely claim that U.S. Vice President and Democratic presidential candidate Kamala Harris was involved in a hit-and-run incident. Another slowed down the vice president’s speech to make it sound like she was slurring her words. An edited video of Indian opposition candidate Rahul Gandhi showed him saying that the incumbent Narendra Modi would win the election. In the original video, Gandhi said his opponent would not win the election, but it was edited using jump cuts to take out the word “not.” Such media content has been called “cheap fakes” (as opposed to AI-generated “deepfakes”).

There were many instances of cheap fakes used in the 2024 U.S. election. The News Literacy Project documented known misinformation about the election and found that cheap fakes were used seven times more often than AI-generated content. Similarly, in other countries, cheap fakes were quite prevalent. An India-based fact checker reviewed an order of magnitude more cheap fakes and traditionally edited media compared to deepfakes. In Bangladesh, cheap fakes were over 20 times more prevalent than deepfakes.

Let’s consider two examples to analyze how cheap fakes could have led to substantially similar effects as the deepfakes that got a lot of media attention: Donald Trump’s use of Taylor Swift deepfakes to campaign and a voice-cloned robocall that imitated U.S. President Joe Biden in the New Hampshire primary asking voters not to vote.

A Truth Social post shared by Donald Trump with images of Taylor Swift fans wearing “Swifties for Trump” t-shirts. Top left: A post with many AI-generated images of women wearing “Swifties for Trump” t-shirts, with a “satire” label. Top right: A real image of Trump supporter Jenna Piwowarczyk wearing a “Swifties for Trump” t-shirt. Bottom left: A fabricated image of Taylor Swift in front of the American flag with the caption, “Taylor wants you to vote for Donald Trump.” It is unclear if the image was created using AI or other editing software. Bottom right: A Twitter post with two images: one AI-generated, the other real, of women wearing “Swifties for Trump” t-shirts.

Trump’s use of Swift deepfakes implied that Taylor Swift had endorsed him and that Swift fans were attending his rallies en masse. In the wake of the post, many media outlets blamed AI for the spread of misinformation.

But recreating similar images without AI is easy. Images depicting Swift’s support could be created by photoshopping text endorsing Trump onto any of her existing images. Likewise, getting images of Trump supporters wearing “Swifties for Trump” t-shirts could be achieved by distributing free t-shirts at a rally—or even selectively reaching out to Swift fans at Trump rallies. In fact, two of the images Trump shared were real images of a Trump supporter who is also a Swift fan.

Another incident that led to a brief panic was an AI clone of President Joe Biden’s voice that asked people not to vote in the New Hampshire primary.

News headlines in the wake of the Biden robocall.

Rules against such robocalls have existed for years. In fact, the perpetrator of this particular robocall was fined $6 million by the Federal Communications Commission (FCC). The FCC has tiplines to report similar attacks, and it enforces rules around robocalls frequently, regardless of whether AI is used. Since the robocall used a static recording, it could have been made about as easily without using AI—for instance, by hiring voice impersonators.

It is also unclear what impact the robocall had: The efficacy of the deepfake depends on the recipient believing that the president of the United States is personally calling them on the phone to ask them not to vote in a primary.

Is it just a matter of time until improvements in technology and the expertise of actors seeking to influence elections lead to more effective AI disinformation? We don’t think so. In the next section, we point out that structural reasons that drive the demand for misinformation are not aided by AI. We then look at the history of predictions about coming waves of AI disinformation that have accompanied the release of new tools—predictions that have not come to pass.

Misinformation can be seen through the forces of supply and demand. The supply comes from people who want to make a buck by generating clicks, partisans who want their side to win, or state actors who want to conduct influence operations. Interventions so far have almost entirely tried to curb the supply of misinformation while leaving the demand unchanged.

The focus on AI is the latest example of this trend. Since AI reduces the cost of generating misinformation to nearly zero, analysts who look at misinformation as a supply problem are very concerned. But analyzing the demand for misinformation can clarify how misinformation spreads and what interventions are likely to help.

Looking at the demand for misinformation tells us that as long as people have certain worldviews, they will seek out and find information consistent with those views. Depending on what someone’s worldview is, the information in question is often misinformation—or at least would be considered misinformation by those with differing worldviews.

In other words, successful misinformation operations target in-group members—people who already agree with the broad intent of the message. Such recipients may have lower skepticism for messages that conform to their worldviews and may even be willing to knowingly amplify false information. Sophisticated tools aren’t needed for misinformation to be effective in this context. On the flip side, it will be extremely hard to convince out-group members of false information that they don’t agree with, regardless of AI use.

Seen in this light, AI misinformation plays a very different role from its popular depiction of swaying voters in elections. Increasing the supply of misinformation does not meaningfully change the dynamics of the demand for misinformation since the increased supply is competing for the same eyeballs. Moreover, the increased supply of misinformation is likely to be consumed mainly by a small group of partisans who already agree with it and heavily consume misinformation rather than to convince a broader swath of the public.

This also explains why cheap fakes such as media from unrelated events, traditional video edits such as jump cuts, or even video game footage can be effective for propagating misinformation despite their low quality: It is much easier to convince someone of misinformation if they already agree with its message.

Our analysis of the demand for misinformation may be most applicable to countries with polarized close races where leading parties have similar capacities for voter outreach, so that voters’ (mis)information demands are already saturated.

Still, to our knowledge, in every country that held elections in 2024 so far, AI misinformation had much less impact than feared. In India, deepfakes were used for trolling more than spreading false information. In Indonesia, the impact of AI wasn’t to sow false information but rather to soften the image of then-candidate, now-President Prabowo Subianto (a former general accused of many past human rights abuses) using AI-generated digital cartoon avatars that depicted him as likable.

The 2024 election cycle wasn’t the first time when there was widespread fear that AI deepfakes would lead to rampant political misinformation. Strikingly similar concerns about AI were expressed before the 2020 U.S. election, though these concerns were not borne out. The release of new AI tools is often accompanied by worries that it will unleash new waves of misinformation:

2019. When OpenAI released its GPT-2 series of models in 2019, one of the main reasons it held back on releasing the model weights for the most capable models in the series was its alleged potential to generate misinformation.

2023. When Meta released the LLaMA model openly in 2023, multiple news outlets reported concerns that it would trigger a deluge of AI misinformation. These models were far more powerful than the GPT-2 models released by OpenAI in 2019. Yet, we have not seen evidence of large-scale voter persuasion attributed to using LLaMA or other large language models.

2024. Most recently, the widespread availability of AI image editing tools on smartphones has prompted similar concerns.

In fact, concerns about using new technology to create false information go back over a century. The late 19th and early 20th centuries saw the advent of technologies for photo retouching. This was accompanied by concerns that retouched photographs would be used to deceive people, and, in 1912, a bill was introduced in the U.S. that would have criminalized photo editing without subjects’ consent. (It died in the Senate.)

Thinking of political misinformation as a technological (or AI) problem is appealing because it makes the solution seem tractable. If only we could roll back harmful tech, we could drastically improve the information environment!

While the goal of improving the information environment is laudable, blaming technology is not a fix. Political polarization has led to greater mistrust of the media. People prefer sources that confirm their worldview and are less skeptical about content that fits their worldview. Another major factor is the drastic decline of journalism revenues in the last two decades—largely driven by the shift from traditional to social media and online advertising. But this is more a result of structural changes in how people seek out and consume information than the specific threat of misinformation shared online.

As history professor Sam Lebovic has pointed out, improving the information environment is inextricably linked to the larger project of shoring up democracy and its institutions. There’s no quick technical fix, or targeted regulation, that can “solve” our information problems. We should reject the simplistic temptation to blame AI for political misinformation and confront the gravity of the hard problem.

This essay is cross-posted to the Knight First Amendment Institute website. We are grateful to Katy Glenn Bass for her feedback.

#2023#2024#advertising#ai#AI image#AI Image Editing#ai tools#ai-generated content#American#Analysis#attention#avatars#bass#biden#california#challenge#change#chatbot#chatbots#clone#communications#content#course#Database#Deceptive AI#deepfake#deepfakes#Democracy#democratic#deploying

0 notes

Text

The Rise of AI Deepfakes: Navigating the Challenges of a Digital Illusion

In recent years, AI deepfakes have become a growing concern in both the digital and real worlds. Deepfakes use artificial intelligence and machine learning to create hyper-realistic manipulated videos, audio, and images that can convincingly depict people saying or doing things they never did. While deepfake technology has some legitimate uses, such as in entertainment, education, and filmmaking, its potential for harm has raised alarms.

The Dark Side of Deepfakes

One of the biggest dangers of deepfakes lies in their ability to spread misinformation. Malicious actors can create false videos or audio clips of public figures or even private individuals, leading to the spread of fake news, reputational damage, and political manipulation. The realism of deepfakes makes it increasingly difficult for the average person to distinguish between what’s real and what’s fabricated, contributing to a crisis of trust in online media.

Ethical Concerns

Beyond misinformation, deepfakes can also be used for more harmful purposes, such as creating explicit content without consent. This raises serious ethical questions about privacy, consent, and the potential for digital harassment. Victims of deepfake abuse can face emotional distress and long-lasting consequences in both their personal and professional lives.

Combating Deepfakes

While the risks are evident, efforts are being made to combat the misuse of deepfake technology. AI-driven tools are being developed to detect and identify manipulated media, while social media platforms are increasing their monitoring to take down harmful content. Legal frameworks are also evolving to address the creation and distribution of deepfakes, though this remains an ongoing challenge.

Conclusion

AI deepfakes represent both the incredible potential and serious risks of artificial intelligence in the digital age. As the technology continues to improve, it’s essential for society to stay vigilant, promote digital literacy, and work towards solutions that protect individuals from the harmful impacts of deepfakes, while still embracing the positive applications of AI innovation.

0 notes

Text

Tech and Human Rights - Navigating Ethical Dilemmas Part 2

As the world becomes increasingly reliant on technology and its potential for good, it’s important to remember that the use of new technologies can also undermine human rights. From authoritarian states using technology to monitor political dissidents, to the proliferation of fake news and “deepfakes” that destabilize a democratic public sphere, there are many ways in which technological innovation can have negative impacts on human rights.

Tech and Human Rights: Navigating Ethical Dilemmas is the second in a series of articles that explores how to think about human rights and technology in a complex world. This article examines how to consider the impact of technology on power, mechanisms for accountability and private authority in human rights law and practice.

The rapid growth of new technologies offers huge opportunities to advance the human rights agenda in a number of areas, such as tracking and identifying migrants using satellite data; aiding the search for evidence of rights abuses through the use of artificial intelligence to assist with image recognition; and providing forensic technology that can reconstruct crime scenes and hold perpetrators accountable. But they also pose significant challenges, such as the risk that new technologies could be used by state actors to violate rights or exacerbate inequality for people already at risk, and a lack of capacity among human rights professionals to take full advantage of these advances (AHRRC 2018).

One key challenge is that while technology moves quickly, law and human rights move very slowly. This often means that new technologies are racing out of legal control, and the societal consequences can be devastating for human rights. But it is possible for human rights experts to shape and guide the development of technology in order to ensure that its potential positive benefits outweigh the risks.

This can include ensuring that human rights considerations are built into the design process, and supporting developers to build ethical systems into their products from the ground up. It can also involve engaging with the wider community of technology workers and engineers, through initiatives such as the Association for Computing Machinery Conference on Fairness, Accountability and Transparency, to push back against the deployment of their work in ways that clearly violate human rights (Gregory 2019).

However, despite these efforts, the gap between what technologists know and how humans are actually using their products remains vast, especially when it comes to emerging technologies like blockchain, AI, machine learning and virtual reality. As a result, it is essential to continue educating and training human rights practitioners on how to harness these new tools effectively and ensure that they are being used in line with international legal frameworks that protect people’s rights, including the UN Guiding Principles on Business and Human Rights. This is an area of great opportunity, and a crucial responsibility, for all those working in this field.

1 note

Text

As much as I fucking despise Musk, and the system which creates people like Musk, this particular example of ludicrously out of touch behavior is not true.

For some genuine scumbag behavior:

The ‘free speech defender’ sharing deepfakes for political campaigning.

The ‘entrepreneur’ doing advertising for grift.

His abusive, transphobic behavior towards his own daughter.

74K notes

Text

In his spare time, Tony Eastin likes to dabble in the stock market. One day last year, he Googled a pharmaceutical company that seemed like a promising investment. One of the first search results Google served up on its news tab was listed as coming from the Clayton County Register, a newspaper in northeastern Iowa. He clicked, and read. The story was garbled and devoid of useful information—and so were all the other finance-themed posts filling the site, which had absolutely nothing to do with northeastern Iowa. “I knew right away there was something off,” he says. There’s plenty of junk on the internet, but this struck Eastin as strange: Why would a small Midwestern paper churn out crappy blog posts about retail investing?

Eastin was primed to find online mysteries irresistible. After years in the US Air Force working on psychological warfare campaigns he had joined Meta, where he investigated nastiness ranging from child abuse to political influence operations. Now he was between jobs, and welcomed a new mission. So Eastin reached out to Sandeep Abraham, a friend and former Meta colleague who previously worked in Army intelligence and for the National Security Agency, and suggested they start digging.

What the pair uncovered provides a snapshot of how generative AI is enabling deceptive new online business models. Networks of websites crammed with AI-generated clickbait are being built by preying on the reputations of established media outlets and brands. These outlets prosper by confusing and misleading audiences and advertisers alike, “domain squatting” on URLs that once belonged to more reputable organizations. The scuzzy site Eastin was referred to no longer belonged to the newspaper whose name it still traded on.

Although Eastin and Abraham suspect that the network which the Register’s old site is now part of was created with straightforward moneymaking goals, they fear that more malicious actors could use the same sort of tactics to push misinformation and propaganda into search results. “This is massively threatening,” Abraham says. “We want to raise some alarm bells.” To that end, the pair have released a report on their findings and plan to release more as they dig deeper into the world of AI clickbait, hoping their spare-time efforts can help draw awareness to the issue from the public or from lawmakers.

Faked News

The Clayton County Register was founded in 1926 and covered the small town of Elkader, Iowa, and wider Clayton County, which nestle against the Mississippi River in the state’s northeast corner. “It was a popular paper,” says former coeditor Bryce Durbin, who describes himself as “disgusted” by what’s now published at its former web address, claytoncountyregister.com. (The real Clayton County Register merged in 2020 with The North Iowa Times to become the Times-Register, which publishes at a different website. It’s not clear how the paper lost control of its web domain; the Times-Register did not return requests for comment.)

As Eastin discovered when trying to research his pharma stock, the site still brands itself as the Clayton County Register but no longer offers local news and is instead a financial news content mill. It publishes what appear to be AI-generated articles about the stock prices of public utility companies and Web3 startups, illustrated by images that are also apparently AI-generated.

“Not only are the articles we looked at generated by AI, but the images included in each article were all created using diffusion models,” says Ben Colman, CEO of deepfake detection startup Reality Defender, which ran an analysis on several articles at WIRED’s request. In addition to that confirmation, Abraham and Eastin noticed that some of the articles included text admitting their artificial origins. “It’s important to note that this information was auto-generated by Automated Insights,” some of the articles stated, name-dropping a company that offers language-generation technology.

When Eastin and Abraham examined the bylines on the Register’s former site, they found evidence that the credited writers were not actual journalists, and probably not even real people. The duo’s report notes that many writers listed on the site shared names with well-known people from other fields and had unrealistically high output.

One Emmanuel Ellerbee, credited on recent posts about bitcoin and banking stocks, shares a name with a former professional football player. When Eastin and Abraham started their investigation in November 2023, the journalist database Muck Rack showed that he had bylined an eye-popping 14,882 separate news articles in his “career,” including 50 published the day they checked. By last week, the Muck Rack profile for Ellerbee showed that output has continued apace: he’s credited with publishing 30,845 articles. Muck Rack’s CEO Gregory Galant says the company “is developing more ways to help our users discern between human-written and AI-generated content.” He points out that Ellerbee’s profile is not included in Muck Rack’s human-curated database of verified profiles.
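The “unrealistically high output” signal described above could be checked automatically along the following lines. This is only a minimal sketch: the function name, the threshold of 10 articles per day, the sample date, and the second byline are hypothetical and do not come from Eastin and Abraham’s report. Only the figure of 50 articles in a single day is taken from the account above.

```python
from collections import Counter
from datetime import date


def flag_prolific_bylines(articles, max_per_day=10):
    """Return bylines credited with more than max_per_day articles on any single day.

    articles: iterable of (author, publication_date) pairs.
    max_per_day: hypothetical cap on plausible human output; an assumption, not a standard.
    """
    per_day = Counter((author, day) for author, day in articles)
    flagged = {author for (author, _), count in per_day.items() if count > max_per_day}
    return sorted(flagged)


# Hypothetical sample data: 50 posts under one byline on one (made-up) day,
# and 3 posts under an invented second byline.
sample = [("Emmanuel Ellerbee", date(2023, 11, 15))] * 50
sample += [("Jane Doe", date(2023, 11, 15))] * 3

print(flag_prolific_bylines(sample))
```

A real check would need actual byline data and a threshold tuned to the outlet, since some legitimate writers might exceed a low cap.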

The Register’s domain appears to have changed hands in August 2023, data from analytics service Similar Web shows, around the time it began to host its current financial news churn. Eastin and Abraham used the same tool to confirm that the site was attracting most of its readership through SEO, targeting search keywords about stock purchasing to lure clicks. Its most notable referrals from social media came from crypto news forums on Reddit where people swap investment tips.

The whole scheme appears aimed at winning ad revenue from the page views of people who unwittingly land on the site’s garbled content. The algorithmic posts are garnished with ads served by Google’s ad platform. Sometimes those ads appear to be themed on financial trading, in line with the content, but others are unrelated; WIRED saw an ad for the AARP. Using Google's ad network on AI-generated posts with fake bylines could fall foul of the company's publisher policies, which forbid content that “misrepresents, misstates, or conceals” information about the creator of content. Occasionally, other sites received direct traffic from the Clayton County Register domain, including a financial brokerage service and an online ad network, suggesting its operators may have struck up other types of advertising deals.

Unknown Operator

Eastin and Abraham’s attempts to discover who now owns the Clayton County Register’s former domain were inconclusive—as were WIRED’s—but they have their suspicions. The pair found that records of its old security certificates linked the domain to a Linux server in Germany. Using the internet device search engine Shodan.io, they found that a Polish website that formerly advertised IT services appeared associated with the Clayton County Register and several other domains. All were hosted on the same German server and published strikingly similar, apparently AI-generated content. An email previously listed on the Polish site was no longer functional and WIRED’s LinkedIn messages to a man claiming to be its CEO got no reply.

One of the other sites within this wider network was Aboutxinjiang.com. When Eastin and Abraham began their investigation at the end of 2023 it was filled with generic, seemingly AI-generated financial news posts, including several about the use of AI in investing. The Internet Archive showed that it had previously served a very different purpose. Originally, the site had been operated by a Chinese outfit called “the Propaganda Department of the Party Committee of the Xinjiang Uyghur Autonomous Region,” and hosted information about universities in the country’s northwest. In 2014, though, it shuttered, and sat dormant until 2022, when its archives were replaced with Polish-language content, which was later replaced with apparently automated clickbait in English. Since Eastin and Abraham first identified the site it has gone through another transformation. Early this month it began redirecting to a page with information about Polish real estate.

Altogether, Eastin and Abraham pinpointed nine different websites linked to the Polish IT company that appeared to comprise an AI clickbait network. All the sites appeared to have been chosen because they had preestablished reputations with Google that could help win prominence in search rankings to draw clicks.

Google claims to have systems in place to address attempts to game search rankings by buying expired domains, and says that it considers using AI to create articles with the express purpose of ranking well to be spam. “The tactics described as used with these sites are largely in violation of Search’s spam policies,” says spokesperson Jennifer Kutz. Sites determined to have breached those policies can have their search ranking penalized, or be delisted by Google altogether.

Still, this type of network has become more prominent since the advent of generative AI tools. McKenzie Sadeghi, a researcher at online misinformation tracking company NewsGuard, says her team has seen an increase of more than 1,000 percent in AI-generated content farms within the past year.

WIRED recently reported on a separate network of AI-generated clickbait farms, run by Serbian DJ Nebojša Vujinović Vujo. While he was forthcoming about his motivations, Vujo did not provide granular details about how his network—which also includes former US-based local news outlets—operates. Eastin and Abraham’s work fills in some of the blanks about what this type of operation looks like, and how difficult it can be to identify who runs these moneymaking gambits. “For the most part, these are anonymously run,” Sadeghi says. “They use special services when they register domains to hide their identity.”

That’s something Abraham and Eastin want to change. They have hopes that their work might help regular people think critically about how the news they see is sourced, and that it may be instructive for lawmakers thinking about what kinds of guardrails might improve our information ecosystem. In addition to looking into the origins of the Clayton County Register’s strange transformation, the pair have been investigating additional instances of AI-generated content mills, and are already working on their next report. “I think it’s very important that we have a reality we all agree on, that we know who is behind what we’re reading,” Abraham says. “And we want to bring attention to the amount of work we’ve done just to get this much information.”

Other researchers agree. “This sort of work is of great interest to me, because it’s demystifying actual use cases of generative AI,” says Emerson Brooking, a resident fellow at the Atlantic Council’s Digital Forensic Research Lab. While there’s valid concern about how AI might be used as a tool to spread political misinformation, this network demonstrates how content mills are likely to focus on uncontroversial topics when their primary aim is generating traffic-based income. “This report feels like it is an accurate snapshot of how AI is actually changing our society so far—making everything a little bit more annoying.”

9 notes

Text

The Dark Side of Deepfakes: Challenges and Solutions

The emergence of deepfakes, which swap faces and alter voices to create hyper-realistic videos, has stirred up concern about the potential for abuse. These technologies are being used to create non-consensual porn, as well as in disinformation and fake news campaigns that can destabilize precarious peace and re-ignite frozen or low-intensity conflicts.

These new tools will increase the reach of misinformation and decrease our connection to a shared understanding of facts. They will also undermine trust in institutions that people turn to for information and increase the speed at which disinformation spreads. In addition, they will allow criminals to exploit the technology for everything from stock-market manipulation (faking corporate CEOs making false statements that send the company’s stocks soaring) to insurance fraud, where a fake video of an accident victim can trigger fraudulent payouts.

One of the most serious concerns is that deepfakes can have a psychological impact, particularly for those exposed to them on an ongoing basis. In one study, researchers found that individuals exposed to deeply manipulated content on a regular basis had lower self-esteem and were more likely to report feelings of inadequacy and anxiety than those not exposed. The authors of this study suggest that these findings could be a result of the fact that people who are exposed to deepfakes on a regular basis may feel their perceptions of themselves are continuously challenged and need reinforcement to maintain a sense of resiliency.

There are a number of ways to mitigate the effects of deepfakes, including education and the development of robust detection systems. Technology companies must continue to innovate and develop detection mechanisms, and government agencies should coordinate with these entities to keep abreast of emerging threats. Further, media literacy programs must be augmented with teaching individuals how to critically evaluate online content, which can help them spot a deepfake when they see it.

A further issue is that these new technologies present a real threat to personal privacy, as they enable individuals’ faces and voices to be used without their consent. Policymakers may need to establish laws that require a person’s permission before their image is used in a deepfake. They may also need to consider imposing “know your customer” legislation, similar to the requirements that exist in the banking industry, in order to ensure that deepfakes are not being created and distributed with stolen identities.

Finally, there are also national security concerns that must be considered. Strategic deepfakes can be used to manipulate elections, sway the political process or to discredit opposition – all of which are significant national security concerns, especially when they are created by foreign powers. This requires close collaboration between law enforcement and computer science experts to ensure that these technological tools are being employed for good and not evil. These efforts must be coordinated at the federal, state and local levels as well as within regional computer crime task forces.

1 note

Text

New Post has been published on https://www.lokapriya.com/deepfake-technology-the-potential-risks-of-future-part-i/

DeepFake Technology: The Potential Risks of Future! Part I

Imagine you are watching a video of your favorite celebrity giving a speech. You are impressed by their eloquence and charisma, and you agree with their message. But then you find out that the video was not real. It was a deepfake: synthetic media created by AI (artificial intelligence) that can manipulate the appearance and voice of anyone. You feel deceived and confused.

This is no longer a hypothetical scenario; it is now real. Deepfakes of prominent actors, celebrities, politicians, and influencers are circulating on the internet, including deepfakes of film actors such as Tom Cruise and Keanu Reeves on TikTok. Even a deepfake video of Indian PM Narendra Modi has been made.

In simple terms, deepfakes are AI-generated videos and images that can alter or fabricate the reality of people, events, and objects. This technology is a type of artificial intelligence that can create or manipulate images, videos, and audio that look and sound realistic but are not authentic. Deepfake technology is becoming more sophisticated and accessible every day. It can be used for various purposes, such as in entertainment, education, research, or art. However, it can also pose serious risks to individuals and society, such as spreading misinformation, violating privacy, damaging reputation, impersonating identity, and influencing public opinion.

In this article, I will be exploring the dangers of deepfake technology and how we can protect ourselves from its potential harm.

How is Deepfake Technology a Potential Threat to Society?

Deepfake technology is a potential threat to society because it can:

Spread misinformation and fake news that can influence public opinion, undermine democracy, and cause social unrest.

Violate privacy and consent by using personal data without permission, and creating image-based sexual abuse, blackmail, or harassment.

Damage reputation and credibility by impersonating or defaming individuals, organizations, or brands.

Create security risks by enabling identity theft, fraud, or cyber attacks.

Deepfake technology can also erode trust and confidence in the digital ecosystem, making it harder to verify the authenticity and source of information.

The Dangers and Negative Uses of Deepfake Technology

As much as there may be some positives to deepfake technology, the negatives easily overwhelm the positives in our growing society. Some of the negative uses of deepfakes include:

Deepfakes can be used to create fake adult material featuring celebrities or regular people without their consent, violating their privacy and dignity. This is possible because it has become very easy to replace one face with another and to change a voice in a video. Surprising, but true.

Deepfakes can be used to spread misinformation and fake news that can deceive or manipulate the public. Deepfakes can be used to create hoax material, such as fake speeches, interviews, or events, involving politicians, celebrities, or other influential figures.

Because deepfake technology makes face swaps and voice changes possible, it can be used to undermine democracy and social stability by influencing public opinion, inciting violence, or disrupting elections.

False propaganda can be created: fake voice messages and videos that are very hard to identify as fake can be used to influence public opinion, slander, or blackmail political candidates, parties, or leaders.

Deepfakes can be used to damage reputation and credibility by impersonating or defaming individuals, organizations, or brands. Imagine someone using the Keanu Reeves deepfake on TikTok to create fake reviews, testimonials, or endorsements involving customers, employees, or competitors.

People who do not know better are easy to convince, and if something goes wrong, the result can be damage to the actor’s reputation and a loss of trust in the actor.

Ethical, Legal, and Social Implications of Deepfake Technology

Ethical Implications

Deepfake technology can violate the moral rights and dignity of the people whose images or voices are used without their consent, such as creating fake pornographic material, slanderous material, or identity theft involving celebrities or regular people. Deepfake technology can also undermine the values of truth, trust, and accountability in society when used to spread misinformation, fake news, or propaganda that can deceive or manipulate the public.

Legal Implications

Deepfake technology can pose challenges to the existing legal frameworks and regulations that protect intellectual property rights, defamation rights, and contract rights, as it can infringe on the copyright, trademark, or publicity rights of the people whose images or voices are used without their permission.

Deepfake technology can violate the privacy rights of the people whose personal data are used without their consent. It can defame the reputation or character of the people who are falsely portrayed in a negative or harmful way.

Social Implications

Deepfake technology can have negative impacts on the social well-being and cohesion of individuals and groups, as it can cause psychological, emotional, or financial harm to the victims of deepfake manipulation, who may suffer from distress, anxiety, depression, or loss of income. It can also create social divisions and conflicts among different groups or communities, inciting violence, hatred, or discrimination against certain groups based on their race, gender, religion, or political affiliation.

Imagine having deepfake videos of world leaders declaring war, making false confessions, or endorsing extremist ideologies. That could be very detrimental to the world at large.

I am afraid that in the future, deepfake technology could be used to create more sophisticated and malicious forms of disinformation and propaganda if not controlled. It could also be used to create fake evidence of crimes, scandals, or corruption involving political opponents or activists or to create fake testimonials, endorsements, or reviews involving customers, employees, or competitors.

Read More: Detecting and Regulating Deepfake Technology: The Challenges! Part II

#ai#ai-and-deepfakes#cybersecurity#Deep Fake#DeepFake Technology#deepfakes#generative-ai#Modi#Narendra Modi#Security#social media#synthetic-media#web

0 notes

Text

The connections between AI, digital media, and democracy are multifaceted and complex, and they have both positive and negative implications for the functioning of democratic societies. Here's an analysis of these connections:

Information Dissemination:

Positive: AI algorithms can help analyze vast amounts of data from digital media sources to identify trends, patterns, and emerging issues. This can aid journalists, policymakers, and citizens in making informed decisions and promoting transparency in a democracy.

Negative: AI-powered algorithms on social media platforms can amplify misinformation and filter bubbles. These algorithms prioritize content that generates engagement, which often leads to the spread of sensationalist or polarizing content. This can erode the quality of information available to citizens and undermine the democratic process.

Personalization:

Positive: AI can personalize content delivery, tailoring news and information to individual preferences. This can enhance user experience and engagement with digital media, making it more accessible and appealing.

Negative: Personalization can create echo chambers, where individuals are exposed only to information that aligns with their existing beliefs. This can lead to confirmation bias and hinder open dialogue, which is essential for democratic deliberation.

Censorship and Surveillance:

Positive: AI can be used for content moderation to remove harmful or illegal content, such as hate speech or graphic violence, from digital media platforms. This helps maintain a safer online environment.

Negative: AI-based surveillance and censorship can be abused by governments to stifle dissent and limit freedom of expression. This poses a significant threat to democracy, as it curtails citizens' ability to voice their opinions and access diverse information.

Manipulation and Deepfakes:

Negative: AI can generate highly convincing deepfake videos and manipulate digital content. This can be used to deceive the public, create fake news, and undermine trust in digital media and democratic institutions.

Accessibility and Inclusivity:

Positive: AI can improve accessibility by providing automated transcription, translation, and other assistive technologies for digital media content. This ensures that information is available to a wider and more diverse audience, promoting democratic inclusivity.

Election Interference:

Negative: AI can be used to manipulate elections through disinformation campaigns, voter profiling, and micro-targeting. This can undermine the integrity of democratic processes and lead to outcomes that do not accurately reflect the will of the people.

Ethical Considerations:

Positive: Discussions around the ethical use of AI in digital media can lead to the development of guidelines and regulations that protect democratic values, such as transparency in algorithmic decision-making.

Negative: The lack of clear ethical standards and regulations for AI in digital media can result in unintended consequences that threaten democracy, as seen in instances of algorithmic bias or discrimination.

In conclusion, AI's role in digital media has profound implications for democracy. While it has the potential to enhance information dissemination, personalization, and accessibility, it also poses risks such as misinformation, censorship, and election interference. The impact of AI on democracy will depend on how it is developed, deployed, and regulated, making it essential to strike a balance between innovation and safeguarding democratic principles.

--------------------------------------------------------------------------------------------------------------------------------

How can AI technology be positively deployed to underpin political institutions?

AI technology has the potential to positively impact political institutions in various ways, promoting transparency, efficiency, and better decision-making. Here are several ways in which AI can be deployed to underpin political institutions:

Data Analysis and Predictive Analytics: AI can help political institutions analyze vast amounts of data, including polling data, social media sentiment, and historical election results. Predictive analytics can be used to forecast election outcomes, identify emerging issues, and gauge public opinion.

Voter Engagement: AI-powered chatbots and virtual assistants can engage with citizens to provide information about elections, candidates, and important issues. These tools can also help with voter registration and absentee ballot requests, making the electoral process more accessible.

Enhancing Policy Making: AI can assist policymakers in identifying trends and patterns in data that may inform better policy decisions. Natural language processing (NLP) algorithms can help in summarizing research papers, public comments, and legislative texts, making it easier for lawmakers to understand complex issues.

Election Security: AI can be used to enhance the security of elections by identifying and mitigating cybersecurity threats, such as hacking attempts and disinformation campaigns. Machine learning algorithms can help detect anomalies in voter registration data and voting patterns to prevent fraud.

Constituent Services: AI-powered chatbots and virtual assistants can handle routine constituent inquiries and complaints, freeing up human staff to focus on more complex issues. These tools can provide quick and efficient responses to common questions.

Redistricting: AI algorithms can assist in the redistricting process by ensuring that electoral districts are drawn fairly and without bias. By analyzing demographic data and historical voting patterns, AI can help create more representative and equitable districts.

Public Engagement and Feedback: AI can facilitate public engagement through online forums and social media. Sentiment analysis can help political institutions understand public sentiment and concerns, allowing them to respond more effectively to citizen feedback.

Resource Allocation: AI can help political campaigns and parties optimize their resource allocation by identifying key demographics and regions where they should focus their efforts to maximize impact.

Transparency and Accountability: AI can assist in monitoring campaign finance and political contributions, helping to ensure transparency and accountability in the political process.

Language Translation and Accessibility: AI-powered translation tools can make political information more accessible to citizens who speak different languages. This can help bridge language barriers and ensure that information is available to a wider audience.

Disaster Response and Crisis Management: During emergencies or natural disasters, AI can help political institutions analyze real-time data, predict the impact of disasters, and coordinate response efforts more effectively.

However, it's crucial to deploy AI technology in a way that prioritizes ethics, privacy, and fairness. Additionally, there should be transparency and accountability in the use of AI in political institutions to maintain public trust. Regular audits, data protection measures, and oversight mechanisms are essential to ensure that AI is deployed responsibly and for the benefit of society as a whole.

--------------------------------------------------------------------------------------------------------------------------------

Describe the process of audio event detection, recognition, and monitoring with AI

Audio event detection, recognition, and monitoring with AI involves the use of artificial intelligence and machine learning techniques to analyze and understand audio signals, identify specific events or patterns, and continuously monitor audio data for relevant information. This process can have various applications, including surveillance, security, environmental monitoring, and more. Here's an overview of the steps involved:

Data Collection: The process begins with the collection of audio data. This data can come from various sources, such as microphones, sensors, or audio recordings.

Data Preprocessing: Raw audio data is often noisy and may contain irrelevant information. Preprocessing steps are applied to clean and prepare the data for analysis. This can include noise reduction, filtering, and audio normalization.

Feature Extraction: Extracting relevant features from the audio data is crucial for AI models to understand and identify events. Common audio features include spectral features (e.g., Mel-frequency cepstral coefficients - MFCCs), pitch, tempo, and more. These features help represent the audio data in a format suitable for machine learning.

Machine Learning Models: AI models, such as deep neural networks (e.g., convolutional neural networks, or CNNs, and recurrent neural networks, or RNNs), often applied to spectrogram representations of the audio, are trained using labeled audio data. This training process allows the model to learn the patterns and characteristics associated with specific audio events.

Event Detection: In this stage, the trained AI model is applied to real-time or recorded audio streams. The model analyzes the audio data in segments, attempting to detect the presence of specific events or sounds. This could be anything from detecting gunshots in a security system to identifying animal sounds in environmental monitoring.

Event Recognition: Once an event is detected, the AI system can further analyze and recognize the event's nature or category. For instance, it can differentiate between different types of alarms, voices, musical instruments, or specific words in speech.

Monitoring and Alerting: The system continuously monitors the audio data and keeps track of detected and recognized events. When a relevant event is detected, the system can trigger notifications or alerts. This is especially useful in security and surveillance applications, where timely response is crucial.

Feedback and Improvement: Over time, the AI model can be fine-tuned and improved by continuously feeding it more labeled data, incorporating user feedback, and adjusting its parameters to reduce false positives and false negatives.

Post-processing: To enhance the accuracy of the system, post-processing techniques can be applied to the detected events. This may involve contextual analysis, temporal analysis, or combining audio data with other sensor data for better event understanding.

Visualization and Reporting: The results of the audio event detection and monitoring can be visualized through user interfaces or reports, making it easier for users to understand and act on the information provided by the AI system.
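The preprocessing, feature extraction, and event detection steps above can be sketched in a few lines of Python. This is only a minimal illustration under loud assumptions: it uses root-mean-square (RMS) energy and zero-crossing rate as simple stand-ins for richer features such as MFCCs, and a fixed energy threshold in place of a trained model. The sample rate, frame sizes, threshold, and all function names are hypothetical choices made for this example.

```python
import math

SAMPLE_RATE = 8000  # Hz; an assumed value for this sketch


def normalize(samples):
    """Preprocessing: peak-normalize samples into the range [-1, 1]."""
    peak = max((abs(s) for s in samples), default=0.0)
    return [s / peak for s in samples] if peak > 0 else list(samples)


def split_frames(samples, frame_len=400, hop=200):
    """Cut the signal into overlapping fixed-length frames (segments)."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, hop)]


def features(frame):
    """Feature extraction: RMS energy and zero-crossing rate of one frame."""
    rms = math.sqrt(sum(s * s for s in frame) / len(frame))
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return {"rms": rms, "zcr": crossings / (len(frame) - 1)}


def detect(samples, rms_threshold=0.3):
    """Event detection: flag each frame whose RMS energy exceeds a threshold.

    A real system would apply a trained classifier here; the threshold is
    a placeholder for that model.
    """
    frames = split_frames(normalize(samples))
    return [features(f)["rms"] > rms_threshold for f in frames]


def make_test_signal():
    """One second of near-silence with a 440 Hz tone burst from 0.5 s to 0.75 s."""
    signal = []
    for n in range(SAMPLE_RATE):
        t = n / SAMPLE_RATE
        if 0.5 <= t < 0.75:
            signal.append(math.sin(2 * math.pi * 440 * t))
        else:
            signal.append(0.01 * math.sin(2 * math.pi * 50 * t))
    return signal


signal = make_test_signal()
flags = detect(signal)
flagged = [i for i, is_event in enumerate(flags) if is_event]
print(f"{len(flags)} frames analyzed; flagged frame indices: {flagged}")
```

Only the frames that overlap the tone burst are flagged. Swapping the threshold test for a classifier's prediction would keep the same overall structure.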

Overall, audio event detection, recognition, and monitoring with AI leverage machine learning to provide real-time insights and actionable information from audio data, enabling various applications across different domains. The effectiveness of such systems depends on the quality of training data, the sophistication of AI models, and the post-processing techniques applied.
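As one illustration of the post-processing and alerting steps described above, the sketch below groups per-frame detections into events by simple temporal analysis: short gaps are bridged and very short runs are discarded as likely false positives. The function names, parameters, and the alert message format are assumptions made for this example, and the event times are approximate because they are measured in whole hops.

```python
def merge_detections(flags, hop_seconds, min_frames=2, max_gap=1):
    """Group consecutive True frames into (start_s, end_s) events.

    Gaps of up to `max_gap` False frames are bridged, and runs shorter
    than `min_frames` are dropped as likely false positives.
    """
    events = []
    start = None      # index of the first frame in the current run
    last_true = None  # index of the most recent True frame

    def close_run():
        # Keep the run only if it spans enough frames.
        if last_true - start + 1 >= min_frames:
            events.append((start * hop_seconds, (last_true + 1) * hop_seconds))

    for i, flag in enumerate(flags):
        if flag:
            if start is None:
                start = i
            last_true = i
        elif start is not None and i - last_true > max_gap:
            close_run()
            start = None
    if start is not None:
        close_run()
    return events


def build_alerts(events, min_duration):
    """Alerting: one message per event lasting at least `min_duration` seconds."""
    return [f"ALERT: event from {s:.2f}s to {e:.2f}s"
            for s, e in events if e - s >= min_duration]


# Example: an isolated one-frame blip, then a longer event with a one-frame gap.
T, F = True, False
frame_flags = [F, T, F, F, F, T, T, F, T, T, F, F, F, T]
events = merge_detections(frame_flags, hop_seconds=0.5)
for message in build_alerts(events, min_duration=1.0):
    print(message)
```

Raising `min_frames` trades missed short events for fewer false alarms, which is the same false-positive versus false-negative balance mentioned in the feedback step.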

0 notes

Text

The Reality Game by Samuel Woolley reflection

The Reality Game by Samuel Woolley gives readers an idea of the many facets of technology that could lead to the degradation of “truth” and how this impacts social and political systems.

The book discusses the prominence of technologies like bots, augmented reality techniques and deepfakes in politics and how they can affect, and already have affected, elections. For example, bots that originated from Russia spread significant disinformation during the 2016 presidential election, and emerging technology will only make such bots more effective.

The book also discussed Section 230 of the Communications Decency Act, which shields internet service providers from liability for content that users post online. This kept companies like Facebook and Reddit from being under constant legal scrutiny. However, Woolley argues that it has also kept them from taking accountability for rapidly spreading disinformation or hate speech on their sites. Similarly, I believe companies should do the morally correct thing in addition to meeting the legal requirements. For example, a few years ago Reddit removed the community r/incels and created a new policy under which communities that incite violence would be banned. This came after a man shot six people in California and it was revealed he was a prominent figure in this community. These changes show how internet service providers can do the “right” thing to protect people online even if they are not legally required to do so.

I found the portion on deepfakes very interesting and also very apt as apps like TikTok make the creation and consumption of deepfakes more widespread. For example, last year there was a series of comedic videos that sounded like President Biden and Barack Obama were having a heartfelt conversation on how difficult it is to be president. While these were very funny, the ability to mimic voices so accurately does create the risk of bad actors using this technology to spread disinformation. For example, recently a phone call was leaked that featured President Biden telling his troubled son Hunter that he loved him and was supporting him through his struggle with addiction. When I first heard this clip, I wasn’t entirely sure that it was real because I had seen previous Biden deepfakes.

Here’s an example of the comedic deepfakes people make of the former presidents:

[Embedded YouTube video]

Outside of politics, the advanced technology described in the book can create many other problems for people online. The book touches on this only briefly, but some people use images that influencers post online to create fake pornography that can damage their reputations. This actually happened pretty recently to an influencer who streams herself playing video games. The issue with this, besides the obvious, is that this sort of content has never been regulated. Unlike with revenge pornography, the influencer this happened to had no legal recourse when her image was superimposed on the inappropriate content.

Here’s the Vice article I read on this issue and the young woman’s response:

One part of the book that really intrigued me was the portion where he discussed the many items and tools that have been replaced by technology. I made a graphic reflection on this notion of tools becoming obsolete. For example, I never carry my wallet because I have Apple Pay enabled in most places. I never go to the physical library anymore because I can access free ebooks with my library card that I can read on my phone or Kindle.

After reading this book and the related readings, I did have some questions I thought would be interesting for the class.

How do we balance using AI and deepfakes for comedy when they can actually be used maliciously? To what extent do you think social media companies like TikTok should limit their AI capabilities to avoid it being used for misinformation?

The book discussed Section 230 and the protections it gives social media platforms against liability for the content posted on their sites. To what extent do you think social media users can and should demand that these sites do more than the legal minimum to monitor content?

I can't wait to discuss this in class on Friday!

0 notes

Text

Jon Potter, Partner at The RXN Group – Interview Series

New Post has been published on https://thedigitalinsider.com/jon-potter-partner-at-the-rxn-group-interview-series/

Jon Potter is a Partner and leads the State-Level AI Practice at RXN Group. He is an experienced lawyer, lobbyist, and communicator who has founded and led two industry associations and a consumer organization, and has consulted for many industries and organizations on legislative, communications and issue advocacy challenges. Jon founded the Digital Media Association, Fan Freedom, and the Application Developers Alliance, was Executive Vice President of the global communications firm Burson-Marsteller, and was a lawyer for several years with the firm of Weil, Gotshal & Manges.

As both a client and consultant, Jon has overseen federal, multistate and international advocacy campaigns and engaged lobbyists, communications firms, and law firms on three continents. Jon has testified in Congress and state legislatures several times, has spoken at dozens of conferences throughout the U.S. and internationally, and has been interviewed on national and local radio and television news programs, including CNN, Today Show, and 60 Minutes.

Can you provide an overview of the key trends in AI legislation across states in 2024?

2024 has been an extraordinary year for state-level AI legislation, marked by several trends.

The first trend is volume. 445 AI bills were introduced across 40 states, and we expect this will continue in 2025.

A second trend is a consistent dichotomy—bills about government use of AI were generally optimistic, while bills about AI generally and private sector use of AI were skeptical and fearful. Additionally, several states passed bills creating AI “task forces,” which are now meeting.

What are the main concerns driving state legislators to introduce AI bills, and how do these concerns vary from state to state?

Many legislators want government agencies to improve with AI – to deliver better services more efficiently.