#apache default indexes


idk if you do ooc zampanio asks but do you just like have a massive document with like all the info somewhere

and is it even possible to understand what’s going on

lol

the maze is, more or less, the massive document

and i gotta navigate it any time i want to remember something

the reason theres so many secrets in the literal backrooms of the maze is its so i can remember things

for example, most reference art of the mall blorbos is in TwoGayJokes/Stories/ unless its in the secret location of South's images unless its less than a year old in which case its in the bathrooms root unless its in TruthSim's root.

every so often i hafta ask ppl if i REALLY made x or just dreamed it if i have trouble finding something

10/10, no notes, ideal way to create a maze is to accidentally trap yourself in there along with everyone else

#zampanio#plus#past me is constantly playing pranks on current and future me#theres FAKE parts of the maze#you can only see if youre in the backrooms of it#to make me think things are broken when they are not#when i say backrooms#i mean the servers console#or the occasional#apache default indexes


Your Essential Guide to Python Libraries for Data Analysis

Here’s an essential guide to some of the most popular Python libraries for data analysis:

1. Pandas

- Overview: A powerful library for data manipulation and analysis, offering data structures like Series and DataFrames.

- Key Features:

- Easy handling of missing data

- Flexible reshaping and pivoting of datasets

- Label-based slicing, indexing, and subsetting of large datasets

- Support for reading and writing data in various formats (CSV, Excel, SQL, etc.)
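To make the missing-data and reshaping features concrete, here is a minimal sketch. The column names and values are invented for illustration only; it assumes pandas is installed.

```python
import pandas as pd

# Hypothetical sales records; one value is missing on purpose.
df = pd.DataFrame(
    {
        "city": ["Oslo", "Lima", "Oslo", "Lima"],
        "year": [2020, 2020, 2021, 2021],
        "sales": [10.0, None, 14.0, 9.0],
    }
)

# Missing data: replace the gap with 0.0 (one of several possible strategies).
df["sales"] = df["sales"].fillna(0.0)

# Reshaping: pivot from long format to one row per city, one column per year.
wide = df.pivot(index="city", columns="year", values="sales")

# Label-based indexing: look up a cell by row label and column label.
oslo_2021 = wide.loc["Oslo", 2021]
```

Filling with zero is only one choice; dropping the rows or interpolating may suit other datasets better.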

2. NumPy

- Overview: The foundational package for numerical computing in Python. It provides support for large multi-dimensional arrays and matrices.

- Key Features:

- Powerful n-dimensional array object

- Broadcasting functions to perform operations on arrays of different shapes

- Comprehensive mathematical functions for array operations
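As a small illustration of broadcasting, the sketch below adds a row vector and a column vector to a 2x3 matrix without writing any loops. The array values are arbitrary examples; it assumes NumPy is installed.

```python
import numpy as np

matrix = np.arange(6).reshape(2, 3)   # shape (2, 3): [[0, 1, 2], [3, 4, 5]]
row = np.array([10, 20, 30])          # shape (3,): stretched across both rows
col = np.array([[100], [200]])        # shape (2, 1): stretched across all columns

# NumPy aligns the shapes from the right and stretches size-1 dimensions.
result = matrix + row + col
```

Here `result` has shape (2, 3), the same as `matrix`, because the smaller arrays are virtually repeated to match it.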

3. Matplotlib

- Overview: A plotting library for creating static, animated, and interactive visualizations in Python.

- Key Features:

- Extensive range of plots (line, bar, scatter, histogram, etc.)

- Customization options for fonts, colors, and styles

- Integration with Jupyter notebooks for inline plotting

4. Seaborn

- Overview: Built on top of Matplotlib, Seaborn provides a high-level interface for drawing attractive statistical graphics.

- Key Features:

- Simplified syntax for complex visualizations

- Beautiful default themes for visualizations

- Support for statistical functions and data exploration

5. SciPy

- Overview: A library that builds on NumPy and provides a collection of algorithms and high-level commands for mathematical and scientific computing.

- Key Features:

- Modules for optimization, integration, interpolation, eigenvalue problems, and more

- Tools for working with linear algebra, Fourier transforms, and signal processing

6. Scikit-learn

- Overview: A machine learning library that provides simple and efficient tools for data mining and data analysis.

- Key Features:

- Easy-to-use interface for various algorithms (classification, regression, clustering)

- Support for model evaluation and selection

- Preprocessing tools for transforming data

7. Statsmodels

- Overview: A library that provides classes and functions for estimating and interpreting statistical models.

- Key Features:

- Support for linear regression, logistic regression, time series analysis, and more

- Tools for statistical tests and hypothesis testing

- Comprehensive output for model diagnostics

8. Dask

- Overview: A flexible parallel computing library for analytics that enables larger-than-memory computing.

- Key Features:

- Parallel computation across multiple cores or distributed systems

- Integrates seamlessly with Pandas and NumPy

- Lazy evaluation for optimized performance

9. Vaex

- Overview: A library designed for out-of-core DataFrames that allows you to work with large datasets (billions of rows) efficiently.

- Key Features:

- Fast exploration of big data without loading it into memory

- Support for filtering, aggregating, and joining large datasets

10. PySpark

- Overview: The Python API for Apache Spark, allowing you to leverage the capabilities of distributed computing for big data processing.

- Key Features:

- Fast processing of large datasets

- Built-in support for SQL, streaming data, and machine learning

Conclusion

These libraries form a robust ecosystem for data analysis in Python. Depending on your specific needs—be it data manipulation, statistical analysis, or visualization—you can choose the right combination of libraries to effectively analyze and visualize your data. As you explore these libraries, practice with real datasets to reinforce your understanding and improve your data analysis skills!


What are some good ways to protect a Wordpress site from hackers?

WordPress security matters to every website owner. Google blacklists more than 10,000 websites every day for malware and around 50,000 every week for phishing.

If you are serious about your website, then you need to pay attention to the WordPress security best practices. In this guide, we will share all the top WordPress security tips to help you protect your website against hackers and malware.

Steps to follow to secure a WordPress website

Keep WordPress core, themes and plugins updated: WordPress has to be updated and maintained on a regular basis. Updates can be applied automatically from the WP admin console or installed manually. Many plugins and themes are maintained by third-party developers and receive their own updates, which you can also install from the WP admin area.

Change your admin username: Change the default admin username to make brute-force attacks harder.

Disable file editing under Appearance: Once file editing is disabled, no one can edit your theme files from the admin console. To disable it, add the following to your wp-config.php file:

// Disallow file edit
define( 'DISALLOW_FILE_EDIT', true );

Strong passwords and user permissions: Attackers can easily guess or steal weak passwords. Use a strong, unique password for each of the WordPress admin area, FTP accounts, the database, the hosting account and your personal email address.
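If you would rather generate passwords than invent them, a minimal sketch using Python's standard `secrets` module could look like this. The function name, the minimum length of 12 and the character mix are assumptions chosen for illustration, not a WordPress requirement.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password containing lower, upper, digit and symbol characters."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until every character class is represented at least once.
        if (
            any(c.islower() for c in candidate)
            and any(c.isupper() for c in candidate)
            and any(c.isdigit() for c in candidate)
            and any(c in string.punctuation for c in candidate)
        ):
            return candidate
```

Generate a separate password for each account (admin, FTP, database, hosting, email) and keep them in a password manager.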

Use secure WordPress hosting: A good hosting provider takes extra measures to protect websites from common threats. Read reviews of secure WP hosting companies and select one of them for your website; it will give your site a more secure platform.

Install a WordPress backup service or plugin: Backups are your main defense after any WordPress attack, because they let you quickly restore lost data. There are free and paid plugins. For a safer site, take backups regularly and store them off-site, for example on Amazon, Dropbox or a private cloud. You can schedule backups at your convenience, and plugins like VaultPress or BackupBuddy make this easy.

Install a WordPress security plugin: A WP security plugin helps keep your business or blog website safe and secure.

Protect your WordPress admin area: You can restrict access to the admin section to your IP address only. You can do this with .htaccess, or ask your Apache administrator or web developer for help.

Limit login attempts: You can use the Login LockDown plugin to limit login attempts for the admin user.
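The idea behind limiting login attempts can be sketched in a few lines. This is not how the Login LockDown plugin is implemented; the class name, thresholds and in-memory storage are assumptions chosen for illustration.

```python
import time
from collections import defaultdict


class LoginLimiter:
    """Lock an account after too many recent failed logins (illustrative sketch)."""

    def __init__(self, max_attempts: int = 5, lockout_seconds: float = 900.0):
        self.max_attempts = max_attempts
        self.lockout_seconds = lockout_seconds
        self._failures = defaultdict(list)  # username -> failure timestamps

    def record_failure(self, username, now=None):
        now = time.time() if now is None else now
        self._failures[username].append(now)

    def record_success(self, username):
        # A successful login clears the failure history.
        self._failures.pop(username, None)

    def is_locked(self, username, now=None):
        now = time.time() if now is None else now
        # Keep only failures that are still inside the lockout window.
        recent = [t for t in self._failures[username] if now - t < self.lockout_seconds]
        self._failures[username] = recent
        return len(recent) >= self.max_attempts
```

A real site would persist the counters (for example in the database) and usually track the client IP as well as the username.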

Change the WordPress database prefix: The default WordPress database prefix is wp_. You can change it to your own prefix in wp-config.php, for example: $table_prefix = 'wp_r5466_';

Disable directory browsing and indexing: With directory browsing enabled, attackers can look through your directories for files with known vulnerabilities, and other people can see the files and images on your website. For security, it is highly recommended to turn off directory indexing and browsing. To do so, add the following line to your .htaccess file: Options -Indexes
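One rough way to tell whether a directory is still exposed is to look at the page the server returns for it. Apache's default index pages carry a title that starts with "Index of /", so a sketch of a check (the function name and sample HTML are made up for illustration) might be:

```python
import re

# Apache's auto-generated listings use a title such as "Index of /wp-content/uploads".
_INDEX_TITLE = re.compile(r"<title>\s*Index of\s+/", re.IGNORECASE)


def looks_like_directory_listing(html: str) -> bool:
    """Return True if the HTML looks like a default Apache directory index."""
    return bool(_INDEX_TITLE.search(html))


listing = "<html><head><title>Index of /wp-content/uploads</title></head></html>"
normal = "<html><head><title>My Blog</title></head></html>"
exposed = looks_like_directory_listing(listing)   # True
safe = looks_like_directory_listing(normal)       # False
```

This is only a heuristic: a customised index page or another web server may use a different title.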

Log out idle users automatically: You can use the Idle User Logout plugin to sign out inactive users.

Web | Design | Strategy

for construction and real estate companies

AS Creative Services is a Web Design firm based in Kensington, MD. We focus on Strategy, Web Design and Development, Graphic Design & Internet Marketing.

To know more, visit:

AS Creative Services | DC Metro | Web Design and Development Firm

AS Creative Services is a DC Metro based Web Design and Development Firm specializing in strategic design, development & graphic design services for Nonprofits and Associations, Construction and Property Management companies and Small to Medium Sized Businesses.

https://ascreativeservices.com/

#WordPressMaintenanceService#Wordpress#Wordpress MaintenanceServiceforsmallbusiness#wordpress websitemaintenance


Configure Commerce and Magento to use Elasticsearch

This section covers the minimum settings you must choose to test Elasticsearch with Magento 2. For more details about configuring Elasticsearch, see Elasticsearch for Magento 2.

Configure Elasticsearch within Magento

To configure Magento to use Elasticsearch:

Log in to the Magento Admin as an administrator.

Click Stores > Settings > Configuration > Catalog > Catalog > Catalog Search.

From the Search Engine list, select your Elasticsearch version.

The following table lists the required configuration options to configure and test the connection with Magento. Unless you changed Elasticsearch server settings, the defaults should work. Skip to the next step.

Elasticsearch Server Hostname- Enter the fully qualified hostname or IP address of the machine running Elasticsearch. Cloud for Adobe Commerce: Get this value from your integration system.

Elasticsearch Server Port- Enter the Elasticsearch web server proxy port. The default is 9200. Cloud for Adobe Commerce: Get this value from your integration system.

Elasticsearch Index Prefix- Enter the Elasticsearch index prefix. If you use a single Elasticsearch instance for more than one Magento installation (Staging and Production environments), you must specify a unique prefix for each installation. Otherwise, you can use the default prefix magento2.

Enable Elasticsearch HTTP Auth- Click Yes only if you enabled authentication for your Elasticsearch server. If so, provide a username and password in the provided fields.

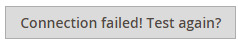

Click Test Connection.

You will see either:

Continue with:

Configure Apache and Elasticsearch

Configure nginx and Elasticsearch

or you will see:

If so, try the following:

Make sure the Elasticsearch server is running.

If the Elasticsearch server is on a different host from Magento, log in to the Magento server and ping the Elasticsearch host. Resolve network connectivity issues and test the connection again.

Examine the command window in which you started Elasticsearch for stack traces and exceptions. You must resolve those before you continue. In particular, make sure you started Elasticsearch as a user with root privileges.

Make sure that UNIX firewall and SELinux are both disabled, or set up rules to enable Elasticsearch and Magento to communicate with each other.

Verify the value of the Elasticsearch Server Hostname field. Make sure the server is available. You can try the server’s IP address instead.

Use the netstat -an | grep **listen-port** command to verify that the port specified in the Elasticsearch Server Port field is not being used by another process.

For example, to see if Elasticsearch is running on its default port, use the following command:

netstat -an | grep 9200
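If netstat is not available, a connection test gives similar information from the Magento server's side. The sketch below is an alternative to the netstat command, not a replacement for Magento's own Test Connection button; the function name and timeout are assumptions.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unresolvable hostnames.
        return False
```

For example, `is_port_open("localhost", 9200)` checks the default Elasticsearch port on the local machine. Note that this only shows that something is listening, not that it is Elasticsearch.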

Reindexing catalog search and refreshing the full page cache

After you change Magento’s Elasticsearch configuration, you must reindex the catalog search index and refresh the full page cache using the Admin or command line.

To refresh the cache using the Admin:

In the Admin, click System > Cache Management.

Select the checkbox next to Page Cache.

From the Actions list in the upper right, click Refresh. The following figure shows an example.

To clean the cache using the command line, use the magento cache:clean command.

To reindex using the command line:

Log in to your Magento server as, or switch to, the Magento file system owner.

Enter any of the following commands:

Enter the following command to reindex the catalog search index only:

bin/magento indexer:reindex catalogsearch_fulltext

Enter the following command to reindex all indexers:

bin/magento indexer:reindex

Wait until reindexing completes.

Unlike the cache, indexers are updated by a cron job. Make sure cron is enabled before you start using Elasticsearch. For more, visit our site: mirasvit


Unscatter, What Is It?

Welcome to Unscatter.com, what is it?

I think perhaps the best way to put it is, Unscatter.com is a news discovery and search engine that cares about your privacy. You can check it out if you have not already - Unscatter.com

I think the other thing to point out before I get any deeper is, Unscatter is a personal project being developed by one guy in his spare time. Currently it has no revenue generation, however I would love for it to one day at least pay for itself. Current budget for hosting and Cloudflare is just under $50 a month.

Ok, but what is it?

Unscatter.com is a searchable index of the stories people have been sharing with each other for the past 30 days. By default search results will only include stories shared in the past day. There is an option to expand your search to last week or the last 30 days.
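To picture how the day, week and 30-day options narrow a search, here is a rough sketch. It is not Unscatter's actual code; the story dictionaries, the `shared_at` field and the window names are invented for illustration.

```python
from datetime import datetime, timedelta

# Search windows described above: past day (default), last week, last 30 days.
WINDOWS = {
    "day": timedelta(days=1),
    "week": timedelta(days=7),
    "month": timedelta(days=30),
}


def filter_stories(stories, now, window="day"):
    """Keep only stories shared inside the chosen time window."""
    cutoff = now - WINDOWS[window]
    return [s for s in stories if s["shared_at"] >= cutoff]


now = datetime(2021, 5, 10, 12, 0)
stories = [
    {"title": "fresh", "shared_at": datetime(2021, 5, 10, 8, 0)},
    {"title": "this week", "shared_at": datetime(2021, 5, 6, 8, 0)},
    {"title": "older", "shared_at": datetime(2021, 4, 20, 8, 0)},
]
today = [s["title"] for s in filter_stories(stories, now)]
week = [s["title"] for s in filter_stories(stories, now, "week")]
month = [s["title"] for s in filter_stories(stories, now, "month")]
```

In a real database this filter would be a `WHERE` clause on the timestamp column rather than a Python loop.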

Stories are currently sourced from Reddit.

What about that “cares about your privacy” statement?

Unscatter doesn’t track you. I don’t use cookies and I don’t even log the ip of visitors. It’s dropped from the access logs.

Unscatter does use CloudFlare for CDN purposes, so ideally we don’t even see most requests. CloudFlare was chosen mostly for cost, Unscatter is completely self-funded right now. That means if traffic grows, most requests won’t even make it to the site.

I depend on Cloudflare for analytics. The only self-hosted analytics I do is capture the term searched for every search. It’s a 2 field table, term and timestamp. This actually was added 5/1/2021 and I have not built anything to use that information yet. I do plan to start dropping everything older than a month there as well.
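For the curious, a two-field table like that, plus the planned monthly cleanup, can be sketched as follows. This uses Python's built-in sqlite3 as a stand-in for PostgreSQL, and the table and function names are made up for illustration.

```python
import sqlite3
from datetime import datetime, timedelta


def open_store():
    """Create an in-memory table with just a term and a timestamp."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE searches (term TEXT NOT NULL, searched_at TEXT NOT NULL)")
    return conn


def record_search(conn, term, when):
    # No IP address, cookie or user id is stored, only the term and the time.
    conn.execute(
        "INSERT INTO searches (term, searched_at) VALUES (?, ?)",
        (term, when.isoformat(timespec="seconds")),
    )


def purge_old(conn, now, keep_days=30):
    """Delete rows older than keep_days and return how many were removed."""
    cutoff = (now - timedelta(days=keep_days)).isoformat(timespec="seconds")
    cur = conn.execute("DELETE FROM searches WHERE searched_at < ?", (cutoff,))
    return cur.rowcount
```

ISO-formatted timestamps sort correctly as text, which is why the cutoff comparison works on the stored strings.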

The full privacy policy is posted here https://www.unscatter.com/privacypolicy.

Why only Reddit?

Truth is, I would love to get more sources. I think only pulling data from reddit means I am pulling from the reddit communities echo chamber, especially because I’m letting reddit tell me what the top 100 stories are.

My goal is to eventually try to find more sources. I started with reddit because their API and PRAW SDK made it extremely easy to get a data set I could work with.

I am currently working on integration with Twitter to add it as an additional source for content. I’m open to suggestions for more sites to integrate with, feel free to hit me up on Twitter @joerussbowman or @unscatter.

I think the one important distinction to make is the focus of Unscatter is I’m trying to find and rank stories people are sharing. I’m not interested in finding and crawling all the news sites on the Internet to end up with a database of stories people may not be interested in. Sourcing from link sharing sites and social media is the goal.

What technology is Unscatter using?

I’ll do more articles later on the technology I’m using for Unscatter. I’d say one of the primary purposes of the site has been to create opportunity for me to learn new things and practice existing skills.

The database for Unscatter which also does the full text search is PostgreSQL. I recently discovered CouchBase, I may try it out to see how well I like it compared to Postgres, but I haven’t spent enough time researching it yet. The Unscatter agent is written in python. This was a no-brainer decision, it gives me immediate access to PRAW for working with reddit and also spacy and newspaper for text analysis.

Unscatter.com, the website is apache acting as proxy for a custom application written in Go. And I’d like to point out Let’s Encrypt was the final push to getting me started building the site. When I had been dreaming about building the site in the past paying for SSL certs was always one barrier to getting started.

Wrapping up

I think this is good for a first post. I’m open to questions and comments. Would love to hear any suggestions and hey, if you find this site at all useful I’d really love to hear that too. I use it every day, I post stories I find on it regularly to Facebook, Twitter and Instagram.

Thanks for taking the time to get all the way to the bottom of this blog post.


Thinking Any Of These 10 Misconceptions Concerning Moviebox.Apk Maintains You From Expanding


He left Google in August 2013 to join Chinese phone maker Xiaomi. Less than six months earlier, Google's then-CEO Larry Page announced in a blog post that Andy Rubin had moved from the Android division to take on new projects at Google, and that Sundar Pichai would become the new Android lead. Pichai himself would eventually switch positions, becoming the new CEO of Google in August 2015 following the company's restructure into the Alphabet conglomerate, making Hiroshi Lockheimer the new head of Android. Since 2008, Android has seen numerous updates which have incrementally improved the operating system, adding new features and fixing bugs in previous releases. Each major release is named in alphabetical order after a dessert or sugary treat, with the first few Android versions being called "Cupcake", "Donut", "Eclair", and "Froyo", in that order.

Android comes preinstalled on a few laptops (a similar capability of running Android applications is also available in Google's Chrome OS) and can also be installed on personal computers by end users. On those platforms Android provides additional functionality for physical keyboards and mice, together with the "Alt-Tab" key combination for switching applications quickly with a keyboard.

Android (operating system).

In addition, Google announced a new "target API level requirement" (targetSdkVersion in the manifest) of at least Android 8.0 (API level 26) for all new apps and app updates. The API level requirement could combat the practice of app developers bypassing some permission screens by specifying early Android versions that had a coarser permission model.

Although your phone may say it's encrypted, technically it isn't fully secured until you set your own PIN/password/pattern at startup via your phone's settings. Encrypting with your own password is the most secure option. To see which version of Duo Mobile is installed on your device, go to the Android Settings menu, tap Applications, then scroll down and tap Duo Mobile.

In May 2012, the jury in this case found that Google did not infringe on Oracle's patents, and the trial judge ruled that the structure of the Java APIs used by Google was not copyrightable. The parties agreed to zero dollars in statutory damages for a small amount of copied code.

By the fourth quarter of 2010, its worldwide share had grown to 33% of the market, becoming the top-selling smartphone platform, overtaking Symbian. In the United States it became the top-selling platform in April 2011, overtaking BlackBerry OS with a 31.2% smartphone share, according to comScore.

In some cases it may not be possible to deny certain permissions to pre-installed apps, nor be possible to disable them. The Google Play Services app cannot be uninstalled, nor disabled.

OEMs will no longer be barred from selling any device running incompatible versions of Android in Europe. Android has a growing selection of third-party applications, which users can acquire by downloading and installing the application's APK (Android application package) file, or by downloading them using an application store program that allows users to install, update, and remove applications from their devices. Google Play Store is the primary application store installed on Android devices that comply with Google's compatibility requirements and license the Google Mobile Services software. Google Play Store allows users to browse, download and update applications published by Google and third-party developers; as of July 2013, there were more than one million applications available for Android in Play Store, and 50 billion applications had been installed.

Duo Mobile's dark theme depends on your Android system settings. If your device has the system-wide dark setting enabled, Duo Mobile automatically switches to the dark theme. Fingerprint verification: Duo Mobile 3.10 and up also supports fingerprint verification for Duo Push-based logins as an additional layer of security to verify your user identity.

In January 2014, Google unveiled a framework based on Apache Cordova for porting Chrome HTML 5 web applications to Android, wrapped in a native application shell. Applications ("apps"), which extend the functionality of devices, are written using the Android software development kit (SDK) and, often, the Java programming language. Java may be combined with C/C++, together with a choice of non-default runtimes that allow better C++ support. The Go programming language is also supported, although with a limited set of application programming interfaces (API). In May 2017, Google announced support for Android app development in the Kotlin programming language.


hydralisk98′s web projects tracker:

Core principles=

Fail faster

‘Learn, Tweak, Make’ loop

This is meant to be a quick reference for tracking progress made over my various projects, organized by their “ultimate target” goal:

(START)

(Website)=

Install Firefox

Install Chrome

Install Microsoft newest browser

Install Lynx

Learn about contemporary web browsers

Install a very basic text editor

Install Notepad++

Install Nano

Install Powershell

Install Bash

Install Git

Learn HTML

Elements and attributes

Commenting (single line comment, multi-line comment)

Head (title, meta, charset, language, link, style, description, keywords, author, viewport, script, base, url-encode, )

Hyperlinks (local, external, link titles, relative filepaths, absolute filepaths)

Headings (h1-h6, horizontal rules)

Paragraphs (pre, line breaks)

Text formatting (bold, italic, deleted, inserted, subscript, superscript, marked)

Quotations (quote, blockquote, abbreviations, address, cite, bidirectional override)

Entities & symbols (&entity_name, &entity_number, &nbsp;, useful HTML character entities, diacritical marks, mathematical symbols, greek letters, currency symbols)

Id (bookmarks)

Classes (select elements, multiple classes, different tags can share same class, )

Blocks & Inlines (div, span)

Computercode (kbd, samp, code, var)

Lists (ordered, unordered, description lists, control list counting, nesting)

Tables (colspan, rowspan, caption, colgroup, thead, tbody, tfoot, th)

Images (src, alt, width, height, animated, link, map, area, usemap, picture, picture for format support)

old fashioned audio

old fashioned video

Iframes (URL src, name, target)

Forms (input types, action, method, GET, POST, name, fieldset, accept-charset, autocomplete, enctype, novalidate, target, form elements, input attributes)

URL encode (scheme, prefix, domain, port, path, filename, ascii-encodings)

Learn about oldest web browsers onwards

Learn early HTML versions (doctypes & permitted elements for each version)

Make a 90s-like web page compatible with as much early web formats as possible, earliest web browsers’ compatibility is best here

Learn how to teach HTML5 features to most if not all older browsers

Install Adobe XD

Register an account at Figma

Learn Adobe XD basics

Learn Figma basics

Install Microsoft’s VS Code

Install my Microsoft’s VS Code favorite extensions

Learn HTML5

Semantic elements

Layouts

Graphics (SVG, canvas)

Track

Audio

Video

Embed

APIs (geolocation, drag and drop, local storage, application cache, web workers, server-sent events, )

HTMLShiv for teaching older browsers HTML5

HTML5 style guide and coding conventions (doctype, clean tidy well-formed code, lower case element names, close all html elements, close empty html elements, quote attribute values, image attributes, space and equal signs, avoid long code lines, blank lines, indentation, keep html, keep head, keep body, meta data, viewport, comments, stylesheets, loading JS into html, accessing HTML elements with JS, use lowercase file names, file extensions, index/default)

Learn CSS

Selections

Colors

Fonts

Positioning

Box model

Grid

Flexbox

Custom properties

Transitions

Animate

Make a simple modern static site

Learn responsive design

Viewport

Media queries

Fluid widths

rem units over px

Mobile first

Learn SASS

Variables

Nesting

Conditionals

Functions

Learn about CSS frameworks

Learn Bootstrap

Learn Tailwind CSS

Learn JS

Fundamentals

Document Object Model / DOM

JavaScript Object Notation / JSON

Fetch API

Modern JS (ES6+)

Learn Git

Learn Browser Dev Tools

Learn your VS Code extensions

Learn Emmet

Learn NPM

Learn Yarn

Learn Axios

Learn Webpack

Learn Parcel

Learn basic deployment

Domain registration (Namecheap)

Managed hosting (InMotion, Hostgator, Bluehost)

Static hosting (Netlify, Github Pages)

SSL certificate

FTP

SFTP

SSH

CLI

Make a fancy front end website about

Make a few Tumblr themes

===You are now a basic front end developer!

Learn about XML dialects

Learn XML

Learn about JS frameworks

Learn jQuery

Learn React

Context API with Hooks

NEXT

Learn Vue.js

Vuex

NUXT

Learn Svelte

NUXT (Vue)

Learn Gatsby

Learn Gridsome

Learn Typescript

Make an epic front end website about

===You are now a front-end wizard!

Learn Node.js

Express

Nest.js

Koa

Learn Python

Django

Flask

Learn GoLang

Revel

Learn PHP

Laravel

Slim

Symfony

Learn Ruby

Ruby on Rails

Sinatra

Learn SQL

PostgreSQL

MySQL

Learn ORM

Learn ODM

Learn NoSQL

MongoDB

RethinkDB

CouchDB

Learn a cloud database

Firebase, Azure Cloud DB, AWS

Learn a lightweight & cache variant

Redis

SQLite

NeDB

Learn GraphQL

Learn about CMSes

Learn Wordpress

Learn Drupal

Learn Keystone

Learn Enduro

Learn Contentful

Learn Sanity

Learn Jekyll

Learn about DevOps

Learn NGINX

Learn Apache

Learn Linode

Learn Heroku

Learn Azure

Learn Docker

Learn testing

Learn load balancing

===You are now a good full stack developer

Learn about mobile development

Learn Dart

Learn Flutter

Learn React Native

Learn Nativescript

Learn Ionic

Learn progressive web apps

Learn Electron

Learn JAMstack

Learn serverless architecture

Learn API-first design

Learn data science

Learn machine learning

Learn deep learning

Learn speech recognition

Learn web assembly

===You are now an epic full stack developer

Make a web browser

Make a web server

===You are now a legendary full stack developer

[...]

(Computer system)=

Learn to execute and test your code in a command line interface

Learn to use breakpoints and debuggers

Learn Bash

Learn fish

Learn Zsh

Learn Vim

Learn nano

Learn Notepad++

Learn VS Code

Learn Brackets

Learn Atom

Learn Geany

Learn Neovim

Learn Python

Learn Java?

Learn R

Learn Swift?

Learn Go-lang?

Learn Common Lisp

Learn Clojure (& ClojureScript)

Learn Scheme

Learn C++

Learn C

Learn B

Learn Mesa

Learn Brainfuck

Learn Assembly

Learn Machine Code

Learn how to manage I/O

Make a keypad

Make a keyboard

Make a mouse

Make a light pen

Make a small LCD display

Make a small LED display

Make a teleprinter terminal

Make a medium raster CRT display

Make a small vector CRT display

Make larger LED displays

Make a few CRT displays

Learn how to manage computer memory

Make datasettes

Make a datasette deck

Make floppy disks

Make a floppy drive

Learn how to control data

Learn binary base

Learn hexadecimal base

Learn octal base

Learn registers

Learn timing information

Learn assembly common mnemonics

Learn arithmetic operations

Learn logic operations (AND, OR, XOR, NOT, NAND, NOR, NXOR, IMPLY)

Learn masking

Learn assembly language basics

Learn stack construct’s operations

Learn calling conventions

Learn to use Application Binary Interface or ABI

Learn to make your own ABIs

Learn to use memory maps

Learn to make memory maps

Make a clock

Make a front panel

Make a calculator

Learn about existing instruction sets (Intel, ARM, RISC-V, PIC, AVR, SPARC, MIPS, Intersil 6120, Z80...)

Design an instruction set

Compose an assembler

Compose a disassembler

Compose an emulator

Write a B-derivative programming language (somewhat similar to C)

Write an IPL-derivative programming language (somewhat similar to Lisp and Scheme)

Write a general markup language (like GML, SGML, HTML, XML...)

Write a Turing tarpit (like Brainfuck)

Write a scripting language (like Bash)

Write a database system (like VisiCalc or SQL)

Write a CLI shell (basic operating system like Unix or CP/M)

Write a single-user GUI operating system (like Xerox Star’s Pilot)

Write a multi-user GUI operating system (like Linux)

Write various software utilities for my various OSes

Write various games for my various OSes

Write various niche applications for my various OSes

Implement an awesome model in very large scale integration, like the Commodore CBM-II

Implement an epic model in integrated circuits, like the DEC PDP-15

Implement a modest model in transistor-transistor logic, similar to the DEC PDP-12

Implement a simple model in diode-transistor logic, like the original DEC PDP-8

Implement a simpler model in later vacuum tubes, like the IBM 700 series

Implement simplest model in early vacuum tubes, like the EDSAC

[...]

(Conlang)=

Choose sounds

Choose phonotactics

[...]

(Animation ‘movie’)=

[...]

(Exploration top-down ’racing game’)=

[...]

(Video dictionary)=

[...]

(Grand strategy game)=

[...]

(Telex system)=

[...]

(Pen&paper tabletop game)=

[...]

(Search engine)=

[...]

(Microlearning system)=

[...]

(Alternate planet)=

[...]

(END)


Php training course

PHP Course Overview

PHP is a widely-used general-purpose scripting language that is especially suited for Web development and can be embedded into HTML.

PHP can generate the dynamic page content

PHP can create, open, read, write, and close files on the server

PHP can collect form data

PHP can send and receive cookies

PHP can add, delete, modify data in your database

PHP can restrict user access to some pages on your website

PHP can encrypt data

With PHP you are not limited to outputting HTML. You can output images, PDF files, and even Flash movies. You can also output any text, such as XHTML and XML.

PHP Training Course Prerequisite

HTML

CSS

JavaScript

Objectives of the Course

PHP runs on different platforms (Windows, Linux, Unix, Mac OS X, etc.)

PHP is compatible with almost all servers used today (Apache, IIS, etc.)

PHP has support for a wide range of databases

PHP is free. Download it from the official PHP resource: www.php.net

PHP is easy to learn and runs efficiently on the server-side

PHP Training Course Duration

45 working days, 1 hour 30 minutes daily

PHP Training Course Overview

An Introduction to PHP

History of PHP

Versions and Differences between them

Practicality

Power

Installation and configuring Apache and PHP

PHP Basics

Default Syntax

Styles of PHP Tags

Comments in PHP

Output functions in PHP

Datatypes in PHP

Configuration Settings

Error Types

Variables in PHP

Variable Declarations

Variable Scope

PHP’s Superglobal Variables

Variable Variables

Constants in PHP

Magic Constants

Standard Pre-defined Constants

Core Pre-defined Constants

User-defined Constants

Control Structures

Execution Control Statements

Conditional Statements

Looping Statements with Real-time Examples

Functions

Creating Functions

Passing Arguments by Value and Reference

Recursive Functions

Arrays

What is an Array?

How to create an Array

Traversing Arrays

Array Functions

Include Functions

Include, Include_once

Require, Require_once

Regular Expressions

Validating text boxes, emails, phone numbers, etc.

Creating custom regular expressions

Object-Oriented Programming in PHP

Classes, Objects, Fields, Properties, __set(), Constants, Methods

Encapsulation

Inheritance and types

Polymorphism

Constructor and Destructor

Static Class Members, instanceof Keyword, Helper Functions

Object Cloning and Copy

Reflections

PHP with MySQL

What is MySQL

Integration with MySQL

MySQL functions

Gmail Data Grid options

SQL Injection

Uploading and downloading images in Database

Registration and Login forms with validations

Paging, Sorting, …

Strings and Regular Expressions

Declarations styles of String Variables

Heredoc style

String Functions

Regular Expression Syntax (POSIX)

PHP’s Regular Expression Functions (POSIX Extended)

Working with the Files and Operating System

File Functions

Open, Create and Delete files

Create Directories and Manipulate them

Information about Hard Disk

Directory Functions

Calculating File, Directory and Disk Sizes

Error and Exception Handling

Error Logging

Configuration Directives

PHP’s Exception Class

Throw New Exception

Custom Exceptions

Date and Time Functions

Authentication

HTTP Authentication

PHP Authentication

Authentication Methodologies

Cookies

Why Cookies

Types of Cookies

How to Create and Access Cookies

Sessions

Session Variables

Creating and Destroying a Session

Retrieving and Setting the Session ID

Encoding and Decoding Session Data

Auto-Login

Recently Viewed Document Index

Web Services

Why Web Services

RSS Syntax

SOAP

How to Access Web Services

XML Integration

What is XML

Create an XML file from PHP with Database records

Reading Information from XML File

MySQL Concepts

Introduction

Storage Engines

Functions

Operators

Constraints

DDL commands

DML Commands

DCL Commands

TCL Commands

Views

Joins

Cursors

Indexing

Stored Procedures

MySQL with PHP Programming

MySQL with SQL Server (Optional)

SPECIAL DELIVERY

Protocols

HTTP Headers and types

Sending Mails using PHP

Email with Attachment

File Uploading and Downloading using Headers

Implementing Chat Applications using PHP and Ajax

SMS Gateways and sending SMS to Mobiles

Payment gateways and How to Integrate them

With Complete

MVC Architecture

DRUPAL

JOOMLA

WordPress

AJAX

CSS

JQUERY (Introduction and few plugins only)

How can I run a Java app on Apache without Tomcat?

Apache Solr is a popular enterprise-level search platform used widely by popular websites such as Reddit, Netflix, and Instagram. The reason for its popularity is its well-built text search, faceted search, real-time indexing, dynamic clustering, and easy integration. Apache Solr helps build high-level search options for websites containing high volumes of data.

Java, being one of the most useful and important languages, has gained a worldwide reputation for creating customized web applications. Prominent Pixel has been harnessing the power of Java web development, managing open source Java-based systems all over the globe. Java allows developers to build unique web applications in less time and at lower expense.

Prominent Pixel provides customized Java web development services and Java application development services that meet client requirements and business needs. Being a leading Java web application development company in India, we have delivered the best services to thousands of clients throughout the world.

For its advanced security and stable nature, Java has been used worldwide in web and business solutions. Prominent Pixel is one of the best Java development companies in India, with extensive experience in providing software services. Our Java programming services are exceptional and well suited to the requirements of our clients and their websites. Prominent Pixel aims to provide Java software development services at a reasonable price to small, medium, and large-scale companies.

We have dealt with clients from diverse sectors, such as automotive, banking, finance, healthcare, insurance, media, retail and e-commerce, entertainment, lifestyle, real estate, education, and much more. We at Prominent Pixel support our clients from the start to the end of the project.

Being the leading Java development company in India for years, success has become quite common to us. We always strive to improve our standards to give clients more and better than they expect. Though Java helps in developing various apps, it uses complex methodology which definitely needs an expert. At Prominent Pixel, we have an expert Java software development team with several years of experience.

Highly sophisticated web and mobile applications can be created with the Java programming language, and once the code is written it can be used anywhere, which is the best feature of Java. Java is everywhere, and so is the compatibility of Java apps. The cost of developing a web or mobile application in Java is also very low, which is the reason people choose it over other programming languages.

It is not an easy task to manage a large amount of data in one place without Big Data technology. Whether the data comes from a desktop or a sensor, the transition can be done very effectively using Big Data. So, if you think your company requires Big Data development services, you should choose a company that offers strong processing capabilities and authentic skills.

Prominent Pixel is one of the best Big Data consulting companies that offer excellent Big Data solutions. The exceptional growth in volume, variety, and velocity of data made it necessary for various companies to look for Big Data consulting services. We, at Prominent Pixel, enable faster data-driven decision making using our vast experience in data management, warehousing, and high volume data processing.

Cloud DevOps development services are required to cater to the cultural and ethical requirements of different teams of a software company. All the hurdles caused by the separation of development, QA, and IT teams can be resolved easily using Cloud DevOps. It also amplifies the delivery cycle by supporting development and testing to release the product on the same platform. Though Cloud and DevOps are independent, together they have the ability to add value to the business through IT.

Prominent Pixel is the leading Cloud DevOps service provider in India and has also recently started working on projects abroad. Steady development and continuous delivery of the product are possible through DevOps consulting services, and Prominent Pixel can provide such services. Keeping the multiple phases of the life cycle more connected is another benefit of Cloud DevOps, and this can be done efficiently with the best tools we have at Prominent Pixel.

Our Cloud DevOps development services ensure end-to-end delivery through continuous integration and development through the best cloud platforms. With our DevOps consulting services, we help small, medium, and large-scale enterprises align their development and operation to ensure higher efficiency and faster response to build and market high-quality software.

Prominent Pixel Java developers are here to hire! Prominent Pixel is one of the top Java development service providers in India. We have a team of dedicated Java programmers who are skilled in the latest Java frameworks and technologies that fulfill business needs. We also offer tailored Java website development services depending on the requirements of your business model. Our comprehensive Java solutions help our clients drive business value with innovation and effectiveness.

With years of experience in providing Java development services, Prominent Pixel has developed technological expertise that improves your business growth. Our Java web application programmers observe and understand the business requirements in a logical manner, which helps in creating robust, creative, and unique web applications.

Besides experience, you can hire our Java developers for their creative thinking, efforts, and unique approach to every single project. Over the years, we have seen an increase in the number of clients seeking Java development services, and now we have satisfied and happy customers all over the world. Our team of Java developers follows agile methodologies that ensure effective communication and complete transparency.

At Prominent Pixel, our dedicated Java website development team excels in providing integrated and customized solutions built on Java technologies. Our Java web application programmers have the capability to build robust and secure apps that enhance productivity and bring high traffic. Hiring Java developers from a successful company gives you the chance to get the right suggestion for your Java platform to finalize the architecture design.

Prominent Pixel has top Java developers that you can hire in India, and now many clients from other countries are also hiring our experts for their proven skills and excellence in their work. Our Java development team also has experience in formulating business-class Java products that increase productivity. You will also be given the option to choose a flexible engagement model if you want, or our developers will do it for you to avoid further complications.

With many new revolutions in the technology world, the Java platform gained a new and popular framework, the Spring Framework. To avoid the complexity of this framework in the development phase, the Spring team introduced Spring Boot, which is regarded as an important milestone of the Spring Framework. So, do you think your business needs an expert Spring Boot developer to help you? Here is an overview of Spring Boot to help you decide how our dedicated Spring Boot developers can support you with our services.

Spring Boot is a framework designed to simplify the bootstrapping of new Spring applications. You can start new Spring projects in no time with default code and configuration. Spring Boot also saves a lot of development time and increases productivity. In the rapid application development field, Spring Boot spares developers boilerplate configuration. Hire a Spring Boot developer from Prominent Pixel to get stand-alone Spring applications right now!

Spring IO Platform has complex XML configuration with poor management, and so Spring Boot was introduced for XML-free development, which can be done very easily. With the help of Spring Boot, developers can even be free from writing import statements. Thanks to the simplicity of the framework and its runnable web applications, Spring Boot has gained much popularity in no time.

At Prominent Pixel, our developers can develop and deliver matchless Spring applications in a given time frame. With years of experience in development and knowledge of various advanced technologies, our team of dedicated Spring Boot developers can provide matchless Spring applications that fulfill your business needs. So, hire Java Spring Boot developers from Prominent Pixel to get default imports and configuration, which internally use some powerful Groovy-based techniques and tools.

Our Spring Boot developers also help combine existing frameworks into some simple annotations. Spring Boot also changes the Java-based application model to a whole new model. But all of this needs a Spring Boot professional, and you can find many of them at Prominent Pixel. At Prominent Pixel, we always hire top and experienced Spring Boot developers so that we can guarantee our clients excellent services.

So, hire dedicated Spring Boot developers from Prominent Pixel to get an amazing website that is beyond comparison. Our developers have managed the complete software cycle, from the smallest requirements to deployment, and will take care of your project from beginning to end. Rapid application development is the primary goal of almost all online businesses today, which can only be fulfilled by top service providers like Prominent Pixel.

Enhance your Business with Solr

If you have a website with a large number of documents, you need a good content management architecture to manage search functionality. Whether it is an e-commerce portal with numerous products or a website with thousands of pages of lengthy content, it is hard to find exactly what you want. This is where Solr integrated with a content management system helps with fast and effective document search. At Prominent Pixel, we offer comprehensive consulting services for Apache Solr for eCommerce websites, content-based websites, and internal enterprise-level content management systems.

Prominent Pixel has a team of expert and experienced Apache Solr developers who have worked on several Solr projects to date. Our developers will power up your enterprise search with the flexible features of Solr. Our Solr services are specially designed for eCommerce websites, content-based websites and enterprise-level websites. Also, our dedicated Apache Solr developers create new websites with Solr-integrated content architecture.

Elasticsearch is a search engine based on Lucene that provides a distributed, multitenant-capable full-text search engine with an HTTP web interface and schema-free JSON documents. At Prominent Pixel, we have a team of dedicated Elasticsearch developers who have worked with hundreds of clients worldwide, providing them with a variety of services.

Before going into the production stage, design and data modeling are two important steps that need care. Our Elasticsearch developers will help you choose the right design and assist with data modeling before getting into the actual work.

Though there are tons of Elasticsearch developers available online, only a few of them have experience and expertise, and Prominent Pixel developers are among them. Our Elasticsearch developers will accelerate your progress in your Elastic journey no matter where you are. Whether you are facing technical issues, business problems, or any other problems, our professional developers will take care of everything.

All your queries about your data and strategies will be answered by our Elasticsearch developers. With in-depth technical knowledge, they will help you realize possibilities that improve search results, push past plateaus, and find new and unique solutions with Elastic Stack and X-Pack. Finally, we build a relationship, understand all the business and technical problems, and solve them in no time.

There are thousands of Big Data development companies who can provide services at a low price. Then why us? We have a reason for you. Prominent Pixel is a company that was established a few years ago but has gained huge success through its dedication to work. In a short span of time, we have worked on more than a thousand Big Data projects and all of them were successful.

Every one of our past clients will choose us again with confidence, remembering the work we have done for them! At Prominent Pixel, we always hire top senior Big Data Hadoop developers who can resolve clients' issues within a given time frame. So, if you are choosing Prominent Pixel for your Big Data project, then you are in safe hands.

These days, every business needs easy access to data for exponential growth, and for that we definitely need an emerging digital Big Data solution. Handling a great volume of data has become a challenge even for many Big Data analysts, as it needs expertise and experience. At Prominent Pixel, you can hire an expert Hadoop Big Data developer who can help you handle a large amount of data by analyzing your business through extensive research.

Massive data needs best practices to be handled carefully so that no information is lost. Identifying the right technique to manage Big Data is the key factor in developing the perfect strategy to help your business grow. So, to manage your large data using the best strategy and practices, you can hire a top senior Big Data Hadoop developer from Prominent Pixel who will never disappoint you. For the past few years, Prominent Pixel has become a revolution by focusing on quality of work. With a team of certified Big Data professionals, we have never compromised on providing quality services at a reasonable price.

Being one of the leading Big Data service providers in India, we assist our customers in coming up with the best strategy that suits their business and gives a positive outcome. Our dedicated Big Data developers will help you select the suitable technology and tools to achieve your business goals. Depending on the technology our customer is using, our Big Data Hadoop developers will offer vendor-neutral recommendations.

At Prominent Pixel, our Hadoop Big Data developers will establish the best-suited modern architecture that encourages greater efficiency in everyday processes. The power of Big Data can improve business outcomes, and to make the best use of Big Data, you should hire a top senior Big Data Hadoop developer from Prominent Pixel.

The best infrastructure model is also required to achieve business goals; meanwhile, sound deployment of Big Data technologies is also necessary. At Prominent Pixel, our Big Data analysts will manage both at the same time. You can hire Big Data analytics solutions from Prominent Pixel to simplify the integration and installation of Big Data infrastructure by eliminating complexity.

At Prominent Pixel, we have experts in all related fields, and the same goes for Hadoop. You can hire a dedicated Hadoop developer from Prominent Pixel to solve your analytical challenges, which include complex Big Data issues. The expertise of our Hadoop developers is far beyond your thinking as they will always provide more than what you want.

With vast experience in Big Data, our Hadoop developers will provide the analytical solution to your business very quickly with no flaws. We can also solve the complexities of misconfigured Hadoop clusters by developing new ones. Through the rapid implementation, our Hadoop developers will help you derive immense value from Big Data.

You can also hire our dedicated Hadoop developer to build scalable and flexible solutions for your business and everything comes for an affordable price. Till now, our developers worked on thousands of Hadoop projects where the platform can be deployed onsite or in the cloud so that organizations can deploy Hadoop with the help of technology partners. In this case, the cost of hardware acquisition will be reduced which benefits our clients.

Apache Spark is an open-source framework for large-scale data processing. At Prominent Pixel, we have expert Spark developers for hire, and they will help you achieve high performance for both batch and streaming data. With years of experience in handling Apache Spark projects, we integrate Apache Spark to meet the needs of the business by utilizing its unique capabilities and facilitating user experience. Hire senior Apache Spark developers from Prominent Pixel right now!

Spark helps you simplify the tough task of processing high volumes of real-time data. This work can only be managed effectively by senior Spark developers, many of whom you can find at Prominent Pixel. Our experts will also make high performance possible using batch and data streaming.

Our team of experts will explore the features of Apache Spark for data management and Big Data requirements to help your business grow. Our Spark developers will help you with user profiling and recommendation to utilize the high potential of your Apache Spark application.

A well-built configuration is required for in-memory processing, which is a distinctive feature of Spark over other frameworks, and it definitely needs an expert Spark developer. So, to get uninterrupted services, you can hire a senior Apache Spark developer from Prominent Pixel.

With more than 14 years of experience, our Spark developers can manage your projects with ease and effectiveness. You can hire senior Apache Spark SQL developers from Prominent Pixel who can develop and deliver tailor-made Spark-based analytic solutions as per the business needs. Our senior Apache Spark developers will support you in all the stages until the project is handed over to you.

At Prominent Pixel, our Spark developers can efficiently handle the challenges that are often encountered while working on a project. Our senior Apache Spark developers excel in all the Big Data technologies, but they are excited to work on Apache Spark because of its features and benefits.

We have experienced and expert Apache Spark developers who have worked extensively on data science projects using MLlib on sparse data formats stored in HDFS. Also, they have practised handling projects across multiple tools, which bring a range of benefits. If you want to build an advanced analytics platform using Spark, then Prominent Pixel is the right place for you!

At Prominent Pixel, our experienced Apache Spark developers are able to maintain a Spark cluster as a central component in bioinformatics, data integration, analysis, and prediction pipelines. Our developers also have the analytical ability to design predictive models and recommendation systems for marketing automation.

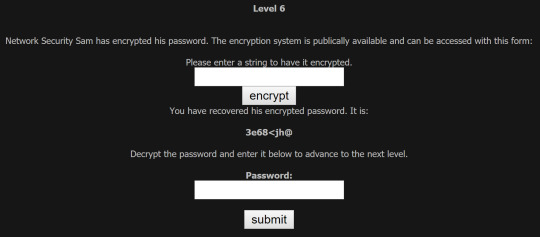

Hack This Site - Basic #6-#11

Reflection

The 6th mission was more of a puzzle than an exploit in the HTML. It relied on testing a number of inputs to determine if there were any obvious vulnerabilities in the encryption; had it been more complex, I might have needed to write a more automated method of checking. I was fairly familiar with abusing a lack of input validation so I didn’t find the 7th mission particularly difficult.

Weirdly enough I know a fair bit about SQL injections, but I wasn’t actually aware of SSI attacks. They rely on the web server having SSI enabled (it is usually disabled by default) and not sanitising the user input. However it doesn’t seem to be that uncommon a vulnerability even today - I found an interesting blog from a guy who was doing some penetration testing and found one in 2017. Basically he managed to quickly craft himself a terminal to do whatever he wanted on the web server. Mission 9 utilised the same vulnerability that hadn’t been patched in mission 8, although it took a bit of time to realise you actually had to leave the current mission and re-abuse that bug.

I had already done a bit of work with authentication and sessions on web servers - usually you would do the checking server-side and give the user a session id after logging in. You might choose to store a couple of variables in cookies, however these would be the ones purely for display or other trivial purposes. However they didn’t do that in this case, so you could quite easily abuse it and modify the cookie.

The final mission actually confused the hell out of me because it was a puzzle in which they were just hiding the password. Finding that first directory and where to exactly put the password took the most time. I’ve actually had to deploy my own Apache web server before so I was fairly familiar with the configurations.

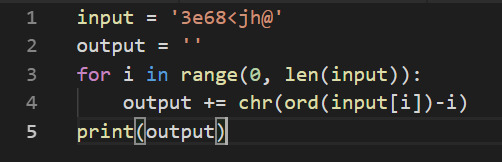

Basic #6

Looking in the source code we can’t find anything about the encryption or where the password may be. So I began by testing his encryption algorithm to see if I could note any patterns - I observed the following:

“aaaaaaaaaa” -> “abcdefghij”

“bbbbbbbbbb” -> “bcdefghijk”

“cccccccccc” -> “cdefghijkl”

We can clearly see a flaw in his encryption algorithm already - it appears that all the encryption does is add each character’s index in the string to its ASCII code (0 for the first character, 1 for the second, and so on). To reverse this we simply need to subtract the index from the ASCII code of each character - we can write a basic Python script to do this for us:

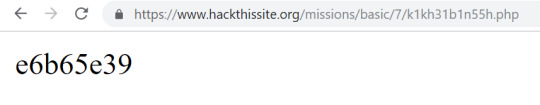
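The script itself only survives as a screenshot, so here is a minimal sketch of the same idea rather than the exact code from the image. It assumes the scheme inferred above (the character at index i is shifted up by i); the function names `encrypt` and `decrypt` are made up for illustration.

```python
def encrypt(plain: str) -> str:
    """Shift each character up by its index (the scheme inferred from the test inputs)."""
    return "".join(chr(ord(ch) + i) for i, ch in enumerate(plain))


def decrypt(cipher: str) -> str:
    """Undo the shift by subtracting each character's index from its code."""
    return "".join(chr(ord(ch) - i) for i, ch in enumerate(cipher))


if __name__ == "__main__":
    # Reproduces the first observation from the mission page.
    print(encrypt("aaaaaaaaaa"))  # abcdefghij
    print(decrypt("abcdefghij"))  # aaaaaaaaaa
```

Feeding the encrypted string shown on the mission page into `decrypt` should give back the password, provided the inferred scheme is right.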

And the output was “3d458eb9”, which is the password:

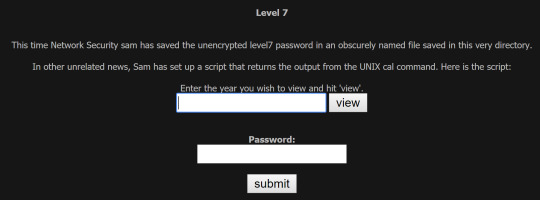

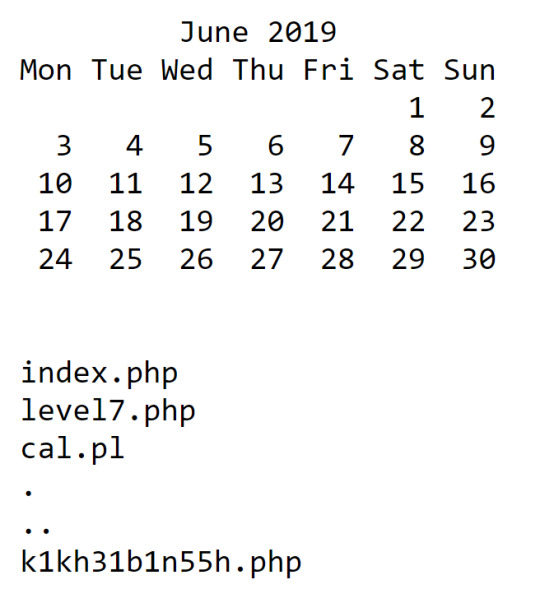

Basic #7

From trying the command, it seems that all the form basically does is add the text in the form after the “cal ” in the terminal. So we could probably abuse this to find the name of the obscured password file. If we enter “; ls -l” it will probably execute the cal command with no arguments then the ls command to show us the files in the directory.

And viewing the obscured file at the bottom, we find the password:

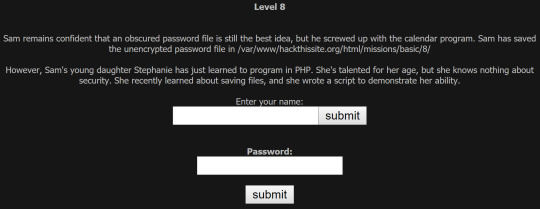
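To make the flaw in this level concrete, here is a small sketch of why pasting input after “cal ” is dangerous. The mission’s real server-side script is not shown, so the string building below is an assumption about how it works; the function names are hypothetical. `shlex.quote` is the standard-library way to make a string safe as a single shell argument.

```python
import shlex


def build_unsafe_command(user_input: str) -> str:
    """Mimic the vulnerable form: paste the input straight after 'cal '."""
    return "cal " + user_input


def build_quoted_command(user_input: str) -> str:
    """Quote the input so the shell treats it as one literal argument."""
    return "cal " + shlex.quote(user_input)


if __name__ == "__main__":
    payload = "; ls -l"
    print(build_unsafe_command(payload))  # cal ; ls -l    (shell sees two commands)
    print(build_quoted_command(payload))  # cal '; ls -l'  (shell sees one odd argument)
```

With the quoted version the shell would hand “; ls -l” to cal as a bogus argument instead of running ls. Validating that the input is just a year would be stricter still.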

Basic #8

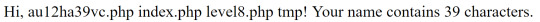

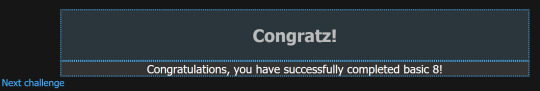

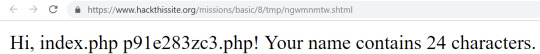

Basically the script she made creates a file and redirects you to a page that gives you a link to the file. For example, when I entered in Joe I got the result:

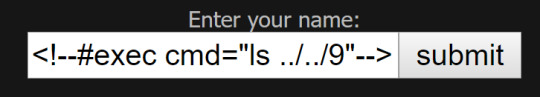

Initially I wasn’t sure how to approach this - I figured that it must be some sort of code injection attack although I didn’t know enough about HTML to execute it. After a little bit of research I discovered that there is something called an SSI (Server Side Includes) injection attack which would be relevant in this situation. Basically when you are serving an HTML page the web server will look for SSI instructions in the code and execute them; then it will show the page. So if we want to execute a command in the terminal we can use “<!--#exec cmd="insertcommandhere" -->” to do it. This only works because they did a crappy job of sanitising the input.

In this case to find the name of the password file we want to get a list of files one directory up from where the file is created, so we use:

Now when we view the file we see this result:

If we view the file “au12ha39vc.php” we obtain the password “59879d35”:

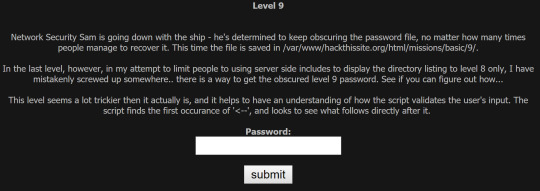
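For the defensive side of the SSI trick used in this level, here is a rough sketch of how escaping the name before writing it into the generated file would stop the directive from surviving. The page template and function names are assumptions, since the level’s actual script is not shown; `html.escape` is from the Python standard library.

```python
import html


def render_name_unsafe(name: str) -> str:
    """Write the input into the page unchanged, as the vulnerable script appears to."""
    return "Hi, " + name + "!"


def render_name_escaped(name: str) -> str:
    """Escape HTML metacharacters so no comment-style SSI directive survives."""
    return "Hi, " + html.escape(name) + "!"


if __name__ == "__main__":
    payload = '<!--#exec cmd="ls" -->'
    print(render_name_unsafe(payload))   # directive reaches the server intact
    print(render_name_escaped(payload))  # angle brackets and quotes become entities
```

Once the “<” is turned into “&lt;”, the server no longer sees a “<!--#” directive to execute, so the text is just displayed.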

Basic #9

So basically Sam has ditched the script on this level so it seems like there may not be a way to find the password file in the directory. However the second paragraph seems to hint to the fact that the previous exploit may be able to be used on this level as well. I jumped back to level 8 and tried to abuse the same trick to get the directory listing here as well:

It seems like the hint was in the right direction:

Through accessing this file we were able to find the password “3bdcfde6”. It just goes to show security by obscurity is really crappy.

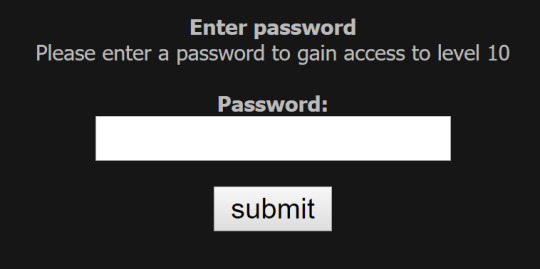

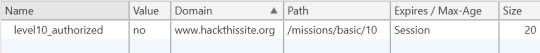

Basic #10

So there are basically no hints for this level - I’m just required to enter a password in order to gain access. There appear to be no hints in the HTML, so initially I just tried “password” to see what would happen. I was redirected to a page which said this:

Now this message could be displayed for one of two reasons: the first one is that it was determined server-side, the other based on cookies keeping track on whether I was logged in. I looked in the storage and cookies section and saw this:

I modified the “level10_authorized” cookie to a value of “yes”. Then after attempting “password” again it let me in!
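The difference between what this level does and the server-side approach mentioned in the reflection can be sketched like this. Only the cookie name “level10_authorized” comes from the mission; the session store, the “session_id” cookie name and the function names are hypothetical.

```python
import secrets
from typing import Dict, Optional

# Hypothetical in-memory session store: session id -> authorised flag.
SESSIONS: Dict[str, bool] = {}


def is_authorized_insecure(cookies: Dict[str, str]) -> bool:
    """Trust a client-controlled cookie, which is the flaw in this level."""
    return cookies.get("level10_authorized") == "yes"


def log_in(password: str, expected: str) -> Optional[str]:
    """Issue an unguessable session id only when the password matches."""
    if not secrets.compare_digest(password.encode(), expected.encode()):
        return None
    session_id = secrets.token_hex(16)
    SESSIONS[session_id] = True
    return session_id


def is_authorized(cookies: Dict[str, str]) -> bool:
    """Look the session id up server-side; the cookie only carries the id."""
    return SESSIONS.get(cookies.get("session_id", ""), False)
```

Editing the cookie to “yes” fools the first check but not the second, because the second one only trusts ids that the server handed out itself.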

Basic #11

Basically when you go to the mission, you get served the following:

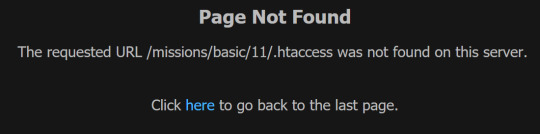

Basically after refreshing the page a couple times, it seems pretty obvious that it’s just printing out names of Elton John songs. The description of the challenge seems to hint that he screwed up the Apache configuration, so I first try and access the .htaccess file:

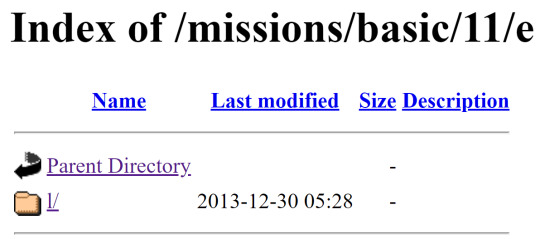

I wasn’t really sure of any means to determine where to find the passwords so I tried a heap of other things it may be including “/music/”, “/collection/”, “/1/”, “/2/”, “/3/”, “/elton/”, “/eltonjohn/”, “/john/”, “/ejohn/” and “e”. That final attempt got me the directory listing - to be honest if it hadn’t been something simple I would have had no hope:

Exploring through the directory you get to a dead end:

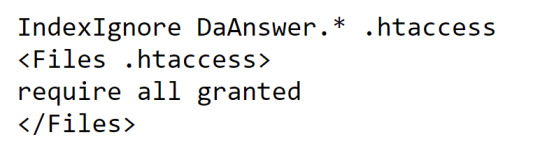

However seeing as the hint was specific about an Apache misconfiguration, I thought I’d try to get to the .htaccess file again - this actually proved successful at this level!

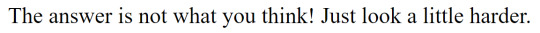

Now going to look at DaAnswer you get this:

At this point I wasn’t even sure where to enter the answer as the main page you started on was actually just listing out Elton John song names. I tried a couple typical main html page names in directories like “/home”, “/home.html”, “/home.php”, “/index”, “/index.html” and “/index.php”. The final php one appears to be where you need to enter the solution:

I assumed the sentence I had found earlier in “DaAnswer” actually gave me the password in the literal sense - so it would be “not what you think”. This redirected me to a page with a button:

I just got redirected back to the challenges page - even though it didn’t say so, I’m pretty sure that must have been the answer, as my profile completion says the following.

Magento 2 in Ubuntu

Installation of Joomla, Drupal, WordPress, Magento CE, and other PHP-based information systems on Ubuntu.

--------------------------------------------------------------------

Install and Configure apache2

--------------------------------------------------------------------

# apt update

# apt install apache2

# systemctl stop apache2.service

# systemctl start apache2.service

# systemctl enable apache2.service

--------------------------------------------------------------------

Install and Configure the database (MySql or MariaDB)

--------------------------------------------------------------------

# apt-get install mariadb-server mariadb-client -y

# systemctl stop mariadb.service

# systemctl start mariadb.service

# systemctl enable mariadb.service

-----------------------------------------------------------

Create Database in the MySql or MariaDB server.

-----------------------------------------------------------

# mysql -u root -p

mysql> CREATE DATABASE DATABASE_NAME;

mysql> CREATE USER 'USERNAME'@'WEB_SERVER_IP' IDENTIFIED BY 'PASSWORD';

mysql> GRANT ALL ON DATABASE_NAME.* TO 'USERNAME'@'localhost' IDENTIFIED BY 'PASSWORD' WITH GRANT OPTION;

mysql> GRANT ALL PRIVILEGES ON DATABASE_NAME.* TO 'USERNAME'@'WEB_SERVER_IP';

mysql> FLUSH PRIVILEGES;

mysql> SET GLOBAL innodb_file_format = barracuda;

mysql> SET GLOBAL innodb_file_per_table = 1;

mysql> SET GLOBAL innodb_large_prefix = 'on';

mysql> EXIT;
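If you would rather not type the statements by hand, the same database and user setup can be generated from variables. This is a minimal sketch: `render_db_sql` and the default names `magento`, `magento_user` and `localhost` are assumptions made up for the example, so swap in your own values. It only prints SQL; nothing is sent to the server until you pipe it into `mysql`.

```shell
# Hypothetical defaults: change these to match your own setup.
DB_NAME=${DB_NAME:-magento}
DB_USER=${DB_USER:-magento_user}
DB_HOST=${DB_HOST:-localhost}

# Print the SQL for one database and one user with full rights on it.
render_db_sql() {
  # Refuse to run without a password instead of creating an empty one.
  : "${DB_PASSWORD:?set DB_PASSWORD first}"
  cat <<SQL
CREATE DATABASE IF NOT EXISTS \`${DB_NAME}\`;
CREATE USER IF NOT EXISTS '${DB_USER}'@'${DB_HOST}' IDENTIFIED BY '${DB_PASSWORD}';
GRANT ALL PRIVILEGES ON \`${DB_NAME}\`.* TO '${DB_USER}'@'${DB_HOST}';
FLUSH PRIVILEGES;
SQL
}

# Example: DB_PASSWORD='PASSWORD' render_db_sql | mysql -u root -p
```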

--------------------------------------------------------------------

To change the MySql or MariaDB password use this line.

--------------------------------------------------------------------

# mysql_secure_installation

--------------------------------------------------------------------

Install the PHP and its extension

--------------------------------------------------------------------

# apt-get install software-properties-common -y

# add-apt-repository ppa:ondrej/php -y

# apt update -y

# apt install php -y

# apt install libapache2-mod-php -y

# apt install php-common -y

# apt install php-gmp -y

# apt install php-curl -y

# apt install php-soap -y

# apt install php-bcmath -y

# apt install php-intl -y

# apt install php-mbstring -y

# apt install php-xmlrpc -y

Note: On Ubuntu 18.04, we have to use

# apt install php7.1-mcrypt -y

Note: On Ubuntu 16.04, we have to use

# apt install php-mcrypt -y

# apt install php-mysql -y

# apt install php-gd -y

# apt install php-xml -y

# apt install php-cli -y

# apt install php-zip -y

# apt install zip -y

# apt install unzip -y

# apt install curl -y

# apt install git -y
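After that long list of installs it is easy to miss one. A quick check, assuming the `php` CLI is on the PATH, is to compare `php -m` (which prints the loaded modules one per line) against the extensions you expect. `missing_exts` and the contents of `REQUIRED_EXTS` are made up for this sketch; adjust the list to whatever your CMS needs.

```shell
# Hypothetical list of extensions to verify (edit to taste).
REQUIRED_EXTS="bcmath curl gd intl mbstring soap xml zip"

# Print every extension from REQUIRED_EXTS that `php -m` does not report.
missing_exts() {
  # Lowercase the module list so the comparison is case-insensitive.
  loaded=$(php -m | tr '[:upper:]' '[:lower:]')
  for ext in $REQUIRED_EXTS; do
    # -x matches whole lines only, so "xml" does not match "simplexml".
    printf '%s\n' "$loaded" | grep -qx "$ext" || printf '%s\n' "$ext"
  done
}

# Example: missing_exts   (no output means everything is loaded)
```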

--------------------------------------------------------------------

Edit the php.ini file in the etc directory

--------------------------------------------------------------------

# nano /etc/php/7.1/apache2/php.ini

Note: Update these lines in the file.

file_uploads = On

allow_url_fopen = On

short_open_tag = On

memory_limit = 2G

upload_max_filesize = 100M

max_execution_time = 360

date.timezone = America/Chicago

Note: Uncomment the extension lines in the file.
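The php.ini changes above can also be applied without opening an editor. This is a rough sketch, assuming GNU `sed` (the one Ubuntu ships); `set_ini` is a made-up helper name. It rewrites an existing `key = value` line, including a commented-out one, or appends the setting if the key is not in the file at all.

```shell
# Hypothetical helper: set one directive in a php.ini style file.
# usage: set_ini KEY VALUE FILE
set_ini() {
  key=$1
  value=$2
  file=$3
  if grep -Eq "^[;[:space:]]*${key}[[:space:]]*=" "$file"; then
    # Key exists (possibly commented out with ';'): replace the whole line.
    sed -i -E "s|^[;[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
  else
    # Key is missing: append it at the end of the file.
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Example with the values from this guide:
# set_ini memory_limit 2G /etc/php/7.1/apache2/php.ini
# set_ini date.timezone America/Chicago /etc/php/7.1/apache2/php.ini
```

Make a backup copy of php.ini first, since `sed -i` edits the file in place.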

--------------------------------------------------------------------

Create php information file in the root directory

--------------------------------------------------------------------

# systemctl restart apache2.service

# nano /var/www/html/phpinfo.php

// Contents of the php information file

<?php

// Show all information, defaults to INFO_ALL

phpinfo();

?>

--------------------------------------------------------------------

Edit the apache configuration file in the etc directory.

--------------------------------------------------------------------

# nano /etc/apache2/sites-available/magento2.conf

<VirtualHost *:80>

ServerAdmin [email protected]

DocumentRoot /var/www/html/FOLDER NAME/

ServerName example.com

ServerAlias www.example.com

<Directory /var/www/html/FOLDER NAME/>

Options Indexes FollowSymLinks MultiViews

AllowOverride All

Order allow,deny

allow from all

</Directory>

ErrorLog ${APACHE_LOG_DIR}/error.log

CustomLog ${APACHE_LOG_DIR}/access.log combined

</VirtualHost>
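The virtual host can also be generated from a template. This is a sketch under a couple of assumptions, not a drop-in replacement for the block above: `render_vhost` is a made-up helper, it uses the Apache 2.4 `Require all granted` directive in place of `Order allow,deny` / `allow from all`, and it leaves out `Indexes` so that directory listings stay off.

```shell
# Hypothetical helper: print a minimal Apache 2.4 virtual host.
# usage: render_vhost SERVER_NAME DOCROOT
render_vhost() {
  server_name=$1
  docroot=$2
  cat <<EOF
<VirtualHost *:80>
    ServerName ${server_name}
    DocumentRoot ${docroot}
    <Directory ${docroot}>
        Options FollowSymLinks
        AllowOverride All
        Require all granted
    </Directory>
    ErrorLog \${APACHE_LOG_DIR}/error.log
    CustomLog \${APACHE_LOG_DIR}/access.log combined
</VirtualHost>
EOF
}

# Example (paths are placeholders):
# render_vhost example.com /var/www/html/magento2 > /etc/apache2/sites-available/magento2.conf
# apache2ctl configtest
```

The `\${APACHE_LOG_DIR}` escapes keep that variable literal in the output, so Apache expands it rather than the shell.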

--------------------------------------------------------------------

Download the CMS and install in the Root Directory

--------------------------------------------------------------------

// Here, we are going to install Magento 2

// We will download Magento 2 with Composer from repo.magento.com

# cd /var/www/html/

# curl -sS https://getcomposer.org/installer | sudo php -- --install-dir=/usr/local/bin --filename=composer

# composer create-project --repository=https://repo.magento.com/ magento/project-community-edition (FOLDER NAME)

--------------------------------------------------------------------

To download Magento 2, we have to generate a public and private key pair on the Magento site. Composer asks for them as the username (public key) and password (private key).

--------------------------------------------------------------------

Public Key 060f1460c97de693f3de3a8525db0ae8

Private Key b5c35b486038e871d5baca9fb326988e

# chmod 777 -R /var/www/html/

# chown -R www-data:www-data /var/www/html/FOLDER NAME/

# chmod -R 777 /var/www/html/FOLDER NAME/

# a2ensite magento2.conf

# a2enmod rewrite

# systemctl restart apache2.service

# chmod 777 -R /var/www/html/ // Most Important command in the installation

--------------------------------------------------------------------

After installation command

--------------------------------------------------------------------

# cd /var/www/html/magento2

# php bin/magento maintenance:enable

# php bin/magento indexer:reindex

# php bin/magento cron:install

# php bin/magento maintenance:disable

# php bin/magento setup:store-config:set --base-url="http://www.cellon.com/ma/"
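The enable / run / disable pattern above can be wrapped so that maintenance mode is switched off again even when one of the steps fails. This is a minimal sketch for sh or bash: `run_in_maintenance` and the `MAGENTO_CMD` variable are made up for the example, and only the `bin/magento` subcommands already used in this guide are assumed to exist.

```shell
# Assumption: run from the Magento root, or override MAGENTO_CMD yourself.
MAGENTO_CMD=${MAGENTO_CMD:-php bin/magento}

# Run the given bin/magento subcommands inside maintenance mode.
# usage: run_in_maintenance SUBCOMMAND...
run_in_maintenance() {
  $MAGENTO_CMD maintenance:enable || return 1
  status=0
  for task in "$@"; do
    # Stop at the first failing task but remember its exit code.
    $MAGENTO_CMD "$task" || { status=$?; break; }
  done
  # Always leave maintenance mode, whatever happened above.
  $MAGENTO_CMD maintenance:disable
  return "$status"
}

# Example:
# cd /var/www/html/magento2 && run_in_maintenance indexer:reindex cron:install
```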

--------------------------------------------------------------------

Magento Update command

--------------------------------------------------------------------

# composer require magento/product-community-edition 2.3.0 --no-update

# composer update

# php bin/magento setup:upgrade

# php bin/magento setup:di:compile

This guide focuses on monitoring a Redis application on a Linux server. Redis is an open source in-memory data structure store, used as a database, cache and message broker. Redis provides a distributed, in-memory key-value database with optional durability. Redis supports different kinds of abstract data structures, such as strings, sets, maps, lists, sorted sets, spatial indexes, and bitmaps.

So far we have covered the following monitoring with Prometheus:

- Monitoring Ceph Cluster with Prometheus and Grafana

- Monitor Linux Server Performance with Prometheus and Grafana in 5 minutes

- Monitor BIND DNS server with Prometheus and Grafana

- Monitoring MySQL / MariaDB with Prometheus in five minutes

- Monitor Apache Web Server with Prometheus and Grafana in 5 minutes

What’s exported by Redis exporter?

Most items from the INFO command are exported, see http://redis.io/commands/info for details. In addition, for every database there are metrics for total keys, expiring keys and the average TTL for keys in the database. You can also export values of keys if they’re in numeric format by using the -check-keys flag. The exporter will also export the size (or, depending on the data type, the length) of the key. This can be used to export the number of elements in (sorted) sets, hashes, lists, etc.

Setup Pre-requisite

- Installed Prometheus Server – Install Prometheus Server on CentOS 7 and Ubuntu

- Installed Grafana Data Visualization & Monitoring – Install Prometheus Server on CentOS 7 and Ubuntu

Step 1: Download and Install Redis Prometheus exporter

This Prometheus exporter for Redis metrics supports Redis:

curl -s https://api.github.com/repos/oliver006/redis_exporter/releases/latest | grep browser_download_url | grep linux-amd64 | cut -d '"' -f 4 | wget -qi -

Extract the downloaded archive file

tar xvf redis_exporter-*.linux-amd64.tar.gz

sudo mv redis_exporter-*.linux-amd64/redis_exporter /usr/local/bin/

rm -rf redis_exporter-*.linux-amd64*

redis_exporter should be executable from your current SHELL

$ redis_exporter --version

INFO[0000] Redis Metrics Exporter v1.27.0 build date: 2021-08-30-23:37:23 sha1: ef05f8642cd71d632ca14d728c9c7534835d3674 Go: go1.17 GOOS: linux GOARCH: amd64

To get a list of all options supported, pass --help option

# redis_exporter --help

Usage of redis_exporter:
  -check-keys string
        Comma separated list of key-patterns to export value and length/size, searched for with SCAN
  -check-single-keys string
        Comma separated list of single keys to export value and length/size
  -debug
        Output verbose debug information
  -log-format string
        Log format, valid options are txt and json (default "txt")
  -namespace string
        Namespace for metrics (default "redis")
  -redis-only-metrics
        Whether to export go runtime metrics also
  -redis.addr string
        Address of one or more redis nodes, separated by separator
  -redis.alias string
        Redis instance alias for one or more redis nodes, separated by separator
  -redis.file string
        Path to file containing one or more redis nodes, separated by newline. NOTE: mutually exclusive with redis.addr
  -redis.password string
        Password for one or more redis nodes, separated by separator
  -script string
        Path to Lua Redis script for collecting extra metrics
  -separator string
        separator used to split redis.addr, redis.password and redis.alias into several elements. (default ",")
  -use-cf-bindings
        Use Cloud Foundry service bindings
  -version
        Show version information and exit
  -web.listen-address string
        Address to listen on for web interface and telemetry. (default ":9121")
  -web.telemetry-path string
        Path under which to expose metrics. (default "/metrics")
  ...

Step 2: Create Prometheus redis exporter systemd service / Init script

The user prometheus will be used to run the service. Add Prometheus system user if it doesn’t exist